Learning to Optimize with Reinforcement Learning

Since we posted our paper on “Learning to Optimize” last year, the area of optimizer learning has received growing attention. In this article, we provide an introduction to this line of work and share our perspective on the opportunities and challenges in this area.

Machine learning has enjoyed tremendous success and is being applied to a wide variety of areas, both in AI and beyond. This success can be attributed to the data-driven philosophy that underpins machine learning, which favours automatic discovery of patterns from data over manual design of systems using expert knowledge.

Yet, there is a paradox in the current paradigm: the algorithms that power machine learning are still designed manually. This raises a natural question: can we learn these algorithms instead? This could open up exciting possibilities: we could find new algorithms that perform better than manually designed algorithms, which could in turn improve learning capability.

Doing so, however, requires overcoming a fundamental obstacle: how do we parameterize the space of algorithms so that it is both (1) expressive, and (2) efficiently searchable? Various ways of representing algorithms trade off these two goals. For example, if the space of algorithms is represented by a small set of known algorithms, it most likely does not contain the best possible algorithm, but does allow for efficient searching via simple enumeration of algorithms in the set. On the other hand, if the space of algorithms is represented by the set of all possible programs, it contains the best possible algorithm, but does not allow for efficient searching, as enumeration would take exponential time.

One of the most common types of algorithms used in machine learning is continuous optimization algorithms. Several popular algorithms exist, including gradient descent, momentum, AdaGrad and ADAM. We consider the problem of automatically designing such algorithms. Why do we want to do this? There are two reasons: first, many optimization algorithms are devised under the assumption of convexity and applied to non-convex objective functions; by learning the optimization algorithm under the same setting as it will actually be used in practice, the learned optimization algorithm could hopefully achieve better performance. Second, devising new optimization algorithms manually is usually laborious and can take months or years; learning the optimization algorithm could reduce the amount of manual labour.

Learning to Optimize

In our paper last year (Li & Malik, 2016), we introduced a framework for learning optimization algorithms, known as “Learning to Optimize”. We note that soon after our paper appeared, Andrychowicz et al. (2016) also independently proposed a similar idea.

Consider how existing continuous optimization algorithms generally work. They operate in an iterative fashion and maintain some iterate, which is a point in the domain of the objective function. Initially, the iterate is some random point in the domain; in each iteration, a step vector is computed using some fixed update formula, which is then used to modify the iterate. The update formula is typically some function of the history of gradients of the objective function evaluated at the current and past iterates. For example, in gradient descent, the update formula is some scaled negative gradient; in momentum, the update formula is some scaled exponential moving average of the gradients.
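To make this shared structure concrete, here is a minimal Python sketch of the iterative scheme, with the update formula passed in as a function of the gradient history. The names `optimize`, `gradient_descent_update` and `momentum_update`, the toy objective and the hyperparameter values are illustrative assumptions, not taken from the paper, and the initial iterate is fixed rather than random so that the run is reproducible.

```python
from typing import Callable, List

Vector = List[float]
# An update formula maps the history of gradients (oldest first) to a step vector.
UpdateFormula = Callable[[List[Vector]], Vector]


def gradient_descent_update(lr: float) -> UpdateFormula:
    """Step = scaled negative gradient at the current iterate."""
    def update(grad_history: List[Vector]) -> Vector:
        return [-lr * g for g in grad_history[-1]]
    return update


def momentum_update(lr: float, beta: float) -> UpdateFormula:
    """Step = scaled exponential moving average of all gradients seen so far."""
    def update(grad_history: List[Vector]) -> Vector:
        avg = [0.0] * len(grad_history[0])
        for grad in grad_history:
            avg = [beta * a + (1.0 - beta) * g for a, g in zip(avg, grad)]
        return [-lr * a for a in avg]
    return update


def optimize(grad_fn: Callable[[Vector], Vector], x0: Vector,
             update: UpdateFormula, num_iters: int) -> Vector:
    """Generic loop: only the update formula differs between algorithms."""
    x = list(x0)
    grad_history: List[Vector] = []
    for _ in range(num_iters):
        grad_history.append(grad_fn(x))
        step = update(grad_history)
        x = [xi + si for xi, si in zip(x, step)]
    return x


def toy_grad(x: Vector) -> Vector:
    """Gradient of the toy objective f(x) = x_1^2 + 10 * x_2^2 (illustration only)."""
    return [2.0 * x[0], 20.0 * x[1]]


x_gd = optimize(toy_grad, [1.0, 1.0], gradient_descent_update(lr=0.05), 100)
x_mom = optimize(toy_grad, [1.0, 1.0], momentum_update(lr=0.05, beta=0.5), 100)
print(x_gd, x_mom)
```

Both runs share the same loop, and swapping the `update` argument is all it takes to switch algorithms.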

What changes from algorithm to algorithm is this update formula. So, if we can learn the update formula, we can learn an optimization algorithm. We model the update formula as a neural net. Thus, by learning the weights of the neural net, we can learn an optimization algorithm. Parameterizing the update formula as a neural net has two appealing properties mentioned earlier: first, it is expressive, as neural nets are universal function approximators and can in principle model any update formula with sufficient capacity; second, it allows for efficient search, as neural nets can be trained easily with backpropagation.
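As a rough illustration of what modelling the update formula as a neural net might look like, the sketch below uses a small one-hidden-layer network that maps gradient features to a step vector. The architecture, the choice of input features (the current and previous gradients concatenated), and the names `init_mlp` and `mlp_update` are assumptions made for illustration and are not the model used in the paper; the weights here are random and untrained.

```python
import math
import random


def init_mlp(in_dim, hidden_dim, out_dim, seed=0):
    """Randomly initialise the weights; these are what optimizer learning would tune."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

    return {
        "W1": matrix(hidden_dim, in_dim), "b1": [0.0] * hidden_dim,
        "W2": matrix(out_dim, hidden_dim), "b2": [0.0] * out_dim,
    }


def mlp_update(params, features):
    """Update formula: features of the gradient history in, step vector out."""
    hidden = [
        math.tanh(sum(w * f for w, f in zip(row, features)) + b)
        for row, b in zip(params["W1"], params["b1"])
    ]
    return [
        sum(w * h for w, h in zip(row, hidden)) + b
        for row, b in zip(params["W2"], params["b2"])
    ]


# Two-dimensional problem: features are the current and previous gradients.
params = init_mlp(in_dim=4, hidden_dim=8, out_dim=2)
current_grad, previous_grad = [0.3, -1.2], [0.5, -0.9]
step = mlp_update(params, current_grad + previous_grad)
print(step)
```

Different weights in `params` give different update formulas, so searching over weights amounts to searching over optimization algorithms.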

In order to learn the optimization algorithm, we need to define a performance metric, which we will refer to as the “meta-loss”, that rewards good optimizers and penalizes bad optimizers. Since a good optimizer converges quickly, a natural meta-loss would be the sum of objective values over all iterations (assuming the goal is to minimize the objective function), or equivalently, the cumulative regret. Intuitively, this corresponds to the area under the curve, which is larger when the optimizer converges slowly and smaller otherwise.
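The following sketch shows one way to compute such a meta-loss for a given optimizer. It assumes plain gradient descent on the toy objective f(x) = x², and the function name `meta_loss` and the step sizes are illustrative choices rather than anything prescribed by the paper.

```python
def objective(x):
    return x * x


def gradient(x):
    return 2.0 * x


def meta_loss(x0, lr, num_iters):
    """Sum of objective values at the iterates produced by gradient descent."""
    x, total = x0, 0.0
    for _ in range(num_iters):
        x = x - lr * gradient(x)
        total += objective(x)
    return total


slow = meta_loss(x0=1.0, lr=0.1, num_iters=20)  # converges slowly
fast = meta_loss(x0=1.0, lr=0.4, num_iters=20)  # converges quickly
print(slow, fast)
```

The faster-converging run accumulates a smaller sum, which matches the area-under-the-curve intuition.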

Learning to Learn

Consider the special case when the objective functions are loss functions for training other models. Under this setting, optimizer learning can be used for “learning to learn”. For clarity, we will refer to the model that is trained using the optimizer as the “base-model” and prefix common terms with “base-” and “meta-” to disambiguate concepts associated with the base-model and the optimizer respectively.

What do we mean exactly by “learning to learn”? While this term has appeared from time to time in the literature, different authors have used it to refer to different things, and there is no consensus on its precise definition. Often, it is also used interchangeably with the term “meta-learning”.

The term traces its origins to the idea of metacognition (Aristotle, 350 BC), which describes the phenomenon that humans not only reason, but also reason about their own process of reasoning. Work on “learning to learn” draws inspiration from this idea and aims to turn it into concrete algorithms. Roughly speaking, “learning to learn” simply means learning something about learning. What is learned at the meta-level differs across methods. We can divide various methods into three broad categories according to the type of meta-knowledge they aim to learn:

Learning What to Learn

These methods aim to learn some particular values of base-model parameters that are useful across a family of related tasks (Thrun & Pratt, 2012). The meta-knowledge captures commonalities across the family, so that base-learning on a new task from the family can be done more quickly. Examples include methods for transfer learning, multi-task learning and few-shot learning. Early methods operate by partitioning the parameters of the base-model into two sets: those that are specific to a task and those that are common across tasks. For example, a popular approach for neural net base-models is to share the weights of the lower layers across all tasks, so that they capture the commonalities across tasks. See this post by Chelsea Finn for an overview of the more recent methods in this area.

Learning Which Model to Learn

These methods aim to learn which base-model is best suited for a task (Brazdil et al., 2008). The meta-knowledge captures correlations between different base-models and their performance on different tasks. The challenge lies in parameterizing the space of base-models in a way that is expressive and efficiently searchable, and in parameterizing the space of tasks in a way that allows for generalization to unseen tasks. Different methods make different trade-offs between expressiveness and searchability: Brazdil et al. (2003) use a database of predefined base-models and exemplar tasks and output the base-model that performed the best on the nearest exemplar task. While this space of base-models is searchable, it does not contain good but yet-to-be-discovered base-models. Schmidhuber (2004) represents each base-model as a general-purpose program. While this space is very expressive, searching in this space takes exponential time in the length of the target program. Hochreiter et al. (2001) view an algorithm that trains a base-model as a black box function that maps a sequence of training examples to a sequence of predictions and model it as a recurrent neural net. Meta-training then simply reduces to training the recurrent net. Because the base-model is encoded in the recurrent net’s memory state, its capacity is constrained by the memory size. A related area is hyperparameter optimization, which aims for a weaker goal and searches over base-models parameterized by a predefined set of hyperparameters. It needs to generalize across hyperparameter settings (and by extension, base-models), but not across tasks, since multiple trials with different hyperparameter settings on the same task are allowed.

Learning How to Learn

While methods in the previous categories aim to learn about the outcome of learning, methods in this category aim to learn about the process of learning. The meta-knowledge captures commonalities in the behaviours of learning algorithms. There are three components under this setting: the base-model, the base-algorithm for training the base-model, and the meta-algorithm that learns the base-algorithm. What is learned is not the base-model itself, but the base-algorithm, which trains the base-model on a task. Because both the base-model and the task are given by the user, the base-algorithm that is learned must work on a range of different base-models and tasks. Since most learning algorithms optimize some objective function, learning the base-algorithm in many cases reduces to learning an optimization algorithm. This problem of learning optimization algorithms was explored in (Li & Malik, 2016), (Andrychowicz et al., 2016) and a number of subsequent papers. Closely related to this line of work is (Bengio et al., 1991), which learns a Hebb-like synaptic learning rule. The learning rule depends on a subset of the dimensions of the current iterate encoding the activities of neighbouring neurons, but does not depend on the objective function and therefore does not have the capability to generalize to different objective functions.

Generalization

Learning of any sort requires training on a finite number of examples and generalizing to the broader class from which the examples are drawn. It is therefore instructive to consider what the examples and the class correspond to in our context of learning optimizers for training base-models. Each example is an objective function, which corresponds to the loss function for training a base-model on a task. The task is characterized by a set of examples and target predictions, or in other words, a dataset, that is used to train the base-model. The meta-training set consists of multiple objective functions and the meta-test set consists of different objective functions drawn from the same class. Objective functions can differ in two ways: they can correspond to different base-models, or different tasks. Therefore, generalization in this context means that the learned optimizer works on different base-models and/or different tasks.

Why is generalization important?

Suppose for a moment that we didn’t care about generalization. In this case, we would evaluate the optimizer on the same objective functions that are used for training the optimizer. If we used only one objective function, then the best optimizer would be one that simply memorizes the optimum: this optimizer always converges to the optimum in one step regardless of initialization. In our context, the objective function corresponds to the loss for training a particular base-model on a particular task and so this optimizer essentially memorizes the optimal weights of the base-model. Even if we used many objective functions, the learned optimizer could still try to identify the objective function it is operating on and jump to the memorized optimum as soon as it does.

Why is this problematic? Memorizing the optima requires finding them in the first place, and so learning an optimizer takes longer than running a traditional optimizer like gradient descent. So, for the purposes of finding the optima of the objective functions at hand, running a traditional optimizer would be faster. Consequently, it would be pointless to learn the optimizer if we didn’t care about generalization.

Therefore, for the learned optimizer to have any practical utility, it must perform well on new objective functions that are different from those used for training.

What should be the extent of generalization?

If we only aim for generalization to similar base-models on similar tasks, then the learned optimizer could memorize parts of the optimal weights that are common across the base-models and tasks, like the weights of the lower layers in neural nets. This would be essentially the same as learning-what-to-learn formulations like transfer learning.

Unlike learning what to learn, the goal of learning how to learn is to learn not what the optimum is, but how to find it. We must therefore aim for a stronger notion of generalization, namely generalization to similar base-models on dissimilar tasks. An optimizer that can generalize to dissimilar tasks cannot just partially memorize the optimal weights, as the optimal weights for dissimilar tasks are likely completely different. For example, even the lower-layer weights of neural nets trained on MNIST (a dataset consisting of black-and-white images of handwritten digits) and CIFAR-10 (a dataset consisting of colour images of common objects in natural scenes) are unlikely to have anything in common.

Should we aim for an even stronger form of generalization, that is, generalization to dissimilar base-models on dissimilar tasks? Since these correspond to objective functions that bear no similarity to objective functions used for training the optimizer, this is essentially asking if the learned optimizer should generalize to objective functions that could be arbitrarily different.

It turns out that this is impossible. Given any optimizer, we consider the trajectory followed by the optimizer on a particular objective function. Because the optimizer only relies on information at the previous iterates, we can modify the objective function at the last iterate to make it arbitrarily bad while maintaining the geometry of the objective function at all previous iterates. Then, on this modified objective function, the optimizer would follow the exact same trajectory as before and end up at a point with a bad objective value. Therefore, any optimizer has objective functions that it performs poorly on and no optimizer can generalize to all possible objective functions.
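The construction in this argument can be played out on a toy example. The sketch below assumes gradient descent as the optimizer and f(x) = x² as the original objective; the names `run_gd`, `f_bad` and `grad_f_bad`, and the specific way the objective is altered in a small neighbourhood of the last iterate, are illustrative assumptions.

```python
def run_gd(grad_fn, x0, lr, num_iters):
    """Return the full trajectory of gradient descent, including the start."""
    xs = [x0]
    for _ in range(num_iters):
        xs.append(xs[-1] - lr * grad_fn(xs[-1]))
    return xs


def f(x):
    return x * x


def grad_f(x):
    return 2.0 * x


traj = run_gd(grad_f, x0=1.0, lr=0.25, num_iters=5)
x_last = traj[-1]

# Alter the objective only inside a neighbourhood of the last iterate that is
# small enough to exclude every earlier iterate.
radius = 0.5 * min(abs(x - x_last) for x in traj[:-1])


def f_bad(x):
    return 1e6 if abs(x - x_last) < radius else f(x)


def grad_f_bad(x):
    return 0.0 if abs(x - x_last) < radius else grad_f(x)


traj_bad = run_gd(grad_f_bad, x0=1.0, lr=0.25, num_iters=5)
print(traj_bad == traj, f(x_last), f_bad(x_last))
```

The optimizer sees identical gradients at every iterate it queries, so it follows the same trajectory, yet the objective value at the point where it ends up is now very large.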

If no optimizer is universally good, can we still hope to learn optimizers that are useful? The answer is yes: since we are typically interested in optimizing functions from certain special classes in practice, it is possible to learn optimizers that work well on these classes of interest. The objective functions in a class can share regularities in their geometry, e.g., they might have in common certain geometric properties like convexity, piecewise linearity, Lipschitz continuity or other unnamed properties. In the context of learning-how-to-learn, each class can correspond to a type of base-model. For example, neural nets with ReLU activation units can be one class, as they are all piecewise linear. Note that when learning the optimizer, there is no need to explicitly characterize the form of geometric regularity, as the optimizer can learn to exploit it automatically when trained on objective functions from the class.

How to Learn the Optimizer

The first approach we tried was to treat the problem of learning optimizers as a standard supervised learning problem: we simply differentiate the meta-loss with respect to the parameters of the update formula and learn these parameters using standard gradient-based optimization. (We weren’t the only ones to have thought of this; Andrychowicz et al. (2016) also used a similar approach.)
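A heavily simplified sketch of this idea is given below. It assumes an update formula with a single learnable parameter (the log of a step size), a family of one-dimensional quadratic objectives, and central finite differences standing in for backpropagation through the optimization trajectory; all names and values are illustrative. Because this toy is far simpler than a neural-net update formula, its behaviour says nothing about how well the approach works in the setting discussed in this article.

```python
import math


def meta_loss(log_lr, curvatures, x0=1.0, num_iters=20):
    """Average over objectives f(x) = 0.5 * c * x^2 of the sum of objective values."""
    lr = math.exp(log_lr)
    total = 0.0
    for c in curvatures:
        x = x0
        for _ in range(num_iters):
            x = x - lr * c * x          # the gradient of f at x is c * x
            total += 0.5 * c * x * x
    return total / len(curvatures)


def meta_gradient(log_lr, curvatures, eps=1e-4):
    """Derivative of the meta-loss w.r.t. the parameter (finite differences)."""
    upper = meta_loss(log_lr + eps, curvatures)
    lower = meta_loss(log_lr - eps, curvatures)
    return (upper - lower) / (2.0 * eps)


meta_train = [0.5, 1.0, 1.5, 2.0]   # curvatures of the training objectives
meta_test = [0.75, 1.25, 1.75]      # unseen objectives from the same class

initial_log_lr = math.log(0.05)
log_lr = initial_log_lr
for _ in range(100):                # gradient descent on the meta-loss
    log_lr -= 0.1 * meta_gradient(log_lr, meta_train)

print(math.exp(log_lr),
      meta_loss(initial_log_lr, meta_test),
      meta_loss(log_lr, meta_test))
```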

This seemed like a natural approach, but it did not work: despite our best efforts, we could not get any optimizer trained in this manner to generalize to unseen objective functions, even though they were drawn from the same distribution that generated the objective functions used to train the optimizer. On almost all unseen objective functions, the learned optimizer started off reasonably, but quickly diverged after a while. On the other hand, on the training objective functions, it exhibited no such issues and did quite well. Why is this?

It turns out that optimizer learning is not as simple a learning problem as it appears. Standard supervised learning assumes all training examples are independent and identically distributed (i.i.d.); in our setting, the step vector the optimizer takes at any iteration affects the gradients it sees at all subsequent iterations. Furthermore, how the step vector affects the gradient at the subsequent iteration is not known, since this depends on the local geometry of the objective function, which is unknown at meta-test time. Supervised learning cannot operate in this setting, and must assume that the local geometry of an unseen objective function is the same as the local geometry of training objective functions at all iterations.

Consider what happens when an optimizer trained using supervised learning is used on an unseen objective function. It takes a step, and discovers at the next iteration that the gradient is different from what it expected. It then recalls what it did on the training objective functions when it encountered such a gradient, which could have happened in a completely different region of the space, and takes a step accordingly. To its dismay, it finds out that the gradient at the next iteration is even more different from what it expected. This cycle repeats and the error the optimizer makes becomes bigger and bigger over time, leading to rapid divergence.

This phenomenon is known in the literature as the problem of compounding errors. It is known that the total error of a supervised learner scales quadratically in the number of iterations, rather than linearly as would be the case in the i.i.d. setting (Ross and Bagnell, 2010). In essence, an optimizer trained using supervised learning necessarily overfits to the geometry of the training objective functions. One way to solve this problem is to use reinforcement learning.

Background on Reinforcement Learning

Consider an environment that maintains a state, which evolves in an unknown fashion based on the action that is taken. We have an agent that interacts with this environment, which sequentially selects actions and receives feedback after each action is taken on how good or bad the new state is. The goal of reinforcement learning is to find a way for the agent to pick actions based on the current state that leads to good states on average.

More precisely, a reinforcement learning problem is characterized by the following components:

- A state space, which is the set of all possible states,

- An action space, which is the set of all possible actions,

- A cost function, which measures how bad a state is,

- A time horizon, which is the number of time steps,

- An initial state probability distribution, which specifies how frequently different states occur at the beginning before any action is taken, and

- A state transition probability distribution, which specifies how the state changes (probabilistically) after a particular action is taken.

While the learning algorithm is aware of what the first five components are, it does not know the last component, i.e., how states evolve based on the actions that are chosen. At training time, the learning algorithm is allowed to interact with the environment. Specifically, at each time step, it can choose an action to take based on the current state. Then, based on the action that is selected and the current state, the environment samples a new state, which is observed by the learning algorithm at the subsequent time step. The sequence of sampled states and actions is known as a trajectory. This sampling procedure induces a distribution over trajectories, which depends on the initial state and transition probability distributions and the way actions are selected based on the current state, the latter of which is known as a policy. This policy is often modelled as a neural net that takes in the current state as input and outputs the action. The goal of the learning algorithm is to find a policy such that the expected cumulative cost of states over all time steps is minimized, where the expectation is taken with respect to the distribution over trajectories.
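The components listed above can be sketched in a few lines of Python. The toy environment below (a noisy one-dimensional position that the agent nudges towards zero), the two hand-written policies and all function names are assumptions made purely for illustration. The policy only ever sees sampled states and never the transition rule itself.

```python
import random


def cumulative_cost(policy, sample_initial_state, sample_next_state, cost, horizon):
    """Roll out one trajectory and sum the costs of the states visited."""
    state = sample_initial_state()
    total = 0.0
    for _ in range(horizon):
        action = policy(state)                    # policy: state -> action
        state = sample_next_state(state, action)  # unknown to the learner
        total += cost(state)
    return total


def expected_cumulative_cost(policy, num_trajectories=500, horizon=10, seed=0):
    """Monte Carlo estimate of the expectation over trajectories."""
    rng = random.Random(seed)

    def sample_initial_state():
        return rng.uniform(-1.0, 1.0)

    def sample_next_state(state, action):
        return state + action + rng.gauss(0.0, 0.1)

    costs = [
        cumulative_cost(policy, sample_initial_state, sample_next_state, abs, horizon)
        for _ in range(num_trajectories)
    ]
    return sum(costs) / len(costs)


def do_nothing(state):
    return 0.0


def corrective(state):
    return -0.5 * state


cost_do_nothing = expected_cumulative_cost(do_nothing)
cost_corrective = expected_cumulative_cost(corrective)
print(cost_do_nothing, cost_corrective)
```

A reinforcement learning algorithm would search over policies to reduce this estimate; here the two policies are fixed by hand only to show how the quantity being minimized is computed.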

Formulation as a Reinforcement Learning Problem

Recall the learning framework we introduced above, where the goal is to find the update formula that minimizes the meta-loss. Intuitively, we think of the agent as an optimization algorithm and the environment as being characterized by the family of objective functions that we’d like to learn an optimizer for. The state consists of the current iterate and some features along the optimization trajectory so far, which could be some statistic of the history of gradients, iterates and objective values. The action is the step vector that is used to update the iterate.

Under this formulation, the policy is essentially a procedure that computes the action, which is the step vector, from the state, which depends on the current iterate and the history of gradients, iterates and objective values. In other words, a particular policy represents a particular update formula. Hence, learning the policy is equivalent to learning the update formula, and hence the optimization algorithm. The initial state probability distribution is the joint distribution of the initial iterate, gradient and objective value. The state transition probability distribution characterizes what the next state is likely to be given the current state and action. Since the state contains the gradient and objective value, the state transition probability distribution captures how the gradient and objective value are likely to change for any given step vector. In other words, it encodes the likely local geometries of the objective functions of interest. Crucially, the reinforcement learning algorithm does not have direct access to this state transition probability distribution, and therefore the policy it learns avoids overfitting to the geometry of the training objective functions.

We choose the cost of a state to be the value of the objective function evaluated at the current iterate. Because reinforcement learning minimizes the cumulative cost over all time steps, it essentially minimizes the sum of objective values over all iterations, which is the same as the meta-loss.
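One possible way to express this formulation in code is sketched below. The class `OptimizationEnv`, its `reset`/`step` interface and the choice of state features (the last few raw gradients and objective values) are assumptions for illustration and may differ from the features used in the paper. The policy shown is ordinary gradient descent written as a function of the state, and the reinforcement learning algorithm that would learn the policy is not shown.

```python
class OptimizationEnv:
    """Environment whose state tracks an optimization trajectory on one objective."""

    def __init__(self, objective, gradient, x0, history_len=3):
        self.objective, self.gradient = objective, gradient
        self.x0, self.history_len = list(x0), history_len

    def _state(self):
        # State: current iterate plus recent gradients and objective values.
        return {
            "iterate": list(self.x),
            "gradients": [list(g) for g in self.grads[-self.history_len:]],
            "objective_values": list(self.values[-self.history_len:]),
        }

    def reset(self):
        self.x = list(self.x0)
        self.grads = [self.gradient(self.x)]
        self.values = [self.objective(self.x)]
        return self._state()

    def step(self, action):
        # Action: the step vector that is added to the iterate.
        self.x = [xi + ai for xi, ai in zip(self.x, action)]
        self.grads.append(self.gradient(self.x))
        self.values.append(self.objective(self.x))
        cost = self.values[-1]  # cost: objective value at the new iterate
        return self._state(), cost


def quadratic(x):
    return sum(xi * xi for xi in x)


def quadratic_grad(x):
    return [2.0 * xi for xi in x]


def gradient_descent_policy(state, lr=0.25):
    """A hand-written policy; a learned policy would replace this function."""
    return [-lr * g for g in state["gradients"][-1]]


env = OptimizationEnv(quadratic, quadratic_grad, x0=[1.0])
state = env.reset()
total_cost = 0.0
for _ in range(3):
    state, cost = env.step(gradient_descent_policy(state))
    total_cost += cost
print(total_cost)
```

The accumulated `total_cost` is the sum of objective values along the trajectory, which is the meta-loss for this one objective function.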

We trained an optimization algorithm on the problem of training a neural net on MNIST, and tested it on the problems of training different neural nets on the Toronto Faces Dataset (TFD), CIFAR-10 and CIFAR-100. These datasets bear little similarity to each other: MNIST consists of black-and-white images of handwritten digits, TFD consists of grayscale images of human faces, and CIFAR-10/100 consists of colour images of common objects in natural scenes. It is therefore unlikely that a learned optimization algorithm can get away with memorizing, say, the lower layer weights, on MNIST and still do well on TFD and CIFAR-10/100.

As shown, the optimization algorithm trained using our approach on MNIST (shown in light red) generalizes to TFD, CIFAR-10 and CIFAR-100 and outperforms other optimization algorithms.

To understand the behaviour of optimization algorithms learned using our approach, we trained an optimization algorithm on two-dimensional logistic regression problems and visualized its trajectory in the space of the parameters. It is worth noting that the behaviours of optimization algorithms in low dimensions and high dimensions may be different, and so the visualizations below may not be indicative of the behaviours of optimization algorithms in high dimensions. However, they provide some useful intuitions about the kinds of behaviour that can be learned.

The plots above show the optimization trajectories followed by various algorithms on two different unseen logistic regression problems. Each arrow represents one iteration of an optimization algorithm. As shown, the algorithm learned using our approach (shown in light red) takes much larger steps compared to other algorithms. In the first example, because the learned algorithm takes large steps, it overshoots after two iterations, but does not oscillate and instead takes smaller steps to recover. In the second example, due to vanishing gradients, traditional optimization algorithms take small steps and therefore converge slowly. On the other hand, the learned algorithm takes much larger steps and converges faster.

More details can be found in our papers:

Learning to Optimize. Ke Li, Jitendra Malik. arXiv:1606.01885, 2016 and International Conference on Learning Representations (ICLR), 2017

Learning to Optimize Neural Nets. Ke Li, Jitendra Malik. arXiv:1703.00441, 2017

I’d like to thank Jitendra Malik for his valuable feedback.

- Perspective

- Published: 25 January 2022

Intelligent problem-solving as integrated hierarchical reinforcement learning

- Manfred Eppe (ORCID: orcid.org/0000-0002-5473-3221),

- Christian Gumbsch (ORCID: orcid.org/0000-0003-2741-6551),

- Matthias Kerzel,

- Phuong D. H. Nguyen,

- Martin V. Butz (ORCID: orcid.org/0000-0002-8120-8537) &

- Stefan Wermter

Nature Machine Intelligence volume 4, pages 11–20 (2022)

- Cognitive control

- Computational models

- Computer science

- Learning algorithms

- Problem solving

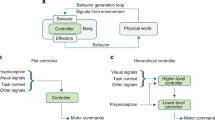

According to cognitive psychology and related disciplines, the development of complex problem-solving behaviour in biological agents depends on hierarchical cognitive mechanisms. Hierarchical reinforcement learning is a promising computational approach that may eventually yield comparable problem-solving behaviour in artificial agents and robots. However, so far, the problem-solving abilities of many human and non-human animals are clearly superior to those of artificial systems. Here we propose steps to integrate biologically inspired hierarchical mechanisms to enable advanced problem-solving skills in artificial agents. We first review the literature in cognitive psychology to highlight the importance of compositional abstraction and predictive processing. Then we relate the gained insights with contemporary hierarchical reinforcement learning methods. Interestingly, our results suggest that all identified cognitive mechanisms have been implemented individually in isolated computational architectures, raising the question of why there exists no single unifying architecture that integrates them. As our final contribution, we address this question by providing an integrative perspective on the computational challenges to develop such a unifying architecture. We expect our results to guide the development of more sophisticated cognitively inspired hierarchical machine learning architectures.


Deci, E. L. & Ryan, R. M. Self-determination theory and the facilitation of intrinsic motivation. Am. Psychol. 55 , 68–78 (2000).

Friston, K. et al. Active inference and epistemic value. Cogn. Neurosci. 6 , 187–214 (2015).

Berlyne, D. E. Curiosity and exploration. Science 153 , 25–33 (1966).

Loewenstein, G. The psychology of curiosity: a review and reinterpretation. Psychol. Bull. 116 , 75–98 (1994).

Oudeyer, P.-Y., Kaplan, F. & Hafner, V. V. Intrinsic motivation systems for autonomous mental development. In IEEE Transactions on Evolutionary Computation (eds. Coello, C. A. C. et al.) Vol. 11, 265–286 (IEEE, 2007).

Pisula, W. Play and exploration in animals—a comparative analysis. Polish Psychol. Bull. 39 , 104–107 (2008).

Jeannerod, M. Mental imagery in the motor context. Neuropsychologia 33 , 1419–1432 (1995).

Kahnemann, D. & Tversky, A. in Judgement under Uncertainty : Heuristics and Biases (eds Kahneman, D. et al.) Ch. 14, 201–208 (Cambridge Univ. Press, 1982).

Wells, G. L. & Gavanski, I. Mental simulation of causality. J. Personal. Social Psychol. 56 , 161–169 (1989).

Taylor, S. E., Pham, L. B., Rivkin, I. D. & Armor, D. A. Harnessing the imagination: mental simulation, self-regulation and coping. Am. Psychol. 53 , 429–439 (1998).

Kaplan, F. & Oudeyer, P.-Y. in Embodied Artificial Intelligence , Lecture Notes in Computer Science Vol. 3139 (eds Iida, F. et al.) 259–270 (Springer, 2004).

Schmidhuber, J. Formal theory of creativity, fun, and intrinsic motivation. IEEE Trans. Auton. Mental Dev. 2 , 230–247 (2010).

Friston, K., Mattout, J. & Kilner, J. Action understanding and active inference. Biol. Cybern. 104 , 137–160 (2011).

Oudeyer, P.-Y. Computational theories of curiosity-driven learning. In The New Science of Curiosity (ed. Goren Gordon), 43-72 (Nova Science Publishers, 2018); https://arxiv.org/abs/1802.10546

Colombo, M. & Wright, C. First principles in the life sciences: the free-energy principle, organicism and mechanism. Synthese 198 , 3463–3488 (2021).

Article MathSciNet Google Scholar

Huang, Y. & Rao, R. P. Predictive coding. WIREs Cogn. Sci. 2 , 580–593 (2011).

Friston, K. The free-energy principle: a unified brain theory? Nat. Rev. Neurosci. 11 , 127–138 (2010).

Knill, D. C. & Pouget, A. The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci. 27 , 712–719 (2004).

Clark, A. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav. Brain Sci. 36 , 181–204 (2013).

Clark, A. Surfing Uncertainty : Prediction , Action and the Embodied Mind (Oxford Univ. Press, 2016).

Zacks, J. M., Speer, N. K., Swallow, K. M., Braver, T. S. & Reyonolds, J. R. Event perception: a mind/brain perspective. Psychol. Bull. 133 , 273–293 (2007).

Eysenbach, B., Ibarz, J., Gupta, A. & Levine, S. Diversity is all you need: learning skills without a reward function. In International Conference on Learning Representations (ICLR, 2019).

Frans, K., Ho, J., Chen, X., Abbeel, P. & Schulman, J. Meta learning shared hierarchies. In Proc. International Conference on Learning Representations https://openreview.net/pdf?id=SyX0IeWAW (ICLR, 2018).

Heess, N. et al. Learning and transfer of modulated locomotor controllers. Preprint at https://arxiv.org/abs/1610.05182 (2016).

Jiang, Y., Gu, S., Murphy, K. & Finn, C. Language as an abstraction for hierarchical deep reinforcement learning. In Neural Information Processing Systems ( NeurIPS ) (eds. Wallach, H. et al.) 9414–9426 (ACM, 2019).

Li, A. C., Florensa, C., Clavera, I. & Abbeel, P. Sub-policy adaptation for hierarchical reinforcement learning. In Proc. International Conference on Learning Representations https://openreview.net/forum?id=ByeWogStDS (ICLR, 2020).

Qureshi, A. H. et al. Composing task-agnostic policies with deep reinforcement learning. In Proc. International Conference on Learning Representations https://openreview.net/forum?id=H1ezFREtwH (ICLR, 2020).

Sharma, A., Gu, S., Levine, S., Kumar, V. & Hausman, K. Dynamics-aware unsupervised discovery of skills. In Proc. International Conference on Learning Representations https://openreview.net/forum?id=HJgLZR4KvH (ICLR, 2020).

Tessler, C., Givony, S., Zahavy, T., Mankowitz, D. J. & Mannor, S. A deep hierarchical approach to lifelong learning in minecraft. In Proc. 31st AAAI Conference on Artificial Intelligence 1553–1561 (AAAI, 2017).

Vezhnevets, A. et al. Strategic attentive writer for learning macro-actions. In Neural Information Processing Systems ( NIPS ) (eds. Lee, D. et al.) 3494–3502 (NIPS, 2016).

Devin, C., Gupta, A., Darrell, T., Abbeel, P. & Levine, S. Learning modular neural network policies for multi-task and multi-robot transfer. In Proc. International Conference on Robotics and Automation ( ICRA ) (eds. Okamura, A. et al.) 2169–2176 (IEEE, 2017).

Hejna, D. J., Abbeel, P. & Pinto, L. Hierarchically decoupled morphological transfer. In Proc. International Conference on Machine Learning ( ICML ) (eds. Daumé III, H. & Singh, A.) 11409–11420 (PMLR, 2020).

Hamrick, J. B. et al. On the role of planning in model-based deep reinforcement learning. In Proc. International Conference on Learning Representations https://openreview.net/pdf?id=IrM64DGB21 (ICLR, 2021).

Sutton, R. S. Integrated architectures for learning, planning, and reacting based on approximating dynamic programming. In Proc. 7th International Conference on Machine Learning ( ICML ) (eds. Porter, B. W. & Mooney, R. J.) 216–224 (Morgan Kaufmann, 1990).

Nau, D. et al. SHOP2: an HTN planning system. J. Artif. Intell. Res. 20 , 379–404 (2003).

Article MATH Google Scholar

Lyu, D., Yang, F., Liu, B. & Gustafson, S. SDRL: interpretable and data-efficient deep reinforcement learning leveraging symbolic planning. In Proc. AAAI Conference on Artificial Intelligence Vol. 33, 2970–2977 (AAAI, 2019).

Ma, A., Ouimet, M. & Cortés, J. Hierarchical reinforcement learning via dynamic subspace search for multi-agent planning. Auton. Robot. 44 , 485–503 (2020).

Bacon, P.-L., Harb, J. & Precup, D. The option-critic architecture. In Proc. 31st AAAI Conference on Artificial Intelligence 1726–1734 (AAAI, 2017).

Dietterich, T. G. State abstraction in MAXQ hierarchical reinforcement learning. In Advances in Neural Information Processing Systems ( NIPS ) (eds. Solla, S. et al.) Vol. 12, 994–1000 (NIPS, 1999).

Kulkarni, T. D., Narasimhan, K. R., Saeedi, A. & Tenenbaum, J. B. Hierarchical deep reinforcement learning: integrating temporal abstraction and intrinsic motivation. In Neural Information Processing Systems ( NIPS ) (eds. Lee, D. et al.) 3675–3683 (NIPS, 2016).

Shankar, T., Pinto, L., Tulsiani, S. & Gupta, A. Discovering motor programs by recomposing demonstrations. In Proc. International Conference on Learning Representations https://openreview.net/attachment?id=rkgHY0NYwr&name=original_pdf (ICLR, 2020).

Vezhnevets, A. S., Wu, Y. T., Eckstein, M., Leblond, R. & Leibo, J. Z. Options as responses: grounding behavioural hierarchies in multi-agent reinforcement learning. In Proc. International Conference on Machine Learning ( ICML ) (eds. Daumé III, H. & Singh, A.) 9733–9742 (PMLR, 2020).

Ghazanfari, B., Afghah, F. & Taylor, M. E. Sequential association rule mining for autonomously extracting hierarchical task structures in reinforcement learning. IEEE Access 8 , 11782–11799 (2020).

Levy, A., Konidaris, G., Platt, R. & Saenko, K. Learning multi-level hierarchies with hindsight. In Proc. International Conference on Learning Representations https://openreview.net/pdf?id=ryzECoAcY7 (ICLR, 2019).

Nachum, O., Gu, S., Lee, H. & Levine, S. Data-efficient hierarchical reinforcement learning. In Proc. 32nd International Conference on Neural Information Processing Systems (NIPS) (eds. Bengio, S. et al.) 3307–3317 (NIPS, 2018).

Rafati, J. & Noelle, D. C. Learning representations in model-free hierarchical reinforcement learning. In Proc. 33rd AAAI Conference on Artificial Intelligence 10009–10010 (AAAI, 2019).

Röder, F., Eppe, M., Nguyen, P. D. H. & Wermter, S. Curious hierarchical actor-critic reinforcement learning. In Proc. International Conference on Artificial Neural Networks ( ICANN ) (eds. Farkaš, I. et al.) 408–419 (Springer, 2020).

Zhang, T., Guo, S., Tan, T., Hu, X. & Chen, F. Generating adjacency-constrained subgoals in hierarchical reinforcement learning. In Neural Information Processing Systems ( NIPS ) (eds. Larochelle, H. et al.) 21579-21590 (NIPS, 2020).

Lample, G. & Chaplot, D. S. Playing FPS games with deep reinforcement learning. In Proc. 31st AAAI Conference on Artificial Intelligence 2140–2146 (AAAI, 2017).

Vezhnevets, A. S. et al. FeUdal networks for hierarchical reinforcement learning. In Proc. 34th International Conference on Machine Learning ( ICML ) (eds. Precup, D. & Teh, Y. W.) Vol. 70, 3540–3549 (PMLR, 2017).

Wulfmeier, M. et al. Compositional Transfer in Hierarchical Reinforcement Learning. In Robotics: Science and System XVI (RSS) (eds. Toussaint M. et al.) (Robotics: Science and Systems Foundation, 2020); https://arxiv.org/abs/1906.11228

Yang, Z., Merrick, K., Jin, L. & Abbass, H. A. Hierarchical deep reinforcement learning for continuous action control. IEEE Trans. Neural Netw. Learn. Syst. 29 , 5174–5184 (2018).

Toussaint, M., Allen, K. R., Smith, K. A. & Tenenbaum, J. B. Differentiable physics and stable modes for tool-use and manipulation planning. In Proc. Robotics : Science and Systems XIV ( RSS ) (eds. Kress-Gazit, H. et al.) https://ipvs.informatik.uni-stuttgart.de/mlr/papers/18-toussaint-RSS.pdf (Robotics: Science and Systems Foundation, 2018).

Akrour, R., Veiga, F., Peters, J. & Neumann, G. Regularizing reinforcement learning with state abstraction. In Proc. IEEE / RSJ International Conference on Intelligent Robots and Systems ( IROS ) 534–539 (IEEE, 2018).

Schaul, T. & Ring, M. Better generalization with forecasts. In Proc. 23rd International Joint Conference on Artificial Intelligence ( IJCAI ) (ed. Rossi, F.) 1656–1662 (AAAI, 2013).

Colas, C., Akakzia, A., Oudeyer, P.-Y., Chetouani, M. & Sigaud, O. Language-conditioned goal generation: a new approach to language grounding for RL. Preprint at https://arxiv.org/abs/2006.07043 (2020).

Blaes, S., Pogancic, M. V., Zhu, J. J. & Martius, G. Control what you can: intrinsically motivated task-planning agent. Neural Inf. Process. Syst. 32 , 12541–12552 (2019).

Haarnoja, T., Hartikainen, K., Abbeel, P. & Levine, S. Latent space policies for hierarchical reinforcement learning. In Proc. International Conference on Machine Learning ( ICML ) (eds. Dy, J. & Krause, A.) Vol. 4, 2965–2975 (PMLR, 2018).

Rasmussen, D., Voelker, A. & Eliasmith, C. A neural model of hierarchical reinforcement learning. PLoS ONE 12 , e0180234 (2017).

Riedmiller, M. et al. Learning by playing—solving sparse reward tasks from scratch. In Proc. International Conference on Machine Learning ( ICML ) (eds. Dy, J. & Krause, A.) Vol. 10, 6910–6919 (PMLR, 2018).

Yang, F., Lyu, D., Liu, B. & Gustafson, S. PEORL: integrating symbolic planning and hierarchical reinforcement learning for robust decision-making. In Proc. 27th International Joint Conference on Artificial Intelligence ( IJCAI ) (ed. Lang, J.) 4860–4866 (IJCAI, 2018).

Machado, M. C., Bellemare, M. G. & Bowling, M. A Laplacian framework for option discovery in reinforcement learning. In Proc. International Conference on Machine Learning (ICML) (eds. Precup, D. & Teh, Y. W.) Vol. 5, 3567–3582 (PMLR, 2017).

Pathak, D., Agrawal, P., Efros, A. A. & Darrell, T. Curiosity-driven exploration by self-supervised prediction. In Proc. 34th International Conference on Machine Learning ( ICML ) (eds. Precup, D. & Teh, Y. W.) 2778–2787 (PMLR, 2017).

Schillaci, G. et al. Intrinsic motivation and episodic memories for robot exploration of high-dimensional sensory spaces. Adaptive Behav. 29 549–566 (2020).

Colas, C., Fournier, P., Sigaud, O., Chetouani, M. & Oudeyer, P.-Y. CURIOUS: intrinsically motivated modular multi-goal reinforcement learning. In Proc. International Conference on Machine Learning ( ICML ) (eds. Chaudhuri, K. & Salakhutdinov, R.) 1331–1340 (PMLR, 2019).

Hafez, M. B., Weber, C., Kerzel, M. & Wermter, S. Improving robot dual-system motor learning with intrinsically motivated meta-control and latent-space experience imagination. Robot. Auton. Syst. 133 , 103630 (2020).

Yamamoto, K., Onishi, T. & Tsuruoka, Y. Hierarchical reinforcement learning with abductive planning. In Proc. ICML / IJCAI / AAMAS 2018 Workshop on Planning and Learning ( PAL-18 ) (2018).

Wu, B., Gupta, J. K. & Kochenderfer, M. J. Model primitive hierarchical lifelong reinforcement learning . In Proc. International Joint Conference on Autonomous Agents and Multiagent Systems ( AAMAS ) (eds. Agmon, N. et al.) Vol. 1, 34–42 (IFAAMAS, 2019).

Li, Z., Narayan, A. & Leong, T. Y. An efficient approach to model-based hierarchical reinforcement learning. In Proc. 31st AAAI Conference on Artificial Intelligence 3583–3589 (AAAI, 2017).

Hafner, D., Lillicrap, T. & Norouzi, M. Dream to control: learning behaviors by latent imagination. In Proc. International Conference on Learning Representations https://openreview.net/pdf?id=S1lOTC4tDS (ICLR, 2020).

Deisenroth, M. P., Rasmussen, C. E. & Fox, D. Learning to control a low-cost manipulator using data-efficient reinforcement learning. In Robotics : Science and Systems VII ( RSS ) (eds. Durrant-Whyte, H. et al.) 57–64 (Robotics: Science and Systems Foundation, 2011).

Ha, D. & Schmidhuber, J. Recurrent world models facilitate policy evolution. In Proc. 32nd International Conference on Neural Information Processing Systems (NeurIPS) (eds. Bengio, S. et al.) 2455–2467 (NIPS, 2018).

Battaglia, P. W. et al. Relational inductive biases, deep learning and graph networks. Preprint at https://arxiv.org/abs/1806.01261 (2018).

Andrychowicz, M. et al. Hindsight experience replay. In Proc. Neural Information Processing Systems ( NIPS ) (eds. Guyon I. et al.) 5048–5058 (NIPS, 2017); https://papers.nips.cc/paper/7090-hindsight-experience-replay.pdf

Schwartenbeck, P. et al. Computational mechanisms of curiosity and goal-directed exploration. eLife 8 , e41703 (2019).

Haarnoja, T., Zhou, A., Abbeel, P. & Levine, S. Soft actor-critic: off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proc. International Conference on Machine Learning ( ICML ) (eds. Dy, J. & Krause, A.) 1861–1870 (PMLR, 2018).

Yu, A. J. & Dayan, P. Uncertainty, neuromodulation and attention. Neuron 46 , 681–692 (2005).

Baldwin, D. A. & Kosie, J. E. How does the mind render streaming experience as events? Top. Cogn. Sci. 13 , 79–105 (2021).

Download references

Acknowledgements

We acknowledge funding from the DFG (projects IDEAS, LeCAREbot, TRR169, SPP 2134, RTG 1808 and EXC 2064/1), the Humboldt Foundation and Max Planck Research School IMPRS-IS.

Author information

Manfred Eppe

Present address: Hamburg University of Technology, Hamburg, Germany

Authors and Affiliations

Universität Hamburg, Hamburg, Germany

Manfred Eppe, Matthias Kerzel, Phuong D. H. Nguyen & Stefan Wermter

University of Tübingen, Tübingen, Germany

Christian Gumbsch & Martin V. Butz

Max Planck Institute for Intelligent Systems, Tübingen, Germany

Christian Gumbsch

Corresponding author

Correspondence to Manfred Eppe .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Supplementary information

Supplementary Boxes 1–6 and Table 1.

About this article

Cite this article.

Eppe, M., Gumbsch, C., Kerzel, M. et al. Intelligent problem-solving as integrated hierarchical reinforcement learning. Nat Mach Intell 4 , 11–20 (2022). https://doi.org/10.1038/s42256-021-00433-9

Received : 18 December 2020

Accepted : 07 December 2021

Published : 25 January 2022

Issue Date : January 2022

DOI : https://doi.org/10.1038/s42256-021-00433-9

What is Reinforcement Learning in AI?

- Written by John Terra

- Updated on May 21, 2024

Decision-making is often a tricky thing. If you make the wrong decision, you inevitably suffer consequences. Eventually, through experience, we learn to take actions that offer the best outcomes while avoiding negative results. Machines can be trained to do this, too. It’s called reinforcement learning.

This article answers the question, “What is reinforcement learning in AI?” We will define the term and show how reinforcement learning works, including its uses, benefits, and challenges. We will also explore commonly used terms in reinforcement learning and their pros and cons. We will round things out by speculating about its future and sharing an online AI and machine learning bootcamp you can take to boost your career in this exciting field.

So, let’s begin. What is reinforcement learning in AI?

What is Reinforcement Learning?

Reinforcement learning (RL) is a sub-category of Machine Learning that trains a model via trial and error to learn optimal behavior and devise the optimal solution for a problem by making a sequence of decisions.

In essence, reinforcement learning is the science of decision-making: it optimizes AI-driven systems by imitating the way natural intelligence learns from experience, without relying on human supervision or explicitly programmed behavior.

In RL, the training data is not supplied up front; the system generates it through trial and error. The algorithm learns from the outcomes of its actions and uses them to decide what to do next. After each action, it receives feedback indicating whether the choice was good, neutral, or bad.

So, reinforcement learning in AI is an autonomous, self-teaching system that learns by trial and error without humans getting involved.

Essential Terms in Reinforcement Learning

Here are some terms often encountered when working with reinforcement learning.

- Agent. The agent is the model being trained through reinforcement learning; it is the learner and decision-maker.

- Environment. The environment is the setting the agent interacts with and learns to act in.

- Action. An action is a step the agent can take; the set of all possible actions is called the action space.

- State. The state is the current position or condition of the environment, as reported back to the agent.

- Reward. The reward is a numerical score the agent receives after an action. It appraises that action and tells the agent whether it is moving in the right direction.

- Policy. The policy determines how the agent behaves at any time by mapping the present state to the action to take.
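
To make these terms concrete, here is a minimal Python sketch of the loop in which an agent and an environment interact. Everything in it is a hypothetical illustration rather than the API of any RL library: the `Corridor` environment, its reward of 1.0 at the goal cell, and the `random_policy` function are assumptions chosen to keep the example small.

```python
import random


class Corridor:
    """Hypothetical environment: cells 0..length-1, the goal is the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        """Start a new episode and return the initial state."""
        self.state = 0
        return self.state

    def step(self, action):
        """Apply an action (0 = left, 1 = right); return (state, reward, done)."""
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward only when the goal is reached
        return self.state, reward, done


def random_policy(state, rng):
    """A policy maps a state to an action; this one ignores the state."""
    return rng.choice([0, 1])


def run_episode(env, policy, rng, max_steps=100):
    """Let the agent act until the episode ends; return the total reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state, rng)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward
```

Calling `run_episode(Corridor(), random_policy, random.Random(0))` plays one episode with an untrained agent. Training, covered in the workflow below, amounts to replacing `random_policy` with a policy that improves from the rewards it observes.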

How Does Reinforcement Learning Work?

The reinforcement learning workflow involves training the agent while keeping the following key factors in mind:

- Environment

- Rewards

- Agent

- Training and validation

- Policy implementation

Let’s understand each one in detail.

Step 1: Define and create the environment

The reinforcement learning process starts by defining the environment in which the agent operates. The environment can be an actual physical system or a simulation. Once you have determined the environment, you can begin experimenting with the RL process.

Step 2: Specify rewards

In the next phase, you must define the reward for the agent. The reward acts as the agent’s performance metric and lets it evaluate how well its actions serve the goal of the task. Designing an appropriate reward often takes several experimental iterations before you settle on the right one for a specific task.
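
As an illustration of why reward design takes iteration, the sketch below shows two candidate reward functions for the same hypothetical task: reaching a goal cell on a line of numbered cells. The function names and the `step_penalty` value are assumptions made for this example. The sparse version gives feedback only at the goal, while the shaped version also penalizes distance from the goal, which gives the agent a signal on every step.

```python
def sparse_reward(state, goal):
    """Reward only when the goal is reached; every other step returns 0."""
    return 1.0 if state == goal else 0.0


def shaped_reward(state, goal, step_penalty=0.01):
    """Reward the goal, and otherwise penalize in proportion to the distance."""
    if state == goal:
        return 1.0
    return -step_penalty * abs(goal - state)
```

Which variant works better depends on the task. A shaped reward can speed up learning, but a badly chosen one can also steer the agent toward behavior you did not intend, which is why this step is usually revisited.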

Step 3: Define the agent

Once you finalize the environment and rewards, you can define and create the agent, which consists of the policy and the reinforcement learning training algorithm. The process typically includes these two steps:

- Using the appropriate lookup tables or neural networks to represent the policy

- Selecting the suitable RL training algorithm
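
The lookup-table option can be sketched in a few lines of Python. The table below stores one estimated value per state-action pair (often called a Q-table), and the policy reads its decision from that table. The helper names and the epsilon-greedy exploration rule are assumptions for illustration; a neural network would replace the table when the state space is too large to enumerate.

```python
import random


def make_q_table(n_states, n_actions):
    """One row per state, one estimated value per action, all starting at 0."""
    return [[0.0] * n_actions for _ in range(n_states)]


def greedy_action(q_table, state):
    """Pick the action with the highest estimated value (first one on ties)."""
    row = q_table[state]
    return max(range(len(row)), key=row.__getitem__)


def epsilon_greedy(q_table, state, epsilon, rng):
    """Explore with probability epsilon, otherwise act greedily."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_table[state]))
    return greedy_action(q_table, state)
```

With `epsilon` set to 0 the policy always exploits what it has learned; a small positive value keeps the agent trying alternatives during training.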

Step 4: Train and validate the agent

Next, you train and validate the agent to fine-tune the policy. If training stalls, revisit the reward structure and the policy architecture, then continue training. Beware: reinforcement learning training is time-intensive and can take anywhere from minutes to days, depending on the application. For complex applications, you can speed up training by running it in parallel across several GPUs, CPUs, and machines.
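
A training run can be sketched with tabular Q-learning, one common RL training algorithm (the article does not prescribe a specific one). The five-cell corridor task, the hyperparameter values, and all function names below are assumptions chosen so that the example finishes in well under a second.

```python
import random

N_STATES, GOAL = 5, 4   # hypothetical corridor: cells 0..4, goal at cell 4
ACTIONS = (-1, +1)      # action 0 moves left, action 1 moves right


def step(state, action):
    """Environment dynamics: return (next_state, reward, done)."""
    next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def choose_action(q_row, epsilon, rng):
    """Epsilon-greedy choice with random tie-breaking among the best actions."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    best = max(q_row)
    return rng.choice([i for i, v in enumerate(q_row) if v == best])


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Run Q-learning and return the learned table of action values."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap the episode length
            action = choose_action(q[state], epsilon, rng)
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best future value
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


def greedy_policy(q):
    """Validation helper: the best-known action for every state."""
    return [max((0, 1), key=lambda a: row[a]) for row in q]
```

Validation here is simply inspecting `greedy_policy(train())`: for every non-goal cell the learned action should be 1 (move right). In a realistic application you would instead measure the average reward over held-out episodes.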

Step 5: Implement the policy

The policy serves as the decision-making component of an RL-enabled system. It can be deployed as C, C++, or CUDA code, for example. When you implement the policy, you may need to revisit the earlier stages of the RL workflow if it does not produce optimal results or decisions. The following factors may need fine-tuning, followed by retraining the agent:

- Action/state signal detection

- Environmental variables

- Policy framework

- RL algorithm configuration

- Reward definition

- Training structure

The Various Uses for Reinforcement Learning

Reinforcement learning is designed to maximize the rewards agents earn as they accomplish a specific task. Consequently, RL benefits several real-life applications and scenarios, including self-driving cars, surgery, robotics, and AI bots.

Here are some critical reinforcement learning uses in our daily lives that shape the artificial intelligence field:

- Addressing energy consumption problems. RL agents can control the physical parameters surrounding an organization’s servers, such as cooling, without prior knowledge of server conditions. Multiple sensors collect power, temperature, and other readings, which are used to train a deep neural network. The trained agent then helps cool the data center and regulate overall energy consumption.

- Controlling self-driving cars. To operate autonomously in a city environment, vehicles need substantial support from ML models that simulate the scenarios the vehicle may encounter. Reinforcement learning is a superstar in these cases, as these models need training in a dynamic environment where possible pathways are explored and ranked through the learning process. Learning from experience makes reinforcement learning a strong choice for self-driving cars that must quickly make good decisions. RL methods can handle multiple variables at once, such as navigating traffic, managing driving zones, monitoring vehicle speed, and avoiding accidents.

- Gaming. Reinforcement learning agents learn and adapt to gaming environments through experience, achieving the desired results by performing a sequence of steps. For example, DeepMind’s AlphaGo defeated European Go champion Fan Hui in October 2015 and went on to beat top professional Lee Sedol in March 2016. Beyond game-playing systems like AlphaGo that employ deep neural networks, reinforcement learning agents are also used for bug detection and game testing.

- Healthcare. Reinforcement learning is valuable in healthcare as DTRs (Dynamic Treatment Regimes) have aided medical professionals in handling patients’ health. DTRs employ a sequence of decisions to generate a final solution. The sequential process typically involves these steps:

- Determining the patient’s current status.

- Deciding the type of treatment.

- Discovering the appropriate medication dosage based on the patient’s condition.

- Deciding dosage timings and other related variables.

Doctors can use this sequence of decisions to fine-tune their treatment strategies for complex diseases such as cancer or diabetes. In addition, DTRs can help provide treatments at the right time, avoiding complications that may arise from delayed action.

- Marketing. Reinforcement learning helps organizations maximize customer growth and streamline business strategies to achieve long-term goals. RL in the marketing arena helps professionals make personalized recommendations to users by predicting behavior, choices, and reactions toward specific products or services. Trained bots also consider variables such as evolving customer mindset and dynamically learning changing user requirements based on behavior. So, reinforcement learning lets businesses target quality recommendations, maximizing profit margins.

- Robotics. In robotics, RL is used to train robots to mimic human behavior while performing a given task. However, today’s robots lack social, moral, and common sense while accomplishing these jobs. In these cases, RL can be combined with deep learning (deep reinforcement learning) to achieve better results. For example, deep RL is vital for warehouse robots that navigate the floor while handling tasks such as delivering critical product parts, defect inspection, packaging, and assembly. Additionally, RL models can be trained on multimodal data to identify cracks, scratches, missing parts, and overall damage to warehouse machines by scanning images containing billions of data points. Deep RL also helps in inventory management, since agents can be trained to spot empty containers and restock them immediately.

- Traffic signal controls. Reinforcement learning offers a possible solution to increased urbanization and rising automobile use, as RL models introduce traffic light control based on an area’s traffic status. The model considers traffic from different directions, then adapts, learns, and adjusts traffic light signals.

Reinforcement Learning vs. Supervised Learning vs. Unsupervised Learning

The below table illustrates the differences between the three primary machine learning sub-branches.

Reinforcement Learning Challenges

Although reinforcement learning algorithms have successfully solved complex problems in many simulated environments, real-world adoption has been slow. Here are some of the implementation obstacles RL faces:

- An RL agent requires extensive experience. RL methods generate training data autonomously through interaction with the environment, so the data collection rate is limited by the environment’s dynamics. Environments with high latency therefore slow down learning. In addition, complex environments with high-dimensional state spaces require extensive exploration before a good solution can be found.

- Delayed rewards. Learning agents can trade off short-term rewards for long-term gains. Although this foundational principle makes reinforcement learning useful, it also makes it challenging for the agent to discover optimal policies. This is particularly true in environments where the agent must take many sequential actions before the outcome is known. Assigning credit to earlier actions becomes difficult and introduces significant variance during training.

- A lack of interpretability. Once the reinforcement learning agent has learned a policy and is deployed, it acts based on experience. The reasons for its actions might be hidden from an outside observer. This lack of interpretability makes it harder to build trust between the agent and the observer.
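
The delayed-reward problem described above is usually handled by scoring each action with the discounted sum of the rewards that follow it. The sketch below computes that quantity; the function name and the default discount factor `gamma` are assumptions for illustration.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ... for each step t."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each step reuses the return of the step after it.
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# A reward that arrives only at the last step is credited, with decay,
# to the earlier actions that led to it.
print(discounted_returns([0.0, 0.0, 1.0], gamma=0.5))  # prints [0.25, 0.5, 1.0]
```

The longer the gap between an action and its eventual reward, the smaller and noisier this credit becomes, which is the source of the training variance mentioned in the list.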

The Advantages and Disadvantages of Reinforcement Learning

Reinforcement learning has its share of pros and cons. For example:

Advantages of Reinforcement Learning

- Reinforcement learning can be employed to tackle a diverse array of problems, including decision-making, control, and optimization

- Reinforcement learning can solve complicated problems that conventional problem-solving techniques can’t otherwise solve

- RL models can correct errors that happen during a training process

- Reinforcement learning can handle non-deterministic environments, meaning the actions’ outcomes aren’t always predictable. This is especially helpful in real-world applications where the environment is uncertain or could change over time.

- Reinforcement learning is a flexible problem-solving approach that can improve performance when used in conjunction with additional machine learning techniques, like deep learning.

Disadvantages of Reinforcement Learning

- There are better choices than reinforcement learning for solving simple problems

- Reinforcement learning requires a lot of data and computation

- Reinforcement learning relies heavily on the quality of the reward function. The agent might not learn the desired behavior if the reward function is poorly designed

- Reinforcement learning can be challenging to debug and interpret. It isn’t always apparent why a given agent acts in a certain way, which can make diagnosing and resolving issues more difficult.

What is the Future of Reinforcement Learning?

Deep reinforcement learning employs deep neural networks to model the value function (called “value-based”), the agent’s policy (known as “policy-based”), or both (“actor-critic”). Before the widespread success of deep neural networks, data scientists had to engineer complex features to train an RL algorithm, which reduced learning capacity and limited reinforcement learning to simple environments. With deep learning, however, models can be built using millions of trainable weights, freeing the user from tedious feature engineering. Instead, relevant features are learned automatically during training, enabling the agent to learn good policies in complex environments.
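
To show how the value-based and policy-based parts fit together in an actor-critic method, here is a minimal single-state sketch. It is a simplification under stated assumptions: plain Python lists stand in for the neural networks, the policy is a softmax over action preferences, and the function names and learning rates are hypothetical.

```python
import math


def softmax(preferences):
    """Turn action preferences into a probability distribution."""
    highest = max(preferences)
    exps = [math.exp(p - highest) for p in preferences]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic_update(prefs, value, action, reward, next_value, done,
                        gamma=0.99, actor_lr=0.1, critic_lr=0.1):
    """One update for a single state; returns (new_prefs, new_value, td_error)."""
    # Critic (value-based part): how much better or worse than expected?
    target = reward + (0.0 if done else gamma * next_value)
    td_error = target - value
    # Actor (policy-based part): shift probability toward the taken action
    # when td_error is positive and away from it when td_error is negative.
    probs = softmax(prefs)
    new_prefs = [
        p + actor_lr * td_error * ((1.0 if i == action else 0.0) - probs[i])
        for i, p in enumerate(prefs)
    ]
    new_value = value + critic_lr * td_error
    return new_prefs, new_value, td_error
```

In a deep RL system the lists `prefs` and `value` would be the outputs of neural networks, and the same two error signals would be backpropagated through their weights.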

Traditionally, reinforcement learning in AI is applied to one task at a time, with each task learned by a separate RL agent. These agents don’t share knowledge, which makes learning complex behaviors, like driving a car, slow and inefficient. Problems that share a common information source, have related underlying structures, and are interdependent can be learned far more efficiently when multiple agents work together. A3C (Asynchronous Advantage Actor-Critic) is an exciting development in this area, where multiple agents concurrently learn related tasks. This multi-task learning scenario is gradually driving RL closer to Artificial General Intelligence (AGI), where meta-agents learn how to learn, making problem-solving more autonomous than ever.

Q: What are some examples of reinforcement learning in AI? A: Examples include:

- Self-driving cars

- Industry automation

- Improved Natural Language Processing (NLP)

Q: What are the benefits of reinforcement learning in AI? A: Benefits include:

- Quicker understanding

- Reduced expenses

- Better decision making

Q: What is the importance of reinforcement learning? A: This technology lets computers learn from vast data sets faster and with better results, a vital function in our increasingly data-saturated world.

Monte Carlo Methods in Reinforcement Learning

Recall that when using Dynamic Programming algorithms to solve RL problems, we made an assumption about the complete knowledge of the environment. With Monte Carlo methods, we only require experience - sample sequences of states, actions, and rewards from simulated or real interaction with an environment.

Monte Carlo Methods #

Monte Carlo, named after a casino in Monaco, refers to simulating complex probabilistic events using simple random events, such as tossing a pair of dice to simulate the casino’s overall business model.

Monte Carlo methods have been used in several different tasks:

- Simulating a system and its probability distribution \begin{equation} x\sim\pi(x) \end{equation}

- Estimating a quantity through Monte Carlo integration \begin{equation} c=\mathbb{E}_\pi\left[f(x)\right]=\int\pi(x)f(x)\,dx \end{equation}

- Optimizing a target function to find its modes (maxima or minima) \begin{equation} x^*=\text{argmax}\,\pi(x) \end{equation}

- Learning parameters from a training set to optimize some loss function, such as maximum likelihood estimation from a set of examples $\{x_i,i=1,2,\dots,M\}$ \begin{equation} \Theta^*=\text{argmax}\sum_{i=1}^{M}\log p(x_i;\Theta) \end{equation}

- Visualizing the energy landscape of a target function.
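As an illustration of the Monte Carlo integration item above, here is a minimal Python sketch that estimates $c=\mathbb{E}_\pi\left[f(x)\right]$ by averaging $f$ over samples drawn from $\pi$. The helper name `mc_expectation`, the choice of $\pi$ as a standard normal, and $f(x)=x^2$ (whose true expectation is $1$) are assumptions made only for this example.

```python
import random


def mc_expectation(f, sampler, n_samples):
    """Estimate E[f(x)] by averaging f over n_samples draws from sampler()."""
    total = 0.0
    for _ in range(n_samples):
        total += f(sampler())
    return total / n_samples


# Assumed setup: pi is a standard normal and f(x) = x^2, so the true value is 1.
rng = random.Random(0)
estimate = mc_expectation(lambda x: x * x, lambda: rng.gauss(0.0, 1.0), 100_000)
print(estimate)  # close to 1, up to sampling noise
```

The error of such an estimate shrinks roughly as $\frac{1}{\sqrt{n}}$ in the number of samples, which is the same behaviour exploited by the value estimates in the reinforcement learning setting.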

Monte Carlo Methods in Reinforcement Learning #

Monte Carlo (MC) methods are ways of solving the reinforcement learning problem based on averaging sample returns. Here, we define Monte Carlo methods only for episodic tasks. In other words, they learn from complete episodes of experience.

Monte Carlo Prediction 1 #

Since the value of a state $v_\pi(s)=\mathbb{E}_\pi\left[G_t|S_t=s\right]$ is defined as the expectation of the return when the process is started from the given state $s$, an obvious way of estimating this value from experience is to average the returns observed after visits to that state. As more returns are observed, the average should converge to the expected value. This is an instance of the so-called Monte Carlo method.

In particular, suppose we wish to estimate $v_\pi(s)$ given a set of episodes obtained by following $\pi$ and passing through $s$. Each occurrence of state $s$ in an episode is called a visit to $s$, and the first time $s$ is visited in an episode is referred to as the first visit to $s$. There are two types of Monte Carlo methods:

- The first-visit MC method estimates $v_\pi(s)$ as the average of the returns that have followed the first visit to $s$.

- The every-visit MC method estimates $v_\pi(s)$ as the average of the returns that have followed all visits to $s$.

The sample mean return for state $s$ is computed as \begin{equation} v_\pi(s)=\dfrac{\sum_{t=1}^{T}𝟙\left(S_t=s\right)G_t}{\sum_{t=1}^{T}𝟙\left(S_t=s\right)}, \end{equation} where $𝟙(\cdot)$ is an indicator function. In the case of first-visit MC, $𝟙\left(S_t=s\right)$ returns $1$ only the first time $s$ is encountered in an episode. For every-visit MC, $𝟙\left(S_t=s\right)$ returns $1$ every time $s$ is visited.

Following is pseudocode of first-visit MC prediction , for estimating $V\approx v_\pi$
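A minimal Python sketch of MC prediction is given here as an illustration, not as the book's exact pseudocode. It assumes each episode is a list of `(state, reward)` pairs, where the reward is the one received after leaving that state; the function name `mc_prediction` and the `first_visit` flag are hypothetical. Setting `first_visit=False` gives the every-visit variant.

```python
from collections import defaultdict


def mc_prediction(episodes, gamma=1.0, first_visit=True):
    """Estimate V(s) as the average return following visits to s.

    episodes: list of episodes, each a list of (state, reward) pairs,
              where reward is R_{t+1}, received after leaving S_t.
    """
    returns_sum = defaultdict(float)
    returns_count = defaultdict(int)
    for episode in episodes:
        states = [s for s, _ in episode]
        g = 0.0
        # Walk backwards so that G_t = R_{t+1} + gamma * G_{t+1}.
        for t in range(len(episode) - 1, -1, -1):
            state, reward = episode[t]
            g = reward + gamma * g
            # First-visit: ignore this occurrence if s appeared earlier.
            if first_visit and state in states[:t]:
                continue
            returns_sum[state] += g
            returns_count[state] += 1
    return {s: returns_sum[s] / returns_count[s] for s in returns_sum}


# Two hand-made episodes (assumed data, for illustration only).
episodes = [[("A", 1.0), ("B", 0.0), ("A", 2.0)], [("B", 1.0)]]
v_first = mc_prediction(episodes, first_visit=True)
v_every = mc_prediction(episodes, first_visit=False)
print(v_first)  # {'B': 1.5, 'A': 3.0}
print(v_every)  # {'A': 2.5, 'B': 1.5}
```

In the first episode, state `A` is visited twice with returns $3$ and $2$; first-visit MC keeps only the return $3$, while every-visit MC averages both.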

First-visit MC vs. every-visit MC #

Both methods converge to $v_\pi(s)$ as the number of visits (or first visits) to $s$ goes to infinity. For first-visit MC, each return is an independent, identically distributed estimate of $v_\pi(s)$, so each average is itself an unbiased estimate, and the standard deviation of its error falls as $\frac{1}{\sqrt{n}}$, where $n$ is the number of returns averaged.

Monte Carlo Control 2 #

Monte Carlo Estimation of Action Values #

When a model is not available, it is particularly useful to estimate action values rather than state values (which alone are insufficient to determine a policy). We must explicitly estimate the value of each action in order for the values to be useful in suggesting a policy. Thus, one of our primary goals for MC methods is to estimate $q_*$. To achieve this, we first consider the policy evaluation problem for action values.

As when estimating $v_\pi(s)$ with an MC method, we can use both first-visit MC and every-visit MC to approximate the value of $q_\pi(s,a)$. The only difference is that we now work with visits to a state-action pair rather than to a state. Likewise, we define two types of MC methods for estimating $q_\pi(s,a)$:

- First-visit MC : estimates $q_\pi(s,a)$ as the average of the returns following the first time in each episode that the state $s$ was visited and the action $a$ was selected.

- Every-visit MC : estimates $q_\pi(s,a)$ as the average of the returns that have followed all the visits to state-action pair $(s,a)$.

Exploring Starts #

However, here we must maintain exploration. Many state-action pairs may never be visited: if $\pi$ is a deterministic policy, then by following $\pi$ we observe returns for only one action from each state. As a consequence, the other actions are never evaluated, since there are no returns to average.

One way to achieve this is called exploring starts: we assume that episodes start in a state-action pair, and that every pair has a nonzero probability of being selected as the start. This assumption ensures that all state-action pairs will be visited an infinite number of times in the limit of an infinite number of episodes.

Monte Carlo Policy Iteration #

To learn the optimal policy by MC, we apply the idea of generalized policy iteration (GPI): \begin{equation} \pi_0\overset{\small \text{E}}{\rightarrow}q_{\pi_0}\overset{\small \text{I}}{\rightarrow}\pi_1\overset{\small \text{E}}{\rightarrow}q_{\pi_1}\overset{\small \text{I}}{\rightarrow}\pi_2\overset{\small \text{E}}{\rightarrow}\dots\overset{\small \text{I}}{\rightarrow}\pi_*\overset{\small \text{E}}{\rightarrow}q_* \end{equation} Specifically,

- Policy evaluation (denoted $\overset{\small\text{E}}{\rightarrow}$): estimates the action value function $q_\pi(s,a)$ using episodes that start from $s, a$ and then follow the current policy $\pi$ \begin{equation} q_\pi(s,a)=\dfrac{\sum_{t=1}^{T}𝟙\left(S_t=s,A_t=a\right)G_t}{\sum_{t=1}^{T}𝟙\left(S_t=s,A_t=a\right)} \end{equation}

- Policy improvement (denoted $\overset{\small\text{I}}{\rightarrow}$): makes the policy greedy with respect to the current value function (the action value function in this case) \begin{equation} \pi(s)\doteq\underset{a\in\mathcal{A(s)}}{\text{argmax}}\,q(s,a) \end{equation} The policy improvement can be done by constructing each $\pi_{k+1}$ as the greedy policy w.r.t. $q_{\pi_k}$ because \begin{align} q_{\pi_k}\left(s,\pi_{k+1}(s)\right)&=q_{\pi_k}\left(s,\underset{a}{\text{argmax}}\,q_{\pi_k}(s,a)\right) \\ &=\max_a q_{\pi_k}(s,a) \\ &\geq q_{\pi_k}\left(s,\pi_k(s)\right) \\ &\geq v_{\pi_k}(s) \end{align} Therefore, by the policy improvement theorem, we have that $\pi_{k+1}\geq\pi_k$.

To solve this problem with Monte Carlo policy iteration, the authors of “Reinforcement Learning: An Introduction” introduced, in the 1998 version of the book, Monte Carlo ES (MCES), short for Monte Carlo with exploring starts.

In MCES, the value function is approximated by simulated returns and a greedy policy is selected at each iteration. Although MCES cannot converge to any sub-optimal policy, whether it converges to the optimal fixed point is still an open question. For results in particular settings, see Tsitsiklis (2002), Chen (2018), Liu (2020). Below is pseudocode for Monte Carlo ES.
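The idea of Monte Carlo ES can also be sketched in Python. The snippet below is a minimal illustration under stated assumptions, not the book's pseudocode: the two-state deterministic MDP in `TRANSITIONS`, the function name `monte_carlo_es`, and the incremental averaging of returns are all choices made for this example.

```python
import random
from collections import defaultdict

# Hypothetical toy MDP: (state, action) -> (next_state, reward).
# A next_state of None means the episode terminates.
TRANSITIONS = {
    (0, 0): (None, 0.0),
    (0, 1): (1, 0.0),
    (1, 0): (None, 1.0),
    (1, 1): (None, 2.0),
}
STATES, ACTIONS = [0, 1], [0, 1]


def monte_carlo_es(n_episodes=2000, gamma=1.0, seed=0):
    rng = random.Random(seed)
    q = defaultdict(float)     # action-value estimates Q(s, a)
    counts = defaultdict(int)  # number of returns averaged per (s, a)
    policy = {s: rng.choice(ACTIONS) for s in STATES}
    for _ in range(n_episodes):
        # Exploring start: every (s, a) pair can begin an episode.
        state, action = rng.choice(STATES), rng.choice(ACTIONS)
        episode = []
        while state is not None:
            next_state, reward = TRANSITIONS[(state, action)]
            episode.append((state, action, reward))
            state = next_state
            if state is not None:
                action = policy[state]  # follow the current policy
        g = 0.0
        for t in range(len(episode) - 1, -1, -1):
            s, a, r = episode[t]
            g = r + gamma * g
            # First-visit check on the state-action pair.
            if (s, a) in [(x, y) for x, y, _ in episode[:t]]:
                continue
            counts[(s, a)] += 1
            q[(s, a)] += (g - q[(s, a)]) / counts[(s, a)]  # running mean
            # Policy improvement: act greedily w.r.t. the current Q.
            policy[s] = max(ACTIONS, key=lambda b: q[(s, b)])
    return policy, dict(q)


policy, q = monte_carlo_es()
print(policy)  # {0: 1, 1: 1}
```

In this toy problem the best behaviour is to take action `1` in both states, and the sketch recovers that policy. Note that evaluation and improvement are interleaved after every episode rather than run to convergence, which is the GPI pattern described above.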

On-policy Monte Carlo Control 3 #

In the previous section, we used the assumption of exploring starts (ES) to design a Monte Carlo control method called MCES. In this part, we look at another Monte Carlo control method that does not rely on that impractical assumption.

In on-policy control methods, the policy is generally soft (i.e. $\pi(a|s)>0,\forall s\in\mathcal{S},a\in\mathcal{A(s)}$), but gradually shifted closer and closer to a deterministic optimal policy. We cannot simply improve the policy by making it greedy, since no exploration would then take place. To get rid of ES, we use the on-policy MC method with $\varepsilon$-greedy policies, i.e., most of the time they choose an action that has maximal estimated action value, but with probability $\varepsilon$ they instead select an action at random. Specifically,

- $Pr(\small\textit{non-greedy action})=\dfrac{\varepsilon}{\vert\mathcal{A(s)}\vert}$

- $Pr(\small\textit{greedy action})=1-\varepsilon+\dfrac{\varepsilon}{\vert\mathcal{A(s)}\vert}$

The $\varepsilon$-greedy policies are examples of $\varepsilon$-soft policies, defined as ones for which $\pi(a\vert s)\geq\frac{\varepsilon}{\vert\mathcal{A(s)}\vert}$ for all states and actions, for some $\varepsilon>0$. Among $\varepsilon$-soft policies, $\varepsilon$-greedy policies are in some sense those that are closest to greedy.
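The action probabilities of an $\varepsilon$-greedy policy can be sketched in a few lines of Python. This is only an illustration: the function names `epsilon_greedy_probs` and `sample_action` are hypothetical, and ties between maximal action values are broken by taking the first maximal action, which is an assumption of this example.

```python
import random


def epsilon_greedy_probs(q_values, epsilon):
    """Return pi(a|s) for every action, given the list of Q(s, a) values."""
    n = len(q_values)
    greedy = max(range(n), key=lambda a: q_values[a])
    # Every action gets epsilon / |A(s)| ...
    probs = [epsilon / n] * n
    # ... and the greedy action gets the remaining 1 - epsilon on top.
    probs[greedy] += 1.0 - epsilon
    return probs


def sample_action(q_values, epsilon, rng):
    """Draw one action index according to the epsilon-greedy probabilities."""
    probs = epsilon_greedy_probs(q_values, epsilon)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


probs = epsilon_greedy_probs([0.1, 0.5, 0.2, 0.0], epsilon=0.2)
action = sample_action([0.1, 0.5, 0.2, 0.0], 0.2, random.Random(0))
```

With four actions and $\varepsilon=0.2$, each non-greedy action has probability $0.05$ and the greedy action has probability $0.85$, so every action satisfies the $\varepsilon$-soft lower bound $\frac{\varepsilon}{\vert\mathcal{A(s)}\vert}$.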

That any $\varepsilon$-greedy policy w.r.t. $q_\pi$ is an improvement over any $\varepsilon$-soft policy $\pi$ is assured by the policy improvement theorem.
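The step behind this claim can be written out explicitly. The derivation below follows the standard argument from Sutton and Barto's book; $\pi'$ denotes the $\varepsilon$-greedy policy w.r.t. $q_\pi$, $\pi$ is any $\varepsilon$-soft policy, and we assume $\varepsilon<1$. For any state $s$, \begin{align} q_\pi\left(s,\pi'(s)\right)&=\sum_a\pi'(a\vert s)\,q_\pi(s,a) \\ &=\dfrac{\varepsilon}{\vert\mathcal{A(s)}\vert}\sum_a q_\pi(s,a)+(1-\varepsilon)\max_a q_\pi(s,a) \\ &\geq\dfrac{\varepsilon}{\vert\mathcal{A(s)}\vert}\sum_a q_\pi(s,a)+(1-\varepsilon)\sum_a\dfrac{\pi(a\vert s)-\frac{\varepsilon}{\vert\mathcal{A(s)}\vert}}{1-\varepsilon}\,q_\pi(s,a) \\ &=\sum_a\pi(a\vert s)\,q_\pi(s,a) \\ &=v_\pi(s) \end{align} The inequality holds because the weights $\frac{\pi(a\vert s)-\varepsilon/\vert\mathcal{A(s)}\vert}{1-\varepsilon}$ are nonnegative (since $\pi$ is $\varepsilon$-soft) and sum to $1$, so the weighted average of the action values cannot exceed their maximum. Hence $\pi'\geq\pi$ by the policy improvement theorem.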