What is a Covariate in Statistics?

In statistics, researchers are often interested in understanding the relationship between one or more explanatory variables and a response variable.

However, other variables that are not of interest to the researchers can also affect the response variable. These variables are known as covariates.

Covariates: Variables that affect a response variable, but are not of interest in a study.

For example, suppose researchers want to know if three different studying techniques lead to different average exam scores at a certain school. The studying technique is the explanatory variable and the exam score is the response variable.

However, there’s bound to be some variation in students’ studying abilities within the three groups. If this isn’t accounted for, it becomes unexplained variation within the study and makes it harder to see the true relationship between studying technique and exam score.

One way to account for this is to use each student’s current grade in the class as a covariate. A student’s current grade is likely correlated with their future exam scores.

Thus, although current grade is not a variable of interest in this study, it can be included as a covariate so that researchers can see if studying technique affects exam scores even after accounting for the student’s current grade in the class.

Covariates appear most often in two types of settings: ANOVA (analysis of variance) and Regression.

Covariates in ANOVA

When we perform an ANOVA (whether it’s a one-way ANOVA, two-way ANOVA, or something more complex), we’re interested in finding out whether or not there is a difference between the means of three or more independent groups.

In our previous example, we were interested in understanding whether or not there was a difference in mean exam scores between three different studying techniques. To understand this, we could have conducted a one-way ANOVA.

However, since we knew that a student’s current grade was also likely to affect exam scores we could include it as a covariate and instead perform an ANCOVA (analysis of covariance).

This is similar to an ANOVA, except that we include a continuous variable (student’s current grade) as a covariate so that we can understand whether or not there is a difference in mean exam scores between the three studying techniques, even after accounting for the student’s current grade.
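To make the idea concrete, here is a minimal sketch of an ANCOVA fitted as a linear model with NumPy. The data are simulated, and every number and variable name (`technique`, `current_grade`, `exam_score`) is an assumption made up for illustration. The sketch tests the studying-technique effect by comparing a model with only the covariate against a model with the covariate plus the technique indicators.

```python
import numpy as np

rng = np.random.default_rng(0)

# Simulated data: all values below are made up for illustration only.
n_per_group = 30
technique = np.repeat([0, 1, 2], n_per_group)             # three studying techniques
current_grade = rng.normal(75, 10, size=technique.size)   # the covariate
technique_effect = np.array([0.0, 2.0, 4.0])[technique]
exam_score = (20 + 0.7 * current_grade + technique_effect
              + rng.normal(0, 5, size=technique.size))


def residual_ss(X, y):
    """Residual sum of squares from an ordinary least-squares fit of y on X."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    return float(resid @ resid)


intercept = np.ones(technique.size)
d1 = (technique == 1).astype(float)   # indicator for technique 1
d2 = (technique == 2).astype(float)   # indicator for technique 2

# Reduced model: covariate only. Full model: covariate plus technique.
X_reduced = np.column_stack([intercept, current_grade])
X_full = np.column_stack([intercept, current_grade, d1, d2])

rss_reduced = residual_ss(X_reduced, exam_score)
rss_full = residual_ss(X_full, exam_score)

# F statistic for the technique effect after accounting for current grade.
df_effect = 2
df_resid = technique.size - X_full.shape[1]
f_stat = ((rss_reduced - rss_full) / df_effect) / (rss_full / df_resid)
print(f"F({df_effect}, {df_resid}) = {f_stat:.2f}")
```

In practice a statistics package would report this F test directly; the nested-model comparison is shown only to expose what "after accounting for the covariate" means.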

Covariates in Regression

When we perform a linear regression, we’re interested in quantifying the relationship between one or more explanatory variables and a response variable.

For example, we could run a simple linear regression to quantify the relationship between square footage and house prices in a certain city. However, it may be known that the age of a house is also a variable that affects house price.

In particular, older houses may tend to sell for lower prices. In this case, the age of the house would be a covariate since we’re not actually interested in studying it, but we know that it has an effect on house price.

Thus, we could include house age as an explanatory variable and run a multiple linear regression with square footage and house age as explanatory variables and house price as the response variable.

The regression coefficient for square footage would then tell us the average change in house price associated with a one-unit increase in square footage after accounting for house age.
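The following sketch illustrates how the square footage coefficient can change once house age is included. The data are simulated, and the sizes of the effects (150 per square foot, a drop of 1,000 per year of age, older houses being smaller on average) are assumptions chosen purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500

# Simulated houses: all numbers are illustrative assumptions.
age = rng.uniform(0, 80, size=n)                       # years
sqft = 2500 - 15 * age + rng.normal(0, 400, size=n)    # older houses tend to be smaller
price = 100_000 + 150 * sqft - 1_000 * age + rng.normal(0, 10_000, size=n)

ones = np.ones(n)

# Simple linear regression: price on square footage only.
simple_coef, *_ = np.linalg.lstsq(np.column_stack([ones, sqft]), price, rcond=None)

# Multiple linear regression: price on square footage and house age.
multi_coef, *_ = np.linalg.lstsq(np.column_stack([ones, sqft, age]), price, rcond=None)

print(f"sqft slope, ignoring age:      {simple_coef[1]:.1f}")
print(f"sqft slope, accounting for age: {multi_coef[1]:.1f}")
print(f"age slope:                      {multi_coef[2]:.1f}")
```

Because age and square footage are correlated in this simulation, the simple regression slope absorbs part of the age effect, while the multiple regression slope sits close to the value used to generate the data.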

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

One Reply to “What is a Covariate in Statistics?”

It is really easy to understand. Thank you, Zach!

Confusing Statistical Terms #5: Covariate

by Karen Grace-Martin

Covariate really has only one meaning, but it gets tricky because the meaning has different implications in different situations, and people use it in slightly different ways. And these different ways of using the term have BIG implications for what your model means.

The most precise definition is its use in Analysis of Covariance, a type of General Linear Model in which the independent variables of interest are categorical, but you also need to adjust for the effect of an observed, continuous variable–the covariate.

In this context, the covariate is always continuous, never the key independent variable, and always observed (i.e. observations weren’t randomly assigned its values, you just measured what was there).

A simple example is a study looking at the effect of a training program on math ability. The independent variable is the training condition–whether participants received the math training or some irrelevant training. The dependent variable is their math score after receiving the training.

But even within each training group, there is going to be a lot of variation in people’s math ability. If you don’t adjust for that, it is just unexplained variation. Having a lot of unexplained variation makes it pretty tough to see the actual effect of the training–it gets lost in all the noise.

So if you use pretest math score as a covariate, you can adjust for where people started out. So you get a clearer picture of whether people do well on the final test due to the training or due to the math ability they had coming in.
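Here is a rough sketch of that "lost in the noise" idea, using simulated data. The sample size, the training effect of 3 points, and the variable names (`trained`, `pretest`, `posttest`) are all assumptions for illustration. It fits the model with and without the pretest covariate and compares the standard error of the training effect.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 200

# Simulated study: every number here is an illustrative assumption.
trained = rng.integers(0, 2, size=n).astype(float)   # 1 = math training, 0 = irrelevant training
pretest = rng.normal(50, 10, size=n)                  # math ability coming in
posttest = 5 + 0.8 * pretest + 3 * trained + rng.normal(0, 4, size=n)


def ols(X, y):
    """Return OLS coefficients and their standard errors."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ coef
    sigma2 = resid @ resid / (len(y) - X.shape[1])     # residual variance
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))
    return coef, se


ones = np.ones(n)

# Without the covariate: pretest differences stay in the error term.
coef_unadj, se_unadj = ols(np.column_stack([ones, trained]), posttest)

# With the covariate: pretest differences are adjusted for.
coef_adj, se_adj = ols(np.column_stack([ones, trained, pretest]), posttest)

print(f"training effect without pretest: {coef_unadj[1]:.2f} (SE {se_unadj[1]:.2f})")
print(f"training effect with pretest:    {coef_adj[1]:.2f} (SE {se_adj[1]:.2f})")
```

In this simulation the pretest explains much of the variation in the final score, so the standard error of the training effect is smaller in the adjusted model.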

Okay, great. Where’s the confusion?

Covariates as Continuous Predictor Variables

The confusion is that, really, the model doesn’t care that the covariate is something you don’t have a hypothesis about. Something you’re just adjusting for. Mathematically, it’s the same model, and you run it the same way.

And so people who understand this often use the term covariate to mean ANY continuous predictor variable in your model, whether it’s just a control variable or the most important predictor in your hypothesis. And I’m guilty as charged. It’s a lot easier to say covariate than continuous predictor variable.

But SPSS does this too. You can run a linear regression model with only continuous predictor variables in SPSS GLM by putting them in the Covariate box. All the Covariate box does is define the predictor variable as continuous.

(SAS’s PROC GLM does the same thing, but it doesn’t specifically label them as Covariates. In PROC GLM, the assumption is all predictor variables are continuous. If they’re categorical, it’s up to you, the user, to specify them as such in the CLASS statement.)

Covariates as Control Variables

But the other part of the original ANCOVA definition is that a covariate is a control variable.

So sometimes people use the term Covariate to mean any control variable. Because really, you can covary out the effects of a categorical control variable just as easily.

In our little math training example, you may be unable to pretest the participants. Maybe you can only get them for one session. But it’s quick and easy to ask them, even after the test, “Did you take Calculus?” It’s not as good of a control variable as a pretest score, but you can at least get at their previous math training.

You’d use it in the model in the exact same way you would the pretest score. You’d just have to define it as categorical.
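As a small sketch of what "define it as categorical" amounts to, the yes/no answer can be turned into a 0/1 indicator column that sits in the design matrix exactly where the pretest score would have gone. The six participants and their scores below are toy values invented for illustration, and the variable names are hypothetical.

```python
import numpy as np

# Toy data for six participants: invented values, for illustration only.
took_calculus = np.array(["yes", "no", "no", "yes", "no", "yes"])
trained = np.array([1, 1, 1, 0, 0, 0], dtype=float)   # 1 = math training
math_score = np.array([82.0, 70.0, 74.0, 75.0, 61.0, 78.0])

# Code the categorical control variable as a 0/1 indicator ("no" is the reference level).
calc_indicator = (took_calculus == "yes").astype(float)

# Same model structure as with a pretest score: intercept, training, control variable.
X = np.column_stack([np.ones(len(trained)), trained, calc_indicator])
coef, *_ = np.linalg.lstsq(X, math_score, rcond=None)

print("intercept, training effect, calculus effect:", np.round(coef, 2))
```

Software such as SPSS GLM does this coding internally when a variable is declared as a factor; writing it out by hand just shows that the model itself is unchanged.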

Once again, there isn’t really a good term for categorical control variable, so people sometimes refer to it as the covariate.

So what is a covariate then?

It’s hard to say. There is no disputing the first definition, so it’s clear there.

I prefer to just be careful, in setting up hypotheses, running analyses, and in writing up results, to be clear about which variables I’m hypothesizing about and which ones I’m controlling for, and whether each variable is continuous or categorical.

The names that the variables, or the models, end up having, aren’t important as long as you’re clear about what you’re testing and how.

Reader Interactions

May 23, 2022 at 10:53 am

Hello everyone,

In my study, I have plant (plant population) density as factor, is it necessary to include the same plant density (number of plants at harvest) as covariate? I have been advised to do so and I urgently need your help on this.

May 14, 2020 at 3:36 am

This really helped me a lot – thanks!

September 14, 2019 at 2:53 am

Hi Thank you for your article here. I’ve read a paper which said “including gender as a covariate”, but I learned the covariate must be continuous in ANCOVA, so it really baffled me until I found your explanation here!

But there is still a question, if the categorical covariate has an interaction with the IV, how can i report it? Report the main effect of IV hierarchically? Or if there is an interaction ,the variate can not be taken as a covariate?

November 29, 2021 at 9:20 pm

Your definition of a covariate in ANCOVA is completely at odds with that given in Whitlock and Schluter (2020). The Analysis of Biological Data, 3rd ed. They specify that the covariate is categorical, and the main effect (factor/explanatory variable) is numerical (pretty much the opposite of what you state). And by the way, a numerical variable does not have to be “continuous”; it may be discrete (e.g, counts, which are integers). So you’ve even confused the term continuous with numerical.

November 30, 2021 at 3:39 pm

Very interesting. I don’t have that book. Yes, it’s absolutely true that many authors use “covariate” to mean different things. But I’ve never, ever, heard of a factor in an ANCOVA being numerical. Look in any other book on ANOVA. That is so backward from usual usage I wonder if it’s a typo. I’d have to see the exact wording to really comment. That said, Factor is also another confusing term, in that it means something entirely different in the context of Factor Analysis, where it is continuous.

And you’re absolutely correct that numerical variables don’t have to be continuous. The difference is very important for dependent/outcome/response variables, since it affects the type of model you use. It’s not a big difference for predictors, since if you fit a line to a discrete predictor, you’re technically treating it as continuous.

April 14, 2019 at 7:22 pm

Brilliant post, that really unlogged a lot for me.

I was just wondering if you had information on what the actual math looked like for adding a covariate into a regression? Just as a basic example. i.e. How do you ‘adjust for the effect of said covariate’?

Sorry if I’m getting my terms mixed up.

January 31, 2019 at 6:50 am

I am confused by your example? Why do you use pre math scores as a covariate and not as timepoint 1 in a repeated measures design? Within variables: time (pre post), dependent variable (training 1 or two), no covariate. Thank you in advance Jan

March 4, 2019 at 11:17 am

It depends on the research question. See this: https://www.theanalysisfactor.com/pre-post-data-repeated-measures/

December 6, 2018 at 8:43 pm

Hi Karen, Our study’s respondents are the left behind emerging adults. The IV is the psychological distress they are undergoing and it includes academic distress. Is it right to ask if they are in college level? Though it won’t be ideal to use it because not all emerging adults are college students but mostly are. It would also help determine the academic distress which I said earlier. Thank you!

November 30, 2018 at 8:34 am

I really need your help. I don’t know what test (and I cant find any source of information about it) should I perform in this circumstances:

One IV (e.g. Gender) Two DV (continuous) One or Two Covariates (both ordinal).

What to do if the Covariates are ordinal?

November 30, 2018 at 11:52 am

Hi Armindo, I can’t give advice without really digging into the details of things like your research questions and the roles of these variables.

But I can comment on a couple things. Ordinal predictors usually need to be treated as categorical: https://www.theanalysisfactor.com/pros-and-cons-of-treating-ordinal-variables-as-nominal-or-continuous/

Once you’ve got more than one DV, you’re into multivariate statistics. So some version of a MANOVA or Multivariate linear model. https://www.theanalysisfactor.com/multiple-regression-model-univariate-or-multivariate-glm/

August 22, 2018 at 3:56 pm

What should you do if you have dramatically different sample sizes across levels of a categorical variable you are including as a covariate? For example, if you are controlling for gender with 4 categories (i.e., man, woman, prefer to self-describe, prefer not to say), is there a citation that supports either collapsing the last gender categories or even excluding them from the analysis because of their extremely small sample size to avoid skewing your results? Even with collapsing, however, you could still run into the same sample size problem. Is there a general consensus about how to handle this type of issue?

October 12, 2018 at 11:28 am

I don’t know there is a consensus, other than to be thoughtful about it and consider the pros and cons of different approaches. You may want to read this: https://www.theanalysisfactor.com/when-unequal-sample-sizes-are-and-are-not-a-problem-in-anova/

August 31, 2017 at 12:26 pm

I want to run a report where I think I will need an ANCOVA for the analysis.

I want to test 2 types of clinical outcomes for one rehab programme to see how they compare in picking up changes in pain and function. I have asked participants to fill out the 2 outcomes pre and post intervention (2 times points only).

I have the data and was thinking to run simple t-tests to show that the intervention has been successful in reducing pain and increasing function for each of the outcomes used.

Then, I want to ensure that both outcomes have picked up change successfully. In order to do this I want to run an ANCOVA, using the baseline pain scores as the covariate. This is in order to show that those with higher pain to start were perhaps less likely to end up with lowest pain scores post. Does this make sense? Here the IV would be the outcome used (either A or B) and the DV would be the pain score recorded post programme.

I would hope that the p value from the ANCOVA printout would be non-significant, showing that both outcomes (A and B) had no difference in means. However, I am getting a little confused about how I would report on the data from the covariate.

June 19, 2017 at 7:12 am

I want to do an analysis, I think it has to be an ANCOVA, but my independent variables are probably (hopefully) not independent of each other (which is an assumption of the ANCOVA, right?). I want to analyse: first independent variable: condition (4) and second independent variable: the difference score of two measurement points.

But the difference score should be dependent of the conditions (at least I hope so) My dependent variable is the recognition score, which should not be dependent of the conditions and the difference score

So my questions is if I can use an ANCOVA because my two independent variables are linked to each other…

Thank you very much in advance!!!

Verda Simsek

May 17, 2017 at 1:58 pm

Hi Karen, I just want to ask if the covariates will have their own F value in the ANOVA. I was running a linear mixed model ANOVA with 3 fixed factors and 1 random factor but with an extra 2 covariates. But in the ANOVA output, the covariates are not there. Should their significance be shown in SPSS too?

April 10, 2018 at 2:07 pm

I’m not sure when your reply was posted, but I figured I would reply. If too late to help you, at least others may benefit:

The primary purpose of a covariate is to illustrate an effect above an observed effect that goes beyond your manipulation. Therefore, there is already an assumption that your covariates are significantly correlated with the dependent variable. If this were not the case, there would be no point of putting them into your analysis. Basically, SPSS has no need to tell you of your significance of the covariate because you should already know that. Instead, what you should do to observe the ANCOVA at work is to analyze your model with and without the 2 covariates. What you should see is that you had more power without the covariates. However, in exchange for less power, you provide evidence that your effect extends beyond known potential confounds.

Best of luck!!

April 11, 2018 at 10:25 am

Actually, I’m surprised that SPSS didn’t include those covariates in the ANOVA table. Yes, they should be there and yes, you need to test them.

I disagree that covariates should only be there to look for effects beyond a manipulation. That may be true in experimental studies that actually have a manipulation. But many good models have both categorical predictors and continuous ones. Whether that categorical predictor is a manipulation only affects the interpretation, not the model.

May 5, 2017 at 1:54 am

Thanks for this. It’s very clear. It’s very helpful for me to communicate with my economist collaborator who keeps insisting on using regression instead of ANCOVA.

I must choose if I will analyze my data using regression or ANCOVA. The main problem is, if I use ancova then I will have to use all variables as covariate and use no fixed factor at all.

So my problem is to decide if a sensory data (ranking of respondents liking 1-4, like most to dislike most) can be used as fixed factor. Or should I dummy code them and put under covariate instead of fixed factor?

Please helpppp.

Best regards Mulia

March 18, 2017 at 6:23 pm

Thanks for this awesome article. I was analysing a paper (https://eric.ed.gov/?id=EJ850774) that used the term “covariate” in its abstract and it was really hard to get an idea of what the authors think a covariate is. But now, after reading your explanations, it is much clearer!

February 21, 2017 at 5:26 am

hi karen, I would like to ask how to compare scores of anxiety for two means between independent variables [group 1 VS group 2]. with no experimental design or manipulation.

however, i want to prove that the difference in mean scores is accounted for even after the introduction of a control variable [which is a continuous score on a Life Stress Scale].

please advice on how i should do this? using SPSS. thank you so much 🙂

November 25, 2016 at 5:19 am

Hi Karen, I want to do a covariate analysis. My response variable is binomial (Responder vs Non-Responder) but the covariate is a continuous variable. Which statistical tool would you suggest for a robust analysis?

Thanks@Ashwani

September 27, 2016 at 2:43 am

One other issue with covariates arises when actually using the results in a subsequent decision process. Variables controlled for are akin to what is lost when partially differentiating. It is important to understand the need to put this information back when making decisions. By this, if we take the math training example you used above, within the population studied, there may be subsets who do well with a particular method and those who don’t. If you simply control for this variation with pre-test scores, you effectively average the variation away in your analysis. When this is done, it is critically important to understand that you are seeing an averaged picture (and that many potentially important pieces of information are absent). I loved how Good and Hardin saw fit at the beginning of the first chapter of their Common Errors in Statistics, to state unequivocally that statistics should never be used as the sole basis for making decisions. Thus ‘missing or discarded information’ is in part, to me, why. (Essentially you need either strong uniformity conditions on a population, or explicit inspection, before you can be confident a statistical result applies to a particular instance.)

April 10, 2018 at 2:18 pm

I’m not sure if you’re mistakenly generalizing one concept to another. A successful ANCOVA will discard unwanted information. For example, let’s say a cognitive task is known to have a gender effect. I could use gender as a discrete covariate (either in ANCOVA or a multiple regression with gender as a grouping variable) to show an effect exists beyond gender. Essentially, I would be saying gender does not matter for my decision.

The topic you are talking about (I believe) is how many statistics deal with averages. In doing so, we need to keep in mind that individual differences exist. While this is true of covariates as well, I think that while a single measure is never enough for determining an effect, a covariate can help someone decide if something should not be a factor.

April 11, 2018 at 10:30 am

Agree. Much of what you’re describing sounds like situations where there is an interaction involved.

But yes, there is a fundamental concept in the decision making literature that statistics apply to groups, not individuals, and that what is best for the average may not be best for any given individual or situation.

August 25, 2015 at 1:40 pm

Hi Karen, I have 45 participants who received an exercise intervention. I am observing the change in their physical performance pre-post and after 8 months of the intervention. I have found group differences by using RM ANOVA. Now I need to control some covariates/ confounding variables (categorical variables) like age group, marital status, education level etc. How shall I do it? I am using SPSS version 22. Please suggest the most suitable tool to use. Please also comment if I could get that tool in the following options:

Option 1: While defining the independent variable in the process of RM ANOVA, there are two more spaces available to put data on ‘between subject factor’ and ‘covariate’. Shall I put all 8 covariates under the space labelled ‘covariates’? If I can do that, Q1. Will that actually control these variables all together? Q2. Will it lose power in doing so? Q3. How shall I then term the tool of analysis? RM ANOVA with covariates or RM ANOVA or any other term? Q4. Shall I use them as time*cov for all the covariate separately?

Option 2: Shall I use mixed method ANOVA by putting one particular covariate in the ‘between subject factor’ and see if it has any effect? Q.1 Shall I look at the between subject effect in the output file to see the impact? Or need to look at the effect size or both? Q2. Shall I need to put other 7 covariates under the space labelled ‘covariates’ while considering one covariate as a between subject factor? Q3. How shall I then term the tool of analysis?

Looking forward to hearing from you.

Many thanks in advance.

July 5, 2015 at 8:01 am

I really like your explanation about the term. Enjoyed reading it. Thanks a lot. It certainly helps to stop the arguments between my students and me.

Best, Claudia

May 31, 2015 at 3:57 pm

Hi Karen, Thanks for the helpful article. I’m trying to decide which variables to include as control variables in my regression model. Aside from theoretical reasons, I have examined my correlation matrix. I have a variable that correlates with my IV, but not with my DV…Am I correct in assuming that this variable should NOT be controlled for in the model (since it is unrelated to the DV)?

Thanks for your help!

April 10, 2018 at 2:22 pm

Not sure if it is too late to help, but generally, you are correct. Covariates are variables that vary with the DV, and can be an alternative explanation for the effect of your IV. If no correlation exists with the DV, there is no need to control for it. However, keep in mind that for an ANCOVA your IV needs to be a discrete variable, and the DV needs to be continuous. Linear regression would not properly diagnose a relationship between these two.

May 22, 2015 at 12:28 pm

Thanks so much for this clarification. So, in other words, if I want to control for a categorical variable, I still run ANOVA. and if I want to control for a continous variable, I run ANCOVA. *phew*

June 3, 2016 at 9:15 am

Yes, exactly.

May 10, 2015 at 2:27 pm

Hi Karen, thank a lot for the site. Its really great. Currently, I’m designing an experiment on stress treatment of plants with single (heat or drought) or combined (heat+drought) fixed effect IVs to measure one or more response DVs. Each stress treatment will be applied with several levels at (combined with) 3 time points. Measurement(s) are from different experimental units and not from the same individual plant. I’m thinking using either 2-way ANOVA or MANOVA depends on the no. of DV to be measured. However, I’m a little bit confused about the time point factor. I would expect that the measurements from control plants will not be significantly changed by time (as there is no stress), but under (e.g. heat treatment) I would expect to find a significant change in the main effect factor between the 3 time points. Shall I consider the time point as a covariance factor in this case, and use, for example, one-way ANOVA instead of two-way to measure one DV under one heat. Do you recommend any specific analysis or model different from above. I would appreciate a lot your advice and help

March 14, 2015 at 9:28 pm

Hi Karen, I need some help with choosing the statistical analysis. Details of my study. all participants will complete surveys measuring self compassion, whether their psychological needs are met, how high on non attachment scale they are and other trait scales.

then, Half will be given some induction training on how to meditate. half will not.

after that, everyone will be tested to see how mindful and meditative they were using some breath counting measures.

i want to see relationship between the outcome and the survey responses and the training received.

for eg. someone whose needs are met and who received training did well on being mindful. what about someone whose needs are met but was part of the group that did not receive training, what if he does well or what if he doesn’t.

is the survey responses the covariates? How would i write up the research question?

would the test be correlation or regression? ANCOVA?

My original hypothesis was needs met led to being good at meditation. But now the control group has been included, I am confused.

Please help. Thanks in advance

January 8, 2015 at 2:46 pm

Just wanted to say thank you for the site and the easy to follow text. Great job!

December 5, 2014 at 11:07 am

First of all, thank you for your site because it has helped me a lot of times 😉

I have a doubt in the statistical analysis of my study. I am studying stress on mothers and fathers (two independent samples). An important variable in my study is the number of children (usually mothers or fathers with more children feel more stress) and my subjects have between 1, 2 or 3 children. However because I am only looking for gender (mothers vs fathers) differences in stress I want to control this variable (number of children) in my sample. Thus, I decided to apply a chi-square analysis to see if my sample of mothers differed from my sample of fathers in the number of children. The test is non significant so i assumed that my two independent samples do not differ in number of children and that number of children is not a covariate when comparing the mothers and fathers of my sample. Is this correct?

Thanks a lot*

December 1, 2014 at 11:20 pm

Very helpful. Thanks

November 25, 2014 at 2:16 pm

Maybe you could clarify something for me. I have a model that consist of 3 independent variables and on dependent variable. However, in my research I identify two other concepts that acts as mediators (social exchange and perceived organizational support). Would these concepts be considered as covariates?

November 30, 2014 at 11:56 am

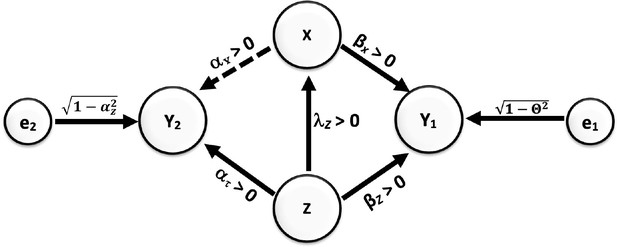

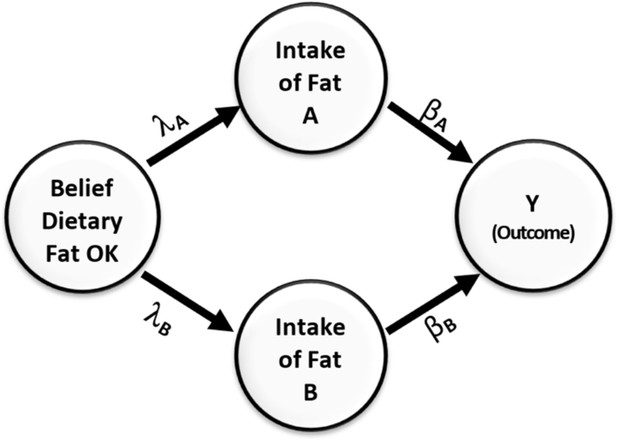

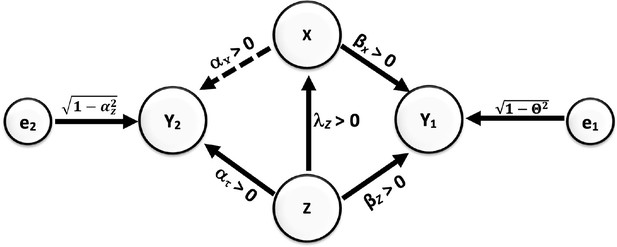

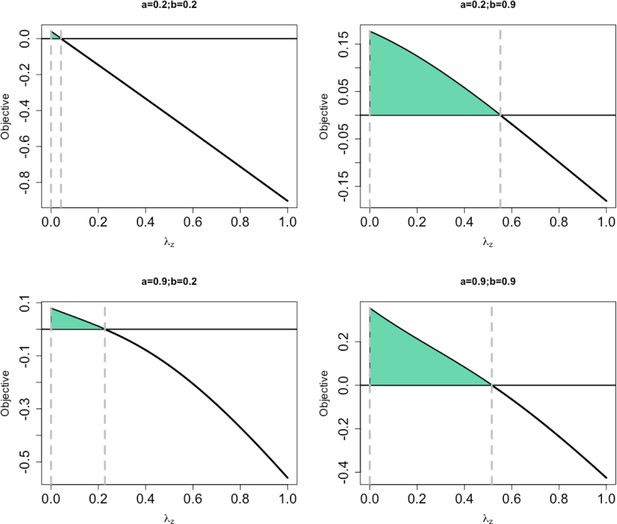

Mediators are a little different than covariates. See this: https://www.theanalysisfactor.com/five-common-relationships-among-three-variables-in-a-statistical-model/

July 30, 2014 at 1:12 am

I found your review of covariates really helpful. My research involves exploring the impact of anxiety on communication. I’m using a transmission chain methodology where one person reads a story and reproduces that for the next person in the chain, who reproduces it for the next person in the chain and so on until 4 people have read and reproduced the story. I used a mood induction procedure, which is my between subjects factor. Typically these studies classify the ‘generations’ in the transmission chain as a within subjects factor as the output of person 2-4 is dependent on what they received from the previous person (as if you are taken measurements at different points in time). I am measuring the number of positive and negative statements produced by each person in their reproductions. So what I have done is a 2x4x2 ANOVA.

What I also want to do is explore the impact of trait anxiety, which I measured prior to testing and is a continuous variable. Is it possible to enter trait anxiety as a covariate in an ANOVA/ANCOVA to determine if trait anxiety was related to/or differentially impacted performance under each condition? The hypothesis is that high trait anxiety participants, under a negative mood induction would show a different pattern of results to low trait anxious.

I can perform a median split and enter it as a between subjects factor but this will result in a small number of observations, particularly once I try to look at more than one generation and I’m worried about the loss of power.

I would really appreciate your advice.

All the best, Keely

May 26, 2016 at 6:18 am

I’m having the same problem 🙁

June 12, 2014 at 7:45 am

Dear Karen,

i was wondering if a covariate is the same as the mediator in a repeated measurement model? I am using it that way. But maybe there is another way to test a mediation?

Thanks in advance for your help

April 22, 2014 at 3:46 pm

What does the phrase “covary out the effects” mean? Your article has been very helpful in helping to clear up confusion with terms!! But I’m still at a loss as to why this phrase was used in the context of a test evaluation. In discussing potential changes to a control group (outside the bounds of the test), we were told they could “covary out the effects” of a change.

May 7, 2014 at 10:59 am

Great question, and one that will take another article to describe. 🙂 I’ll add that to my list of future articles to write.

October 15, 2013 at 4:29 pm

Hi, I am new to this site and this has always confused me. How do you “control” for a variable? Could you please explain, how “controlling “works? One of the ways to control a variable might be just taking random samples, i.e., if you want to control for age, then take a rs from all age groups. Another example would be like a “control group” (placebo in a drug experiment). Am I correct in the above examples? Also, I assume there are other standard techniques, could you please clarify how they work?

October 16, 2013 at 9:56 am

That’s a big question. Or rather, a small question with a big answer. I will see if I can write a post (or two or 10) explaining it.

October 17, 2013 at 10:11 am

Thanks Karen! Looking forward to it!!

July 11, 2013 at 7:50 pm

Hi Karen, Thanks for this article! I just want to further clarify some of the discussion on dichotomous covariates/fixed factors. Specifically, I am running a chi-squared test with one dichotomous outcome and one dichotomous predictor, to see how well group membership of the two-level predictor discriminates group membership of the two-level outcome. Now, I want to ensure that gender (I think my covariate/fixed factor) does not modify my predictor’s ability to discriminate membership of my outcome variable. To answer this question I plan to run the model where both genders are combined and separately for each gender. The answer is that the discriminating ability of my predictor does depend on gender to some extent.

So my question is – is gender a fixed factor here? is there a better word for it? Random factor?

Thank you so much!

July 15, 2013 at 3:44 pm

Within the context of SPSS GLM, Gender is a fixed factor. Don’t make it random–that’s a whole other thing!

But if you’re doing a chi-square, Fixed Factor and covariate aren’t really issues. Just add it in as another variable.

June 16, 2013 at 2:06 pm

Easy to understand definition of Covariate for ANCOVA. Thank you!

May 31, 2013 at 5:01 am

I’m running an ANOVA with repeated measures. As I can see from the correlation matrix, there are significant correlations between my possible confounding variables and my dependent variable, but here is my problem: if the possible covariate correlates with the dependent variable at time 2 but not at time 1, do I have to include it as a “normal” covariate when I perform the GLM?

Thanks for any help. Anika

June 6, 2013 at 5:30 pm

It would be a good idea to include a covariate*time interaction. That will allow the effect of the covariate to be different at time 1 and time 2.
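As a rough illustration of that suggestion (not part of the original thread): a covariate*time interaction is simply a product column in the design matrix. The sketch below uses made-up, noise-free data and plain least squares via NumPy. It deliberately ignores the within-subject correlation that a real repeated measures model would handle, and every variable name is hypothetical.

```python
import numpy as np

# Hypothetical long-format data: six subjects, each measured at time 0 and time 1.
covariate = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
x = np.tile(covariate, 2)                      # covariate repeated for both time points
time = np.repeat([0.0, 1.0], covariate.size)   # 0 = time 1, 1 = time 2

# Made-up outcome: the covariate matters only at the second time point.
y = 10.0 + 2.0 * time + 1.5 * x * time

# Design matrix: intercept, time, covariate, covariate*time interaction.
X = np.column_stack([np.ones_like(x), time, x, x * time])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)

slope_first = coef[2]              # covariate effect at the first time point
slope_second = coef[2] + coef[3]   # covariate effect at the second time point
```

Without the interaction column, the model would be forced to use one common covariate slope for both time points.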

April 24, 2013 at 3:05 pm

I have a follow-up question please. I am looking at memory performance in young and older adults under two conditions: when a negative or a positive stereotype is activated. I’m using a mixed model to compare performance across time (before/after the intervention) across the two age groups and conditions.

I obtain a significant difference between the two age groups on levels of verbal IQ (NART scores) which is continuous, and hence a covariate (that exerts a significant effect).

The paper that I am basing my study on also obtains a difference between age groups over verbal IQ scores. They do not obtain a significant difference within age groups but between conditions, however, and so have not included it as a covariate. This seems wrong to me. Surely if a significant difference over a background variable occurs on one of your IVs you should include the covariate in the model, regardless of whether there’s a difference between groups on the second IV?

If you could clear this up for me I’d really appreciate it, as I am confused!

April 29, 2013 at 6:45 pm

I am missing something. Does verbal IQ relate to the DV? (It seems it would but you don’t mention that). So in this paper, the two stereotype condition groups have different verbal IQ scores, but age groups didn’t? It really comes down to whether the potential covariate is related to the DV.

March 21, 2013 at 9:58 pm

Thanks for the information. The way you write it very clear and easy to understand. Thanks~

April 2, 2013 at 5:43 pm

March 13, 2014 at 9:15 pm

So can we say based on your answer that every confounding variable is a covariate but not every covariate is a confounding variable?

April 4, 2014 at 9:55 am

Depends on how you’re using them. 🙂

October 9, 2012 at 2:37 pm

What is the difference between a confound and a covariate in simple terms?

October 23, 2012 at 3:36 pm

Alexa, that’s a really great question. A confound is a variable that is so closely associated with another variable (perfectly, or so nearly perfectly that you can’t distinguish them) that you can’t tell their effects apart.

In most areas of the US, for example, neighborhood and school attended overlap so much because most kids from a neighborhood all go to the same school. So you couldn’t separate out the school effects on say, grade 3 test scores, from the neighborhood effects.

A covariate is a variable that affects the DV in addition to the IV. It doesn’t have to be correlated with the independent variable. If not, it may just explain some of the otherwise unexplained variation in the DV.

October 6, 2012 at 12:12 am

Thanks Karen! I’m so happy this website exists. I found this page because I am stuck on something related but at a way lower level (I am no statistician). I’m running a repeated measures ANOVA in SPSS, using GLM. I need to control for a between-subjects categorical variable that might be adding noise to the data and washing out any effects of my factors. I can’t figure out if the right way to do this is to put it in as a between-subjects factor, or pretend that is a continuous variable and put it in the covariate box. Can you help?

October 8, 2012 at 9:02 am

Yes. In fact, there is already an article here on that exact topic. It’s the same in all SPSS GLM procedures, whether you’re using univariate, repeated measures, etc. https://www.theanalysisfactor.com/spss-glm-choosing-fixed-factors-and-covariates/

February 11, 2013 at 7:25 am

I’m still a little confused on the same issue as Emily, despite reading the article you suggested. Although the suggested article seems to clearly spell out that any true categorical variable, including dichotomous variables, should be included in an ANOVA model as a fixed factor rather than a covariate, the article above states

“In our little math training example, you may be unable to pretest the participants. Maybe you can only get them for one session. But it’s quick and easy to ask them, even after the test, “Did you take Calculus?” It’s not as good of a control variable as a pretest score, but you can at least get at their previous math training…You’d use it in the model in the exact same way you would the pretest score. You’d just have to define it as categorical.”

It’s the last sentence that I get stuck on – because if you included a dichotomous variable in the model in the exact same way you would a continuous pre-test score, you would include it as a covariate, not as a fixed factor.

Sorry if this seems obvious, perhaps I’m getting caught up in the terminology too! Any clarification would be greatly appreciated, I’ve found your explanations to be more helpful than most!

February 13, 2013 at 3:04 pm

SPSS’s definition of “Covariate” is “continuous predictor variable.” Its definition of “Fixed Factor” is “categorical predictor variable.” (There’s actually more to this in comparing fixed and random factors, but that’s a tangent here.)

“It’s the last sentence that I get stuck on – because if you included a dichotomous variable in the model in the exact same way you would a continuous pre-test score, you would include it as a covariate, not as a fixed factor. ”

I don’t mean define it the same way, I mean use it as a control variable in the same way. I’m trying to separate out the use of the variable in the model (as something to control for vs. something about whose effect you have a hypothesis) from the way it was measured and therefore needs to be defined (categorical vs. continuous).

So yes, it goes into Fixed Factors because it’s categorical.

And don’t apologize for getting confused with terminology–that’s my whole point. The imprecision of the terminology is what makes it so confusing! 🙂 Karen

October 31, 2013 at 5:29 pm

Hello Karen

I was going through the discussion and had the same confusion. My study evaluates the effectiveness of a school-based program on preschoolers’ behavior problems. My intervention and control groups differ on the strength of students in class, measured in categories, and on fathers’ education, also measured in categories. I need to see if the ANCOVA results remain significant after controlling for these baseline differences. The covariate option in SPSS should be a continuous measure, so how should I do the analysis with categorical variables? Please answer soon.

November 8, 2013 at 11:36 am

If a control variable is simply a categorical variable, put it into “Fixed Factors” instead of Covariate in Univariate GLM. By default, SPSS will also add in an interaction term, but you can take that out in the Design dialog box.
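Outside SPSS, the same idea can be sketched by hand: a categorical control variable enters the model as a set of 0/1 indicator columns rather than as a single numeric column. The example below is only an illustration with made-up, noise-free numbers; `dummy_code`, `group`, and `education` are hypothetical names, and NumPy least squares stands in for the GLM procedure.

```python
import numpy as np

def dummy_code(labels):
    """Return (levels, indicator matrix), using the first sorted level as reference."""
    levels = sorted(set(labels))
    cols = [[1.0 if lab == lev else 0.0 for lab in labels] for lev in levels[1:]]
    return levels, np.array(cols).T

# Hypothetical data: intervention (1) vs control (0), plus a categorical control variable.
group = np.array([0.0, 1.0, 0.0, 1.0, 0.0, 1.0])
education = ["primary", "primary", "secondary", "secondary", "tertiary", "tertiary"]
levels, edu_dummies = dummy_code(education)

# Made-up outcome: a group effect of 3, plus shifts of 2 and 4 for the education levels.
y = 5.0 + 3.0 * group + 2.0 * edu_dummies[:, 0] + 4.0 * edu_dummies[:, 1]

# Main-effects model only: intercept, group, education indicators (no interaction term).
X = np.column_stack([np.ones(len(group)), group, edu_dummies])
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
group_effect = coef[1]   # group difference after controlling for education
```

Treating the three education categories as one numeric column coded 1, 2, 3 would instead force equal spacing between the categories, which is the assumption the indicator coding avoids.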

fyi, if it’s helpful, we have a workshop available on demand that goes through all these details of SPSS GLM: http://theanalysisinstitute.com/spss-glm-ondemand-workshop/

August 15, 2012 at 10:40 am

Please, what is the relationship between the covariate’s partial eta squared in an ANCOVA result and the partial eta squared of the treatment and moderator variables? What is the implication of the covariate’s value (the covariate can be the pretest) being higher than that of the main treatment or moderator variable?

September 11, 2012 at 4:54 pm

I don’t know–I’d need more information on the model. For example, is the covariate different from the moderator? Which interactions are being included?

Thanks, Karen

July 19, 2012 at 9:26 am

Thanks a lot Karen. Your notes were very helpful. I have been looking for the answers in tens of books for several months. Thank God, many of my uncertainties on the GLM command are answered today on your site. FYI, in my area of study (accountancy), the GLM command is almost nonexistent in the literature.

July 19, 2012 at 10:43 am

I’m so glad.

Most of the time in the literature it will be called ANOVA, ANCOVA or linear regression. But they’re all the same model and all can be run in GLM.

May 2, 2012 at 1:05 am

Very clear. Found the answer I was looking for. Thanks much! I’ll pass on word of your site.

May 3, 2012 at 2:39 pm

Thanks, Ben! Glad it was helpful.

April 11, 2012 at 9:33 am

Hi Akinboboye,

I would suggest starting with the SPSS category link at the right.

If you need more help at the beginning level, I’d be happy to send you my book (I have a few extra copies) for the cost of shipping. Please email me directly.

If you want more help with the concepts discussed above, you really want the Running Regressions and ANCOVAs in SPSS GLM workshop. It walks you through the univariate GLM procedure step-by-step and shows where it’s the same and where it’s different from the regression procedure. You can get to that here: http://www.theanalysisinstitute.com/workshops/SPSS-GLM/index.html

It’s not running right now, but you can use our contact form to get access to it as a home study workshop.

Best, Karen

April 10, 2012 at 7:57 pm

I really enjoy this write-up. Kindly send me details on how to use spss. Thanks


What is a Covariate in Statistics?

In statistics, researchers are often interested in understanding the relationship between one or more explanatory variables and a response variable .

However, occasionally there may be other variables that can affect the response variable that are not of interest to researchers. These variables are known as covariates .

Covariates: Variables that affect a response variable, but are not of interest in a study.

For example, suppose researchers want to know if three different studying techniques lead to different average exam scores at a certain school. The studying technique is the explanatory variable and the exam score is the response variable.

However, there’s bound to exist some variation in the student’s studying abilities within the three groups. If this isn’t accounted for, it will be unexplained variation within the study and will make it harder to actually see the true relationship between studying technique and exam score.

One way to account for this could be to use the student’s current grade in the class as a covariate . It’s well known that the student’s current grade is likely correlated with their future exam scores.

Thus, although current grade is not a variable of interest in this study, it can be included as a covariate so that researchers can see if studying technique affects exam scores even after accounting for the student’s current grade in the class.

Covariates appear most often in two types of settings: ANOVA (analysis of variance) and Regression.

Covariates in ANOVA

When we perform an ANOVA (whether it’s a one-way ANOVA , two-way ANOVA , or something more complex), we’re interested in finding out whether or not there is a difference between the means of three or more independent groups.

In our previous example, we were interested in understanding whether or not there was a difference in mean exam scores between three different studying techniques. To understand this, we could have conducted a one-way ANOVA.

However, since we knew that a student’s current grade was also likely to affect exam scores we could include it as a covariate and instead perform an ANCOVA (analysis of covariance).

This is similar to an ANOVA, except that we include a continuous variable (student’s current grade) as a covariate so that we can understand whether or not there is a difference in mean exam scores between the three studying techniques, even after accounting for the student’s current grade .
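To make the idea concrete, an ANCOVA can be written as a linear model with indicator columns for the groups plus one column for the covariate. The sketch below is illustrative only: the scores are made up and noise-free, the variable names are hypothetical, and NumPy least squares is used instead of a dedicated ANCOVA routine. It compares the raw group means with means adjusted to a common current grade.

```python
import numpy as np

# Hypothetical data: three studying techniques (0, 1, 2), three students each.
technique = np.array([0, 0, 0, 1, 1, 1, 2, 2, 2])
grade = np.array([60.0, 70.0, 80.0, 70.0, 80.0, 90.0, 50.0, 60.0, 70.0])

# Made-up exam scores: technique effects of 0, 3 and 5 points,
# plus 0.7 exam points per point of current grade.
effects = np.array([0.0, 3.0, 5.0])
exam = 20.0 + 0.7 * grade + effects[technique]

# Raw group means, which mix technique effects with differences in current grade.
raw_means = np.array([exam[technique == g].mean() for g in range(3)])

# ANCOVA as a linear model: intercept, two technique indicators, covariate.
X = np.column_stack([
    np.ones_like(grade),
    (technique == 1).astype(float),
    (technique == 2).astype(float),
    grade,
])
coef, *_ = np.linalg.lstsq(X, exam, rcond=None)

# Adjusted means: predicted exam score for each technique at the overall mean grade.
adjusted_means = coef[0] + np.array([0.0, coef[1], coef[2]]) + coef[3] * grade.mean()
```

In this made-up data the third technique has the lowest raw mean (67, against 69 and 79) only because its students started with lower grades; once every group is evaluated at the same current grade, it has the highest adjusted mean (74, against 69 and 72).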

Covariates in Regression

When we perform a linear regression, we’re interested in quantifying the relationship between one or more explanatory variables and a response variable.

For example, we could run a simple linear regression to quantify the relationship between square footage and house prices in a certain city. However, it may be known that the age of a house is also a variable that affects house price.

In particular, older houses may tend to have lower prices. In this case, the age of the house would be a covariate since we’re not actually interested in studying it, but we know that it has an effect on house price.

Thus, we could include house age as an explanatory variable and run a multiple linear regression with square footage and house age as explanatory variables and house price as the response variable.

The regression coefficient for square footage would then tell us the average change in house price associated with a one unit increase in square footage after accounting for house age.
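The effect of adding the covariate can be seen in a small sketch. The numbers below are invented and noise-free, the variable names are hypothetical, and NumPy least squares is used as a stand-in for any regression routine. Because larger houses in this toy data also tend to be newer, the simple regression slope for square footage absorbs part of the age effect.

```python
import numpy as np

# Hypothetical houses: bigger houses here also happen to be newer.
sqft = np.array([1000.0, 1500.0, 2000.0, 2500.0, 3000.0])
age = np.array([40.0, 30.0, 35.0, 10.0, 5.0])

# Made-up prices: 100 per square foot, minus 1000 per year of age.
price = 50_000.0 + 100.0 * sqft - 1_000.0 * age

def ols(columns, y):
    """Least-squares coefficients for an intercept plus the given predictor columns."""
    X = np.column_stack([np.ones_like(y)] + list(columns))
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

simple = ols([sqft], price)          # price ~ sqft
multiple = ols([sqft, age], price)   # price ~ sqft + age

sqft_slope_unadjusted = simple[1]
sqft_slope_adjusted = multiple[1]
```

Here the unadjusted slope comes out at 118 per square foot, while the slope after accounting for house age recovers the 100 used to build the data.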

Additional Resources

- An Introduction to ANCOVA (Analysis of Covariance)

- How to Interpret Regression Coefficients

- How to Perform an ANCOVA in Excel

- How to Perform Multiple Linear Regression in Excel

- Standardized vs. Unstandardized Regression Coefficients


Chapter 16. Understanding covariates: simple regression and analyses that combine covariates and factors

This chapter introduces approaches to model continuous data as an independent variable. We refer to continuous independent variables as ‘covariates’.

Until now, we have considered statistical tools that allow one to compare and estimate differences of averages between groups (e.g., t-tests, 1- and multi-Factor GLMs): i.e., we have modeled data where the independent variable comprises ‘treatments’ or ‘levels’. Sometimes we wish to examine effects of a ‘continuous’ variable instead. Height, weight, speed, mass, volume, length and density are all examples of ‘continuous’ variables: they can take any real-number value within a given range.

We first introduce linear regression. This technique fits a straight line through data with continuous data on both the x- and y-axes (the independent and dependent variables, respectively). Linear regression allows one to estimate the slope (and y-intercept) for the relationship between the x- and y-variables, with appropriate estimates of uncertainty (i.e., standard errors, 95% confidence intervals); it also provides evidence (p-values) to judge whether the estimates of the slope or intercept differ from zero. That said, one could also use the output from linear regression to judge whether the line’s slope differs from any arbitrary value (not just zero), which we describe below.
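As a minimal sketch of these quantities (an illustration with made-up numbers, not the course's own analysis), the slope, its standard error, and a t-statistic against any hypothesized slope can be computed directly from the usual least-squares formulas. The function name `slope_test` is hypothetical, and converting the t-statistic to a p-value would additionally require the t-distribution with n - 2 degrees of freedom, which is not done here.

```python
import numpy as np

def slope_test(x, y, hypothesized_slope=0.0):
    """Return (slope, standard error, t-statistic) for a simple linear regression."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    sxx = np.sum((x - x.mean()) ** 2)
    slope = np.sum((x - x.mean()) * (y - y.mean())) / sxx
    intercept = y.mean() - slope * x.mean()
    residuals = y - (intercept + slope * x)
    mse = np.sum(residuals ** 2) / (n - 2)    # residual variance on n - 2 df
    se_slope = np.sqrt(mse / sxx)
    t_stat = (slope - hypothesized_slope) / se_slope
    return slope, se_slope, t_stat

# Made-up data lying close to a line with slope 2.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, se, t_vs_zero = slope_test(x, y)                           # H0: slope = 0
_, _, t_vs_two = slope_test(x, y, hypothesized_slope=2.0)         # H0: slope = 2
```

For these numbers the t-statistic against zero is large, while the t-statistic against a slope of 2 is close to zero, which shows how the same fit can address different null values.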

This technique allows us to ask questions like, “does a flower’s width (independent variable) affect the amount of pollen removed from the flower (dependent variable)?”, or “does metabolic rate (dependent variable) change with ambient temperature (independent variable)?”. Note that, when conducting linear regression, we assume that:

- The covariate (i.e., x-axis; independent variable) affects the dependent variable (y-axis). Therefore, we must consider the variables’ functional relationship to decide which will be the independent variable and which the dependent variable. For example, in the flower size example, above, we would model flower size and amount of pollen removed as the independent and dependent variables, respectively. This is because it makes biological sense to hypothesize that flower size affects pollen removal (e.g., by affecting how a pollinator handles a flower), but it makes little sense to hypothesize that the amount of pollen removed would determine how big a flower was.

- The covariate (x-variable) is measured precisely (i.e., with little measurement error) or is controlled by the experimenter.

Note that some biological disciplines commonly analyze models that include multiple covariates (i.e., ‘multiple regression’). We will discuss analyses with multiple covariates in the future.

We next introduce models that include a single covariate as well as one or more factors. This approach was once called ‘ANCOVA’ (i.e., ANalysis of CO-VAriance); in a general linear model context, we simply note that a glm includes both a covariate(s) and a factor(s).

As we saw in the Chapter, “Analyzing experiments with multiple factors”, models that include both a covariate and at least one factor allow a researcher to assess evidence for multiple hypotheses, simultaneously.

For example, imagine that we wished to compare the dispersal of seeds from maple trees vs. ash trees. Both trees produce seeds with ‘wings’, but their morphology differs (see pictures, below). We might conduct an experiment involving a random sample of seeds from each tree species. We could drop a seed from a known height and measure the distance it travels; we could repeat this process for many seeds for each species at a variety of known heights (ranging from, say, 3 to 25 meters, which spans biologically plausible heights). With these data, we could address several hypotheses:

- Does the covariate (Height) affect Dispersal distance after accounting for effects of the factor (tree Species)? i.e., This hypothesis tests whether we have evidence for a linear relationship between Height and Distance, accounting for differences between species.

- Does Dispersal distance differ between levels of the factor (Tree Species) after accounting for effects of the covariate (Height)?

- Do the slopes of the relationships between Height and Dispersal distance differ between levels of the factor (Tree Species)? i.e., do we find evidence for an interaction between the covariate and the factor?
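The third hypothesis can be sketched as a comparison of two nested models, one with and one without a Height × Species column. Everything below is an assumption made for illustration: the distances are invented, the variable names are hypothetical, and NumPy least squares stands in for the course's GLM software.

```python
import numpy as np

# Hypothetical drop heights (m), five per species.
height = np.tile([3.0, 8.0, 13.0, 18.0, 23.0], 2)
is_ash = np.repeat([0.0, 1.0], 5)     # 0 = maple, 1 = ash

# Made-up distances: different intercepts and slopes per species, plus small fixed noise.
noise = np.array([0.10, -0.10, 0.05, -0.05, 0.00, -0.05, 0.10, -0.10, 0.00, 0.05])
distance = np.where(is_ash == 1.0, 2.0 + 0.8 * height, 1.0 + 0.5 * height) + noise

def fit(X, y):
    """Return least-squares coefficients and the residual sum of squares."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    sse = float(np.sum((y - X @ coef) ** 2))
    return coef, sse

ones = np.ones_like(height)
X_reduced = np.column_stack([ones, height, is_ash])                 # common slope
X_full = np.column_stack([ones, height, is_ash, height * is_ash])   # separate slopes

_, sse_reduced = fit(X_reduced, distance)
coef_full, sse_full = fit(X_full, distance)

# F-statistic for the interaction: 1 numerator df, n - 4 error df.
df_error = len(distance) - X_full.shape[1]
f_interaction = (sse_reduced - sse_full) / (sse_full / df_error)

slope_maple = coef_full[1]
slope_ash = coef_full[1] + coef_full[3]
```

A large F-statistic here reflects that forcing a common slope leaves much more unexplained variation than allowing each species its own slope. When the interaction is negligible, the first two hypotheses can be addressed with the common-slope model.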

As noted in the Chapter, Analyzing experiments with multiple factors, these hypotheses differ qualitatively from those we might ask with, say, 1-factor glm. Therefore, understanding analyses that include covariates increases the scope of biological questions we might investigate beyond simpler methods. Studies in ecology and evolution analyze models with covariates on a regular basis. However, my experience is that analyses in biomedical sciences rarely include covariates (with notable exceptions, e.g., epidemiology), but might benefit from doing so.

We might include a covariate in an analysis for several reasons. Foremost, we could include a covariate in a model because we’re specifically interested in its biological effect; this reason should be self-evident. However, we might also model the effects of a covariate not because the covariate interests us biologically, per se, but because including the covariate may help us understand effects of another term in our model (e.g., a factor).

First, we might include a covariate to account for confounding effects in a study. For example, imagine that we wished to test whether blood pressure differed between adult human females vs. males. To test this, we might measure blood pressure for an appropriate sample of many females and males and analyze the data with a 1-factor general linear model (blood pressure and Sex would be the dependent and independent variables, respectively).

However, we might also know that body size can affect blood pressure and that, on average, mass differs between females and males. Therefore, if we found evidence for differences in blood pressure between females and males in our 1-factor glm, we might wonder whether an apparent effect of Sex arose due to differences in body size between the Sexes, rather than other biological aspects of Sex. A model that included body size as a covariate would help resolve this issue because the results for the effect of Sex would have accounted for effects of body size; i.e., we test whether Sex affects blood pressure independent of differences due to body size.

Clearly, this approach can deepen understanding of biology. Second, we might include a covariate because, if the covariate accounts for a reasonable amount of variation in the dependent variable, we increase statistical power to examine effects of a factor that interests us; again, this provides clear benefits.

Conversely, including a covariate that does not explain reasonable variation in the dependent variable decreases power to examine effects of a factor. Therefore, we should think carefully about including a given covariate in an analysis. But, with this careful thinking, covariates improve analyses and provide deeper biological understanding.
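The trade-off can be seen in how the error sum of squares and error degrees of freedom change. The sketch below simulates data under assumptions chosen purely for illustration (a two-level factor, one covariate strongly related to the response, and one unrelated covariate); all names are hypothetical and the fits use NumPy least squares.

```python
import numpy as np

rng = np.random.default_rng(0)
n_per_group = 20
group = np.repeat([0.0, 1.0], n_per_group)     # two-level factor
useful = rng.normal(size=group.size)           # covariate strongly related to y
useless = rng.normal(size=group.size)          # covariate unrelated to y
y = 1.0 * group + 3.0 * useful + rng.normal(scale=1.0, size=group.size)

def error_summary(columns):
    """Return (error SS, error df, error mean square) for intercept + columns."""
    X = np.column_stack([np.ones_like(y)] + list(columns))
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    sse = float(np.sum((y - X @ coef) ** 2))
    df = len(y) - X.shape[1]
    return sse, df, sse / df

sse_f, df_f, mse_f = error_summary([group])             # factor only
sse_u, df_u, mse_u = error_summary([group, useful])     # factor + useful covariate
sse_n, df_n, mse_n = error_summary([group, useless])    # factor + unrelated covariate
```

Each covariate costs one error degree of freedom. The useful covariate repays that cost by sharply reducing the error mean square against which the factor is tested, whereas the unrelated covariate removes a degree of freedom while explaining almost nothing.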

The videos and practice problems, below, equip you with the skills to implement models with a covariate.

Introduction to linear regression

An introduction to GLM with 1 covariate, and comparison with 1-Factor GLM

Link to sharepoint folder for example 1 regression axon

Covariate vs 1-Factor

This video demonstrates that a 1-Factor GLM works in a similar manner as a 1-covariate GLM

Example regression

An example regression analysis (with 1 covariate).

Please note that this video needs to be updated to also discuss the third residual plot (where the square root of standardized residuals lies on the y-axis). If you pause the video at this point, you will see that the red line is not perfectly flat, but it is sufficiently flat that we do not worry about equal variance.

The p-value should also be described as strong evidence for an effect.

Beware of extrapolating and a summary of regression

This video discusses perils of extrapolating beyond the data and provides a summary overview of regression.

An introduction to analyzing factors and covariates simultaneously

This video provides a conceptual introduction to GLMs that include both Factors and Covariates as independent variables. It considers the types of biological questions that can be addressed, lists assumptions of this approach, and briefly compares this approach to GLMs with multiple Factors.

GLM with factor and covariate Example: Blood Pressure

This video walks through an analysis of measurements of undergraduate students at the University of Edinburgh: we test whether weight and sex affect systolic blood pressure. The video provides:

i) simple suggestions to plot data;

ii) two approaches to analyze the data.

iii) guidance when an interaction between a factor and covariate appears present vs. absent in the data

Please note that I mis-speak at the very end of the video, where I say there's a typo about d.f., when reporting the results (362 vs 361) (there is no typo; the df come from different models, which I forgot under the pressure of arriving to the end of a long video!)

This video needs to be edited to consider the third residual plot (where we find the square root of standardized residuals on the y-axis). If you pause the video on this plot (you have to be quick!) you will see that this plot indicates the data meet the assumption of equal variance: the red line is flat and the points are evenly spread around the line.

The video should also be edited with respect to interpreting p-values. The interaction has p = 0.917, which constitutes (at most) weak evidence for an interaction. The p-value for the effect of Adj.Weight is interpreted as strong evidence for an effect; similarly, we eventually find p-values that provide strong evidence for an effect of Sex.

data on sharepoint chapter 16

Practice problems and answers

Recommended Reading

Grafen & Hails: Modern statistics for the life sciences, Chapter 2. A nice introduction to Regression

Ruxton & Colegrave: Experimental Design for the life sciences (4th Edition), Chapter 9. This chapter includes some material that is not directly related to our current chapter on ‘covariates’. However, Ruxton & Colegrave’s chapter does nicely discuss experimental design with respect to covariates, and discusses interactions between covariates and factors.

Whitlock & Schluter: The analysis of biological data, Chapter 17. Another nice introduction to regression.

Whitlock & Schluter, ‘The analysis of biological data’; Chapter: ‘Multiple explanatory variables’. This Chapter provides a generally great introduction to models with multiple explanatory variables, including models with both factors and covariates. It includes some materials that we discuss in our previous Chapter, dealing with multi-factor models.

Covariate in Statistics: Definition and Examples

What is a covariate?

In statistical experiments, researchers often measure the independent variable (main treatment variable) and the dependent variable (response to treatment).

Additionally, there could be other variables known to affect the dependent variable besides the main treatment variable. Generally, this variable is not of main interest in the experiment and is referred to as a covariate .

Definition: A covariate is a variable that is not of main interest in an experiment but can affect the dependent variable and the relationship between the independent and dependent variables.

The covariate is typically not a planned treatment variable; it often arises from the underlying experimental conditions.

Covariates should be identified and included in the analysis to increase the accuracy of the statistical model and reduce its unexplained variation. Because it is adjusted for in this way, a covariate is also known as a control variable.

Covariate example

For example, a plant researcher wants to test whether the yield of the plant depends on the genotype of the plant. The researcher collects the data for a yield of the different plant genotypes.

However, the researcher also knows that the height of the plants also affects the plant yield. The plant height is not of primary interest to the researcher, but it should be considered in a statistical model to get accurate results.

In this example, plant genotype is an independent variable (main treatment variable), plant yield is a dependent variable (response variable), and plant height is a covariate .

Note: In ANCOVA, the covariate is a continuous variable. Categorical variables that need to be controlled for are entered as factors instead.

Covariate in Statistical analysis

The ANCOVA (Analysis of Covariance) and regression analysis are commonly used statistical methods that consider covariate in the model.

ANCOVA (Analysis of Covariance)

ANCOVA is an extension to ANOVA in which a covariate is considered in the statistical model.

ANCOVA analyzes the effect of the independent variable (main treatment variable) on the dependent variable while accounting for a covariate in the study.

In the example discussed above, the researcher could have used one-way ANOVA to study the effect of plant genotypes on yield. But these results could be misleading without considering the effect of plant height (covariate) on plant yield.

ANCOVA includes the covariate in the model and estimates the differences among genotypes while adjusting for the effect of plant height (covariate). ANCOVA increases the precision of the model by removing the variance associated with the covariate.

Regression analysis

Simple and multiple regression analyses are useful for studying the relationship between independent variables and a dependent variable.

Simple regression analysis is used to understand the relationship between one independent variable and the dependent variable, whereas multiple regression analysis is used to understand the relationship between multiple independent variables and the dependent variable.

For example, the effect of plant height on plant yield can be quantified using simple regression analysis.

Suppose the researcher also knows that ambient temperature affects plant yield. In this case, ambient temperature could be used as a covariate in the model.

A multiple regression analysis can then be performed with plant height and ambient temperature as independent variables and plant yield as the dependent variable.

The multiple regression analysis reports regression coefficients (slopes) and an adjusted R-squared. The coefficient for plant height estimates the effect of plant height on plant yield after adjusting for ambient temperature, and the adjusted R-squared summarizes how much of the variation in yield the model explains.

Note : Sometimes in regression analysis, the independent variables are also known as covariates. This is because they predict the outcome of the dependent variable and can be of primary interest.


Understanding covariates

What is a covariate?

Covariates are commonly used in ANOVA and DOE. In these models, a covariate is any continuous variable, which is usually not controlled during data collection. Including covariates in the model allows you to include and adjust for input variables that were measured but not randomized or controlled in the experiment. Adding covariates can greatly improve the accuracy of the model and may significantly affect the final analysis results. Including a covariate in the model can reduce the error in the model and so increase the power of the factor tests. Common covariates include ambient temperature, humidity, and characteristics of a part or subject before a treatment is applied.

For example, an engineer wants to study the level of corrosion on four types of iron beams. The engineer exposes each beam to a liquid treatment to accelerate corrosion, but cannot control the temperature of the liquid. Temperature is a covariate that should be considered in the model.

In a DOE, an engineer may be interested in the effect of the covariate ambient temperature on the drying time of two different types of paint.

Example of adding a covariate to a general linear model

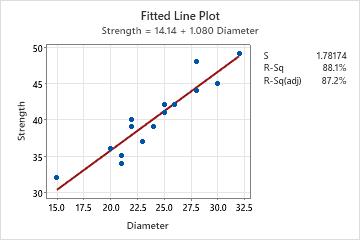

A textile company uses three different machines to manufacture monofilament fibers. They want to determine whether the breaking strength of the fiber differs based on which machine is used. They collect data on the strength and diameter for 5 randomly selected fibers from each machine. Because fiber strength is related to its diameter, they also record the fiber diameter for use as a possible covariate.

- Choose Stat > Regression > Fitted Line Plot.

- In Response (Y), enter Strength.

- In Predictor (X), enter Diameter.

- Assess how closely the data fall beside the fitted line and how close R² is to a "perfect fit" (100%).

The fitted line plot indicates a strong linear relationship (87.2%) between diameter and strength.
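As a rough check on the fitted line, the sketch below computes R² and adjusted R² for a simple linear regression of strength on diameter in plain Python. The data values are an assumption: they are not listed in this article and are taken from the widely reproduced textbook fiber dataset that this example appears to follow. With these assumed values, R² is about 88.1% and adjusted R² is about 87.2%.

```python
# ASSUMED data (not listed in the article): 15 fibers, 5 per machine.
diameter = [20, 25, 24, 25, 32, 22, 28, 22, 30, 28, 21, 23, 26, 21, 15]
strength = [36, 41, 39, 42, 49, 40, 48, 39, 45, 44, 35, 37, 42, 34, 32]

n = len(diameter)
mean_x = sum(diameter) / n
mean_y = sum(strength) / n

# Sums of squares and cross-products about the means.
sxx = sum((x - mean_x) ** 2 for x in diameter)
syy = sum((y - mean_y) ** 2 for y in strength)
sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(diameter, strength))

# For a one-predictor regression, R^2 is the squared correlation.
r_squared = sxy ** 2 / (sxx * syy)

# Adjusted R^2 penalizes for the one fitted slope (n - 2 error df).
adj_r_squared = 1 - (1 - r_squared) * (n - 1) / (n - 2)

print(f"R-squared:          {r_squared:.1%}")
print(f"adjusted R-squared: {adj_r_squared:.1%}")
```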

- Choose Stat > ANOVA > General Linear Model > Fit General Linear Model.

- In Responses, enter Strength.

- In Factors, enter Machine.

- In Covariates, enter Diameter.

For the fiber production data, Minitab displays the following results:

General Linear Model: Strength versus Diameter, Machine

The F-statistic for machines is 2.61 and the p-value is 0.118. Because the p-value is greater than 0.05, you fail to reject, at the 5% significance level, the null hypothesis that the fiber strengths do not differ based on the machine used. The data do not provide evidence that fiber strength differs among the machines. Notice that the F-statistic for diameter (the covariate) is 69.97 with a p-value reported as 0.000 (that is, less than 0.0005). This indicates that the covariate effect is significant. That is, diameter has a statistically significant impact on the fiber strength.

Now, suppose you rerun the analysis and omit the covariate. This will result in the following output:

General Linear Model: Strength versus Machine

Notice that the F-statistic is 4.09 with a p-value of 0.044. Without the covariate in the model, you reject the null hypothesis at the 5% significance level and conclude the fiber strengths do differ based on which machine is used.

This conclusion is the opposite of the one you reached when you performed the analysis with the covariate. This example shows how failing to include a covariate can produce misleading analysis results.
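The two analyses can be sketched outside Minitab with nothing but sums of squares. The code below is an illustrative plain-Python version, not Minitab's implementation. The data values are an assumption: the article does not list them, and they are taken from the widely reproduced textbook fiber dataset that this example appears to follow. The model with the covariate assumes a single common slope for diameter across machines.

```python
# ASSUMED data (not listed in the article): (strength, diameter) pairs.
fibers = {
    "Machine 1": [(36, 20), (41, 25), (39, 24), (42, 25), (49, 32)],
    "Machine 2": [(40, 22), (48, 28), (39, 22), (45, 30), (44, 28)],
    "Machine 3": [(35, 21), (37, 23), (42, 26), (34, 21), (32, 15)],
}


def centered_sums(pairs):
    """Return (Syy, Sxx, Sxy) about the means of the given pairs."""
    n = len(pairs)
    mean_y = sum(y for y, _ in pairs) / n
    mean_x = sum(x for _, x in pairs) / n
    syy = sum((y - mean_y) ** 2 for y, _ in pairs)
    sxx = sum((x - mean_x) ** 2 for _, x in pairs)
    sxy = sum((y - mean_y) * (x - mean_x) for y, x in pairs)
    return syy, sxx, sxy


all_pairs = [pair for group in fibers.values() for pair in group]
n, a = len(all_pairs), len(fibers)  # observations, machines

# Total sums about the grand means, and pooled within-machine sums.
t_yy, t_xx, t_xy = centered_sums(all_pairs)
within = [centered_sums(group) for group in fibers.values()]
e_yy = sum(w[0] for w in within)
e_xx = sum(w[1] for w in within)
e_xy = sum(w[2] for w in within)

# Without the covariate: ordinary one-way ANOVA on machine.
f_machine_anova = ((t_yy - e_yy) / (a - 1)) / (e_yy / (n - a))

# With the covariate: compare error sums of squares of nested models.
sse_full = e_yy - e_xy ** 2 / e_xx            # machine + diameter
sse_diameter_only = t_yy - t_xy ** 2 / t_xx   # diameter only
mse = sse_full / (n - a - 1)
f_machine_ancova = ((sse_diameter_only - sse_full) / (a - 1)) / mse
f_diameter = (e_xy ** 2 / e_xx) / mse

print(f"F for machine, no covariate:   {f_machine_anova:.2f}")
print(f"F for machine, with covariate: {f_machine_ancova:.2f}")
print(f"F for diameter (covariate):    {f_diameter:.2f}")
```

With the assumed data, the three F-statistics agree with the values quoted above (4.09, 2.61, and 69.97). The sketch stops at the F-statistics; turning them into p-values requires the F distribution, which the Python standard library does not provide.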

9.1: Role of the Covariate

- Penn State's Department of Statistics

- The Pennsylvania State University

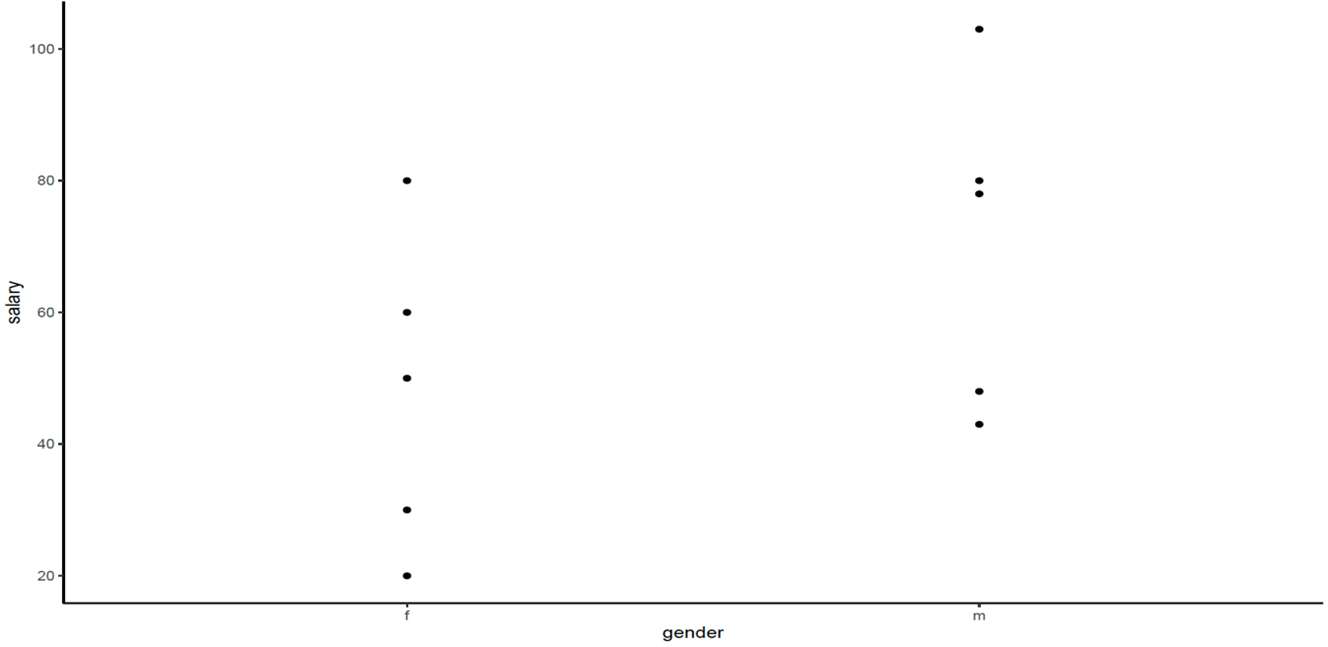

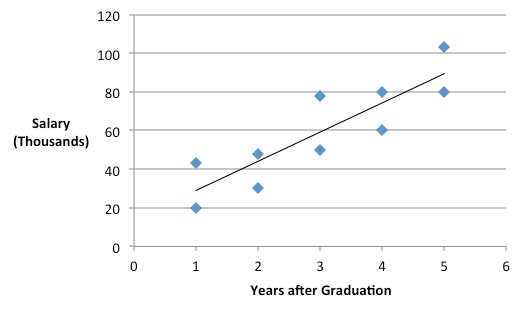

To illustrate the role the covariate has in the ANCOVA, let’s look at a hypothetical situation wherein investigators are comparing the salaries of male vs. female college graduates. A random sample of 5 individuals for each gender is compiled, and a simple one-way ANOVA is performed:

\(H_{0}: \ \mu_{\text{males}} = \mu_{\text{females}}\)

SAS Example

SAS coding for the One-way ANOVA:

Here is the output we get:

Minitab Example

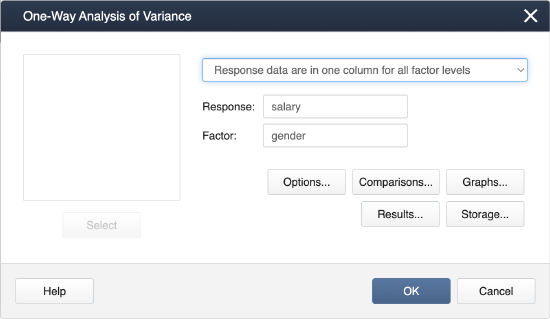

To perform a one-way ANOVA test in Minitab, you can first open the data (ANCOVA Example Minitab Data) and then select Stat > ANOVA > One Way…

In the pop-up window that appears, select salary as the Response and gender as the Factor.

Click OK, and the output is as follows.

Analysis of Variance

Model summary.

- Load the ANCOVA example data.

- Obtain the ANOVA table.

- Plot the data.

1. Load the ANCOVA example data and obtain the ANOVA table by using the following commands:

2. Plot for the data, salary by gender, by using the following commands:

3. Plot for the data, salary vs years, by using the following commands:

Because the \(p\)-value > \(\alpha\) (=0.05), they can't reject the \(H_{0}\).

A plot of the data shows the situation:

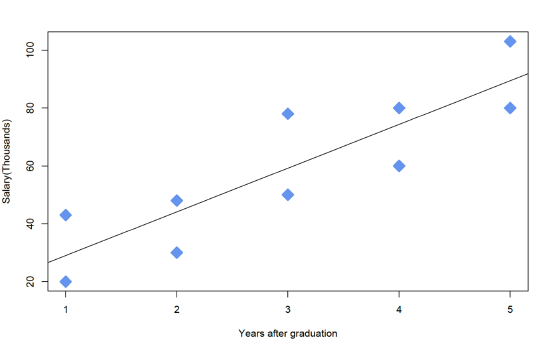

However, it is reasonable to assume that the length of time since graduation from college is also likely to influence one's income. So, more appropriately, the duration since graduation, a continuous variable, should also be included in the analysis, and the required data is shown below.

The plot above indicates an upward linear trend between salary and the number of years since graduation, which could be a marker for experience and/or postgraduate education. The fundamental idea of including a covariate is to take this trend into account and to "control" it effectively. In other words, including the covariate in the ANOVA means that males and females are compared after accounting for the covariate.
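One way to see what "after accounting for the covariate" means is to compute covariate-adjusted group means. The sketch below uses made-up, noise-free numbers (not the course data, which is not reproduced here): salary in thousands is built as a gender-specific baseline plus 3 per year since graduation, and the females in this hypothetical sample have more years since graduation. The names and values are assumptions chosen only to show the mechanics.

```python
# Hypothetical data (NOT the course dataset): years since graduation.
years = {
    "male": [1, 2, 3, 4, 5],
    "female": [4, 5, 6, 7, 8],
}
# Salary (in thousands) = baseline + 3 * years; baselines are assumptions.
baseline = {"male": 50.0, "female": 40.0}
salary = {g: [baseline[g] + 3.0 * x for x in years[g]] for g in years}


def mean(values):
    return sum(values) / len(values)


# Pooled within-group slope of salary on years (common-slope ANCOVA).
e_xx = 0.0
e_xy = 0.0
for g in years:
    mx, my = mean(years[g]), mean(salary[g])
    e_xx += sum((x - mx) ** 2 for x in years[g])
    e_xy += sum((x - mx) * (y - my) for x, y in zip(years[g], salary[g]))
pooled_slope = e_xy / e_xx

# Adjusted mean: each group's mean salary shifted to the overall mean
# number of years since graduation.
grand_mean_years = mean([x for g in years for x in years[g]])
raw_mean = {g: mean(salary[g]) for g in years}
adjusted_mean = {
    g: raw_mean[g] - pooled_slope * (mean(years[g]) - grand_mean_years)
    for g in years
}

for g in years:
    print(f"{g}: raw mean {raw_mean[g]:.1f}, adjusted mean {adjusted_mean[g]:.1f}")
```

In this constructed example the raw means are nearly equal, yet the adjusted means differ by 10, because the difference in years since graduation masks the difference in baseline salary.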

Quick Reference

(covariable) n. (in statistics) a continuous variable that is not part of the main experimental manipulation but has an effect on the dependent variable. The inclusion of covariates increases the power of the statistical test and removes the bias of confounding variables (which have effects on the dependent variable that are indistinguishable from those of the independent variable).

From: covariate in Concise Medical Dictionary

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Velentgas P, Dreyer NA, Nourjah P, et al., editors. Developing a Protocol for Observational Comparative Effectiveness Research: A User's Guide. Rockville (MD): Agency for Healthcare Research and Quality (US); 2013 Jan.

Chapter 7 Covariate Selection

Brian Sauer, PhD, M. Alan Brookhart, PhD, Jason A Roy, PhD, and Tyler J VanderWeele, PhD.

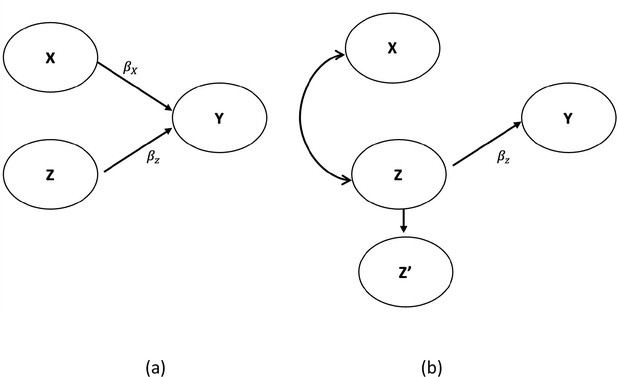

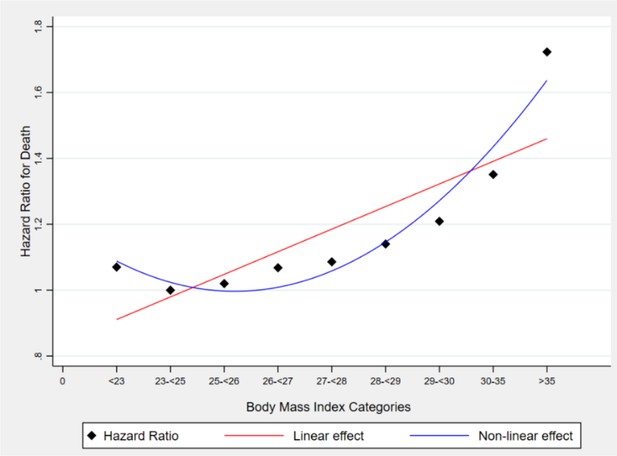

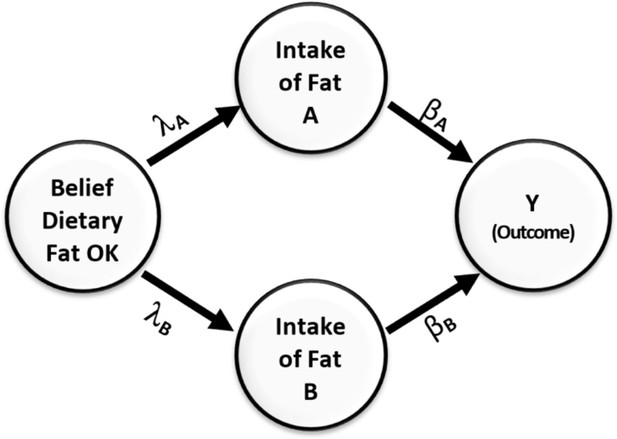

This chapter addresses strategies for selecting variables for adjustment in nonexperimental comparative effectiveness research (CER), and uses causal graphs to illustrate the causal network relating treatment to outcome. While selection approaches should be based on an understanding of the causal network representing the common cause pathways between treatment and outcome, the true causal network is rarely known. Therefore, more practical variable selection approaches are described, which are based on background knowledge when the causal structure is only partially known. These approaches include adjustment for all observed pretreatment variables thought to have some connection to the outcome, all known risk factors for the outcome, and all direct causes of the treatment or the outcome. Empirical approaches, such as forward and backward selection and automatic high-dimensional proxy adjustment, are also discussed. As there is a continuum between knowing and not knowing the causal, structural relations of variables, a practical approach to variable selection is recommended, which involves a combination of background knowledge and empirical selection using the high-dimensional approach. The empirical approach could be used to select from a set of a priori variables on the basis of the researcher's knowledge, and to ultimately select those to be included in the analysis. This more limited use of empirically derived variables may reduce confounding while simultaneously reducing the risk of including variables that could increase bias.

Introduction

Nonexperimental studies that compare the effectiveness of treatments are often strongly affected by confounding. Confounding occurs when patients with a higher risk of experiencing the outcome are more likely to receive one treatment over another. For example, consider two drugs used to treat hypertension—calcium channel blockers (CCB) and diuretics. Since many clinicians perceive CCBs as particularly useful in treating high-risk patients with hypertension, patients with a higher risk for experiencing cardiovascular events are more likely to be channeled into the CCB group, thus confounding the relation between antihypertensive treatment and the clinical outcomes of cardiovascular events. 1 The difference in treatment groups is a result of the differing baseline risk for the outcome and the treatment effects (if any). Any attempt to compare the causal effects of CCBs and diuretics on cardiovascular events would require taking patients' underlying risk for cardiovascular events into account through some form of covariate adjustment. The use of statistical methods to make the two treatment groups similar with respect to measured confounders is sometimes called statistical adjustment, control, or conditioning.
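The channeling described above can be illustrated with a small stratified calculation. The counts below are entirely hypothetical and are constructed so that the two drugs have identical event risks within each baseline-risk stratum, while high-risk patients are mostly given CCBs. The sketch compares the crude risk difference with one simple form of adjustment, direct standardization to the combined population. It is not the method recommended by the chapter, only a minimal example of adjusting for a measured confounder.

```python
# Hypothetical counts: stratum -> drug -> (events, patients).
counts = {
    "high_risk": {"CCB": (160, 800), "diuretic": (40, 200)},
    "low_risk": {"CCB": (10, 200), "diuretic": (40, 800)},
}
drugs = ["CCB", "diuretic"]


def crude_risk(drug):
    """Event risk for a drug, ignoring baseline-risk strata."""
    events = sum(counts[s][drug][0] for s in counts)
    patients = sum(counts[s][drug][1] for s in counts)
    return events / patients


def standardized_risk(drug):
    """Stratum-specific risks averaged over the whole study population."""
    total = sum(counts[s][d][1] for s in counts for d in drugs)
    risk = 0.0
    for s in counts:
        stratum_size = sum(counts[s][d][1] for d in drugs)
        events, patients = counts[s][drug]
        risk += (stratum_size / total) * (events / patients)
    return risk


crude_diff = crude_risk("CCB") - crude_risk("diuretic")
adjusted_diff = standardized_risk("CCB") - standardized_risk("diuretic")

print(f"crude risk difference:    {crude_diff:.3f}")
print(f"adjusted risk difference: {adjusted_diff:.3f}")
```

In these made-up data the crude comparison suggests that CCBs raise risk by 9 percentage points, but the difference vanishes once baseline risk is taken into account, because it was produced entirely by which patients received which drug.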

The purpose of this chapter is to address the complex issue of selecting variables for adjustment in order to compare the causative effects of treatments. The reader should note that the recommended variable selection strategies discussed are for nonexperimental causal models and not prediction or classification models, for which approaches may differ. Recommendations for variable selection in this chapter focus primarily on fixed treatment comparisons when employing the so-called “incident user design,” which is detailed in chapter 2.