The Smart Guide to the MEE

MEE Grading & Scoring

A Guide to Mastering the Multistate Essay Exam (MEE)

What you’ll learn:

- How the MEE is Graded & Scored

- MEE Grading Standards… with MEE Grading Key

- How an MEE Score is Determined – Raw Scores and Scaled Scores

- The Total Percentage Weight of an MEE Score (in each jurisdiction)

What You REALLY Need to Know About MEE Grading and Scoring

All written scores are combined and then scaled using a complex formula. For UBE jurisdictions, the written portions of the exam (MEE + MPT) are combined and scaled to a number between 1 and 200.

Beyond that, you don’t need to worry about the specifics of grading and scoring. Focus on studying the law, practicing essays so that you can write an excellent answer, and comparing your practice essays to the MEE Analyses released by the NCBE.

How an MEE Score is Determined (Raw Scores → Scaled Scores)

- Step # 1: Graders use a process called calibration to ensure fairness when grading and rank-ordering papers. Calibration is achieved by test-grading “calibration packets” of 30 student papers to see the range of answers, and then resolving any differences in grading among graders and/or papers. This process ensures graders apply the same criteria, so grading judgments are consistent for rank-ordering.³

- Step # 2: For UBE jurisdictions, an examinee’s scores for the MEE and MPT are combined to form the examinee’s combined written “raw score” for the exam. This combined written “raw score” is then scaled, which puts it on a 200-point scale. Specifically, the combined “raw score” is scaled to the mean and standard deviation of the scaled MBE scores for all examinees in the examinee’s jurisdiction (the state in which you take the bar exam). This means that an examinee’s written portion is scaled “relative” to the other examinee answers in that jurisdiction.

- Step # 3: The total written “scaled score” is weighted according to how much the written component is worth on that jurisdiction’s bar exam. For UBE jurisdictions, the total written “scaled score” is 50% of the total exam score (30% for the MEE + 20% for the MPT).

Total Weight of MEE Score

In other jurisdictions, the MEE/essay portion is normally worth between 30% and 45%. Some jurisdictions have additional state essays and/or have a minimum passing score for the MEE/essay portion.

| Jurisdiction | MEE / Essay % | Notes |
|---|---|---|
| Alabama | 30% | |
| Alaska | 30% | |
| Arizona | 30% | |
| Arkansas | 30% | |
| Colorado | 30% | |
| Connecticut | 30% | |
| D.C. – District of Columbia | 30% | |
| Hawaii | see note | The 6 MEE questions, 2 MPT tasks, and 15 Hawaii ethics multiple choice questions are equally weighted to 50% of the exam score. |
| Idaho | 30% | |
| Illinois | 30% | |
| Indiana | 30% | |
| Iowa | 30% | |
| Kansas | 30% | |
| Kentucky | 30% | |
| Maine | 30% | |
| Maryland | 30% | |
| Massachusetts | 30% | |
| Michigan | 30% | |
| Minnesota | 30% | |
| Mississippi | 45% | Includes 6 MEE essays + 6 Mississippi Essay Questions. |
| Missouri | 30% | |
| Montana | 30% | |
| Nebraska | 30% | |
| New Hampshire | 30% | |
| New Jersey | 30% | |
| New Mexico | 30% | |
| New York | 30% | |
| North Carolina | 30% | |
| North Dakota | 30% | |
| Ohio | 30% | |
| Oklahoma | 30% | |
| Oregon | 30% | |
| Pennsylvania | 30% | |
| Rhode Island | 30% | |
| South Carolina | 30% | |
| South Dakota | see note | Avg. score of 75% required for written component. Written component includes 2 MPTs, 5 MEE essays, & 1 South Dakota essay. |
| Tennessee | 30% | |
| Texas | 30% | |
| Utah | 30% | |
| Vermont | 30% | |
| Washington | 30% | |
| West Virginia | 30% | |
| Wisconsin | see note | Administers varying combinations of MEE, MPT, and local essays. The weight of each component varies per exam. |
| Wyoming | 30% | |
| Guam | 38.9% | Includes 6 MEE essays + 1 Essay Question based on local law. |
| Northern Mariana Islands | 30% | Includes 6 MEE essays + 2 Local Essay Questions. |
| Palau | see note | Must score 65 or higher on each component. Includes 6 MEE essays + Palau Essay Exam (consisting of 4 to 5 questions). |
| Virgin Islands | 30% | |

MEE Grading Standards

Many jurisdictions do not release their grading standards or grading scale, but a few states do.

Here are the grading standards and scale for Washington State.

| | |

| | A answer is a answer. A answer usually indicates that the applicant has a thorough understanding of the facts, a recognition of the issues presented and the applicable principles of law, and the ability to reason to a conclusion in a well-written paper. |

| | A answer is an answer. A answer usually indicates that the applicant has a fairly complete understanding of the facts, recognizes most of the issues and the applicable principles of law, and has the ability to reason fairly well to a conclusion in a relatively well-written paper. |

| | A answer demonstrates an . A answer usually indicates that the applicant understands the facts fairly well, recognizes most of the issues and the applicable principles of law, and has the ability to reason to a conclusion in a satisfactorily written paper. |

| | A answer demonstrates a answer. A answer usually indicates that it is, on balance, inadequate. It shows that the applicant has only a limited understanding of the facts and issues and the applicable principles of law, and a limited ability to reason to a conclusion in a below average written paper. |

| | A answer demonstrates a answer. A answer usually indicates that it is, on balance, significantly flawed. It shows that the applicant has only a rudimentary understanding of the facts and/or law, very limited ability to reason to a conclusion, and poor writing ability. |

| | A answer is answers. A answer usually indicates a failure to understand the facts and the law. A answer shows virtually no ability to identify issues, reason, or write in a cogent manner. |

| | A answer indicates that there is to the question or that it is completely unresponsive to the question. |

For other MEE jurisdictions, we have confirmed the following raw essay grading scales (see chart below). The NCBE recommends a six-point (0 to 6) raw grading scale,⁴ but jurisdictions can use another scale. If you know of a grading scale that isn’t listed, please contact us so we can include it.

| Jurisdiction | Essay Grading Scale (Raw Scale Per Essay) |
|---|---|
| Arizona | Each written answer is awarded a numerical grade from 0 (lowest) to 6 (highest). |
| Arkansas | 1 to 6 point scale (Note: Prior to June 15, 2023, a scale of 65 to 85 was used) |
| Colorado | 1 to 6 point scale |
| Hawaii | 1 to 5 point scale (with 5 being an Excellent answer) |
| Illinois | 0 to 6 point scale |
| Maryland | 1 to 6 point scale |
| Massachusetts | 0 to 7 point scale |
| Missouri | 10-point scale |
| New Jersey | 0 to 6 point scale |
| New York | 0 to 10 point scale |
| Pennsylvania | 0 to 20 point scale |
| Texas | 0 to 6 point scale |
| Vermont | 0 to 6 point scale |
| Washington State | 0 to 6 point scale |

Additional Resources on MEE Grading & Scaling

If you’re interested in more details on MEE grading and scaling, please see the following articles:

- 13 Best Practices for Grading Essays and Performance Tests by Sonja Olson, The Bar Examiner, Winter 2019-2020 (Vol. 88, No. 4).

- Essay Grading Fundamentals by Judith A. Gundersen, The Testing Column, The Bar Examiner, March 2015.

- Q&A: NCBE Testing and Research Department Staff Members Answer Your Questions by NCBE Testing and Research Department, The Testing Column, The Bar Examiner, Winter 2017-2018.

- It’s All Relative—MEE and MPT Grading, That Is by Judith A. Gundersen, The Testing Column, The Bar Examiner, June 2016.

- Procedure for Grading Essays and Performance Tests by Susan M. Case, Ph.D., The Testing Column, The Bar Examiner, November 2010.

- Scaling: It’s Not Just for Fish or Mountains by Mark A. Albanese, Ph.D., The Testing Column, The Bar Examiner, December 2014.

- What Everyone Needs to Know About Testing, Whether They Like It or Not by Susan M. Case, Ph.D., The Testing Column, The Bar Examiner, June 2012.

- Quality Control for Developing and Grading Written Bar Exam Components by Susan M. Case, Ph.D., The Testing Column, The Bar Examiner, June 2013.

- Frequently Asked Questions About Scaling Written Test Scores to the MBE by Susan M. Case, Ph.D., The Testing Column, The Bar Examiner, Nov. 2006.

- Demystifying Scaling to the MBE: How’d You Do That? by Susan M. Case, Ph.D., The Testing Column, The Bar Examiner, May 2005.

³ See 13 Best Practices for Grading Essays and Performance Tests by Sonja Olson, The Bar Examiner, Winter 2019-2020 (Vol. 88, No. 4), at Item 5.

⁴ See id., at Item 3.

Bar Exam Scoring: Everything You Need to Know About Bar Exam Scores

It’s important to know how the bar exam is scored so that you can understand what’s required to pass. The following sections will provide an overview of how the bar exam is scored, including information on essay grading, the MBE scale, and converted scores.

Understanding How the Bar Exam Is Scored

First, we’ll look at the UBE (Uniform Bar Exam). This is the bar exam used in a majority of states, and it’s what we’ll be focusing on in this article.

The UBE is broken down into three sections: the MPT (Multistate Performance Test), the MBE (Multistate Bar Exam), and the MEE (Multistate Essay Exam).

The MBE is a 200-question, multiple-choice exam covering seven subject areas: civil procedure, constitutional law, contracts, criminal law and procedure, evidence, real property, and torts.

Each state sets its own passing score, so the score required in Pennsylvania, Georgia, New York, California, Minnesota, or Nevada differs from state to state. But in general, it’s between 140 and 150.

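The MEE consists of six essay questions. Its subjects overlap with the MBE’s but also extend to areas the MBE does not test, such as business associations, family law, and trusts and estates.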

Bar exam scoring in states may also include one or two additional, state-specific essay questions.

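Each MEE essay is graded on a raw scale chosen by the jurisdiction (the NCBE recommends a six-point scale). The raw essay scores are then combined with the MPT scores and scaled, so a raw essay grade is not a pass or fail result by itself.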

The MPT is a skills-based exam that consists of two 90-minute tasks. It’s designed to test your ability to complete common legal tasks, such as writing a memo or conducting research.

The MPT is not graded pass/fail. In UBE jurisdictions it is scored by graders, combined with the MEE, and counts for 20% of the total exam score.

Now that we’ve covered the basics of UBE scoring, let’s take a more in-depth look at how each section is graded.

Essay Grading

The MEE essays are graded by graders in each jurisdiction, not by the National Conference of Bar Examiners (NCBE). The NCBE drafts the questions and supplies grading materials.

Essays are read and scored by human graders, not by a computer program.

Each essay receives a raw score on the jurisdiction’s own scale, which is often six points. There is no universal 0-30 scale or fixed passing score for a single essay.

To determine your score, the graders will look at the overall quality of your answer, as well as your ability to apply legal principles, analyze fact patterns, and communicate effectively.

The MBE Scale

The MBE is graded on a scale of 0-200, with 130 being the lowest passing score.

To determine your score, the NCBE will first convert your raw score (the number of questions you answered correctly) into a scaled score.

This is done to account for differences in difficulty between the exams.

After your raw score is converted to a scaled score, it is added to your scaled written score (MEE plus MPT) to determine your overall UBE score.

Scaled Scores

The total score that an examinee receives on the bar exam is typically a scaled score.

A scaled score is a number that has been adjusted so that it can be compared to the scores of other examinees who have taken the same test.

The purpose of scaling is to ensure that the scores are accurate and reliable and to account for any differences in difficulty between different versions of the test.

For example, suppose that the average score on the MBE in one state is 100 points and the average score in another state is 10 points.

If the two states were to use the same passing score, then it would be much easier to pass the bar exam in the first state than in the second.

Scaling ensures that this is not the case by adjusting the scores so that they are comparable.

It is important to note that scaling does not necessarily mean that the scoring process is fair.

In some cases, an examinee who receives a scaled score of 140 points might have earned a higher raw score than another examinee who received a scaled score of 150 points.

However, this does not necessarily mean that the first examinee performed better on the exam overall. It could simply be that the exam was easier in the first examinee’s state than in the second examinee’s state.

What’s important is that, thanks to scaling, the two examinees have an equal chance of passing the bar exam.

Another factor that can affect an examinee’s total score is weighting.

Weighting is the process of giving certain sections of the exam more importance than others.

For example, in some states, the MBE counts for 50% of the total score, while the MEE counts for 30%, and the MPT counts for 20%.

In other states, however, the weights may be different. For example, the MBE might count for 60%, while the MEE and MPT count for 20% each.

The weights are determined by each state’s bar examiners and can be changed at any time.

It is important to note that weighting does not affect an examinee’s score on individual sections of the exam. It only affects the overall total score.
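As a rough illustration of the weighting described above, here is a minimal Python sketch. The component scores are hypothetical, and the sketch assumes every component has already been placed on the same 200-point scale; the function name `weighted_total` is ours and not part of any official scoring tool.

```python
def weighted_total(scaled_scores, weights_pct):
    """Combine component scores using percentage weights that sum to 100."""
    if sum(weights_pct.values()) != 100:
        raise ValueError("weights must sum to 100 percent")
    return sum(scaled_scores[name] * weights_pct[name] for name in weights_pct) / 100


# Hypothetical component scores, each assumed to be on a 200-point scale.
scores = {"MBE": 140, "MEE": 150, "MPT": 130}

# The two example weightings mentioned above.
scheme_a = {"MBE": 50, "MEE": 30, "MPT": 20}
scheme_b = {"MBE": 60, "MEE": 20, "MPT": 20}

total_a = weighted_total(scores, scheme_a)  # 141.0
total_b = weighted_total(scores, scheme_b)  # 140.0
print(total_a, total_b)
```

The section scores are identical in both runs; only the overall total moves when the weights change.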

What Is a Passing UBE Score in Every State?

To pass the Uniform Bar Exam, examinees need a total score of at least 260 out of 400; the exact passing score depends on the jurisdiction. A score of 280 is generally considered to be a good score, and a score of 300 to 330 is considered to be excellent.

Bar Exam Passing Score by State

It’s worth noting that a 280 is a passing score in every UBE state, and it places you at about the 73rd percentile of bar exam scores.

The average baby bar exam score range is between 40 and 100. This means that the average score is between 70 and 80.

Scoring high on bar exam essays is extremely important to your overall score. For many students, essay writing is the most difficult and stressful part of the bar exam. But with a little practice and guidance, you can learn how to score high on bar exam essays so you can pass the first time to keep your bar exam costs down.

FAQs on Bar Exam Scoring

The bar exam is scored by a point system, with 400 being the highest possible score. What score do you need to pass the bar exam?

The highest possible score on the bar exam is 400.

A good bar exam score depends on the jurisdiction but is typically between 260 and 280.

Scoring for the bar exam works by awarding points for each correct answer. The number of points awarded varies depending on the question and the jurisdiction.

Kenneth W. Boyd

Kenneth W. Boyd is a former Certified Public Accountant (CPA) and the author of several of the popular "For Dummies" books published by John Wiley & Sons including 'CPA Exam for Dummies' and 'Cost Accounting for Dummies'.

Ken has gained a wealth of business experience through his previous employment as a CPA, Auditor, Tax Preparer and College Professor. Today, Ken continues to use those finely tuned skills to educate students as a professional writer and teacher.

The Testing Column: Scaling, Revisited

This article originally appeared in The Bar Examiner print edition, Fall 2020 (Vol. 89, No. 1), pp. 68–75.

This section begins with two reprinted Bar Examiner articles on scaling written by Susan M. Case, PhD, NCBE’s Director of Testing from 2001 to 2013. In the first article, Dr. Case answers frequently asked questions about scaling; in the second article, she explains the scaling process with an illustrative example.

The accompanying sidebars below provide additional explanations of equating and scaling and dispel a frequent myth about scaling.

Frequently Asked Questions about Scaling Written Test Scores to the MBE

By Susan M. Case, PhD

Reprinted from 75(4) The Bar Examiner (November 2006) 42–44

Scaling is a topic that often arises at NCBE seminars and other meetings and has been addressed in the Bar Examiner; yet it still seems to be a mysterious topic to many. This column addresses the most frequently asked questions about scaling in an attempt to clarify some of the issues.

What Is Scaling?

In the bar examination setting, scaling is a statistical procedure that puts essay or performance test scores on the same score scale as the Multistate Bar Examination (MBE). Despite the change in scale, the rank-ordering of individuals remains the same as it was on the original scale.

What Is the Outcome for Bar Examiners Who Do Not Scale their Written Test Scores to the MBE?

To understand the effect of not scaling written scores to the MBE but keeping them on separate scales, one must consider the equating process that adjusts MBE “raw” scores to MBE “scaled” scores. As you know, equating ensures that MBE scores retain the same meaning over time, regardless of the difficulty of the test form that a particular examinee took and regardless of the relative proficiency of the pool of candidates in which a particular examinee tested. The equating process requires that a mini-test comprised of items that have appeared on earlier versions of the test be embedded in the larger exam. The mini-test mirrors the full exam in terms of content and statistical properties of the items. The repeated items provide a direct link between the current form of the exam and previous forms. They make it possible to compare the performance of this administration’s candidates with the performance of a previous group on exactly the same set of items. Even though different forms of the MBE are designed to be as similar as possible, slight variations in difficulty are unavoidable. Similarly, candidate groups differ in proficiency from one administration to the next, and from one year to the next. Equating methods are used to adjust MBE scores to account for these differences, so that a scaled score of 135 on the MBE in July 2004 represents the same level of proficiency as a scaled score of 135 on the MBE in February 2007 or on any other test.

Equating is not possible for written tests because written questions are not reused. As a consequence, essay scores will fluctuate in meaning from administration to administration because it is impossible for graders to account for differences in the difficulty of the questions or for differences in the average proficiency of candidates over time. This phenomenon is demonstrated by the fact that average essay scores in February tend to be the same as average essay scores in July, even though we know that February candidates are consistently less proficient (as a group) than July candidates. It has also been shown that an essay of average proficiency will be graded lower if it appears in a pool of excellent essays than if it appears in a pool of poor essays. Context matters.

So what is the outcome of such fluctuation in the meaning of written test scores? An individual of average proficiency may have the misfortune of sitting for the bar with a particularly bright candidate pool. This average individual’s essay scores will be lower than they would have been in a different sitting. The same individual’s MBE score will reflect his genuine proficiency level (despite sitting with a group of particularly bright candidates), but without scaling, his essay scores may drag him down. An unscaled essay score may be affected by factors such as item difficulty or the average proficiency of the candidate pool that do not reflect the individual candidate’s performance.

What Is the Outcome for Bar Examiners Who Scale Their Written Test Scores to the MBE?

The preferred approach is to scale written test scores to the MBE—this process transforms each raw written score into a scaled written score. A scaled written test score reflects a type of secondary equating that adjusts the “raw” written test score after taking into account the average proficiency of the candidate pool identified by the equating of the MBE. Once the average proficiency of a group of candidates is determined, scaling will adjust not only for an upswing or downswing in proficiency from past years, but also for any change in the difficulty of written test questions or any change in the harshness of the graders from past years.

In our example of the individual of average proficiency who sits for the bar with a particularly bright candidate pool, this individual’s raw written scores will remain lower than they would have been in previous sittings with less able peers. But the equating of the MBE will take into account that this is a particularly bright candidate pool and that the individual in question is in fact of average ability. The individual’s written test scores will then be scaled to account for the difference in the candidate pool, and his written test scores will be brought into alignment with his demonstrated level of ability. Scaled essay scores lead to total bar examination scores that eliminate contextual issues and that accurately reflect individual proficiency.

Doesn’t This Process Disadvantage People Who Do Poorly on the MBE?

No. It is important to note that an individual might have one of the best MBE scores and one of the worst essay scores, or vice versa. Scaling written scores to the MBE does not change the rank-ordering of examinees on either test. A person who had the 83rd best MBE score and the 23rd best essay score will still have the 83rd best MBE score and the 23rd best essay score after scaling.

One analogy that might help relates to temperature. Suppose the garages on your street have thermometers that measure their temperatures in Celsius and the houses on your street have thermometers that measure their temperatures in Fahrenheit. The house temperatures range from 66° F to 74° F and the garages range from 19° C to 23° C. Suppose that you have the coldest garage and the warmest house. If the garage thermometers are all changed to the Fahrenheit scale, the temperature readings for all the garages will change, but your garage will still measure the coldest and your house will still measure the warmest. In Celsius terms, your garage temperature was 19° C; in Fahrenheit terms your garage temperature is 66° F. Either way, it is shown to be the coldest garage. (Note that this example demonstrates only the move to a different scale and the maintenance of identical rank order; it does not demonstrate the adjustments that take place during the equating of exam scores.)

In the example of the individual of average proficiency who sits with an unusually bright candidate pool, scaling the average individual’s written test scores to the MBE would not change his rank within the candidate group with which he took the examination. He would still be ranked below where he would have ranked in a less-capable candidate pool. However, his unusually low rank would no longer affect his total bar examination score. The total scores for the entire pool of candidates would reflect what was in fact the case: that it was a particularly bright pool of individuals (i.e., the total scaled scores would be higher than they were for previous administrations).

What Does the Process of Scaling Written Scores to the MBE Entail?

Scaling the written tests can be done either on an individual essay score (or MPT item score), or on the total written test score. NCBE will scale the scores for individual jurisdictions, if they wish, or will provide the jurisdictions with software to do the scaling themselves. Essentially the process results in generating new scaled essay scores that look like MBE scores. The distribution of written scores will be the same as the distribution of MBE scores—with a very similar average, a very similar minimum and maximum, and a very similar distribution of scores.

The process is described in the Testing Column that appears in the May 2005 Bar Examiner (Volume 74, No. 2). Conceptually, the result is similar to listing MBE scores in order from best to worst, and then listing written scores in order from best to worst to generate a rank-ordering of MBE scores and essay scores. The worst essay score assumes the value of the worst MBE score; the second worst is set to the second worst, etc.
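The conceptual picture above (worst essay score takes the worst MBE value, and so on) can be sketched in a few lines of Python. This is only an illustration of the rank-matching idea with made-up scores; it is not the linear mean-and-SD procedure that the May 2005 column actually describes, and the function name `rank_match` is hypothetical.

```python
def rank_match(essay_scores, mbe_scores):
    """Give each essay score the MBE value that holds the same rank.

    Illustrative only: tied essay scores would receive different MBE
    values here, which a real scaling procedure would not do.
    """
    if len(essay_scores) != len(mbe_scores):
        raise ValueError("both lists must cover the same examinees")
    # Examinee positions ordered from worst essay score to best.
    order = sorted(range(len(essay_scores)), key=lambda i: essay_scores[i])
    mbe_sorted = sorted(mbe_scores)  # worst MBE score first
    matched = [0] * len(essay_scores)
    for rank, examinee in enumerate(order):
        matched[examinee] = mbe_sorted[rank]
    return matched


# Hypothetical data for five examinees (no ties).
essay = [29, 45, 38, 52, 40]
mbe = [150, 110, 170, 140, 127]

matched = rank_match(essay, mbe)
print(matched)  # [110, 150, 127, 170, 140]
```

The examinee with the lowest essay score (29) ends up with the lowest MBE value in the group (110), regardless of that examinee’s own MBE score.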

Demystifying Scaling to the MBE: How’d You Do That?

By Susan M. Case, PhD

Reprinted from 74(2) The Bar Examiner (May 2005) 45–46

There seems to be a mystery about how to scale the essay scores to the Multistate Bar Examination (MBE), and the Testing Column of the statistics issue seems to be an appropriate place to run through the process.

Here are the steps in a nutshell:

Step 1. Determine the mean and standard deviation (SD) of MBE scores in your jurisdiction.

Step 2. Determine the mean and SD of the essay scores in the jurisdiction (you can do this for the total essay score, the average essay score, or each essay individually).

Step 3. Rescale the essay scores so that they have a mean and SD that is the same as the MBE for the same group of examinees.

Now for an example. Let’s assume you tested 15 examinees. In Table 1, the data for each examinee are shown. The first column shows the examinee ID number. Column 2 shows the total raw essay score that each examinee received. These data were obtained from a mock essay exam where each of the 10 essays is graded on a one to six scale, but the calculations apply equally well for any number of essays and any type of grading scale. The bottom of the column shows the average score (40) and standard deviation of the scores (5.2). These are typically shown in your data output and would not usually be calculated by hand.

Table 1. Sample Essay Data Shown for Each Examinee

| Examinee ID (Column 1) | Actual Total Raw Essay Score (Column 2) | Total Essay Converted to SD Units (Column 3) | Total Essay Score Scaled to the MBE (Column 4) | Actual MBE Score (Column 5) |
|---|---|---|---|---|
| 1 | 29 | (29-40) / (5.2) = -2.1 | (-2.1x15) + 140 = 108.1 | 110 |
| 2 | 34 | (34-40) / (5.2) = -1.2 | (-1.2x15) + 140 = 122.6 | 120 |
| 3 | 36 | (36-40) / (5.2) = -.8 | (-.8x15) + 140 = 128.4 | 140 |
| 4 | 38 | (38-40) / (5.2) = -.4 | (-.4x15) + 140 = 134.2 | 140 |
| 5 | 38 | (38-40) / (5.2) = -.4 | (-.4x15) + 140 = 134.2 | 160 |
| 6 | 39 | (39-40) / (5.2) = -.2 | (-.2x15) + 140 = 137.1 | 127 |
| 7 | 39 | (39-40) / (5.2) = -.2 | (-.2x15) + 140 = 137.1 | 149 |
| 8 | 40 | (40-40) / (5.2) = 0 | (0x15) + 140 = 140.0 | 150 |
| 9 | 41 | (41-40) / (5.2) = .2 | (.2x15) + 140 = 142.9 | 140 |
| 10 | 42 | (42-40) / (5.2) = .4 | (.4x15) + 140 = 145.8 | 142 |
| 11 | 42 | (42-40) / (5.2) = .4 | (.4x15) + 140 = 145.8 | 138 |
| 12 | 42 | (42-40) / (5.2) = .4 | (.4x15) + 140 = 145.8 | 130 |
| 13 | 43 | (43-40) / (5.2) = .6 | (.6x15) + 140 = 148.7 | 149 |
| 14 | 45 | (45-40) / (5.2) = 1.0 | (1.0x15) + 140 = 154.5 | 136 |
| 15 | 52 | (52-40) / (5.2) = 2.3 | (2.3x15) + 140 = 174.8 | 170 |
| Mean | 40 | 0 | 140 | 140 |
| SD | 5.2 | 1 | 15 | 15 |

Your calculations begin with column 3. For each examinee, subtract the group mean essay score (40 in this example) from the total essay score that the examinee received; then divide this value by the group SD (5.2 in this example). The result is the total essay score in standard deviation units. For example, the first examinee has a total raw essay score of 29; the mean essay score is 40 and the SD is 5.2; thus, the examinee’s score in SD units is -2.1 or 2.1 standard deviations below the mean (see the Testing Column in May 2003 for a discussion of scores in SD units).

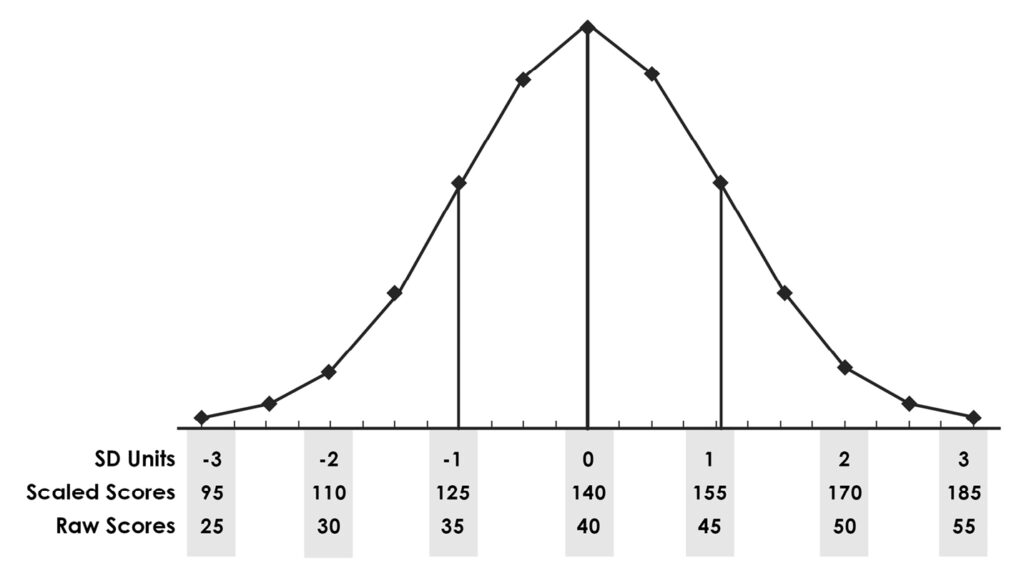

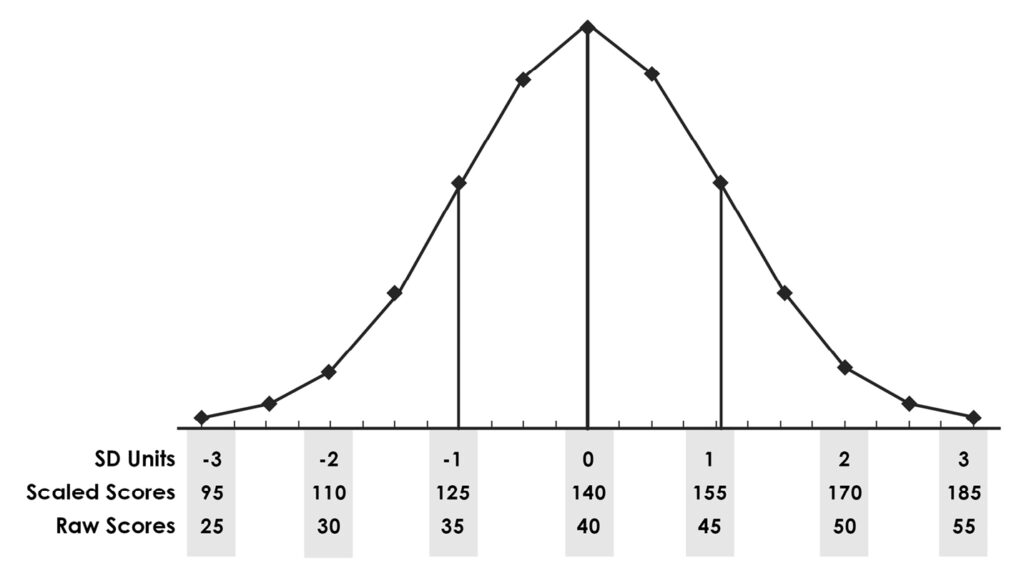

Regardless of the score range, scores in standard deviation units typically range from -3 to +3 (zero is the average score). An examinee with a score of -2.1 SDs has a very low score; an examinee with a score of zero SDs has a score at the mean of the group; and an examinee with a score of 2.3 SDs has a high score. Overall, the scores in SD units have a mean of zero and a standard deviation of one; this will always be true if the scores have a normal (bell-curve) distribution (see Figure 1).

Figure 1. Sample Essay Data Shown in SD Units, as Scaled Scores, and as Raw Scores

Column 4 shows the calculations to turn this standard deviation score into a score that is scaled to the MBE. Multiply the examinee’s score in SD units by 15 (the SD of the MBE for this group); add this result to 140 (the mean of the MBE for this group). The mean and SD for the essay scores scaled to the MBE will have the same mean and SD as the MBE scores. Column 5 shows the actual MBE scores achieved by each examinee.
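For readers who prefer to see the column 3 and column 4 calculations as code, the following Python sketch reproduces them with the raw essay scores from Table 1. It assumes the SD is the sample standard deviation (dividing by n - 1), which is our inference from the fact that it rounds to the 5.2 shown in the table; the function name `scale_to_mbe` is hypothetical.

```python
from statistics import mean, stdev


def scale_to_mbe(essay_scores, mbe_mean, mbe_sd):
    """Rescale raw essay scores to a target MBE mean and SD."""
    essay_mean = mean(essay_scores)
    # Sample SD (n - 1); about 5.17 for this data, shown as 5.2 in Table 1.
    essay_sd = stdev(essay_scores)
    scaled = []
    for score in essay_scores:
        sd_units = (score - essay_mean) / essay_sd      # column 3
        scaled.append(sd_units * mbe_sd + mbe_mean)     # column 4
    return scaled


# Column 2 of Table 1: total raw essay scores for examinees 1 to 15.
raw_essay = [29, 34, 36, 38, 38, 39, 39, 40, 41, 42, 42, 42, 43, 45, 52]

# MBE mean (140) and SD (15) as given in the Mean and SD rows of Table 1.
scaled = scale_to_mbe(raw_essay, mbe_mean=140, mbe_sd=15)

for examinee_id, (raw, sc) in enumerate(zip(raw_essay, scaled), start=1):
    print(f"{examinee_id:2d}  raw={raw}  scaled={sc:.1f}")
# Examinee 1 prints scaled=108.1 and examinee 15 prints scaled=174.8,
# matching column 4 of Table 1.
```

Because the code keeps the unrounded SD, its results agree with the table’s final values (for example 108.1) rather than with what the rounded intermediate figures alone would give.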

Note that the examinees who performed relatively poorly on the essay have a scaled essay score that is also relatively low, regardless of their actual performance on the MBE. Examinee 1 performed poorly on both the essay and the MBE. Examinees 3, 4, and 5 performed poorly on the essay but much better on the MBE; note that this comparison can be made easily by comparing the results in columns 4 and 5. Examinee 6 did much better on the essay than on the MBE. Converting the essay scores to the MBE scale did not change the rank-ordering of examinees on the essay scale; it simply made their scores easier to compare. The correlation between the raw essay score and MBE is exactly the same as between the scaled essay score and the MBE.
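The two claims in the paragraph above, that rank order is preserved and that the correlation with the MBE is unchanged, can be checked directly on the Table 1 data. The sketch below is a hedged illustration: the Pearson correlation is computed by hand with a helper we have named `pearson`, and the sample SD is assumed as the essay SD.

```python
from math import sqrt
from statistics import mean, stdev


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Columns 2 and 5 of Table 1.
raw_essay = [29, 34, 36, 38, 38, 39, 39, 40, 41, 42, 42, 42, 43, 45, 52]
mbe = [110, 120, 140, 140, 160, 127, 149, 150, 140, 142, 138, 130, 149, 136, 170]

# Linear rescaling to the MBE mean (140) and SD (15) from Table 1.
m, s = mean(raw_essay), stdev(raw_essay)
scaled_essay = [(x - m) / s * 15 + 140 for x in raw_essay]

r_raw = pearson(raw_essay, mbe)
r_scaled = pearson(scaled_essay, mbe)

# Examinee positions ordered from lowest to highest essay score.
order_raw = sorted(range(len(raw_essay)), key=lambda i: raw_essay[i])
order_scaled = sorted(range(len(scaled_essay)), key=lambda i: scaled_essay[i])

print(abs(r_raw - r_scaled) < 1e-9)  # True
print(order_raw == order_scaled)     # True
```

Both checks pass because scaling is a linear transformation with a positive slope, which changes the units of the essay scores but not their ordering or their relationship to the MBE.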

Scaling the essays to the MBE is an essential step in ensuring that scores have a consistent meaning over time. When essay scores are not scaled to the MBE, they tend to remain about the same: for example, it is common for the average raw July essay score to be similar to the average February score even if the July examinees are known to be more knowledgeable on average than the February examinees. Using raw essay scores rather than scaled essay scores tends to provide an unintended advantage to some examinees and an unintended disadvantage to others.

The following sidebar is an excerpt from NCBE Testing and Research Department, “The Testing Column: Q&A: NCBE Testing and Research Department Staff Members Answer Your Questions,” 86(4) The Bar Examiner (Winter 2017–2018) 34–39.

What is equating? Why do it? How does it work?

Equating is a statistical procedure used for most large-scale standardized tests to adjust examinee scores to compensate for differences in difficulty among test forms so that scores on the forms have the same meaning and are directly comparable. (The term test form refers to a particular set of test items, or questions, administered at a given time. The February 2017 Multistate Bar Examination [MBE] test form, for example, contains a unique set of items, and the July 2017 test form contains a different set of items.) With equating, a reported scaled score has a consistent interpretation across test forms.

Equating is necessary because using exactly the same set of items on each test form could compromise the meaning of scores and lead to unfairness for some examinees. For example, there would be no guarantee that items were not shared among examinees over time, which would degrade the meaning of scores. Not only would the scores obtained by some examinees be corrupted by the examinees’ advance knowledge of test items, but, if undetected, these inflated scores would advantage the clued-in examinees over other examinees. To avoid this problem, the collection of items on test forms for most large-scale standardized tests changes with every test administration.

Despite test developers’ best efforts to build new forms that conform to the same content and statistical specifications over time, it is nearly impossible to ensure that statistical characteristics like difficulty will be identical across the forms. Equating adjusts scores for such differences in difficulty among test forms so that no examinee is unfairly advantaged by being assigned an easier form or is unfairly disadvantaged by being assigned a more difficult form.

While there are many methods available to conduct equating, a commonly used approach for equating large-scale standardized tests, and the approach that is used for the MBE, is to embed a subset of previously administered items that serve as a “mini-test,” with the items chosen to represent as closely as possible the content and statistical characteristics of the overall examination. The items that compose this mini-test are referred to as equators. The statistical characteristics of the equators from previous administrations are used to adjust scores for differences in difficulty between the current test form and previous forms after accounting for differences between current and previous examinee performance on the equator items.¹ Conceptually, if current examinees perform better on the equators compared to previous examinees, we know that current examinee proficiency is higher than that of previous examinees and that current examinees’ MBE scaled scores should be higher (as a group) than those of previous examinees (and vice versa if performance on the equators is worse for current examinees). The equators are used as a link to previous MBE administrations and, ultimately, to MBE scaled scores.

¹ For more detailed descriptions and examples of equating, see Mark A. Albanese, PhD, “The Testing Column: Equating the MBE,” 84(3) The Bar Examiner (September 2015) 29–36; Deborah J. Harris, “Equating the Multistate Bar Examination,” 72(3) The Bar Examiner (August 2003) 12–18; Michael T. Kane, PhD & Andrew Mroch, “Equating the MBE,” 74(3) The Bar Examiner (August 2005) 22–27; Michael J. Kolen & Robert L. Brennan, Test Equating, Scaling, and Linking: Methods and Practices (Springer 3rd ed. 2014); Lee Schroeder, PhD, “Scoring Examinations: Equating and Scaling,” 69(1) The Bar Examiner (February 2000) 6–9.

Can we equate essay or performance tests? (Or, what is essay scaling?)

As with MBE items, the written components of the bar exam (essay questions and performance test items) change with every administration. The difficulty of the questions/items, the proficiency of the group of examinees taking the exam, and the graders (and the stringency with which they grade) may also change. All three of these variables can affect the grades assigned by graders to examinees’ responses to these written components of the exam and can have the potential to cause variation in the level of performance the grades represent across administrations. Unlike the MBE, the answers to the written questions/items of the bar examination cannot be equated, because previously used questions/items can’t be reused or embedded in a current exam—there are too few written questions/items on the exam and they are too memorable. If essay questions or performance test items were reused, any examinee who had seen them on a previous administration would be very likely to have an unfair advantage over examinees who had not seen them previously.

Because directly equating the written components is not possible, most jurisdictions use an indirect process referred to as scaling the written component to the MBE. This process has graders assign grades to each question/item using the grading scale employed in their particular jurisdiction (e.g., 1 to 6). The individual grades on each written question/item are typically combined into a raw written score for each examinee. These raw written scores are then statistically adjusted so that collectively they have the same mean and standard deviation as do the scaled scores on the MBE in the jurisdiction. (Standard deviation is the measure of the spread of scores, that is, the average deviation of scores from the mean. The term scaled score refers to the score as it has been applied to the scale used for the test; in the case of the MBE, that is the 200-point MBE scale.)

Conceptually, this process is similar to listing MBE scaled scores in order from best to worst and then listing raw written scores in order from best to worst to generate a rank-ordering of MBE scores and written scores. The best written score assumes the value of the best MBE score; the second-best written score is set to the second-best MBE score, and so on. Functionally, the process yields a distribution of scaled written scores that is the same as the jurisdiction’s distribution of the equated MBE scaled scores. Another way to think about the process is that the raw written scores are used to measure how far each examinee’s written performance is from the group’s average written performance, and then the information from the distribution of the group’s MBE scores is used to determine what “scaled” values should be associated with those distances from the average.

This conversion process leaves intact the important rank-ordering decisions made by graders, and it adjusts them so that they align with the MBE scaled score distribution. Because the MBE scaled scores have been equated, converting the written scores to the MBE scale takes advantage of the MBE equating process to indirectly equate the written scores. The justification for scaling the written scores to the MBE has been anchored on the facts that the content and concepts assessed on the MBE and written components are aligned and performance on the MBE and the written components is strongly correlated. The added benefit of having scores of both the MBE and the written component on the same score scale is that it simplifies combining the two when calculating the total bar examination score. In the end, the result of scaling (like equating) is that the scores represent the same level of performance regardless of the administration in which they were earned.
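The adjustment described above can be sketched in a few lines of Python. This is only an illustration of a linear rescaling that matches the mean and standard deviation of the MBE scaled scores; it is not NCBE's or any jurisdiction's actual procedure. The function name `scale_written_to_mbe`, the sample scores, and the use of the population standard deviation are all assumptions made for the example.

```python
from statistics import mean, pstdev


def scale_written_to_mbe(raw_written, mbe_scaled):
    """Linearly rescale raw written scores so that, as a group, they have
    the same mean and standard deviation as the MBE scaled scores.

    Hypothetical helper for illustration only.
    """
    raw_mean, raw_sd = mean(raw_written), pstdev(raw_written)
    mbe_mean, mbe_sd = mean(mbe_scaled), pstdev(mbe_scaled)
    if raw_sd == 0:
        raise ValueError("raw written scores have no spread; cannot scale")
    # Distance from the group's average written performance, measured in
    # standard deviations, is re-expressed on the MBE scale.
    return [mbe_mean + mbe_sd * (r - raw_mean) / raw_sd for r in raw_written]


# Made-up scores for five examinees in one jurisdiction.
raw_written = [24.0, 30.0, 36.0, 42.0, 48.0]
mbe_scaled = [120.0, 130.0, 140.0, 150.0, 160.0]

scaled_written = scale_written_to_mbe(raw_written, mbe_scaled)
print([round(s, 1) for s in scaled_written])  # [120.0, 130.0, 140.0, 150.0, 160.0]
```

Because the transformation is linear with a positive slope, the rank-ordering assigned by the graders is unchanged; only the scale on which the scores are expressed changes.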

Aren’t scaling and equating the same as grading on a curve?

By NCBE Testing and Research Department staff members Mark A. Albanese, PhD; Joanne Kane, PhD; and Douglas R. Ripkey, MS

No. Grading on a curve will create a specified percentage of people who pass, no matter their proficiency. Relative grading, scaling, and equating, however, eliminate the concept of a fixed percentage; every single taker could pass (or fail) within an administration, based upon their own merits. We will do our best to illustrate the differences here, but we recommend that readers familiarize themselves with the component parts and the scoring system as a whole in order to best understand the bigger picture. A poor understanding of any of the three integral parts of the system will result in a poor understanding of scoring and make it challenging to adequately compare and contrast the scoring system NCBE uses with alternative grading methods.

Relative Grading of the Written Component Versus Grading on a Curve

In comparison to grading on a curve, the most similar piece of NCBE’s scoring approach is the initial relative grading NCBE recommends for the written component of the exam (the Multistate Essay Examination and the Multistate Performance Test). Relative grading is similar to grading on a curve in that graders are looking to spread out the scores across a grading scale (e.g., 1 to 6—or whatever grading scale is used in their particular jurisdiction). Whereas grading on a curve usually involves quotas on what percentage of examinees receive each grade, NCBE recommends only that graders spread out scores across the grading scale as much as possible and appropriate. But the additional and truly key difference is that grading on a curve is usually a process unto itself, whereas relative grading is just one step in a larger scoring process that involves equating the MBE and then scaling the written component score to the MBE.

Equating the MBE Versus Grading on a Curve

The only thing that equating and grading on a curve have in common is that they both involve taking raw scores on a test and translating them into a different scale (such as letter grades) to obtain final information about a test taker’s performance. The rationale for each approach, the mechanisms by which the scores are translated or transformed, and the interpretations of the scores from these two paths are each very different.

With grading on a curve, the extra processing is focused on determining relative performance. That is, the final performance is interpreted relative to other people who have taken the same evaluation. It’s akin to the adage that if you are being chased by a bear, you don’t have to be the fastest person, just faster than the person next to you. When a curve is used to grade, there typically is some sort of quota set. For example, a jurisdiction (fictional) might specify that only examinees with MBE raw scores in the top 40% will be admitted. When grading on a curve, an examinee’s actual score is less important than how many examinees had lower scores, since a specified percentage of examinees will pass regardless of the knowledge level of the group and the difficulty of the materials.

Note that with a system of grading on a curve, it is not possible to track changes in the quality of examinee performances as a group over time. If a strict quota system is followed, the average grade within the class will always be the same. If a student happens to be part of a weaker cohort, they will receive a higher grade than they would have if they had belonged to a stronger cohort. On the other hand, scaling and equating would result in an examinee receiving a score that was not dependent on who else was in their cohort.

With scaling and equating, extra processing is implemented to determine performance in a more absolute sense. The final performance is interpreted as an established level of demonstrated proficiency (e.g., examinees with MBE scaled scores at or above 135 will be admitted). When raw scores are scaled and equated to final values, the difficulty of the test materials is a key component in the process. The examinee’s actual level of knowledge is more important in determining status than how their knowledge level compares to other people. The knowledge levels of examinees determine the percentage of examinees who pass. The result is that everyone could pass (or fail) if their knowledge level was sufficiently high (or low).
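The contrast between a quota and a fixed standard can be made concrete with a small Python sketch. It reuses the two figures from the examples above (a top-40% quota and a cut score of 135); the two cohorts and the function names are invented for illustration, and ties are broken arbitrarily.

```python
def pass_by_curve(scores, top_percent):
    """Quota rule: pass the top `top_percent` of the cohort, whatever
    their actual scores are. Returns one True/False per examinee."""
    n_pass = len(scores) * top_percent // 100
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    passing = set(ranked[:n_pass])
    return [i in passing for i in range(len(scores))]


def pass_by_cut_score(scores, cut_score):
    """Fixed standard: pass everyone at or above the cut score."""
    return [s >= cut_score for s in scores]


# Two made-up cohorts of equated scaled scores.
strong_cohort = [150, 145, 142, 138, 136]
weak_cohort = [134, 130, 128, 125, 120]

for name, cohort in [("strong", strong_cohort), ("weak", weak_cohort)]:
    curve_passes = sum(pass_by_curve(cohort, 40))
    cut_passes = sum(pass_by_cut_score(cohort, 135))
    print(f"{name}: curve passes {curve_passes}, cut score passes {cut_passes}")
# strong: curve passes 2, cut score passes 5
# weak: curve passes 2, cut score passes 0
```

Under the quota, two examinees pass in each cohort regardless of proficiency. Under the fixed cut score, the number who pass depends entirely on the knowledge level of the group, so everyone can pass or everyone can fail.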

Further Reading

- Mark A. Albanese, PhD, “The Testing Column: Scaling: It’s Not Just for Fish or Mountains,” 83(4) The Bar Examiner (December 2014) 50–56.

- Mark A. Albanese, PhD, “The Testing Column: Equating the MBE,” 84(3) The Bar Examiner (September 2015) 29–36.

- Mark A. Albanese, PhD, “The Testing Column: Essay and MPT Grading: Does Spread Really Matter?,” 85(4) The Bar Examiner (December 2016) 29–35.

- Judith A. Gundersen, “It’s All Relative—MEE and MPT Grading, That Is,” 85(2) The Bar Examiner (June 2016) 37–45.

- Lee Schroeder, PhD, “Scoring Examinations: Equating and Scaling,” 69(1) The Bar Examiner (February 2000) 6–9.

How Is The Uniform Bar Exam Scored?

How is the Uniform Bar Exam scored? In this post, we break down how to combine your MBE, MEE, and MPT scores to see what you need to pass!

What is the UBE?

The Uniform Bar Exam (UBE) is a bar examination written by the National Conference of Bar Examiners (NCBE). Thirty-four states administer the exam. It is a 2-day test given twice each year, in February and July, on the last Tuesday and Wednesday of each of those months.

Day One of the exam is the written portion, consisting of 6 essays (the MEE) and 2 performance tests (MPTs). Day Two consists of 200 multiple-choice questions (the MBE).

The written portion and the multiple-choice portion are each worth 50% of the total score on the UBE. The entire test is scored on a 400-point scale. Each jurisdiction sets its own passing score; minimum passing scores range from 260 to 280.

How is the Written Portion Scored?

Although the UBE is administered in 34 states, each state has the discretion to grade the test however it sees fit. That being said, each jurisdiction that administers the UBE allocates 40% of the written score to the MPT and 60% of the written score to the essays. In other words, the MPT is worth 20% of the total score and the essays are worth 30% of the total score.
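As a quick check on those percentages, here is a short Python sketch that multiplies the shares out. The constant names are made up for the example, and the "points" column simply applies each share to the 400-point scale; it is not a statement about how any jurisdiction reports component scores.

```python
from fractions import Fraction

TOTAL_POINTS = 400                     # UBE total scale
WRITTEN_SHARE = Fraction(1, 2)         # written portion is 50% of the total
MPT_SHARE_OF_WRITTEN = Fraction(2, 5)  # MPT is 40% of the written score
MEE_SHARE_OF_WRITTEN = Fraction(3, 5)  # MEE is 60% of the written score

mpt_share = WRITTEN_SHARE * MPT_SHARE_OF_WRITTEN  # share of the total score
mee_share = WRITTEN_SHARE * MEE_SHARE_OF_WRITTEN
mbe_share = 1 - WRITTEN_SHARE

for name, share in [("MPT", mpt_share), ("MEE", mee_share), ("MBE", mbe_share)]:
    points = int(share * TOTAL_POINTS)
    print(f"{name}: {float(share):.0%} of total ({points} of {TOTAL_POINTS} points)")
# MPT: 20% of total (80 of 400 points)
# MEE: 30% of total (120 of 400 points)
# MBE: 50% of total (200 of 400 points)
```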

Each state hires its own graders to grade the written portion of the bar exam. It is important to remember that these graders are most likely practicing lawyers, not law professors. Each grader uses a grading rubric to score your answer. They are only going to award points for things the examinee mentions that appear on the grading rubric. This is unlike a law professor who might award additional points for a particularly creative argument or a unique point that others did not consider.

It is also important to remember that graders are paid by the essay. That means the faster they work, the more money they make! Graders will not spend a significant amount of time reviewing an answer and trying to figure out ways to assign additional points. After the graders get comfortable with the content of the essays they are grading, we suspect that they simply skim the essays to see if the examinee mentioned keywords and phrases.

Some states have published information about the grading standards they use to grade the MPT and MEE portions. For example, some states use a scale from 0-6 to grade the MPTs and MEEs. Other states use a scale of 0-10. Other states, such as New York, use a different scoring scale altogether. Many states then use an undisclosed formula to convert the raw scores to scaled scores.

We strongly recommend that you check online to see whether your state has released information about its scoring methodology.

How is the Multiple Choice Portion Scored?

The multiple-choice portion of the bar exam, otherwise known as the MBE or Multistate Bar Examination, consists of 200 questions. The NCBE only scores 175 of the 200 questions. The remaining 25 are unscored “test” questions that are being evaluated for use in future administrations.

Each correct answer on the MBE is worth one point. The NCBE then takes this raw score and scales it according to an undisclosed formula. According to the NCBE, “This statistical process adjusts raw scores on the current examination to account for differences in difficulty as compared with past examinations.”

As explained above, that scaled MBE score is worth 50% of the total score on the bar exam. To figure out the scaled MBE score needed to pass the UBE, divide the total passing score in that jurisdiction by two. For instance, if your jurisdiction requires a 266 to pass, then a 133 is a “passing” MBE score. Note, however, that you do not necessarily need a minimum of 133 on the MBE to pass the bar exam. Your multiple-choice score and written score must add up to at least 266, that is, average at least 133. So, a higher score on the written portion lowers the score required on the MBE portion. For instance, if you achieve a score of 150 on the written portion, you would only need a 116 on the multiple-choice portion.
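The arithmetic in that example can be written as a tiny Python sketch. The function names are invented for illustration, and the sketch assumes the simple rule described above: the written scaled score and the MBE scaled score are added and compared with the jurisdiction's passing score.

```python
def required_mbe_score(passing_total, written_scaled):
    """MBE scaled score needed so that written + MBE reaches the passing total."""
    return passing_total - written_scaled


def passes(passing_total, written_scaled, mbe_scaled):
    """True if the combined score meets the jurisdiction's passing total."""
    return written_scaled + mbe_scaled >= passing_total


print(required_mbe_score(266, 150))  # 116
print(passes(266, 150, 116))         # True
print(passes(266, 133, 132))         # False
```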

Bar Exam Toolbox Podcast Episode 268: How to Avoid Common Bar Essay Writing Mistakes (w/Kelsey Lee)

Welcome back to the Bar Exam Toolbox podcast! Today, we're excited to have Kelsey Lee with us -- one of our expert tutors from the Bar Exam Toolbox team. Join us as we discuss the most common pitfalls in bar essay writing, and share our top tips for mastering this portion of the exam.

In this episode, we discuss:

>Kelsey's background and work in the legal space

>Five common mistakes that students make when studying for or executing the writing portion of the bar exam

>Five tips for mastering bar exam essay writing

>Private Bar Exam Tutoring (https://barexamtoolbox.com/private-bar-exam-tutoring/)

>Podcast Episode 10: Top 5 Bar Exam Essay Writing Tips (w/Ariel Salzer) (https://barexamtoolbox.com/podcast-episode-10-top-5-bar-exam-essay-writing-tips-w-ariel-salzer/)

>Podcast Episode 28: Balancing Law and Analysis on a Bar Exam Essay (https://barexamtoolbox.com/podcast-episode-28-balancing-law-and-analysis-on-a-bar-exam-essay/)

>Podcast Episode 69: 5 Things We Learned From Writing Bar Exam Sample Answers (https://barexamtoolbox.com/podcast-episode-69-5-things-we-learned-from-writing-bar-exam-sample-answers/)

>Podcast Episode 265: Quick Tips – What If You Run Out of Time While Writing a Bar Essay Answer? (https://barexamtoolbox.com/podcast-episode-265-quick-tips-what-if-you-run-out-of-time-while-writing-a-bar-essay-answer/)

>How To Be a Bar Exam Essay Writing Machine (https://barexamtoolbox.com/how-to-be-a-bar-exam-essay-writing-machine/)

>Legal Writing Tip: Imagine You're Talking to Your Grandma (https://lawschooltoolbox.com/legal-writing-tip-imagine-youre-talking-to-your-grandma/)

>What Are Bar Exam Graders Really Looking For? (https://barexamtoolbox.com/what-are-bar-exam-graders-really-looking-for/)

Download the Transcript (https://barexamtoolbox.com/episode-268-how-to-avoid-common-bar-essay-writing-mistakes-w-kelsey-lee/)

If you enjoy the podcast, we'd love a nice review and/or rating on Apple Podcasts (https://itunes.apple.com/us/podcast/bar-exam-toolbox-podcast-pass-bar-exam-less-stress/id1370651486) or your favorite listening app. And feel free to reach out to us directly. You can always reach us via the contact form on the Bar Exam Toolbox website (https://barexamtoolbox.com/contact-us/). Finally, if you don't want to miss anything, you can sign up for podcast updates (https://barexamtoolbox.com/get-bar-exam-toolbox-podcast-updates/)!

Thanks for listening!

Learn best practices for grading bar exam essays to ensure that they serve as reliable and valid indicators of competence to practice law.

In a Uniform Bar Exam score report, you may also see six scores for your Multistate Essay Exam (MEE) answers, and two scores for your Multistate Performance Test (MPT) answers. Not all states release this information, but most do. The vast majority of states grade on a 1–6 scale.

The New Jersey Bar Examination: Essay questions are graded on a seven-point (0-1-2-3-4-5-6) scale in ascending order of quality. A team consisting of a Bar Examiner and Readers grades each essay question.

Because of the high-stakes nature of the bar exam, we must account for the differences in written exams across administrations, jurisdictions, and graders, and we do this by using the equated MBE score distribution as a highly reliable anchor.

In this post, we cover how the Multistate Essay Exam (MEE) is graded and scored. We tell you the grading standards and what you should aim for when you write an MEE answer.

MEE Grading & Scoring: What You Need to Know

A few notes about MEE graders. Keep in mind who your bar exam grader is.

Each essay is graded on a numbered scale based on the quality of the answer. The grading scale varies per jurisdiction (e.g. 0-6, 1-10). All written scores are combined, and then scaled using a complex formula.

The essays are graded on a scale of 0-30, with 6 being the lowest passing score. To determine your score, the graders will look at the overall quality of your answer, as well as your ability to apply legal principles, analyze fact patterns, and communicate effectively.

In the bar examination setting, scaling is a statistical procedure that puts essay or performance test scores on the same score scale as the Multistate Bar Examination (MBE). Despite the change in scale, the rank-ordering of individuals remains the same as it was on the original scale.
