
- Review Article

- Published: 27 March 2023

The role of facial movements in emotion recognition

- Eva G. Krumhuber (ORCID: orcid.org/0000-0003-1894-2517)

- Lina I. Skora (ORCID: orcid.org/0000-0002-1323-6595)

- Harold C. H. Hill (ORCID: orcid.org/0000-0002-6800-7204)

- Karen Lander (ORCID: orcid.org/0000-0002-4738-1176)

Nature Reviews Psychology, volume 2, pages 283–296 (2023)

1273 Accesses

16 Citations

18 Altmetric

Metrics details

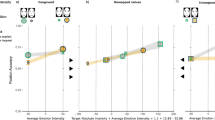

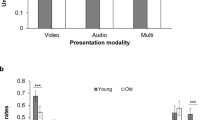

Most past research on emotion recognition has used photographs of posed expressions intended to depict the apex of the emotional display. Although these studies have provided important insights into how emotions are perceived in the face, they necessarily leave out any role of dynamic information. In this Review, we synthesize evidence from vision science, affective science and neuroscience to ask when, how and why dynamic information contributes to emotion recognition, beyond the information conveyed in static images. Dynamic displays offer distinctive temporal information such as the direction, quality and speed of movement, which recruit higher-level cognitive processes and support social and emotional inferences that enhance judgements of facial affect. The positive influence of dynamic information on emotion recognition is most evident in suboptimal conditions when observers are impaired and/or facial expressions are degraded or subtle. Dynamic displays further recruit early attentional and motivational resources in the perceiver, facilitating the prompt detection and prediction of others’ emotional states, with benefits for social interaction. Finally, because emotions can be expressed in various modalities, we examine the multimodal integration of dynamic and static cues across different channels, and conclude with suggestions for future research.


Fiorentini, C. & Viviani, P. Is there a dynamic advantage for facial expressions? J. Vis. 11 , 17 (2011).

Liang, Y., Liu, B., Li, X. & Wang, P. Multivariate pattern classification of facial expressions based on large-scale functional connectivity. Front. Hum. Neurosci. 12 , 94 (2018).

Widen, S. C. & Russell, J. A. Do dynamic facial expressions convey emotions to children better than do static ones? J. Cogn. Dev. 16 , 802–811 (2015).

Gold, J. M. et al. The efficiency of dynamic and static facial expression recognition. J. Vis. 13 , 23 (2013).

Jack, R. E., Garrod, O. G., Yu, H., Caldara, R. & Schyns, P. G. Facial expressions of emotion are not culturally universal. Proc. Natl Acad. Sci. USA 109 , 7241–7244 (2012).

Chung, K. M., Kim, S., Jung, W. H. & Kim, Y. Development and validation of the Yonsei face database (YFace DB). Front. Psychol. 10 , 2626 (2019).

Yitzhak, N., Gilaie-Dotan, S. & Aviezer, H. The contribution of facial dynamics to subtle expression recognition in typical viewers and developmental visual agnosia. Neuropsychologia 117 , 26–35 (2018).

Cassidy, S., Mitchell, P., Chapman, P. & Ropar, D. Processing of spontaneous emotional responses in adolescents and adults with autism spectrum disorders: effect of stimulus type. Autism Res. 8 , 534–544 (2015).

Pollux, P. M. J., Craddock, M. & Guo, K. Gaze patterns in viewing static and dynamic body expressions. Acta Psychol. 198 , 102862 (2019).

Ceccarini, F. & Caudek, C. Anger superiority effect: the importance of dynamic emotional facial expressions. Vis. Cogn. 21 , 498–540 (2013).

Horstmann, G. & Ansorge, U. Visual search for facial expressions of emotions: a comparison of dynamic and static faces. Emotion 9 , 29–38 (2009).

Calvo, M. G., Avero, P., Fernandez-Martin, A. & Recio, G. Recognition thresholds for static and dynamic emotional faces. Emotion 16 , 1186–1200 (2016).

Li, W. O. & Yuen, K. S. L. The perception of time while perceiving dynamic emotional faces. Front. Psychol. 6 , 01248 (2015).

Rymarczyk, K., Biele, C., Grabowska, A. & Majczynski, H. EMG activity in response to static and dynamic facial expressions. Int. J. Psychophysiol. 79 , 330–333 (2011).

Rymarczyk, K., Zurawski, L., Jankowiak-Siuda, K. & Szatkowska, I. Do dynamic compared to static facial expressions of happiness and anger reveal enhanced facial mimicry? PLoS ONE 11 , e0158534 (2016).

Yoshikawa, S. & Sato, W. Dynamic facial expressions of emotion induce representational momentum. Cogn. Affect. Behav. Neurosci. 8 , 25–31 (2008).

Simons, R. F., Detenber, B. H., Roedema, T. M. & Reiss, J. E. Emotion processing in three systems: the medium and the message. Psychophysiology 36 , 619–627 (1999).

Sato, W., Fujimura, T. & Suzuki, N. Enhanced facial EMG activity in response to dynamic facial expressions. Int. J. Psychophysiol. 70 , 70–74 (2008).

Sato, W. & Yoshikawa, S. Enhanced experience of emotional arousal in response to dynamic facial expressions. J. Nonverbal Behav. 31 , 119–135 (2007).

Hoffmann, J. Intense or malicious? The decoding of eyebrow-lowering frowning in laughter animations depends on the presentation mode. Front. Psychol. 5 , 1306 (2014).

Kaufman, J. & Johnston, P. J. Facial motion engages predictive visual mechanisms. PLoS ONE 9 , e91038 (2014).

Thornton, I. M. & Kourtzi, Z. A matching advantage for dynamic human faces. Perception 31 , 113–132 (2002).

Kouider, S., Berthet, V. & Faivre, N. Preference is biased by crowded facial expressions. Psychol. Sci. 22 , 184–189 (2011).

Sato, W., Kubota, Y. & Toichi, M. Enhanced subliminal emotional responses to dynamic facial expressions. Front. Psychol. 5 , 994 (2014).

Niedenthal, P. M. Embodying emotion. Science 316 , 1002–1005 (2007).

Rymarczyk, K., Zurawski, L., Jankowiak-Siuda, K. & Szatkowska, I. Emotional empathy and facial mimicry for static and dynamic facial expressions of fear and disgust. Front. Psychol. 7 , 1853 (2016).

Rymarczyk, K., Zurawski, L., Jankowiak-Siuda, K. & Szatkowska, I. Neural correlates of facial mimicry: simultaneous measurement of EMG and BOLD responses during perception of dynamic compared to static facial expressions. Front. Psychol. 9 , 52 (2018).

Rymarczyk, K., Zurawski, L., Jankowiak-Siuda, K. & Szatkowska, I. Empathy in facial mimicry of fear and disgust: simultaneous EMG–fMRI recordings during observation of static and dynamic facial expressions. Front. Psychol. 10 , 701 (2019).

Heyes, C. & Catmur, C. What happened to mirror neurons? Perspect. Psychol. Sci. 17 , 153–168 (2021).

Iacoboni, M. Imitation, empathy, and mirror neurons. Annu. Rev. Psychol. 60 , 653–670 (2009).

Fischer, A. & Hess, U. Mimicking emotions. Curr. Opin. Psychol. 17 , 151–155 (2017).

Fujimura, T., Sato, W. & Suzuki, N. Facial expression arousal level modulates facial mimicry. Int. J. Psychophysiol. 76 , 88–92 (2010).

Hess, U. & Fischer, A. Emotional mimicry: why and when we mimic emotions. Soc. Personal. Psychol. Compass 8 , 45–57 (2014).

Chartrand, T. L. & Lakin, J. L. The antecedents and consequences of human behavioural mimicry. Annu. Rev. Psychol. 64 , 285–308 (2013).

Keltner, D. & Cordaro, D. T. in The Science of Facial Expression (eds Fernández-Dols, J. M. & Russell, J. A.) 57–75 (Oxford Univ. Press, 2017).

Keltner, D., Tracy, J., Sauter, D. A., Cordaro, D. C. & McNeil, G. in Handbook of Emotions (eds Barrett, L. F. et al.) 467–482 (Guilford Press, 2016).

Scherer, K. R. & Ellgring, H. Multimodal expression of emotion: affect programs or componential appraisal patterns? Emotion 7 , 158–171 (2007).

Young, A. W., Frühholz, S. & Schweinberger, S. R. Face and voice perception: understanding commonalities and differences. Trends Cogn. Sci. 24 , 398–410 (2020).

Bahrick, L. E., Lickliter, R. & Flom, R. Intersensory redundancy guides the development of selective attention, perception, and cognition in infancy. Curr. Dir. Psychol. Sci. 13 , 99–102 (2004).

Campanella, S. & Belin, P. Integrating face and voice in person perception. Trends Cogn. Sci. 11 , 535–543 (2007).

App, B., McIntosh, D. N., Reed, C. L. & Hertenstein, M. J. Nonverbal channel use in communication of emotion: how may depend on why. Emotion 11 , 603–617 (2011).

Lecker, M., Dotsch, R., Bijlstra, G. & Aviezer, H. Bidirectional contextual influence between faces and bodies in emotion perception. Emotion 20 , 1154–1164 (2020).

Mondloch, C. J., Nelson, N. L. & Horner, M. Asymmetries of influence: differential effects of body postures on perceptions of emotional facial expressions. PLoS ONE 8 , 1–17 (2013).

Klasen, M., Chen, Y. H. & Mathiak, K. Multisensory emotions: perception, combination and underlying neural processes. Rev. Neurosci. 23 , 381–392 (2012).

Collignon, O. et al. Audio-visual integration of emotion expression. Brain Res. 1242 , 126–135 (2008).

Stein, B. E. & Meredith, M. A. The Merging of the Senses (MIT Press, 1993).

Scherer, K. R., Clark-Polner, E. & Mortillaro, M. In the eye of the beholder? Universality and cultural specificity in the expression and perception of emotion. Int. J. Psychol. 46 , 401–435 (2011).

Schirmer, A. & Adolphs, R. Emotion perception from face, voice, and touch: comparisons and convergence. Trends Cogn. Sci. 21 , 216–228 (2017).

Tartter, V. C. Happy talk: perceptual and acoustic effects of smiling on speech. Percept. Psychophys. 27 , 24–27 (1980).

McGurk, H. & MacDonald, J. Hearing lips and seeing voices. Nature 264 , 746–748 (1976).

Sumby, W. H. & Pollack, I. Visual contribution to speech information in noise. J. Acoust. Soc. Am. 26 , 212–215 (1954).

Summerfield, Q. in Hearing by Eye: The Psychology of Lipreading (eds Dodd, B. & Campbell, R.) 3–51 (Lawrence Erlbaum, 1987).

Aubergé, V. & Cathiard, M. Can we hear the prosody of smile? Speech Commun. 40 , 87–97 (2003).

De Gelder, B. & Vroomen, J. The perception of emotions by ear and by eye. Cogn. Emot. 14 , 289–311 (2000).

Paulmann, S. & Pell, M. D. Is there an advantage for recognizing multi-modal emotional stimuli? Motiv. Emot. 35 , 192–201 (2011).

Juslin, P. N. & Laukka, P. Communication of emotions in vocal expression and music performance: different channels, same code? Psychol. Bull. 129 , 770–814 (2003).

Scherer, K. R. in Emotions: Essays on Emotional Theory (eds van Goozen, S. H. M., van de Poll, N. E. & Sergeant, J. A.) 161–193 (Lawrence Erlbaum, 1994).

Banse, R. & Scherer, K. R. Acoustic profiles in vocal emotion expressions. J. Pers. Soc. Psychol. 70 , 614–636 (1996).

Hawk, S. T., van Kleef, G. A., Fischer, A. H. & van der Schalk, J. “Worth a thousand words”: absolute and relative decoding of nonlinguistic affect vocalizations. Emotion 9 , 293–305 (2009).

Schröder, M. Experimental study of affect bursts. Speech Commun. 40 , 99–116 (2003).

Bänziger, T., Patel, S. & Scherer, K. R. The role of perceived voice and speech characteristics in vocal emotion communication. J. Nonverbal Behav. 38 , 31–52 (2014).

Bänziger, T., Hosoya, G. & Scherer, K. R. Path models of vocal emotion communication. PLoS ONE 10 , 1–29 (2015).

Goudbeek, M. & Scherer, K. Beyond arousal: valence and potency/control cues in the vocal expression of emotion. J. Acoust. Soc. Am. 128 , 1322 (2010).

Scherer, K. R. Expression of emotion in voice and music. J. Voice 9 , 235–248 (1995).

Adams, R. B. & Kleck, R. E. Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion 5 , 3–11 (2005).

Hess, U., Adams, R. B. & Kleck, R. E. Looking at you or looking elsewhere: the influence of head orientation on the signal value of emotional facial expressions. Motiv. Emot. 31 , 137–144 (2007).

Milders, M., Hietanen, J. K., Leppänen, J. M. & Braun, M. Detection of emotional faces is modulated by the direction of eye gaze. Emotion 11 , 1456–1461 (2011).

Rigato, S. & Farroni, T. The role of gaze in the processing of emotional facial expressions. Emot. Rev. 5 , 36–40 (2013).

Bindemann, M., Burton, A. M. & Langton, S. R. H. How do eye gaze and facial expression interact? Vis. Cogn. 16 , 708–733 (2008).

Adams, R. B. & Kleck, R. E. Perceived gaze direction and the processing of facial displays of emotion. Psychol. Sci. 14 , 644–647 (2003).

Sander, D., Grandjean, D., Kaiser, S., Wehrle, T. & Scherer, K. R. Interaction effects of perceived gaze direction and dynamic facial expression: evidence for appraisal theories of emotion. Eur. J. Cogn. Psychol. 19 , 470–480 (2007).

Dalmaso, M., Castelli, L. & Galfano, G. Social modulators of gaze-mediated orienting of attention: a review. Psychon. Bull. Rev. 27 , 833–855 (2020).

Lassalle, A. & Itier, R. J. Emotional modulation of attention orienting by gaze varies with dynamic cue sequence. Vis. Cogn. 23 , 720–735 (2015).

McCrackin, S. D., Soomal, S. K., Patel, P. & Itier, R. J. Spontaneous eye-movements in neutral and emotional gaze-cuing: an eye-tracking investigation. Heliyon 5 , e01583 (2019).

Atkinson, A. P., Dittrich, W. H., Gemmell, A. J. & Young, A. W. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception 33 , 717–746 (2004).

Tracy, J. L. & Robins, R. W. Show your pride: evidence for a discrete emotion expression. Psychol. Sci. 15 , 194–197 (2004).

App, B., Reed, C. L. & McIntosh, D. N. Relative contributions of face and body configurations: perceiving emotional state and motion intention. Cogn. Emot. 26 , 690–698 (2012).

Coulson, M. Attributing emotion to static body postures: recognition accuracy, confusions, and viewpoint dependence. J. Nonverbal Behav. 28 , 117–139 (2004).

Dael, N., Mortillaro, M. & Scherer, K. R. Emotion expression in body action and posture. Emotion 12 , 1085–1101 (2012).

Dittrich, W. H., Troscianko, T., Lea, S. E. & Morgan, D. Perception of emotion from dynamic point-light displays represented in dance. Perception 25 , 727–738 (1996).

Wallbott, H. G. Bodily expression of emotion. Eur. J. Soc. Psychol. 28 , 879–896 (1998).

Witkower, Z. & Tracy, J. L. Bodily communication of emotion: evidence for extrafacial behavioral expressions and available coding systems. Emot. Rev. 11 , 184–193 (2019).

Aviezer, H., Bentin, S., Dudarev, V. & Hassin, R. R. The automaticity of emotional face-context integration. Emotion 11 , 1406–1414 (2011).

Aviezer, H., Trope, Y. & Todorov, A. Holistic person processing: faces with bodies tell the whole story. J. Pers. Soc. Psychol. 103 , 20–37 (2012).

Lecker, M., Shoval, R., Aviezer, H. & Eitam, B. Temporal integration of bodies and faces: united we stand, divided we fall? Vis. Cogn. 25 , 477–491 (2017).

Meeren, H. K. M., Van Heijnsbergen, C. C. R. J. & De Gelder, B. Rapid perceptual integration of facial expression and emotional body language. Proc. Natl Acad. Sci. USA 102 , 16518–16523 (2005).

Mondloch, C. J. Sad or fearful? The influence of body posture on adults’ and children’s perception of facial displays of emotion. J. Exp. Child. Psychol. 111 , 180–196 (2012).

Aviezer, H. et al. Angry, disgusted, or afraid? Studies on the malleability of emotion perception. Psychol. Sci. 19 , 724–732 (2008).

Aviezer, H., Ensenberg, N. & Hassin, R. R. The inherently contextualized nature of facial emotion perception. Curr. Opin. Psychol. 17 , 47–54 (2017).

Nelson, N. L. & Mondloch, C. J. Adults’ and children’s perception of facial expressions is influenced by body postures even for dynamic stimuli. Vis. Cogn. 25 , 563–574 (2017).

Gross, M. M., Crane, E. A. & Fredrickson, B. L. Methodology for assessing bodily expression of emotion. J. Nonverbal Behav. 34 , 223–248 (2010).

Hietanen, J. K. & Leppänen, J. M. Judgment of other people’s facial expressions of emotions is influenced by their concurrent affective hand movements. Scand. J. Psychol. 49 , 221–230 (2008).

Mortillaro, M. & Dukes, D. Jumping for joy: the importance of the body and of dynamics in the expression and recognition of positive emotions. Front. Psychol. 9 , 763 (2018).

Lander, K. & Bruce, V. Recognising famous faces: exploring the benefits of facial motion. Ecol. Psychol. 12 , 259–272 (2000).

Küster, D. et al. Opportunities and challenges for using automatic human affect analysis in consumer research. Front. Neurosci. 14 , 400 (2020).

Zloteanu, M. & Krumhuber, E. G. Expression authenticity: the role of genuine and deliberate displays in emotion perception. Front. Psychol. 11 , 611248 (2021).

Krumhuber, E. G., Skora, L., Küster, D. & Fou, L. A review of dynamic datasets for facial expression research. Emot. Rev. 9 , 280–292 (2017).

Krumhuber, E., Küster, D., Namba, S., Shah, D. & Calvo, M. G. Emotion recognition from posed and spontaneous dynamic expressions: human observers vs. machine analysis. Emotion 21 , 447–451 (2021).

Krumhuber, E. G., Küster, D., Namba, S. & Skora, P. Human and machine validation of 14 databases of dynamic facial expressions. Behav. Res. Methods 53 , 686–701 (2021).

Fernández-Dols, J. M. in The Science of Facial Expression (eds Russell, J. A. & Fernández-Dols, J. M.) 1–21 (Oxford Univ. Press, 2017).

Mollahosseini, A., Hasani, B. & Mahoor, M. H. AffectNet: a database for facial expression, valence, and arousal computing in the wild. IEEE Trans. Affect. Comput. 10 , 18–31 (2019).

Srinivasan, R. & Martinez, A. M. Cross-cultural and cultural-specific production and perception of facial expressions of emotion in the wild. IEEE Trans. Affect. Comput. 12 , 707–721 (2021).

Lander, K. & Chuang, L. Why are moving faces easier to recognize? Vis. Cogn. 12 , 429–442 (2005).

Lander, K., Hill, H., Kamachi, M. & Vatikiotis-Bateson, E. It’s not what you say but the way you say it: matching faces and voices. J. Exp. Psychol. Hum. Percept. Perform. 33 , 905–914 (2007).

Partan, S. & Marler, P. Communication goes multimodal. Science 283 , 1272–1273 (1999).

Benda, M. S. & Scherf, K. S. The complex emotion expression database: a validated stimulus set of trained actors. PLoS ONE 15 , e0228248 (2020).

Cowen, A. S. et al. Sixteen facial expressions occur in similar contexts worldwide. Nature 589 , 251–257 (2021).

Ortony, A. Are all “basic emotions” emotions? A problem for the (basic) emotions construct. Perspect. Psychol. Sci. 17 , 41–61 (2022).

Russell, J. A. in The Science of Facial Expression (eds Russell, J. A. & Fernández-Dols, J. M.) (Oxford Univ. Press, 2017).

Russell, J. A. & Fernández-Dols, J. M. in The Psychology of Facial Expression (eds Russell, J. A. & Fernández-Dols, J. M.) 3–30 (Cambridge Univ. Press, 1997).

Zeng, Z., Pantic, M., Roisman, G. I. & Huang, T. S. A survey of facial affect recognition methods: audio, visual and spontaneous expressions. IEEE Trans. Pattern Anal. Mach. Intell. 31 , 39–58 (2009).

Grill-Spector, K., Weiner, K. S., Kay, K. & Gomez, J. The functional neuroanatomy of human face perception. Annu. Rev. Vis. Sci. 3 , 167–196 (2017).

Bernstein, M., Erez, Y., Blank, I. & Yovel, G. An integrated neural framework for dynamic and static face processing. Sci. Rep. 8 , 7036 (2018).

Pitcher, D. & Ungerleider, L. G. Evidence for a third visual pathway specialized for social perception. Trends Cogn. Sci. 25 , 100–110 (2021).

Bruce, V. & Young, A. Understanding face recognition. Br. J. Psychol. 77 , 305–327 (1986).

Tranel, D., Damasio, A. R. & Damasio, H. Intact recognition of facial expression, gender, and age in patients with impaired recognition of face identity. Neurology 38 , 690–696 (1988).

Young, A. W., McWeeny, K. H., Hay, D. C. & Ellis, A. W. Matching familiar and unfamiliar faces on identity and expression. Psychol. Res. 48 , 63–68 (1986).

Schweinberger, S. R. & Soukup, G. R. Asymmetric relationships among perceptions of facial identity, emotion, and facial speech. J. Exp. Psychol. Hum. Percept. Perform. 24 , 1748–1765 (1998).

Wang, Y., Fu, X., Johnston, R. A. & Yan, Z. Discriminability effect on Garner interference: evidence from recognition of facial identity and expression. Front. Psychol. 4 , 943 (2013).

Hoffman, E. A. & Haxby, J. V. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat. Neurosci. 3 , 80–84 (2000).

Kanwisher, N., McDermott, J. & Chun, M. M. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J. Neurosci. 17 , 4302–4311 (1997).

Ganel, T., Valyear, K. F., Goshen-Gottstein, Y. & Goodale, M. A. The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia 43 , 1645–1654 (2005).

Kliemann, D. et al. Cortical responses to dynamic emotional facial expressions generalize across stimuli, and are sensitive to task-relevance, in adults with and without autism. Cortex 103 , 24–43 (2018).

Lander, K., Chuang, L. & Wickham, L. Recognizing face identity from natural and morphed smiles. Q. J. Exp. Psychol. 59 , 801–808 (2006).

Pike, G. E., Kemp, R. I., Towell, N. A. & Phillips, K. C. Recognizing moving faces: the relative contribution of motion and perspective view information. Vis. Cogn. 4 , 409–438 (1997).

Rhodes, G. et al. How distinct is the coding of face identity and expression? Evidence for some common dimensions in face space. Cognition 142 , 123–137 (2015).

Van der Schalk, J., Hawk, S. T., Fischer, A. H. & Doosje, B. J. Moving faces, looking places: The Amsterdam Dynamic Facial Expressions Set (ADFES). Emotion (in the press).

Acknowledgements

The authors thank A. Young for comments on an earlier draft of this manuscript.

Author information

Authors and affiliations

Department of Experimental Psychology, University College London, London, UK

Eva G. Krumhuber

Institute for Experimental Psychology, Heinrich-Heine-Universität Düsseldorf, Düsseldorf, Germany

Lina I. Skora

School of Psychology, University of Sussex, Falmer, UK

Lina I. Skora

School of Psychology, University of Wollongong, Wollongong, New South Wales, Australia

Harold C. H. Hill

Division of Psychology, Communication and Human Neuroscience, University of Manchester, Manchester, UK

Karen Lander

Contributions

E.G.K., H.C.H.H. and K.L. researched data for the article. All authors wrote the article and reviewed and/or edited the manuscript before submission.

Corresponding author

Correspondence to Eva G. Krumhuber.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Reviews Psychology thanks Claus-Christian Carbon, Guillermo Recio and Disa Sauter for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Krumhuber, E.G., Skora, L.I., Hill, H.C.H. et al. The role of facial movements in emotion recognition. Nat Rev Psychol 2, 283–296 (2023). https://doi.org/10.1038/s44159-023-00172-1

Accepted: 06 March 2023

Published: 27 March 2023

Issue Date: May 2023

DOI: https://doi.org/10.1038/s44159-023-00172-1

Emotion Recognition for Everyday Life Using Physiological Signals From Wearables: A Systematic Literature Review

- Open access

- Published: 21 May 2024

The bright side of sports: a systematic review on well-being, positive emotions and performance

- David Peris-Delcampo 1 ,

- Antonio Núñez 2 ,

- Paula Ortiz-Marholz 3 ,

- Aurelio Olmedilla 4 ,

- Enrique Cantón 1 ,

- Javier Ponseti 2 &

- Alejandro Garcia-Mas 2

BMC Psychology volume 12, Article number: 284 (2024)

The objective of this study is to conduct a systematic review regarding the relationship between positive psychological factors, such as psychological well-being and pleasant emotions, and sports performance.

This study, a systematic review conducted according to PRISMA guidelines and covering the Web of Science, PsycINFO, PubMed and SPORTDiscus databases, seeks to highlight the relationship between other, more ‘positive’ factors, such as well-being and positive emotions, and sports performance.

The keywords were decided using a two-round Delphi method with sport psychology experts.

Participants

There are no participants in the present research.

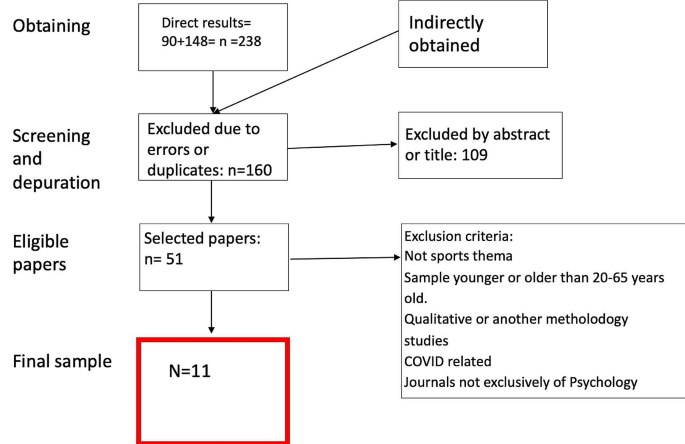

The main exclusion criteria were: non-sport topics, samples outside the 20–65 age range, qualitative studies or studies using other methodologies, COVID-related studies, and journals not exclusively devoted to psychology.

Main outcome measures

An initial sample of 238 papers was obtained, which was ultimately reduced to a final sample of 11 papers.

The results obtained are intended to represent the ‘bright side’ of sports practice, as a complement to, or mediator of, the negative variables that affect athletes’ and coaches’ performance.

Conclusions

There is clear recognition that acting on intrinsic motivation remains the best and most effective way to motivate oneself to achieve the highest levels of performance, a good perception of competence and a source of personal satisfaction.

Introduction

In recent decades, research in the psychology of sport and physical exercise has focused on psychological variables that may play a disruptive, unfavourable or detrimental role, including emotions considered ‘negative’, such as anxiety/stress, sadness or anger, concentrating on their unfavourable relationship with sports performance [1, 2, 3, 4], sports injuries [5, 6, 7] or, more generally, damage to the athlete’s health [8, 9, 10]. The study of ‘positive’ emotions such as happiness or, more broadly, psychological well-being, was long deferred, although in recent years it has grown into a field of great interest to researchers and practitioners [11, 12, 13]. This work includes the physiological, psychological, moral and social benefits of physical activity shown by comic book heroes such as Tintin, a team leader who can serve as a model for promoting healthy lifestyles or the pursuit of ‘eternal youth’ [14].

In their effects on sports practice and performance, emotions rarely act in a single direction, as purely negative or purely positive; positive and negative emotions generally do not act alone [15]. Athletes experience different emotions simultaneously, even when these emotions are opposed, and especially when they are of mild or moderate intensity [16]. An athlete can feel satisfied and happy while also perceiving a high level of stress or anxiety before a specific test or competition. Some studies [17] have shown that sports participation and the perceived value of elite sport positively affect athletes’ subjective well-being. This also seems to be the case in non-elite sports practice. The review by Mansfield et al. [18] found that the published literature suggests that practising sports and dance, in a group or with peer support, can improve participants’ subjective well-being; it also identified negative feelings about competence and ability, although the quantity and quality of the published evidence are low and better-designed studies are needed. All these investigations are also supported by the development of the concept of eudaimonic well-being [19], which is linked to intrinsic motivation, not only through enjoyment but also through the perception of competition and the overcoming and achievement of goals, even when these are accompanied by unpleasant hedonic emotions or physical discomfort. Shortly after practising sport, a person will remember feelings of exhaustion and possibly stiffness, linked to feelings of satisfaction and even enjoyment.

Furthermore, parents, coaches and other psychosocial agents can play a significant mediating role. Lemelin et al. [20], investigating how autonomy support from parents and coaches predicts athletes’ well-being and performance, found that autonomy support from both parents and coaches was positively related to athlete well-being, but that only coach autonomy support was associated with sports performance. This suggests that parents and coaches play important but distinct roles in athlete well-being and that coach autonomy support could help athletes achieve high levels of performance.

Analysing emotions within the sociocultural environment in which they arise and acquire meaning is also informative, from both an individual and a team perspective. In a study of military teams, Adler et al. [21] showed that teams with a strong emotional culture of optimism were better positioned to recover from poor performance, suggesting that organisations that promote an optimistic culture develop more resilient teams. In mathematics students, Pekrun et al. [22] observed that individual success boosts emotional well-being, whereas placing people in high-performance groups can undermine it, a finding of great interest for investigating the effectiveness and adjustment of individuals in sports teams.

There is still little scientific literature on positive emotions and their relationship with sports practice and athlete performance, although this approach has long had clear advocates [23, 24]. The significant recent increase in studies in this field is therefore encouraging, given that some authors (e.g. [25, 26]) have pointed out the need to overcome certain methodological and conceptual problems, paying special attention to developing specific instruments and methodologies for evaluating well-being in sport.

As McCarthy [15] indicates, positive (hedonically pleasant) emotions can be catalysts for excellence in sport and deserve a place in research and in professional interventions that aim to raise athletes’ performance. From a holistic perspective, positive emotions are closely linked to psychological well-being, and research in this field is necessary: first, because of the leading role these emotions play in human behaviour, cognition and affect; and second, because after several years of international uncertainty due to the COVID-19 pandemic and wars, it seems ‘healthy and intelligent’ to encourage positive emotions in our athletes. An additional reason is that positive emotions are known to improve motivational processes, reducing dropout and negative emotional costs [11]. In this vein, concepts such as emotional intelligence can help to identify and properly manage emotions in sport and to determine their relationship with performance [27], facilitating the inclusion of emotional training programmes based on the ‘bright side’ of sports practice [28].

Based on all of the above, one might ask how these positive emotions relate to a given event and what role each of them plays in the athlete’s performance. Do they affect performance directly, or do they act through other psychological variables such as concentration, motivation and self-efficacy? Do they favour the athlete’s availability and competent performance in competition? How can athletes regulate and control them to their own benefit? How can other psychosocial agents, such as parents or coaches, help to increase their athletes’ well-being?

This work aims to give a leading, rather than secondary, role to the ‘good and pleasant side’ of sports practice, whether as an entity in its own right or as a complement to, or mediator of, the negative variables that affect the performance of athletes and coaches. Therefore, the objective of this study is to conduct a systematic review of the relationship between positive psychological factors, such as psychological well-being and pleasant emotions, and sports performance. To this end, the methodological criteria of the systematic review procedure were followed.

Materials and methods

This study was carried out as a systematic review following the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines, using the Web of Science (WoS) and PsycInfo databases. These two databases were selected using the Delphi method [ 29 ]. No meta-analysis was performed because the data were highly dispersed owing to the different methodologies used [ 30 ].

The keywords were selected using the Delphi method in two rounds with sport psychology experts. The results obtained are intended to represent the ‘bright side’ of sports practice, both in its own right and as a complement to, or mediator of, the negative variables that affect athletes’ and coaches’ performance.

It was determined that the main construct would be psychological well-being, paired with optimism, healthy practice, realisation, positive mood, performance and sport. The search period was limited to papers published between 2000 and 2023, and the final list of papers was obtained on 13 February 2023. The search was conducted in two languages (English and Spanish) and was limited to psychology journals, and specifically to articles whose samples consisted of athletes.

Each keyword was first searched individually in each database, then in combinations of pairs and then of trios. Based on these results, it was decided that the best approach was to group the terms related to positive psychology on the one hand, and those related to self-realisation/performance/health on the other. Parentheses were therefore used to group the three positive psychology terms (psychological well-being, optimism, positive mood) joined by the Boolean ‘OR’, and likewise the performance/health/realisation terms (realisation, healthy practice, performance), with the two parenthesised sets joined by the Boolean ‘AND’. To further filter the search, a keyword included in both the title and the inclusion criteria, ‘sport’, was added with the Boolean ‘AND’. In this way, every result combined at least one of the three positive psychology terms with at least one of the three performance/health/realisation terms.
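The Boolean structure described above can be sketched as follows. This is a minimal illustration, not the query string actually submitted to WoS or PsycInfo: the three-term groups and the ‘sport’ filter come from the text, while quoting multi-word phrases, writing the operators in upper case, and the helper names (`or_group`, `build_query`, `matches`) are assumptions made for the example. The `matches` function is a hypothetical substring check that only shows the logic of “at least one term from each group plus the filter”; real databases index and match records differently.

```python
# Sketch of the two-group Boolean search described in the Methods.
# Term lists are taken from the text; phrase quoting and upper-case
# OR/AND are assumptions, not the exact database syntax used.
POSITIVE_PSYCHOLOGY = ["psychological well-being", "optimism", "positive mood"]
PERFORMANCE_HEALTH = ["realisation", "healthy practice", "performance"]
FILTER_TERM = "sport"


def or_group(terms: list[str]) -> str:
    """Join terms with OR inside parentheses, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(group_a: list[str], group_b: list[str], filter_term: str) -> str:
    """Join the two OR-groups and the filter keyword with AND."""
    return f"{or_group(group_a)} AND {or_group(group_b)} AND {filter_term}"


def matches(text: str, group_a: list[str], group_b: list[str], filter_term: str) -> bool:
    """Hypothetical check: at least one term from each group plus the filter term."""
    lowered = text.lower()
    return (
        any(term in lowered for term in group_a)
        and any(term in lowered for term in group_b)
        and filter_term in lowered
    )


query = build_query(POSITIVE_PSYCHOLOGY, PERFORMANCE_HEALTH, FILTER_TERM)
print(query)
```

Grouping the terms this way means a record mentioning only optimism and sport is not retrieved; it must also mention realisation, healthy practice or performance.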

Results (first phase)

The keywords were cross-matched, and the combination yielding a sufficient number of papers was retained. In the first phase of the search, a total of 238 papers was obtained. Screening was then carried out in four clearly differentiated phases, summarised in Fig. 1 . These phases progressively narrowed the original sample to a more accurate one.

Phases of the selection process for the final sample. Four phases were carried out to select the final sample of articles. The first phase eliminated duplicates. In the second, articles whose title or abstract did not fit the objectives of the review were eliminated. In the third, the previously established exclusion criteria were applied to the remaining sample. Finally, in phase 4, the final sample of 11 articles was obtained.

Results (second phase)

The first screening examined the title, and the abstract if needed, excluding papers that were duplicates, contained errors or had formal problems, had a small sample (low N), or were case studies. This screening reduced the initial sample to 109 papers.

Results (third phase)

This was followed by a second screening of the abstracts and full texts, which excluded papers on non-sports topics, papers whose samples fell outside the age range of interest, papers using qualitative methodologies, articles related to the COVID period, and papers published in non-psychological journals. Papers related to ‘negative psychological variables’ were also excluded.

Results (fourth phase)

At the end of this second screening, 11 papers remained. In this final phase, we summarised their main characteristics and conclusions/results in Table 1 . In addition, to enrich the sample, we decided to include some articles from other sources, mainly those presented in the introduction, to support the conceptual framework of the ‘bright side’ of sport.

Researchers studying the psychological variables that affect sports performance usually look for relationships with ‘negative’ variables: first, basic psychological processes, or distorting cognitive-behavioural processes, that are unpleasant or can be evaluated as deficiencies or problems, in line with a psychology for the ‘risk’ society that emphasises rehabilitation through overcoming personal and social pathologies [ 31 ]; and, more recently, effects on the athlete’s mental health [ 32 ]. This approach seems valid in many cases and situations, yet it openly contradicts the proclaimed psychological benefits of practising sport (among others: Cantón [ 33 ]; Froment and González [ 34 ]; Jürgens [ 35 ]).

However, it is possible to adopt another approach, focused on ‘positive’ variables, also in relation to the athlete’s performance. This has been the main objective of this systematic review of the existing literature. Far from being novel, this approach, although a minority one, fits well with the definition of our area of knowledge within the broad field of health, as has been pointed out for some time [ 36 , 37 ].

The systematic review identified relatively few articles that met the established conditions regarding databases, keywords, and inclusion and exclusion criteria (according to the PRISMA method [ 37 , 38 , 39 , 40 ]), as judged by the experts. These precautions were taken to obtain the most accurate results possible and thus to guarantee the quality of the conclusions.

The first clear result is the great difficulty of finding articles in which sports ‘performance’ is treated as a well-defined study variable adapted to the situation and the athletes studied. In fact, of the 11 papers retained, only three associate one or more positive psychological variables with performance, which is evaluated in very different ways, combining objective and subjective measures. This is not surprising, since several previous systematic reviews (e.g. Nuñez et al. [ 41 ]) have found this relationship to be very weak and nuanced by different mediating factors, such as previous sports experience or competitive level (e.g. Rascado et al. [ 42 ]; Reche, Cepero & Rojas [ 43 ]). This contrasts with the widespread belief, even in professional and academic circles, that negative variables are strongly related to poor performance and, conversely, positive variables to good performance.

As for the positive variables, even with these restrictive inclusion and exclusion criteria and the filters applied to the initial findings, a true ‘galaxy’ of variables emerges, belonging to different categories and levels of psychological complexity.

A preliminary consideration regarding the current paradigm of sport psychology: some recent works have already announced a swing of the pendulum in the objects of study of PD, back towards traits and dispositions and even the personality of athletes [ 43 , 44 , 45 , 46 ], and our results fully corroborate this trend. In addition to five other variables present in the studies retained by the systematic review, three traits/dispositions were found, which were also the most repeated: optimism (present in four articles), mental toughness (present in three) and perfectionism. These are representative concepts of this field of psychology, which, as already noted, has lately been well represented in research in this area [ 46 , 47 , 48 , 49 , 50 , 51 , 52 ]. In short, the other psychological variables that appear in the selected articles are: psychological well-being (PWB) [ 53 ]; self-compassion, which has recently gained considerable relevance for the positive attributional resolution of personal behaviours [ 54 ]; satisfaction with life (the balance between sports practice and its results, on the one hand, and life and personal fulfilment, on the other) [ 55 ]; approach-achievement goals [ 56 ]; and perceived social support [ 57 ]. This last concept is present transversally across several theoretical frameworks, such as Sports Commitment [ 58 ].

The most relevant concept, both quantitatively and qualitatively, as shown by its appearance in combination with different variables and situations, is not a basic psychological process but a high-level cognitive construct: psychological well-being in its eudaimonic sense, first defined in the general population by Carol Ryff [ 59 , 60 ] and introduced into sport at the beginning of this century (e.g. Romero, Brustad & García-Mas [ 13 ]; Romero, García-Mas & Brustad [ 61 ]). Importantly, this conception treats psychological well-being as multifactorial (including autonomy, control of the environment in which the activity takes place, social relationships, etc.), meaning personal fulfilment through a given activity and the achievement of, or progress towards, one’s own goals, with no direct relationship to simpler concepts such as vitality or fun. PWB appears in five of the selected studies and is related to several of the other variables/traits.

The most relevant finding regarding this variable is its link with motivational aspects, which act as a central axis connecting different concepts. This explains its connection to sports performance, understood as a goal of constant improvement that requires resistance, perseverance, error management and strong confidence that achievements can be attained. In other words, it is associated with optimism, which is reflected in efficacy expectations.

Looking at these relationships in more detail, we can first consider the link with the ‘way of being’, understood as personality traits or as behavioural tendencies, depending on how much emphasis is placed on their capacity for change and learning. In these cases, well-being derives from satisfaction with progress towards the desired goal, which requires resistance (mental toughness) and confidence (optimism). When, in addition, the pursuit of improvement is constant and aimed at excellence, the relationship with perfectionism is clear, although this factor should be explored further because of its potential negative effects, at least in the long term.