
A Hybrid Methodology to Improve Speaking Skills in English Language Learning Using Mobile Applications

1. Introduction

2. Mobile Learning in English as a Second Language Education

- Portability: Learning can take place anywhere and at any time, regardless of where the student is.

- Availability: Access to all information.

- Personalization: The information can be adapted to the user’s needs and style.

- Social Connectivity: Collaborative work among users of the same application.

- Motivation: It motivates students to get involved in their education.

- The apps consider the four core English language skills: reading, speaking, listening, and writing.

- Learners can access their apps anytime, anywhere.

- Learners can interact through the apps with their peers or with other people around the world.

- The apps are free of charge.

- They can reinforce knowledge and provide tips to perform better in different skills.

- The ubiquity of mobile apps undoubtedly provides learners with an autonomous and omnipresent education. They only need a smartphone, an app of their liking and the right motivation to start the process of responsible and dynamic learning.

2.1. Mobile Assisted Language Learning

- Mobility of technology. It refers to all technological devices that support the IEEE 802.11 wireless standard (Wi-Fi). With Wi-Fi access to teaching and learning material, learners can acquire knowledge from anywhere and at any time; they choose when and where they want to learn, as well as the applications they want to use to interact with other users, create their own calendars, and relax from time to time.

- Mobility of learning. It refers to student-focused autonomous learning, where the teaching process is a personalized, collaborative, omnipresent and permanent experience that considers the level of each student, helping them to recognize their level of knowledge, their learning process, their growth, their achievements, and their goals.

- Mobility of learner. It refers to free and independent learners who use teaching tools regardless of age, time, and learning ability. This avoids the comparison between students that usually causes shyness, fear, and lack of confidence when expressing ideas orally. In this way, mobile learners personalize their learning activities based on their own interests and objectives, and thus engage in continuous learning [33].

2.2. English Language Teaching–Learning Models

- Effective interaction only occurs through speaking skills.

- Practicing speaking skills allows the learning space to become a socially dynamic place.

- Speaking with others immerses students in real contexts and social experiences when sharing a message with others.

- The skill of speaking enhances the relationships that exist in the classroom. It all depends on the methodology used by the teacher to create a trusting classroom environment that allows everyone to express his or her opinions in a spoken manner without fear.

- The ability to speak helps students develop metacognitive and creative thinking, because they involve other actors in communication, and learning takes on a collaborative role.

- Grammar: It enables L2 learners to order and structure sentences so that they contain the correct meaning and sense and gives them the ability to communicate effectively orally and in writing. Grammar allows them to combine words based on rules and structures to create sentences that can be understood by others.

- Vocabulary: Relates to the knowledge of words, their meaning, and their use in a real context. Knowing how to pronounce them within a conversation on a specific topic is what allows communication within the speaking discourse. To fulfill this purpose, vocabulary must be familiar and extensive so that the student is able to talk about any topic without the barrier of not knowing what it means or how to say certain words.

- Pronunciation: Considers the phonology of words and the grammatical elements that mark their sounds in each language. Pronunciation is one of the most important elements of speaking ability, since a person with poor pronunciation will struggle to be understood, even with correct grammar and vocabulary.

- Fluency: Allows communicating naturally and accurately. It is the goal of every L2 learner to be able to communicate with others using an appropriate speed and precise pauses when constructing a conversation. This rhythm of speech indicates that the learner knows the language and does not take too much time to structure sentences or search for precise vocabulary, but rather is able to use expressions that come to mind quickly and naturally.

- Comprehension: Allows one to understand the message transmitted and to convey a message that is understood. This encompasses all of the above, since comprehension is effective only when grammar, vocabulary, pronunciation, and fluency are used well, which opens the way for students to engage in meaningful and efficient communication.

- Traditional approach: Grammar-translation method

- The natural approach: Direct method and Berlitz method

- Structural approach: Audio-oral method, situational method, and audio-visual method

- Communicative approach: Communicative method and notional-functional method

- Humanistic approach: Total physical response method, Silent Way method, and suggestopedia method

- The Direct Method

- The Audio-lingual Method

- The Total Physical Response

- Communicative Language Teaching

- Task-based Language Learning

- Suggestopedia

- Which mobile application can be used for teaching English language speaking skills?

- Is it possible to propose an innovative methodology that combines traditional methodologies and mobile learning to improve English language communication skills?

3. Selection of Methodologies and Mobile App for the Research Proposal

Identification of a mobile application for research.

- First step: A keyword search found about 250 applications for learning the English language and developing communication skills.

- Second step: From this group, we chose only the apps that were top-rated in the Google Play Store (4.5–4.9) and that had been downloaded between 50,000+ and 100,000,000+ times, as shown in Table 2.

- Third step: A smartphone was used to download all of the apps and choose those most suitable to the educational environment. These were chosen because they were free to use, easy to use, and had outstanding educational content.

- Fourth step: We applied an evaluation rubric, as shown in Table 3, which allowed us to quantitatively identify which application was the best to use in this research [40].
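The filtering in the second step can be illustrated with a short Python sketch. It is not the authors' tooling: the `App` class, the `shortlist` function, and the two "hypothetical" sample entries are assumptions introduced for illustration. Only the thresholds (ratings of 4.5–4.9, downloads between 50,000+ and 100,000,000+) and the two real sample rows (Cake and Reallife) come from the text and the app table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class App:
    name: str
    rating: float   # Google Play Store rating
    downloads: int  # lower bound of the "N+" download bucket


def shortlist(apps, min_rating=4.5, max_rating=4.9,
              min_downloads=50_000, max_downloads=100_000_000):
    """Keep apps whose rating and download bucket fall inside the stated ranges."""
    return [
        app for app in apps
        if min_rating <= app.rating <= max_rating
        and min_downloads <= app.downloads <= max_downloads
    ]


candidates = [
    App("Cake", 4.8, 50_000_000),                # values from the app table
    App("Reallife", 4.9, 100_000),               # values from the app table
    App("Hypothetical low-rated app", 4.2, 2_000_000),  # invented example
    App("Hypothetical niche app", 4.7, 10_000),         # invented example
]

selected = shortlist(candidates)
print([app.name for app in selected])  # ['Cake', 'Reallife']
```

The third and fourth steps (hands-on review and rubric scoring) involve human judgment, so the sketch covers only the numeric filter.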

4. Findings

5. Case Study

5.1. Timing

5.2. Learning Methodology

- Grammar: Structuring sentences that use tenses, phrases, and grammatical rules correctly.

- Sociolinguistics: Refers to the function of communication and interaction with other actors within different social and cultural contexts.

- Strategy: Having the ability to understand and be understood when presenting ideas and overcome difficulties and misunderstandings that may arise in communication.

5.3. Limitations

6. Discussion

7. Conclusions

- Pedagogical coherence

- Feedback and self-correction

- Customization

8. Future Work

Author Contributions

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Januariyansah, S.; Rohmantoro, D. The Role of Digital Classroom Facilities to Accommodate Learning Process of the Z and Alpha Generations. In Proceedings of the 2nd International Conference on Child-Friendly Education, Muhammadiyah Surakarta University, Jawa Tengah, Indonesia, 21–22 April 2018; Volume 1994, pp. 434–439.

- Gooding de Palacios, F.A. Enfoques para el aprendizaje de una segunda lengua: Expectativa en el dominio del idioma inglés. Rev. Cient. Orb. Cógn. 2020, 4, 20–38.

- Rani, K.J.; Kranthi, T.; Anjaneyulu, G. Teaching and Learning English as a Foreign Language. Int. J. Engl. Lang. Teach. 2018, 5, 57.

- Hafifah, H. The Effectiveness of Duolingo in Improving Students’ Speaking Skill at Madrasah Aliyah Bilingual Batu School Year 2019/2020. Lang.-Edu. J. 2019, 10, 1–7.

- Prensky, M. Digital Natives, Digital Immigrants. Horizon 2001, 9, 1–6.

- Al-Masri, E.; Kabu, S.; Dixith, P. Emerging Hardware Prototyping Technologies as Tools for Learning. IEEE Access 2020, 8, 80207–80217.

- Ritgerð Mobile Apps for Learning English. 2013. Available online: https://1library.net/document/q7o4v2oy-mobile-apps-for-learning-english.html (accessed on 21 March 2022).

- Black, E.; Richard, F.; Lindsay, T. K-12 Virtual Schooling, COVID-19, and Student Success. JAMA—J. Am. Med. Assoc. 2020, 324, 833–834.

- Guggenberger, T.; Lockl, J.; Röglinger, M.; Schlatt, V.; Sedlmeir, J.; Stoetzer, J.-C.; Urbach, N.; Völter, F. Emerging digital technologies to combat future crises: Learnings from COVID-19 to be prepared for the future. Int. J. Innov. Technol. Manag. 2020, 18, 2140002.

- Sarica, G.N.; Cavus, N. New trends in 21st Century English learning. Procedia-Soc. Behav. Sci. 2009, 1, 439–445.

- Bolstad, R.; Lin, M. Students’ Experiences of Learning in Virtual Classrooms; NZCER: Wellington, New Zealand, 2014; Volume 15, Retrieved May 2012.

- Arshad, M.; Saeed, M.N. Emerging technologies for e-learning and distance learning: A survey. In Proceedings of the 2014 International Conference on Web and Open Access to Learning (ICWOAL), Dubai, United Arab Emirates, 25–27 November 2014; IEEE: Piscataway, NJ, USA, 2015.

- Dingli, A.; Seychell, D. The New Digital Natives; Springer: Berlin, Germany, 2015; ISBN 9783662465905.

- Choo, C.C.; Devakaran, B.; Chew, P.K.H.; Zhang, M.W.B. Smartphone application in postgraduate clinical psychology training: Trainees’ perspectives. Int. J. Environ. Res. Public Health 2019, 16, 4206.

- Sharma, S. Smartphone based language learning through mobile apps. Int. J. Recent Technol. Eng. 2019, 8, 8040–8043.

- Parsons, D. (Ed.) Combining E-Learning and M-Learning: New Applications of Blended Educational Resources; Massey University: Palmerston North, New Zealand, 2011.

- Persson, V.; Nouri, J. A systematic review of second language learning with mobile technologies. Int. J. Emerg. Technol. Learn. 2018, 13, 188–210.

- Vate-U-Lan, P. Mobile learning: Major challenges for engineering education. In Proceedings of the 2008 38th Annual Frontiers in Education Conference, Saratoga Springs, NY, USA, 22–25 October 2008; pp. 11–16.

- Cisco. Annual Internet Report (2018–2023). 2020. Available online: https://www.cisco.com/c/en/us/solutions/collateral/executive-perspectives/annual-internet-report/white-paper-c11-741490.html (accessed on 1 April 2022).

- Segev, E. Mobile Learning: Improve Your English Anytime, Anywhere. British Council. 2014. Available online: https://www.britishcouncil.org/voices-magazine/mobile-learning-improve-english-anytime-anywhere (accessed on 2 April 2022).

- Godwin-Jones, R. Emerging technologies from memory palaces to spacing algorithms: Approaches to second-language vocabulary learning. Lang. Learn. Technol. 2010, 14, 4–11.

- Guo, H. Analysing and Evaluating Current Mobile Applications for Learning English Speaking. British Council ELT Master’s Dissertation Awards: Commendation 2014; pp. 2–92. Available online: https://www.teachingenglish.org.uk/sites/teacheng/files/analysing_and_evaluating_current_mobile_applications_v2.pdf (accessed on 3 April 2022).

- Hossain, M. Exploiting Smartphones and Apps for Language Learning: A Case Study with The EFL Learners in a Bangladeshi University. Rev. Public Adm. Manag. 2018, 6, 1.

- Hashemi, M.; Ghasemi, B. Using mobile phones in language learning/teaching. Procedia-Soc. Behav. Sci. 2011, 15, 2947–2951.

- Huang, L. Acceptance of mobile learning in classroom instruction among college English teachers in China using an extended TAM. In Proceedings of the 2017 International Conference of Educational Innovation through Technology (EITT), Osaka, Japan, 7–9 December 2017; IEEE: Piscataway, NJ, USA, 2018; Volume 2018, pp. 283–287.

- Metafas, D.; Politi, A. Mobile-assisted learning: Designing class project assistant, a research-based educational app for project based learning. In Proceedings of the 2017 IEEE Global Engineering Education Conference (EDUCON), Athens, Greece, 25–28 April 2017; pp. 667–675.

- Wan Azli, W.U.A.; Shah, P.M.; Mohamad, M. Perception on the Usage of Mobile Assisted Language Learning (MALL) in English as a Second Language (ESL) Learning among Vocational College Students. Creat. Educ. 2018, 9, 84–98.

- Mierlus-Mazilu, I. M-learning objects. In Proceedings of the 2010 International Conference on Electronics and Information Engineering, Kyoto, Japan, 1–3 August 2010; Volume 1, pp. V1-113–V1-117.

- Özdoǧan, K.M.; Başoǧlu, N.; Erçetin, G. Exploring major determinants of mobile learning adoption. In Proceedings of the 2012 Proceedings of PICMET ‘12: Technology Management for Emerging Technologies, Vancouver, BC, Canada, 29 July–2 August 2012; pp. 1415–1423.

- Yang, Z. A study on self-efficacy and its role in mobile-assisted language learning. Theory Pract. Lang. Stud. 2020, 10, 439–444.

- Criollo-C, S.; Lujan-Mora, S.; Jaramillo-Alcazar, A. Advantages and disadvantages of m-learning in current education. In Proceedings of the IEEE World Engineering Education Conference (EDUNINE), Buenos Aires, Argentina, 11–14 March 2018.

- Eshankulovna, R.A. Modern technologies and mobile apps in developing speaking skill. Linguist. Cult. Rev. 2021, 5, 1216–1225.

- Ozdamli, F.; Cavus, N. Basic elements and characteristics of mobile learning. Procedia-Soc. Behav. Sci. 2011, 28, 937–942.

- Zou, B.; Li, J. Exploring Mobile Apps for English Language Teaching and Learning; Research-Publishing: Wuhan, China, 2015; pp. 564–568.

- Garay-Cortes, J.; Uribe-Quevedo, A. Location-based augmented reality game to engage students in discovering institutional landmarks. In Proceedings of the 2016 7th International Conference on Information, Intelligence, Systems & Applications (IISA), Chalkidiki, Greece, 13–15 July 2016.

- Ameri, M. The Use of Mobile Apps in Learning English Language. Bp. Int. Res. Crit. Linguist. Educ. J. 2020, 3, 1363–1370.

- Alzatma, A.A.; Awfiq Khader, K. Using Mobile Apps to Improve English Speaking Skills of EFL Students at the Islamic University of Gaza. Master’s Thesis, Islamic University of Gaza, Gaza, Palestine, 2020.

- Rizqiningsih, S.; Hadi, M.S. Multiple Intelligences (MI) on Developing Speaking Skills. Engl. Lang. Focus 2019, 1, 127.

- Aznar, A. Different Methodologies Teaching English. 2014. Available online: https://documents.pub/document/different-methodologies-teaching-english-277pdf-analyze-each-of-these-methods.html?page=1 (accessed on 1 April 2022).

- Chen, X. Evaluating Language-learning Mobile Apps for Second-language Learners. J. Educ. Technol. Dev. Exch. 2016, 9, 42–43.

- Duolingo. Duolingo—La Mejor Manera de Aprender un Idioma a Nivel Mundial. 2020. Available online: https://es.duolingo.com/course/en/es/Aprender-ingl%C3%A9s (accessed on 7 July 2022).

- Teske, K. Learning Technology Review—Duolingo. Calico J. 2017, 34, 393–401.

- Reima, A.-J. Mobile Apps in the EFL College Classroom Aims of Study Why Use Mobile Apps Types of Language Apps. J. Res. Sch. Prof. Engl. Lang. Teach. 2020, 4, 1–7.

- Cambridge. Assessing Speaking Performance—Level A2 Examiners and Speaking Assessment in the A2 Key Exam. 2011. Available online: https://docplayer.net/30294467-Assessing-speaking-performance-level-a2.html (accessed on 2 April 2022).

- Bustillo, J.; Rivera, C.; Guzmán, J.G.; Ramos Acosta, L. Benefits of using a mobile application in learning a foreign language. Sist. Telemática 2017, 15, 55–68.

- Baldauf, M.; Brandner, A.; Wimmer, C. Mobile and gamified blended learning for language teaching—Studying requirements and acceptance by students, parents and teachers in the wild. In Proceedings of the 16th International Conference on Mobile and Ubiquitous Multimedia, Stuttgart, Germany, 26–29 November 2017; pp. 13–24.


| Category | Least Suitable (1–3) | Average (4–7) | Most Suitable (8–10) |
|---|---|---|---|
| Content should provide opportunities to advance learners’ English skills, with connection to their prior knowledge. | Content fails to help achieve learning goals or autonomous learning. | Content helps achieve the learning goals but is neither autonomous learning nor related to prior knowledge. | Content helps achieve the learning goals, autonomous learning, and relates prior knowledge to new content. |
| The skills provided in the app should be consistent with the targeted learning goal. | Skills (especially listening and speaking skills) reinforced in the app were not consistent with the targeted skill or concept. | Skills (especially listening and speaking skills) reinforced were prerequisite to foundation skills for the targeted skill or concept. | Skills (especially listening and speaking skills) reinforced were strongly connected to the target skill or concept. |
| Learners should be provided with feedback to conduct self-evaluation. | Feedback is limited to correct learner response. | Feedback is specific and allows for learners to try again in order to improve learning performance. | Feedback is specific, which results in improved learner performance; data are available to learners and instructors. |
| Elements are embedded to engage and motivate language learners to use the app. | No elements are embedded to encourage learners’ self-directed learning. | Limited elements are embedded to encourage learners’ self-directed learning. | Elements are embedded to encourage learners’ self-directed learning. |
| Learners are provided with clearly indicated menus and icons to easily navigate through the app. | Menus and icons are not clearly indicated, no on-screen help and/or tutorials are available, and learners need constant help to use the app. | Menus and icons are clearly indicated, but no on-screen help and/or tutorials are available. Learners need to have an instructor in order to review how to use the app. | Menus and icons are clearly indicated, and on-screen help and/or tutorials are available so that learners can launch and navigate the app independently. |
| Learners have their individualized needs met including font size and customizable settings to personalize their learning. | Text size cannot be adjusted, and few customizations are provided. | Text size can be adjusted according to user’s needs, and some customizations are provided. | Text size can be adjusted to suit diverse needs, and customizations and more individualized options are provided. |
| Learners can share their learning progress, issues, or concerns in learning. | Limited performance data, or learner’s progress is not accessible. | Performance data or learner progress is available in the app, but exporting is limited. | Specific performance summary or learner progress is saved in the app and can be exported to a teacher or an audience. |
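As a rough illustration of how a rubric score maps to the three bands in the column headers, the following Python sketch may help. The function name `suitability_band` is hypothetical. The rubric states whole-number bands (1–3, 4–7, 8–10) and does not say how half-point scores should be treated, so placing values such as 3.5 or 7.5 in the lower band is an assumption of this sketch.

```python
def suitability_band(score: float) -> str:
    """Map a 1-10 rubric score to its band label.

    Assumption: scores that fall between the stated bands (for example 7.5)
    are assigned to the lower band; the rubric itself does not specify this.
    """
    if not 1 <= score <= 10:
        raise ValueError(f"score must be between 1 and 10, got {score}")
    if score < 4:
        return "Least suitable"
    if score < 8:
        return "Average"
    return "Most suitable"


for value in (3, 6.5, 9):
    print(value, suitability_band(value))
```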

| Mobile App (Code *) | App Name | Google Play Store Rating | Downloads | Comment |
|---|---|---|---|---|
| A | Aba English—aprender inglés | 4.6 | 10,000,000+ | does not track progress |
| B | Andy English—habla en inglés | 4.6 | 1,000,000+ | poor content |
| C | Aprende a hablar inglés (talk english) | 4.6 | 5,000,000+ | little content, lots of ads |
| D | Aprende inglés—escuchando y hablando | 4.7 | 1,000,000+ | no levels |
| E | Aprende inglés, vocabulario | 4.7 | 1,000,000+ | focuses on vocabulary |
| F | Aprender a hablar inglés (hello) | 4.7 | 1,000,000+ | poor vocabulary and assessment |
| G | Aprender a hablar inglés (miracle full box) | 4.7 | 1,000,000+ | no levels |
| H | Basic English for beginners | 4.7 | 1,000,000+ | low level |
| J | Bytalk: speak English online | 4.7 | 100,000+ | not safe |
| K | Cake | 4.8 | 50,000,000+ | no specific themes |
| L | Conversación en inglés | 4.6 | 1,000,000+ | focuses on listening |
| N | Curso de inglés para principiantes gratis | 4.9 | 1,000,000+ | low level |
| Q | English 1500 conversation | 4.7 | 1,000,000+ | focuses on listening |
| R | English conversation | 4.9 | 1,000,000+ | lots of ads |
| T | English conversation practice case | 4.9 | 100,000+ | time consuming |
| V | English skills—practicar y aprender inglés | 4.5 | 1,000,000+ | lack of short dialogues |
| X | Habla inglés—comunicar | 4.5 | 1,000,000+ | no audios |
| Y | Hablar inglés americano | 4.7 | 500,000+ | complicated to use |
| Z | Hallo: hablar inglés | 4.5 | 1,000,000+ | not safe |
| AA | Hello English: learn English | 4.6 | 10,000,000+ | complicated to use |
| BB | Hello English: learn English skills | 4.5 | 1,000,000+ | audios do not work |
| CC | Ielts listening English—eli | 4.9 | 1,000,000+ | standardized test |
| FF | Practica conversar en inglés (talk english) | 4.5 | 5,000,000+ | not eye-catching |
| HH | Pronunciación correcta—aprende inglés | 4.6 | 1,000,000+ | focuses on pronunciation |
| II | Reallife | 4.9 | 100,000+ | focuses on listening |
| JJ | Redkiwi: escucha&habla inglés | 4.7 | 100,000+ | focuses on listening |
| KK | English pronunciation (yobimi group) | 4.7 | 500,000+ | focuses on pronunciation |
| LL | Speak English pro: American pronunciation | 4.7 | 100,000+ | focuses on pronunciation |
| MM | Speak English! | 4.5 | 1,000,000+ | not safe |

| Category | Mobile App (Code *) | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| | O | P | W | GG | NN | DD | S | M | EE | I | U |
| Content quality | 7 | 7 | 6 | 8 | 8 | 3 | 8 | 8 | 8 | 3 | |
| Pedagogical coherence | 8 | 7 | 7 | 6.5 | 9 | 5 | 8 | 8 | 8 | 4 | |
| Feedback and self-correction | 8 | 5 | 7.5 | 7 | 3 | 7 | 5 | 7 | 5 | 7 | |
| Motivation | 4 | 6 | 6 | 7 | 5 | 6 | 3 | 6 | 3 | 5 | |
| Usability | 6 | 7 | 6 | 6 | 7 | 9 | 4 | 3 | 4 | 8 | |
| Customization | 8 | 8 | 6 | 3 | 6 | 4 | 5 | 4 | 4 | 5 | |
| Sharing | 7 | 7 | 6 | 6 | 3 | 7 | 7 | 4 | 4 | 3 | |
| Total score | 48 | 47 | 44.5 | 43.5 | 41 | 41 | 40 | 40 | 36 | 35 | |
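The total score row can be reproduced by summing the seven category scores in each column. The Python sketch below does this with the values from the table. The variable names are illustrative, and columns are identified by position (1 to 10) rather than by app code, since the table shows ten scored columns.

```python
# Category scores copied from the table, one list entry per scored column.
scores = {
    "Content quality":              [7, 7, 6, 8, 8, 3, 8, 8, 8, 3],
    "Pedagogical coherence":        [8, 7, 7, 6.5, 9, 5, 8, 8, 8, 4],
    "Feedback and self-correction": [8, 5, 7.5, 7, 3, 7, 5, 7, 5, 7],
    "Motivation":                   [4, 6, 6, 7, 5, 6, 3, 6, 3, 5],
    "Usability":                    [6, 7, 6, 6, 7, 9, 4, 3, 4, 8],
    "Customization":                [8, 8, 6, 3, 6, 4, 5, 4, 4, 5],
    "Sharing":                      [7, 7, 6, 6, 3, 7, 7, 4, 4, 3],
}

# zip(*...) regroups the per-category rows into per-column tuples.
totals = [sum(column) for column in zip(*scores.values())]
print(totals)  # [48, 47, 44.5, 43.5, 41, 41, 40, 40, 36, 35]

# 1-based position of the highest-scoring column.
best_column = max(range(1, len(totals) + 1), key=lambda i: totals[i - 1])
print(best_column, totals[best_column - 1])  # 1 48
```

With equal weights for all seven categories, the first column has the highest total (48 out of a possible 70).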

| Week 1: Greetings | | |
|---|---|---|
| Objective: To identify the different forms of greetings and apply them in conversations in different spaces, such as offices, restaurants, parks, medical centers, etc. | | |
| Resources: teacher, students, flashcards, smartphones, Duolingo app | | |
| Warm up | The teacher will start the class with a game, Say “Hello”. The teacher will ask the students to go out to the playground and form a circle. Then, the teacher will explain to the students the dynamics of the game. The students should greet the classmates who match the characteristics the teacher names. For example, say “hello” to whoever is wearing green pants. | 5 min |
| Duolingo Time | The students have already created their accounts and profiles and will start with the first session. The teacher asks the students to choose a space on the playground and work on the “Greeting” session of the application. The students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes them and answers any questions or doubts. | 15 min |
| Speaking in real context (Evaluation) | The teacher will ask students to enter the classroom. The teacher will hold two bags containing flashcards about space and time. Each group of students will choose whether their conversation takes place in the morning, afternoon, evening, or night and in which space. The teacher will ask the students to record their conversations with their cell phones and then send them to his or her email. | 15 min |
| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback and correct the errors with the whole class. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 1: Making Plans for the Weekend | | |
|---|---|---|
| Objective: To know how to ask a friend what he/she would like to do on the weekend. | | |
| Resources: teacher, students, photo of Sara, Lukas, and Jake, smartphones, Duolingo app | | |
| Warm up | The teacher will ask the students to go out to the playground and form groups of the size the teacher calls out. The student who is left out of a group will answer the following question: What would you like to do on the weekend? The teacher will play this game with the students several times so that different students can answer the question. The teacher will explain to the students that the theme of the class will be to talk about plans for the weekend. | 5 min |
| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Plans” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes them and answers any questions or doubts. | 15 min |
| Speaking in real context (Evaluation) | The teacher will show the group a picture of three teenagers: Sara, Jake, and Lukas. The teacher will tell the students their story. Sara is a teenager who is going out with her friends for the weekend. The teacher will ask the students to choose a character and ask their friends about their plans for the weekend. For this activity, students will work in pairs. Each pair will come to the front of the class and discuss their plans. | 15 min |
| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback and correct the errors with the whole class. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 2: Comparatives and Superlatives | ||

|---|---|---|

| Objective: To identify how to use and express the comparative and superlative forms in a sentence. Vocabulary (adjectives) | ||

| Resources: teacher, students, students’ family photos, smartphones, Duolingo app | ||

| Warm up | The teacher will show the students a picture of his/her family. The teacher will describe each family member using comparative and superlative sentences. Example: This is my sister Karen. She is the youngest in the family. In this way the teacher will indicate to the students the topic of the activity. | 5 min |

| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Comparatives and Superlatives” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes the students for any questions or doubts. | 15 min |

| Speaking in real context (Evaluation) | The teacher will ask students to bring in a family photo. In each of the groups, the students will describe their family pictures. Example: This is my family. My brother Carlos is the youngest of all, my sister Laura is taller than my mom, but my dad is the tallest in the family. This time, only the students who want to will come to the front of the class. This is to see whether students have developed more confidence in speaking in front of their peers. | 15 min |

| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback on and correct errors across the board. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 2: Likes and Dislikes | ||

|---|---|---|

| Objective: To identify how to use and express likes and dislikes in a sentence. | ||

| Resources: teacher, students, questions, smartphones, Duolingo app | ||

| Warm up | The teacher will start the class with a “Speaking Telephone” game. The teacher will ask the students to form two lines. The teacher will say the phrase “I like fruits and candy, but I don’t like soup, pizza and noodles” to the first student in each line. The students will have to relay the message to their classmates. At the end, the last student will say the message out loud. The students will compare their message with the original. The results will be a lot of fun. | 5 min |

| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Likes and Dislikes” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes the students for any questions or doubts. | 15 min |

| Speaking in real context (Evaluation) | The teacher will share a list of questions with each group of students. The students will have to discuss these questions and present their points of view. This activity should be recorded and sent to the teacher’s email. Questions: | 15 min |

| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback on and correct errors across the board. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 3: What Kind of Friend Are You? | ||

|---|---|---|

| Objective: To identify and use adjectives to describe themselves and others to express what kinds of friends they are. | ||

| Resources: teacher, students, TikTok questions, smartphones, Duolingo app (two links, both accessed on 1 March 2022) | ||

| Warm up | Students will record a video about 14 questions with their friend. The teacher will ask students to form pairs, then they should put a cell phone in front of their faces. Students should activate the questions, close their eyes and point to the friend who would be the answer to the question. For example: Who is the funniest of the two? | 5 min |

| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Friends” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes the students for any questions or doubts. | 15 min |

| Speaking in real context (Evaluation) | The teacher will write the names of all the students on pieces of paper and then ask each student to take one. The student will read the name and describe the person so that their classmates can guess who it is. | 15 min |

| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback on and correct errors across the board. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 3: Emotions | ||

|---|---|---|

| Objective: To identify and use feelings to express how they feel about different actions or events. | ||

| Resources: teacher, students, Spider Man movie pictures, smartphones, Duolingo app | ||

| Warm up | The teacher will project in class pictures of famous actors from the latest Spider Man movie and ask the students about the emotions in each picture: whether the actor or actress is happy, upset or excited, and why. Students will respond to each question by expressing their point of view. | 5 min |

| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Emotions” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes the students for any questions or doubts. | 15 min |

| Speaking in real context (Evaluation) | The teacher will read short paragraphs to his/her students. Students should listen and then say how they feel about the situation. For example: Pandemic, Animals in Danger of Extinction, The Premiere of a New Movie, etc. | 15 min |

| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback on and correct errors across the board. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 4: Nature | ||

|---|---|---|

| Objective: To identify nature-related vocabulary and use it in conversations. | ||

| Resources: teacher, students, nature audio, smartphone, Duolingo app (accessed on 5 April 2022) | ||

| Warm up | The teacher will play an audio of nature sounds. The teacher will ask the students to use the sounds to describe a place. Students who wish to do so will raise their hand and give a brief description of a place based on the sounds they heard. | 5 min |

| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Nature” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes the students for any questions or doubts. | 15 min |

| Speaking in real context (Evaluation) | The teacher will ask the students to record with their phones a video advertising a company that organizes camping trips. Students should mention in their videos the activities that can be performed at the camp. Students should send their videos to the teacher’s email address. | 15 min |

| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback on and correct errors across the board. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Week 4: Hobbies | ||

|---|---|---|

| Objective: To identify hobby-related vocabulary and use it in conversations. | ||

| Resources: teacher, students, fancy hat, smartphones, Duolingo app | ||

| Warm up | The teacher will bring a fancy hat to class. The teacher will sit at the front of the class and ask one of the students to be the interviewer and wear the fancy hat; the topic will be hobbies. Students can take turns wearing the hat and interviewing the teacher. | 5 min |

| Duolingo Time | The teacher asks the students to choose a space on the playground and work on the “Hobbies” session of the application. Students will complete the following activities: complete sentences and questions to make a short conversation, match words with their meaning, read short paragraphs and choose the correct answer, answer questions, listen to sentences and repeat them or write them down, and translate sentences from Spanish to English or from English to Spanish. All of these exercises are related to the theme. The students have 15 min to do so. At the end of the lesson, the students should show the teacher their crown, which signifies that they have successfully completed their session. While the students work on the application, the teacher observes the students for any questions or doubts. | 15 min |

| Speaking in real context (Evaluation) | This time, the teacher will put on the fancy hat and interview the students briefly. Students will choose to be a superhero like Wonderwoman or Aquaman, wear their masks and answer questions about their hobbies. | 15 min |

| Feedback | The teacher will take notes on errors as the activity unfolds. At the end of the speaking activity, the teacher will give general feedback on and correct errors across the board. As homework, the students will have to complete the same level of Duolingo at home. | 10 min |

| Intervention Proposal | Improve Speaking Skills in English Language Teaching Process through the Use of Duolingo App. |

|---|---|

| Name: | Where I Am? | ||

|---|---|---|---|

| I speak fluently, clearly and loudly. | |||

| I understand what my friends are talking about. | |||

| My friends understand when I say something. | |||

| I am not afraid to express my ideas out loud. | |||

| When I work in a group, we all listen to each other. | |||

| I am more confident to speak when I work in a group than when I work alone. | |||

| B2 | Grammar and Vocabulary | Discourse Management | Pronunciation | Interactive Communication |

|---|---|---|---|---|

| Performance shares features of Bands 3 and 5 | ||||

| Performance shares features of Bands 1 and 3 | ||||

| Performance below Band 1 | ||||


Share and Cite

Criollo-C, S.; Guerrero-Arias, A.; Vidal, J.; Jaramillo-Alcazar, Á.; Luján-Mora, S. A Hybrid Methodology to Improve Speaking Skills in English Language Learning Using Mobile Applications. Appl. Sci. 2022 , 12 , 9311. https://doi.org/10.3390/app12189311


Improving Students’ Speaking Skills through Task-Based Learning: An Action Research at the English Department

2020, International Journal of Multicultural and Multireligious Understanding

This study aims to improve students' speaking skills at the Department of English. Interviews carried out to gather initial data showed that the students had problems in speaking due to inadequate knowledge of the language, which in turn made them feel unconfident when speaking. The students were not familiar with the various speaking activities that could help them to speak. They read from a text to convey ideas and lacked strategies when speaking. To help the students, task-based learning was adapted through action research in one-semester courses. Fifteen third-semester students participated in this study. The data were taken from the pre-test and post-test results, interviews, and observation. The findings reveal that the use of task-based learning helps the students improve their speaking skills on the three indicators assessed: accuracy, vocabulary, and comprehension. The students manage to complete the tasks by conduct...



SYSTEMATIC REVIEW article

Assessing speaking proficiency: a narrative review of speaking assessment research within the argument-based validation framework.

- 1 Language Testing Research Centre, The University of Melbourne, Melbourne, VIC, Australia

- 2 Department of Linguistics, University of Illinois at Urbana-Champaign, Champaign, IL, United States

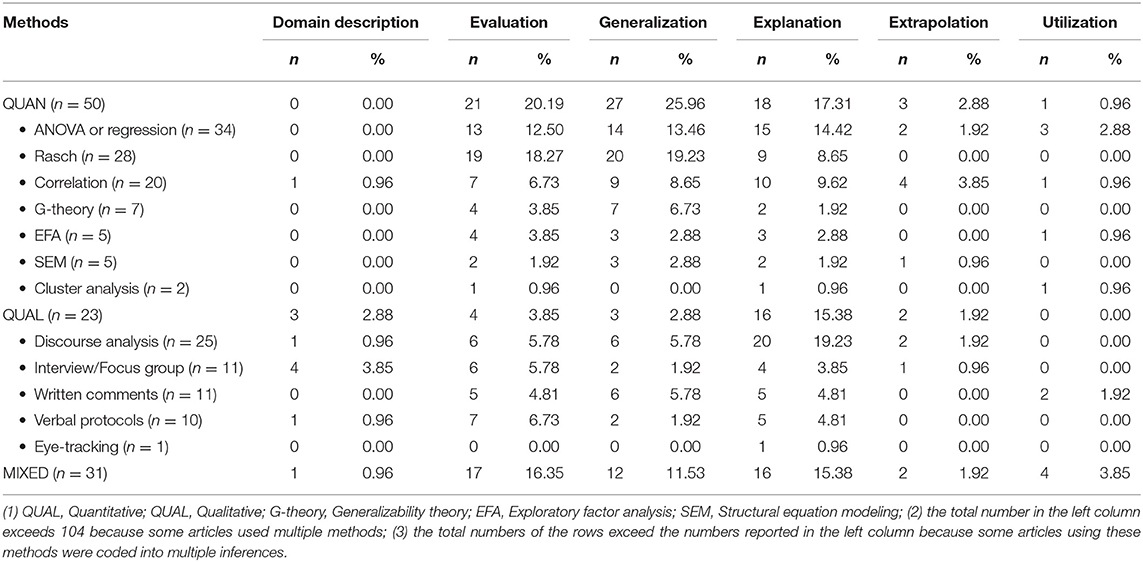

This paper provides a narrative review of empirical research on the assessment of speaking proficiency published in selected journals in the field of language assessment. A total of 104 published articles on speaking assessment were collected and systematically analyzed within an argument-based validation framework (Chapelle et al., 2008). We examined how the published research is represented in the six inferences of this framework, the topics that were covered by each article, and the research methods that were employed in collecting the backings to support the assumptions underlying each inference. Our analysis revealed that: (a) most of the collected articles could be categorized into the three inferences of evaluation, generalization, and explanation; (b) the topics most frequently explored by speaking assessment researchers included the constructs of speaking ability, rater effects, and factors that affect spoken performance, among others; (c) quantitative methods were more frequently employed to interrogate the inferences of evaluation and generalization whereas qualitative methods were more frequently utilized to investigate the explanation inference. The paper concludes with a discussion of the implications of this study in relation to gaining a more nuanced understanding of task- or domain-specific speaking abilities, understanding speaking assessment in classroom contexts, and strengthening the interfaces between speaking assessment and teaching and learning practices.

Introduction

Speaking is a crucial language skill which we use every day to communicate with others, to express our views, and to project our identity. In today's globalized world, speaking skills are recognized as essential for international mobility, entrance to higher education, and employment (Fulcher, 2015a; Isaacs, 2016), and are now a major component in most international and local language examinations, due at least in part to the rise of the communicative movement in language teaching and assessment (Fulcher, 2000). However, despite its primacy in language pedagogy and assessment, speaking has been considered an intangible construct which is challenging to conceptualize and assess in a reliable and valid manner. This could be attributable to the dynamic and context-embedded nature of speaking, but may also be due to the various forms that it can assume (e.g., monolog, paired conversation, group discussion) and the different conditions under which speaking happens (e.g., planned or spontaneous) (e.g., Luoma, 2004; Carter and McCarthy, 2017). When assessing speaking proficiency, multiple factors come into play which potentially affect test takers' performance and subsequently their test scores, including task features, interlocutor characteristics, rater effects, and rating scale, among others (McNamara, 1996; Fulcher, 2015a). In the field of language assessment, considerable research attention and effort have been dedicated to speaking assessment. This is evidenced by the increasing number of research papers with a focus on speaking assessment that have been published in the leading journals in the field.

This prolonged growth in speaking assessment research warrants a systematic review of major findings that can help subsequent researchers and practitioners to navigate the plethora of published research, or provide them with sound recommendations for future explorations in the speaking assessment domain. Several review or position papers are currently available on speaking assessment, either reviewing the developments in speaking assessment more broadly (e.g., Ginther, 2013; O'Sullivan, 2014; Isaacs, 2016) or examining a specific topic in speaking assessment, such as pronunciation (Isaacs, 2014), rating spoken performance (Winke, 2012) and interactional competence (Galaczi and Taylor, 2018). Needless to say, these papers are valuable in surveying related developments in speaking proficiency assessment and sketching a broad picture of speaking assessment for researchers and practitioners in the field. Nonetheless, they typically adopt the traditional literature review approach, as opposed to the narrative review approach that was employed in this study. According to Norris and Ortega (2006, p. 5, cited in Ellis, 2015, p. 285), a narrative review aims to “scope out and tell a story about the empirical territory.” Compared with a traditional literature review, which tends to rely on a reviewer's subjective evaluation of the important or critical aspects of the existing knowledge on a topic, a narrative review is more objective and systematic in the sense that the results are usually based on the coding analysis of studies that are collected by applying pre-specified criteria. Situated within the argument-based validation framework (Chapelle et al., 2008), this study is aimed at presenting a narrative review of empirical research on speaking assessment published in two leading journals in the field of language assessment, namely, Language Testing (LT) and Language Assessment Quarterly (LAQ). Following the systematic research procedures of narrative review (e.g., Cooper et al., 2019), we survey the topics of speaking assessment that have been explored by researchers as well as the research methods that have been utilized, with a view to providing recommendations for future speaking assessment research and practice.

Theoretical Framework

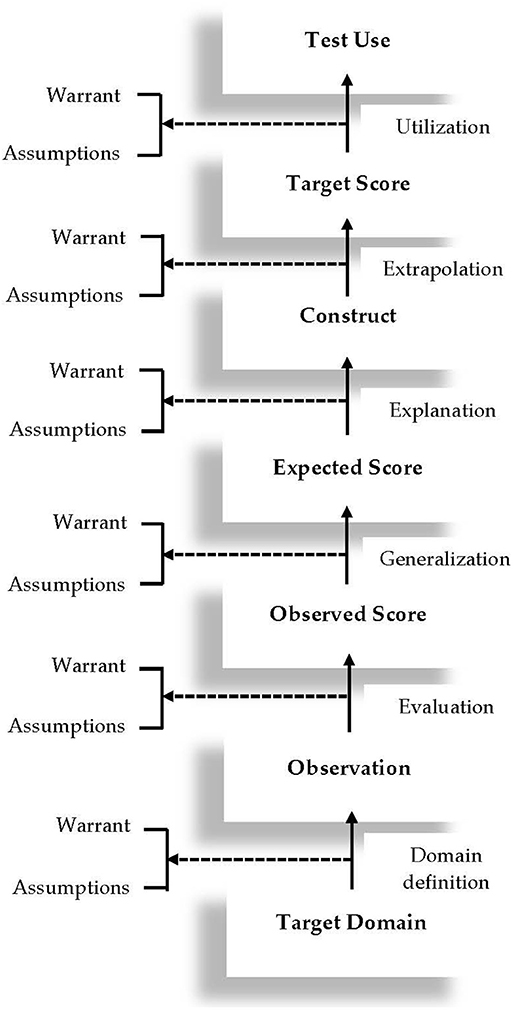

Emerging from the validation of the revised Test of English as a Foreign Language (TOEFL), the argument-based validation framework adopted in this study represents an expansion of Kane's (2006) argument-based validation model, which posits that a network of inferences needs to be verified to support test score interpretation and use. A graphic display of this framework is presented in Figure 1. As shown in this figure, the plausibility of six inferences needs to be verified to build a validity argument for a language test: domain definition, evaluation, generalization, explanation, extrapolation, and utilization. Also included in the framework are the key warrants that license each inference and its underlying assumptions. This framework was adopted as the guiding theoretical framework of this review study in the sense that each article collected for this study was classified into one or several of these six inferences in the framework. As such, it is necessary to briefly explain these inferences in Figure 1 in the context of speaking assessment. The explanation of the inferences, together with their warrants and assumptions, is largely based on Chapelle et al. (2008) and Knoch and Chapelle (2018). To facilitate readers' understanding of these inferences, we use the TOEFL speaking test as an example to provide an illustration of the warrants, key assumptions, and backings for each inference.

Figure 1. The argument-based validation framework (adapted from Chapelle et al., 2008, p. 18).

The first inference, domain definition, links the target language use (TLU) domain to test takers' observed performance on a speaking test. The warrant supporting this inference is that observation of test takers' performance on a speaking test reveals the speaking abilities and skills required in the TLU domain. In the case of the TOEFL speaking test, the TLU domain is the English-medium institutions of higher education. Therefore, the plausibility of this inference hinges on whether observation of test takers' performance on the speaking tasks reveals essential academic speaking abilities and skills in English-medium universities. An important assumption underlying this inference is that speaking tasks that are representative of language use in English-medium universities can be identified and simulated. Backings in support of this assumption can be collected through interviews with academic English experts to investigate speaking abilities and skills that are required in English-medium universities.

The warrant for the next inference, evaluation, is that test takers' performance on the speaking tasks is evaluated to provide observed scores which are indicative of their academic speaking abilities. The first key assumption underlying this warrant is that the rating scales for the TOEFL speaking test function as intended by the test provider. Backings for this assumption may include: (a) using statistical analyses (e.g., many-facets Rasch measurement, or MFRM) to investigate the functioning of the rating scales for the speaking test; and (b) using qualitative methods (e.g., raters' verbal protocols) to explore raters' use of the rating scales for the speaking test. Another assumption for this warrant is that raters provide consistent ratings on each task of the speaking test. Backing for this assumption typically entails the use of statistical analyses to examine rater reliability on each task of the speaking test. The third assumption is that detectable rater characteristics do not introduce systematic construct-irrelevant variance into their ratings of test takers' performance. Bias analyses are usually implemented to explore whether certain rater characteristics (e.g., experience, L1 background) interact with test taker characteristics (e.g., L1 background) in significant ways.

The third inference is generalization. The warrant that licenses this inference is that test takers' observed scores reflect their expected scores over multiple parallel versions of the speaking test and across different raters. The key assumptions that underlie this inference include: (a) a sufficient number of tasks are included in the TOEFL speaking test to provide stable estimates of test takers' speaking ability; (b) multiple parallel versions of the speaking test feature similar levels of difficulty and tap into similar academic English speaking constructs; and (c) raters rate test takers' performance consistently at the test level. To support the first assumption, generalizability theory (i.e., G-theory) analyses can be implemented to explore the number of tasks required to achieve the desired level of reliability. For the second assumption, backings can be collected through: (a) statistical analyses to ascertain whether multiple parallel versions of the speaking test have comparable difficulty levels; and (b) qualitative methods such as expert review to explore whether the parallel versions of the speaking test tap into similar academic English speaking constructs. Backing for the third assumption typically entails statistical analyses of the scores that raters have awarded to test takers to examine their reliability at the test level.
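To make the G-theory backing for the first assumption more concrete, the following is a minimal sketch of a decision-study calculation. It assumes a simplified single-facet design in which persons are crossed with tasks (raters are ignored), and it uses the standard relative generalizability coefficient for that design. The function names and the variance components are hypothetical and are not taken from any TOEFL study.

```python
def g_coefficient(var_person, var_residual, n_tasks):
    """Relative G coefficient for a crossed person x task design.

    var_person:   variance attributable to test takers (the object of measurement)
    var_residual: person-by-task interaction variance, confounded with error
    n_tasks:      number of tasks over which scores are averaged
    """
    if n_tasks < 1:
        raise ValueError("n_tasks must be at least 1")
    return var_person / (var_person + var_residual / n_tasks)


def min_tasks_for(target, var_person, var_residual, max_tasks=50):
    """Smallest number of tasks whose G coefficient reaches the target, or None."""
    for n_tasks in range(1, max_tasks + 1):
        if g_coefficient(var_person, var_residual, n_tasks) >= target:
            return n_tasks
    return None


# Hypothetical variance components, chosen only for illustration.
VAR_PERSON = 0.5
VAR_RESIDUAL = 0.9

if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(n, round(g_coefficient(VAR_PERSON, VAR_RESIDUAL, n), 3))
    print("tasks needed for 0.80:", min_tasks_for(0.80, VAR_PERSON, VAR_RESIDUAL))
```

In an operational study, the variance components would be estimated from scored responses, and the design would normally include a rater facet as well.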

The fourth inference is explanation. The warrant of this inference is that test takers' expected scores can be used to explain the academic English speaking constructs that the test purports to assess. The key assumptions for this inference include: (a) features of the spoken discourse produced by test takers on the TOEFL speaking test can effectively distinguish L2 speakers at different proficiency levels; (b) the rating scales are developed based on academic English speaking constructs that are clearly defined; and (c) raters' cognitive processes when rating test takers' spoken performance are aligned with relevant theoretical models of L2 speaking. Backings of these three assumptions can be collected through: (a) discourse analysis studies aiming to explore the linguistic features of spoken discourse that test takers produce on the speaking tasks; (b) expert review of the rating scales to ascertain whether they reflect relevant theoretical models of L2 speaking proficiency; and (c) rater verbal protocol studies to examine raters' cognitive processes when rating performance on the speaking test.

The fifth inference in the framework is extrapolation. The warrant that supports this inference is that the speaking constructs that are assessed in the speaking test account for test takers' spoken performance in English-medium universities. The first key assumption underlying this warrant is that test takers' performance on the TOEFL speaking test is related to their ability to use language in English-medium universities. Backing for this assumption is typically collected through correlation studies, that is, correlating test takers' performance on the speaking test with an external criterion representing their ability to use language in the TLU domains (e.g., teachers' evaluation of students' speaking proficiency of academic English). The second key assumption for extrapolation is that raters' use of the rating scales reflects how spoken performance is evaluated in English-medium universities. For this assumption, qualitative studies can be undertaken to compare raters' cognitive processes with those of linguistic laypersons in English-medium universities such as subject teachers.
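The correlation studies mentioned for the first extrapolation assumption can be sketched as follows. This is an illustrative example only: the function name and the score lists are hypothetical, and a real study would use observed test scores and criterion measures, with an appropriate significance test and sample size.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two lists of equal length with at least 2 scores")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical data: speaking test scores and teachers' ratings of the
# same students' academic speaking proficiency (the external criterion).
test_scores = [18, 22, 25, 20, 27, 23]
teacher_ratings = [3, 4, 5, 3, 5, 4]

if __name__ == "__main__":
    print(round(pearson_r(test_scores, teacher_ratings), 3))
```

A stronger positive coefficient would count as backing for the assumption that test performance is related to language use in the TLU domain.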

The last inference is utilization. The warrant supporting this inference is that the speaking test scores are communicated in appropriate ways and are useful for making decisions. The assumptions that underlie the warrant include: (a) the meaning of the TOEFL speaking test scores is clearly interpreted by relevant stakeholders, such as admissions officers, test takers, and teachers; (b) cut scores are appropriate for making relevant decisions about students; and (c) the TOEFL speaking test has a positive influence on English teaching and learning. To collect the backings for the first assumption, qualitative studies (e.g., interviews, focus groups) can be conducted to explore stakeholders' perceptions of how the speaking test scores are communicated. For the second assumption, standard setting studies are often implemented to interrogate the appropriateness of cut scores. The last assumption is usually investigated through test washback studies, exploring how the speaking test influences English teaching and learning practices.

The framework was used in the validation of the revised TOEFL, as reported in Chapelle et al. (2008), as well as in low-stakes classroom-based assessment contexts (e.g., Chapelle et al., 2015). According to Chapelle et al. (2010), this framework features several salient advantages over other alternatives. First, given the dynamic and context-mediated nature of language ability, it is extremely challenging to use the definition of a language construct as the basis for building the validity argument. Instead of relying on an explicit definition of the construct, the argument-based approach advocates the specification of a network of inferences, together with their supporting warrants and underlying assumptions, that link test takers' observed performances to score interpretation and use. This framework also makes it easier to formulate validation research plans. Since every assumption is associated with a specific inference, research questions targeting each assumption are developed “in a more principled way as a piece of an interpretative argument” (Chapelle et al., 2010, p. 8). As such, the relationship between validity argument and validation research becomes more apparent. Another advantage of this approach to test validation is that it enables the structuring and synthesis of research results into a logical and coherent validity argument, not merely an amalgamation of research evidence. By so doing, it depicts the logical progression of how the conclusion from one inference becomes the starting point of the next one, and how each inference is supported by research. Finally, by constructing a validity argument, this approach allows for a critical evaluation of the logical development of the validity argument as well as the research that supports each inference. In addition to the advantages mentioned above for test validation research, this framework is also very comprehensive, making it particularly suitable for this review study.

By incorporating this argument-based validation framework in a narrative review of the published research on speaking assessment, this study aims to address the following research questions:

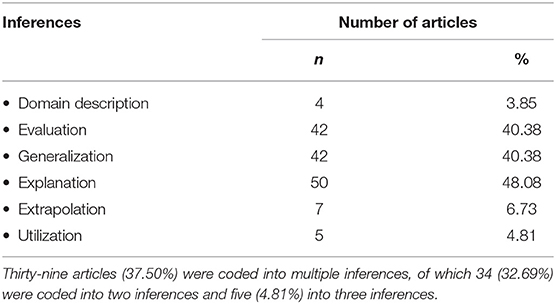

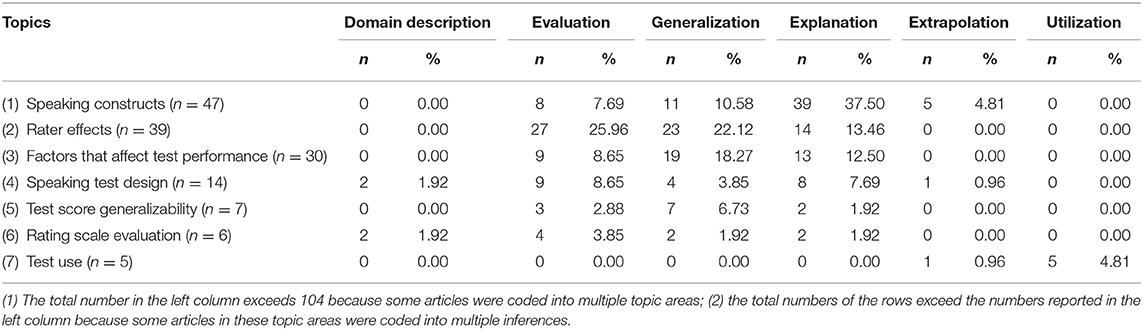

RQ1. How does the published research on speaking assessment represent the six inferences in the argument-based validation framework?

RQ2. What are the speaking assessment topics that constituted the focus of the published research?

RQ3. What methods did researchers adopt to collect backings for the assumptions involved in each inference?

This study followed the research synthesis steps recommended by Cooper et al. (2019) , including: (1) problem formation; (2) literature search; (3) data evaluation; (4) data analysis; (5) interpretation of results; and (6) public presentation. This section includes details regarding article search and selection, and methods for synthesizing our collected studies.

Article Search and Selection

We collected the articles on speaking assessment that were published in LT from 1984 to 2018 and LAQ from 2004 to 2018. These two journals were targeted because: (a) both are recognized as leading high-impact journals in the field of language assessment; and (b) both have an explicit focus on assessment of language abilities and skills. We understand that numerous other journals in the field of applied linguistics or educational evaluation also publish research on speaking and its assessment. Admittedly, if the scope of our review were extended to include more journals, the findings might be different; however, given the high impact of these two journals in the field, a review of their published research on speaking assessment in the past three decades or so should provide sufficient indication of the directions in assessing speaking proficiency. This limitation is discussed at the end of this paper.

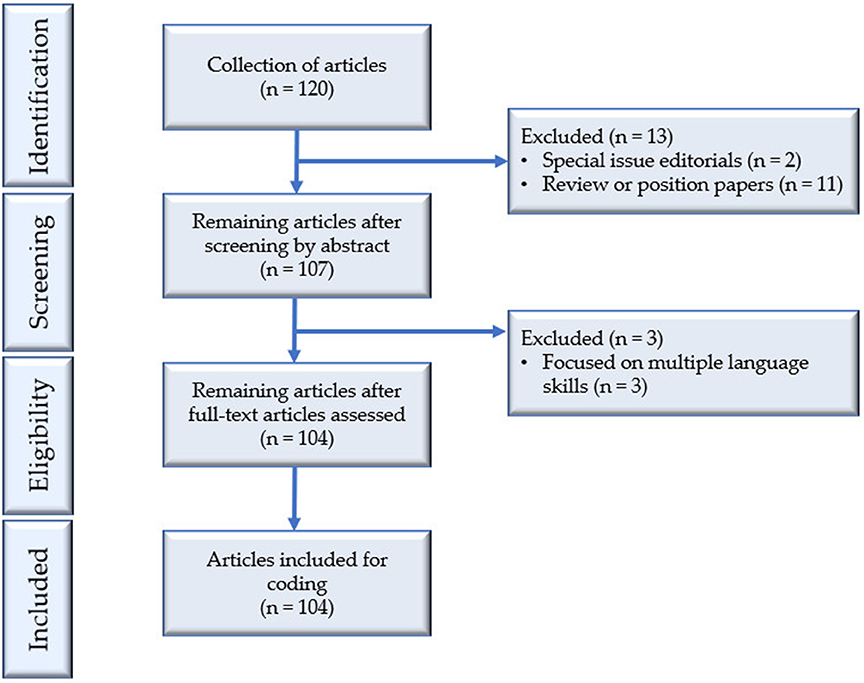

The PRISMA flowchart in Figure 2 illustrates the process of article search and selection in this study. A total of 120 articles were initially retrieved by manually surveying each issue in the electronic archives of the two journals, which contain all articles published in LT from 1984 to 2018 and LAQ from 2004 to 2018. Two inclusion criteria were applied: (a) the article had a clear focus on speaking assessment, so articles that targeted a whole language test involving multiple skills were not included; and (b) the article reported an empirical study, in the sense that it investigated one or more aspects of speaking assessment through the analysis of data from either speaking assessments or designed experimental studies.

Figure 2. PRISMA flowchart of article search and collection.

After a careful reading of the abstracts, 13 articles were excluded from our analysis: two special issue editorials and 11 review or position papers. A further examination of the remaining 107 articles revealed that three of them involved multiple language skills, suggesting a lack of primary focus on speaking assessment. These three articles were therefore excluded from our analysis, yielding 104 studies in our collection. Of the 104 articles, 73 (70.19%) were published in LT and 31 (29.81%) were published in LAQ. All these articles were downloaded in PDF format and imported into NVivo 12 (QSR, 2018) for analysis.
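The screening arithmetic reported above can be checked with a short script. The counts are those stated in the text; the variable names are ours and serve only as an illustration of how the figures fit together.

```python
# Counts as reported in the article search and selection procedure.
retrieved = 120
excluded_on_abstract = {
    "special issue editorials": 2,
    "review or position papers": 11,
}
excluded_multi_skill = 3

# Articles remaining after abstract screening, then after full examination.
after_abstract_screening = retrieved - sum(excluded_on_abstract.values())
included = after_abstract_screening - excluded_multi_skill

# Distribution of the included articles across the two journals.
per_journal = {"LT": 73, "LAQ": 31}
shares = {
    journal: round(100 * count / included, 2)
    for journal, count in per_journal.items()
}

if __name__ == "__main__":
    print(after_abstract_screening, included, shares)
```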

Data Analysis

To respond to RQ1, we coded the collected articles into the six inferences in the argument-based validation framework based on the focus of investigation for each article, which was determined by a close examination of the abstract and research questions. If the primary focus did not emerge clearly in this process, we read the full text. As the coding progressed, we noticed that some articles had more than one focus, and therefore should be coded into multiple inferences. For instance, Sawaki (2007) interrogated several aspects of an L2 speaking test that were considered essential to its construct validity, including the interrelationships between the different dimensions of spoken performance and the reliability of test scores. The former was considered pertinent to the explanation inference, as it explores the speaking constructs through the analysis of test scores; the latter, however, was deemed more relevant to the generalization inference, as it concerns the consistency of test scores at the whole test level (Knoch and Chapelle, 2018). Therefore, this article was coded into both the explanation and generalization inferences.
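Because one article can be coded into several inferences, the inference counts can sum to more than the number of articles. The sketch below illustrates this multi-label tally. It is not the NVivo procedure used in the study: only the coding of Sawaki (2007) comes from the text, and the other entries and all names are hypothetical placeholders.

```python
from collections import Counter

INFERENCES = (
    "domain definition",
    "evaluation",
    "generalization",
    "explanation",
    "extrapolation",
    "utilization",
)

# Each article maps to the set of inferences it was coded into.
coded_articles = {
    "Sawaki (2007)": {"explanation", "generalization"},  # as described in the text
    "Hypothetical study A": {"evaluation"},  # placeholder entry
    "Hypothetical study B": {"evaluation", "generalization"},  # placeholder entry
}


def tally_inferences(coding):
    """Count how many articles were coded into each of the six inferences."""
    counts = Counter({name: 0 for name in INFERENCES})
    for article, labels in coding.items():
        unknown = set(labels) - set(INFERENCES)
        if unknown:
            raise ValueError(f"{article}: unknown inference(s) {sorted(unknown)}")
        counts.update(labels)
    return counts


if __name__ == "__main__":
    for name, count in tally_inferences(coded_articles).items():
        print(f"{name}: {count}")
```

With these three placeholder entries the tally sums to five codes, which shows why per-inference percentages in a review of this kind need not add up to 100%.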