IMRAD Format For Research Papers: The Complete Guide

Thank you for reading DrAiMD’s Substack. This post is public so feel free to share it.

Writing a strong research paper is key to succeeding in academia, but it can be hard to know where to start. That’s where the IMRAD format comes in: it gives you a clear structure for organizing and presenting your research logically. In this guide, I’ll explain what IMRAD is, why it matters for research writing, and how to use it, walking through each section step by step: what it should contain, how to write it, and what to avoid. By the end, you’ll be able to write a research paper that is clear, concise, and well-organized.

What is IMRAD Format?

IMRAD stands for Introduction, Methods, Results, and Discussion. It’s a way of organizing a scientific paper to make the information flow logically and help readers easily find key details. The IMRAD structure originated in medical journals but is now the standard format for many scientific fields.


Here’s a quick overview of each section’s purpose:

Introduction: Summary of prior research and objective of your study

Methods: How you carried out the study

Results: Key findings and analysis

Discussion: Interpretation of results and implications

Most papers also include an abstract at the beginning and a conclusion at the end to summarize the entire report.
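To make the section order concrete, here is a minimal Python sketch of a hypothetical helper (not an established tool) that checks whether a manuscript’s headings contain the four core IMRAD sections in the expected order. It assumes the headings have already been extracted as plain strings, and it treats the abstract and conclusion as optional.

```python
# Core IMRAD sections in their conventional order. Abstract and Conclusion
# are allowed but not required, so they are not checked here.
IMRAD_ORDER = ["introduction", "methods", "results", "discussion"]


def check_imrad_outline(headings: list[str]) -> list[str]:
    """Report missing or out-of-order IMRAD sections in a list of headings.

    Matching is exact after trimming and lowercasing, so variants such as
    "Materials and Methods" or a combined "Results and Discussion" section
    are not recognized by this simple sketch.
    """
    normalized = [h.strip().lower() for h in headings]
    problems: list[str] = []
    positions: list[int] = []
    for section in IMRAD_ORDER:
        if section in normalized:
            positions.append(normalized.index(section))
        else:
            problems.append(f"missing section: {section}")
    # The sections that are present should appear in IMRAD order.
    if positions != sorted(positions):
        problems.append("sections are out of IMRAD order")
    return problems


if __name__ == "__main__":
    good = ["Abstract", "Introduction", "Methods", "Results", "Discussion", "Conclusion"]
    bad = ["Introduction", "Results", "Methods"]
    print(check_imrad_outline(good))
    print(check_imrad_outline(bad))
```

An empty list means the outline follows IMRAD. Papers that legitimately merge results and discussion into one section would need a looser matching rule than this sketch uses.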

Why is the IMRAD Format Important?

Using the IMRAD structure has several key advantages:

It’s conventional and familiar. Since IMRAD is so widely used, it helps ensure editors, reviewers, and readers can easily find the details they need. This enhances clarity and comprehension.

It emphasizes scientific rigor. The methods and results sections encourage thorough reporting of how you conducted the research. This supports transparency, credibility, and reproducibility.

It encourages precision. The structure necessitates concise writing focused only on the core aims and findings. This avoids rambling or repetition.

It enables efficient reading. Readers can quickly skim to the sections most relevant to them, such as reading only the methods. IMRAD facilitates this selective reading.

In short, the IMRAD format ensures your writing is clear, precise, rigorous, and accessible – crucial qualities in scientific communication.

When Should You Use IMRAD Format?

The IMRAD structure is ideal for:

Primary research papers that report new data and findings

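Systematic reviews and meta-analyses, which commonly adapt the IMRAD structure to summarize prior research

Grant proposals, which often borrow IMRAD-like elements (background, aims, methods) for research that has not yet been carried out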

IMRAD is not typically used for other paper types like:

Editorials and opinion pieces

Popular science articles for general audiences

Essays analyzing a topic rather than presenting new data

So, if you are writing a scholarly scientific paper based on experiments, investigations, or observational studies, the IMRAD format is likely expected. Embrace this conventional structure to help communicate your exciting discoveries.

Now that we’ve covered the basics, let’s dive into how to write each section of an IMRAD paper.

ABSTRACT

The abstract is a succinct summary of your entire paper, typically around 200 words. Many readers will only read the abstract, so craft it carefully to function as a standalone piece highlighting your most important points.

Elements to include:

Research problem, question, or objectives

Methods and design

Major findings or developments

Conclusions and implications

Even if you draft it first, revise the abstract last so that it accurately reflects your final paper. A clear, precise abstract can attract readers and set the tone for your work.

INTRODUCTION

The Introduction provides the necessary background and sets up the rationale for your research. Start by briefly summarizing the core findings of previous studies on your topic to orient readers to the field. Then describe in more detail the specific gaps, inconsistencies, or unanswered questions your study aims to address. Finally, clearly state your research questions, objectives, hypotheses, and the overall purpose or anticipated contribution of the study. The Introduction establishes why your research is needed and clarifies your specific aims, so strive for a concise yet comprehensive overview. Writing a good introduction is much like writing a short literature review on the subject.

METHODS

The methods section is the nuts and bolts of the paper, where you describe in full how you carried out the research. Sufficient detail is crucial so that others can assess your work and reproduce the study.

Research Design

Start by explaining the overall design and approach. Specify:

Research type, such as experimental, survey, or observational

Study duration

Sample size

Control vs experimental groups

Clarify the variables, treatments, and factors involved.

Participants

Provide relevant characteristics of the study population or sample, such as:

Health status

Geographic location

For human studies, include recruitment strategies and consent procedures.

List any instruments, tests, assays, chemicals, or other materials utilized. Include details like manufacturers and catalog numbers.

Chronologically explain each step of the experimental methods. Be precise and thorough to enable replication. Use past tense and passive voice.

Data Analysis

Describe any statistical tests, data processing, or software used to analyze the data.

The methods section provides the roadmap of your research journey. Strive for clarity and completeness. Now we’re ready for the fun part – the results!

RESULTS

This section shares the key findings and data from your study without interpretation. The results should mirror the methods used.

Report Findings Concisely

Use text, figures, and tables to present the core results:

Focus only on key data directly related to your objectives

Avoid lengthy explanations and extraneous details

Highlight the most important findings

Use Visuals to Present Complex Data

Tables and figures efficiently communicate more complex data:

Tables organize detailed numerical or textual data

Figures (graphs, diagrams, photos) depict relationships and patterns visually

Include clear captions explaining what is shown

Refer to each visual in the text

Reporting your results objectively lays the groundwork for the next section – making sense of it all through discussion.

DISCUSSION

Here, you interpret the data, explain the implications, acknowledge limitations, and make recommendations for future research. The discussion allows you to show the greater meaning of your study.

Interpret the Findings

Analyze the results in the context of your initial hypothesis and prior studies:

How do your findings compare to past research? Are they consistent or contradictory?

What conclusions can you draw from the data?

What theories or mechanisms could explain the outcomes?

Discuss the Implications

Address the impact and applications of the research:

How do the findings advance scientific understanding or technical capability?

Can the results improve processes, design, or policies in related fields?

What innovations or new research directions do they enable?

Identify Limitations and Future Directions

No study is perfect, so discuss potential weaknesses and areas for improvement:

Were there any methodological limitations that could influence the results?

Can the research be expanded by testing new variables or conditions?

How could future studies build on your work? What questions remain unanswered?

A thoughtful discussion emphasizes the meaningful contributions of your research.

CONCLUSION

The conclusion recaps the significance of your study and its key takeaways. As with the abstract, many readers read only your opening and closing sections, so make sure the conclusion packs a punch.

Elements to cover:

Restate the research problem and objectives

Summarize the major findings and main points

Emphasize broader implications and applications

The conclusion provides the perfect opportunity to drive home the importance of your work. End on a high note that resonates with readers.

The IMRAD format organizes research papers into logical sections that improve scientific communication. By following the Introduction-Methods-Results-and-Discussion structure, you can craft clear, credible, and impactful manuscripts. Use IMRAD to empower readers to comprehend and assess your exciting discoveries efficiently. With this gold-standard format under your belt, your next great paper is within reach.


IMRAD (Introduction, Methods, Results and Discussion)

Academic research papers in STEM disciplines typically follow a well-defined I-M-R-A-D structure: Introduction, Methods, Results And Discussion (Wu, 2011). Although not included in the IMRAD name, these papers often include a Conclusion.

Introduction

The Introduction typically provides everything your reader needs to know in order to understand the scope and purpose of your research. This section should provide:

- Context for your research (for example, the nature and scope of your topic)

- A summary of how relevant scholars have approached your research topic to date, and a description of how your research makes a contribution to the scholarly conversation

- An argument or hypothesis that relates to the scholarly conversation

- A brief explanation of your methodological approach and a justification for this approach (in other words, a brief discussion of how you gather your data and why this is an appropriate choice for your contribution)

- The main conclusions of your paper (or the “so what”)

- A roadmap, or a brief description of how the rest of your paper proceeds

Methods

The Methods section describes exactly what you did to gather the data that you use in your paper. This should expand on the brief methodology discussion in the introduction and provide readers with enough detail to, if necessary, reproduce your experiment, design, or method for obtaining data; it should also help readers to anticipate your results. The more specific, the better! These details might include:

- An overview of the methodology at the beginning of the section

- A chronological description of what you did in the order you did it

- Descriptions of the materials used, the time taken, and the precise step-by-step process you followed

- An explanation of software used for statistical calculations (if necessary)

- Justifications for any choices or decisions made when designing your methods

Because the methods section describes what was done to gather data, there are two things to consider when writing. First, this section is usually written in the past tense (for example, we poured 250 mL of distilled water into the 1000 mL glass beaker). Second, this section should be written not as a set of instructions or commands but as descriptions of actions taken. This usually involves writing in the active voice (for example, we poured 250 mL of distilled water into the 1000 mL glass beaker), but some readers prefer the passive voice (for example, 250 mL of distilled water was poured into the 1000 mL beaker). It’s important to consider the audience when making this choice, so be sure to ask your instructor which they prefer.

Results

The Results section outlines the data gathered through the methods described above and explains what the data show. This usually involves a combination of tables and/or figures and prose. In other words, the results section gives your reader context for interpreting the data. The results section usually includes:

- A presentation of the data obtained through the means described in the methods section in the form of tables and/or figures

- Statements that summarize or explain what the data show

- Highlights of the most important results

Tables should be as succinct as possible, including only vital information (often summarized) and figures should be easy to interpret and be visually engaging. When adding your written explanation to accompany these visual aids, try to refer your readers to these in such a way that they provide an additional descriptive element, rather than simply telling people to look at them. This can be especially helpful for readers who find it hard to see patterns in data.

Discussion

The Discussion section explains why the results described in the previous section are meaningful in relation to previous scholarly work and the specific research question your paper explores. This section usually includes:

- Engagement with sources that are relevant to your work (you should compare and contrast your results to those of similar researchers)

- An explanation of the results that you found, and why these results are important and/or interesting

Some papers have separate Results and Discussion sections, while others combine them into one section, Results and Discussion. There are benefits to both. By presenting these as separate sections, you’re able to discuss all of your results before moving onto the implications. By presenting these as one section, you’re able to discuss specific results and move onto their significance before introducing another set of results.

Conclusion

The Conclusion section of a paper should include a brief summary of the main ideas or key takeaways of the paper and their implications for future research. This section usually includes:

- A brief overview of the main claims and/or key ideas put forth in the paper

- A brief discussion of potential limitations of the study (if relevant)

- Some suggestions for future research (these should be clearly related to the content of your paper)


Wu, Jianguo. “Improving the writing of research papers: IMRAD and beyond.” Landscape Ecology 26, no. 10 (November 2011): 1345–1349. http://dx.doi.org/10.1007/s10980-011-9674-3.

Further reading:

- Organization of a Research Paper: The IMRAD Format by P. K. Ramachandran Nair and Vimala D. Nair

- George Mason University Writing Centre’s guide on Writing a Scientific Research Report (IMRAD)

- University of Wisconsin Writing Centre’s guide on Formatting Science Reports

IMRaD Paper Example: A Guide to Understand Scientific Writing

Learn how to structure an IMRaD paper, explore an IMRaD paper example, and master the art of scientific writing.

Welcome to our guide on IMRaD papers, an essential format for scientific writing. In this article, we will explore what an IMRaD paper is, discuss its structure, and provide an IMRaD paper example to help you understand how to effectively organize and present your scientific research. Whether you are a student, researcher, or aspiring scientist, mastering the IMRaD format will enhance your ability to communicate your findings clearly and concisely.

What Is An IMRaD Paper?

IMRaD stands for Introduction, Methods, Results, and Discussion. It is a widely used format for structuring scientific research papers. Following the IMRaD paper example below, you will see that the IMRaD format provides a logical flow of information, allowing readers to understand the context, methods, results, and interpretation of the study in a systematic manner.

The IMRaD structure follows the scientific method, where researchers propose a hypothesis, design and conduct experiments, analyze data, and draw conclusions. By adhering to the IMRaD format, researchers can present their work in a standardized way, enabling effective communication and facilitating the dissemination of scientific knowledge.

Structure Of An IMRaD Paper

- Introduction: The introduction section provides an overview of the research topic, presents the research question or hypothesis, and outlines the significance and rationale of the study. It should provide background information and a literature review, and clearly state the objectives and aims of the research.

- Methods: The methods section describes the experimental design, materials, and procedures used in the study. It should provide sufficient detail to allow other researchers to replicate the study. This section should include information on the sample or participants, data collection methods, measurements, and statistical analysis techniques employed.

- Results: The results section presents the findings of the study in a clear and concise manner. It should focus on reporting the empirical data obtained from the experiments or analyses conducted. Results are typically presented through tables, figures, or graphs and should be accompanied by relevant statistical analyses. Avoid interpretation or discussion of the results in this section.

- Discussion: The discussion section interprets the results, relates them to the research question or hypothesis, and places them within the context of existing knowledge. It provides an analysis of the findings, discusses their implications, and addresses any limitations or weaknesses of the study. The discussion section may also highlight areas for future research or propose alternative explanations for the results.

Follow This IMRaD Paper Example

“The Effect of Exercise on Cognitive Function in Older Adults”

Introduction

The introduction would begin with an overview of the importance of cognitive function in aging populations. It would discuss the prevalence of cognitive decline and its impact on quality of life, and highlight the potential role of exercise in maintaining cognitive health and improving cognitive function. It would then present relevant theories or previous studies supporting the hypothesis that regular exercise can positively affect cognitive function in older adults. Finally, it would clearly state the research question: “Does regular exercise improve cognitive function in older adults?”

Methods

The methods section would describe in detail the study design, participant recruitment process, and intervention. It would specify the inclusion and exclusion criteria for participants, such as age range and health status, and outline the cognitive assessments used to measure cognitive function, including information on their reliability and validity. It would describe the exercise program in detail, including the type, duration, frequency, and intensity of the sessions, and explain any control group or comparison conditions. Ethical considerations, such as obtaining informed consent and maintaining participant confidentiality, would also be addressed in this section.

Results

The results section would present the findings of the study in a clear and organized manner. It would report statistical analyses of the collected data, such as t-tests or ANOVA, to determine whether any observed effects are statistically significant. The results would be presented in tables, figures, or graphs to allow easy interpretation and comparison. The section would summarize the main findings on the effect of exercise on cognitive function, including any statistically significant improvements observed.
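As a concrete illustration of the kind of statistical test mentioned above, here is a minimal Python sketch comparing two groups. It uses Welch’s t-test (a two-sample t-test that does not assume equal variances, chosen here only for illustration) and entirely hypothetical cognitive test scores; the function name `welch_t` and the data are assumptions, not part of the example study.

```python
import math
import statistics


def welch_t(sample_a: list[float], sample_b: list[float]) -> tuple[float, float]:
    """Return Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    mean_a, mean_b = statistics.mean(sample_a), statistics.mean(sample_b)
    # Squared standard error of each group mean (sample variance / n).
    se2_a = statistics.variance(sample_a) / len(sample_a)
    se2_b = statistics.variance(sample_b) / len(sample_b)
    t = (mean_a - mean_b) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(sample_a) - 1) + se2_b ** 2 / (len(sample_b) - 1)
    )
    return t, df


# Hypothetical post-intervention cognitive test scores (illustrative only).
exercise_group = [28, 30, 27, 29, 31]
control_group = [26, 27, 25, 28, 24]

if __name__ == "__main__":
    t, df = welch_t(exercise_group, control_group)
    print(f"t({df:.1f}) = {t:.2f}")
```

For these made-up scores the sketch prints `t(8.0) = 3.00`. A real analysis would obtain the p-value from the t distribution, typically with a statistics package, and report it alongside t and the degrees of freedom.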

Discussion

The discussion section would interpret the results in light of the research question and relevant literature. It would discuss the implications of the findings, considering both the strengths and limitations of the study. Any unexpected or contradictory results would be addressed, and potential explanations or alternative interpretations would be explored. The section would also highlight the theoretical and practical implications of the study’s findings, such as the potential for exercise interventions to be implemented in geriatric care settings. Finally, the discussion would conclude with suggestions for future research directions, such as investigating the long-term effects of exercise on cognitive function or examining the impact of different exercise modalities on specific cognitive domains.

Clear Communication Of Scientific Research

An IMRaD paper follows a standardized structure that enables clear communication of scientific research. By understanding the purpose and content of each section (introduction, methods, results, and discussion), you can effectively organize and present your own research findings. Remember that the example provided is a simplified representation; actual IMRaD papers vary in length and complexity depending on the study and the specific journal requirements.

Your Creations, Ready Within Minutes

Mind the Graph is an online platform that provides scientists and researchers with an easy-to-use tool to create visually appealing scientific presentations, posters, and graphical abstracts. It offers a wide range of templates, pre-designed icons, and illustrations that researchers can use to create stunning visuals that effectively communicate their research findings.


Research Paper Basics: IMRaD


What is IMRaD?

IMRaD is an acronym for Introduction, Methods, Results, and Discussion. It describes the format for the sections of a research report. The IMRaD (or IMRD) format is often used in the social sciences, as well as in the STEM fields.

Credit: IMRD: The Parts of a Research Paper by Wordvice Editing Service on YouTube

Outline of Scholarly Writing

With some variation among the different disciplines, most scholarly articles of original research follow the IMRD model, which consists of the following components:

Introduction

- Statement of Problem (i.e. "the Gap")

- Plan to Solve the Problem

Method & Results

- How Research was Done

- What Answers were Found

Discussion

- Interpretation of Results (What Does It Mean?)

- Implications for the Field

This form is most obvious in scientific studies, where the methods are clearly defined and described, and data is often presented in tables or graphs for analysis.

In other fields, such as history, the method and results may be embedded in a narrative, perhaps describing and interpreting events from archival sources. In this case, the method is the selection of archival sources and how they were interpreted, while the results are the interpretation and resultant story.

In full-length books, you might see this general pattern followed over the entire book, within each chapter, or both.

Credit: Howard-Tilton Memorial Library at Tulane University. This work is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License .

IMRAD Format

- Writing Center | George Mason University

- IMRAD Outlining | Excelsior College

- Florida Atlantic University Libraries


- Last Updated: Apr 18, 2024 9:25 PM

- URL: https://libguides.ccga.edu/researchbasics

Gould Memorial Library College of Coastal Georgia One College Drive Brunswick, GA 31520 (912) 279-5874 Library Hours Camden Center Library College of Coastal Georgia 8001 Lakes Blvd / Wildcat Blvd Kingsland, GA 31548 (912) 510-3332 Library Hours

- Research article

- Open access

- Published: 21 July 2011

The introduction, methods, results and discussion (IMRAD) structure: a Survey of its use in different authoring partnerships in a students' journal

Loraine Oriokot, William Buwembo, Ian G Munabi & Stephen C Kijjambu

BMC Research Notes volume 4, Article number: 250 (2011)


Globally, the role of universities as providers of research education, in addition to leading in mainstream research, is gaining importance with the growing demand for evidence-based practice. This paper describes the effect of different student and faculty authoring partnerships on the use of the IMRAD style of writing in a university student journal.

This was an audit of the Makerere University Students' Journal publications over an 18-year period. The authors' affiliations, year of publication, composition of the authoring teams and use of IMRAD formatting were recorded. Results were summarised as frequencies, with effect sizes calculated from correlations and non-parametric tests. There were 209 articles, the earliest from 1990 and the latest from 2007; 48.3% were authored by faculty-only teams, 41.1% by student-only teams and 6.2% by student-faculty teams, while 4.3% had no contribution from any of these teams. There were significant correlations between the different teams and the year of publication (rₛ = -0.338, p < 0.01, one-tailed). Use of IMRAD formatting was significantly affected by the composition of the teams (χ²(2) = 25.621, p < 0.01), especially when comparing student-only teams to faculty-only teams (U = 3165, r = -0.289). There was a significant trend towards student-only teams over the years sampled (z = -4.764, r = -0.34).

Conclusions

In the surveyed publications, there was evidence of reduced faculty-student authoring partnerships, shown by the trend towards student-only authoring teams and by reduced use of IMRAD formatting in articles published in the students' journal. Since the university is expected to lead in the teaching of research, there is a need for increased support for undergraduate research as a starting point for research education.

Globally, there is increasing awareness of the importance of research for developing guidelines to direct social and economic interventions [1, 2]. Research involves the critical analysis of candidate solutions to a problem, using the scientific method to identify the best evidence-based solution for action at the time. Research is thus the foundation of evidence-based practice [3, 4]. Society expects universities to lead in both teaching and carrying out research, an expectation that has led to various policy recommendations and initiatives to promote research and innovation. In the United States of America, for example, Gonzalez (2001) identifies the 1998 Boyer Commission report, which encouraged universities to place more emphasis on undergraduate research experiences [5]. According to Laskowitz et al. (2010), Stanford and Duke Universities have run undergraduate research programmes for the last 40 years that instil in students an appreciation for rigorous research in academic medicine [6]. In Australia, students picked up life skills such as time management, provided they dealt with authentic science and had good supervision [7]. In Africa, the demand for high-quality research at the undergraduate level of education is yet to be met [8].

Research and innovation are critical for national social and economic development [2]. In response to the drive for economic development, universities are redefining their roles and interactions with society, moving from being traditional storehouses of knowledge to becoming interactive knowledge hubs [9]. One way to ensure that universities actually act as knowledge hubs is to promote institutional visibility by encouraging students and faculty to publish research using internationally recognised scientific writing formats such as Introduction, Methods, Results and Discussion (IMRAD) [5, 9, 10]. In addition to visibility, adopting high-quality international standards benefits the university by creating a pool of individuals who are conversant with scientific writing. Such a pool supports Gonzalez's (2001) recognition that research takes place anywhere, and that the "teaching of research is a role that is increasingly becoming the preserve of the university" [5]. Gonzalez (2001) further argues that undergraduate research is actually the beginning of a "five stage continuum of research education that ends with a post-doctoral experience" [5]. Research education promotes uniform conduct and interpretation of, and response to, research findings reported in familiar standard formats of scientific writing. Finally, according to Aravamudhan and Frantsve (2009), research education and the adoption of uniform formats of scientific writing promote evidence-based practice by improving information awareness, information seeking and the eventual application of new practices [3]. The rapid increase in the volume of highly advanced knowledge and equally rapid changes in the working environment make it increasingly important to equip students with key research skills, such as scientific writing, so that they can keep abreast [3, 4].

This paper looks at work done on the Makerere Medical Journal (MMJ), one of the students' journals at Makerere University. MMJ is run for and by the health professional student body at the former Faculty of Medicine (FoM), which together with the School of Public Health became Makerere University College of Health Sciences (MakCHS) in 2008 [11–13], one of the colleges of Makerere University (one of the oldest universities in Sub-Saharan Africa). With the University's vision to become a leader in research in Africa, there is high demand for research and scientific writing, currently focused on graduate research [14]. To our knowledge, the effect of student-faculty partnerships on undergraduate scientific writing is not well documented. This paper describes the role of student-faculty partnerships in determining the formatting of MMJ articles over an 18-year period (1990-2007) of the journal's existence.

This was a retrospective audit of the MMJ, a publication of the health professional student body. The MMJ is a peer-reviewed publication that provides a platform for students to share and exchange medical knowledge; develop writing and analytical abilities; promote awareness of students' contributions to health care; provide continuing medical education; and foster valuable leadership and editorial skills. MMJ is published biannually and has existed since the early 1960s. The journal publishes original articles, reviews, reports, letters to the editor and case reports, and includes sections such as educational quizzes and crossword puzzles.

A hand search for complete journal volumes was made in various sources, including the Sir Albert Cook Library (the main MakCHS library), personal collections and the journal editorial teams' files. For each article found, the following information was captured: the authors and their affiliations, use of the IMRAD format, the composition of the authoring team and the year of publication. The data were analysed using the Statistical Package for the Social Sciences (SPSS Inc., version 12.0 for Windows, Chicago, Illinois), with odds ratios and trend analysis calculated using the online OpenEpi programme version 2.3.1 http://www.openepi.com [15]. The results were summarised as frequencies and presented in bar graphs and tables, with calculation of odds ratios, effect sizes and trend analysis. Additional inferences were made using Spearman's correlations and non-parametric tests, with the level of significance set at P < 0.05.

Permission to use the data for this study was obtained from the editorial team for the journal. None of the authors' identification details were used during the analysis and the preparation of the paper.

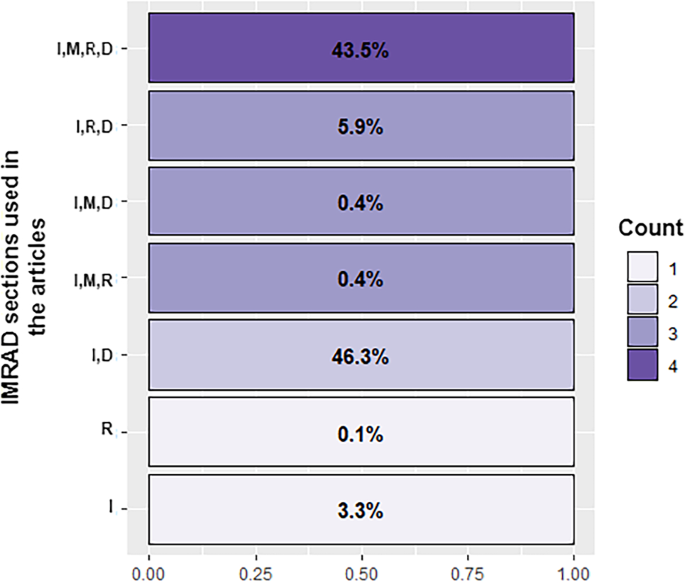

Two hundred and nine (209) journal articles were found during the survey, drawn from 13 volumes of the journal; the earliest was published in 1990 and the most recent in 2007. Of the 209 articles, 101/209 (48.3%) were authored by faculty-only teams, 86/209 (41.1%) by student-only teams and 13/209 (6.2%) by mixed student-faculty teams, while 9/209 (4.3%) gave no affiliation and could not be assigned to any of these teams. Examination of the paper formatting revealed that only 70/209 (33.5%) of the papers were written using the IMRAD format. The number of articles found per year is summarised in Table 1, with the highest number (33) in 2007 and the lowest (5) in 1990. There was no significant change in the odds of IMRAD use over the years (Mantel-Haenszel chi-square for trend = 1.71, p = 0.1906). There were significant correlations between team composition and year of publication (r_s = -0.338, p < 0.01, one-tailed) and between team composition and use of IMRAD formatting (r_s = -0.265, p < 0.01, one-tailed).
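The percentages above can be re-derived from the reported counts, which is a quick way to check a results paragraph before submission. The sketch below does that and also converts the trend statistic into a p-value, assuming (as is standard for a chi-square test for linear trend) that it has 1 degree of freedom; for 1 df the upper-tail probability equals `erfc(sqrt(x / 2))`. The helper names are our own.

```python
import math

TOTAL = 209
# Article counts by authoring team, as reported in the Results.
teams = {
    "faculty only": 101,
    "student only": 86,
    "student-faculty": 13,
    "no affiliation": 9,
}
IMRAD_COUNT = 70


def pct(n, total=TOTAL):
    """Percentage rounded to one decimal place."""
    return round(100 * n / total, 1)


def chi2_1df_p(stat):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(stat / 2))


assert sum(teams.values()) == TOTAL  # the four groups account for every article
for name, n in teams.items():
    print(f"{name}: {n}/{TOTAL} = {pct(n)}%")
print(f"IMRAD: {IMRAD_COUNT}/{TOTAL} = {pct(IMRAD_COUNT)}%")
print(f"p for chi-square(1) = 1.71: {chi2_1df_p(1.71):.4f}")
```

The computed p-value is about 0.191, which agrees with the reported 0.1906 once the rounding of the statistic to 1.71 is taken into account.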

Use of IMRAD formatting was significantly affected by team composition (Kruskal-Wallis test, χ²(2) = 25.621, p < 0.001). Post hoc pairwise Mann-Whitney tests, with the level of significance set at 0.025, showed no significant difference in IMRAD use between mixed student-faculty and faculty-only teams (U = 444, r = -0.21), but a significant difference between student-only and faculty-only teams (U = 3165, r = -0.289). Jonckheere's test revealed no trend in the use of IMRAD over the years sampled (J = 10100, z = 0.211, r = 0.086). However, there was a significant trend towards more student-only teams over the years sampled (J = 6802, z = -4.764, r = -0.34).
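The paper reports Mann-Whitney U values alongside effect sizes r but does not say how r was obtained. A common convention for nonparametric tests is r = z / sqrt(N), where z comes from the normal approximation to U; the sketch below assumes that convention. Because IMRAD use is a yes/no outcome (coded here as 1/0, an assumption), almost every value is tied, so the variance of U includes the standard tie correction. The data in the test are hypothetical.

```python
import math


def rank_with_ties(values):
    """1-based ranks, giving tied values the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney(x, y):
    """Return (U, z, r): smaller U, tie-corrected z, and effect size r = z / sqrt(N)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    combined = list(x) + list(y)
    ranks = rank_with_ties(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u = min(u1, n1 * n2 - u1)
    # Tie correction: subtract sum(t^3 - t) over groups of tied values.
    counts = {}
    for v in combined:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    z = 0.0 if var_u <= 0 else (u - n1 * n2 / 2) / math.sqrt(var_u)
    return u, z, z / math.sqrt(n)
```

Taking the smaller U makes z (and hence r) non-positive, which matches the negative r values reported above.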

The analysis shows an increase over time in the number of student-only teams submitting articles to the journal. This is also reflected in the total number of articles, which was highest (33) in the 2007 journal. The increased interest in publication could be the result of a more active editorial team or represent growing interest among students in the value of research. The growth in undergraduate interest in research is consistent with the global trend towards treating undergraduate research as the beginning of research education [ 5 ]. Another factor that could explain the increased interest in research is the FoM's adoption of adult learning approaches to curriculum delivery in 2003 [ 16 ].

Sadly, the increased student interest in research is accompanied by a significant trend towards reduced faculty engagement with students in research (r = -0.34). Reduced faculty engagement also manifests in two other ways: there was no change in the use of IMRAD over time (J = 10100, z = 0.211, r = 0.086), and student-only teams used IMRAD less than faculty-only teams (U = 3165, r = -0.289). Even where an article had a mixed student-faculty team, there was no significant increase in the use of IMRAD compared with faculty-only teams (U = 444, r = -0.21). Reduced engagement could also point to a longer-standing pattern: there seems to be little support for undergraduate research in both the curricula and extracurricular activities. This appears to have persisted for quite some time, considering that most of the faculty were once students at this same university.

Examining global trends, Gonzalez (2001) describes how research education has moved from being the preserve of graduate students to a continuum that begins in undergraduate education [ 5 ]. Active support for undergraduate research is happening in more developed settings, as seen in the examples of Duke and Stanford universities [ 6 ]. According to Lopatto (2007), students in undergraduate research experiences learn both from being positively influenced by the process of investigation and from modelling higher-order methods of thinking as they test and later communicate their research findings [ 17 ]. This makes undergraduate research experiences a powerful tool for quickly increasing the number of high-calibre researchers [ 18 ]. If one assumes that use of the IMRAD format is a measure of scientific writing skill transfer, then the analysis of these student journal articles suggests that research in this population is undergoing a slow but steady decline. This trend has been observed by other researchers concerning the African continent [ 8 ].

Given the power of undergraduate research experiences as tools for grooming the next generation of scientists, it is important to look at other factors, such as the extra effort and time faculty need to transfer scholarly writing skills to students [ 19 ]. There is an urgent need to explore the mentoring of undergraduates in research, in line with global research education trends [ 5 ]. Other interventions to consider include a training or mentoring programme for each new MMJ editorial team [ 20 ] and the use of the student assessment process, as is done at the graduate level [ 8 ]. Using student assessment to promote scientific writing requires clear documentation of the roles of the various participants and subsequent supervision [ 21 ], in addition to an enabling environment created through an institution-wide research governance framework [ 22 ]. Given that individuals who participate in research as students are more likely to continue participating in research as faculty, every effort should be made to ensure that students develop these vital scientific writing skills [ 19 , 23 ].

Study limitations

This retrospective study of the MMJ had some limitations: poor record keeping of journal publications, the annual turnover of the volunteer student editorial board and the use of abbreviated names made it difficult to identify some author details. Despite this, it was possible to obtain an adequate sample of the journal's publications for detailed analysis.

This survey demonstrates that, in the surveyed university population, faculty-student partnerships are not producing the desired level of undergraduate research mentoring, as evidenced by the reduced use of IMRAD formatting in articles published in the MMJ. Given that use of IMRAD is one of the core competencies for active membership of the scientific community, the failure to transfer this skill could help explain some of the identified gaps in scientific writing at this university and in Africa at large [ 8 ]. Undergraduate research in Africa needs support through active mentoring programmes, training for student journal editorial teams and innovative curricula that promote scientific writing. Over time, such support could speed the uptake of scientific writing and the reading of scientific literature in Africa.

Mason J, Eccles M, Freemantle N, Drummond M: Incorporating economic analysis in evidence-based guidelines for mental health: the profile approach. J Ment Health Policy Econ. 1999, 2: 13-19. 10.1002/(SICI)1099-176X(199903)2:1<13::AID-MHP34>3.0.CO;2-M.


Zoltan JA, Sameeksha D, Jolanda H: Entrepreneurship, economic development and institutions. Small Bus Econ. 2008, 31: 219-234. 10.1007/s11187-008-9135-9.


Aravamudhan K, Frantsve-Hawley J: American Dental Association's Resources to Support Evidence-Based Dentistry. J Evid Based Dent Pract. 2009, 9: 139-144. 10.1016/j.jebdp.2009.06.011.


Johnson N, List-Ivankovic J, Eboh WO, Ireland J, Adams D, Mowatt E, Martindale S: Research and evidence based practice: Using a blended approach to teaching and learning in undergraduate nurse education. Nurse Educ Pract. 2009


Gonzalez C: Undergraduate research, graduate mentoring, and the university's mission. Science. 2001, 293: 1624-1626. 10.1126/science.1062714.


Laskowitz DT, Drucker RP, Parsonnet J, Cross PC, Gesundheit N: Engaging Students in Dedicated Research and Scholarship During Medical School: The Long-Term Experiences at Duke and Stanford. Acad Med. 2010, 85: 419-428. 10.1097/ACM.0b013e3181ccc77a.

Howitt S, Wilson A, Wilson K, Roberts P: 'Please remember we are not all brilliant': undergraduates' experiences of an elite, research-intensive degree at a research-intensive university. Higher Education Research & Development. 2010, 29: 405-420. 10.1080/07294361003601883.

Kabiru CW, Izugbara CO, Wambugu SW, Ezeh AC: Capacity development for health research in Africa: experiences managing the African Doctoral Dissertation Research Fellowship Program. Health Res Policy Syst. 2010, 8: 21. 10.1186/1478-4505-8-21.


Youtie J, Shapira P: Building an innovation hub: A case study of the transformation of university roles in regional technological and economic development. Research Policy. 2008, 37: 1188-1204. 10.1016/j.respol.2008.04.012.

Sollaci LB, Pereira MG: The introduction, methods, results, and discussion (IMRAD) structure: a fifty-year survey. J Med Libr Assoc. 2004, 92: 364-367.


Foster WD: Makerere Medical School: 50th anniversary. Br Med J. 1974, 3: 675-678. 10.1136/bmj.3.5932.675.

Kizza IB, Tugumisirize J, Tweheyo R, Mbabali S, Kasangaki A, Nshimye E, Sekandi J, Groves S, Kennedy CE: Makerere University College of Health Sciences' role in addressing challenges in health service provision at Mulago National Referral Hospital. BMC Int Health Hum Rights. 11 (Suppl 1): S7-

Pariyo G, Serwadda D, Sewankambo NK, Groves S, Bollinger RC, Peters DH: A grander challenge: the case of how Makerere University College of Health Sciences (MakCHS) contributes to health outcomes in Africa. BMC Int Health Hum Rights. 11 (Suppl 1): S2-

Nankinga Z, Kutyabami P, Kibuule D, Kalyango J, Groves S, Bollinger RC, Obua C: An assessment of Makerere University College of Health Sciences: optimizing health research capacity to meet Uganda's priorities. BMC Int Health Hum Rights. 11 (Suppl 1): S12-

OpenEpi: Open Source Epidemiologic Statistics for Public Health. [ http://www.openepi.com ]

Kiguli-Malwadde E, Kijjambu S, Kiguli S, Galukande M, Mwanika A, Luboga S, Sewankambo N: Problem Based Learning, curriculum development and change process at Faculty of Medicine, Makerere University, Uganda. African Health Sciences. 2006, 6: 127-130.


Lopatto D: Undergraduate research experiences support science career decisions and active learning. CBE Life Sci Educ. 2007, 6: 297-306.

Villarejo M, Barlow AE, Kogan D, Veazey BD, Sweeney JK: Encouraging minority undergraduates to choose science careers: career paths survey results. CBE Life Sci Educ. 2008, 7: 394-409.

Hunter A-B, Laursen SL, Seymour E: Becoming a Scientist: The Role of Undergraduate Research in Students' Cognitive, Personal, and Professional Development. Sci Ed. 2007, 91: 36-74. 10.1002/sce.20173.

Garrow J, Butterfield M, Marshall J, Williamson A: The Reported Training and Experience of Editors in Chief of Specialist Clinical Medical Journals. The Editors and Their Journals. 1998, [ http://www.ama-assn.org/public/peer/7_15_98/jpv71014.htm ]

Whiteside U, Pantelone DW, Hunter-Reel D, Eland J, Kleiber B, Larimer M: Initial Suggestions for Supervising and Mentoring Undergraduate Research Assistants at Large Research Universities. International Journal of Teaching and Learning in Higher Education. 2007, 19: 325-330.

Robinson L, Drewery S, Ellershaw J, Smith J, Whittle S, Murdoch-Eaton D: Research governance: impeding both research and teaching? A survey of impact on undergraduate research opportunities. Medical Education. 2007, 41: 729-736. 10.1111/j.1365-2923.2007.02776.x.

Munabi IG, Katabira ET, Konde-Lule J: Early undergraduate research experience at Makerere University Faculty of Medicine: a tool for promoting medical research. Afr Health Sci. 2006, 6: 182-186.


Acknowledgements

The authors express their gratitude to the faculty in the Albert Cook Library; to the members of the editorial team who participated in searching for past volumes of the journal; to the journal's reviewers, who provided many insightful comments; and to Ms Evelyn Bakengesa for the time she set aside to proofread the final draft of the paper.

Author information

Authors and affiliations.

Former Editor Makerere Medical Students Journal, Makerere University College of Health Sciences, New Mulago Hospital Complex, Kampala Uganda

Loraine Oriokot

Department of Human Anatomy, School of Biomedical Sciences, Makerere University College of Health Sciences, New Mulago Hospital Complex, Kampala Uganda

William Buwembo & Ian G Munabi

Dean's office, School of Medicine, Makerere University College of Health Sciences, New Mulago Hospital Complex, Kampala Uganda

Stephen C Kijjambu


Corresponding author

Correspondence to Ian G Munabi.

Additional information

Competing interests.

The authors declare that they have no competing interests.

Authors' contributions

All the authors read and approved the final manuscript. LO participated in the conceptualisation, data collection and write-up of the final paper. WB participated in all phases of the paper's write-up, from conceptualisation and analysis to the final write-up. IGM participated in all phases of the study: conceptualisation, data collection, analysis and write-up. SCK participated in the conceptualisation of the paper and reviewed the various drafts prior to submission.

Loraine Oriokot, William Buwembo and Stephen C Kijjambu contributed equally to this work.


Rights and permissions.

This article is published under license to BioMed Central Ltd. This is an open access article distributed under the terms of the Creative Commons Attribution License ( http://creativecommons.org/licenses/by/2.0 ), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


About this article

Cite this article.

Oriokot, L., Buwembo, W., Munabi, I.G. et al. The introduction, methods, results and discussion (IMRAD) structure: a Survey of its use in different authoring partnerships in a students' journal. BMC Res Notes 4 , 250 (2011). https://doi.org/10.1186/1756-0500-4-250


Received : 06 October 2010

Accepted : 21 July 2011

Published : 21 July 2011

DOI : https://doi.org/10.1186/1756-0500-4-250



BMC Research Notes

ISSN: 1756-0500



Structure of a Research Paper: IMRaD Format

I. The Title Page

- Title: Tells the reader what to expect in the paper.

- Author(s): Most papers are written by one or two primary authors. The remaining authors have reviewed the work and/or aided in study design or data analysis (International Committee of Medical Journal Editors, 1997). Check the Instructions to Authors for the target journal for specifics about authorship.

- Keywords [according to the journal]

- Corresponding Author: Full name and affiliation for the primary contact author for persons who have questions about the research.

- Financial & Equipment Support [if needed]: Specific information about organizations, agencies, or companies that supported the research.

- Conflicts of Interest [if needed]: List and explain any conflicts of interest.

II. Abstract: The “structured abstract” (introduction, objective, methods, results and conclusions) has become the standard for research papers, while reviews, case reports and other articles have non-structured abstracts. The abstract should be a summary or synopsis of the paper.

III. Introduction: The “why did you do the study”; setting the scene or laying the foundation or background for the paper.

IV. Methods: The “how did you do the study.” Describe:

- Context and setting of the study

- Study design

- Population (patients, etc., if applicable)

- Sampling strategy

- Intervention (if applicable)

- Main study variables

- Data collection instruments and procedures

- Analysis methods

V. Results: The “what did you find.” Report:

- Data collection and/or recruitment

- Participants (demographic, clinical condition, etc.)

- Key findings with respect to the central research question

- Secondary findings (secondary outcomes, subgroup analyses, etc.)

VI. Discussion: Place for interpreting the results

- Main findings of the study

- Discuss the main results with reference to previous research

- Policy and practice implications of the results

- Strengths and limitations of the study

VII. Conclusions: [occasionally optional or not required]. Do not reiterate the data or discussion. This section can state hunches, inferences or speculations and offer perspectives for future work.

VIII. Acknowledgements: Names people who contributed to the work but did not contribute sufficiently to earn authorship. You must have permission from any individuals mentioned in the acknowledgements section.

IX. References: Complete citations for any articles or other materials referenced in the text of the article.
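To make the outline above concrete, here is a minimal sketch of a checker that compares a manuscript's top-level headings against this section order. It assumes the headings have already been extracted and use exactly the names in the outline; which sections count as required (the abstract, the four IMRaD sections and the references) is our assumption, since the outline itself only marks Conclusions as optional.

```python
# Section order from the outline above; the required/optional split is an assumption.
ORDER = ["Title Page", "Abstract", "Introduction", "Methods", "Results",
         "Discussion", "Conclusions", "Acknowledgements", "References"]
REQUIRED = {"Abstract", "Introduction", "Methods", "Results", "Discussion", "References"}


def check_outline(headings):
    """Return a list of problems found in a manuscript's top-level headings."""
    problems = [f"missing section: {name}" for name in ORDER
                if name in REQUIRED and name not in headings]
    problems += [f"unrecognised heading: {h}" for h in headings if h not in ORDER]
    # Recognised headings must appear in the same relative order as ORDER.
    positions = [ORDER.index(h) for h in headings if h in ORDER]
    if positions != sorted(positions):
        problems.append("sections are out of IMRaD order")
    return problems


print(check_outline(["Abstract", "Introduction", "Results", "Methods",
                     "Discussion", "References"]))
```

Journals differ in the names they use (for example "Materials and Methods"), so a real checker would need a mapping of accepted synonyms.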

- IMRD Cheatsheet (Carnegie Mellon) pdf.

- Adewasi, D. (2021, June 14). What Is IMRaD? IMRaD Format in Simple Terms! Scientific-editing.info.

- Nair, P.K.R., Nair, V.D. (2014). Organization of a Research Paper: The IMRAD Format. In: Scientific Writing and Communication in Agriculture and Natural Resources. Springer, Cham. https://doi.org/10.1007/978-3-319-03101-9_2

- Sollaci, L. B., & Pereira, M. G. (2004). The introduction, methods, results, and discussion (IMRAD) structure: a fifty-year survey. Journal of the Medical Library Association: JMLA, 92(3), 364–367.

- Cuschieri, S., Grech, V., & Savona-Ventura, C. (2019). WASP (Write a Scientific Paper): Structuring a scientific paper. Early Human Development, 128, 114–117. https://doi.org/10.1016/j.earlhumdev.2018.09.011

Structured abstract generator (SAG) model: analysis of IMRAD structure of articles and its effect on extractive summarization

- Open access

- Published: 07 May 2024


- Ayşe Esra Özkan Çelik (ORCID: orcid.org/0000-0002-2553-0361)

- Umut Al


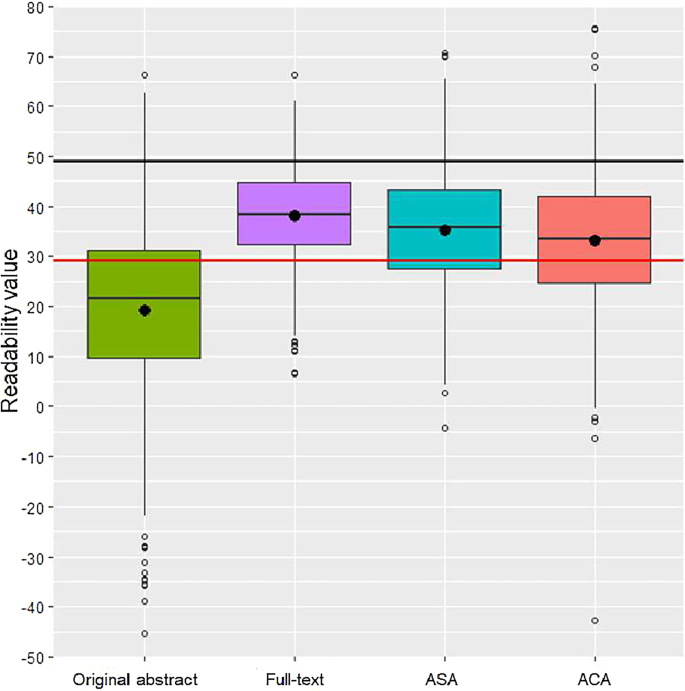

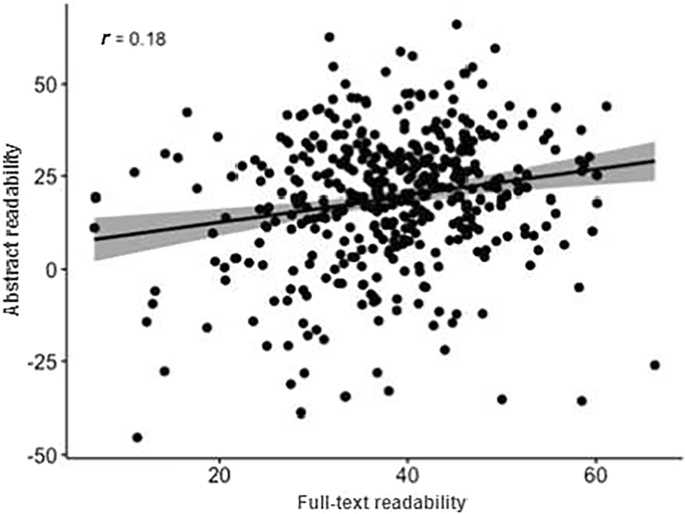

An abstract is the most crucial element in convincing readers to read the complete text of a scientific publication. However, studies show that, in terms of organization, readability and style, abstracts are also among the most troublesome parts of a manuscript. The ultimate goal of this article is to produce more understandable abstracts with automatic methods that will contribute to scientific communication in Turkish. We propose a summarization system based on extractive techniques that combines general features shown to be beneficial for Turkish. To construct the data set, a sample of 421 peer-reviewed Turkish articles in the field of librarianship and information science was compiled. First, the structure of the full texts and their readability compared with the author abstracts were examined to evaluate text quality. A content-based evaluation of the system outputs was then carried out, comparing outputs generated with and without the structural features of the full texts. Structured outputs outperformed classical outputs in terms of content and text quality, and each output group was more readable than the original abstracts. Additionally, higher-quality outputs were found to correlate with more structured full texts, highlighting the importance of structural writing for better scholarly communication. Finally, the results indicate that our system can facilitate the scholarly communication process as an auxiliary tool for authors and editors.


1 Introduction

Abstracts are the most important textual tool for helping potential readers decide which full texts to read from the huge stack of electronic information retrieved through the Internet. A scientific article's readability has been reported to correlate with its impact, as measured by subsequent citations or by the likelihood of publication in a top-5 journal in the relevant subject [ 1 , 2 ]. However, compared with the corresponding full texts, abstracts are even more prone to readability issues and structural flaws in their content [ 3 , 4 , 5 , 6 ].

Electronic versions of scientific publications quickly became preferred over printed ones, thanks to functionality that speeds up access and publishing [ 7 ]. However, the electronic formats of scientific publications are almost identical to the printed formats, so they have not improved the user experience in terms of readability [ 8 ]. Moreover, online communication brings new challenges for the scientific community in analyzing retrieved documents. These include the distraction caused by being online, the need to choose from a stack of related articles, and the difficulty of maintaining focus while navigating through linked web pages [ 9 , 10 , 11 ]. Research has shown that reading and comprehending a lengthy electronic text, which requires scrolling and navigating back and forth, demands more mental effort than reading a printed text [ 12 , 13 ]. Screen reading has been found to be inherently distracting, mainly because of the multitasking nature of online reading described above [ 14 ].

Although lengthy electronic texts can be challenging to read, scientific publications are constructed and archived following certain rules, making them highly structured text data [ 15 ]. The components of a scientific article, including the title, abstract, keywords, article body, acknowledgments, bibliography and appendices, each have specific functions and particular places within a manuscript. Article bodies have also come to follow a well-defined structure over time, largely owing to the IMRAD (Introduction, Methods, Results, and Discussion) format, introduced by Pasteur in 1876 [ 3 ]. The IMRAD format is now widely adopted by the scientific community because it keeps articles well organized and easy to read, whether they are published electronically or in print. Each section has a specific role in communicating the research findings:

Introduction: What was studied and why?

Methods: How was the study conducted?

Results: What were the findings?

Discussion: What do the findings mean?

Before reading the body text, readers first encounter titles and sometimes keywords, which contain very limited information about the article. Abstracts, on the other hand, are the first and last stop for readers to learn about the content before proceeding to the full text. Therefore, for most readers, an article is only as interesting as its abstract. Studies have shown that nearly half of the readers who read the abstracts of scientific articles also read the full texts [ 16 ]. One study examined the transaction records of more than 1000 scientists across 17,000 sessions on ScienceDirect [ 6 , 17 ]. It found that at least 20% of users read only the abstracts, trusting them both to select relevant articles and to provide the preliminary information needed for their research.

The language of an abstract should be clear enough for anyone to understand, even readers who know little about the topic or whose first language is not English. However, abstracts are often more difficult to read than the main body of an article [ 3 , 4 , 5 , 18 , 19 ]. Moreover, the abstract should cover the major information given in the full text, yet studies have found that omitting necessary information from abstracts is a frequently observed problem [ 6 , 20 , 21 , 22 ].

How can abstracts be written to persuade readers to read the full text, especially readers who have difficulty understanding the abstract? Structured abstracts may be a solution: dividing the text under subheadings can improve readability and comprehension [ 23 ] and thereby make the abstract more informative. Compared with unstructured abstracts, structured abstracts have significantly higher information quality [ 24 ]. They also improve the indexing performance of the publication, making it easier for users to access and increasing its relevance in search results. This facilitates access to the article for users with varying degrees of familiarity with its subject, and the structural headings help readers find and understand the information they need more easily. A structured format is also easier for authors to write than a classical one, because the headings keep them from forgetting to cover any part of the publication, which increases the consistency between the abstract and the full text. Readers and authors also prefer structured abstracts to classical ones [ 23 ].

Given the critical role of abstracts in scholarly communication, this study is conducted to enhance the informativeness of abstracts by utilizing the high readability of full-text sentences and the structured ordering inherited from the full-text articles.

2 Literature review

The main research topics related to abstracts in the literature deal with organization, readability and presentation. Many researchers have found that, unless the journal has a specific policy on the matter, abstracts do not follow the structural order of the full text.

When deciding whether to read the full text of an academic article, readers are most interested in descriptive information about the research problem, method or results. Omitting information about these parts from abstracts is a frequently observed problem [ 6 , 20 , 21 , 22 ]. The abstract of a scientific paper often contains long, inverted sentences with conjunctions and intensive use of technical terms or field-specific jargon. The conscious preference for such sophisticated language has made abstracts progressively more difficult to read over time, and abstracts are usually found to be less readable than the other parts of the article [ 3 , 4 , 5 , 18 , 19 ]. Although presentation should be considered separately from readability [ 25 ], an abstract written in a single block without paragraphs or subtitles, in a font smaller than the full text, and sometimes in italics, is difficult to read [ 26 , 27 ]. The abstract formats required by journals vary. The two dominant formats are classical (or traditional) abstracts and structured abstracts. Abstracts written in a single unstructured block, without paragraphs or subheadings, are generally called classical. Classical abstracts, which most journals prefer, are not presented in a format that attracts the reader's attention. Structured abstracts, by contrast, must be produced by filling in all the structural headings specified by the journal.

Luhn [ 28 ] carried out pioneering work in automatic text summarization, aiming to save readers time and effort in finding useful information in an article or report, long before the Internet and information technologies came into widespread use. Since then, summarization of scientific text has become a necessary and crucial task in Natural Language Processing (NLP) [ 29 , 30 ]. However, the task still poses difficulties, such as generating abstracts, obtaining labeled training and test corpora, and scaling to large document collections.

Research in automatic text summarization has seen a proliferation of techniques since its beginnings. The process generally involves several stages: pre-processing the source document, extracting relevant features, and applying a summary generation method or algorithm. In the pre-processing stage, text documents are prepared for the later stages using linguistic techniques such as sentence segmentation, punctuation removal, stop word filtering and stemming. Words are then converted into numerical representations so that computers can model language patterns; common methods include bag-of-words, n-grams, tf-idf and word embeddings. For feature extraction, commonly used features [ 31 ] for identifying and extracting salient sentences operate at both the word and sentence levels, as listed below:
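The pre-processing and tf-idf steps described above can be sketched as follows. This is a deliberately naive illustration, not the pipeline of the study: sentence segmentation is a regular expression, the stop-word list is a tiny hypothetical English one, and stemming is omitted (Turkish in particular would need a morphological analyser). Each sentence is treated as a "document" for the idf term.

```python
import math
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption; real systems use curated lists).
STOP_WORDS = {"the", "a", "an", "of", "and", "is", "are", "in", "to", "for", "on", "this"}


def split_sentences(text):
    """Naive segmentation: split after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    """Lowercase, keep letter runs only (drops punctuation and digits), remove stop words."""
    words = re.findall(r"[^\W\d_]+", sentence.lower())
    return [w for w in words if w not in STOP_WORDS]


def tfidf(token_lists):
    """TF-IDF weights per sentence, treating each sentence as a document."""
    n = len(token_lists)
    df = Counter(w for toks in token_lists for w in set(toks))
    scores = []
    for toks in token_lists:
        tf = Counter(toks)
        total = len(toks) or 1
        scores.append({w: (c / total) * math.log(n / df[w]) for w, c in tf.items()})
    return scores


text = "Abstracts summarize papers. Readers skim abstracts. Structured abstracts help readers."
sentences = split_sentences(text)
weights = tfidf([tokenize(s) for s in sentences])
```

A word that appears in every sentence (here "abstracts") gets an idf of zero, so it cannot distinguish one sentence from another.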

Word level features

Keywords (content words): Nouns, verbs, adjectives and adverbs whose high TF-IDF scores suggest that a sentence is important.

Title words: Sentences containing words from the title are likely to be relevant to the topic of the document.

Cue Phrases: Phrases such as “conclusion”, “because”, “this information”, etc. that indicate structure or importance.

Biased words: Domain-specific words that reflect the topic of the document are considered important.

Capitalized words: Names or acronyms such as “UNICEF” that indicate important entities.
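Three of the word-level features above can be computed with plain string handling, as in the sketch below. The cue-phrase list reuses the examples given above and is otherwise hypothetical. Title overlap here counts every title word, including function words, and cue phrases are matched as lowercase substrings; both are simplifications a real system would refine.

```python
import re

# Cue phrases taken from the examples above; a real list would be language-specific.
CUE_PHRASES = ("conclusion", "because", "this information")


def word_level_features(sentence, title):
    """Title-word overlap, cue-phrase count and capitalized-word count for one sentence."""
    words = re.findall(r"[^\W\d_]+", sentence)
    lower = [w.lower() for w in words]
    title_words = {w.lower() for w in re.findall(r"[^\W\d_]+", title)}
    text = sentence.lower()
    return {
        # Share of the sentence's words that also occur in the title.
        "title_overlap": sum(w in title_words for w in lower) / max(len(lower), 1),
        # Number of distinct cue phrases found in the sentence.
        "cue_phrases": sum(p in text for p in CUE_PHRASES),
        # All-caps tokens of two or more letters, e.g. acronyms such as "UNICEF".
        "capitalized": sum(1 for w in words if len(w) >= 2 and w.isupper()),
    }


features = word_level_features("In conclusion, UNICEF funded the study.", "UNICEF study")
```

Keyword and biased-word features would build on tf-idf weights and a domain vocabulary respectively, so they are not repeated here.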

Sentence level features

Sentence Location: Sentences are prioritized according to the document's information hierarchy. For instance, beginning and ending sentences are likely to carry more weight.

Length: Sentence length helps identify sentences that contain excessive detail or too little information.

Paragraph Location: Similar to sentence location, beginning and ending paragraphs of the document carry higher weight.

Sentence-Sentence Similarity: Sentences with higher similarity to other sentences of the document indicate their importance.
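The sentence-level features above can likewise be sketched on pre-tokenized sentences. The exact formulas are our assumptions: position is a U-shaped score that gives 1.0 to the first and last sentences and 0.0 to the middle, length is normalized by the longest sentence, and similarity is the mean bag-of-words cosine similarity to every other sentence. Paragraph location would follow the same pattern as sentence location, one level up.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def sentence_level_features(token_lists):
    """Position, length and mean similarity for each tokenized sentence."""
    n = len(token_lists)
    bags = [Counter(t) for t in token_lists]
    longest = max((len(t) for t in token_lists), default=1) or 1
    feats = []
    for i, bag in enumerate(bags):
        # U-shaped position score: 1.0 at both ends, 0.0 in the middle (assumed weighting).
        position = 1.0 if n == 1 else abs(2 * i / (n - 1) - 1)
        others = [cosine(bag, bags[j]) for j in range(n) if j != i]
        feats.append({
            "position": position,
            "length": len(token_lists[i]) / longest,
            "similarity": sum(others) / len(others) if others else 0.0,
        })
    return feats


feats = sentence_level_features([["a", "b"], ["b", "c"], ["c", "d", "e"]])
```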

Text summarization methods are typically divided into extractive summarization, which selects sentences from the source text, and abstractive summarization, which generates new sentences. Extractive summarization uses both supervised and unsupervised learning methods. Supervised learning needs a labeled dataset containing both summarized and non-summarized text, while unsupervised learning processes input automatically using algorithms such as fuzzy-based, graph-based, concept-based and latent semantic methods [ 32 ].
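As an example of the unsupervised, graph-based family mentioned above, the sketch below ranks sentences in the style of TextRank: sentences are nodes, edges are weighted by similarity, and a PageRank-style power iteration scores each node. It uses cosine similarity on bags of words, which is a common variant rather than the original TextRank overlap measure, and the damping factor of 0.85 and 50 iterations are conventional choices, not values from this paper.

```python
import math
from collections import Counter


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def textrank(token_lists, damping=0.85, iterations=50):
    """PageRank-style scores over a weighted sentence-similarity graph."""
    n = len(token_lists)
    if n == 0:
        return []
    bags = [Counter(t) for t in token_lists]
    # Undirected weighted graph without self-loops.
    weights = [[cosine(bags[i], bags[j]) if i != j else 0.0 for j in range(n)]
               for i in range(n)]
    out_weight = [sum(row) for row in weights]
    scores = [1.0 / n] * n
    for _ in range(iterations):
        scores = [
            (1 - damping) / n
            + damping * sum(weights[j][i] / out_weight[j] * scores[j]
                            for j in range(n) if out_weight[j] > 0)
            for i in range(n)
        ]
    return scores


def summarize(sentences, token_lists, k):
    """Return the k highest-scoring sentences, kept in their original order."""
    scores = textrank(token_lists)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]
```

Sentences that are similar to many others accumulate the highest scores, which is the intuition behind the sentence-sentence similarity feature listed earlier.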

Summarization of scientific papers is one application of automatic summarization, with two main branches: abstract generation-based and citation-based applications. Other applications focus on specific problems, such as summarizing the tables, figures or particular sections of an article [ 29 ]. Turkish text summarization studies have primarily used extractive techniques because of a lack of training corpora, a need that remains unmet in low-resource languages like Turkish [ 33 ].

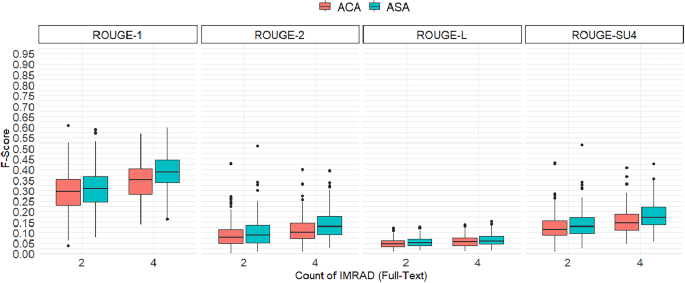

In addition, scientific article summarization has predominantly relied on single-article summarization with extractive techniques, dominated by combinations of statistical and machine learning approaches, and on intrinsic evaluation methods largely based on ROUGE (Recall-Oriented Understudy for Gisting Evaluation) metrics [ 29 ]. A ROUGE evaluation of an automated summarization system on a dataset of academic articles showed that extractive algorithms outperformed abstractive ones [ 34 ].
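ROUGE compares a system summary with a reference summary by counting overlapping n-grams. As an illustration only, a simplified ROUGE-N recall can be sketched in Python as below. It omits the stemming, stopword, and multi-reference options of the official ROUGE toolkit, and the function names are illustrative.

```python
from collections import Counter


def ngrams(tokens, n):
    """All contiguous n-grams of a token list, as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n_recall(candidate, reference, n=1):
    """ROUGE-N recall: matched n-grams divided by n-grams in the reference.

    Matches are clipped, so a candidate n-gram counts at most as many
    times as it occurs in the reference.
    """
    cand = Counter(ngrams(candidate, n))
    ref = Counter(ngrams(reference, n))
    total = sum(ref.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, cand[gram]) for gram, count in ref.items())
    return overlap / total
```

For the reference "the cat sat on the mat" and the candidate "the cat lay on the mat", ROUGE-1 recall is 5/6 and ROUGE-2 recall is 3/5.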

Our summarization model is based on a study [ 35 ] that evaluated 15 extractive sentence selection methods, both individually and in combination, on 20 Turkish news documents, with the aim of selecting the most important sentences in a document. The methods' outputs were compared against summaries built from sentences hand-selected by 30 evaluators. The best results were obtained by combining sentence position, the number of common adjacencies, and the inclusion of nouns. These features were combined in a linear function with equal weights.
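The equal-weight linear combination described above can be sketched as follows. This is a minimal illustration, assuming each feature has already been computed and normalized per sentence. The feature names in the usage example (`position`, `common_adjacency`, `nouns`) are hypothetical labels, not identifiers from [ 35 ].

```python
def combined_score(feature_scores, weights=None):
    """Linear combination of one sentence's feature scores.

    feature_scores maps a feature name to that feature's score for the
    sentence. With weights=None every feature receives the same weight
    (1/k for k features), i.e. an equal-weight linear function.
    """
    if weights is None:
        weights = {name: 1.0 / len(feature_scores) for name in feature_scores}
    return sum(weights[name] * value for name, value in feature_scores.items())


# Hypothetical feature values for a single sentence.
score = combined_score({"position": 1.0, "common_adjacency": 0.5, "nouns": 0.3})
```

Any shared constant weight yields the same ranking of sentences; using 1/k simply keeps the combined score on the same scale as the individual features.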

3 Research objectives and questions

We propose a summarization model based on extractive techniques, combining general sentence selection features that human judgments have shown to be beneficial for Turkish [ 35 ]. Rather than developing a new method, our study aims to assess the suitability of the Turkish librarianship and information science (LIS) corpus for automatic summarization methods by evaluating it from a broad perspective. We focus on the structural order of full-texts to improve the extractive sentence selection process. Additionally, we compare the readability levels of full-texts and abstracts to emphasize the significance of readability in scholarly communication. Raising awareness of this issue is also important, especially among LIS professionals.

LIS is a broad, interdisciplinary field that encompasses a wide range of research topics and is characterized by integrating research paradigms and methodologies from various disciplines [ 36 ]. This interdisciplinary nature makes LIS an ideal domain for examining the structural layouts of the various approaches employed in scientific articles, and the findings can be extended to other fields. For this reason, LIS was selected as the domain of this study.

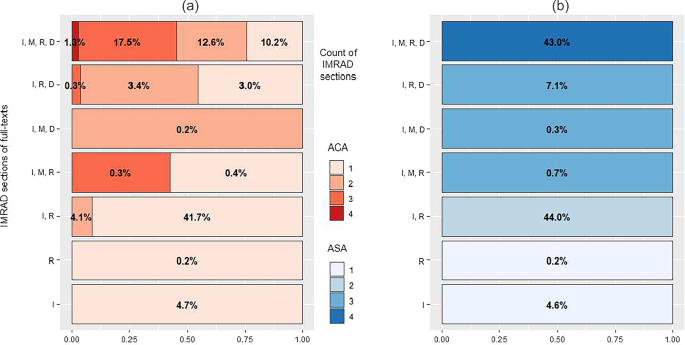

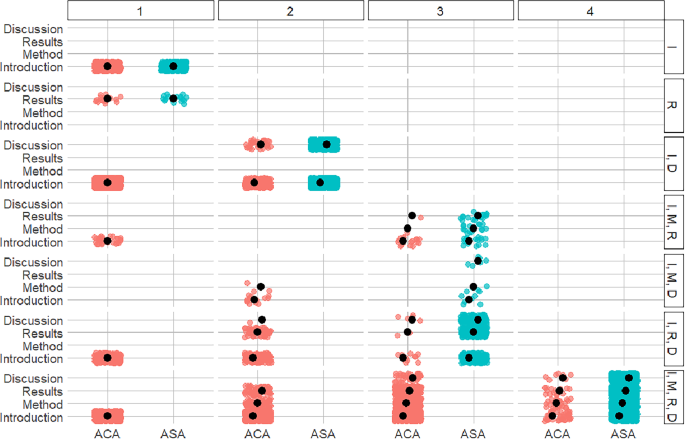

SAG architecture

The main goal of this study is to understand the benefits of generating structured abstracts using extractive methods. We aim to identify the most feasible way to generate abstracts for scholarly communication in Turkish. We expect that choosing the most important sentences from each structural section of a scientific article and presenting them under the structural headings will facilitate the abstract generation process, and that such structural sectioning will increase the semantic integrity and readability of an abstract. Our main hypothesis is “Considering the structural features of full-texts in extracting abstract sentences with automatic methods will increase the quality of the outputs”. The study attempts to answer the following research questions: (1) Are the full-texts of Turkish LIS articles organized according to the basic structural features expected of a scientific publication? (2) What is the readability of the full-texts and the abstracts of Turkish LIS articles on the readability scale? (3) Does using full-text structural features when extracting abstracts with automated methods improve output quality?

In our study, we examined LIS articles published with classical abstracts. The corpus was analyzed to determine whether the full-texts of the articles are more readable and better structured than the classical author abstracts. We built a simple automatic abstract generator model that chooses the most important sentences from each structural section of each article.
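The idea of choosing the most important sentences from each structural section and presenting them under section headings can be sketched in Python as below. This is a minimal sketch, assuming sentence scores are already available and using the section numbering adopted in the methodology (1 Introduction, 2 Method, 3 Results, 4 Discussion). The tuple layout and the `per_section` parameter are illustrative assumptions, not the SAG implementation.

```python
from collections import defaultdict

SECTION_NAMES = {1: "Introduction", 2: "Method", 3: "Results", 4: "Discussion"}


def structured_abstract(sentences, per_section=1):
    """Pick the top-scoring sentence(s) of each IMRAD section.

    sentences: iterable of (section_no, position, text, score) tuples.
    Returns {section heading: [selected sentences in document order]}.
    """
    by_section = defaultdict(list)
    for section_no, position, text, score in sentences:
        by_section[section_no].append((position, text, score))

    abstract = {}
    for section_no in sorted(by_section):
        ranked = sorted(by_section[section_no], key=lambda s: s[2], reverse=True)
        # Restore the original order among the selected sentences.
        chosen = sorted(ranked[:per_section], key=lambda s: s[0])
        heading = SECTION_NAMES.get(section_no, str(section_no))
        abstract[heading] = [text for _, text, _ in chosen]
    return abstract
```

Selecting per section, rather than over the whole article, guarantees that every IMRAD heading in the generated abstract receives at least one sentence.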

4 Methodology

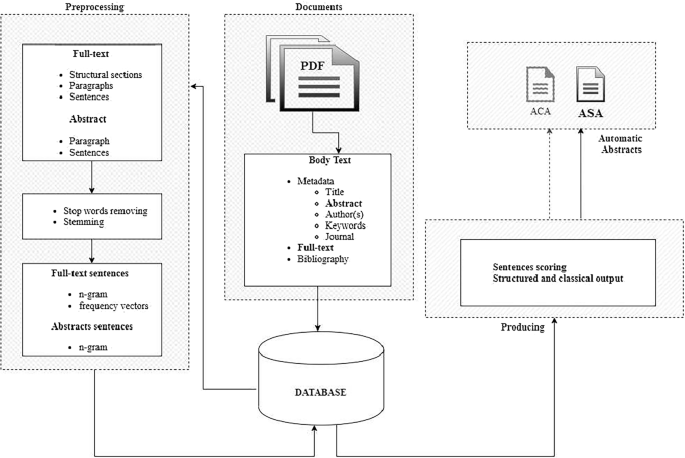

We used an extractive automatic summarization system named Structured Abstract Generator (SAG), which extracts the most important sentences from all structural parts of the articles' full-texts. Figure 1 shows the architecture of SAG. This section describes the methodology used in the study.

4.1 Data collection and representation

To construct the corpus for the study, we used two major journals in the field of librarianship and information science in Turkey: Türk Kütüphaneciliği (Turkish Librarianship, TL) and Bilgi Dünyası (Information World, IW). Both journals required authors to provide classical abstracts. Neither journal prescribes an IMRAD or similar template for full-texts, although IW outlines a framework in line with IMRAD for arranging the content. All refereed articles written in Turkish were included in the study. Because both journals are open access, all articles were freely accessible. This study is the first in Turkish to conduct a detailed full-text analysis of a large corpus of LIS literature.

In the initial stage, all articles were saved in PDF format under a unique identifier that encoded the journal name, year, volume, and issue information. For example, the identifier BD200011 denotes the 1st article of the 1st volume of 2000 in the IW journal (BD in Turkish).
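The identifier scheme can be illustrated with a small parser. This is a sketch under stated assumptions: a two-letter journal code, a four-digit year, one digit for the volume, and the remaining digits for the article number. That layout matches the BD200011 example, but the text does not say how larger volume or article numbers are encoded. Only the BD prefix is documented, so no code is assumed for TL.

```python
import re

# Only the BD prefix is documented in the text; other codes are left unmapped.
JOURNAL_CODES = {"BD": "Bilgi Dünyası (Information World, IW)"}

# Assumed layout: 2-letter code, 4-digit year, 1-digit volume, article number.
ID_PATTERN = re.compile(r"^([A-Z]{2})(\d{4})(\d)(\d+)$")


def parse_article_id(article_id):
    """Split an identifier such as BD200011 into its assumed parts."""
    match = ID_PATTERN.match(article_id)
    if match is None:
        raise ValueError(f"unrecognized identifier: {article_id}")
    code, year, volume, article = match.groups()
    return {
        "journal": JOURNAL_CODES.get(code, code),
        "year": int(year),
        "volume": int(volume),
        "article": int(article),
    }
```

Under these assumptions, `parse_article_id("BD200011")` yields year 2000, volume 1, and article 1 in IW.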

Once the articles were identified, they were converted to .txt format using UTF-8 character encoding so that Turkish characters were represented correctly. Article metadata was then extracted automatically: author names, titles, abstracts, body text, and keywords, which are clear indicators of the content and appear in specific places in the document.

After 421 documents from the two journals (172 IW, 249 TL) had been processed, a relational database was created in MySQL. This database enabled efficient processing of the articles' full-text sentences as vectors, with each sentence assigned to its structural section of the document and linked to the document's metadata. The IMRAD format, the most prominent organizational structure for full-texts in scientific writing, was used to define these structural sections.

To support the later stages, web-based interfaces were developed for monitoring and managing the rules that govern the structural layout decisions for each article. A web-based system offered inherent advantages: it allowed flexible work arrangements and quick oversight of the people in operator roles. The interfaces were designed for both mobile and desktop devices, so the team could work flexibly and remotely.

The team consisted of six people from the Department of Information Management: two undergraduate students and four PhD students. These individuals had prior expertise in the structural components of scientific articles. Two roles were defined for the expert team: operator (4 experts) and administrator (2 experts).

Using these interfaces, operators copied and pasted the body text under the corresponding IMRAD headings, retaining complete control over the process. Once the IMRAD marking of an article was completed, operators could no longer modify it through the interface. Administrators, however, retained the authority to perform final supervision and other operational functions after this stage. This control was important because paragraphs inherit the IMRAD structure assigned to the article, so that structure had to be determined correctly. To ensure inter-annotator agreement on these structural decisions, each article was tagged by at least two operators and one expert doctoral student during the manual step.

Following this work plan, the expert team systematically and efficiently determined the boundaries and IMRAD sections of each paragraph of the body text. As a result, the sequential order of sentences in all articles was carefully preserved, and the work was completed within a short time. This hierarchical structure of the body text was then extended to the sentence level in the relational database. At the end of these two main steps, 101,019 sentences had been extracted from the 421 articles. Next, word frequency vectors and n-gram sequences were obtained using Zemberek [ 37 ] and stored in the database.
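Extending the paragraph-level IMRAD labels down to sentences can be sketched as follows. This is an illustration with several assumptions. Paragraphs arrive in document order, each already tagged with a section number. Paragraphs are numbered within their section and sentences within their paragraph; the exact numbering behind Table 1 is not stated. The regular-expression splitter is a naive stand-in for a proper Turkish sentence tokenizer.

```python
import re


def sentences_from_paragraphs(article_id, paragraphs):
    """Propagate paragraph-level IMRAD labels down to sentences.

    paragraphs: list of (section_no, paragraph_text) in document order.
    Returns (article_id, section_no, paragraph_no, sentence_no, sentence)
    tuples. Paragraphs are numbered within their section and sentences
    within their paragraph (an assumed numbering scheme).
    """
    records = []
    paragraph_counts = {}
    for section_no, text in paragraphs:
        paragraph_counts[section_no] = paragraph_counts.get(section_no, 0) + 1
        paragraph_no = paragraph_counts[section_no]
        # Naive split after ., ! or ? followed by whitespace.
        parts = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
        for sentence_no, sentence in enumerate(parts, start=1):
            records.append((article_id, section_no, paragraph_no, sentence_no, sentence))
    return records
```

Because every sentence record carries its section number, later steps can group or filter sentences by IMRAD section without revisiting the paragraph markup.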

Table 1 shows an example of the data representation for one sentence of an article. The ID BD200011 indicates that the sentence comes from the first article of the first volume of 2000 of the IW (BD in Turkish) journal. The remaining fields identify it as the 27th sentence of the 5th paragraph of the 1st IMRAD section of that article. In this study, sections were numbered as follows: 1 for Introduction, 2 for Method, 3 for Results, and 4 for Discussion. The title field gives the title of the paragraph to which the sentence belongs.
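A sentence table along the lines of Table 1 might look like the sketch below. The study used MySQL; this example substitutes Python's built-in `sqlite3` module so that it runs without a server. The table and column names are hypothetical, chosen only to mirror the fields described for Table 1.

```python
import sqlite3


def build_sentence_table(records):
    """Create an in-memory sentence table and load (id, section, paragraph,
    sentence, title, text) records into it."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        """CREATE TABLE sentence (
               article_id    TEXT    NOT NULL,  -- e.g. BD200011
               section_no    INTEGER NOT NULL,  -- 1=Introduction ... 4=Discussion
               paragraph_no  INTEGER NOT NULL,
               sentence_no   INTEGER NOT NULL,
               title         TEXT,              -- title of the paragraph
               sentence_text TEXT    NOT NULL,
               PRIMARY KEY (article_id, section_no, paragraph_no, sentence_no)
           )"""
    )
    conn.executemany("INSERT INTO sentence VALUES (?, ?, ?, ?, ?, ?)", records)
    return conn


conn = build_sentence_table([
    ("BD200011", 1, 5, 27, "hypothetical heading", "Example sentence."),
])
```

The composite primary key mirrors the article-section-paragraph-sentence hierarchy, so a query such as counting sentences per IMRAD section becomes a simple `GROUP BY section_no`.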

4.2 Stemming

Since Turkish has an agglutinative morphology, inflectional or plural suffixes can produce many word forms from a single root. Stemming allows word forms that differ in the text but share the same root meaning to be represented by a single term. Because it greatly reduces the size of the document-term matrix, stemming is strongly recommended for Turkish texts [ 38 ]. For root finding, we use Zemberek [ 37 ], a natural language processing toolkit for Turkish. Although the sentences of the articles had been parsed under the operators' supervision, we still applied data-cleaning methods to the raw data.

After stemming and data cleaning, word frequency vectors are produced. Table 2 shows an example vector representation of the sentence whose raw data appears in Table 1 .
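The effect of stemming on word frequency vectors can be illustrated with a toy example. Zemberek performs full morphological analysis and is not called here; the `TOY_STEMS` dictionary below is a hypothetical, hand-made stand-in that maps a few Turkish surface forms to their roots.

```python
from collections import Counter

# Hypothetical stand-in for Zemberek: a tiny hand-made surface-form -> root map.
TOY_STEMS = {
    "kütüphaneler": "kütüphane",  # libraries
    "kütüphanenin": "kütüphane",  # of the library
    "kütüphanede": "kütüphane",   # in the library
    "bilgiye": "bilgi",           # to information
    "bilgiler": "bilgi",          # pieces of information
}


def term_frequency_vector(tokens, stems=TOY_STEMS):
    """Map tokens to roots (unknown tokens are kept as-is) and count them."""
    return Counter(stems.get(token.lower(), token.lower()) for token in tokens)


vector = term_frequency_vector(["Kütüphaneler", "bilgiye", "kütüphanenin", "bilgiler"])
```

Here four distinct surface forms collapse into two terms, each with frequency 2, which is the shrinkage of the document-term matrix that motivates stemming. Note that Python's `str.lower()` does not apply Turkish casing rules for I/ı and İ/i, so a real pipeline would need Turkish-aware lowercasing.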

4.3 Extractive summarization and evaluation process

Extractive automatic summarization involves scoring, sorting, and selecting the sentences of a document in order to identify the key representative sentences of the full-text. Sentences are scored on predetermined features, and these scores determine the significance of each sentence in the document. A further stage of extractive summarization systems is the sentence selection function, which combines the weighted features. The features used for sentence scoring are as follows.
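The score, sort, and select stages can be sketched generically in Python. This is a minimal sketch: `score_fn` is a hypothetical placeholder for whatever weighted feature combination is used, and the selected sentences are returned in their original document order.

```python
def extractive_summary(sentences, score_fn, k):
    """Score every sentence, rank by score, and keep the top k.

    score_fn(index, sentence) -> float is a placeholder for the weighted
    feature combination. Selected sentences keep their document order.
    """
    scores = [score_fn(i, s) for i, s in enumerate(sentences)]
    ranked = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)
    keep = sorted(ranked[:k])  # restore document order
    return [sentences[i] for i in keep]


# Toy scoring function: longer sentences score higher.
summary = extractive_summary(["a b", "a b c d", "a", "a b c"], lambda i, s: len(s.split()), 2)
```

With this toy scorer, the two longest sentences, "a b c d" and "a b c", are selected and returned in the order they appear in the input.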

4.3.1 Sentence position

This feature assumes that the most important information in a text is usually presented at the beginning. It assigns higher scores to sentences closer to the beginning of the text, using the following formula:

where i is the sequence number of the sentence in the document and n is the number of sentences in the document.

Formula 1 assigns each sentence a score from 1 to 0 according to its order of appearance in the article.
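Formula 1 itself is not reproduced in this text. As an assumption consistent with its description (scores running from 1 for the first sentence to 0 for the last), a linear form score(i) = (n − i)/(n − 1) for 1-based i can be sketched as follows. The paper's actual formula may differ.

```python
def position_scores(n):
    """Assumed linear position score: 1.0 for the first sentence, 0.0 for the last.

    Implements score(i) = (n - i) / (n - 1) for 1-based i; a single-sentence
    document gets 1.0 and an empty document gets no scores.
    """
    if n == 1:
        return [1.0]
    return [(n - i) / (n - 1) for i in range(1, n + 1)]
```

For a five-sentence document this gives 1.0, 0.75, 0.5, 0.25, and 0.0.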

4.3.2 Sentence centrality

Centrality is the most widely used feature in automatic text summarization across a variety of text types and corpora. It scores each sentence by the degree to which it represents the core information of the full-text, based on how many other sentences in the document are connected to it. There are many ways to calculate centrality. In this study, the centrality of each sentence in a document with n sentences was computed as in Formula ( 2 ) [ 39 ].

where \(i \ne j\) and \(\cos(s_i, s_j) \ge 0.16\) .

Sentence centrality is based on three factors: the similarity between a sentence \(s_i\) and the other sentences \(s_j\) in the document, the number of shared friends (n-friends) of \(s_i\) and \(s_j\), that is, sentences similar to both, and the n-grams they have in common. The resulting sum is normalized by dividing it by \(n-1\), where n is the number of sentences in the document. Only sentence pairs with \(\cos(s_i, s_j) \ge 0.16\) are counted; this threshold was determined experimentally. Accordingly,

where \(i \ne j\). Here, the number of shared friends is calculated as in Formula 3 over the sets of sentences similar to both \(s_i\) and \(s_j\), and shared n-grams are calculated as in Formula 4 using 2-grams. \(|X|\) denotes the number of elements of the set X.
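The centrality computation described above can be sketched in Python. The 0.16 cosine threshold, the three summed factors, the use of 2-grams, and the division by n − 1 come from the text. Formulas 3 and 4 are not reproduced here, so the following choices are assumptions: cosine similarity over raw term-frequency vectors, a sentence's "friends" being the other sentences whose cosine with it meets the threshold, and Jaccard-style normalization of shared friends and shared bigrams.

```python
import math
from collections import Counter

THRESHOLD = 0.16  # cosine threshold reported in the text


def cosine(a, b):
    """Cosine similarity of two token lists over term-frequency vectors."""
    ta, tb = Counter(a), Counter(b)
    dot = sum(ta[t] * tb[t] for t in ta)
    norm = math.sqrt(sum(v * v for v in ta.values())) * math.sqrt(sum(v * v for v in tb.values()))
    return dot / norm if norm else 0.0


def jaccard(x, y):
    """|X & Y| / |X | Y|, or 0.0 for two empty sets (assumed normalization)."""
    union = x | y
    return len(x & y) / len(union) if union else 0.0


def bigrams(tokens):
    return set(zip(tokens, tokens[1:]))


def centrality_scores(sentences, threshold=THRESHOLD):
    """Sum of cosine, shared friends, and shared 2-grams over sufficiently
    similar pairs (j != i, cosine >= threshold), divided by n - 1."""
    n = len(sentences)
    if n < 2:
        return [0.0] * n
    cos = [[cosine(sentences[i], sentences[j]) for j in range(n)] for i in range(n)]
    # Assumed definition: friends of s_i are the other sentences similar enough to it.
    friends = [{j for j in range(n) if j != i and cos[i][j] >= threshold} for i in range(n)]
    grams = [bigrams(s) for s in sentences]
    scores = []
    for i in range(n):
        total = 0.0
        for j in range(n):
            if j == i or cos[i][j] < threshold:
                continue
            total += cos[i][j] + jaccard(friends[i], friends[j]) + jaccard(grams[i], grams[j])
        scores.append(total / (n - 1))
    return scores
```

For the stemmed sentences ["a", "b", "c"], ["a", "b", "d"], and ["x", "y", "z"], the first two sentences each score 0.5 (cosine 2/3 plus a shared-bigram overlap of 1/3, divided by n − 1 = 2), while the unrelated third sentence scores 0.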