Volume 17 Supplement 2

Selected articles from the International Conference on Intelligent Biology and Medicine (ICIBM) 2016: medical informatics and decision making

- Open access

- Published: 05 July 2017

Evaluation of the informatician perspective: determining types of research papers preferred by clinicians

- Boshu Ru¹,

- Xiaoyan Wang² &

- Lixia Yao¹,³

BMC Medical Informatics and Decision Making volume 17, Article number: 74 (2017)

To deliver evidence-based medicine, clinicians often consult resources that are useful to their medical practice. Owing to their busy schedules, however, clinicians typically find it challenging to locate relevant resources among the rapidly growing number of journals and articles being published. A literature-recommender system may offer a solution, provided that the individual needs of clinicians can be identified and applied.

We therefore collected from the CiteULike website a sample of 96 clinicians and 6,221 scientific articles that they read. We examined the journal distributions, publication types, reading times, and geographic locations. We then compared the distributions of MeSH terms associated with these articles with those of randomly sampled MEDLINE articles, using two-sample Z-tests with multiple comparison correction, to identify the topics most relevant to clinicians.

We found that the sampled clinicians followed the latest literature in a timely manner and also read papers considered landmarks in the history of medical research. They preferred reading about discoveries from human studies over molecular-, cellular-, or animal-model-based experiments. Furthermore, the authors' country of residence may influence reading preferences, particularly for clinicians from Egypt, India, Norway, Senegal, and South Africa.

These findings provide useful guidance for developing personalized literature-recommender systems for clinicians.

Medicine is a continually changing field as knowledge of disease and health advances. In the age of translational medicine, clinicians constantly face the challenge of translating scientific evidence into routine clinical practice. Peer-reviewed scientific literature is a major, minimally biased channel through which scientists and other researchers communicate experimental discoveries, findings from rigorously conducted trials, and carefully balanced clinical guidelines. Thus, keeping up with the medical literature can help clinicians provide the best evidence-based care for their patients [1].

Nevertheless, a widely held belief is that most clinicians rarely read scientific literature because of their hectic schedules evaluating patients and completing the required paperwork. According to a survey by Saint et al., US internists reported reading medical journals for an average of 4.4 h per week in 2000 [2]. An analysis of the web logs of 55,000 Australian clinicians by Westbrook et al. revealed an average of 2.32 online literature accesses per clinician per month from October 2000 to February 2001 [3]. A 2007 survey by Tenopir et al. found that 666 participating pediatricians spent 49 to 61 h per year (roughly 0.94 to 1.2 h per week) reading journal articles [4]. In the same year, McKibbon and colleagues showed that US primary care physicians not affiliated with an academic medical center accessed an average of one online journal article per month, whereas similarly unaffiliated specialists accessed an average of 1.9 online journal articles per month [5].

Time constraints are the most commonly cited reason for the small number of articles clinicians read. In addition, access to journals and publication databases is sometimes limited to clinicians with academic affiliations, which in turn limits the number of articles they can access and read. Another consideration is whether reading scientific journals was part of everyday practice during training. Most clinicians currently in practice were trained in the pre-digital era and likely did not learn the literature-searching skills needed to stay up to date [6, 7, 8, 9]. Furthermore, they may lack the advanced technical knowledge (e.g., statistical modeling) often required to understand scientific articles and apply their findings to clinical practice [7, 10, 11].

Moreover, what clinicians seek when they read often differs from what researchers aim to achieve when they publish. A clinician usually seeks information relevant to his or her medical practice, whereas many researchers do not emphasize the clinical relevance of their work enough to address clinicians' needs [8, 10, 12]. Researchers often use specialized, nuanced language to describe complex medical discoveries, and such language can be opaque to practicing clinicians. In addition, clinicians, who require conclusive and actionable information in practice, may be frustrated by the many discrepancies and knowledge gaps in the literature [13, 14, 15, 16].

To address these issues, some researchers have sought to improve the user experience of literature search engines, for example by adding functions for sorting and clustering search results and for extracting and displaying semantic relations among concepts [17]. In addition, considerable research effort has gone into intelligent recommender systems that automatically recommend literature to users by employing content-based, collaborative, or graph-based filtering methods [18, 19, 20, 21, 22]. Although these studies used different methods, they all targeted scientists and other researchers, for whom literature review is an integral part of daily work.

Clinicians who search for and read scientific literature, on the other hand, go beyond their daily routine to satisfy their intellectual curiosity, increase their awareness of the latest scientific advances, and obtain knowledge for treating their patients. This distinction between the needs of clinicians and those of professional researchers inspired us to investigate the specific needs of clinicians and their preferences in reading literature. Our goal is to learn from clinicians what types of medical research papers they prefer to read and how they access and assimilate that knowledge.

In this study, we used the CiteULike.org website to identify a group of clinicians and the scientific papers they were likely to read. We investigated what type of job they performed, in which specialty they practiced, in which country they lived and worked, how long they had practiced, and which scientific research interested them. Such a systematic examination of clinician reading libraries is an important pre-market evaluation for developing a personalized literature-recommender system that improves clinicians' reading experience and, more broadly, promotes the reading of scientific literature.

The workflow of the study is illustrated in Fig. 1, and each step is described below.

Fig. 1: Workflow for determining the types of research papers preferred by clinicians

Data collection

We used CiteULike.org [23] to identify a sample of clinicians who read scientific literature. Since 2004, CiteULike.org has provided free online reference management services with the goal of promoting the sharing of scientific references and fostering communication among researchers. It enables registered users to add publications they like to their own libraries and to specify their research fields in their profiles. The system then groups users by research field.

Moreover, the libraries and basic profile information of CiteULike.org users are openly shared on the website, making it an ideal data source for our study. On May 1, 2014, we selected clinical medicine as the primary research field and retrieved 2,472 users. We manually verified whether each of these users was a clinician based on the combination of name, location, job title, and affiliation information provided in the profile. In this process, we eliminated 91.7% of the users because their profiles lacked so much information that we could not confirm they were clinicians.

Of the remaining 205 users, we further excluded 109 who had fewer than five articles in their libraries, because such users are less likely to be active on CiteULike and including them could complicate the analysis and the interpretation of results. After applying these stringent selection criteria, our final sample of reading clinicians comprised 96 individuals who identified themselves as clinicians and had relatively complete profile information. These 96 users cited 8,511 articles in their CiteULike libraries. For articles with PubMed IDs (PMIDs), the unique identifiers used by the PubMed search engine to access the MEDLINE bibliographic database of life sciences, we retrieved the complete abstracts in MEDLINE format. For the remaining articles, we searched PubMed by article title and retrieved the publication only when the search returned a single hit; this usually, but not always, yielded the exact article. In this process, 2,290 articles (26.9%) could not be retrieved because of missing bibliographic information, exclusion from MEDLINE, or difficulties with the title search.
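The two selection rules above, keeping only users with at least five library entries and accepting a PubMed title search only when it returns exactly one hit, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' code: the `Article` record, its field names, and the injected `search_titles` callable are hypothetical. In practice `search_titles` might wrap a query to NCBI's E-utilities ESearch service; here it is passed in so that the logic can be shown without network access.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

MIN_LIBRARY_SIZE = 5  # users with fewer library entries were excluded


@dataclass
class Article:
    # Hypothetical minimal record for one CiteULike library entry.
    title: str
    pmid: Optional[str] = None


def active_users(libraries: Dict[str, List[Article]]) -> Dict[str, List[Article]]:
    """Keep only users whose libraries hold at least MIN_LIBRARY_SIZE articles."""
    return {user: arts for user, arts in libraries.items()
            if len(arts) >= MIN_LIBRARY_SIZE}


def resolve_pmid(article: Article,
                 search_titles: Callable[[str], List[str]]) -> Optional[str]:
    """Return a PMID for the article, or None if it cannot be resolved.

    An explicit PMID is used directly. Otherwise the title is searched and the
    result is accepted only when exactly one hit comes back; zero hits or
    several hits leave the article unresolved.
    """
    if article.pmid:
        return article.pmid
    hits = search_titles(article.title)
    return hits[0] if len(hits) == 1 else None
```

Passing the search function as a parameter keeps the acceptance rule separate from the retrieval mechanism, so the rule can be checked against canned search results.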

For example, some users did not list complete article titles or other valid bibliographic information. Instead, they listed keywords meaningful only to themselves, such as “sharing and managing data,” “aging and the brain,” and “public health and Web 2.0.” These keywords were too general for PubMed to locate the corresponding articles precisely. Other articles, such as “The Dos and Don’ts of PowerPoint Presentations” and “Inside Microsoft SQL Server 7.0 (Mps),” were not indexed by MEDLINE. Meanwhile, some articles were indexed in MEDLINE but could not be retrieved through the title search field in PubMed. We ultimately identified 6,221 publications cited in the user libraries and used them in further analysis.

Content analysis by MeSH

Medical Subject Headings (MeSH) is the controlled vocabulary that the National Library of Medicine (NLM) uses to annotate the biomedical concepts and supporting facts addressed in each article indexed in the MEDLINE bibliographic database, for information-retrieval purposes [24]. Each MEDLINE article is assigned two types of MeSH terms: major terms, which represent the main topics of the article, and minor terms, which represent concepts and facts related to the experimental subjects and design attributes, such as humans or animals, adults or children, gender, and countries. MeSH annotations have been used in text mining and data mining tasks [25, 26, 27], and in our study they provided key information on the topic and content preferences of the sampled clinicians. All MeSH terms were included in the MEDLINE-format abstracts that we downloaded.

We wanted to understand whether, and how, the content and topics read by clinicians differ from those that a dummy tool with no prior knowledge of its clinician users might recommend. We therefore randomly sampled PMIDs to select 6,000 publications and compared the MeSH terms in the real clinician reading libraries with those in the randomly sampled MEDLINE articles. MeSH terms over-represented in the clinician reading libraries were expected to indicate content and topics more relevant to clinicians, which can provide useful clues for designing a personalized literature-recommender system for clinicians. We wrote Python scripts to extract and count the MeSH terms indexed in each article.

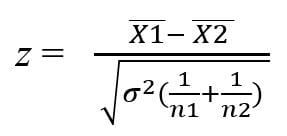

Two-sample Z-test

The two-sample Z-test is often used to test whether two groups differ significantly in the proportion of members with a given categorical attribute. For example, it can test whether the proportion of vegetarians is higher among women than among men [28]. We chose this test because we wanted to learn which content and topics (in MeSH terms) are of greater interest to clinicians. Our null hypothesis was that the frequency of MeSH term $t$ in the clinician reading libraries equals that in the randomly sampled articles recommended by the dummy tool ($H_0: f_{t,\mathrm{clinician}} = f_{t,\mathrm{random}}$). Here, frequency is defined as the number of articles in a group that were assigned term $t$ divided by the total number of articles in that group. We calculated the z-scores and two-sided p-values in Excel to test the null hypothesis.
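For readers who prefer a script to a spreadsheet, a minimal sketch of the test for a single MeSH term follows. It uses the common pooled-proportion form of the two-sample Z-test; the text does not say which variant the Excel calculation used, so that choice is an assumption. Here `x1` of `n1` clinician-library articles and `x2` of `n2` randomly sampled articles carry term $t$.

```python
import math
from typing import Tuple


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> Tuple[float, float]:
    """Pooled two-sample Z-test for equal proportions; returns (z, two-sided p)."""
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # proportion estimated under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    if se == 0:
        # Term absent from (or present in) every article of both groups: no evidence.
        return 0.0, 1.0
    z = (p1 - p2) / se
    # For a standard normal Z, P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A positive z indicates that the term is more frequent in the clinician libraries than in the random sample.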

Multiple comparison correction

Because we performed Z-tests for thousands of MeSH terms, some p-values would fall below 0.05 by chance alone [29]. To address this multiple comparison problem, we applied the Benjamini-Hochberg false discovery rate (FDR) controlling procedure [30] in Excel, which sets more stringent p-value thresholds than 0.05.
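The Benjamini-Hochberg step-up procedure can be sketched as below. This is a generic implementation of the published procedure rather than the authors' spreadsheet, and the default level `q = 0.05` is an assumption, since the target FDR is not stated here. The p-values are ranked, the largest rank $k$ with $p_{(k)} \le (k/m)\,q$ is found, and every hypothesis ranked at or below $k$ is rejected.

```python
from typing import List, Sequence


def benjamini_hochberg(p_values: Sequence[float], q: float = 0.05) -> List[bool]:
    """Benjamini-Hochberg step-up procedure; True means H0 is rejected.

    Results are returned in the same order as the input p-values.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Largest 1-based rank k whose p-value satisfies p_(k) <= (k / m) * q.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank / m * q:
            k_max = rank
    # Reject every hypothesis ranked at or below k_max, even if an individual
    # p-value missed its own rank threshold (the "step-up" property).
    reject = [False] * m
    for idx in order[:k_max]:
        reject[idx] = True
    return reject
```

The effective per-term threshold is therefore data-dependent and, for many tests, much smaller than 0.05.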

We first examined the professional backgrounds of the 96 sampled clinicians. Of these, 58 were practicing doctors (60.4%), 19 were medical school faculty members (19.8%), 12 were medical doctors in atypical career paths, such as managerial or consulting positions in various healthcare organizations (12.5%), five were students pursuing post-graduate medical studies (5.2%), and two were practicing nurses (2.1%). We also evaluated how long the clinicians had practiced medicine. For each clinician, we calculated the number of years since graduation from professional school. The number of years ranges from 1 to 45, with an average of 16.0 (Fig. 2a).

Fig. 2: Demographic information for the sampled clinicians. (a) Histogram of years of practice after medical school graduation; (b) distribution of specialties; (c) distribution of countries of residence

In terms of specialty, 30 clinicians specialized in internal medicine (31.3%), 12 in surgery (12.5%), 6 in pediatrics (6.3%), 5 in psychiatry (5.2%), and 25 in other medical specialties (26.0%; individual specialties and their clinician counts are listed in Fig. 2b); 11 were not actively seeing patients (11.5%), and 7 did not disclose a specialty (7.3%). Geographically (Fig. 2c), 33 clinicians resided in the United States (34.4%), 16 in the United Kingdom (16.7%), five in Germany (5.2%), five in India (5.2%), four in France (4.2%), and the remaining 23 in other countries (24.0%; see Additional file 1: Table S1).

We then examined the clinician reading libraries. First, we plotted a histogram of the publication years of all 6,221 publications (Fig. 3a). Of the articles read by clinicians, 89.9% were published after 2000, with the peak between 2008 and 2010. The counts drop markedly in both 2013 and 2014. The 2013 decrease may be because many users were no longer active on the website, whereas the 2014 count is incomplete because we collected the data from CiteULike in May of that year.

Fig. 3: Temporal analysis of articles read by clinicians. (a) Histogram of articles published each year; (b) histogram of article age at the time of reading (age = year read - publication year)

To examine how soon after publication clinicians read an article, we plotted a histogram of article age at reading time, defined as the year in which a clinician read the article minus its publication year. Figure 3b shows that the largest number of articles were read in their year of publication, with a steady decline as article age increases. This result is strong evidence that the sampled clinicians do read the latest publications.
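The age computation behind Fig. 3b is simple arithmetic; a minimal sketch is given below. The `(year_read, publication_year)` pairs are hypothetical inputs: the text does not say how the reading year was obtained, and one plausible proxy is the year an article was posted to the user's CiteULike library. The helper `share_within` is likewise illustrative, showing how a statement such as "usually read within the first 1 or 2 years" could be quantified.

```python
from collections import Counter
from typing import Iterable, Tuple


def age_histogram(readings: Iterable[Tuple[int, int]]) -> Counter:
    """Count articles by age at reading time: age = year read - publication year.

    Each reading is a (year_read, publication_year) pair.
    """
    return Counter(year_read - pub_year for year_read, pub_year in readings)


def share_within(hist: Counter, max_age: int) -> float:
    """Fraction of articles read at most max_age years after publication.

    Negative ages (an entry saved before its official publication year) count as recent.
    """
    total = sum(hist.values())
    if total == 0:
        return 0.0
    return sum(c for age, c in hist.items() if age <= max_age) / total
```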

Interestingly, nine clinicians also read 51 papers published more than 30 years ago. These papers appeared in journals with an average impact factor of 10.84 and have been cited an average of 573.88 times according to Google Scholar; they can thus be considered landmark articles in medical research. For example, “Studies of Illness in the Aged. Index of ADL: A Standardized Measure of Biological and Psychological Function” and “Functional Evaluation: The Barthel Index” were published in the Journal of the American Medical Association (JAMA) in 1963 and the Maryland State Medical Journal in 1965, respectively, and have been cited more than 7,000 and 9,400 times according to Google Scholar. These are the original publications of two of the most widely adopted instruments for evaluating functional status in the elderly population.

We summarized the publication types of the 6,221 articles and found that the majority were journal articles, including original research articles (3,698 or 59.4%), reviews (1,093 or 17.6%), reports of clinical trials (508 or 8.2%), case reports (259 or 4.2%), evaluation and validation studies (185 or 3.0%), comments (147 or 2.4%), and clinical guidelines (29 or 0.5%). The remaining 4.9% of articles belong to opinion and announcement categories, such as letters, editorials, and breaking news (see Additional file 1: Table S2).

We also investigated which journals clinicians read most often and found that the 6,221 articles are widely distributed among 1,664 journals. Nearly 50% (823) of the journals are cited only once in the clinician reading libraries, whereas 53 journals are cited 20 or more times (Table 1). Such a sparse distribution suggests that the role of journals in a reliable recommender system model needs further evaluation.
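The journal figures above (journals cited once, journals cited 20 or more times) come from a simple tally over the journal of each article. A minimal sketch follows; it assumes a hypothetical input of one already-normalized journal name per article, since variant spellings and abbreviations of the same journal would otherwise be counted separately.

```python
from collections import Counter
from typing import Dict, Iterable


def journal_summary(journals: Iterable[str], min_count: int = 20) -> Dict[str, object]:
    """Summarize how articles are spread across journals (one name per article)."""
    counts = Counter(journals)
    return {
        "n_journals": len(counts),
        "cited_once": sum(1 for c in counts.values() if c == 1),
        "frequent": sorted(j for j, c in counts.items() if c >= min_count),
    }
```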

We then examined which journals clinicians in different specialty groups read (see Additional file 1: Table S3). The results indicate that prestige was not the most important factor when the specialty groups chose which scientific journals to read. Specialists tend to read journals closely related to their practice fields rather than high-impact-factor medical journals that target a broader readership. For instance, Arthroscopy: The Journal of Arthroscopic & Related Surgery is the most widely read journal among surgeons, whereas The Lancet ranks only 108th.

To determine whether the country of residence and the language or culture of practice affect clinician reading preferences, we analyzed the association between the clinicians' countries of residence and those of the authors of the articles in their libraries. If an article had authors from different countries, we used the first author's country of residence. In the heat map in Fig. 4, each row represents a clinician country of residence and each column an author country of residence. Within each row, the cell color ranges from green (fewest articles) to red (most articles). Articles by American and British authors are widely read by clinicians in our sample, which is unsurprising given that these two countries publish a large amount of medical research. However, clinicians residing in Egypt, India, Norway, Senegal, and South Africa prefer works by authors from their own countries.
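The per-row colour scale described above amounts to a cross-tabulation followed by row-wise min-max scaling, sketched below. The input format, one `(clinician_country, first_author_country)` pair per library article, is a hypothetical one, and including zero cells when computing each row's minimum is an assumption about how the heat map was drawn.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def row_normalized_counts(pairs: Iterable[Tuple[str, str]]) -> Dict[str, Dict[str, float]]:
    """Cross-tabulate (clinician_country, first_author_country) pairs.

    Each row is min-max scaled to [0, 1]: 0 maps to the row minimum (green)
    and 1 to the row maximum (red).
    """
    counts: Dict[str, Counter] = defaultdict(Counter)
    for clinician_country, author_country in pairs:
        counts[clinician_country][author_country] += 1
    columns = sorted({a for row in counts.values() for a in row})
    table: Dict[str, Dict[str, float]] = {}
    for clinician_country, row in counts.items():
        values = [row[a] for a in columns]  # Counter returns 0 for missing columns
        lo, hi = min(values), max(values)
        span = hi - lo
        table[clinician_country] = {
            a: (row[a] - lo) / span if span else 0.0 for a in columns
        }
    return table
```

Scaling each row separately makes a clinician country's own preferences visible even when its absolute article counts are small.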

Fig. 4: Clinician country of residence versus author country of residence in the reading libraries. Each row represents a country of residence of the sampled clinicians; each column represents a country of residence of the authors of the cited articles. Within each row, the cell color changes from green (minimum count) to red (maximum count)

We performed a statistical analysis to examine whether the topics of articles read by the sampled clinicians, expressed as MeSH terms, differed from those recommended by the dummy tool without prior knowledge of the users. We found that 119 major MeSH terms and 288 minor MeSH terms have significantly different occurrence frequencies in the two groups (see Additional file 1: Tables S4 and S5). The MeSH terms with the largest frequency differences between the two groups (Table 2) show clear distinctions. Clinicians read more about topics relating to patient issues and needs, such as pain, hip joints, drug therapy, surgery, arthroscopy, and the therapeutic uses and adverse effects of analgesics. They prefer meta-analyses, literature reviews, and quality-of-life research. Moreover, they are interested in research on human subjects rather than molecule-, cell-, or animal-based studies, likely because human-based research is more relevant to treating their patients.

Discussion

The proliferation of scientific publications and the limited reading time of clinicians call for methods that enable clinicians to quickly identify the latest results from scientific publications and thus practice evidence-based medicine more effectively. In the past decade, many clinicians have turned to digital resources for the most recent and relevant findings and guidelines. Previous studies show that 60 to 70% of US clinicians use the Internet for professional purposes, and searching for literature in journals and databases is one of their most frequent online activities [31]. However, the overwhelming amount of information, coupled with readers' inadequate search skills, remains a major obstacle to clinicians' access to research literature.

In recent years, several literature-reading applications have been developed that let clinicians access research articles on smartphones and tablets [32], so that the fragmented time between patient care activities can be better used. Some of them provide paper recommendations. For example, Read by QxMD [33] suggests the latest research papers based on users' specialties and their chosen keywords and journals. Docphin [34] tracks new and landmark papers related to medical topics and authors specified by users. The mobile application offered by UpToDate.com [35] populates users' reading lists with articles selected by a board of medical experts. None of these implementations goes beyond keyword-based or expert-curated recommendations, and none has been fully adopted by clinicians. A truly personalized literature-recommender system that lowers the barriers to accessing and reading research literature requires further study of clinicians' reading preferences and habits, which motivated this work.

Our study extends previous research [2, 3, 4, 5, 7, 8, 9, 31, 36] by characterizing clinician reading preferences in terms of both bibliographic features and content. We used CiteULike, a contemporary data source, to identify the reading materials favored by the site's clinician users. Its publicly available user profiles and reading libraries make it more suitable for this study than other websites such as Mendeley. Moreover, compared with the methods widely used in previous studies [9], such as interviews, surveys, and tracking library access by limited samples within an institution, CiteULike offers two advantages. First, data collection is non-invasive, so the response bias common in interview- or survey-based studies is avoided. Second, the sampled CiteULike users represent clinicians from far more diverse geographic locations and medical specialties than those in a single hospital or institution.

In this study, we found that research articles published in peer-reviewed journals are the type of publication clinicians read most, and that articles are usually read within the first 1 or 2 years after publication. Landmark papers in the history of medical research also form a significant category. Reviews, reports of clinical trials, meta-analyses, and case reports are likewise well represented across specialties and countries of practice.

When clinicians select reading material, publication in a prestigious journal with a high impact factor appears to carry less weight than the paper's topic and experimental design. The authors' country of residence also appears to play a role in reading preference: for example, some readers from Egypt, India, Norway, Senegal, and South Africa seem to prefer works by authors from their own countries (Fig. 4).

In the content analysis, we found that patient-oriented topics, meta-analyses, literature reviews, studies involving human subjects, and quality-of-life research are significantly more prevalent in clinicians' reading choices than in randomly sampled MEDLINE publications.

These results provide important insights for designing a personalized literature-recommender system that would be better received by clinicians eager to learn about the latest scientific discoveries relevant to patient care. For example, such a system could recommend not only the latest research articles in peer-reviewed journals but also landmark research works. Users' language and cultural background, together with the significantly over-represented MeSH terms, could be combined with specialty information to optimize recommendations for different user groups.
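To make the design suggestion above more concrete, the sketch below combines the signals this study identified (over-represented MeSH terms, specialty, recency, landmark status, and the authors' country) into a single ranking score. It is purely hypothetical: the `Candidate` and `UserProfile` types, the linear form, the `1 / (1 + age)` recency decay, and all weights are illustrative assumptions, not something the study built or evaluated. In practice the term weights might be derived from the Z-test results, for example from the z-scores of significant terms.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass
class Candidate:
    # Hypothetical article features a recommender might have available.
    mesh_terms: Set[str]
    publication_year: int
    is_landmark: bool = False
    author_country: str = ""


@dataclass
class UserProfile:
    specialty_terms: Set[str]  # hypothetical: MeSH terms tied to the user's specialty
    country: str = ""


def score(article: Candidate, user: UserProfile, term_weights: Dict[str, float],
          current_year: int, w_recency: float = 1.0, w_landmark: float = 0.5,
          w_country: float = 0.5) -> float:
    """Illustrative linear score; every weight is a placeholder, not a fitted value."""
    # Reward terms over-represented among clinicians, penalize under-represented ones.
    topical = sum(term_weights.get(t, 0.0) for t in article.mesh_terms)
    # One extra point per MeSH term shared with the user's specialty.
    topical += len(article.mesh_terms & user.specialty_terms)
    age = max(current_year - article.publication_year, 0)
    recency = w_recency / (1 + age)  # favors the latest publications
    landmark = w_landmark if article.is_landmark else 0.0  # keeps landmark papers in view
    local = w_country if user.country and article.author_country == user.country else 0.0
    return topical + recency + landmark + local
```

A linear score keeps each signal's contribution inspectable, which would make it easier to check whether, say, the country term is helping or hurting for a given user group.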

However, the findings of this study must be considered in light of the following limitations. First, the sample of clinicians was small, and the distribution of their demographic and professional attributes may not represent the entire clinician population. Second, we inferred the reading preferences of the sampled clinicians from the articles cited in their CiteULike libraries, assuming that the clinicians had read those articles, which might not always be true. Third, we randomly selected 6,000 papers from the MEDLINE bibliographic database by PMID to represent the possible recommendations of a dummy tool. This collection is not ideal for comparison because it may include papers that clinicians would like to read, which narrows the differences between the groups. Consequently, we may have missed meaningful MeSH terms whose differences did not reach statistical significance; in other words, we traded recall for precision when identifying relevant MeSH terms. Finally, the content analysis was limited to user demographics and the bibliographic features documented by MEDLINE and CiteULike, such as practice specialty, publication year, and MeSH terms. An analysis of full-text articles would likely provide a more comprehensive understanding of clinician preferences, but such a study would demand advanced text mining and natural language processing technologies.

Conclusions

Despite its limitations, the present study offers findings on clinician reading preferences that can inform the development of a personalized literature-recommender system for clinicians working on the front line of patient care. Further research and development in this area are needed so that clinicians can access the most relevant scientific results more effectively and conveniently. In addition, connecting clinicians and researchers for collaboration through a publication-based social network is another direction worth exploring. Existing social networking sites such as ResearchGate and Academia.edu have attracted a great number of scientists, but no online research community connecting researchers and clinicians is yet available. Such a network could improve communication and collaboration between clinicians and medical scientists, so that scientific breakthroughs reach clinical settings faster and medical scientists can better learn about, and focus on, patient-relevant problems.

Abbreviations

- MeSH: Medical subject headings
- NLM: National Library of Medicine
- PMID: The unique identifier number for each article entered into the PubMed system

English RA, Lebovitz Y, Giffin RB. Transforming clinical research in the United States: challenges and opportunities: workshop summary. Washington DC: National Academies Press (US); 2010.

Google Scholar

Saint S, Christakis DA, Saha S, Elmore JG, Welsh DE, Baker P, et al. Journal reading habits of internists. J Gen Intern Med. 2000;15:881–4.

Article CAS PubMed PubMed Central Google Scholar

Westbrook JI, Gosling AS, Coiera E. Do Clinicians Use Online Evidence to Support Patient Care? A Study of 55,000 Clinicians. J Am Med Inform Assoc. 2004;11(2):113–20. doi: 10.1197/jamia.M1385 .

Article PubMed PubMed Central Google Scholar

Tenopir C, King DW, Clarke MT, Na K, Zhou X. Journal reading patterns and preferences of pediatricians. J Med Libr Assoc. 2007;95(1):56–63.

PubMed PubMed Central Google Scholar

McKibbon KA, Haynes RB, McKinlay RJ, Lokker C. Which journals do primary care physicians and specialists access from an online service? J Med Libr Assoc. 2007;95(3):246–54. doi: 10.3163/1536-5050.95.3.246 .

Neale T. How doctors can stay up to date with current medical information. MedPage Today. 2009. http://www.kevinmd.com/blog/2009/12/doctors-stay-date-current-medical-information.html . Accessed 1 Aug 2014.

Majid S, Foo S, Luyt B, Zhang X, Theng Y-L, Chang Y-K, et al. Adopting evidence-based practice in clinical decision making: nurses’ perceptions, knowledge, and barriers. J Med Libr Assoc. 2011;99(3):229–36.

Basow D. Use of evidence-based resources by clinicians improves patient outcomes. Minneapolis: Wolters Kluwer Health, UpToDate.com; 2010. http://www.uptodate.com/sites/default/files/cms-files/landing-pages/EBMWhitePaper.pdf . Accessed 6 Apr 2014.

Clarke MA, Belden JL, Koopman RJ, Steege LM, Moore JL, Canfield SM, et al. Information needs and information-seeking behaviour analysis of primary care physicians and nurses: a literature review. Health Info Libr J. 2013;30(3):178–90. doi: 10.1111/hir.12036 .

Article PubMed Google Scholar

Barraclough K. Why doctors don’t read research papers. BMJ. 2004;329(7479):1411. doi: 10.1136/bmj.329.7479.1411-a .

Article PubMed Central Google Scholar

Homer-Vanniasinkam S, Tsui J. The continuing challenges of translational research: clinician-scientists’ perspective. Cardiol Res Pract. 2012;2012:246710. doi: 10.1155/2012/246710 .

O’Donnell M. Why doctors don’t read research papers. BMJ. 2005;330(7485):256. doi: 10.1136/bmj.330.7485.256-a .

Becker JE, Krumholz HM, Ben-Josef G, Ross JS. Reporting of results in ClinicalTrials.gov and high-impact journals. J Am Med Assoc. 2014;311(10):1063–5. doi: 10.1001/jama.2013.285634 .

Article CAS Google Scholar

Hoenselaar R. Saturated fat and cardiovascular disease: the discrepancy between the scientific literature and dietary advice. Nutrition. 2012;28(2):118–23.

Sherwin BB. The critical period hypothesis: can it explain discrepancies in the oestrogen-cognition literature? J Neuroendocrinol. 2007;19(2):77–81. doi: 10.1111/j.1365-2826.2006.01508.x .

Article CAS PubMed Google Scholar

Glasziou P, Altman DG, Bossuyt P, Boutron I, Clarke M, Julious S, et al. Reducing waste from incomplete or unusable reports of biomedical research. Lancet. 2014;383(9913):267–76. doi: 10.1016/S0140-6736(13)62228-X .

Lu Z. PubMed and beyond: a survey of web tools for searching biomedical literature. J Biol Databases Curation. 2011;2011:baq036. doi: 10.1093/database/baq036 .

Wang C, Blei DM. Collaborative topic modeling for recommending scientific articles, Proceedings of the 17th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. San Diego: ACM; 2011. p. 448-56.

Huang Z, Chung W, Ong T-H, Chen H. A graph-based recommender system for digital library, Proceedings of the 2nd ACM/IEEE-CS Joint Conference on Digital Libraries. Portland: ACM; 2002. p. 65-73.

Hess C, Schlieder C. Trust-based recommendations for documents. AI Commun. 2008;21(2):145–53.

Gipp B, Beel J, Hentschel C. Scienstein: a research paper recommender system. International Conference on Emerging Trends in Computing. 2009. p. 309–15.

Beel J, Gipp B, Langer S, Breitinger C. Research paper recommender systems: a literature survey. Int J Digit Libr. 2015;17:305–38. doi: 10.1007/s00799-015-0156-0 .


CiteULike. Frequently asked questions. 2014. http://www.citeulike.org/faq/faq.adp . Accessed 4 Apr 2014.

Lowe HJ, Barnett GO. Understanding and using the medical subject headings (MeSH) vocabulary to perform literature searches. J Am Med Assoc. 1994;271(14):1103–8.

Li S, Shin HJ, Ding EL, Van Dam RM. Adiponectin levels and risk of type 2 diabetes: a systematic review and meta-analysis. J Am Med Assoc. 2009;302(2):179–88.

Hristovski D, Peterlin B, Mitchell JA, Humphrey SM. Using literature-based discovery to identify disease candidate genes. Int J Med Inform. 2005;74(2):289–98.

Cooper GF, Miller RA. An experiment comparing lexical and statistical methods for extracting MeSH terms from clinical free text. J Am Med Inform Assoc. 1998;5(1):62–75.

Daniel WW, Cross CL. Biostatistics: a foundation for analysis in the health sciences. 10th ed. Hoboken (NJ): Wiley; 2013.

Miller RG. Simultaneous statistical inference. New York: Springer; 1966.

Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol. 1995;57(1):289–300.

Masters K. For what purpose and reasons do doctors use the Internet: a systematic review. Int J Med Inform. 2008;77(1):4–16.

Capdarest-Arest N, Glassman NR. Keeping up to date: apps to stay on top of the medical literature. J Electron Res Med Libraries. 2015;12(3):171–81.

QxMD Software Inc. Read by QxMD. 2016. http://www.qxmd.com/apps/read-by-qxmd-app . Accessed 12 Dec 2016.

Johnson T. Docphin. J Med Libr Assoc. 2014;102(2):137. doi: 10.3163/1536-5050.102.2.022 .

UpToDate.com. About us. 2016. http://www.uptodate.com/home/about-us . Accessed 12 Dec 2016.

Bennett NL, Casebeer LL, Kristofco R, Collins BC. Family physicians’ information seeking behaviors: a survey comparison with other specialties. BMC Med Inform Decis Mak. 2005;5(1):9.


Acknowledgments

The authors thank Lejla Hadzikadic Gusic for sharing her valuable perspectives on this topic as a practicing oncologist, Melanie Sorrell, the librarian in liaison to biological sciences and informatics subjects at the University of North Carolina at Charlotte, and Jennifer W. Weller, biological scientist, for her feedback on the manuscript.

Publication of this article was funded by LY’s startup funding at UNC Charlotte and Mayo Clinic, MN.

Availability of data and materials

The de-identified clinicians’ reading data from this study will be made available to academic users upon request.

Authors’ contributions

BR performed the data collection and data analysis. LY conceived the idea and supervised the entire project. BR and LY drafted the manuscript. XW offered advice on the study design and provided feedback on the manuscript. All authors read and approved the final manuscript.

Competing interests

The authors declare that they have no competing interests.

Consent for publication

Not applicable.

Ethics approval and consent to participate

In this study, we mined only publicly available information from the CiteULike website, without interacting with, intervening in, or manipulating/changing the website’s environment. The study does not include “human subject” data and was approved by the Office of Research and Compliance at UNC Charlotte without an IRB requirement.

About this supplement

This article has been published as part of BMC Medical Informatics and Decision Making Volume 17 Supplement 2, 2017: Selected articles from the International Conference on Intelligent Biology and Medicine (ICIBM) 2016: medical informatics and decision making. The full contents of the supplement are available online at https://bmcmedinformdecismak.biomedcentral.com/articles/supplements/volume-17-supplement-2 .

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Author information

Authors and affiliations

Department of Software and Information Systems, The University of North Carolina at Charlotte, Charlotte, NC, 28223, USA

Boshu Ru & Lixia Yao

Department of Family Medicine and Center for Quantitative Medicine, The University of Connecticut Health Center, Farmington, CT, 06030, USA

Xiaoyan Wang

Department of Health Sciences Research, Mayo Clinic, Rochester, MN, 55905, USA

Lixia Yao

Corresponding author

Correspondence to Lixia Yao.

Additional file

Additional file 1: Table S1. Country of residence distributions of the clinicians. Table S2. Publication type distributions of the articles read by the clinicians. Table S3. Times of the journals read by the clinicians in each medical specialty group. Table S4. List of major MeSH terms having significantly different frequencies between clinicians’ reading libraries and a random sample. Table S5. List of minor MeSH terms having significantly different frequencies between clinicians’ reading libraries and a random sample. (XLS 313 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.


About this article

Cite this article.

Ru, B., Wang, X. & Yao, L. Evaluation of the informatician perspective: determining types of research papers preferred by clinicians. BMC Med Inform Decis Mak 17 (Suppl 2), 74 (2017). https://doi.org/10.1186/s12911-017-0463-z


Published : 05 July 2017

DOI : https://doi.org/10.1186/s12911-017-0463-z


- Clinicians’ reading preference

- Literature recommender systems

BMC Medical Informatics and Decision Making

ISSN: 1472-6947


- First Online: 27 December 2012


- Randall Schumacker 3 &

- Sara Tomek 3

216k Accesses

Many research questions involve testing differences between two population proportions (percentages). For example: Is there a significant difference between the proportions of girls and boys who smoke cigarettes in high school? Is there a significant difference in the proportions of foreign and domestic automobile sales? Is there a significant difference in the proportions of girls and boys passing the Iowa Test of Basic Skills? These research questions involve testing the differences in population proportions between two independent groups. Other types of research questions can involve differences in population proportions between related or dependent groups. For example: Is there a significant difference in the proportion of adults smoking cigarettes before and after attending a stop-smoking clinic? Is there a significant difference in the proportion of foreign automobiles sold in the U.S. between the years 1999 and 2000? Is there a significant difference in the proportion of girls passing the Iowa Test of Basic Skills between the years 1980 and 1990? Research questions involving differences in independent and dependent population proportions can be tested using a z-test statistic. Unfortunately, these types of tests are not available in most statistical packages, so you will need to use a calculator or spreadsheet program to conduct the test.
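Because the paragraph notes that these tests often have to be computed by hand, here is a minimal Python sketch of both cases using only the standard library. It assumes the usual pooled z statistic for two independent proportions and, for dependent proportions, the McNemar-style z computed from the two discordant counts. The chapter's own formulas may be written differently, and the function names and example counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z(x1, n1, x2, n2):
    """Independent groups: pooled two-proportion z-test (two-sided p-value)."""
    p1, p2 = x1 / n1, x2 / n2
    p_pool = (x1 + x2) / (n1 + n2)  # common proportion assumed under H0: p1 == p2
    se = sqrt(p_pool * (1 - p_pool) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def paired_proportion_z(b, c):
    """Dependent groups: McNemar-style z from the discordant counts.

    b = subjects who changed one way (e.g. smoked before, not after),
    c = subjects who changed the other way (did not smoke before, did after).
    Subjects who did not change do not enter the statistic.
    """
    z = (b - c) / sqrt(b + c)
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts, for illustration only
z_ind, p_ind = two_proportion_z(30, 100, 20, 100)
z_dep, p_dep = paired_proportion_z(25, 10)
print(f"independent: z = {z_ind:.3f}, p = {p_ind:.4f}")
print(f"dependent:   z = {z_dep:.3f}, p = {p_dep:.4f}")
```

The two functions differ in what they treat as the unit of comparison. The independent version compares two separate samples, while the dependent version uses only the people whose status changed between the two measurements.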


Author information

Authors and affiliations

University of Alabama, Tuscaloosa, AL, USA

Randall Schumacker & Sara Tomek



Copyright information

© 2013 Springer Science+Business Media New York

About this chapter

Schumacker, R., Tomek, S. (2013). z-Test. In: Understanding Statistics Using R. Springer, New York, NY. https://doi.org/10.1007/978-1-4614-6227-9_9


DOI : https://doi.org/10.1007/978-1-4614-6227-9_9

Published : 27 December 2012

Publisher Name : Springer, New York, NY

Print ISBN : 978-1-4614-6226-2

Online ISBN : 978-1-4614-6227-9

eBook Packages: Mathematics and Statistics (R0)


Hypothesis testing I: proportions

Affiliation

- 1 Department of Radiology, Brigham and Women's Hospital, Harvard Medical School, Boston, MA 02115, USA.

- PMID: 12601204

- DOI: 10.1148/radiol.2263011500

Statistical inference involves two analysis methods: estimation and hypothesis testing. This article covers hypothesis testing. Specifically, Z tests of proportion are highlighted and illustrated with imaging data from two previously published clinical studies. First, to evaluate the relationship between nonenhanced computed tomographic (CT) findings and clinical outcome, the authors demonstrate the use of the one-sample Z test in a retrospective study of patients who had ureteral calculi. Second, the authors use the two-sample Z test to differentiate between primary and metastatic ovarian neoplasms in the diagnosis and staging of ovarian cancer. These data are based on a subset of cases from a multi-institutional ovarian cancer trial conducted by the Radiologic Diagnostic Oncology Group, in which the roles of CT, magnetic resonance imaging, and ultrasonography (US) were evaluated. The statistical formulas used for these analyses are explained and demonstrated. These methods may enable systematic analysis of proportions and may be applied to many other radiologic investigations.
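The abstract describes the one-sample Z test of proportion but does not give the formula. The minimal Python sketch below assumes the standard large-sample form z = (p̂ − p0) / √(p0(1 − p0)/n) with no continuity correction. It does not reproduce the article's own worked formulas, and the function name and counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_proportion_z(x, n, p0):
    """Two-sided one-sample Z test of a proportion against a hypothesized p0."""
    p_hat = x / n
    se = sqrt(p0 * (1 - p0) / n)  # standard error under H0 uses p0, not p_hat
    z = (p_hat - p0) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 60 of 100 patients with a given finding, tested against p0 = 0.5
z, p = one_sample_proportion_z(60, 100, 0.5)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Because the standard error is computed from p0, the test follows the usual convention of evaluating the sampling variability under the null hypothesis.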


Grants and funding

- U-01 CA9398-03/CA/NCI NIH HHS/United States


Statistics By Jim

Making statistics intuitive

Z Test: Uses, Formula & Examples

By Jim Frost

What is a Z Test?

Use a Z test when you need to compare group means. Use the 1-sample analysis to determine whether a population mean is different from a hypothesized value. Or use the 2-sample version to determine whether two population means differ.

A Z test is a form of inferential statistics . It uses samples to draw conclusions about populations.

For example, use Z tests to assess the following:

- One sample : Do students in an honors program have an average IQ score different than a hypothesized value of 100?

- Two sample : Do two IQ boosting programs have different mean scores?

In this post, learn about when to use a Z test vs T test. Then we’ll review the Z test’s hypotheses, assumptions, interpretation, and formula. Finally, we’ll use the formula in a worked example.

Related post: Difference between Descriptive and Inferential Statistics

Z test vs T test

Z tests and t tests are similar. They both assess the means of one or two groups, have similar assumptions, and allow you to draw the same conclusions about population means.

However, there is one critical difference.

Z tests require you to know the population standard deviation, while t tests use a sample estimate of the standard deviation. Learn more about Population Parameters vs. Sample Statistics.

In practice, analysts rarely use Z tests because it’s rare that they’ll know the population standard deviation. It’s even rarer that they’ll know it and yet need to assess an unknown population mean!

A Z test is often the first hypothesis test students learn because its results are easier to calculate by hand and it builds on the standard normal distribution that they probably already understand. Additionally, students don’t need to know about the degrees of freedom.

Z and T test results converge as the sample size approaches infinity. Indeed, for sample sizes greater than 30, the differences between the two analyses become small.

William Sealy Gosset developed the t test specifically to account for the additional uncertainty associated with smaller samples. Conversely, when you run a Z test with a sample estimate of the standard deviation in place of the population value, it is too sensitive to mean differences in smaller samples. It can then produce statistically significant results incorrectly (i.e., false positives).
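To see the false-positive problem concretely, the following Python sketch simulates data where the null hypothesis is true. It then runs a "Z test" that plugs the sample standard deviation into the Z formula. The function name, replication count, and seed are arbitrary choices for illustration. With very small samples, the rejection rate at the ±1.960 cutoff should land well above the nominal 5% (for n = 5, theory puts it near 12%). It should approach 5% as the sample size grows.

```python
import random
from math import sqrt
from statistics import mean, stdev


def z_test_false_positive_rate(n, reps=10000, crit=1.960, seed=1):
    """Share of two-sided 'Z tests' that reject a TRUE null hypothesis
    when the sample SD is used in place of the population sigma."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # H0 is true: mu = 0
        z = mean(sample) / (stdev(sample) / sqrt(n))      # sample SD plugged in
        if abs(z) > crit:
            rejections += 1
    return rejections / reps


for n in (5, 100):
    print(n, z_test_false_positive_rate(n))
```

A t test avoids this inflation by comparing the same ratio against a t distribution whose tails are heavier for small n.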

When to use a T Test vs Z Test

Let’s put a button on it.

When you know the population standard deviation, use a Z test.

When you have a sample estimate of the standard deviation, which will be the vast majority of the time, the best statistical practice is to use a t test regardless of the sample size.

However, the difference between the two analyses becomes trivial when the sample size exceeds 30.

Learn more in T-Test Overview: How to Use & Examples and How T-Tests Work.

Z Test Hypotheses

This analysis uses sample data to evaluate hypotheses that refer to population means (µ). The hypotheses depend on whether you’re assessing one or two samples.

One-Sample Z Test Hypotheses

- Null hypothesis (H₀): The population mean equals a hypothesized value (µ = µ₀).

- Alternative hypothesis (Hₐ): The population mean DOES NOT equal a hypothesized value (µ ≠ µ₀).

When the p-value is less than or equal to your significance level (e.g., 0.05), reject the null hypothesis. The difference between your sample mean and the hypothesized value is statistically significant. Your sample data support the notion that the population mean does not equal the hypothesized value.

Related posts: Null Hypothesis: Definition, Rejecting & Examples and Understanding Significance Levels

Two-Sample Z Test Hypotheses

- Null hypothesis (H₀): Two population means are equal (µ₁ = µ₂).

- Alternative hypothesis (Hₐ): Two population means are not equal (µ₁ ≠ µ₂).

Again, when the p-value is less than or equal to your significance level, reject the null hypothesis. The difference between the two means is statistically significant. Your sample data support the idea that the two population means are different.

These hypotheses are for two-sided analyses. You can use one-sided, directional hypotheses instead. Learn more in my post, One-Tailed and Two-Tailed Hypothesis Tests Explained.

Related posts: How to Interpret P Values and Statistical Significance

Z Test Assumptions

For reliable results, your data should satisfy the following assumptions:

You have a random sample

Drawing a random sample from your target population helps ensure that the sample represents the population. Representative samples are crucial for accurately inferring population properties. The Z test results won’t be valid if your data do not reflect the population.

Related posts: Random Sampling and Representative Samples

Continuous data

Z tests require continuous data. Continuous variables can assume any numeric value, and the scale can be divided meaningfully into smaller increments, such as fractional and decimal values. For example, weight, height, and temperature are continuous.

Other analyses can assess additional data types. For more information, read Comparing Hypothesis Tests for Continuous, Binary, and Count Data.

Your sample data follow a normal distribution, or you have a large sample size

All Z tests assume your data follow a normal distribution. However, due to the central limit theorem, you can ignore this assumption when your sample is large enough.

The following sample size guidelines indicate when normality becomes less of a concern:

- One-Sample: 20 or more observations.

- Two-Sample: At least 15 in each group.

Related posts: Central Limit Theorem and Skewed Distributions

Independent samples

For the two-sample analysis, the groups must contain different sets of items. This analysis compares two distinct samples.

Related post: Independent and Dependent Samples

Population standard deviation is known

As I mention in the Z test vs T test section, use a Z test when you know the population standard deviation. However, when n > 30, the difference between the analyses becomes trivial.

Related post: Standard Deviations

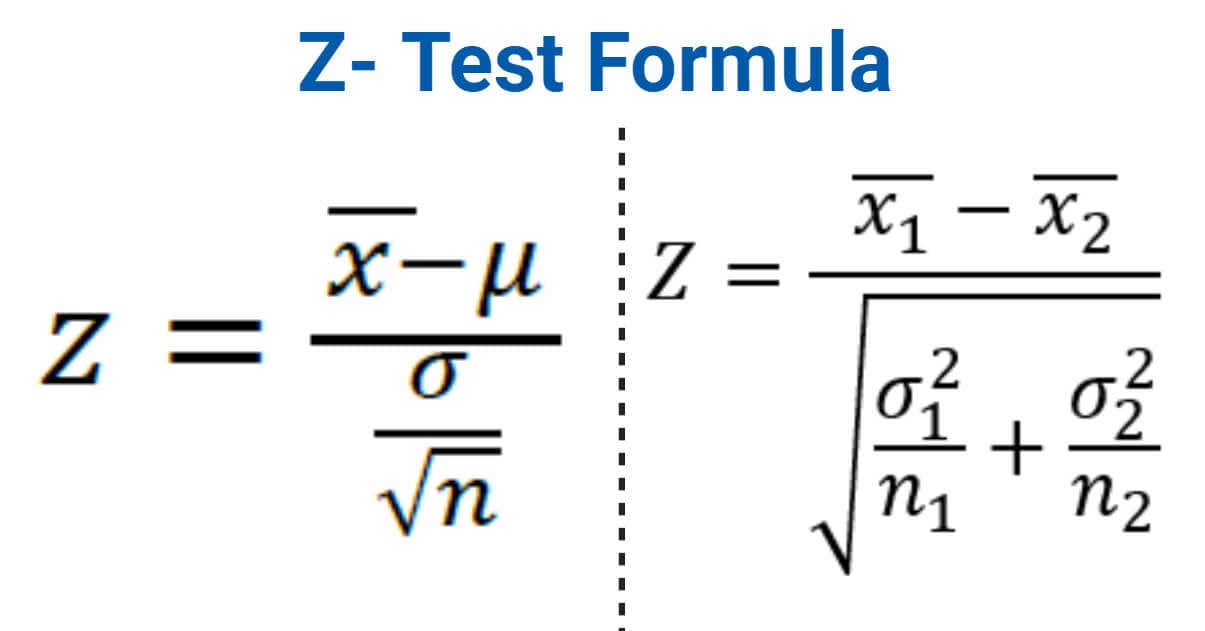

Z Test Formula

These Z test formulas allow you to calculate the test statistic. Use the Z statistic to determine statistical significance by comparing it to the appropriate critical values, and use it to find p-values.

The correct formula depends on whether you’re performing a one- or two-sample analysis. Both formulas require sample means (x̅) and sample sizes (n) from your sample. Additionally, you specify the population standard deviation (σ) or variance (σ²), which does not come from your sample.

I present a worked example using the Z test formula at the end of this post.

Learn more about Z-Scores and Test Statistics.

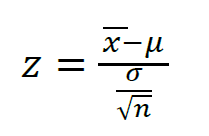

One Sample Z Test Formula

The one sample Z test formula is a ratio.

The numerator is the difference between your sample mean and a hypothesized value for the population mean (µ 0 ). This value is often a strawman argument that you hope to disprove.

The denominator is the standard error of the mean. It represents the uncertainty in how well the sample mean estimates the population mean.

Learn more about the Standard Error of the Mean.
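Written out with the symbols used above (x̅ for the sample mean, µ₀ for the hypothesized mean, σ for the known population standard deviation, and n for the sample size), the one-sample ratio takes the standard textbook form below. This is a reconstruction from the description, not a copy of the post's original formula image.

```latex
Z = \frac{\bar{x} - \mu_0}{\sigma / \sqrt{n}}
```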

Two Sample Z Test Formula

The two sample Z test formula is also a ratio.

The numerator is the difference between your two sample means.

The denominator combines the standard errors of both samples into the standard error of the difference between the means. In this Z test formula, enter the population variance (σ²) for each sample.
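A standard form of the two-sample statistic is shown below. It assumes that the hypothesized difference between the population means is zero (as in H₀: µ₁ = µ₂) and that each group has its own known variance σ₁² and σ₂². It is a reconstruction from the description above, not the post's original figure.

```latex
Z = \frac{\bar{x}_1 - \bar{x}_2}{\sqrt{\dfrac{\sigma_1^2}{n_1} + \dfrac{\sigma_2^2}{n_2}}}
```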

Z Test Critical Values

As I mentioned in the Z vs T test section, a Z test does not use degrees of freedom. It evaluates Z-scores in the context of the standard normal distribution. Unlike the t-distribution, the standard normal distribution doesn’t change shape as the sample size changes. Consequently, the critical values don’t change with the sample size.

To find the critical value for a Z test, you need to know the significance level and whether it is one- or two-tailed.

| Significance level | Tails | Critical value |
|---|---|---|
| 0.01 | Two-Tailed | ±2.576 |
| 0.01 | Left Tail | –2.326 |
| 0.01 | Right Tail | +2.326 |
| 0.05 | Two-Tailed | ±1.960 |
| 0.05 | Left Tail | –1.645 |
| 0.05 | Right Tail | +1.645 |
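Rather than memorizing the table, you can compute these values from the inverse of the standard normal CDF. The sketch below uses Python's standard-library `statistics.NormalDist`. The helper name `z_critical` and its `tail` argument are made up for illustration.

```python
from statistics import NormalDist


def z_critical(alpha, tail):
    """Critical Z value for significance level alpha.

    tail="two" returns the positive cutoff (use it as ±value);
    tail="left" returns a negative cutoff; tail="right" a positive one.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    if tail == "two":
        return std_normal.inv_cdf(1 - alpha / 2)
    if tail == "left":
        return std_normal.inv_cdf(alpha)
    if tail == "right":
        return std_normal.inv_cdf(1 - alpha)
    raise ValueError("tail must be 'two', 'left', or 'right'")


for alpha in (0.01, 0.05):
    for tail in ("two", "left", "right"):
        print(alpha, tail, round(z_critical(alpha, tail), 3))
```

The two-tailed cutoff splits α evenly between the tails, which is why it is larger in magnitude than either one-tailed cutoff at the same α.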

Learn more about Critical Values: Definition, Finding & Calculator.

Z Test Worked Example

Let’s close this post by calculating the results for a Z test by hand!

Suppose we randomly sampled subjects from an honors program. We want to determine whether their mean IQ score differs from the general population. The general population’s IQ scores are defined as having a mean of 100 and a standard deviation of 15.

We’ll determine whether the difference between our sample mean and the hypothesized population mean of 100 is statistically significant.

Specifically, we’ll use a two-tailed analysis with a significance level of 0.05. Looking at the table above, you’ll see that this Z test has critical values of ± 1.960. Our results are statistically significant if our Z statistic is below –1.960 or above +1.960.

The hypotheses are the following:

- Null (H₀): µ = 100

- Alternative (Hₐ): µ ≠ 100

Entering Our Results into the Formula

Here are the values from our study that we need to enter into the Z test formula:

- IQ score sample mean (x̅): 107

- Sample size (n): 25

- Hypothesized population mean (µ₀): 100

- Population standard deviation (σ): 15

Plugging these values into the one-sample formula gives Z = (107 − 100) / (15 / √25) = 7 / 3 ≈ 2.333. This value is greater than the critical value of 1.960, making the results statistically significant. Below is a graphical representation of our Z test results showing how the Z statistic falls within the critical region.

We can reject the null and conclude that the mean IQ score for the population of honors students does not equal 100. Because the sample mean is 107, the results indicate that their mean IQ score is higher.

Now let’s find the p-value. We could use technology to do that, such as an online calculator. However, let’s go old school and use a Z table.

To find the p-value for a two-tailed analysis, look up the negative of the absolute value of our Z-score in the table (use the negative value even when the Z-score is positive) and double the resulting probability.

In the truncated Z-table below, I highlight the cell corresponding to a Z-score of -2.33.

The cell value of 0.00990 represents the area or probability to the left of the Z-score -2.33. We need to double it to include the area > +2.33 to obtain the p-value for a two-tailed analysis.

P-value = 0.00990 * 2 = 0.0198

That p-value is an approximation because it uses a Z-score of 2.33 rather than 2.333. Using an online calculator, the p-value for our Z test is a more precise 0.0196. This p-value is less than our significance level of 0.05, which reconfirms the statistically significant results.
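The hand calculation above can be checked with a short script. This is a minimal sketch using Python's standard library, and the function name is hypothetical. It computes the two-tailed p-value the same way the text does, by doubling the area to the left of −|Z|.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample_mean, mu0, sigma, n):
    """One-sample Z test with a known population standard deviation."""
    se = sigma / sqrt(n)                            # standard error of the mean
    z = (sample_mean - mu0) / se
    p_two_tailed = 2 * NormalDist().cdf(-abs(z))    # double the left-tail area at -|Z|
    return z, p_two_tailed


z, p = one_sample_z_test(sample_mean=107, mu0=100, sigma=15, n=25)
print(f"Z = {z:.3f}, p = {p:.4f}")  # Z = 2.333, p = 0.0196
```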

See my full Z-table, which explains how to use it to solve other types of problems.


- Open access

- Published: 06 April 2022

Design of a new Z-test for the uncertainty of Covid-19 events under Neutrosophic statistics

- Muhammad Aslam ORCID: orcid.org/0000-0003-0644-1950 1

BMC Medical Research Methodology volume 22 , Article number: 99 ( 2022 ) Cite this article

4699 Accesses

5 Citations


The existing Z-test for uncertainty events does not give information about the measure of indeterminacy/uncertainty associated with the test.

This paper introduces the Z-test for uncertainty events under neutrosophic statistics. The test statistic of the existing test is modified under the philosophy of neutrosophy. The testing process is introduced and applied to Covid-19 data.

The proposed test is interpreted as follows. The null hypothesis that there is no reduction in the uncertainty of Covid-19 is accepted with a probability of 0.95. The probability of committing a type-I error is 0.05, and the measure of indeterminacy is 0.10. Based on the analysis, it is concluded that the proposed test is more informative than the existing test. The proposed test is also better than the Z-test for uncertainty under fuzzy logic, because the fuzzy-logic test gives values of the statistic from 2.20 to 2.42 without any information about the measure of indeterminacy. The test under interval statistics only considers the values within the interval rather than the crisp value.

Conclusions

From the Covid-19 data analysis, it is found that the proposed Z-test for uncertainty events under neutrosophic statistics is more efficient than the existing tests under classical statistics, the fuzzy approach, and interval statistics in terms of information, flexibility, power of the test, and adequacy.


The Z-test plays an important role in data analysis. Its main aim is to test the mean of an unknown population in decision-making. The Z-test for uncertainty events is applied to test the reduction in the uncertainty of past events. Specifically, it tests the null hypothesis that there is no reduction in uncertainty against the alternative hypothesis that there is a significant reduction in the uncertainty of past events. The Z-test for uncertainty events uses information from past events to test the reduction of uncertainty. [1] discussed the performance of statistical tests under uncertainty. [2] discussed the design of the Z-test for uncertainty events. [3] worked on the test in the presence of uncertainty. [4] worked on the modification of a non-parametric test. Applications of such tests can be seen in [5], [6], [7] and [8].

[9] mentioned that “statistical data are frequently not precise numbers but more or less non-precise also called fuzzy. Measurements of continuous variables are always fuzzy to a certain degree”. In such cases, the existing Z-tests cannot be applied to test the population mean or the reduction in uncertainty. Therefore, the existing Z-tests have been modified under fuzzy logic to deal with uncertain, fuzzy, and vague data. [10], [11], [12], [13], [14], [15], [16], [17], [18] and [19] worked on various statistical tests using fuzzy logic.

Neutrosophic logic now attracts researchers because of its many applications in a variety of fields. Neutrosophic logic accounts for the measure of indeterminacy, which fuzzy logic does not consider; see [20]. [21] proved that neutrosophic logic is more efficient than interval-based analysis. More applications of neutrosophic logic can be seen in [22], [23], [24] and [25]. [26] applied neutrosophic statistics to deal with uncertain data. [27] and [28] presented neutrosophic statistical methods to analyze data. Some applications of neutrosophic tests can be seen in [29], [30] and [31].

The existing Z-test for uncertainty events under classical statistics does not consider the measure of indeterminacy when testing the reduction in events. After exploring the literature, and to the best of our knowledge, there is no work on the Z-test for uncertainty events under neutrosophic statistics. In this paper, a modification of the Z-test for uncertainty events under neutrosophic statistics is introduced, and its application is illustrated using Covid-19 data. The proposed Z-test for uncertainty events under neutrosophic statistics is expected to be more efficient than the existing tests in terms of the power of the test, information, and adequacy.

The existing Z-test for uncertainty events can be applied only when the probabilities of the events are known. It does not evaluate the effect of the measure of indeterminacy/uncertainty on the reduction of uncertainty of past events. We now introduce a modification of the Z-test for uncertainty events under neutrosophic statistics. The aim is to make it more effective than the existing Z-test for uncertainty events under classical statistics. Let \(A_N = A_L + A_U I_{A_N};\ I_{A_N} \in [I_{A_L}, I_{A_U}]\) and \(B_N = B_L + B_U I_{B_N};\ I_{B_N} \in [I_{B_L}, I_{B_U}]\) be two neutrosophic events. Here the lower values \(A_L\), \(B_L\) denote the determinate parts of the events, the upper values \(A_U I_{A_N}\), \(B_U I_{B_N}\) are the indeterminate parts, and \(I_{A_N} \in [I_{A_L}, I_{A_U}]\), \(I_{B_N} \in [I_{B_L}, I_{B_U}]\) are the measures of indeterminacy associated with these events. Note that the events \(A_N \in [A_L, A_U]\) and \(B_N \in [B_L, B_U]\) reduce to the events under classical statistics (the determinate parts) proposed by [2] if \(I_{A_L} = I_{B_L} = 0\). Suppose \(n_N = n_L + n_U I_N;\ I_N \in [I_L, I_U]\) is the size of a neutrosophic random sample. Here \(n_L\) is the lower (determinate) sample size, \(n_U I_N\) is the indeterminate part, and \(I_N \in [I_L, I_U]\) is the measure of uncertainty in selecting the sample size. The neutrosophic random sample reduces to a classical random sample if there is no uncertainty in the sample size. The methodology of the proposed Z-test for uncertainty events is explained as follows.
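The neutrosophic form \(x_N = x_L + x_U I_N\) used above can be made concrete: as the indeterminacy \(I_N\) ranges over \([I_L, I_U]\), the quantity spans an interval. The Python sketch below is a hypothetical helper, not part of the paper. It only evaluates that linear form (for example, the sample size \(n_N = n_L + n_U I_N\)). It does not implement the paper's test statistic, whose equations are not reproduced in this text, and the example numbers are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NeutrosophicValue:
    """Hypothetical helper for x_N = x_L + x_U * I_N with I_N in [I_L, I_U]."""
    determinate: float    # x_L, the determinate part
    indeterminate: float  # x_U, coefficient of the indeterminacy I_N
    i_low: float          # I_L
    i_high: float         # I_U

    def at(self, i_n: float) -> float:
        """Value of x_N for one admissible indeterminacy I_N."""
        if not self.i_low <= i_n <= self.i_high:
            raise ValueError("I_N must lie in [I_L, I_U]")
        return self.determinate + self.indeterminate * i_n

    def interval(self) -> tuple[float, float]:
        """Range of x_N as I_N sweeps [I_L, I_U] (x_N is linear in I_N)."""
        ends = (self.at(self.i_low), self.at(self.i_high))
        return min(ends), max(ends)


# Made-up sample size: n_N = 20 + 20 * I_N with I_N in [0, 0.1]
n_N = NeutrosophicValue(20, 20, 0.0, 0.1)
print(n_N.interval())  # (20.0, 22.0)
print(n_N.at(0.0))     # 20.0: with no indeterminacy, the classical sample size
```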

Suppose that the probability that the event \({A}_N\in \left[{A}_L,{A}_U\right]\) occurs (probability of truth) is \(P\left({A}_N\right)\in \left[P\left({A}_L\right),P\left({A}_U\right)\right]\), the probability that \({A}_N\) does not occur (probability of falsity) is \(P\left({A}_N^c\right)\in \left[P\left({A}_L^c\right),P\left({A}_U^c\right)\right]\), the probability that the event \({B}_N\in \left[{B}_L,{B}_U\right]\) occurs (probability of truth) is \(P\left({B}_N\right)\in \left[P\left({B}_L\right),P\left({B}_U\right)\right]\), and the probability that \({B}_N\) does not occur (probability of falsity) is \(P\left({B}_N^c\right)\in \left[P\left({B}_L^c\right),P\left({B}_U^c\right)\right]\). Sequential analysis uses information on past events to reduce uncertainty, and the purpose of the proposed test is to determine whether this reduction of uncertainty is significant. Let \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) be the neutrosophic test statistic, where \({Z}_L\) and \({Z}_U\) are the lower and upper values of the statistic, respectively, defined by.

Note that \(P\left({B}_{+{k}_N}\mid {A}_N\right)=P\left({B}_N\mid {A}_N\right)\) at lag \({k}_N\), where \(P\left({B}_N\mid {A}_N\right)\in \left[P\left({B}_L\mid {A}_L\right),P\left({B}_U\mid {A}_U\right)\right]\) denotes the conditional probability. That is, the probability of the event \({B}_N\in \left[{B}_L,{B}_U\right]\) is calculated given that the event \({A}_N\in \left[{A}_L,{A}_U\right]\) has occurred.
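The lagged conditional probability can be estimated by counting. The sketch below assumes (this data layout is not stated in the article) that the two events are recorded as parallel 0/1 indicator sequences over the same time points, and it computes \(P\left({A}_N\right)\), \(P\left({B}_N\right)\), and \(P\left({B}_{+{k}_N}\mid {A}_N\right)\) as the number of occurrences of A followed by B exactly k steps later divided by the number of occurrences of A, matching the 5/6 count used in the worked example. The function name and the toy sequence are hypothetical; the sequence is not the article's Covid-19 data.

```python
from __future__ import annotations


def lagged_probabilities(a: list[int], b: list[int], k: int = 1) -> tuple[float, float, float]:
    """Return (P(A), P(B), P(B_{+k} | A)) from 0/1 indicator sequences (hypothetical helper)."""
    if len(a) != len(b):
        raise ValueError("sequences must have the same length")
    if k < 1:
        raise ValueError("lag k must be at least 1")
    n = len(a)
    count_a = sum(a)
    if count_a == 0:
        raise ValueError("event A never occurs")
    # Occurrences of A that are followed by B exactly k steps later.
    followed = sum(1 for t in range(n - k) if a[t] == 1 and b[t + k] == 1)
    return count_a / n, sum(b) / n, followed / count_a


# Hypothetical 8-step record (not the article's data): 1 = the event occurred.
a = [1, 0, 1, 0, 1, 0, 0, 0]
b = [0, 1, 0, 1, 0, 0, 1, 0]
p_a, p_b, p_b_given_a = lagged_probabilities(a, b, k=1)
print(p_a, p_b, p_b_given_a)
```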

The neutrosophic form of the proposed test statistic, \({Z}_N\in \left[{Z}_L,{Z}_U\right]\), is defined by.

The alternative form of Eq. (2) can be written as.

The proposed test \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) is an extension of several existing tests. It reduces to the existing Z-test under classical statistics when \({I}_{ZN}=0\), and it also extends the Z-test under the fuzzy approach and under interval statistics.
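The equations referred to above are not reproduced in this text. Reading their structure off the numerical example below and off the neutrosophic form \({Z}_N=2.20+2.20{I}_{ZN}\) used in the comparative study, the statistic appears to be the classical Z-statistic for uncertainty of events [ 2 ] scaled by \(1+{I}_{ZN}\). The block below states that reconstruction; it is an inference from the worked numbers, not the article's own equations, and it reduces to \(Z\) when \({I}_{ZN}=0\).

```latex
Z=\frac{P\left({B}_{+k}\mid A\right)-P\left(B\right)}
       {\sqrt{\dfrac{P\left(B\right)\left[1-P\left(B\right)\right]\left[1-P\left(A\right)\right]}{\left(n-k\right)P\left(A\right)}}},
\qquad
{Z}_N=\left(1+{I}_{ZN}\right)Z=Z+Z\,{I}_{ZN};\ {I}_{ZN}\in\left[{I}_{ZL},{I}_{ZU}\right]
```

With \(P(A)=P(B)=0.50\), \(n=12\), \(k=1\), and \(P\left({B}_{+1}\mid A\right)=5/6\), this gives \(Z\approx 2.20\) and \({Z}_N\in\left[2.20,2.42\right]\) for \({I}_{ZN}\in\left[0,0.1\right]\), agreeing with the values reported in the text up to rounding.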

The proposed test will be implemented as follows.

Step-1: State the null hypothesis \({H}_0\): there is no reduction in uncertainty, vs. the alternative hypothesis \({H}_1\): there is a significant reduction in uncertainty.

Step-2: Calculate the statistic \({Z}_N\in \left[{Z}_L,{Z}_U\right]\).

Step-3: Specify the level of significance α and select the critical value from [ 2 ].

Step-4: Do not accept the null hypothesis if the value of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) is larger than the critical value.
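Steps 1 to 4 can be sketched as a small decision function. This is a minimal illustration under stated assumptions: the function name is hypothetical; the critical value is taken as the upper α/2 point of the standard normal distribution, which reproduces the 1.96 used with α = 0.05 (a strictly one-sided cut-off at α = 0.05 would be about 1.645); and the handling of an interval \(\left[{Z}_L,{Z}_U\right]\) that straddles the critical value is our own choice, since the steps above only cover a statistic that exceeds it.

```python
from __future__ import annotations

from statistics import NormalDist


def neutrosophic_z_decision(z_lower: float, z_upper: float, alpha: float = 0.05) -> tuple[float, str]:
    """Return (critical value, decision on H0) for Z_N in [z_lower, z_upper].

    Hypothetical helper. Assumption: the critical value is the upper alpha/2
    point of N(0, 1), i.e. about 1.96 when alpha = 0.05.
    """
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie in (0, 1)")
    if z_lower > z_upper:
        raise ValueError("expected z_lower <= z_upper")
    critical = NormalDist().inv_cdf(1.0 - alpha / 2.0)  # Step-3
    if z_lower > critical:      # Step-4: whole interval above the critical value
        return critical, "do not accept H0"
    if z_upper <= critical:     # whole interval at or below the critical value
        return critical, "accept H0"
    # Assumption: an interval straddling the critical value gives no clear decision.
    return critical, "no clear decision"


crit, decision = neutrosophic_z_decision(2.20, 2.42)
print(f"critical value = {crit:.2f}, decision: {decision}")
```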

The application of the proposed test is illustrated in the medical field. Decision-makers want to test the reduction in uncertainty of Covid-19 when the measure of indeterminacy/uncertainty is \({I}_{ZN}\in \left[0,0.10\right]\). Specifically, they want to test for a reduction in deaths due to Covid-19 (event \({A}_N\)) with the increase in Covid-19 vaccinations (event \({B}_N\)). Following [ 2 ], the sequence in which both events occur is given as

where \({n}_N\in \left[12,12\right]\), \({k}_N\in \left[1,1\right]\), \(P\left({A}_N\right)=6/12=0.5\), and \(P\left({B}_N\right)=6/12=0.5\).

Note that event \({A}_N\) occurs 6 times and that, in five of these 6 occurrences, \({B}_N\) occurs immediately after \({A}_N\). Given that \({A}_N\) has occurred, we get

\(P\left({B}_{+{k}_N}\mid {A}_N\right)=P\left({B}_N\mid {A}_N\right)=5/6=0.83\) at lag 1. The value of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) is calculated as

\({Z}_N=\left(1+0.1\right)\frac{0.83-0.50}{\sqrt{\frac{0.50\left[1-0.50\right]\left[1-0.50\right]}{\left(12-1\right)0.50}}}=2.42;{I}_{ZN}\in \left[0,0.1\right]\). From [ 2 ], the critical value is 1.96.
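The arithmetic above can be checked with a short script. The formula inside `z_uncertainty` is read off the numerical expression above (numerator \(P\left({B}_{+k}\mid A\right)-P(B)\), variance term \(P(B)\left[1-P(B)\right]\left[1-P(A)\right]/\left((n-k)P(A)\right)\)); the function name is a hypothetical helper, and scaling by \(1+{I}_{ZN}\) at the two endpoints of \(\left[0,0.1\right]\) gives the lower and upper values of \({Z}_N\).

```python
from math import sqrt


def z_uncertainty(p_b_given_a: float, p_a: float, p_b: float, n: int, k: int) -> float:
    """Classical Z-statistic for uncertainty of events, as used in the worked example."""
    variance = p_b * (1 - p_b) * (1 - p_a) / ((n - k) * p_a)
    return (p_b_given_a - p_b) / sqrt(variance)


# Inputs from the Covid-19 example: n_N = 12, k_N = 1, P(A_N) = P(B_N) = 6/12,
# and B_N follows A_N in 5 of the 6 occurrences of A_N.
z_classical = z_uncertainty(5 / 6, 0.5, 0.5, n=12, k=1)
z_lower = z_classical * (1 + 0.0)  # I_ZN = 0, the classical value
z_upper = z_classical * (1 + 0.1)  # I_ZN = 0.1
print(f"Z_N in [{z_lower:.2f}, {z_upper:.2f}]")
```

With the unrounded 5/6 the script gives about 2.21 and 2.43; the text's 2.20 and 2.42 differ slightly because intermediate values were rounded. Either way, both endpoints exceed the critical value of 1.96.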

The proposed test for the example will be implemented as follows

Step-1: State the null hypothesis \({H}_0\): there is no reduction in uncertainty of Covid-19, vs. the alternative hypothesis \({H}_1\): there is a significant reduction in uncertainty of Covid-19.

Step-2: The value of the statistic is 2.42.

Step-3: Specify the level of significance α = 0.05 and select the critical value from [ 2 ], which is 1.96.

Step-4: Do not accept the null hypothesis, as the value of \({Z}_N\) is larger than the critical value.

The analysis shows that the calculated value of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) is larger than the critical value of 1.96. Therefore, the null hypothesis \({H}_0\): there is no reduction in uncertainty of Covid-19, is rejected in favor of \({H}_1\): there is a significant reduction in uncertainty of Covid-19. Based on the study, we conclude that there is a significant reduction in the uncertainty of Covid-19.

Simulation study

In this section, a simulation study is performed to examine the effect of the measure of indeterminacy on the statistic \({Z}_N\in \left[{Z}_L,{Z}_U\right]\). For this purpose, the neutrosophic form of \({Z}_N\) obtained from the real data is used, given as \({Z}_N=2.20+2.20{I}_{ZN};{I}_{ZN}\in \left[{I}_{ZL},{I}_{ZU}\right]\).

To analyze the effect on \({H}_0\), various values of \({I}_{ZN}\in \left[{I}_{ZL},{I}_{ZU}\right]\) are considered. The computed values of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\), along with the decision on \({H}_0\), are reported in Table 1. For this study, α = 0.05 and the critical value is 1.96; \({H}_0\) is accepted if the calculated value of \({Z}_N\) is less than 1.96. Table 1 shows that as \({I}_{ZN}\in \left[{I}_{ZL},{I}_{ZU}\right]\) increases from 0.01 to 2, the values of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) increase. Although the decision about \({H}_0\) remains the same at all values of the measure of indeterminacy \({I}_{ZN}\in \left[{I}_{ZL},{I}_{ZU}\right]\), the difference between \({Z}_N\) and the critical value of 1.96 increases as \({I}_{ZU}\) increases. We conclude that the measure of indeterminacy \({I}_{ZN}\in \left[{I}_{ZL},{I}_{ZU}\right]\) affects the values of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\).
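The sensitivity analysis described above can be sketched by evaluating the upper value \({Z}_U=2.20\left(1+{I}_{ZU}\right)\) of the neutrosophic form over a grid of indeterminacy values. The grid below is hypothetical (the text gives only the range 0.01 to 2), so the printed rows illustrate the trend rather than reproduce Table 1; the lower value \({Z}_L=2.20\) does not depend on \({I}_{ZN}\). The helper name is likewise hypothetical.

```python
from __future__ import annotations


def sweep_indeterminacy(z_classical: float, i_upper_values: list[float],
                        critical: float = 1.96) -> list[tuple[float, float, str]]:
    """Upper value Z_U = Z * (1 + I_ZU) and the decision on H0 for each I_ZU."""
    rows = []
    for i_upper in i_upper_values:
        z_upper = z_classical * (1.0 + i_upper)
        decision = "do not accept H0" if z_upper > critical else "accept H0"
        rows.append((i_upper, z_upper, decision))
    return rows


# Hypothetical grid spanning the range 0.01 to 2 mentioned in the text.
for i_u, z_u, decision in sweep_indeterminacy(2.20, [0.01, 0.1, 0.5, 1.0, 2.0]):
    print(f"I_ZU = {i_u:4.2f}  Z_U = {z_u:4.2f}  Z_U - 1.96 = {z_u - 1.96:4.2f}  {decision}")
```

Because \({Z}_U\) is linear in \({I}_{ZU}\) with slope 2.20, the gap to 1.96 widens steadily while the decision stays the same.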

Comparative studies

As mentioned earlier, the proposed Z-test for uncertainty events is an extension of several tests. In this section, a comparative study is presented in terms of the measure of indeterminacy, flexibility, and information. We compare the efficiency of the proposed Z-test for uncertainty with the existing Z-test for uncertainty under classical statistics, the Z-test for uncertainty under fuzzy logic, and the Z-test for uncertainty under interval statistics. The neutrosophic form of the proposed statistic \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) is \({Z}_N=2.20+2.20{I}_{ZN};{I}_{ZN}\in \left[0,0.1\right]\). The first term, 2.20, is the value of the existing Z-test for uncertainty under classical statistics, the second term, \(2.20{I}_{ZN}\), is the indeterminate part, and 0.1 is the measure of indeterminacy associated with the test. The neutrosophic form shows that the proposed test is flexible, as it gives the values of \({Z}_N\) in an interval from 2.20 to 2.42 when \({I}_{ZU}=0.1\), whereas the existing test gives the single value 2.20. In addition, the proposed test uses information about the measure of indeterminacy that the existing test does not consider. Under the proposed test, the result is interpreted as follows: when \({H}_0\): there is no reduction in uncertainty of Covid-19, is true, it is accepted with probability 0.95, the probability of committing a type-I error is 0.05, and the measure of indeterminacy is 0.10. Hence, the proposed test is more informative than the existing test. The proposed test is also better than the Z-test for uncertainty under fuzzy logic, which gives the value of the statistic from 2.20 to 2.42 without any information about the measure of indeterminacy. The test under interval statistics considers only the values within the interval rather than the crisp value, whereas analysis based on neutrosophic statistics can handle any type of set.
Based on the analysis, it is concluded that the proposed Z-test is more efficient than the existing tests in terms of information, flexibility, and indeterminacy.

Comparison using power of the test

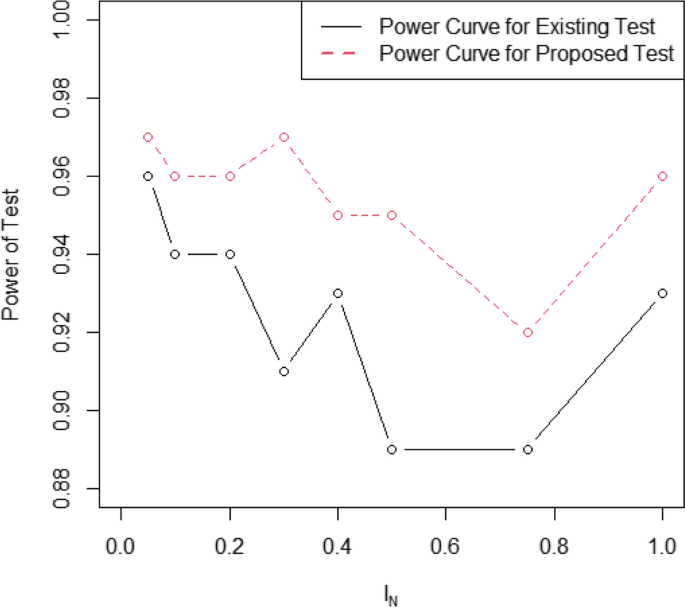

In this section, the efficiency of the proposed test is compared with that of the existing test in terms of the power of the test. The power of the test is the probability of rejecting \({H}_0\) when it is false, i.e., \(1-\beta\), where β is the probability of a type-II error. As mentioned earlier, the probability of rejecting \({H}_0\) when it is true is the type-I error probability, denoted by α. The values of \({Z}_N\in \left[{Z}_L,{Z}_U\right]\) are simulated using the classical standard normal distribution and the neutrosophic standard normal distribution. In the simulation, 100 values of \({Z}_N\) are generated from a classical standard normal distribution and from a neutrosophic standard normal distribution with mean \({\mu}_N={\mu}_L+{\mu}_U{I}_{\mu_N};{I}_{\mu_N}\in \left[{I}_{\mu_L},{I}_{\mu_U}\right]\), where \({\mu}_L=0\) is the mean of the classical standard normal distribution, \({\mu}_U{I}_{\mu_N}\) denotes the indeterminate value, and \({I}_{\mu_N}\in \left[{I}_{\mu_L},{I}_{\mu_U}\right]\) is a measure of indeterminacy. Note that when \({I}_{\mu_L}=0\), \({\mu}_N\) reduces to \({\mu}_L\). The simulated values of \({Z}_N\) are compared with the tabulated value at α = 0.05. The power of the existing test and of the proposed test for various values of \({I}_{\mu_U}\) is shown in Table 2. Table 2 shows that the existing test under classical statistics provides smaller values of the power of the test than the proposed test at all values of \({I}_{\mu_U}\). For example, when \({I}_{\mu_U}=0.1\), the power of the Z-test for uncertainty events under classical statistics is 0.94, and the power of the proposed Z-test for uncertainty events is 0.96. The power values of the Z-test for uncertainty events under classical statistics and under neutrosophic statistics are plotted in Fig. 1, which shows that the power curve of the proposed test lies above the power curve of the existing test. Based on the analysis, we conclude that the proposed Z-test for uncertainty events under neutrosophic statistics is more efficient than the existing Z-test for uncertainty events.

The power curves of the two tests
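The notion of power used above can be illustrated with a generic Monte Carlo estimate. The sketch below is not the authors' simulation, whose data-generating settings are not fully specified here; it assumes a one-sided rule that rejects \({H}_0\) when the statistic exceeds 1.96, draws the statistic from a normal distribution with a hypothetical mean shift `delta` and unit variance, and compares the simulated rejection rate with the closed-form power \(1-\Phi\left(1.96-\delta \right)\). The function names, number of replications, and seed are arbitrary choices for illustration.

```python
import random
from statistics import NormalDist


def simulated_power(delta: float, critical: float = 1.96,
                    reps: int = 100_000, seed: int = 1) -> float:
    """Fraction of simulated Z ~ N(delta, 1) values that exceed the critical value."""
    rng = random.Random(seed)
    rejections = sum(1 for _ in range(reps) if rng.gauss(delta, 1.0) > critical)
    return rejections / reps


def analytic_power(delta: float, critical: float = 1.96) -> float:
    """Closed-form power 1 - Phi(critical - delta) of the same one-sided rule."""
    return 1.0 - NormalDist().cdf(critical - delta)


for delta in (0.0, 1.0, 2.0, 3.0, 3.6):
    print(f"delta = {delta:.1f}  simulated = {simulated_power(delta):.3f}  "
          f"analytic = {analytic_power(delta):.3f}")
```

At `delta = 0` the rejection rate is the type-I error rate of this rule (about 0.025 with the 1.96 cut-off), and the power rises toward 1 as the mean shift grows, which is the shape of the power curves compared in Fig. 1.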

In this paper, the Z-test for uncertainty events was introduced under neutrosophic statistics. The proposed test is a generalization of the existing Z-test for uncertainty events under classical statistics, the fuzzy-based test, and the test under interval statistics, and its performance was compared with these existing tests. From the real data and the simulation study, the proposed test was found to be more efficient in terms of information and power of the test. It is therefore recommended to apply the proposed test to check the reduction in uncertainty in an indeterminate environment. Extending the proposed test to big data, developing a version based on double sampling, and studying the estimation of sample size and other properties of the proposed test are topics for future research.

Availability of data and materials

All data generated or analysed during this study are included in this published article.

Doll H, Carney S. Statistical approaches to uncertainty: p values and confidence intervals unpacked. BMJ Evid Based Med. 2005;10(5):133–4.


Kanji GK. 100 statistical tests. Sage; 2006.

Lele SR. How should we quantify uncertainty in statistical inference? Front Ecol Evol. 2020;8:35.

Wang F, et al. Re-evaluation of the power of the Mann-Kendall test for detecting monotonic trends in hydrometeorological time series. Front Earth Sci. 2020;8:14.

Maghsoodloo S, Huang C-Y. Comparing the overlapping of two independent confidence intervals with a single confidence interval for two normal population parameters. J Stat Plan Inference. 2010;140(11):3295–305.

Rono BK, et al. Application of paired student t-test on impact of anti-retroviral therapy on CD4 cell count among HIV seroconverters in serodiscordant heterosexual relationships: a case study of Nyanza region, Kenya.

Zhou X-H. Inferences about population means of health care costs. Stat Methods Med Res. 2002;11(4):327–39.

Niwitpong S, Niwitpong S-a. Confidence interval for the difference of two normal population means with a known ratio of variances. Appl Math Sci. 2010;4(8):347–59.


Viertl R. Univariate statistical analysis with fuzzy data. Comput Stat Data Anal. 2006;51(1):133–47.

Filzmoser P, Viertl R. Testing hypotheses with fuzzy data: the fuzzy p-value. Metrika. 2004;59(1):21–9.

Tsai C-C, Chen C-C. Tests of quality characteristics of two populations using paired fuzzy sample differences. Int J Adv Manuf Technol. 2006;27(5):574–9.

Taheri SM, Arefi M. Testing fuzzy hypotheses based on fuzzy test statistic. Soft Comput. 2009;13(6):617–25.

Jamkhaneh EB, Ghara AN. Testing statistical hypotheses with fuzzy data. In: 2010 International Conference on Intelligent Computing and Cognitive Informatics: IEEE; 2010.

Chachi J, Taheri SM, Viertl R. Testing statistical hypotheses based on fuzzy confidence intervals. Austrian J Stat. 2012;41(4):267–86.

Kalpanapriya D, Pandian P. Statistical hypotheses testing with imprecise data. Appl Math Sci. 2012;6(106):5285–92.

Parthiban S, Gajivaradhan P. A comparative study of two-sample t-test under fuzzy environments using trapezoidal fuzzy numbers.

Montenegro M, et al. Two-sample hypothesis tests of means of a fuzzy random variable. Inf Sci. 2001;133(1-2):89–100.

Park S, Lee S-J, Jun S. Patent big data analysis using fuzzy learning. Int J Fuzzy Syst. 2017;19(4):1158–67.

Garg H, Arora R. Generalized Maclaurin symmetric mean aggregation operators based on Archimedean t-norm of the intuitionistic fuzzy soft set information. Artif Intell Rev. 2020:1–41.