Presentation Graphic Stream (SUP files) BluRay Subtitle Format

The Presentation Graphic Stream (PGS) specification is defined in the US Patent US 20090185789 A1. This graphic stream definition is used to show subtitles in BluRay movies. When a subtitle stream in PGS format is ripped from a BluRay disc, it is usually saved in a file with the SUP extension (Subtitle Presentation).

A Presentation Graphic Stream (PGS) is made of several functional segments one after another. These segments have the following header:

The DTS should indicate the time when decoding of the sub picture starts, and the PTS indicates the time when decoding ends and the sub picture is shown on the screen. DTS is always zero in practice (at least from what I have found so far), so you can freely ignore this value. Both timestamps count ticks of a 90 kHz clock. This means that, for example, if you have a PTS value of 0x0004C11C and you want to know how many milliseconds after the start of the movie the sub picture is shown, you have to divide the decimal value (311,580) by 90, and the result is the value you are looking for: 3,462 milliseconds (or 3.462 seconds).
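The header table did not survive in this copy of the page, so the sketch below assumes the commonly documented layout: a 2-byte "PG" magic number, a 4-byte PTS, a 4-byte DTS, a 1-byte segment type and a 2-byte segment size, all big-endian (13 bytes in total). It reads headers one after another and applies the divide-by-90 rule above; the function names are illustrative and not taken from any existing tool.

```python
import struct
from typing import Iterator, NamedTuple, Tuple

# Segment type codes, as used in the example at the end of this page.
SEGMENT_TYPES = {0x14: "PDS", 0x15: "ODS", 0x16: "PCS", 0x17: "WDS", 0x80: "END"}
HEADER_SIZE = 13  # "PG" (2) + PTS (4) + DTS (4) + type (1) + size (2)


class SegmentHeader(NamedTuple):
    pts: int           # presentation timestamp, 90 kHz ticks
    dts: int           # decoding timestamp, 90 kHz ticks (usually 0)
    segment_type: int
    segment_size: int  # number of payload bytes after the header


def parse_header(data: bytes, offset: int = 0) -> SegmentHeader:
    """Read one big-endian segment header starting at `offset`."""
    magic, pts, dts, seg_type, size = struct.unpack_from(">2sIIBH", data, offset)
    if magic != b"PG":
        raise ValueError(f"bad magic number at offset {offset}: {magic!r}")
    return SegmentHeader(pts, dts, seg_type, size)


def ticks_to_ms(ticks: int) -> float:
    """Convert a 90 kHz timestamp to milliseconds."""
    return ticks / 90


def iter_segments(data: bytes) -> Iterator[Tuple[SegmentHeader, bytes]]:
    """Walk a SUP buffer and yield (header, payload) for each segment."""
    offset = 0
    while offset + HEADER_SIZE <= len(data):
        header = parse_header(data, offset)
        start = offset + HEADER_SIZE
        yield header, data[start:start + header.segment_size]
        offset = start + header.segment_size
```

For example, `ticks_to_ms(0x0004C11C)` returns 3462.0, matching the calculation above.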

There are five types of segments used in PGS, identified by the Segment Type field of the header:

- Presentation Composition Segment (PCS), type 0x16

- Window Definition Segment (WDS), type 0x17

- Palette Definition Segment (PDS), type 0x14

- Object Definition Segment (ODS), type 0x15

- End of Display Set Segment (END), type 0x80

The Presentation Composition Segment (PCS) is also called the Control Segment because it indicates the start of a new Display Set (DS) definition, which is composed of definition segments (WDS, PDS, ODS) until an END segment is found.

A Display Set (DS) is a sub picture definition that might look like this:

In a DS there can be several window, palette, and object definitions, and the composition objects define what is going to be shown on the screen.
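Because a PCS opens a Display Set and an END segment closes it, splitting a stream into Display Sets only needs the segment type of each segment. A minimal sketch, assuming segments have already been read into hypothetical `(segment_type, payload)` pairs; starting a new set when a PCS arrives without a preceding END is a defensive choice of this sketch, not something the format prescribes.

```python
from typing import Iterable, List, Tuple

PCS, END = 0x16, 0x80
Segment = Tuple[int, bytes]  # (segment_type, payload)


def group_display_sets(segments: Iterable[Segment]) -> List[List[Segment]]:
    """Group segments into Display Sets: PCS opens a set, END closes it."""
    display_sets: List[List[Segment]] = []
    current: List[Segment] = []
    for seg_type, payload in segments:
        if seg_type == PCS and current:
            # A PCS without a preceding END: keep what we have and start over.
            display_sets.append(current)
            current = []
        current.append((seg_type, payload))
        if seg_type == END:
            display_sets.append(current)
            current = []
    if current:
        display_sets.append(current)  # trailing set that never saw an END
    return display_sets
```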

Presentation Composition Segment

The Presentation Composition Segment is used for composing a sub picture. It is made of the following fields:

The composition state can be one of three values:

- Epoch Start: This defines a new display. The Epoch Start contains all functional segments needed to display a new composition on the screen.

- Acquisition Point: This defines a display refresh. This is used to compose in the middle of the Epoch. It includes functional segments with new objects to be used in a new composition, replacing old objects with the same Object ID.

- Normal: This defines a display update, and contains only functional segments with elements that are different from the preceding composition. It’s mostly used to stop displaying objects on the screen by defining a composition with no composition objects (a value of zero in the Number of Composition Objects field), but it is also used to define a new composition with new objects and objects defined since the Epoch Start.

The composition objects, also known as window information objects, define the position on the screen of every image that will be shown. They have the following structure:

When the Object Cropped Flag is set to true (actually the value 0x40), the sub picture is cropped to show only a portion of it. This is used, for example, when you don’t want to show the whole subtitle at once, but just a few words first and then the rest.
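The PCS field table is also missing here, so this parser assumes the field sizes commonly documented for it: width and height (2 bytes each), frame rate (1), composition number (2), composition state (1), palette update flag (1, with 0x80 meaning true), palette ID (1) and number of composition objects (1), i.e. 11 bytes. Each composition object then takes 8 bytes (object ID 2, window ID 1, cropped flag 1, horizontal and vertical position 2 each), plus 8 crop bytes (x, y, width, height) when the cropped flag is 0x40. The state values 0x00 and 0x40 are likewise assumptions; only 0x80 (Epoch Start) appears in this article’s example.

```python
import struct
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Assumed composition state codes (0x80 is confirmed by the example below).
COMPOSITION_STATES = {0x00: "Normal", 0x40: "Acquisition Point", 0x80: "Epoch Start"}


@dataclass
class CompositionObject:
    object_id: int
    window_id: int
    x: int
    y: int
    crop: Optional[Tuple[int, int, int, int]] = None  # (x, y, w, h) if cropped


@dataclass
class PresentationComposition:
    width: int
    height: int
    frame_rate: int
    composition_number: int
    composition_state: str
    palette_update: bool
    palette_id: int
    objects: List[CompositionObject] = field(default_factory=list)


def parse_pcs(payload: bytes) -> PresentationComposition:
    """Parse a PCS payload (the bytes after the 13-byte segment header)."""
    (width, height, frame_rate, number, state,
     palette_update, palette_id, count) = struct.unpack_from(">HHBHBBBB", payload, 0)
    pcs = PresentationComposition(
        width, height, frame_rate, number,
        COMPOSITION_STATES.get(state, f"unknown (0x{state:02x})"),
        bool(palette_update & 0x80), palette_id)
    offset = 11
    for _ in range(count):
        obj_id, win_id, cropped, x, y = struct.unpack_from(">HBBHH", payload, offset)
        offset += 8
        crop = None
        if cropped & 0x40:  # cropped objects carry 8 extra bytes
            crop = struct.unpack_from(">HHHH", payload, offset)
            offset += 8
        pcs.objects.append(CompositionObject(obj_id, win_id, x, y, crop))
    return pcs
```

Fed the 19-byte (0x13) PCS payload from the example at the end of the page, this yields 1920×1080, composition number 430, Epoch Start, and one object at (773, 108).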

Window Definition Segment

This segment is used to define the rectangular area on the screen where the sub picture will be shown. This rectangular area is called a Window. This segment can define several windows: a Number of Windows field comes first, and then all the fields from Window ID up to Window Height repeat once for each window. You can see it more clearly in the example at the end of this page. Its structure is as follows:
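Taking the correction from the comments into account, a WDS payload starts with a 1-byte Number of Windows followed by 9 bytes per window. The sketch assumes 1 byte for Window ID and 2 big-endian bytes for each of the other four fields, which matches the 0x13-byte WDS of the example (1 + 2 × 9 = 19).

```python
import struct
from typing import List, NamedTuple


class Window(NamedTuple):
    window_id: int
    x: int
    y: int
    width: int
    height: int


def parse_wds(payload: bytes) -> List[Window]:
    """Parse a WDS payload: a window count, then 9 bytes per window."""
    count = payload[0]
    return [Window(*struct.unpack_from(">BHHHH", payload, 1 + i * 9))
            for i in range(count)]
```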

Palette Definition Segment

This segment is used to define a palette for color conversion. It’s composed of the following fields:

There can be several palette entries, each with a different entry ID, so the last five fields can repeat.
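A sketch of a PDS parser, assuming 1 byte each for Palette ID and Palette Version, followed by repeated 5-byte entries (entry ID, Y, Cr, Cb, Alpha). That layout agrees with the example at the end of the page: 2 + 31 × 5 = 157 = 0x9d bytes. Converting Y/Cr/Cb to RGB is left out, because the choice of color matrix (BT.709 or BT.601) would be a further assumption.

```python
from typing import Dict, NamedTuple, Tuple


class PaletteEntry(NamedTuple):
    y: int
    cr: int
    cb: int
    alpha: int  # assumed: 0 = fully transparent, 255 = opaque


def parse_pds(payload: bytes) -> Tuple[int, int, Dict[int, PaletteEntry]]:
    """Return (palette_id, palette_version, {entry_id: PaletteEntry})."""
    palette_id, version = payload[0], payload[1]
    entries: Dict[int, PaletteEntry] = {}
    # Each repeated entry is 5 bytes: entry ID, Y, Cr, Cb, Alpha.
    for off in range(2, len(payload) - 4, 5):
        entry_id, y, cr, cb, alpha = payload[off:off + 5]
        entries[entry_id] = PaletteEntry(y, cr, cb, alpha)
    return palette_id, version, entries
```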

Object Definition Segment

This segment defines the graphics object. These are images with rendered text on a transparent background. Its structure is explained in the following table:
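The ODS table is missing too. Assuming the usual layout (Object ID 2 bytes, Object Version Number 1, Last in Sequence flag 1, Object Data Length 3, then Width 2 and Height 2 in the first fragment of an object), the sketch below reads the header of an object’s first ODS. It follows the reader comment at the end of the page: Object Data Length also counts the 4 bytes of Width and Height, so the RLE data itself is 4 bytes shorter. The example agrees, since its ODS segment size 0x21c2 is exactly 7 bytes more than its Object Data Length 0x21bb. Treating bit 0x80 as “first in sequence” and 0x40 as “last in sequence” is an assumption consistent with the example’s 0xC0 (“first and last”).

```python
import struct
from typing import NamedTuple


class ObjectDefinition(NamedTuple):
    object_id: int
    version: int
    first_in_sequence: bool
    last_in_sequence: bool
    data_length: int   # Object Data Length; includes the 4 bytes of Width/Height
    width: int
    height: int
    rle_data: bytes    # RLE bytes carried by this fragment


def parse_first_ods(payload: bytes) -> ObjectDefinition:
    """Parse the ODS fragment that starts an object (it carries Width/Height)."""
    object_id, version, flags = struct.unpack_from(">HBB", payload, 0)
    data_length = int.from_bytes(payload[4:7], "big")  # 3-byte field
    width, height = struct.unpack_from(">HH", payload, 7)
    return ObjectDefinition(
        object_id, version,
        bool(flags & 0x80), bool(flags & 0x40),  # assumed: 0x80 first, 0x40 last
        data_length, width, height, payload[11:])
```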

The run-length encoding method is defined in the US 7912305 B1 patent. Here’s a quick and dirty definition of this method:
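Since the RLE code table did not survive either, this decoder assumes the commonly documented PGS scheme: a non-zero byte is one pixel of that palette index; 0x00 0x00 ends a line; otherwise the byte after 0x00 holds a 2-bit prefix and a 6-bit length, where 00 is a short run of color 0, 01 a 14-bit run of color 0 (one extra length byte), 10 a short run of an explicit color, and 11 a 14-bit run of an explicit color (the color byte comes last). It returns rows of palette indices to be looked up in the PDS palette.

```python
from typing import List


def decode_rle(data: bytes) -> List[List[int]]:
    """Decode PGS object data into rows of palette indices."""
    rows: List[List[int]] = []
    row: List[int] = []
    i = 0
    while i < len(data):
        byte = data[i]
        i += 1
        if byte != 0:                # CCCCCCCC: a single pixel of color C
            row.append(byte)
            continue
        flag = data[i]
        i += 1
        if flag == 0:                # 00000000 00000000: end of line
            rows.append(row)
            row = []
            continue
        length = flag & 0x3F         # low 6 bits of the run length
        if flag & 0x40:              # prefix 01 or 11: 14-bit run length
            length = (length << 8) | data[i]
            i += 1
        color = 0                    # prefix 00 or 01: run of color 0
        if flag & 0x80:              # prefix 10 or 11: explicit color byte
            color = data[i]
            i += 1
        row.extend([color] * length)
    if row:                          # tolerate a missing final end-of-line
        rows.append(row)
    return rows
```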

End Segment

The end segment always has a segment size of zero and indicates the end of a Display Set (DS) definition. It appears immediately after the last ODS in one DS.

Let’s see a real world example. Take a look at this section of a SUP file:

This is a complete Display Set. These are the segments:

- Magic Number: “PG” (0x5047)

- Presentation Time: 17:11.822 (92,863,980 / 90)

- Decoding Time: 0

- Segment Type: PCS (0x16)

- Segment Size: 0x13 bytes

- Width: 1920 (0x780)

- Height: 1080 (0x438)

- Frame rate: 0x10

- Composition Number: 430 (0x1ae)

- Composition State: Epoch Start (0x80)

- Palette Update Flag: false

- Palette ID: 0

- Number of Composition Objects: 1

- Object ID: 0

- Window ID: 0

- Object Cropped Flag: false

- Object Horizontal Position: 773 (0x305)

- Object Vertical Position: 108 (0x06c)

- Segment Type: WDS (0x17)

- Number of Windows: 2

- Window Horizontal Position: 773 (0x305)

- Window Vertical Position: 108 (0x06c)

- Window Width: 377 (0x179)

- Window Height: 43 (0x02b)

- Window ID: 1

- Window Horizontal Position: 739 (0x2e3)

- Window Vertical Position: 928 (0x3a0)

- Window Width: 472 (0x1d8)

- Segment Type: PDS (0x14)

- Segment Size: 0x9d bytes

- Palette Version: 0

- 31 palette entries

- Segment Type: ODS (0x15)

- Segment Size: 0x21c2 bytes

- Object Version Number: 0

- Last in sequence flag: First and last sequence (0xC0)

- Object Data Length: 0x0021bb bytes

- Width: 377 (0x179)

- Height: 43 (0x02b)

- Segment Type: END (0x80)

- Segment Size: 0 bytes

This Display Set will show a 377×43 pixel image on the screen at position (773, 108), starting at 17 minutes, 11.822 seconds.
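As a sanity check of the arithmetic in this example, each segment size should equal a fixed part plus its repeated elements: PCS 11 + 8 per object, WDS 1 + 9 per window, PDS 2 + 5 per entry, ODS 7 + Object Data Length. The fixed-part byte counts are assumptions based on the commonly documented field sizes; the PCS, PDS and ODS sizes come from the example, and the WDS size 0x13 from the first comment below.

```python
# Payload size = fixed part + repeated elements (assumed field sizes, in bytes).
def pcs_size(objects: int, cropped: int = 0) -> int:
    return 11 + 8 * objects + 8 * cropped  # + 8 crop bytes per cropped object


def wds_size(windows: int) -> int:
    return 1 + 9 * windows                 # Number of Windows + 9 per window


def pds_size(entries: int) -> int:
    return 2 + 5 * entries                 # Palette ID/version + 5 per entry


def ods_size(object_data_length: int) -> int:
    return 7 + object_data_length          # ID (2) + version (1) + flag (1) + length (3)


def format_pts(ticks: int) -> str:
    """Render a 90 kHz timestamp as M:SS.mmm."""
    minutes, rest = divmod(ticks // 90, 60_000)
    seconds, millis = divmod(rest, 1000)
    return f"{minutes}:{seconds:02d}.{millis:03d}"


print(format_pts(92_863_980))  # 17:11.822
print(pcs_size(1) == 0x13, wds_size(2) == 0x13,
      pds_size(31) == 0x9D, ods_size(0x21BB) == 0x21C2)  # True True True True
```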


4 thoughts to “Presentation Graphic Stream (SUP files) BluRay Subtitle Format”

I am currently working on a script for changing the size of subtitles after cropping a video in Handbrake. This article has been very useful, however I think you forgot to include the “number of windows” byte of the Window Definition Segment and that the windows repeat that number of times. I had to look through the source code of BDSup2sub before I realized this, and after realizing this I finally understood how your WDS could have 0x13 in size which didn’t add up with the 9 bytes specified in the WDS segment.

You’re absolutely right! Even in the example you can see that there are two windows. I’ll update the post with that information. The problem is that the Patent Document doesn’t specify it, because it isn’t clear there.

Useful note: PGS supports up to 64 presentation objects in one epoch. But only 2 objects can be shown simultaneously. I.e. only 2 objects can be in one Presentation Composition Segment.

It should be noted that for the ODS packets, the value of “Object Data Length” also includes the four bytes of the “Width” and “Height” fields that immediately follow it. Therefore, the correct length of “Object Data” is actually the value of “Object Data Length” minus four bytes.


Subtitle File Formats: A Comprehensive Overview

Subtitle file formats are essential for enhancing the accessibility and understanding of video content. Different formats offer unique features and compatibility options. In this article, we will provide a comprehensive overview of popular subtitle file types and discuss their characteristics.

In the dynamic world of video and media, subtitle file formats are essential for ensuring accessibility and improving viewer experiences. With a wide range of formats to choose from, each designed for particular needs, this article explores some of the most popular subtitle formats. From the straightforward SRT to the more complex capabilities of TTML, these formats meet various needs, including compatibility with different players, support for detailed formatting, and synchronization accuracy. Understanding these formats is crucial for anyone aiming to master the complexities of contemporary media consumption and creation.

There are two main types of formats:

Text-Based Formats: These formats, like SRT or SSA, store subtitles as plain text that you can read and even edit easily with a regular text editor. They’re commonly used in online videos and offline players, making them super accessible.

Binary Formats: Binary formats, such as IDX/SUB and STL, are a bit trickier. They store subtitles in a way that’s not human-readable without special software. You’ll find them on DVDs (IDX/SUB), on Blu-ray discs (PGS, usually saved as .sup files), and in broadcast workflows (STL).

Understanding these two categories is your ticket to mastering subtitles in today’s digital age, whether you’re a content creator or just a movie enthusiast.

Let’s explore some of the most widely used text-based subtitle formats.

Text-based subtitle formats

These text-based subtitle formats cater to various needs, from basic captioning to advanced styling and platform compatibility. Understanding the options available empowers content creators and viewers alike to make the most of subtitles in the digital age.

SubRip (.srt)

The SubRip format, commonly known as SRT, is widely supported by subtitle converters and players. It features a concise and easily understandable structure. When opened with a text editor, an SRT file shows each subtitle’s text together with the times when it appears and disappears. This format is widely compatible and can be edited without difficulty.

The SRT Structure

Let’s take a closer look at how SRT works. Imagine a subtitle as a tiny snippet of text that appears on your screen when you’re watching a movie or video. SRT organizes these snippets neatly.

Each snippet in an SRT file has three main parts:

The Sequence Number: Think of it as a tag that says which subtitle comes first, second, third, and so on.

Timing Information: This part tells your video player when to display the subtitle and when to remove it. It’s like a stopwatch for your subtitles.

The Text: This is the actual subtitle, the words you see on the screen. It can be anything from spoken dialogue to captions.

An Example of SRT in Action:

In this SRT example, each snippet has a sequence number, timing information, and text. When you play the video, your player reads the SRT file and displays each snippet at the right time.
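The original SRT example is missing from this copy. To illustrate the three parts described above, here is a small parser sketch, assuming the usual SRT timing line `HH:MM:SS,mmm --> HH:MM:SS,mmm` (note the comma before the milliseconds) and blank lines between subtitles. The names are illustrative, and any styling tags inside the text are left untouched.

```python
import re
from typing import List, NamedTuple


class Cue(NamedTuple):
    index: int
    start_ms: int
    end_ms: int
    text: str


# "00:00:01,000 --> 00:00:04,000" (comma before the milliseconds)
TIMING = re.compile(
    r"(\d+):(\d{2}):(\d{2}),(\d{3})\s*-->\s*(\d+):(\d{2}):(\d{2}),(\d{3})")


def _to_ms(h: str, m: str, s: str, ms: str) -> int:
    return ((int(h) * 60 + int(m)) * 60 + int(s)) * 1000 + int(ms)


def parse_srt(content: str) -> List[Cue]:
    """Split on blank lines; each block is number, timing line, then text lines."""
    cues = []
    for block in re.split(r"\n\s*\n", content.lstrip("\ufeff").strip()):
        lines = block.splitlines()
        match = TIMING.match(lines[1]) if len(lines) >= 2 else None
        if match is None or not lines[0].strip().isdigit():
            continue  # skip malformed blocks instead of failing
        g = match.groups()
        cues.append(Cue(int(lines[0]), _to_ms(*g[:4]), _to_ms(*g[4:]),
                        "\n".join(lines[2:])))
    return cues


def format_srt_time(ms: int) -> str:
    """Milliseconds -> HH:MM:SS,mmm."""
    h, rest = divmod(ms, 3_600_000)
    m, rest = divmod(rest, 60_000)
    s, ms = divmod(rest, 1000)
    return f"{h:02d}:{m:02d}:{s:02d},{ms:03d}"
```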

The Pros of SRT Subtitles

Now that we’ve introduced you to SRT (SubRip Text) subtitles, let’s dive deeper into what makes them truly remarkable. SRT subtitles have captured the hearts of content creators and viewers alike for several compelling reasons:

Universal Compatibility: SRT subtitles are like the friendly neighborhood superhero of the subtitle world. They work seamlessly with a wide range of video players and platforms. Whether you’re watching a video on your favorite streaming service or a personal project on your computer, chances are, SRT has got you covered.

Human-Friendly Editing: Have you ever wanted to make a quick change to a subtitle? With SRT, you don’t need to be a tech wizard. SRT files are plain text, which means you can edit them with a regular text editor. It’s as simple as updating a document on your computer. No need for complicated software or special skills.

Flexibility at Your Fingertips: SRT subtitles offer a level of flexibility that’s hard to beat. You have precise control over when each subtitle appears and disappears in your video. This precision ensures that your subtitles sync perfectly with the spoken dialogue or action on screen. It’s like having a personal conductor for your subtitles, making sure they harmonize with your video’s rhythm.

Accessible to All: One of the most beautiful things about SRT subtitles is their accessibility. They bridge language barriers, making it possible for people from different parts of the world to enjoy your content. Whether you’re sharing a heartwarming story, a tutorial, or a funny moment, SRT subtitles open the doors to a global audience.

User-Friendly for Everyone: Whether you’re a seasoned content creator or just someone who loves watching videos, SRT subtitles make the experience more enjoyable. They’re there to enhance your understanding, add context, and make sure you never miss a moment.

In a world where video content knows no boundaries, SRT subtitles are your trusted companions. They ensure that your message reaches far and wide, transcending languages and cultures. So, the next time you see those neat lines of text at the bottom of your screen, know that it’s not just text—it’s the magic of SRT subtitles, making your video experience exceptional.

That’s why SRT is one of the formats fully supported by Matesub for export. So, the next time you use Matesub to create or edit subtitles, rest assured that you can export them in the user-friendly and widely compatible SRT format, ready to enhance your videos and reach a global audience.

MicroDVD (.sub)

SUB subtitles are like the reliable Swiss Army knife of subtitles. They are named after MicroDVD, the software that popularized this format. What sets SUB apart is its straightforwardness and effectiveness.

The SUB Structure

To truly appreciate SUB subtitles, it’s essential to understand their structure. Imagine each subtitle as a small puzzle piece that fits perfectly into your video. SUB organizes these pieces neatly.

Each subtitle in a SUB file occupies a single line and consists of three key elements:

The Start Frame: The frame number, in curly braces, at which the subtitle appears. Unlike SRT, there is no sequence number; subtitles simply follow one another in the file.

The End Frame: A second frame number, also in curly braces, at which the subtitle disappears. Because timing is expressed in video frames rather than clock time, the player converts it to time using the video’s frame rate.

The Text: This is the heart of the subtitle: the actual words that appear on the screen. It can be dialogues, captions, or translations. A vertical bar (|) starts a new line of text.

An Example of SUB in Action:

In this example, each subtitle consists of three parts: the start frame, the end frame, and the text. When you play the video, your player reads the SUB file and shows each subtitle at the matching frame.
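A minimal MicroDVD parser sketch, assuming the common `{start}{end}Text` layout with `|` as a line separator. It keeps the frame numbers as they are; turning them into seconds needs the video’s frame rate, which is why a file timed for 25 fps drifts out of sync when played against a 23.976 fps encode.

```python
import re
from typing import List, NamedTuple


class FrameCue(NamedTuple):
    start_frame: int
    end_frame: int
    lines: List[str]


# {start frame}{end frame}text, with "|" separating lines of text
LINE = re.compile(r"^\{(\d+)\}\{(\d+)\}(.*)$")


def parse_microdvd(content: str) -> List[FrameCue]:
    cues = []
    for raw in content.splitlines():
        match = LINE.match(raw.strip())
        if match:  # ignore blank or unrecognized lines
            start, end, text = match.groups()
            cues.append(FrameCue(int(start), int(end), text.split("|")))
    return cues


def frame_to_seconds(frame: int, fps: float) -> float:
    """Frame numbers only become clock times once the frame rate is known."""
    return frame / fps
```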

The Pros of SUB Subtitles

Having familiarized ourselves with the SUB format, let’s now explore its key advantages and why it could be a valuable choice for subtitles:

Precise Timing: SUB subtitles shine in the realm of synchronization. Because their timing is counted in frames, each subtitle is tied to an exact video frame, provided the file was timed for the same frame rate as the video. It’s like having a conductor orchestrating each subtitle’s entrance and exit, delivering a seamless viewing experience.

Efficiency and Compactness: SUB files are renowned for their efficiency. They are compact, making them an ideal choice when storage space is a concern. Despite their small size, SUB subtitles pack a punch in delivering clear and effective communication.

Universal Appeal: SUB subtitles enjoy widespread support across various video players and platforms. This universal compatibility makes them versatile and accessible to a broad and diverse audience, transcending language and geographical boundaries.

WebVTT (.vtt)

The WebVTT format, often referred to as VTT, holds a prominent place in the world of subtitles. It’s defined by a W3C specification, making it a dependable choice for web-based content. VTT subtitles are prized for their simplicity and compatibility, ensuring accessibility across various platforms.

Here are the key features of WebVTT:

Simple Text-Based Format: WebVTT is a plain text format, making it easy to create and edit using basic text editors. It uses a straightforward structure, making it human-readable and editable without specialized software.

Timestamps: WebVTT supports precise timing of captions and subtitles. Each cue has a start and an end timestamp in hours, minutes, seconds, and milliseconds (HH:MM:SS.sss; the hours part may be omitted).

Cue Settings: You can specify settings for individual cues (captions or subtitles) by appending them to the cue’s timing line, for example line, position, size, align, and vertical. These settings control where and how each cue is placed on the video.

Cue Styles: WebVTT allows you to define global styles with the “::cue” selector, or styles specific to certain cues and spans, covering text styling such as font, color, and background. This enables you to customize the appearance of captions and subtitles to match your design or to distinguish between different speakers or voices.

Support for Multiple Languages: A WebVTT file holds a single text track, so each language is provided as a separate file (for example, several HTML <track> elements with different srclang values), and viewers can select their preferred language if the video player supports it. Within a cue, the <lang> tag can mark text in another language.

Line Breaks and Positioning: You can control line breaks in subtitles, ensuring that text is displayed in a readable manner. Additionally, you can specify the positioning of captions on the video screen.

Comments: You can include comments within a WebVTT file by using blocks that begin with the word “NOTE”. These comments are ignored by the video player and can be used for documentation or annotations.

Compatibility: WebVTT is widely supported by HTML5 video players and web browsers, making it a reliable choice for adding captions and subtitles to web-based video content.

Accessibility: WebVTT supports accessibility features, such as text descriptions of the video’s visual content for viewers who cannot see it (tracks of kind “descriptions”) and captions that include textual representations of non-speech sounds, to ensure accessibility for users with disabilities.

Error Handling: The WebVTT specification defines how parsers handle malformed input, generally by dropping an invalid cue rather than rejecting the whole file, which makes playback more robust.

Extensibility: While WebVTT provides a standardized format, it can also carry arbitrary data in metadata tracks, which can be helpful in specific use cases.

Overall, WebVTT is a versatile and user-friendly format for adding captions, subtitles, and other text tracks to web videos, making them more inclusive and accessible to a wide range of viewers.

The WebVTT Structure

The WebVTT structure is relatively straightforward and consists of key components:

Header: A WebVTT file begins with the keyword “WEBVTT” on the first line, which indicates that the file is using the WebVTT format (optional text may follow on the same line). The header can be followed by optional STYLE, REGION, and NOTE blocks, all placed before the first cue.

Style Block: The style block is an optional section, placed before the first cue, where you can define styles for captions and subtitles. These styles include font properties, colors, background, text shadow, and more. You can use the “::cue” selector to apply these styles globally to all cues or use specific selectors to target cues with specific attributes (e.g., speakers).

Cues: Cues are the individual subtitle or caption segments in a WebVTT file. Each cue has an optional identifier line, a timing line with its start and end times (optionally followed by cue settings), and then the text content of the cue. Cues are separated by blank lines.

Comments: You can include comments within a WebVTT file to provide annotations or additional information. Comments start with the keyword “NOTE” followed by the comment text. These comments are ignored by video players.

Whitespace: WebVTT allows for some flexibility in terms of whitespace. You can have multiple spaces or tabs between some elements (for example, around the “-->” arrow of a timing line), but line breaks are significant, as blank lines separate different components (e.g., header, cues).

Encoding: WebVTT files must be encoded in UTF-8, which covers practically all character sets and languages.

Language Support: A WebVTT file carries a single text track; subtitles or captions in other languages go in separate files, and viewers can select their preferred language if the video player supports it.

Accessibility Features: WebVTT also supports features for accessibility, including text descriptions of the video’s visual content (the “descriptions” track kind) and captions with textual representations of non-speech sounds (sound descriptions).

In summary, a WebVTT file has a simple structure that includes a header with optional styling information, followed by individual cues that specify the timing and content of subtitles or captions. It is designed to be human-readable and easily editable with basic text editors, making it a popular choice for web-based video content.

Let’s consider a simple example that illustrates the use of WebVTT to style and structure subtitles or captions for a video, including the differentiation of speakers (Speaker1 and Speaker2) and applying specific styles to their text:

Let’s have a look at the STYLE section (where you define the styling rules for subtitles or captions):

- ::cue applies the specified styles to all cues (subtitles) by default.

- In this example, all cues have a semi-transparent black background (rgba(0, 0, 0, 0.56)), white text color (#fff), a bold Arial font (font-family: Arial; font-weight: bold), and a text shadow for readability.

- Additionally, text inside a <v Speaker1> voice span has green text color (color: #00FF00;), selected with ::cue(v[voice="Speaker1"]), and text inside <v Speaker2> has blue text color (color: #0000FF;).
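The example file itself is missing, so here is a sketch that generates a comparable WebVTT file, assuming two speakers named Speaker1 and Speaker2 and the styles described above. It writes the background as `rgba(0, 0, 0, 0.56)`, since plain CSS does not accept a hex color inside `rgba()`, and targets speakers through `<v>` voice spans with `::cue(v[voice="..."])` selectors. Timestamps use a dot before the milliseconds, unlike SRT.

```python
from typing import List, Tuple

# Styles from the description above; voice spans are selected with ::cue(v[voice=...]).
STYLE = """STYLE
::cue {
  background-color: rgba(0, 0, 0, 0.56);
  color: #fff;
  font-family: Arial, sans-serif;
  font-weight: bold;
  text-shadow: 1px 1px 2px #000;
}
::cue(v[voice="Speaker1"]) { color: #00FF00; }
::cue(v[voice="Speaker2"]) { color: #0000FF; }"""


def vtt_time(ms: int) -> str:
    """Milliseconds -> HH:MM:SS.mmm (a dot, unlike SRT's comma)."""
    h, rest = divmod(ms, 3_600_000)
    m, rest = divmod(rest, 60_000)
    s, ms = divmod(rest, 1000)
    return f"{h:02d}:{m:02d}:{s:02d}.{ms:03d}"


def build_vtt(cues: List[Tuple[int, int, str, str]]) -> str:
    """cues: (start_ms, end_ms, speaker, text); blocks are separated by blank lines."""
    blocks = ["WEBVTT", STYLE]
    for start, end, speaker, text in cues:
        blocks.append(f"{vtt_time(start)} --> {vtt_time(end)}\n<v {speaker}>{text}</v>")
    return "\n\n".join(blocks) + "\n"


print(build_vtt([(1000, 4000, "Speaker1", "Hi there."),
                 (4500, 6000, "Speaker2", "Hello!")]))
```

The STYLE block contains no blank lines, because a blank line would end the block early.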

Advanced SubStation Alpha (.ass) and SubStation Alpha (.ssa)

The Advanced SubStation Alpha (.ass) and SubStation Alpha (.ssa) subtitle formats are powerful and feature-rich options for adding subtitles and captions to video content. These formats are favored by experienced subtitlers and offer a wide range of capabilities. Both .ass and .ssa formats share similarities, and .ass is considered an enhanced version of .ssa. Here, we’ll explore the structure and advantages of these formats.

The .ass and .ssa Structure

Both .ass and .ssa formats follow a similar structure that includes key components:

Script Info: This section contains metadata and settings for the subtitle file, including the title, author, and various configuration options. It may specify the video resolution (PlayResX and PlayResY), the line-wrapping style, and more.

V4 Styles: .ass and .ssa formats offer extensive styling options. The styles section, named [V4 Styles] in .ssa and [V4+ Styles] in .ass, defines how subtitles are displayed, including font properties (typeface, size, color, bold, italic, underline), positioning, alignment, and more.

Events: The Events section contains the main body of the subtitles. Each subtitle event includes timing information (start and end times), layering information (the Layer field in .ass, important for complex formatting), and the actual text content.

An Example of .ass/.ssa in Action:

In this example, the .ass/.ssa format includes metadata in the Script Info section, styling information in the V4 Styles section (V4+ Styles in .ass), and the actual subtitle events in the Events section. Each event specifies timing, styling, and the text content to be displayed.
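To make the Events section concrete, here is a sketch that formats .ass Dialogue lines, assuming the default V4+ event format (Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text) and a style named Default. ASS times use H:MM:SS.cc with centiseconds. Classic .ssa files put Marked instead of Layer in the first field, which this sketch does not handle.

```python
from typing import List

# Default column order of the [Events] section in .ass (V4+) scripts.
EVENT_FORMAT = "Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text"


def ass_time(ms: int) -> str:
    """Milliseconds -> H:MM:SS.cc (centiseconds, truncated)."""
    cs = ms // 10
    h, rest = divmod(cs, 360_000)
    m, rest = divmod(rest, 6_000)
    s, cs = divmod(rest, 100)
    return f"{h}:{m:02d}:{s:02d}.{cs:02d}"


def dialogue(start_ms: int, end_ms: int, text: str,
             style: str = "Default", layer: int = 0) -> str:
    """One Dialogue line; Name and Effect are empty, margins 0 (use the style's)."""
    return f"Dialogue: {layer},{ass_time(start_ms)},{ass_time(end_ms)},{style},,0,0,0,,{text}"


def events_section(lines: List[str]) -> str:
    return "\n".join(["[Events]", f"Format: {EVENT_FORMAT}", *lines])


print(events_section([dialogue(1000, 4000, "Hello, world"),
                      dialogue(4500, 6000, "First line\\NSecond line")]))
```

Because Text is the last field, it may contain commas; a hard line break inside the text is written as `\N`.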

The Pros of .ass/.ssa Subtitles

1. Advanced Styling: .ass/.ssa formats provide extensive control over subtitle styling. You can specify fonts, colors, sizes, bold, italic, underline, and more for precise subtitle appearance.

2. Complex Formatting: These formats support complex formatting options, such as multiple text layers, rotation, and advanced positioning. This flexibility is particularly useful for typesetting and stylized subtitles.

3. Rich Metadata: The Script Info section allows you to include detailed metadata about the subtitle file, enhancing its documentation and organization.

4. Script Type: The ScriptType field in the Script Info section declares the format version (v4.00 for .ssa, v4.00+ for .ass), and override tags in the text, such as \k for karaoke timing, make these formats suitable for various applications, including karaoke, complex animations, and typesetting.

5. Versatility: .ass/.ssa subtitles work well with multimedia players that support them, making them suitable for a wide range of video content.

6. Multilingual Support: These formats can handle subtitles in multiple languages within a single file, making them versatile for international audiences.

7. Precise Timing: .ass/.ssa formats enable precise control over subtitle timing, ensuring synchronization with video dialogues and actions.

8. Editability: While they are more complex than some other formats, .ass/.ssa files are still human-readable and can be edited using text editors, providing flexibility for subtitlers and content creators.

9. Community and Tool Support: There is an active community of subtitlers and tools like Aegisub that facilitate the creation and editing of .ass/.ssa subtitles.

10. Compatibility: .ass/.ssa formats are supported by various media players and multimedia applications, making them suitable for both professional and amateur subtitlers.

In conclusion, the .ass and .ssa subtitle formats offer powerful styling, formatting, and timing capabilities, making them a preferred choice for subtitlers who require advanced features and precise control over subtitle appearance. These formats are widely compatible and versatile for a range of multimedia content.

Timed Text Markup Language (TTML)

Timed Text Markup Language (TTML) is a comprehensive and standardized format for representing subtitles, captions, and other timed text in multimedia content. TTML is widely used in broadcasting, streaming, and web-based video services. It offers a rich set of features for creating accessible and styled text tracks. Here, we’ll explore the structure and advantages of TTML for subtitles and captions.

The TTML Structure

TTML documents consist of XML markup that represents timed text content. The structure of a TTML document typically includes the following components:

Head: The head section contains metadata and styling information for the entire TTML document. It may include details such as the language of the subtitles, styling preferences, and metadata about the content.

Body: The body section contains the main content of the subtitles or captions. It includes individual text elements that are associated with specific timing cues.

Styling: TTML allows for detailed styling of subtitles, including font family, size, color, background, positioning, and more. Styling is typically defined within the head section and can be applied to individual text elements within the body.

Timing Information: Each text element within the body of the TTML document is associated with timing information, specifying when the text should be displayed and when it should disappear.

An Example of TTML in Action:

In this TTML example:

- The <tt> element defines the TTML document.

- The <head> section contains metadata and styling information.

- The <body> section contains the main content, with each subtitle enclosed in a <div> element.

- Timing information (begin and end attributes) specifies when each subtitle is displayed.

- Styling information is defined in the <styling> section and applied to each <div> element.
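A sketch that builds a minimal TTML document with Python’s standard `xml.etree.ElementTree`, assuming the TTML namespaces `http://www.w3.org/ns/ttml` and `http://www.w3.org/ns/ttml#styling`. It places each subtitle in a `<p>` element (with `begin` and `end` attributes) inside a single `<div>`, which is a common arrangement; the style ID `s1` and the font choice are illustrative.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Tuple

TT = "http://www.w3.org/ns/ttml"
TTS = "http://www.w3.org/ns/ttml#styling"
XML = "http://www.w3.org/XML/1998/namespace"  # serialized as the xml: prefix


def q(ns: str, name: str) -> str:
    """Build an ElementTree qualified name such as '{ns}name'."""
    return f"{{{ns}}}{name}"


def build_ttml(cues: Iterable[Tuple[str, str, str]], lang: str = "en") -> str:
    """cues: (begin, end, text) with clock times such as '00:00:01.000'."""
    ET.register_namespace("", TT)        # TTML as the default namespace
    ET.register_namespace("tts", TTS)    # styling attributes use tts:
    tt = ET.Element(q(TT, "tt"), {q(XML, "lang"): lang})
    styling = ET.SubElement(ET.SubElement(tt, q(TT, "head")), q(TT, "styling"))
    ET.SubElement(styling, q(TT, "style"), {
        q(XML, "id"): "s1",
        q(TTS, "color"): "white",
        q(TTS, "fontFamily"): "proportionalSansSerif",
    })
    div = ET.SubElement(ET.SubElement(tt, q(TT, "body")), q(TT, "div"))
    for begin, end, text in cues:
        p = ET.SubElement(div, q(TT, "p"), {"begin": begin, "end": end, "style": "s1"})
        p.text = text
    return ET.tostring(tt, encoding="unicode")


print(build_ttml([("00:00:01.000", "00:00:04.000", "Hello, world!")]))
```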

The Pros of TTML Subtitles

1. Standardization: TTML is an industry-standard format with well-defined specifications, making it suitable for professional and broadcast applications.

2. Rich Styling: TTML supports extensive styling options, allowing for precise control over the appearance of subtitles, including font properties, colors, backgrounds, and positioning.

3. Internationalization: TTML provides excellent support for multilingual content and ensures accurate text rendering for various languages and scripts.

4. Accessibility: TTML supports accessibility features, such as text descriptions, ensuring that content is accessible to individuals with disabilities.

5. Timing Precision: TTML allows for precise timing control, ensuring synchronization with video dialogues and actions.

6. Versatility: TTML can be used in various scenarios, including broadcasting, streaming services, and web-based multimedia content.

7. Compatibility: TTML is supported by a wide range of multimedia players and platforms, making it a reliable choice for content distribution.

8. Community and Tool Support: There are numerous authoring tools and software applications available for creating and editing TTML subtitles, facilitating content production.

9. XML Format: Being based on XML, TTML documents are structured and machine-readable, enabling automated processing and integration with other systems.

10. Global Adoption: TTML is widely adopted by broadcasters and streaming platforms worldwide, making it a global standard for timed text representation.

In summary, TTML is a robust and versatile format for creating subtitles and captions in multimedia content. Its standardization, rich styling options, internationalization support, and accessibility features make it a preferred choice for professional and accessible video content distribution.

SAMI Format (.smi)

The SAMI (Synchronized Accessible Media Interchange) format, often referred to as SMI, is a versatile format for creating subtitles and captions in multimedia content. It is widely supported and offers a structured and easy-to-understand framework for displaying text alongside video or audio content. When viewed in a text editor, an SMI file displays the time when text appears and corresponding subtitles, similar to the SRT format.

The SMI Structure

Let’s take a closer look at how SMI works. Think of a subtitle as a brief snippet of text that appears on your screen while you’re watching a video or listening to audio. SMI organizes these snippets in a clear and structured manner.

Each snippet in an SMI file typically consists of three main parts:

Sync Tags: Instead of sequence numbers, each subtitle is introduced by a <SYNC> tag, and subtitles appear in the order of their tags in the file.

Timing Information: The Start attribute of each <SYNC> tag gives, in milliseconds, the time when the subtitle should be displayed. A subtitle stays on screen until the next <SYNC> tag, so an empty one (often containing only &nbsp;) is used to clear the screen.

Text Content: The text content is the actual subtitle or caption. It represents the spoken dialogue, captions, or any relevant text that accompanies the media.

Here’s an example of SMI in action:

In this SMI example, each snippet is enclosed within <SYNC> tags, specifying the timing for when the text should be displayed. The text content is styled with font color attributes to enhance readability.
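A rough SAMI reader sketch based on regular expressions, not a full HTML parser. It assumes each `<SYNC Start=...>` value is in milliseconds and that a subtitle lasts until the next `<SYNC>`, treating an empty or `&nbsp;`-only SYNC as “clear the screen”; `<br>` becomes a line break and other tags (such as `<P>` or `<font>`) are stripped.

```python
import re
from typing import List, NamedTuple, Optional


class SamiCue(NamedTuple):
    start_ms: int
    end_ms: Optional[int]   # None for the last cue in the file
    text: str


# Text belonging to one <SYNC> runs until the next <SYNC>, </BODY>, or end of file.
SYNC = re.compile(r'<SYNC\s+Start\s*=\s*"?(\d+)"?[^>]*>(.*?)(?=<SYNC|</BODY>|\Z)',
                  re.IGNORECASE | re.DOTALL)
BR = re.compile(r"<br\s*/?>", re.IGNORECASE)
TAG = re.compile(r"<[^>]+>")


def parse_sami(markup: str) -> List[SamiCue]:
    blocks = []
    for match in SYNC.finditer(markup):
        body = BR.sub("\n", match.group(2))                     # <br> -> line break
        text = TAG.sub("", body).replace("&nbsp;", " ").strip()  # drop <P>, <font>, ...
        blocks.append((int(match.group(1)), text))
    cues = []
    for i, (start, text) in enumerate(blocks):
        end = blocks[i + 1][0] if i + 1 < len(blocks) else None
        if text:  # an empty SYNC only clears the previous subtitle
            cues.append(SamiCue(start, end, text))
    return cues
```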

The Pros of SMI Subtitles

1. Versatile Format: SMI is a versatile format suitable for both video and audio content, making it a valuable tool for multimedia creators.

2. Standard Structure: SMI uses an HTML-like structure that is easy to understand and edit, even with basic text editors.

3. Precise Timing: SMI provides precise timing control, ensuring that subtitles are displayed and removed at the right moments, enhancing the viewer’s experience.

4. Accessibility: SMI supports accessibility features, making it suitable for creating content that is inclusive and accessible to individuals with disabilities.

5. Styling Options: As in the example, you can style SMI subtitles using HTML and CSS attributes, allowing for customization and improved visual appeal.

6. Global Compatibility: SMI is supported by various media players and platforms, ensuring compatibility with a wide range of devices and applications.

7. Multilingual Support: SMI can accommodate subtitles and captions in multiple languages within a single file, making it ideal for reaching a diverse audience.

8. User-Friendly Editing: SMI files are human-readable and can be edited with standard text editors, simplifying the editing process for content creators.

9. Educational Applications: SMI is commonly used in educational contexts to provide transcripts, translations, and additional context for audio and video materials.

In summary, the SAMI format (SMI) offers a structured and accessible way to display subtitles and captions alongside multimedia content. Its versatility, standardized structure, and compatibility make it a valuable choice for multimedia creators aiming to enhance the accessibility and comprehension of their videos and audio clips.

Binary subtitle formats

While text-based formats like SRT and WebVTT are popular for their simplicity and universality, many broadcast and disc-based workflows rely on binary subtitle formats instead. Depending on the format, these files store subtitles either as bitmap images or as text and control codes packed into a binary structure. In this chapter, we'll explore binary subtitle formats, examining their features and applications in video production and distribution.

STL (Spruce Subtitle File)

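STL is a subtitle format widely used in the broadcasting and video production industries. The name actually covers two different formats. Spruce STL is a plain-text format used by DVD authoring tools. EBU STL is the binary format standardized by the European Broadcasting Union (see item 10). Neither stores subtitles as bitmaps: EBU STL keeps the subtitle text, together with timing, positioning, and styling codes, in fixed-size binary blocks. Here's more information about STL: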

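1. Text-Based Subtitles: Although EBU STL is a binary file, its subtitles are stored as text plus control codes, not as bitmap images. A 1024-byte General Subtitle Information (GSI) block is followed by 128-byte Text and Timing Information (TTI) blocks. Each TTI block holds the timing, position, and text of one subtitle (or part of one).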

2. Precise Timing: STL files include timing information that specifies when each subtitle should appear and disappear during video playback. This timing information is crucial for synchronizing subtitles with the corresponding video or audio content.

3. Positioning and Styling: STL subtitles offer flexibility in terms of positioning and styling. Subtitles can be placed at specific vertical positions on the screen with left, centred, or right justification. Control codes can apply styling such as colours, italics, underlining, and double-height text to enhance readability and visual appeal.

4. Compatibility: STL is a widely recognized and supported format in the broadcasting industry. It is compatible with professional broadcast equipment and video editing software used by broadcasters and post-production professionals.

5. Standardization: STL adheres to specific standards, making it suitable for television broadcast and professional video production. The format ensures consistent rendering of subtitles across different broadcasting systems.

6. Multilingual Support: STL supports multiple languages and character sets, making it suitable for international broadcasting and providing subtitles in various languages.

7. Editing: While STL files are primarily used for broadcast purposes, they can be edited using specialized software to adjust timing, positioning, and styling of subtitles when necessary.

8. Subtitle Placement: STL subtitles can be positioned at different locations on the screen, such as the top, bottom, or sides, depending on the requirements of the content and broadcasting standards.

9. Legacy Format: STL has been in use for many years and is considered a legacy format in the broadcasting industry. However, it continues to be a reliable choice for adding subtitles to television programs, documentaries, and other broadcasted content.

10. European Variant (EBU STL): The European Broadcasting Union (EBU) has its own variant of STL, known as EBU STL. EBU STL adheres to European broadcasting standards and includes support for international character sets and symbols commonly used in European languages.
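Most broadcast workflows exchange the EBU variant, so here is a minimal Python sketch of reading it. It assumes the layout as commonly documented for EBU Tech 3264:

- A 1024-byte GSI block. Bytes 3 to 10 hold a Disk Format Code such as `STL25.01` (25 fps).
- A series of 128-byte TTI blocks. Each has the subtitle number at bytes 1 to 2 (little-endian), and the in and out timecodes as four binary bytes (hours, minutes, seconds, frames) at bytes 5 to 12. Bytes 13 to 15 hold the vertical position, justification, and comment flag. A 112-byte text field follows.

Text decoding is deliberately simplified to printable ASCII plus the 0x8A line break. Real files need the character code table named in the GSI block, and this sketch does not merge extension blocks that continue one subtitle. `read_ebu_stl` and its helpers are illustrative names.

```python
import struct

GSI_SIZE = 1024  # General Subtitle Information block (assumed size)
TTI_SIZE = 128   # one Text and Timing Information block (assumed size)
FRAME_RATES = {b"STL25.01": 25, b"STL30.01": 30}  # Disk Format Code -> fps


def tc_to_ms(tc: bytes, fps: int) -> int:
    # Assumed layout: four binary bytes for hours, minutes, seconds, frames
    h, m, s, f = tc
    return ((h * 60 + m) * 60 + s) * 1000 + f * 1000 // fps


def decode_text(field: bytes) -> str:
    # Simplification: keep printable ASCII, map 0x8A (line break) to "\n",
    # drop 0x8F padding and all Teletext/styling control codes.
    out = []
    for b in field:
        if b == 0x8A:
            out.append("\n")
        elif 0x20 <= b <= 0x7E:
            out.append(chr(b))
    return "".join(out).strip()


def read_ebu_stl(data: bytes) -> list[dict]:
    fps = FRAME_RATES[data[3:11]]  # Disk Format Code at GSI bytes 3-10
    subs = []
    for off in range(GSI_SIZE, len(data) - TTI_SIZE + 1, TTI_SIZE):
        block = data[off:off + TTI_SIZE]
        _sgn, sn, _ebn, _cs = struct.unpack_from("<BHBB", block, 0)
        if block[15]:  # comment flag set: not a displayed subtitle
            continue
        subs.append({
            "sn": sn,
            "start_ms": tc_to_ms(block[5:9], fps),
            "end_ms": tc_to_ms(block[9:13], fps),
            "vertical_position": block[13],
            "justification": block[14],
            "text": decode_text(block[16:]),
        })
    return subs
```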

STL subtitles are essential for professional video production and broadcasting, ensuring that subtitles are accurately timed, visually appealing, and compliant with industry standards. While text-based subtitle formats are more common for consumer video content, STL remains a trusted format for delivering high-quality subtitles in the broadcast industry, where precision and consistency are paramount.

PAC (Presentation Audio/Video Coding)

PAC (Presentation Audio/Video Coding) is a binary subtitle format primarily used for storing and displaying subtitles in the context of DVDs (Digital Versatile Discs) and DVD-Video. PAC files contain graphical representations of subtitles in the form of bitmap images and are used to provide high-quality, visually appealing subtitles for DVD content. Here’s more information about PAC:

1. Bitmap-Based Subtitles: PAC subtitles are image-based, meaning that each subtitle is represented as a bitmap image. These bitmap images can include text, symbols, and other graphical elements that make up the subtitles.

2. High Quality: One of the key features of PAC is its ability to provide high-quality and visually appealing subtitles. The use of bitmap images allows for detailed and well-styled subtitles, including various font styles, sizes, and colors.

3. Precise Timing: PAC files include timing information that specifies when each subtitle should appear and disappear during DVD playback. This timing information ensures that subtitles are synchronized with the corresponding video or audio content.

4. Versatility: PAC subtitles are versatile and can support subtitles in multiple languages, making them suitable for international DVDs. Different language tracks can be included, allowing viewers to select their preferred language.

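5. DVD Compatibility: PAC is used in DVD subtitle production for DVD movies, TV series, and other video content. DVD players do not read PAC files directly, however. The DVD-Video standard carries subtitles as run-length-encoded sub-picture streams, so subtitles are converted into that form during DVD authoring.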

6. Editing: While PAC files are primarily used for DVD production, they can be edited using specialized software to adjust timing, positioning, and styling of subtitles when necessary. This is particularly important for DVD authoring and post-production.

7. Overlaying Capability: PAC subtitles can be overlaid on the video content, ensuring that they appear seamlessly during DVD playback. This overlaying capability contributes to the overall viewing experience.

8. Advanced Styling: PAC allows for advanced styling options, including the use of different fonts, font sizes, colors, and special effects. This flexibility enables DVD producers to create subtitles that match the visual style of the content.

9. Legacy Format: PAC is considered a legacy format, primarily used for DVDs and DVD-Video. While newer formats may offer more features and flexibility, PAC continues to be used in the DVD industry.

PAC subtitles play a crucial role in enhancing the viewer’s experience when watching DVDs, ensuring that subtitles are not only informative but also visually engaging. While this format is less common in modern streaming platforms and digital media, it remains essential for DVD production, where high-quality subtitles are a key component of the content.

Other binary formats

Aside from STL and PAC, there are several other binary subtitle formats that you may consider for various purposes. These formats may vary in terms of importance or adoption depending on your specific requirements and industry. Here is a list of some binary subtitle formats in approximate order of their importance and adoption:

CineCanvas : Specifically designed for digital cinema, CineCanvas provides high-quality, image-based subtitles for cinematic presentations. It’s crucial in the film industry.

DVB-Subtitles : Used in digital television broadcasting, including both image-based and text-based subtitle options. DVB subtitles are prevalent in Europe and other regions.

IMX Subtitles : Supported in the IMX professional video format, used in broadcast and post-production environments. It allows for image-based subtitles.

OP-47 and OP-42 : Formats for encoding and decoding bitmap subtitles within MPEG-2 (OP-47) and MPEG-4 (OP-42) video streams, commonly used in digital broadcasting.

HDMV PGS (Presentation Graphic Stream) : A format used in Blu-ray Discs, providing graphic-based subtitles. Important for authoring Blu-ray content.

Teletext : A binary subtitle format used for analog and digital television broadcasting, commonly found in some regions.

ATSC A/53 Part 4 Captions : Used for closed captions in ATSC digital television broadcasts in North America.

CPCM : Used for bitmap-based captions in digital television broadcasting, with various regional implementations.

XDS (Extended Data Services) : Provides a way to deliver additional data, including captions and subtitles, in the context of television broadcasting.

The importance and adoption of these formats can vary widely based on geographic location, industry, and the specific use case. For example, STL and PAC are crucial in broadcasting and DVD production, while formats like CineCanvas are vital in digital cinema. It’s essential to consider your specific needs and the standards prevalent in your region or industry when choosing a binary subtitle format.


This activity has received funding from the European Institute of Innovation and Technology (EIT). This body of the European Union receives support from the European Union's Horizon 2020 research and innovation programme.


Presentation Graphic Stream Subtitle Format

Blu-ray video, whether in its native M2TS form or ripped to MKV, allows for two types of on-screen overlays that can be used for subtitles. One is text-based, but so far I have not seen any Blu-ray disc use it for subtitles. The other is PGS (Presentation Graphic Stream), which consists of bitmaps and the time frames during which they must be displayed. This second stream is by far the most common on Blu-ray discs, so it is no surprise that many MakeMKV Blu-ray rips carry PGS subtitles. As we'll see below, tools exist to extract this stream to .sup files. These are not the same format as the .sup files that some tools extract from DVDs.
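Once extracted, a .sup file is simply the PGS segments laid end to end. The following is a minimal Python sketch that walks them. It assumes the commonly documented 13-byte segment header, with all fields big-endian:

- the ASCII magic "PG";
- a 32-bit PTS and a 32-bit DTS, both counted at 90 kHz;
- a one-byte segment type;
- a 16-bit payload size.

`iter_sup_segments` and `Segment` are illustrative names, and the payloads are left undecoded.

```python
import struct
from typing import Iterator, NamedTuple

SEGMENT_TYPES = {0x14: "PDS", 0x15: "ODS", 0x16: "PCS", 0x17: "WDS", 0x80: "END"}
HEADER = struct.Struct(">2sIIBH")  # magic, PTS, DTS, type, size: 13 bytes


class Segment(NamedTuple):
    pts_ms: float  # the clock ticks at 90 kHz, so ms = ticks / 90
    dts_ms: float
    kind: str
    payload: bytes


def iter_sup_segments(data: bytes) -> Iterator[Segment]:
    offset = 0
    while offset < len(data):
        magic, pts, dts, seg_type, size = HEADER.unpack_from(data, offset)
        if magic != b"PG":
            raise ValueError(f"missing 'PG' magic at offset {offset}")
        start = offset + HEADER.size
        payload = data[start:start + size]
        if len(payload) != size:
            raise ValueError(f"truncated segment at offset {offset}")
        kind = SEGMENT_TYPES.get(seg_type, hex(seg_type))
        yield Segment(pts / 90, dts / 90, kind, payload)
        offset = start + size
```

For example, a PCS whose PTS is 0x0004C11C would come out with `pts_ms == 3462.0`. That is 3.462 seconds into the movie.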

Note that HD DVD also has a .sup format, which is slightly different from the Blu-ray one. Because the PCH cannot (yet) display PGS, the only way to get subtitles on it for MKV, TS, or M2TS material is to use a separate text file (.srt) containing the subtitles. In the following sections, you will learn what a PGS subtitle is, how it differs from SRT, and what to do when PGS subtitles are not showing.

Table of Contents

- Part 1. What Is PGS Subtitle? What's the Difference between SRT and PGS?

- Part 2. Can I Extract PGS Subtitles from MKV for Editing?

- Part 3. What to Do When HDMV PGS subtitles Not Showing?

- Part 4. FAQ about PGS subtitles

Part 1. What Is PGS Subtitle? What's the Difference between SRT and PGS?

You may notice that many Blu-ray movies and rips come with PGS subtitles. So what's the difference between PGS and SRT subtitles? An SRT file is plain text: it contains numbered cues, each with its timing and lines of text, and you can edit it even in WordPad. PGS subtitles, by contrast, are bitmaps that often use many colours and styles (for example, for karaoke sequences in Disney movies), and they cannot be edited easily. As a result, PGS subtitle files are much larger than SRT files.

Because PGS subtitles are graphics-based, they are extremely difficult to edit or remove once they are embedded in the video. Extracting them as text or changing them requires an OCR (optical character recognition) program.
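Once OCR has recovered the text, producing SRT is only a formatting step. Each cue consists of:

- a sequence number;
- a `start --> end` line with `HH:MM:SS,mmm` timestamps;
- the subtitle text;
- a blank line.

The following is a minimal Python sketch of that step. The function names are hypothetical, and the PGS timestamps are assumed to be 90 kHz ticks, so dividing by 90 gives milliseconds.

```python
def srt_timestamp(ms: int) -> str:
    """Format a millisecond offset as an SRT timestamp (HH:MM:SS,mmm)."""
    hours, rest = divmod(ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    seconds, millis = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d},{millis:03d}"


def srt_cue(index: int, start_ms: int, end_ms: int, text: str) -> str:
    """One SRT cue: sequence number, time range, text, then a blank line."""
    return f"{index}\n{srt_timestamp(start_ms)} --> {srt_timestamp(end_ms)}\n{text}\n\n"


# PGS timestamps count 90 kHz ticks; integer-divide by 90 to get milliseconds.
pts_start, pts_end = 0x0004C11C, 0x0004C11C + 90 * 2000
print(srt_cue(1, pts_start // 90, pts_end // 90, "Hello there."), end="")
# 1
# 00:00:03,462 --> 00:00:05,462
# Hello there.
```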

Have you ever had problems with Plex when streaming 4K MakeMKV rips with PGS subtitles? Typically, the source video is transcoded down to 1080p instead of being direct-played. If the PGS subtitles are turned off, however, playback returns to the original 4K quality.

This happens because Plex transcodes whenever an image-based subtitle format like PGS is in use. If you want to play the original 4K video on Plex without downscaling, make sure your 4K HDR files carry SRT subtitles rather than PGS subtitles, which Plex cannot play directly. To fix the problem, you can use Subtitle Edit to convert PGS to SRT: it uses OCR to turn the PGS images into text, so Plex won't transcode. The same conversion helps when PGS subtitles don't show on Samsung or other TVs. Follow the steps below:

- Go to https://www.nikse.dk/subtitleedit, then download, install, and launch the app.

- Click File and import the subtitle from the MKV file, or drag and drop the file into the program.

- Select the video file with PGS subtitles and click Open; the OCR window then pops up.

- Press Start OCR and let Subtitle Edit transcribe the subtitles. If necessary, correct any wrong transcriptions, which are shown to the left of the OCR button.

- Click OK. Then click Format, select SubRip (.srt), and set Encoding to Unicode (UTF-8). Click File > Save as, choose a file name, and click Save to convert the PGS subtitles to SRT.

Note: OCR in Subtitle Edit is the DIY method, and it is time-consuming and not always reliable. Alternatively, you can download SRT subtitles from a site such as subscene.com or another free subtitle site for movies and TV shows. Then use MKVToolNix to remove the PGS subtitles and add the SRT files to the MKV file. Because MKVToolNix remuxes without re-encoding, this runs fast.
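You can also script the MKVToolNix step. The following is a minimal Python sketch that assumes `mkvmerge` (part of MKVToolNix) is on your `PATH`. The file names and the `eng` language code are placeholders.

In mkvmerge, options apply to the file that follows them, so `--no-subtitles` affects only the source MKV. Note that it drops every subtitle track, not just the PGS ones. To keep specific tracks instead, use mkvmerge's `--subtitle-tracks` option.

```python
import shlex
import subprocess


def build_mkvmerge_cmd(source_mkv: str, srt_path: str, output_mkv: str,
                       language: str = "eng") -> list[str]:
    return [
        "mkvmerge",
        "-o", output_mkv,
        # Copy video and audio from the source, but drop all of its subtitle tracks
        "--no-subtitles", source_mkv,
        # Tag the SRT file's only track (ID 0) with a language code
        "--language", f"0:{language}", srt_path,
    ]


def replace_subtitles(source_mkv: str, srt_path: str, output_mkv: str) -> None:
    cmd = build_mkvmerge_cmd(source_mkv, srt_path, output_mkv)
    print(shlex.join(cmd))
    subprocess.run(cmd, check=True)  # remux only: nothing is re-encoded
```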

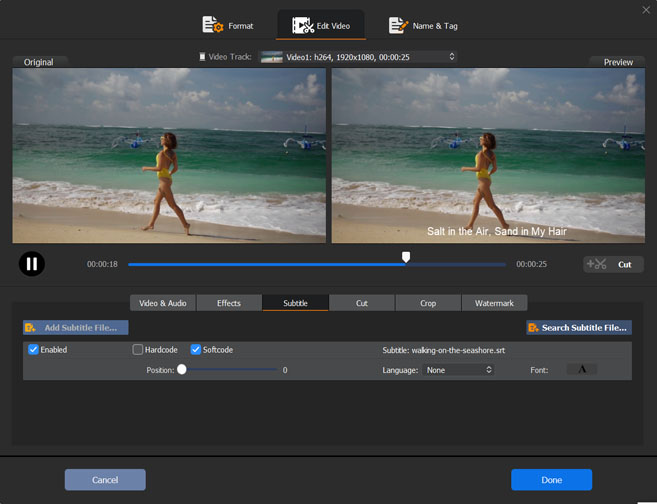

Part 3. HDMV PGS subtitles Not Showing? Hardcode Subtitles to MKV, MP4 or Other Files

PGS subtitles often fail to show when you play a video on a PC, TV, phone, or media player. External subtitles also won't appear when the subtitle file's name does not match the video file's name. One way to fix this is to hardcode the subtitles into the video. Winxvideo AI is a free converter and editor that can hardcode subtitles into any video, be it 4K, MP4, or MKV, making it playable on your device.

How to Hardcode Subtitles to Video with Winxvideo AI?

- Load the video into the program: click the +Video button to import the MKV rip with subtitles.

- Choose the output format if needed. For the best compatibility, choose MP4 (H.264).

- Hardcode subtitles to the video. Click the Edit button on the main interface to open the basic editing features. Go to Subtitles > Add subtitle files and hardcode or softcode a subtitle file into the video. If you don't have a subtitle file, click Search subtitle file to download your preferred subtitles first.

- Click the Browse button to choose where to save the subtitled video, then press RUN to begin hardcoding subtitles into the MKV or MP4.


Part 4. FAQ about PGS Subtitles

1. How to display PGS subtitles in Plex?

If your media contains embedded PGS subtitles, Plex treats them as local subtitles, so you need to enable Local Media Assets. To do so:

- Launch the Plex Web App and choose Settings from the top right of the home screen.
- Select Plex Media Server from the horizontal list, then choose Agents.
- Select the library type and agent to change.
- Check Local Media Assets and move it to the top of the list.

Plex can display some PGS subtitles, but it will transcode the video and burn the subtitles in when streaming.

2. Why does HandBrake burn in subtitles from a Blu-ray source?

Some users notice that HandBrake always burns in subtitles when creating an MP4 from a Blu-ray source. This is expected behaviour. HandBrake offers two subtitle output methods: burned-in and soft subtitles. MP4 output does not support PGS pass-through, so HandBrake can only burn one PGS subtitle track into an MP4 video. With MKV output, by contrast, it can pass through multiple PGS subtitle tracks. So when you export to MP4, PGS subtitles are burned into the video.

ABOUT THE AUTHOR

Jack Watt is a sought-after editor at Digiarty. He is responsible for digital and multimedia world, delivering definitive video and audio related software reviews, enlightening guides, and incisive analysis. As a fan of Apple, Jack Watt also brings his experience to more readers and focuses on writing of the Apple ecosystem at large.

Portable Network Graphics (PNG) Specification (Third Edition)

W3C Candidate Recommendation Snapshot 21 September 2023

Copyright © 1996-2023 World Wide Web Consortium. W3C® liability, trademark and permissive document license rules apply.

This document describes PNG (Portable Network Graphics), an extensible file format for the lossless, portable, well-compressed storage of static and animated raster images. PNG provides a patent-free replacement for GIF and can also replace many common uses of TIFF. Indexed-colour, greyscale, and truecolour images are supported, plus an optional alpha channel. Sample depths range from 1 to 16 bits.

PNG is designed to work well in online viewing applications, such as the World Wide Web, so it is fully streamable with a progressive display option. PNG is robust, providing both full file integrity checking and simple detection of common transmission errors. Also, PNG can store colour space data for improved colour matching on heterogeneous platforms.

This specification defines two Internet Media Types, image/png and image/apng.

Status of This Document

This section describes the status of this document at the time of its publication. A list of current W3C publications and the latest revision of this technical report can be found in the W3C technical reports index at https://www.w3.org/TR/.

This specification is intended to become an International Standard, but is not yet one. It is inappropriate to refer to this specification as an International Standard.

This document was published by the Portable Network Graphics (PNG) Working Group as a Candidate Recommendation Snapshot using the Recommendation track.

Publication as a Candidate Recommendation does not imply endorsement by W3C and its Members. A Candidate Recommendation Snapshot has received wide review, is intended to gather implementation experience, and has commitments from Working Group members to royalty-free licensing for implementations.

This Candidate Recommendation is not expected to advance to Proposed Recommendation any earlier than 21 December 2023.

This document was produced by a group operating under the W3C Patent Policy. W3C maintains a public list of any patent disclosures made in connection with the deliverables of the group; that page also includes instructions for disclosing a patent. An individual who has actual knowledge of a patent which the individual believes contains Essential Claim(s) must disclose the information in accordance with section 6 of the W3C Patent Policy.

This document is governed by the 12 June 2023 W3C Process Document.

1. Introduction

The design goals for this specification were:

- Portability: encoding, decoding, and transmission should be software and hardware platform independent.

- Completeness: it should be possible to represent truecolour, indexed-colour, and greyscale images, in each case with the option of transparency, colour space information, and ancillary information such as textual comments.

- Serial encode and decode: it should be possible for datastreams to be generated serially and read serially, allowing the datastream format to be used for on-the-fly generation and display of images across a serial communication channel.

- Progressive presentation: it should be possible to transmit datastreams so that an approximation of the whole image can be presented initially, and progressively enhanced as the datastream is received.

- Robustness to transmission errors: it should be possible to detect datastream transmission errors reliably.

- Losslessness: filtering and compression should preserve all information.

- Performance: any filtering, compression, and progressive image presentation should be aimed at efficient decoding and presentation. Fast encoding is a less important goal than fast decoding. Decoding speed may be achieved at the expense of encoding speed.

- Compression: images should be compressed effectively, consistent with the other design goals.

- Simplicity: developers should be able to implement the standard easily.

- Interchangeability: any standard-conforming PNG decoder shall be capable of reading all conforming PNG datastreams.

- Flexibility: future extensions and private additions should be allowed for without compromising the interchangeability of standard PNG datastreams.

- Freedom from legal restrictions: no algorithms should be used that are not freely available.

2. Scope

This specification specifies a datastream and an associated file format, Portable Network Graphics (PNG, pronounced "ping"), for a lossless, portable, compressed individual computer graphics image or frame-based animation, transmitted across the Internet.

3. Terms, definitions, and abbreviated terms

For the purposes of this specification the following definitions apply.

Chromaticity is a measure of the quality of a color regardless of its luminance.

The foreground image is said to be composited against the background.

SOURCE: [ RFC1951 ]

Software causes an image to appear on screen by loading the image into the frame buffer .

Luminance and chromaticity together fully define a perceived colour. A formal definition of luminance is found at [ COLORIMETRY ].

Only RGB may be used in PNG, ICtCp is NOT supported.

PNG four-byte unsigned integer: a four-byte unsigned integer limited to the range 0 to 2^31 - 1.

The restriction is imposed in order to accommodate languages that have difficulty with unsigned four-byte values.
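PNG stores such integers in network byte order (most significant byte first). The following is a minimal Python sketch that reads one while enforcing the range above. `read_png_uint31` is a hypothetical helper name.

```python
import struct

PNG_UINT31_MAX = 2**31 - 1


def read_png_uint31(data: bytes, offset: int = 0) -> int:
    """Read a big-endian four-byte value and enforce the 0 to 2^31 - 1 range."""
    (value,) = struct.unpack_from(">I", data, offset)
    if value > PNG_UINT31_MAX:
        raise ValueError(f"{value:#x} exceeds the PNG four-byte unsigned integer range")
    return value
```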

Standard dynamic range is independent of the primaries and hence, gamut. Wide color gamut SDR formats are supported by PNG.

zlib: deflate-style compression method.

SOURCE: [ rfc1950 ]

Also refers to the name of a library containing a sample implementation of this method.

CRC (cyclic redundancy check): type of check value designed to detect most transmission errors.

A decoder calculates the CRC for the received data and checks by comparing it to the CRC calculated by the encoder and appended to the data. A mismatch indicates that the data or the CRC were corrupted in transit.
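In PNG, each chunk's CRC is the standard CRC-32, the same one that zlib's `crc32` computes. It covers the chunk type and chunk data, but not the length field. The following is a minimal Python sketch of the decoder-side check described above. The helper names are hypothetical.

```python
import zlib


def chunk_crc(chunk_type: bytes, chunk_data: bytes) -> int:
    # CRC-32 over chunk type + chunk data (the length field is excluded)
    return zlib.crc32(chunk_type + chunk_data)


def crc_matches(chunk_type: bytes, chunk_data: bytes, stored_crc: bytes) -> bool:
    """Recompute the CRC and compare it with the four stored bytes (big-endian)."""
    return chunk_crc(chunk_type, chunk_data) == int.from_bytes(stored_crc, "big")


print(hex(chunk_crc(b"IEND", b"")))  # 0xae426082, the fixed CRC of every IEND chunk
```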

4. Concepts

4.1 Static and animated images

All PNG images contain a single static image.

Some PNG images, called Animated PNG (APNG), also contain a frame-based animation sequence, the animated image. The first frame of this may be, but need not be, the static image. Non-animation-capable displays (such as printers) will display the static image rather than the animation sequence.

The static image, and each individual frame of an animated image, corresponds to a reference image and is stored as a PNG image.

This specification specifies the PNG datastream, and places some requirements on PNG encoders, which generate PNG datastreams, PNG decoders, which interpret PNG datastreams, and PNG editors, which transform one PNG datastream into another. It does not specify the interface between an application and either a PNG encoder, decoder, or editor. The precise form in which an image is presented to an encoder or delivered by a decoder is not specified. Four kinds of image are distinguished.

The relationships between the four kinds of image are illustrated in Figure 1.

The relationships between samples, channels, pixels, and sample depth are illustrated in Figure 2.

4.3 Colour spaces

The RGB colour space in which colour samples are situated may be specified in one of four ways:

- by CICP image format signaling metadata;

- by an ICC profile;

- by specifying explicitly that the colour space is sRGB when the samples conform to this colour space;

- by specifying a gamma value and the 1931 CIE x,y chromaticities of the red, green, and blue primaries used in the image and the reference white point.

For high-end applications the first two methods provide the most flexibility and control. The third method enables one particular, but extremely common, colour space to be indicated. The fourth method, which was standardized before ICC profiles were widely adopted, enables the exact chromaticities of the RGB data to be specified, along with the gamma correction to be applied (see C. Gamma and chromaticity). However, colour-aware applications will prefer one of the first three methods, while colour-unaware applications will typically ignore all four methods.

Gamma correction is not applied to the alpha channel, if present. Alpha samples always represent a linear fraction of full opacity.

Mastering metadata may also be provided.

4.4 Reference image to PNG image transformation

Introduction

A number of transformations are applied to the reference image to create the PNG image to be encoded (see Figure 3). The transformations are applied in the following sequence, where square brackets mean the transformation is optional:

When every pixel is either fully transparent or fully opaque, the alpha separation, alpha compaction, and indexing transformations can cause the recovered reference image to have an alpha sample depth different from the original reference image, or to have no alpha channel. This has no effect on the degree of opacity of any pixel. The two reference images are considered equivalent, and the transformations are considered lossless. Encoders that nevertheless wish to preserve the alpha sample depth may elect not to perform transformations that would alter the alpha sample depth.

4.4.1 Alpha separation

If all alpha samples in a reference image have the maximum value, then the alpha channel may be omitted, resulting in an equivalent image that can be encoded more compactly.
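The following is a minimal Python sketch of this check. It assumes pixels arrive as tuples with alpha as the last sample. `separate_alpha` is a hypothetical name.

```python
def separate_alpha(pixels: list[tuple[int, ...]],
                   bit_depth: int = 8) -> tuple[list[tuple[int, ...]], bool]:
    """Drop alpha when every alpha sample is at its maximum; return (pixels, has_alpha)."""
    max_alpha = (1 << bit_depth) - 1
    if all(p[-1] == max_alpha for p in pixels):
        return [p[:-1] for p in pixels], False
    return pixels, True
```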

4.4.2 Indexing

If the number of distinct pixel values is 256 or less, and the RGB sample depths are not greater than 8, and the alpha channel is absent or exactly 8 bits deep or every pixel is either fully transparent or fully opaque, then the alternative indexed-colour representation, achieved through an indexing transformation, may be more efficient for encoding. In the indexed-colour representation, each pixel is replaced by an index into a palette. The palette is a list of entries each containing three 8-bit samples (red, green, blue). If an alpha channel is present, there is also a parallel table of 8-bit alpha samples, called the alpha table.

A suggested palette or palettes may be constructed even when the PNG image is not indexed-colour in order to assist viewers that are capable of displaying only a limited number of colours.

For indexed-colour images, encoders can rearrange the palette so that the table entries with the maximum alpha value are grouped at the end. In this case the table can be encoded in a shortened form that does not include these entries.

Encoders creating indexed-color PNG must not insert index values greater than the actual length of the palette table; to do so is an error, and decoders will vary in their handling of this error.
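The indexing step and the palette reordering can be sketched together in Python. This minimal version assumes 8-bit RGBA input tuples and uses a hypothetical `to_indexed` helper. It works in three steps:

- It collects the distinct pixel values.
- It moves fully opaque entries to the end of the palette, so trailing 255 values can be dropped from the alpha table.
- It emits indices only from 0 to the palette length minus one.

```python
from typing import Optional

RGBA = tuple[int, int, int, int]


def to_indexed(pixels: list[RGBA]) -> Optional[tuple[list[int], list[tuple[int, int, int]], list[int]]]:
    """Return (indices, palette, alpha_table), or None if there are more than 256 colours."""
    entries = list(dict.fromkeys(pixels))  # distinct values, first-seen order
    if len(entries) > 256:
        return None
    # Stable sort: entries with alpha < 255 first, fully opaque ones last
    entries.sort(key=lambda p: p[3] == 255)
    index_of = {p: i for i, p in enumerate(entries)}
    indices = [index_of[p] for p in pixels]  # always within 0 .. len(palette) - 1
    palette = [p[:3] for p in entries]
    alpha_table = [p[3] for p in entries]
    while alpha_table and alpha_table[-1] == 255:  # shortened alpha table
        alpha_table.pop()
    return indices, palette, alpha_table
```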

4.4.3 RGB merging

If the red, green, and blue channels have the same sample depth, and, for each pixel, the values of the red, green, and blue samples are equal, then these three channels may be merged into a single greyscale channel.
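The following is a minimal Python sketch of this test. It assumes RGB tuples whose samples share one sample depth. The function name is hypothetical.

```python
from typing import Optional


def merge_rgb_to_grey(pixels: list[tuple[int, int, int]]) -> Optional[list[int]]:
    """Return grey samples if every pixel has r == g == b, otherwise None."""
    if all(r == g == b for r, g, b in pixels):
        return [r for r, _, _ in pixels]
    return None
```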

4.4.4 Alpha compaction

For non-indexed images, if there exists an RGB (or greyscale) value such that all pixels with that value are fully transparent while all other pixels are fully opaque, then the alpha channel can be represented more compactly by merely identifying the RGB (or greyscale) value that is transparent.
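The following is a minimal Python sketch that finds such a transparent key colour, assuming 8-bit RGBA tuples by default. The function name is hypothetical. A return value of `None` means the alpha channel must be kept. If no pixel is transparent at all, alpha separation applies instead.

```python
from typing import Optional


def transparent_key(pixels: list[tuple[int, int, int, int]],
                    max_alpha: int = 255) -> Optional[tuple[int, int, int]]:
    """Return the single RGB value that can stand in for the alpha channel, or None."""
    transparent, opaque = set(), set()
    for r, g, b, a in pixels:
        if a == 0:
            transparent.add((r, g, b))
        elif a == max_alpha:
            opaque.add((r, g, b))
        else:
            return None  # partial transparency needs a real alpha channel
    if len(transparent) != 1:
        return None  # nothing transparent, or several transparent colours
    key = transparent.pop()
    return None if key in opaque else key  # the key colour must never be opaque
```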

4.4.5 Sample depth scaling

In the PNG image, not all sample depths are supported (see 6.1 Colour types and values), and all channels shall have the same sample depth. All channels of the PNG image use the smallest allowable sample depth that is not less than any sample depth in the reference image, and the possible sample values in the reference image are linearly mapped into the next allowable range for the PNG image. Figure 5 shows how samples of depth 3 might be mapped into samples of depth 4.

Allowing only a few sample depths reduces the number of cases that decoders have to cope with. Sample depth scaling is reversible with no loss of data, because the reference image sample depths can be recorded in the PNG datastream. In the absence of recorded sample depths, the reference image sample depth equals the PNG image sample depth. See 12.4 Sample depth scaling and 13.12 Sample depth rescaling.
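The sections cited above discuss the recommended scaling methods in detail. As one simple illustration, the following Python sketch maps samples linearly, rounding to the nearest value. It uses a hypothetical `rescale` helper, not code from the specification. With depths 3 and 4, it maps the eight 3-bit values onto the 4-bit range, and scaling back down recovers every original value.

```python
def rescale(value: int, from_depth: int, to_depth: int) -> int:
    """Map value from [0, 2^from_depth - 1] to [0, 2^to_depth - 1], rounding to nearest."""
    from_max = (1 << from_depth) - 1
    to_max = (1 << to_depth) - 1
    # from_max is odd, so adding from_max // 2 before flooring rounds to nearest
    return (value * to_max + from_max // 2) // from_max


print([rescale(v, 3, 4) for v in range(8)])  # [0, 2, 4, 6, 9, 11, 13, 15]
```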

4.5 PNG image

The transformation of the reference image results in one of five types of PNG image (see Figure 6):

The format of each pixel depends on the PNG image type and the bit depth. For PNG image types other than indexed-colour, the bit depth specifies the number of bits per sample, not the total number of bits per pixel. For indexed-colour images, the bit depth specifies the number of bits in each palette index, not the sample depth of the colours in the palette or alpha table. Within the pixel the samples appear in the following order, depending on the PNG image type.

- Truecolour with alpha: red, green, blue, alpha.

- Greyscale with alpha: grey, alpha.

- Truecolour: red, green, blue.

- Greyscale: grey.

- Indexed-colour: palette index.
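The bit depth counts bits per sample (or per palette index), so the size of one row follows directly from the image type. The following is a minimal Python sketch with hypothetical names. Rows of sub-byte pixels are padded to a whole byte. In the datastream, each filtered scanline is additionally preceded by one filter-type byte.

```python
CHANNELS = {
    "greyscale": 1,
    "truecolour": 3,
    "indexed-colour": 1,  # one palette index per pixel
    "greyscale with alpha": 2,
    "truecolour with alpha": 4,
}


def scanline_bytes(width: int, image_type: str, bit_depth: int) -> int:
    """Bytes needed for one unfiltered scanline; sub-byte pixels are packed and padded."""
    bits = width * CHANNELS[image_type] * bit_depth
    return (bits + 7) // 8
```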

4.6 Encoding the PNG image

A conceptual model of the process of encoding a PNG image is given in Figure 7. The steps refer to the operations on the array of pixels or indices in the PNG image. The palette and alpha table are not encoded in this way.

- Pass extraction: to allow for progressive display, the PNG image pixels can be rearranged to form several smaller images called reduced images or passes.

- Scanline serialization: the image is serialized a scanline at a time. Pixels are ordered left to right in a scanline and scanlines are ordered top to bottom.

- Filtering: each scanline is transformed into a filtered scanline using one of the defined filter types to prepare the scanline for image compression.

- Compression: occurs on all the filtered scanlines in the image.

- Chunking: the compressed image is divided into conveniently sized chunks. An error detection code is added to each chunk.

- Datastream construction: the chunks are inserted into the datastream.

4.6.1 Pass extraction

Pass extraction (see Figure 7) splits a PNG image into a sequence of reduced images where the first image defines a coarse view and subsequent images enhance this coarse view until the last image completes the PNG image. The set of reduced images is also called an interlaced PNG image. Two interlace methods are defined in this specification. The first method is a null method; pixels are stored sequentially from left to right and scanlines from top to bottom. The second method makes multiple scans over the image to produce a sequence of seven reduced images. The seven passes for a sample image are illustrated in Figure 7. See 8. Interlacing and pass extraction.
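The second method is known as Adam7. The following Python sketch, with a hypothetical helper name, encodes each of the seven passes as a starting row and column plus a row and column step. It then computes the size of each reduced image. For very small images, some passes are empty.

```python
# (start_row, start_col, row_step, col_step) for Adam7 passes 1 to 7
ADAM7 = [
    (0, 0, 8, 8), (0, 4, 8, 8), (4, 0, 8, 4), (0, 2, 4, 4),
    (2, 0, 4, 2), (0, 1, 2, 2), (1, 0, 2, 1),
]


def adam7_pass_sizes(width: int, height: int) -> list[tuple[int, int]]:
    """(width, height) of each reduced image; a pass can be empty for tiny images."""
    sizes = []
    for row0, col0, drow, dcol in ADAM7:
        w = (width - col0 + dcol - 1) // dcol if width > col0 else 0
        h = (height - row0 + drow - 1) // drow if height > row0 else 0
        sizes.append((w, h))
    return sizes


print(adam7_pass_sizes(8, 8))
# [(1, 1), (1, 1), (2, 1), (2, 2), (4, 2), (4, 4), (8, 4)]
```

Every pixel belongs to exactly one pass, so the pass areas always add up to width × height.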

4.6.2 Scanline serialization

Each row of pixels, called a scanline, is represented as a sequence of bytes.
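Here is a sketch of turning one scanline of sample values into bytes. It assumes the packing rules given later in the specification. Samples of 8 bits or more are stored most significant byte first. Sub-byte pixels, used only by greyscale and indexed-colour images, are packed with the leftmost pixel in the high-order bits, and the last byte is padded if needed. The helper names are hypothetical.

```python
def scanline_bytes(width: int, bits_per_pixel: int) -> int:
    """Number of bytes one scanline occupies, rounded up to a whole byte."""
    return (width * bits_per_pixel + 7) // 8

def pack_scanline(samples: list, bit_depth: int) -> bytes:
    """Serialize one scanline of samples, left to right."""
    if bit_depth >= 8:
        # 8- or 16-bit samples: each sample is written most significant byte first.
        nbytes = bit_depth // 8
        return b"".join(s.to_bytes(nbytes, "big") for s in samples)
    out = bytearray()
    acc = 0
    nbits = 0
    for s in samples:
        # Leftmost pixel ends up in the high-order bits of the byte.
        acc = (acc << bit_depth) | s
        nbits += bit_depth
        if nbits == 8:
            out.append(acc)
            acc = 0
            nbits = 0
    if nbits:
        # Pad the final partial byte with zero bits on the right.
        out.append(acc << (8 - nbits))
    return bytes(out)
```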

4.6.3 Filtering

PNG allows image data to be filtered before it is compressed. Filtering can improve the compressibility of the data. The filter operation is deterministic, reversible, and lossless. This allows the decompressed data to be reverse-filtered in order to obtain the original data. See 7.3 Filtering.
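The sketch below shows why filtering is reversible. It assumes the five filter types defined in 7.3 Filtering (0 None, 1 Sub, 2 Up, 3 Average, 4 Paeth). It works byte by byte, with `bpp` being the bytes per complete pixel rounded up to 1. Unfiltering applies the same predictor to bytes that have already been reconstructed, so the original scanline comes back exactly. Function names are hypothetical.

```python
def paeth(a: int, b: int, c: int) -> int:
    """Paeth predictor: pick whichever of left, above, upper-left is closest to a + b - c."""
    p = a + b - c
    pa, pb, pc = abs(p - a), abs(p - b), abs(p - c)
    if pa <= pb and pa <= pc:
        return a
    if pb <= pc:
        return b
    return c

def _predict(ftype: int, a: int, b: int, c: int) -> int:
    if ftype == 0:
        return 0
    if ftype == 1:
        return a
    if ftype == 2:
        return b
    if ftype == 3:
        return (a + b) // 2
    if ftype == 4:
        return paeth(a, b, c)
    raise ValueError(f"unknown filter type {ftype}")

def filter_scanline(ftype: int, line: bytes, prior: bytes, bpp: int) -> bytes:
    """prior is the previous (unfiltered) scanline, or all zeros for the first one."""
    out = bytearray(len(line))
    for i in range(len(line)):
        a = line[i - bpp] if i >= bpp else 0
        b = prior[i]
        c = prior[i - bpp] if i >= bpp else 0
        out[i] = (line[i] - _predict(ftype, a, b, c)) & 0xFF
    return bytes(out)

def unfilter_scanline(ftype: int, filt: bytes, prior: bytes, bpp: int) -> bytes:
    out = bytearray(len(filt))
    for i in range(len(filt)):
        a = out[i - bpp] if i >= bpp else 0  # already reconstructed
        b = prior[i]
        c = prior[i - bpp] if i >= bpp else 0
        out[i] = (filt[i] + _predict(ftype, a, b, c)) & 0xFF
    return bytes(out)
```

With filter type 1 (Sub), a smooth ramp such as 10, 20, 30 becomes 10, 10, 10. Repeated bytes like these tend to compress better.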

4.6.4 Compression

The sequence of filtered scanlines in the pass or passes of the PNG image is compressed (see Figure 9) by one of the defined compression methods. The concatenated filtered scanlines form the input to the compression stage. The output from the compression stage is a single compressed datastream. See 10. Compression.
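This minimal sketch assumes compression method 0 (zlib/deflate, as defined in 10. Compression). It also assumes the rule from 7.3 Filtering that each filtered scanline is preceded by its one-byte filter type. It uses Python's standard `zlib` module. `compress_scanlines` is a hypothetical name.

```python
import zlib

def compress_scanlines(filtered: list) -> bytes:
    """filtered: (filter_type, filtered_bytes) pairs in scanline (and pass) order."""
    # Concatenate every scanline, each prefixed by its filter-type byte,
    # then compress the whole sequence as one zlib datastream.
    raw = b"".join(bytes([ftype]) + data for ftype, data in filtered)
    return zlib.compress(raw)
```

Decompressing with `zlib.decompress` restores the concatenated input, filter-type bytes included.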

4.6.5 Chunking

Chunking provides a convenient breakdown of the compressed datastream into manageable chunks (see Figure 9). Each chunk has its own redundancy check. See 11. Chunk specifications.
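The chunking step can be sketched as below. It assumes the chunk layout from 5.3 Chunk layout: a 4-byte big-endian length of the data only, the 4-byte type, the data, and a 4-byte CRC computed over type and data. It uses `zlib.crc32`, which applies the same CRC as PNG. The 8192-byte piece size is an arbitrary illustrative choice, and both function names are hypothetical.

```python
import struct
import zlib

def make_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Assemble length, chunk type, chunk data and CRC into one chunk."""
    # The CRC covers the chunk type and chunk data, not the length field.
    crc = zlib.crc32(chunk_type + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)

def split_into_idat(compressed: bytes, max_size: int = 8192) -> list:
    """Divide one compressed datastream into consecutive IDAT chunks.

    max_size is an illustrative value, not one taken from the specification.
    """
    return [
        make_chunk(b"IDAT", compressed[i:i + max_size])
        for i in range(0, len(compressed), max_size)
    ]
```

A decoder concatenates the data fields of all IDAT chunks before decompressing, so the split points carry no meaning.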

4.7 Additional information

Ancillary information may be associated with an image. Decoders may ignore all or some of the ancillary information. The types of ancillary information provided are described in Table 1.

4.8 PNG datastream

4.8.1 Chunks

The PNG datastream consists of a PNG signature (see 5.2 PNG signature) followed by a sequence of chunks (see 11. Chunk specifications). Each chunk has a chunk type which specifies its function.

4.8.2 Chunk types

Chunk types are four-byte sequences chosen so that they correspond to readable labels when interpreted in the ISO 646.IRV:1991 [ISO646] character set. The first four are termed critical chunks, which shall be understood and correctly interpreted according to the provisions of this specification. These are:

- IHDR : image header, which is the first chunk in a PNG datastream.

- PLTE : palette table associated with indexed PNG images.

- IDAT : image data chunks.

- IEND : image trailer, which is the last chunk in a PNG datastream.

The remaining chunk types are termed ancillary chunk types, which encoders may generate and decoders may interpret.

- Transparency information: tRNS (see 11.3.1 Transparency information).

- Colour space information: cHRM, gAMA, iCCP, sBIT, sRGB, cICP, mDCv (see 11.3.2 Colour space information).

- Textual information: iTXt, tEXt, zTXt (see 11.3.3 Textual information).

- Miscellaneous information: bKGD, hIST, pHYs, sPLT, eXIf (see 11.3.4 Miscellaneous information).

- Time information: tIME (see 11.3.5 Time stamp information).

- Animation information: acTL, fcTL, fdAT (see 11.3.6 Animation information).

4.9 APNG : frame-based animation

Animated PNG (APNG) extends the original, static-only PNG format, adding support for frame-based animated images. It is intended to be a replacement for simple animated images that have traditionally used the GIF format [GIF], while adding support for 24-bit images and 8-bit transparency, which GIF lacks.

APNG is backwards-compatible with earlier versions of PNG; a non-animated PNG decoder will ignore the ancillary APNG-specific chunks and display the static image.

4.9.1 Structure

An APNG stream is a normal PNG stream as defined in previous versions of the PNG Specification, with three additional chunk types describing the animation and providing additional frame data.

To be recognized as an APNG, an acTL chunk must appear in the stream before any IDAT chunks. The acTL structure is described below.

Conceptually, at the beginning of each play the output buffer shall be completely initialized to a fully transparent black rectangle, with width and height dimensions from the IHDR chunk.

The static image may be included as the first frame of the animation by the presence of a single fcTL chunk before IDAT . Otherwise, the static image is not part of the animation.
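The two rules above can be checked from the order of chunk types alone. An acTL chunk must come before the first IDAT for the stream to count as an APNG. A single fcTL before the first IDAT makes the static image the first frame. The sketch takes chunk types as strings in datastream order. `classify_apng` is a hypothetical name.

```python
def classify_apng(chunk_types: list) -> tuple:
    """Return (is_apng, static_image_is_first_frame) from chunk types in datastream order."""
    seen_actl = False
    fctl_before_idat = 0
    for t in chunk_types:
        if t == "IDAT":
            break  # only chunks before the first IDAT matter here
        if t == "acTL":
            seen_actl = True
        elif t == "fcTL":
            fctl_before_idat += 1
    return seen_actl, seen_actl and fctl_before_idat == 1
```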

Subsequent frames are encoded in fdAT chunks, which have the same structure as IDAT chunks except that their data is preceded by a sequence number. Information for each frame about placement and rendering is stored in fcTL chunks. The full layout of fdAT and fcTL chunks is described below.
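Because an fdAT chunk is an IDAT payload with a sequence number in front, a decoder can recover the frame data by stripping the first four bytes. The sketch assumes the sequence number is a 4-byte unsigned integer stored most significant byte first, like other PNG integers. The function names are hypothetical.

```python
def split_fdat(data: bytes) -> tuple:
    """Split fdAT chunk data into (sequence_number, frame_data)."""
    if len(data) < 4:
        raise ValueError("fdAT data too short to hold a sequence number")
    return int.from_bytes(data[:4], "big"), data[4:]

def frame_image_data(fdat_payloads: list) -> bytes:
    """Concatenate the frame data of one frame's fdAT chunks, like IDAT data."""
    return b"".join(split_fdat(p)[1] for p in fdat_payloads)
```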

The boundaries of the entire animation are specified by the width and height parameters of the IHDR chunk, regardless of whether the default image is part of the animation. The default image should be appropriately padded with fully transparent black pixels if extra space will be needed for later frames.

Because each frame is identical for every play, applications can safely cache the frames.

4.9.2 Sequence numbers

The fcTL and fdAT chunks have a zero-based, 4-byte sequence number. Both chunk types share the same sequence. The purpose of this number is to detect (and optionally correct) sequence errors in an Animated PNG, since this specification does not impose ordering restrictions on ancillary chunks.

The first fcTL chunk shall contain sequence number 0, and the sequence numbers in the remaining fcTL and fdAT chunks shall be in ascending order, with no gaps or duplicates.
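A decoder could check this rule as follows. The input is the (chunk type, sequence number) pairs of the fcTL and fdAT chunks in the order they were read. The sketch assumes the first chunk of the shared sequence is always an fcTL, since every fdAT belongs to a frame introduced by an fcTL. `check_sequence` is a hypothetical name. It only detects errors and does not attempt reordering.

```python
def check_sequence(chunks: list) -> bool:
    """chunks: (type, sequence_number) pairs for fcTL and fdAT chunks, in datastream order."""
    for expected, (ctype, seq) in enumerate(chunks):
        if expected == 0 and ctype != "fcTL":
            return False  # the sequence must start with an fcTL numbered 0
        if seq != expected:
            return False  # gap, duplicate, or out-of-order chunk
    return True
```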

The tables below illustrate the use of sequence numbers for images with more than one frame, and with more than one fdAT chunk for the second frame. (IHDR and IEND chunks are omitted from these tables for clarity.)

4.9.3 Output buffer

The output buffer is a pixel array with dimensions specified by the width and height parameters of the PNG IHDR chunk. Conceptually, each frame is constructed in the output buffer before being composited onto the canvas. The contents of the output buffer are available to the decoder. The corners of the output buffer are mapped to the corners of the canvas.

4.9.4 Canvas

The canvas is the area on the output device on which the frames are to be displayed. The contents of the canvas are not necessarily available to the decoder. If a bKGD chunk exists, it may be used to fill the canvas if there is no preferable background.

4.10 Error handling

Errors in a PNG datastream fall into two general classes:

- transmission errors or damage to a computer file system, which tend to corrupt much or all of the datastream;

- syntax errors, which appear as invalid values in chunks, or as missing or misplaced chunks. Syntax errors can be caused not only by encoding mistakes, but also by the use of registered or private values, if those values are unknown to the decoder.

PNG decoders should detect errors as early as possible, recover from errors whenever possible, and fail gracefully otherwise. The error handling philosophy is described in detail in 13.1 Error handling.

4.11 Extensions

This section is non-normative.

The PNG format exposes several extension points:

- chunk type;

- text keyword; and

- private field values.

Some of these extension points are reserved by W3C, while others are available for private use.

5. Datastream structure

5.1 PNG datastream

The PNG datastream consists of a PNG signature followed by a sequence of chunks. It is the result of encoding a PNG image.

The term datastream is used rather than "file" to describe a byte sequence that may be only a portion of a file. It is also used to emphasize that the sequence of bytes might be generated and consumed "on the fly", never appearing in a stored file at all.

5.2 PNG signature

The first eight bytes of a PNG datastream always contain the following hexadecimal values:

89 50 4E 47 0D 0A 1A 0A

This signature indicates that the remainder of the datastream contains a single PNG image, consisting of a series of chunks beginning with an IHDR chunk and ending with an IEND chunk.

This signature differentiates a PNG datastream from other types of datastream and allows early detection of some transmission errors.
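A signature check is a plain byte comparison against the eight values 89 50 4E 47 0D 0A 1A 0A. These bytes include a CR LF pair and a lone LF. A transfer that rewrites line endings would therefore alter the signature, which is one of the transmission errors it catches early. The helper name is hypothetical.

```python
PNG_SIGNATURE = bytes([0x89, 0x50, 0x4E, 0x47, 0x0D, 0x0A, 0x1A, 0x0A])

def has_png_signature(data: bytes) -> bool:
    """True if data starts with the 8-byte PNG signature."""
    return data[:8] == PNG_SIGNATURE
```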

5.3 Chunk layout

Each chunk consists of three or four fields (see Figure 10). The meaning of the fields is described in Table 4. The chunk data field may be empty.

The chunk data length may be any number of bytes up to the maximum of 2^31 - 1 bytes; therefore, implementors cannot assume that chunks are aligned on any boundaries larger than bytes.
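A reader for this layout has to walk the datastream byte by byte, because chunks need not be aligned. The sketch below assumes the fields from Table 4: a 4-byte big-endian data length (at most 2^31 - 1), a 4-byte chunk type, the data, and a 4-byte CRC over type and data. It uses Python's `struct` and `zlib` modules. `iter_chunks` is a hypothetical name, and it does not check chunk ordering.

```python
import struct
import zlib

def iter_chunks(data: bytes):
    """Yield (chunk_type, chunk_data) for each chunk following the 8-byte signature."""
    pos = 8
    while pos < len(data):
        if pos + 8 > len(data):
            raise ValueError("truncated chunk header")
        length, ctype = struct.unpack(">I4s", data[pos:pos + 8])
        if length > 0x7FFFFFFF:
            raise ValueError("chunk length exceeds 2^31 - 1")
        end = pos + 8 + length
        if end + 4 > len(data):
            raise ValueError("truncated chunk data or CRC")
        body = data[pos + 8:end]
        (crc,) = struct.unpack(">I", data[end:end + 4])
        # The CRC covers the chunk type and data, not the length field.
        if zlib.crc32(ctype + body) & 0xFFFFFFFF != crc:
            raise ValueError(f"CRC mismatch in chunk {ctype!r}")
        yield ctype.decode("latin-1"), body
        pos = end + 4
```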

5.4 Chunk naming conventions

Chunk types are chosen to be meaningful names when the bytes of the chunk type are interpreted as ISO 646 letters [ISO646]. Chunk types are assigned so that a decoder can determine some properties of a chunk even when the type is not recognized. These rules allow safe, flexible extension of the PNG format, by allowing a PNG decoder to decide what to do when it encounters an unknown chunk.

The naming rules are normally of interest only when the decoder does not recognize the chunk's type, as specified in 13. PNG decoders and viewers.

Four bits of the chunk type, the property bits, namely bit 5 (value 32) of each byte, are used to convey chunk properties. This choice means that a human can read off the assigned properties according to whether the letter corresponding to each byte of the chunk type is uppercase (bit 5 is 0) or lowercase (bit 5 is 1).

The property bits are an inherent part of the chunk type, and hence are fixed for any chunk type. Thus, CHNK and cHNk would be unrelated chunk types, not the same chunk with different properties.

The semantics of the property bits are defined in Table 5.

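The hypothetical chunk type "cHNk" has the property bits:

- c: ancillary bit is 1 (lowercase letter; bit 5 is 1).

- H: private bit is 0 (uppercase letter; bit 5 is 0).

- N: reserved bit is 0 (uppercase letter; bit 5 is 0).

- k: safe-to-copy bit is 1 (lowercase letter; bit 5 is 1).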

Therefore, this name represents an ancillary, public, safe-to-copy chunk.
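Reading the property bits amounts to testing bit 5 (value 32) of each of the four bytes. The sketch below assumes the byte order of Table 5: ancillary, private, reserved, then safe-to-copy. `chunk_properties` is a hypothetical helper.

```python
def chunk_properties(chunk_type: bytes) -> dict:
    """Decode the four property bits (bit 5 of each byte) of a chunk type."""
    if len(chunk_type) != 4:
        raise ValueError("chunk type must be exactly 4 bytes")
    bit5 = [(b >> 5) & 1 for b in chunk_type]  # 1 for a lowercase letter
    return {
        "ancillary": bool(bit5[0]),
        "private": bool(bit5[1]),
        "reserved": bit5[2],  # must be 0 in conforming chunk types
        "safe_to_copy": bool(bit5[3]),
    }
```

For "cHNk" this reports an ancillary, public, safe-to-copy chunk, matching the breakdown above.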

5.5 CRC algorithm

CRC fields are calculated using standardized CRC methods with pre- and post-conditioning, as defined by [ISO-3309] and [ITU-T-V.42]. The CRC polynomial employed, which is identical to that used in the GZIP file format specification [RFC1952], is

$$x^{32} + x^{26} + x^{23} + x^{22} + x^{16} + x^{12} + x^{11} + x^{10} + x^{8} + x^{7} + x^{5} + x^{4} + x^{2} + x + 1$$

In PNG, the 32-bit CRC is initialized to all 1s, and then the data from each byte is processed from the least significant bit (1) to the most significant bit (128). After all the data bytes are processed, the CRC is inverted (its ones' complement is taken). This value is transmitted (stored in the datastream) MSB first. For the purpose of separating into bytes and ordering, the least significant bit of the 32-bit CRC is defined to be the coefficient of the $x^{31}$ term.

Practical calculation of the CRC often employs a precalculated table to accelerate the computation. See D. Sample CRC implementation.
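A table-driven version of this CRC can be sketched in a few lines. It is an illustration in the spirit of D. Sample CRC implementation, not a copy of it. Because bytes are processed least significant bit first, the polynomial appears in bit-reversed form as the constant 0xEDB88320. The names `CRC_TABLE` and `png_crc` are hypothetical. The function takes the chunk type followed by the chunk data.

```python
def _make_crc_table() -> list:
    """Precompute the CRC remainder for every possible byte value."""
    table = []
    for n in range(256):
        c = n
        for _ in range(8):
            # 0xEDB88320 is the bit-reversed form of the PNG/GZIP polynomial.
            c = (0xEDB88320 ^ (c >> 1)) if c & 1 else (c >> 1)
        table.append(c)
    return table

CRC_TABLE = _make_crc_table()

def png_crc(data: bytes) -> int:
    c = 0xFFFFFFFF  # initialize to all 1s
    for byte in data:
        c = CRC_TABLE[(c ^ byte) & 0xFF] ^ (c >> 8)
    return c ^ 0xFFFFFFFF  # take the ones' complement
```

The result is written MSB first, for example with `png_crc(b"IEND").to_bytes(4, "big")`, which gives the familiar trailer bytes AE 42 60 82.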

5.6 Chunk ordering

The constraints on the positioning of the individual chunks are listed in Table 6 and illustrated diagrammatically for static images in Figure 11 and Figure 12, for animated images where the static image forms the first frame in Figure 13 and Figure 14, and for animated images where the static image is not part of the animation in Figure 15 and Figure 16. These lattice diagrams represent the constraints on positioning imposed by this specification. The lines in the diagrams define partial ordering relationships. Chunks higher up shall appear before chunks lower down. Chunks which are horizontally aligned and appear between two other chunk types (higher and lower than the horizontally aligned chunks) may appear in any order between the two higher and lower chunk types to which they are connected. The superscript associated with the chunk type is defined in Table 7. It indicates whether the chunk is mandatory, optional, or may appear more than once. A vertical bar between two chunk types indicates alternatives.
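As a partial illustration, the sketch checks only a handful of the Table 6 constraints on a list of chunk types. IHDR must come first, IEND last, and each must appear exactly once. There must be at least one IDAT, multiple IDAT chunks must be consecutive, and PLTE must precede the first IDAT. It is not an exhaustive validator. `check_basic_ordering` is a hypothetical name.

```python
def check_basic_ordering(chunk_types: list) -> bool:
    """Check a small subset of the Table 6 ordering rules; not exhaustive."""
    if not chunk_types or chunk_types[0] != "IHDR" or chunk_types.count("IHDR") != 1:
        return False
    if chunk_types[-1] != "IEND" or chunk_types.count("IEND") != 1:
        return False
    idat = [i for i, t in enumerate(chunk_types) if t == "IDAT"]
    if not idat:
        return False
    # Multiple IDAT chunks must be consecutive.
    if idat != list(range(idat[0], idat[-1] + 1)):
        return False
    # PLTE, if present, must come before the first IDAT.
    if "PLTE" in chunk_types and chunk_types.index("PLTE") > idat[0]:
        return False
    return True
```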

5.7 Defining chunks

5.7.1 General

All chunks, private and public, SHOULD be listed at [PNG-EXTENSIONS].

5.7.2 Defining public chunks

Public chunks are reserved for definition by the W3C.

Public chunks are intended for broad use consistent with the philosophy of PNG.

Organizations and applications are encouraged to submit any chunk that meets the criteria above to the PNG Working Group for definition as a public chunk.

The definition as a public chunk is neither automatic nor immediate. A proposed public chunk type SHALL NOT be used in publicly available software or datastreams until defined as such.

The definition of new critical chunk types is discouraged unless necessary.

5.7.3 Defining private chunks

Organizations and applications MAY define private chunks for private and experimental use.

A private chunk SHOULD NOT be defined merely to carry textual information of interest to a human user. Instead, an iTXt chunk SHOULD be used, with a suitable keyword defined.

Listing private chunks at [PNG-EXTENSIONS] reduces, but does not eliminate, the chance that the same private chunk is used for incompatible purposes by different applications. If a private chunk type is used, additional identifying information SHOULD be stored at the beginning of the chunk data to further reduce the risk of conflicts.

An ancillary chunk type, not a critical chunk type, SHOULD be used for all private chunks that store information that is not absolutely essential to view the image.

Private critical chunks SHOULD NOT be defined because PNG datastreams containing such chunks are not portable, and SHOULD NOT be used in publicly available software or datastreams. If a private critical chunk is essential for an application, it SHOULD appear near the start of the datastream, so that a standard decoder need not read very far before discovering that it cannot handle the datastream.