Sampling Methods & Strategies 101

Everything you need to know (including examples)

By: Derek Jansen (MBA) | Expert Reviewed By: Kerryn Warren (PhD) | January 2023

If you’re new to research, sooner or later you’re bound to wander into the intimidating world of sampling methods and strategies. If you find yourself on this page, chances are you’re feeling a little overwhelmed or confused. Fear not – in this post we’ll unpack sampling in straightforward language, along with loads of examples.

Overview: Sampling Methods & Strategies

- What is sampling in a research context?

- The two overarching approaches

- Probability-based sampling methods: simple random sampling, stratified random sampling, cluster sampling and systematic sampling

- Non-probability-based sampling methods: purposive sampling, convenience sampling and snowball sampling

- How to choose the right sampling method

What (exactly) is sampling?

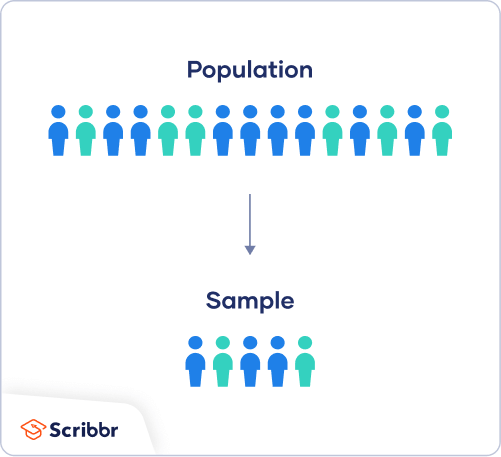

At the simplest level, sampling (within a research context) is the process of selecting a subset of participants from a larger group. For example, if your research involved assessing US consumers’ perceptions about a particular brand of laundry detergent, you wouldn’t be able to collect data from every single person that uses laundry detergent (good luck with that!) – but you could potentially collect data from a smaller subset of this group.

In technical terms, the larger group is referred to as the population, and the subset (the group you’ll actually engage with in your research) is called the sample. Put another way, you can look at the population as a full cake and the sample as a single slice of that cake. In an ideal world, you’d want your sample to be perfectly representative of the population, as that would allow you to generalise your findings to the entire population. In other words, you’d want to cut a perfect cross-sectional slice of cake, such that the slice reflects every layer of the cake in perfect proportion.

Achieving a truly representative sample is, unfortunately, a little trickier than slicing a cake, as there are many practical challenges and obstacles to achieving this in a real-world setting. Thankfully though, you don’t always need to have a perfectly representative sample – it all depends on the specific research aims of each study – so don’t stress yourself out about that just yet!

With the concept of sampling broadly defined, let’s look at the different approaches to sampling to get a better understanding of what it all looks like in practice.

The two overarching sampling approaches

At the highest level, there are two approaches to sampling: probability sampling and non-probability sampling. Within each of these, there are a variety of sampling methods, which we’ll explore a little later.

Probability sampling involves selecting participants (or any unit of interest) on a statistically random basis, which is why it’s also called “random sampling”. In other words, the selection of each individual participant is based on a pre-determined process (not the discretion of the researcher). As a result, this approach achieves a random sample.

Probability-based sampling methods are most commonly used in quantitative research, especially when it’s important to achieve a representative sample that allows the researcher to generalise their findings.

Non-probability sampling, on the other hand, refers to sampling methods in which the selection of participants is not statistically random. In other words, the selection of individual participants is based on the discretion and judgment of the researcher, rather than on a pre-determined process.

Non-probability sampling methods are commonly used in qualitative research, where the richness and depth of the data are more important than the generalisability of the findings.

If that all sounds a little too conceptual and fluffy, don’t worry. Let’s take a look at some actual sampling methods to make it more tangible.


Probability-based sampling methods

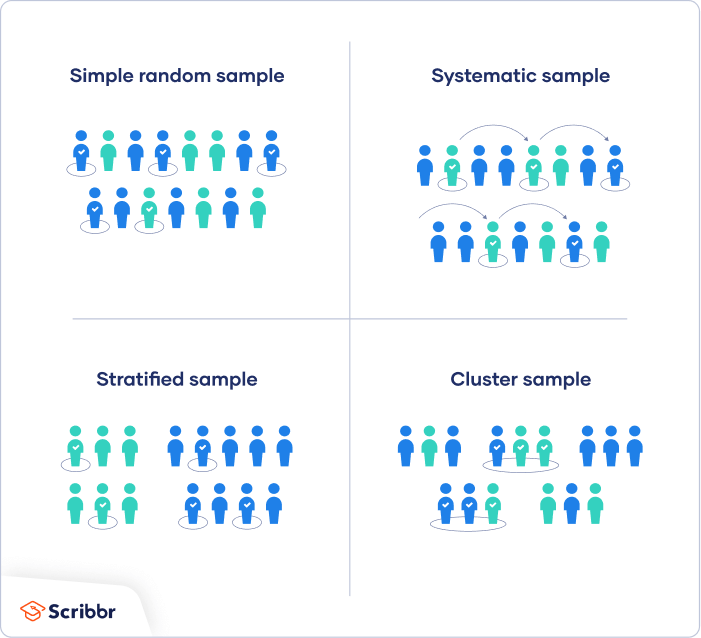

First, we’ll look at four common probability-based (random) sampling methods: simple random sampling, stratified random sampling, cluster sampling and systematic sampling.

Importantly, this is not a comprehensive list of all the probability sampling methods – these are just four of the most common ones. So, if you’re interested in adopting a probability-based sampling approach, be sure to explore all the options.

Simple random sampling involves selecting participants in a completely random fashion, where each participant has an equal chance of being selected. Basically, this sampling method is the equivalent of pulling names out of a hat, except that you can do it digitally. For example, if you had a list of 500 people, you could use a random number generator to draw 50 numbers (each number representing a participant) and then use the corresponding participants as your sample.
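To make the "names out of a hat" idea concrete, here is a minimal sketch in Python. The list of 500 participant IDs and the seed value are assumptions made purely for illustration; in a real study, the list would be your actual sampling frame.

```python
import random

# Hypothetical population list: participant IDs 1..500 (stand-ins for real names).
population = list(range(1, 501))

# A seeded generator makes the draw reproducible; the seed value is arbitrary.
rng = random.Random(42)

# random.sample draws without replacement, so nobody is picked twice and
# every participant has the same chance of ending up in the sample.
sample = rng.sample(population, k=50)

print(sorted(sample))
```

Drawing without replacement is the important design choice here: generating 50 independent random numbers could pick the same person twice.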

Thanks to its simplicity, simple random sampling is easy to implement and, as a consequence, is typically quite cheap and efficient. Given that the selection process is completely random, the results can be generalised fairly reliably. However, because selection ignores subgroup membership, large subgroups can dominate the sample, leaving minority subgroups with little representation in the results – if any at all. To address this, one needs to take a slightly different approach, which we’ll look at next.

Stratified random sampling is similar to simple random sampling, but it kicks things up a notch. As the name suggests, stratified sampling involves selecting participants randomly, but from within certain pre-defined subgroups (i.e., strata) that share a common trait. For example, you might divide the population into strata based on gender, ethnicity, age range or level of education, and then select randomly from each group.

The benefit of this sampling method is that it gives you more control over the impact of large subgroups (strata) within the population. For example, if a population comprises 80% males and 20% females, you may want to “balance” this skew out by randomly selecting an equal number of males and females. This would, of course, reduce the representativeness of the sample, but it would allow you to identify differences between subgroups. So, depending on your research aims, the stratified approach could work well.
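The "balancing" idea above can be sketched as follows. This is an illustration only: the population of 1,000 people, the `stratified_equal` helper and the choice of 50 people per stratum are all assumptions, not part of any specific study.

```python
import random

rng = random.Random(7)  # arbitrary seed, for reproducibility only

# Hypothetical population of 1,000 people: 80% male, 20% female.
population = [
    {"id": i, "gender": "male" if i < 800 else "female"} for i in range(1000)
]

def stratified_equal(population, key, per_stratum, rng):
    """Draw the same number of people at random from every stratum."""
    strata = {}
    for person in population:
        strata.setdefault(person[key], []).append(person)
    sample = []
    for members in strata.values():
        # Simple random sampling, applied separately within each stratum.
        sample.extend(rng.sample(members, per_stratum))
    return sample

sample = stratified_equal(population, "gender", per_stratum=50, rng=rng)
counts = {g: sum(p["gender"] == g for p in sample) for g in ("male", "female")}
print(counts)  # {'male': 50, 'female': 50}
```

Note that the sample is now 50/50 while the population is 80/20, which is exactly the loss of representativeness mentioned above.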

Next on the list is cluster sampling. As the name suggests, this sampling method involves sampling from naturally occurring, mutually exclusive clusters within a population – for example, area codes within a city or cities within a country. Once the clusters are defined, a set of clusters is randomly selected and then a set of participants is randomly selected from each cluster.

Now, you’re probably wondering, “how is cluster sampling different from stratified random sampling?”. Well, let’s look at the previous example where each cluster reflects an area code in a given city.

With cluster sampling, you would collect data from clusters of participants in a handful of area codes (let’s say 5 neighbourhoods). Conversely, with stratified random sampling, you would need to collect data from all over the city (i.e., many more neighbourhoods). You’d still achieve the same sample size either way (let’s say 200 people, for example), but with stratified sampling, you’d need to do a lot more running around, as participants would be scattered across a vast geographic area. As a result, cluster sampling is often the more practical and economical option.
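As a rough sketch of the cluster approach described above, the snippet below first picks 5 area codes at random and then picks 40 residents within each one, giving 200 people in total. The city layout (40 area codes with 300 residents each) and all names are hypothetical.

```python
import random

rng = random.Random(3)  # arbitrary seed, for reproducibility only

# Hypothetical city: 40 area codes, each with 300 residents.
city = {
    f"area_{a:02d}": [f"area_{a:02d}_resident_{r}" for r in range(300)]
    for a in range(40)
}

# Stage 1: randomly pick 5 area codes (the clusters).
chosen_areas = rng.sample(sorted(city), k=5)

# Stage 2: randomly pick 40 residents within each chosen area (5 x 40 = 200).
sample = []
for area in chosen_areas:
    sample.extend(rng.sample(city[area], k=40))

print(chosen_areas)
print(len(sample))  # 200
```

All 200 participants live in just 5 area codes, which is what makes fieldwork cheaper than visiting every neighbourhood.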

If that all sounds a little mind-bending, you can use the following general rule of thumb. If a population is relatively homogeneous, cluster sampling will often be adequate. Conversely, if a population is quite heterogeneous (i.e., diverse), stratified sampling will generally be more appropriate.

The last probability sampling method we’ll look at is systematic sampling. This method simply involves selecting participants at a set interval, starting from a random point.

For example, if you have a list of students that reflects the population of a university, you could systematically sample that population by selecting participants at an interval of 8. In other words, you would randomly select a starting point – let’s say student number 40 – followed by student 48, 56, 64, etc.

What’s important with systematic sampling is that the population list you select from needs to be randomly ordered. If there are underlying patterns in the list (for example, if the list is ordered by gender, IQ, age, etc.), this will result in a non-random sample, which would defeat the purpose of adopting this sampling method. Of course, you could safeguard against this by “shuffling” your population list using a random number generator or similar tool.
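Here is one way the interval-based selection and the optional "shuffle" safeguard could look in Python. The `systematic_sample` helper and the list of 1,000 student numbers are assumptions for illustration. Note that this sketch picks the random starting point within the first interval (a common convention), rather than anywhere in the list as in the example above.

```python
import random

def systematic_sample(units, interval, rng, shuffle_first=False):
    """Select every `interval`-th unit, starting from a random position."""
    units = list(units)  # work on a copy so the caller's list is untouched
    if shuffle_first:
        # Safeguard against hidden ordering patterns in the list.
        rng.shuffle(units)
    start = rng.randrange(interval)  # random start within the first interval
    return units[start::interval]

rng = random.Random(2023)  # arbitrary seed, for reproducibility only

# Hypothetical university list: student numbers 1..1000.
students = list(range(1, 1001))

sample = systematic_sample(students, interval=8, rng=rng)
print(len(sample))   # 125
print(sample[:4])    # four consecutive picks, each 8 apart
```

Passing `shuffle_first=True` randomises the list order before the interval is applied, which addresses the ordering concern raised above.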

Non-probability-based sampling methods

Right, now that we’ve looked at a few probability-based sampling methods, let’s look at three non-probability methods: purposive sampling, convenience sampling and snowball sampling.

Again, this is not an exhaustive list of all possible sampling methods, so be sure to explore further if you’re interested in adopting a non-probability sampling approach.

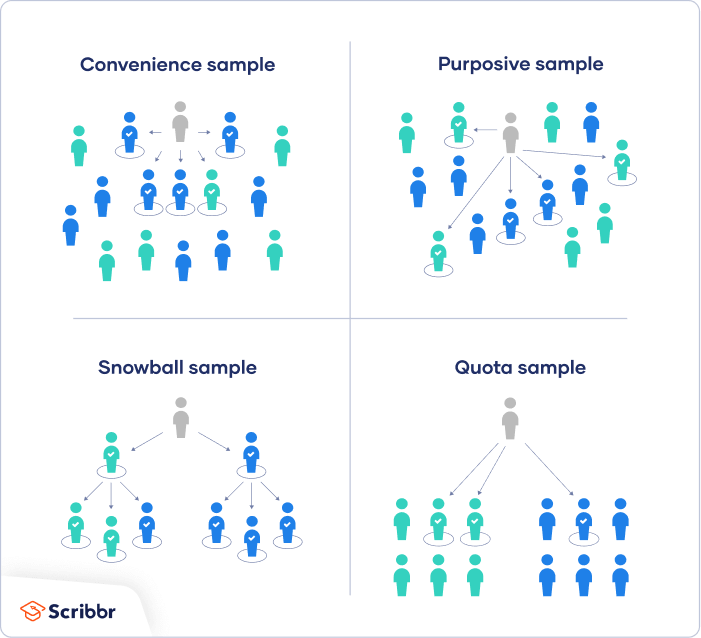

First up, we’ve got purposive sampling – also known as judgment, selective or subjective sampling. Again, the name provides some clues, as this method involves the researcher selecting participants using his or her own judgement, based on the purpose of the study (i.e., the research aims).

For example, suppose your research aims were to understand the perceptions of hyper-loyal customers of a particular retail store. In that case, you could use your judgement to engage with frequent shoppers, as well as rare or occasional shoppers, to understand what judgements drive the two behavioural extremes.

Purposive sampling is often used in studies where the aim is to gather information from a small population (especially rare or hard-to-find populations), as it allows the researcher to target specific individuals who have unique knowledge or experience. Naturally, this sampling method is quite prone to researcher bias and judgement error, and it’s unlikely to produce generalisable results, so it’s best suited to studies where the aim is to go deep rather than broad.

Next up, we have convenience sampling. As the name suggests, with this method, participants are selected based on their availability or accessibility. In other words, the sample is selected based on how convenient it is for the researcher to access it, as opposed to using a defined and objective process.

Naturally, convenience sampling provides a quick and easy way to gather data, as the sample is selected based on the individuals who are readily available or willing to participate. This makes it an attractive option if you’re particularly tight on resources and/or time. However, as you’d expect, this sampling method is unlikely to produce a representative sample and will of course be vulnerable to researcher bias, so it’s important to approach it with caution.

Last but not least, we have the snowball sampling method. This method relies on referrals from initial participants to recruit additional participants. In other words, the initial subjects form the first (small) snowball and each additional subject recruited through referral is added to the snowball, making it larger as it rolls along.

Snowball sampling is often used in research contexts where it’s difficult to identify and access a particular population. For example, people with a rare medical condition or members of an exclusive group. It can also be useful in cases where the research topic is sensitive or taboo and people are unlikely to open up unless they’re referred by someone they trust.

Simply put, snowball sampling is ideal for research that involves reaching hard-to-access populations. But, keep in mind that, once again, it’s a sampling method that’s highly prone to researcher bias and is unlikely to produce a representative sample. So, make sure that it aligns with your research aims and questions before adopting this method.
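To show how a snowball grows through referrals, here is a small simulation. Real snowball recruitment happens through people, not code, so treat this purely as a sketch: the referral network, the names, the `snowball_sample` helper and the cap of two referrals per person are all made up.

```python
import random
from collections import deque

def snowball_sample(referrals, seeds, target_size, max_referrals, rng):
    """Recruit in waves: each participant refers up to `max_referrals` contacts."""
    recruited = list(seeds)
    seen = set(seeds)
    queue = deque(seeds)
    while queue and len(recruited) < target_size:
        person = queue.popleft()
        # Only contacts who have not been recruited yet can be referred.
        contacts = [c for c in referrals.get(person, []) if c not in seen]
        rng.shuffle(contacts)
        for contact in contacts[:max_referrals]:
            if len(recruited) >= target_size:
                break
            seen.add(contact)
            recruited.append(contact)
            queue.append(contact)  # new recruits can refer others in turn
    return recruited

# Hypothetical referral network: who each person could put you in touch with.
referrals = {
    "Ana": ["Ben", "Cho", "Dev"],
    "Ben": ["Ana", "Eli"],
    "Cho": ["Fay", "Gus"],
    "Dev": ["Gus"],
    "Eli": ["Hal"],
    "Gus": ["Ivy"],
}

sample = snowball_sample(
    referrals, seeds=["Ana"], target_size=6, max_referrals=2, rng=random.Random(1)
)
print(sample)
```

Everyone in the resulting sample is connected to the first participant, which illustrates why snowball samples are unlikely to be representative of the wider population.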

How to choose a sampling method

Now that we’ve looked at a few popular sampling methods (both probability and non-probability based), the obvious question is, “how do I choose the right sampling method for my study?”. When selecting a sampling method for your research project, you’ll need to consider two important factors: your research aims and your resources.

As with all research design and methodology choices, your sampling approach needs to be guided by and aligned with your research aims, objectives and research questions – in other words, your golden thread. Specifically, you need to consider whether your research aims are primarily concerned with producing generalisable findings (in which case, you’ll likely opt for a probability-based sampling method) or with achieving rich, deep insights (in which case, a non-probability-based approach could be more practical). Typically, quantitative studies lean toward the former, while qualitative studies aim for the latter, so be sure to consider your broader methodology as well.

The second factor you need to consider is your resources and, more generally, the practical constraints at play. If, for example, you have easy, free access to a large sample at your workplace or university and a healthy budget to help you attract participants, that will open up multiple options in terms of sampling methods. Conversely, if you’re cash-strapped, short on time and don’t have unfettered access to your population of interest, you may be restricted to convenience or referral-based methods.

In short, be ready for trade-offs – you won’t always be able to utilise the “perfect” sampling method for your study, and that’s okay. Much like all the other methodological choices you’ll make as part of your study, you’ll often need to compromise and accept practical trade-offs when it comes to sampling. Don’t let this get you down though – as long as your sampling choice is well explained and justified, and the limitations of your approach are clearly articulated, you’ll be on the right track.

Let’s recap…

In this post, we’ve covered the basics of sampling within the context of a typical research project.

- Sampling refers to the process of defining a subgroup (sample) from the larger group of interest (population).

- The two overarching approaches to sampling are probability sampling (random) and non-probability sampling.

- Common probability-based sampling methods include simple random sampling, stratified random sampling, cluster sampling and systematic sampling.

- Common non-probability-based sampling methods include purposive sampling, convenience sampling and snowball sampling.

- When choosing a sampling method, you need to consider your research aims, objectives and questions, as well as your resources and other practical constraints.



Sampling Methods | Types, Techniques, & Examples

Published on 3 May 2022 by Shona McCombes. Revised on 10 October 2022.

When you conduct research about a group of people, it’s rarely possible to collect data from every person in that group. Instead, you select a sample. The sample is the group of individuals who will actually participate in the research.

To draw valid conclusions from your results, you have to carefully decide how you will select a sample that is representative of the group as a whole. There are two types of sampling methods:

- Probability sampling involves random selection, allowing you to make strong statistical inferences about the whole group. It minimises the risk of selection bias.

- Non-probability sampling involves non-random selection based on convenience or other criteria, allowing you to easily collect data.

You should clearly explain how you selected your sample in the methodology section of your paper or thesis.

Table of contents

- Population vs sample

- Probability sampling methods

- Non-probability sampling methods

- Frequently asked questions about sampling

First, you need to understand the difference between a population and a sample, and identify the target population of your research.

- The population is the entire group that you want to draw conclusions about.

- The sample is the specific group of individuals that you will collect data from.

The population can be defined in terms of geographical location, age, income, and many other characteristics.

It is important to carefully define your target population according to the purpose and practicalities of your project.

If the population is very large, demographically mixed, and geographically dispersed, it might be difficult to gain access to a representative sample.

Sampling frame

The sampling frame is the actual list of individuals that the sample will be drawn from. Ideally, it should include the entire target population (and nobody who is not part of that population).

You are doing research on working conditions at Company X. Your population is all 1,000 employees of the company. Your sampling frame is the company’s HR database, which lists the names and contact details of every employee.

Sample size

The number of individuals you should include in your sample depends on various factors, including the size and variability of the population and your research design. There are different sample size calculators and formulas depending on what you want to achieve with statistical analysis.
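As one illustration of such a formula, the sketch below uses Cochran's formula for estimating a proportion, with a finite population correction. This is only one of many possible approaches and is not necessarily what any particular calculator uses. The defaults (95% confidence, i.e. z = 1.96, a 5% margin of error, and p = 0.5 as the most conservative guess) are assumptions you would adjust for your own study.

```python
import math

def cochran_sample_size(population_size=None, margin_of_error=0.05, z=1.96, p=0.5):
    """Estimate the sample size needed to estimate a proportion.

    n0 = z^2 * p * (1 - p) / e^2             (very large population)
    n  = n0 / (1 + (n0 - 1) / N)             (finite population of size N)
    """
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population_size is None:
        return math.ceil(n0)
    n = n0 / (1 + (n0 - 1) / population_size)
    return math.ceil(n)  # round up: you cannot survey a fraction of a person

print(cochran_sample_size())                      # 385
print(cochran_sample_size(population_size=1000))  # 278
```

For the 1,000 employees of Company X, these particular assumptions would suggest roughly 278 respondents; a wider margin of error or a different research design would give a different number.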


Probability sampling means that every member of the population has a known, non-zero chance of being selected. It is mainly used in quantitative research. If you want to produce results that are representative of the whole population, probability sampling techniques are the most valid choice.

There are four main types of probability sample.

1. Simple random sampling

In a simple random sample, every member of the population has an equal chance of being selected. Your sampling frame should include the whole population.

To conduct this type of sampling, you can use tools like random number generators or other techniques that are based entirely on chance.

You want to select a simple random sample of 100 employees of Company X. You assign a number to every employee in the company database from 1 to 1000, and use a random number generator to select 100 numbers.

2. Systematic sampling

Systematic sampling is similar to simple random sampling, but it is usually slightly easier to conduct. Every member of the population is listed with a number, but instead of randomly generating numbers, individuals are chosen at regular intervals.

All employees of the company are listed in alphabetical order. From the first 10 numbers, you randomly select a starting point: number 6. From number 6 onwards, every 10th person on the list is selected (6, 16, 26, 36, and so on), and you end up with a sample of 100 people.

If you use this technique, it is important to make sure that there is no hidden pattern in the list that might skew the sample. For example, if the HR database groups employees by team, and team members are listed in order of seniority, there is a risk that your interval might skip over people in junior roles, resulting in a sample that is skewed towards senior employees.
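The hidden-pattern risk is easy to demonstrate with a deliberately extreme, made-up list. The sketch below assumes 100 teams of exactly 10 people, each team listed from most senior (rank 1) to most junior (rank 10), so the team size happens to match the sampling interval.

```python
# Hypothetical HR list: 100 teams of 10, each team ordered from
# most senior (rank 1) to most junior (rank 10).
employees = [(team, rank) for team in range(1, 101) for rank in range(1, 11)]

start = 6       # starting point from the example above (1-based position)
interval = 10   # every 10th person on the list

# Convert the 1-based starting position to a 0-based list index.
sample = employees[start - 1::interval]

ranks_in_sample = {rank for _team, rank in sample}
print(len(sample), ranks_in_sample)  # 100 {6}
```

Every one of the 100 sampled employees holds the same seniority rank, so the sample says nothing about anyone else. Real lists are rarely this regular, but the same mechanism can skew a sample more subtly.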

3. Stratified sampling

Stratified sampling involves dividing the population into subpopulations that may differ in important ways. It allows you to draw more precise conclusions by ensuring that every subgroup is properly represented in the sample.

To use this sampling method, you divide the population into subgroups (called strata) based on the relevant characteristic (e.g., gender, age range, income bracket, job role).

Based on the overall proportions of the population, you calculate how many people should be sampled from each subgroup. Then you use random or systematic sampling to select a sample from each subgroup.

The company has 800 female employees and 200 male employees. You want to ensure that the sample reflects the gender balance of the company, so you sort the population into two strata based on gender. Then you use random sampling on each group, selecting 80 women and 20 men, which gives you a representative sample of 100 people.
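The proportional allocation in this example can be sketched as follows. The employee records and the `proportional_stratified` helper are hypothetical stand-ins for the company database.

```python
import random

rng = random.Random(11)  # arbitrary seed, for reproducibility only

# Hypothetical stand-in for the company database: 800 women and 200 men.
employees = [("F", i) for i in range(800)] + [("M", i) for i in range(200)]

def proportional_stratified(units, stratum_of, sample_size, rng):
    """Sample each stratum in proportion to its share of the population."""
    strata = {}
    for unit in units:
        strata.setdefault(stratum_of(unit), []).append(unit)
    sample = []
    for members in strata.values():
        # Each stratum's quota mirrors its share of the population.
        # With awkward proportions, rounding can make the quotas sum to
        # slightly more or less than sample_size.
        quota = round(sample_size * len(members) / len(units))
        sample.extend(rng.sample(members, quota))
    return sample

sample = proportional_stratified(employees, lambda e: e[0], 100, rng)
counts = {g: sum(e[0] == g for e in sample) for g in ("F", "M")}
print(counts)  # {'F': 80, 'M': 20}
```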

4. Cluster sampling

Cluster sampling also involves dividing the population into subgroups, but each subgroup should have similar characteristics to the whole population. Instead of sampling individuals from each subgroup, you randomly select entire subgroups.

If it is practically possible, you might include every individual from each sampled cluster. If the clusters themselves are large, you can also sample individuals from within each cluster using one of the techniques above. This is called multistage sampling.

This method is good for dealing with large and dispersed populations, but there is more risk of error in the sample, as there could be substantial differences between clusters. It’s difficult to guarantee that the sampled clusters are really representative of the whole population.

The company has offices in 10 cities across the country (all with roughly the same number of employees in similar roles). You don’t have the capacity to travel to every office to collect your data, so you use random sampling to select 3 offices – these are your clusters.
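A minimal sketch of this example, assuming 10 hypothetical offices with 100 employees each and including everyone from the three selected offices:

```python
import random

rng = random.Random(5)  # arbitrary seed, for reproducibility only

# Hypothetical offices: 10 cities with 100 employees each (1,000 in total).
offices = {
    f"office_{n}": [f"office_{n}_employee_{e}" for e in range(100)]
    for n in range(1, 11)
}

# Randomly select 3 whole offices; these are the clusters.
chosen = rng.sample(sorted(offices), k=3)

# Include every employee from each selected office.
sample = [employee for office in chosen for employee in offices[office]]

print(chosen)
print(len(sample))  # 300
```

If 300 people were too many to survey, a second random draw within each chosen office would turn this into multistage sampling.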

In a non-probability sample, individuals are selected based on non-random criteria, and not every individual has a chance of being included.

This type of sample is easier and cheaper to access, but it has a higher risk of sampling bias. That means the inferences you can make about the population are weaker than with probability samples, and your conclusions may be more limited. If you use a non-probability sample, you should still aim to make it as representative of the population as possible.

Non-probability sampling techniques are often used in exploratory and qualitative research. In these types of research, the aim is not to test a hypothesis about a broad population, but to develop an initial understanding of a small or under-researched population.

1. Convenience sampling

A convenience sample simply includes the individuals who happen to be most accessible to the researcher.

This is an easy and inexpensive way to gather initial data, but there is no way to tell if the sample is representative of the population, so it can’t produce generalisable results.

You are researching opinions about student support services in your university, so after each of your classes, you ask your fellow students to complete a survey on the topic. This is a convenient way to gather data, but as you only surveyed students taking the same classes as you at the same level, the sample is not representative of all the students at your university.

2. Voluntary response sampling

Similar to a convenience sample, a voluntary response sample is mainly based on ease of access. Instead of the researcher choosing participants and directly contacting them, people volunteer themselves (e.g., by responding to a public online survey).

Voluntary response samples are always at least somewhat biased, as some people will inherently be more likely to volunteer than others.

You send out the survey to all students at your university and many students decide to complete it. This can certainly give you some insight into the topic, but the people who responded are more likely to be those who have strong opinions about the student support services, so you can’t be sure that their opinions are representative of all students.

3. Purposive sampling

Purposive sampling, also known as judgement sampling, involves the researcher using their expertise to select a sample that is most useful to the purposes of the research.

It is often used in qualitative research, where the researcher wants to gain detailed knowledge about a specific phenomenon rather than make statistical inferences, or where the population is very small and specific. An effective purposive sample must have clear criteria and rationale for inclusion.

You want to know more about the opinions and experiences of students with a disability at your university, so you purposely select a number of students with different support needs in order to gather a varied range of data on their experiences with student services.

4. Snowball sampling

If the population is hard to access, snowball sampling can be used to recruit participants via other participants. The number of people you have access to ‘snowballs’ as you get in contact with more people.

You are researching experiences of homelessness in your city. Since there is no list of all homeless people in the city, probability sampling isn’t possible. You meet one person who agrees to participate in the research, and she puts you in contact with other homeless people she knows in the area.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Samples are used to make inferences about populations. Samples are easier to collect data from because they are practical, cost-effective, convenient, and manageable.

Probability sampling means that every member of the target population has a known chance of being included in the sample.

Probability sampling methods include simple random sampling, systematic sampling, stratified sampling, and cluster sampling.

In non-probability sampling, the sample is selected based on non-random criteria, and not every member of the population has a chance of being included.

Common non-probability sampling methods include convenience sampling, voluntary response sampling, purposive sampling, snowball sampling, and quota sampling.

Sampling bias occurs when some members of a population are systematically more likely to be selected in a sample than others.


McCombes, S. (2022, October 10). Sampling Methods | Types, Techniques, & Examples. Scribbr. Retrieved 3 June 2024, from https://www.scribbr.co.uk/research-methods/sampling/


Sampling Methods: A guide for researchers

Affiliation

- Arizona School of Dentistry & Oral Health, A.T. Still University, Mesa, AZ, USA.

- PMID: 37553279

Sampling is a critical element of research design. Different methods can be used for sample selection to ensure that members of the study population reflect both the source and target populations, including probability and non-probability sampling. Power and sample size are used to determine the number of subjects needed to answer the research question. Characteristics of individuals included in the sample population should be clearly defined to determine eligibility for study participation and improve power. Sample selection methods differ based on study design. The purpose of this short report is to review common sampling considerations and related errors.

Keywords: research design; sample size; sampling.

Copyright © 2023 The American Dental Hygienists’ Association.

- Research Design*

- Sample Size


What are sampling methods and how do you choose the best one?

Posted on 18th November 2020 by Mohamed Khalifa

This tutorial will introduce sampling methods and potential sampling errors to avoid when conducting medical research.

- Introduction to sampling methods

- Examples of different sampling methods

- Choosing the best sampling method

It is important to understand why we sample the population; for example, studies are built to investigate the relationships between risk factors and disease. In other words, we want to find out if this is a true association, while still aiming for the minimum risk of errors such as chance, bias or confounding.

However, it would not be feasible to experiment on the whole population. Instead, we need to take a good sample and reduce the risk of errors through proper sampling technique.

What is a sampling frame?

A sampling frame is a record of the target population containing all participants of interest. In other words, it is a list from which we can extract a sample.

What makes a good sample?

A good sample should be a representative subset of the population we are interested in studying, with each participant having an equal chance of being randomly selected into the study.

We could choose a sampling method based on whether we want to account for sampling bias; a random sampling method is often preferred over a non-random method for this reason. Random sampling examples include: simple, systematic, stratified, and cluster sampling. Non-random sampling methods are liable to bias, and common examples include: convenience, purposive, snowballing, and quota sampling. For the purposes of this blog we will be focusing on random sampling methods.

Example: We want to conduct an experimental trial in a small population such as: employees in a company, or students in a college. We include everyone in a list and use a random number generator to select the participants

Advantages: Generalisable results possible, random sampling, the sampling frame is the whole population, every participant has an equal probability of being selected

Disadvantages: Less precise than stratified method, less representative than the systematic method

Example: Every nth patient entering the out-patient clinic is selected and included in our sample

Advantages: More feasible than simple or stratified methods, sampling frame is not always required

Disadvantages: Generalisability may decrease if baseline characteristics repeat across every nth participant

Example: We have a big population (a city) and we want to ensure representativeness of all groups with a pre-determined characteristic such as: age groups, ethnic origin, and gender

Advantages: Inclusive of strata (subgroups), reliable and generalisable results

Disadvantages: Does not work well with multiple variables

Cluster sampling

Example: Ten schools across the county have the same number of students. We can randomly select 3 of the 10 schools as our clusters.

Advantages: Readily doable with most budgets, does not require a sampling frame

Disadvantages: Results may not be reliable or generalisable
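The school example could be sketched as below: whole clusters are chosen at random and everyone in a chosen cluster is included. The school names, the 50 students per school and the function name `cluster_sample` are illustrative assumptions.

```python
import random

def cluster_sample(clusters, n_clusters, seed=None):
    """Randomly choose whole clusters and include every member of each one."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)   # pick cluster names
    sample = [member for name in chosen for member in clusters[name]]
    return chosen, sample

# Hypothetical county: 10 schools with 50 students each
schools = {
    f"School {i}": [f"S{i}-{j}" for j in range(50)]
    for i in range(1, 11)
}

chosen, sample = cluster_sample(schools, 3, seed=3)
print(chosen, len(sample))
```

Only a list of clusters is needed, not a list of every student, which is why a full sampling frame is not required.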

How can you identify sampling errors?

Non-random selection increases the probability of sampling (selection) bias if the sample does not represent the population we want to study. We could avoid this by random sampling and ensuring representativeness of our sample with regards to sample size.

An inadequate sample size decreases the confidence in our results, as we may conclude there is no significant difference when actually there is. This Type II error can result from having a small sample size or from participants dropping out of the sample.
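To see how sample size drives the risk of a Type II error, the sketch below computes the approximate power (1 minus the Type II error rate) of a two-sided z-test comparing two group means. It is a simplification that assumes a known, common standard deviation and a normal approximation; the effect size of 0.5 and the group sizes are hypothetical values chosen for illustration.

```python
from math import sqrt
from statistics import NormalDist

def power_two_group_z(effect, sd, n_per_group, alpha=0.05):
    """Approximate power of a two-sided z-test for a difference in two means.

    Assumes both groups share a known standard deviation `sd`.
    """
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)              # critical value for the test
    shift = effect / (sd * sqrt(2 / n_per_group))  # true difference in standard-error units
    # Probability that the test statistic falls in either rejection region
    return (1 - z.cdf(z_crit - shift)) + z.cdf(-z_crit - shift)

for n in (10, 50, 100):
    power = power_two_group_z(effect=0.5, sd=1.0, n_per_group=n)
    print(f"n per group = {n:3d}  power = {power:.2f}  Type II error rate = {1 - power:.2f}")
```

With the smallest group size most real differences of this magnitude would be missed, while the largest group size detects them most of the time.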

In medical research of disease, if we select people with certain diseases while strictly excluding participants with other co-morbidities, we run the risk of diagnostic purity bias where important sub-groups of the population are not represented.

Furthermore, measurement bias may occur during recollection of risk factors by participants (recall bias), or during assessment of outcome, where people who live longer are associated with treatment success when in fact people who died were not included in the sample or data analysis (survivorship bias).

By following the steps below we could choose the best sampling method for our study in an orderly fashion.

Research objectives

Firstly, a refined research question and goal would help us define our population of interest. If our calculated sample size is small then it would be easier to get a random sample. If, however, the sample size is large, then we should check if our budget and resources can handle a random sampling method.

Sampling frame availability

Secondly, we need to check whether a sampling frame is available (simple random sampling); if not, could we make a list of our own (stratified sampling)? If neither option is possible, we could still use other random sampling methods, for instance, systematic or cluster sampling.

Study design

Moreover, we could consider the prevalence of the topic (exposure or outcome) in the population and what the suitable study design would be. In addition, we should check whether our target population varies widely in its baseline characteristics. For example, a population with large ethnic subgroups could best be studied using a stratified sampling method.

Random sampling

Finally, the best sampling method is always the one that could best answer our research question while also allowing for others to make use of our results (generalisability of results). When we cannot afford a random sampling method, we can always choose from the non-random sampling methods.

To sum up, we now understand that choosing between random and non-random sampling methods is multifactorial. We might often be tempted to choose a convenience sample from the start, but that would not only decrease the precision of our results but also make us miss out on producing research that is more robust and reliable.


Mohamed Khalifa


Sampling Methods – Types, Techniques and Examples


Sampling refers to the process of selecting a subset of data from a larger population or dataset in order to analyze or make inferences about the whole population.

In other words, sampling involves taking a representative sample of data from a larger group or dataset in order to gain insights or draw conclusions about the entire group.

Sampling Methods

Sampling methods refer to the techniques used to select a subset of individuals or units from a larger population for the purpose of conducting statistical analysis or research.

Sampling is an essential part of research because it allows researchers to draw conclusions about a population without having to collect data from every member of that population, which can be time-consuming, expensive, or even impossible.

Types of Sampling Methods

Sampling can be broadly categorized into two main categories:

Probability Sampling

This type of sampling is based on the principles of random selection, and it involves selecting samples in a way that gives every member of the population a known, non-zero chance of being included in the sample (an equal chance, in the case of simple random sampling). Probability sampling is commonly used in scientific research and statistical analysis, as it tends to provide a representative sample whose results can be generalized to the larger population.

Types of Probability Sampling:

- Simple Random Sampling: In this method, every member of the population has an equal chance of being selected for the sample. This can be done using a random number generator or by drawing names out of a hat, for example.

- Systematic Sampling: In this method, the population is first arranged in a list or sequence, and then every nth member is selected for the sample, usually starting from a randomly chosen position. For example, if every 10th person is selected from a list of 100 people, the sample would include 10 people.

- Stratified Sampling: In this method, the population is divided into subgroups or strata based on certain characteristics, and then a random sample is taken from each stratum. This is often used to ensure that the sample is representative of the population as a whole.

- Cluster Sampling: In this method, the population is divided into clusters or groups, and then a random sample of clusters is selected. Then, all members of the selected clusters are included in the sample.

- Multi-Stage Sampling : This method combines two or more sampling techniques. For example, a researcher may use stratified sampling to select clusters, and then use simple random sampling to select members within each cluster.
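Multi-stage sampling, the last item in the list above, can be sketched as a two-stage draw. For brevity this illustration selects clusters by simple random sampling at the first stage (rather than the stratified selection mentioned in the example) and then draws a simple random sample within each chosen cluster. The neighbourhood and household names, sizes and function name are all hypothetical.

```python
import random

def two_stage_sample(clusters, n_clusters, n_per_cluster, seed=None):
    """Stage 1: randomly pick clusters. Stage 2: randomly pick members within each."""
    rng = random.Random(seed)
    chosen = rng.sample(sorted(clusters), n_clusters)
    sample = {}
    for name in chosen:
        members = clusters[name]
        k = min(n_per_cluster, len(members))   # never ask for more than the cluster holds
        sample[name] = rng.sample(members, k)
    return sample

# Hypothetical city: 12 neighbourhoods with 40 households each
neighbourhoods = {
    f"N{i:02d}": [f"N{i:02d}-H{j:02d}" for j in range(40)]
    for i in range(1, 13)
}

sample = two_stage_sample(neighbourhoods, n_clusters=4, n_per_cluster=5, seed=11)
for name, households in sample.items():
    print(name, households)
```

Unlike one-stage cluster sampling, only some members of each selected cluster are surveyed, which reduces fieldwork at the cost of an extra source of sampling variation.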

Non-probability Sampling

This type of sampling does not rely on random selection, and it involves selecting samples in a way that does not give every member of the population a known chance of being included in the sample. Non-probability sampling is often used in qualitative research, where the aim is not to generalize findings to a larger population, but to gain an in-depth understanding of a particular phenomenon or group. Non-probability sampling methods can be quicker and more cost-effective than probability sampling methods, but they may also be subject to bias and may not be representative of the larger population.

Types of Non-probability Sampling:

- Convenience Sampling: In this method, participants are chosen based on their availability or willingness to participate. This method is easy and convenient but may not be representative of the population.

- Purposive Sampling: In this method, participants are selected based on specific criteria, such as their expertise or knowledge on a particular topic. This method is often used in qualitative research, but may not be representative of the population.

- Snowball Sampling: In this method, participants are recruited through referrals from other participants. This method is often used when the population is hard to reach, but may not be representative of the population.

- Quota Sampling: In this method, a predetermined number of participants are selected based on specific criteria, such as age or gender. This method is often used in market research, but may not be representative of the population.

- Volunteer Sampling: In this method, participants volunteer to participate in the study. This method is often used in research where participants are motivated by personal interest or altruism, but may not be representative of the population.
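To make the contrast with probability sampling concrete, here is a minimal sketch of quota sampling from the list above: participants are accepted in the order they happen to turn up until each group's quota is full, with no random selection involved. The arrival data, quota sizes and function name are hypothetical.

```python
def quota_sample(arrivals, group_of, quotas):
    """Accept people in arrival order until every group's quota is filled."""
    remaining = dict(quotas)
    sample = []
    for person in arrivals:
        group = group_of(person)
        if remaining.get(group, 0) > 0:
            sample.append(person)
            remaining[group] -= 1
        if not any(remaining.values()):   # all quotas filled, stop recruiting
            break
    return sample

# Hypothetical stream of shoppers: (shopper_id, gender)
arrivals = [(i, "male" if i % 3 == 0 else "female") for i in range(30)]

sample = quota_sample(arrivals, lambda person: person[1], {"female": 5, "male": 5})
print(sample)
```

The quotas guarantee the group counts, but who ends up in each group depends on who arrives first, which is where bias can enter.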

Applications of Sampling Methods

Applications of Sampling Methods from different fields:

- Psychology : Sampling methods are used in psychology research to study various aspects of human behavior and mental processes. For example, researchers may use stratified sampling to select a sample of participants that is representative of the population based on factors such as age, gender, and ethnicity. Random sampling may also be used to select participants for experimental studies.

- Sociology : Sampling methods are commonly used in sociological research to study social phenomena and relationships between individuals and groups. For example, researchers may use cluster sampling to select a sample of neighborhoods to study the effects of economic inequality on health outcomes. Stratified sampling may also be used to select a sample of participants that is representative of the population based on factors such as income, education, and occupation.

- Social sciences: Sampling methods are commonly used in social sciences to study human behavior and attitudes. For example, researchers may use stratified sampling to select a sample of participants that is representative of the population based on factors such as age, gender, and income.

- Marketing : Sampling methods are used in marketing research to collect data on consumer preferences, behavior, and attitudes. For example, researchers may use random sampling to select a sample of consumers to participate in a survey about a new product.

- Healthcare : Sampling methods are used in healthcare research to study the prevalence of diseases and risk factors, and to evaluate interventions. For example, researchers may use cluster sampling to select a sample of health clinics to participate in a study of the effectiveness of a new treatment.

- Environmental science: Sampling methods are used in environmental science to collect data on environmental variables such as water quality, air pollution, and soil composition. For example, researchers may use systematic sampling to collect soil samples at regular intervals across a field.

- Education : Sampling methods are used in education research to study student learning and achievement. For example, researchers may use stratified sampling to select a sample of schools that is representative of the population based on factors such as demographics and academic performance.

Examples of Sampling Methods

Probability Sampling Methods Examples:

- Simple random sampling example: A researcher randomly selects participants from the population using a random number generator or drawing names from a hat.

- Stratified random sampling example: A researcher divides the population into subgroups (strata) based on a characteristic of interest (e.g. age or income) and then randomly selects participants from each subgroup.

- Systematic sampling example: A researcher selects participants at regular intervals from a list of the population.

Non-probability Sampling Methods Examples:

- Convenience sampling example: A researcher selects participants who are conveniently available, such as students in a particular class or visitors to a shopping mall.

- Purposive sampling example: A researcher selects participants who meet specific criteria, such as individuals who have been diagnosed with a particular medical condition.

- Snowball sampling example: A researcher selects participants who are referred to them by other participants, such as friends or acquaintances.

How to Conduct Sampling Methods

Some general steps for conducting sampling are:

- Define the population: Identify the population of interest and clearly define its boundaries.

- Choose the sampling method: Select an appropriate sampling method based on the research question, characteristics of the population, and available resources.

- Determine the sample size: Determine the desired sample size based on statistical considerations such as margin of error, confidence level, or power analysis.

- Create a sampling frame: Develop a list of all individuals or elements in the population from which the sample will be drawn. The sampling frame should be comprehensive, accurate, and up-to-date.

- Select the sample: Use the chosen sampling method to select the sample from the sampling frame. The sample should be selected randomly, or if using a non-random method, every effort should be made to minimize bias and ensure that the sample is representative of the population.

- Collect data: Once the sample has been selected, collect data from each member of the sample using appropriate research methods (e.g., surveys, interviews, observations).

- Analyze the data: Analyze the data collected from the sample to draw conclusions about the population of interest.
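For the "determine the sample size" step above, one widely used calculation applies when the goal is to estimate a proportion within a given margin of error: n = z² · p(1 − p) / e², optionally followed by a finite population correction. The sketch below is only one of several possible approaches (a power analysis would be used for hypothesis tests instead); the default values and the function name are assumptions for illustration.

```python
from math import ceil
from statistics import NormalDist

def sample_size_for_proportion(margin_of_error, confidence=0.95, p=0.5,
                               population_size=None):
    """Sample size needed to estimate a proportion within +/- margin_of_error.

    p = 0.5 is the most conservative guess (it gives the largest sample size).
    If population_size is given, a finite population correction is applied.
    """
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)   # e.g. about 1.96 for 95%
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    if population_size is not None:
        n0 = n0 / (1 + (n0 - 1) / population_size)
    return ceil(n0)

print(sample_size_for_proportion(0.05))                        # large population
print(sample_size_for_proportion(0.05, population_size=2000))  # small population
```

For a 5% margin of error at 95% confidence this gives the familiar figure of 385 respondents for a large population, and fewer when the population itself is small.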

When to use Sampling Methods

Sampling methods are used in research when it is not feasible or practical to study the entire population of interest. Sampling allows researchers to study a smaller group of individuals, known as a sample, and use the findings from the sample to make inferences about the larger population.

Sampling methods are particularly useful when:

- The population of interest is too large to study in its entirety.

- The cost and time required to study the entire population are prohibitive.

- The population is geographically dispersed or difficult to access.

- The research question requires specialized or hard-to-find individuals.

- The data collected is quantitative and statistical analyses are used to draw conclusions.

Purpose of Sampling Methods

The main purpose of sampling methods in research is to obtain a representative sample of individuals or elements from a larger population of interest, in order to make inferences about the population as a whole. By studying a smaller group of individuals, known as a sample, researchers can gather information about the population that would be difficult or impossible to obtain from studying the entire population.

Sampling methods allow researchers to:

- Study a smaller, more manageable group of individuals, which is typically less time-consuming and less expensive than studying the entire population.

- Reduce the potential for data collection errors and improve the accuracy of the results by minimizing sampling bias.

- Make inferences about the larger population with a certain degree of confidence, using statistical analyses of the data collected from the sample.

- Improve the generalizability and external validity of the findings by ensuring that the sample is representative of the population of interest.

Characteristics of Sampling Methods

Here are some characteristics of sampling methods:

- Randomness: Probability sampling methods are based on random selection, meaning that every member of the population has a known, non-zero chance of being selected. This helps to minimize bias and makes it more likely that the sample is representative of the population.

- Representativeness : The goal of sampling is to obtain a sample that is representative of the larger population of interest. This means that the sample should reflect the characteristics of the population in terms of key demographic, behavioral, or other relevant variables.

- Size : The size of the sample should be large enough to provide sufficient statistical power for the research question at hand. The sample size should also be appropriate for the chosen sampling method and the level of precision desired.

- Efficiency : Sampling methods should be efficient in terms of time, cost, and resources required. The method chosen should be feasible given the available resources and time constraints.

- Bias : Sampling methods should aim to minimize bias and ensure that the sample is representative of the population of interest. Bias can be introduced through non-random selection or non-response, and can affect the validity and generalizability of the findings.

- Precision : Sampling methods should be precise in terms of providing estimates of the population parameters of interest. Precision is influenced by sample size, sampling method, and level of variability in the population.

- Validity : The validity of the sampling method is important for ensuring that the results obtained from the sample are accurate and can be generalized to the population of interest. Validity can be affected by sampling method, sample size, and the representativeness of the sample.

Advantages of Sampling Methods

Sampling methods have several advantages, including:

- Cost-Effective : Sampling methods are often much cheaper and less time-consuming than studying an entire population. By studying only a small subset of the population, researchers can gather valuable data without incurring the costs associated with studying the entire population.

- Convenience : Sampling methods are often more convenient than studying an entire population. For example, if a researcher wants to study the eating habits of people in a city, it would be very difficult and time-consuming to study every single person in the city. By using sampling methods, the researcher can obtain data from a smaller subset of people, making the study more feasible.

- Accuracy: When done correctly, sampling methods can be very accurate. By using appropriate sampling techniques, researchers can obtain a sample that is representative of the entire population. This allows them to make accurate generalizations about the population as a whole based on the data collected from the sample.

- Time-Saving: Sampling methods can save a lot of time compared to studying the entire population. By studying a smaller sample, researchers can collect data much more quickly than they could if they studied every single person in the population.

- Less Bias : Sampling methods can reduce bias in a study. If a researcher were to study the entire population, it would be very difficult to eliminate all sources of bias. However, by using appropriate sampling techniques, researchers can reduce bias and obtain a sample that is more representative of the entire population.

Limitations of Sampling Methods

- Sampling Error : Sampling error is the difference between the sample statistic and the population parameter. It is the result of selecting a sample rather than the entire population. The larger the sample, the lower the sampling error. However, no matter how large the sample size, there will always be some degree of sampling error.

- Selection Bias: Selection bias occurs when the sample is not representative of the population. This can happen if the sample is not selected randomly or if some groups are underrepresented in the sample. Selection bias can lead to inaccurate conclusions about the population.

- Non-response Bias : Non-response bias occurs when some members of the sample do not respond to the survey or study. This can result in a biased sample if the non-respondents differ from the respondents in important ways.

- Time and Cost : While sampling can be cost-effective, it can still be expensive and time-consuming to select a sample that is representative of the population. Depending on the sampling method used, it may take a long time to obtain a sample that is large enough and representative enough to be useful.

- Limited Information : Sampling can only provide information about the variables that are measured. It may not provide information about other variables that are relevant to the research question but were not measured.

- Generalization : The extent to which the findings from a sample can be generalized to the population depends on the representativeness of the sample. If the sample is not representative of the population, it may not be possible to generalize the findings to the population as a whole.
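The point about sampling error in the list above (larger samples give smaller, but never zero, error) can be illustrated with a small simulation. The sketch below builds a hypothetical population of 10,000 values, repeatedly draws simple random samples of different sizes, and reports the average absolute gap between the sample mean and the population mean. All numbers are made up for illustration.

```python
import random
from statistics import mean

def mean_abs_sampling_error(population, n, reps, rng):
    """Average |sample mean - population mean| over repeated random samples of size n."""
    mu = mean(population)
    errors = [abs(mean(rng.sample(population, n)) - mu) for _ in range(reps)]
    return mean(errors)

rng = random.Random(0)
# Hypothetical population: 10,000 measurements centred around 50
population = [rng.gauss(50, 10) for _ in range(10_000)]

errors = {n: mean_abs_sampling_error(population, n, reps=200, rng=rng)
          for n in (10, 100, 1000)}
for n, err in errors.items():
    print(f"sample size {n:4d}: average sampling error {err:.2f}")
```

The error shrinks as the sample grows but stays above zero, and no increase in sample size corrects selection or non-response bias, which come from how the sample is obtained rather than how large it is.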

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


SYSTEMATIC REVIEW article

This article is part of the research topic.

Reviews in Gastroenterology 2023

Electrogastrography Measurement Systems and Analysis Methods Used in Clinical Practice and Research: Comprehensive Review

Provisionally Accepted

- 1 VSB-Technical University of Ostrava, Czechia

The final, formatted version of the article will be published soon.

Electrogastrography (EGG) is a non-invasive method with high diagnostic potential for the prevention of gastroenterological pathologies in clinical practice. In this paper, a review of the measurement systems, procedures, and methods of analysis used in electrogastrography is presented. A critical review of historical and current literature is conducted, focusing on electrode placement, measurement apparatus, measurement procedures, and time-frequency domain methods of filtration and analysis of the non-invasively measured electrical activity of the stomach. As a result, a total of 129 relevant articles with a primary focus on experimental diet were reviewed in this study. The Scopus, PubMed and Web of Science databases were used to search for articles in the English language, according to a specific query and using the PRISMA method. The research topic of electrogastrography has been continuously growing in popularity since the first measurement by Professor Alvarez 100 years ago, and many researchers and companies are interested in EGG nowadays. Measurement apparatus and procedures are still being developed in both commercial and research settings. There is a wide variety of electrode layouts, ranging from minimal numbers of electrodes for ambulatory measurements to very high numbers of electrodes for spatial measurements. Most authors used an anatomically approximated layout with 2 active electrodes in a bipolar connection and a commercial electrogastrograph with a sampling rate of 2 or 4 Hz. Test subjects were usually healthy adults and diet was controlled. However, evaluation methods are being developed at a slower pace, and usually the signals are classified based only on dominant frequency. The main contributions of this review include an overview of the spectrum of measurement systems and procedures for electrogastrography developed by many authors, but a firm medical standard has not yet been defined. Therefore, it is not possible to use this method in clinical practice for objective diagnosis.

Keywords: electrogastrography, non-invasive method, Measurement systems, Electrode placement, Measurement apparatus, Signal processing

Received: 19 Jan 2024; Accepted: 03 Jun 2024.

Copyright: © 2024 Oczka, Augustynek, Penhaker and Kubicek. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

* Correspondence: Dr. Jan Kubicek, VSB-Technical University of Ostrava, Ostrava, 708 33, Moravian-Silesian Region, Czechia


Purposeful sampling for qualitative data collection and analysis in mixed method implementation research

Lawrence A. Palinkas

1 School of Social Work, University of Southern California, Los Angeles, CA 90089-0411

Sarah M. Horwitz

2 Department of Child and Adolescent Psychiatry, New York University, New York, NY

Carla A. Green

3 Center for Health Research, Kaiser Permanente Northwest, Portland, OR

Jennifer P. Wisdom

4 George Washington University, Washington DC

Naihua Duan

5 New York State Neuropsychiatric Institute and Department of Psychiatry, Columbia University, New York, NY

Kimberly Hoagwood

Purposeful sampling is widely used in qualitative research for the identification and selection of information-rich cases related to the phenomenon of interest. Although there are several different purposeful sampling strategies, criterion sampling appears to be used most commonly in implementation research. However, combining sampling strategies may be more appropriate to the aims of implementation research and more consistent with recent developments in quantitative methods. This paper reviews the principles and practice of purposeful sampling in implementation research, summarizes types and categories of purposeful sampling strategies and provides a set of recommendations for use of single strategy or multistage strategy designs, particularly for state implementation research.

Recently there have been several calls for the use of mixed method designs in implementation research (Proctor et al., 2009; Landsverk et al., 2012; Palinkas et al., 2011; Aarons et al., 2012). This has been precipitated by the realization that the challenges of implementing evidence-based and other innovative practices, treatments, interventions and programs are sufficiently complex that a single methodological approach is often inadequate. This is particularly true of efforts to implement evidence-based practices (EBPs) in statewide systems where relationships among key stakeholders extend both vertically (from state to local organizations) and horizontally (between organizations located in different parts of a state). As in other areas of research, mixed method designs are viewed as preferable in implementation research because they provide a better understanding of research issues than either qualitative or quantitative approaches alone (Palinkas et al., 2011). In such designs, qualitative methods are used to explore and obtain depth of understanding as to the reasons for success or failure to implement evidence-based practice or to identify strategies for facilitating implementation, while quantitative methods are used to test and confirm hypotheses based on an existing conceptual model and obtain breadth of understanding of predictors of successful implementation (Teddlie & Tashakkori, 2003).

Sampling strategies for quantitative methods used in mixed methods designs in implementation research are generally well-established and based on probability theory. In contrast, sampling strategies for qualitative methods in implementation studies are less explicit and often less evident. Although the samples for qualitative inquiry are generally assumed to be selected purposefully to yield cases that are “information rich” (Patton, 2001), there are no clear guidelines for conducting purposeful sampling in mixed methods implementation studies, particularly when studies have more than one specific objective. Moreover, it is not entirely clear what forms of purposeful sampling are most appropriate for the challenges of using both quantitative and qualitative methods in the mixed methods designs used in implementation research. Such a consideration requires a determination of the objectives of each methodology and the potential impact of selecting one strategy to achieve one objective on the selection of other strategies to achieve additional objectives.

In this paper, we present different approaches to the use of purposeful sampling strategies in implementation research. We begin with a review of the principles and practice of purposeful sampling in implementation research, a summary of the types and categories of purposeful sampling strategies, and a set of recommendations for matching the appropriate single strategy or multistage strategy to study aims and quantitative method designs.

Principles of Purposeful Sampling

Purposeful sampling is a technique widely used in qualitative research for the identification and selection of information-rich cases for the most effective use of limited resources (Patton, 2002). This involves identifying and selecting individuals or groups of individuals that are especially knowledgeable about or experienced with a phenomenon of interest (Cresswell & Plano Clark, 2011). In addition to knowledge and experience, Bernard (2002) and Spradley (1979) note the importance of availability and willingness to participate, and the ability to communicate experiences and opinions in an articulate, expressive, and reflective manner. In contrast, probabilistic or random sampling is used to ensure the generalizability of findings by minimizing the potential for bias in selection and to control for the potential influence of known and unknown confounders.

As Morse and Niehaus (2009) observe, whether the methodology employed is quantitative or qualitative, sampling methods are intended to maximize efficiency and validity. Nevertheless, sampling must be consistent with the aims and assumptions inherent in the use of either method. Qualitative methods are, for the most part, intended to achieve depth of understanding while quantitative methods are intended to achieve breadth of understanding (Patton, 2002). Qualitative methods place primary emphasis on saturation (i.e., obtaining a comprehensive understanding by continuing to sample until no new substantive information is acquired) (Miles & Huberman, 1994). Quantitative methods place primary emphasis on generalizability (i.e., ensuring that the knowledge gained is representative of the population from which the sample was drawn). Each methodology, in turn, has different expectations and standards for determining the number of participants required to achieve its aims. Quantitative methods rely on established formulae for avoiding Type I and Type II errors, while qualitative methods often rely on precedents for determining number of participants based on type of analysis proposed (e.g., 3-6 participants interviewed multiple times in a phenomenological study versus 20-30 participants interviewed once or twice in a grounded theory study), level of detail required, and emphasis of homogeneity (requiring smaller samples) versus heterogeneity (requiring larger samples) (Guest, Bunce & Johnson, 2006; Morse & Niehaus, 2009; Padgett, 2008).

Types of purposeful sampling designs

There exist numerous purposeful sampling designs. Examples include the selection of extreme or deviant (outlier) cases for the purpose of learning from unusual manifestations of phenomena of interest; the selection of cases with maximum variation for the purpose of documenting unique or diverse variations that have emerged in adapting to different conditions and identifying important common patterns that cut across variations; and the selection of homogeneous cases for the purpose of reducing variation, simplifying analysis, and facilitating group interviewing. A list of some of these strategies and examples of their use in implementation research is provided in Table 1.

Table 1. Purposeful sampling strategies in implementation research

Embedded in each strategy is the ability to compare and contrast, to identify similarities and differences in the phenomenon of interest. Nevertheless, some of these strategies (e.g., maximum variation sampling, extreme case sampling, intensity sampling, and purposeful random sampling) are used to identify and expand the range of variation or differences, similar to the use of quantitative measures to describe the variability or dispersion of values for a particular variable or variables, while other strategies (e.g., homogeneous sampling, typical case sampling, criterion sampling, and snowball sampling) are used to narrow the range of variation and focus on similarities. The latter are similar to the use of quantitative central tendency measures (e.g., mean, median, and mode). Moreover, certain strategies, like stratified purposeful sampling or opportunistic or emergent sampling, are designed to achieve both goals. As Patton (2002, p. 240) explains, “the purpose of a stratified purposeful sample is to capture major variations rather than to identify a common core, although the latter may also emerge in the analysis. Each of the strata would constitute a fairly homogeneous sample.”
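The dispersion versus central tendency analogy can be illustrated with a small sketch. It assumes, purely for illustration, that cases can be ordered on a single numeric attribute; real purposeful sampling typically weighs several qualitative characteristics at once, and neither function below is a definition drawn from Patton (2002). The case identifiers and values are hypothetical.

```python
from statistics import median


def maximum_variation(cases, key, k):
    """Pick k cases spread evenly across the sorted range, endpoints included,
    so that the selection expands the range of variation."""
    if k < 2:
        raise ValueError("k must be at least 2 to span a range")
    ordered = sorted(cases, key=key)
    if k >= len(ordered):
        return ordered
    step = (len(ordered) - 1) / (k - 1)
    return [ordered[round(i * step)] for i in range(k)]


def homogeneous(cases, key, k):
    """Pick the k cases closest to the median, so that the selection
    narrows the range of variation."""
    center = median(key(case) for case in cases)
    return sorted(cases, key=lambda case: abs(key(case) - center))[:k]


def years(case):
    """Return the attribute used to compare cases (years of experience)."""
    return case[1]


# Hypothetical clinicians as (id, years of experience).
clinicians = [("c1", 1), ("c2", 3), ("c3", 5), ("c4", 8), ("c5", 10),
              ("c6", 12), ("c7", 15), ("c8", 20), ("c9", 30)]

wide = maximum_variation(clinicians, years, k=3)
narrow = homogeneous(clinicians, years, k=3)
print([years(c) for c in wide])           # spans the extremes of the range
print(sorted(years(c) for c in narrow))   # clusters around the median
```

Both functions draw three cases from the same pool, yet the first deliberately includes the least and most experienced clinicians while the second excludes them.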

Challenges to use of purposeful sampling

Despite its wide use, there are numerous challenges in identifying and applying the appropriate purposeful sampling strategy in any study. First, the range of variation in a sample from which a purposive sample is to be taken is often not really known at the outset of a study. To set as the goal the sampling of information-rich informants that cover the range of variation assumes one knows that range of variation. Consequently, an iterative approach of sampling and re-sampling to draw an appropriate sample is usually recommended to make certain that theoretical saturation occurs (Miles & Huberman, 1994). However, that saturation may be determined a priori on the basis of an existing theory or conceptual framework, or it may emerge from the data themselves, as in a grounded theory approach (Glaser & Strauss, 1967). Second, a not insignificant number of researchers in the qualitative methods field resist or refuse systematic sampling of any kind and reject the limiting nature of such realist, systematic, or positivist approaches. This includes critics of interventions and “bottom up” case studies and critiques. However, even those who equate purposeful sampling with systematic sampling must offer a rationale for selecting study participants that is linked with the aims of the investigation (i.e., why recruit these individuals for this particular study? What qualifies them to address the aims of the study?). While systematic sampling may be associated with a post-positivist tradition of qualitative data collection and analysis, such sampling is not inherently limited to such analyses and the need for such sampling is not inherently limited to post-positivist qualitative approaches (Patton, 2002).

Purposeful Sampling in Implementation Research

Characteristics of implementation research.

In implementation research, quantitative and qualitative methods often play important roles, either simultaneously or sequentially, for the purpose of answering the same question through convergence of results from different sources, answering related questions in a complementary fashion, using one set of methods to expand or explain the results obtained from use of the other set of methods, using one set of methods to develop questionnaires or conceptual models that inform the use of the other set, and using one set of methods to identify the sample for analysis using the other set of methods (Palinkas et al., 2011). A review of mixed method designs in implementation research conducted by Palinkas and colleagues (2011) revealed seven different sequential and simultaneous structural arrangements, five different functions of mixed methods, and three different ways of linking quantitative and qualitative data together. However, this review did not consider the sampling strategies involved in the types of quantitative and qualitative methods common to implementation research, nor did it consider the consequences of the sampling strategy selected for one method or set of methods on the choice of sampling strategy for the other method or set of methods. For instance, one of the most significant challenges to sampling in sequential mixed method designs lies in the limitations the initial method may place on sampling for the subsequent method. As Morse and Niehaus (2009) observe, when the initial method is qualitative, the sample selected may be too small and lack the randomization necessary to fulfill the assumptions for a subsequent quantitative analysis. On the other hand, when the initial method is quantitative, the sample selected may be too large for each individual to be included in qualitative inquiry and lack the purposeful selection needed to reduce the sample size to one more appropriate for qualitative research. The fact that potential participants were recruited and selected at random does not necessarily make them information rich.

A re-examination of the 22 studies and an additional 6 studies published since 2009 revealed that only 5 studies (Aarons & Palinkas, 2007; Bachman et al., 2009; Palinkas et al., 2011; Palinkas et al., 2012; Slade et al., 2003) made a specific reference to purposeful sampling. An additional three studies (Henke et al., 2008; Proctor et al., 2007; Swain et al., 2010) did not make explicit reference to purposeful sampling but did provide a rationale for sample selection. The remaining 20 studies provided no description of the sampling strategy used to identify participants for qualitative data collection and analysis; however, a rationale could be inferred based on a description of who was recruited and selected for participation. Of the 28 studies, 3 used more than one sampling strategy. Twenty-one of the 28 studies (75%) used some form of criterion sampling. In most instances, the criterion used is related to the individual’s role, either in the research project (i.e., trainer, team leader) or in the agency (program director, clinical supervisor, clinician); in other words, a criterion of inclusion in a certain category (criterion-i), in contrast to cases that are external to a specific criterion (criterion-e). For instance, in a series of studies based on the National Implementing Evidence-Based Practices Project, participants in semi-structured interviews included consultant trainers and program leaders at each study site (Brunette et al., 2008; Marshall et al., 2008; Marty et al., 2007; Rapp et al., 2010; Woltmann et al., 2008). Six studies used some form of maximum variation sampling to ensure representativeness and diversity of organizations and individual practitioners. Two studies used intensity sampling to make contrasts. Aarons and Palinkas (2007), for example, purposefully selected 15 child welfare case managers representing those having the most positive and those having the most negative views of SafeCare, an evidence-based prevention intervention, based on results of a web-based quantitative survey asking about the perceived value and usefulness of SafeCare. Kramer and Burns (2008) recruited and interviewed clinicians providing usual care and clinicians who dropped out of a study prior to consent to contrast with clinicians who provided the intervention under investigation. One study (Hoagwood et al., 2007) used a typical case approach to identify participants for a qualitative assessment of the challenges faced in implementing a trauma-focused intervention for youth. One study (Green & Aarons, 2011) used a combined snowball sampling/criterion-i strategy by asking recruited program managers to identify clinicians, administrative support staff, and consumers for project recruitment. County mental health directors, agency directors, and program managers were recruited to represent the policy interests of implementation, while clinicians, administrative support staff, and consumers were recruited to represent the direct practice perspectives of EBP implementation.
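The contrast-based selection described for the intensity sampling studies can be sketched as follows. This is a minimal illustration of choosing interviewees from the two ends of a survey score distribution; it is not the procedure reported by Aarons and Palinkas (2007), and the participant identifiers, the scores, and the number drawn from each end are all hypothetical.

```python
def contrast_sample(scores, n_low, n_high):
    """Return (lowest-scoring ids, highest-scoring ids) from a survey.

    `scores` maps a participant id to a numeric attitude score, where a
    higher score means a more positive view of the intervention.
    """
    if n_low + n_high > len(scores):
        raise ValueError("requested more participants than were surveyed")
    ranked = sorted(scores.items(), key=lambda item: item[1])
    low = [pid for pid, _ in ranked[:n_low]]
    high = [pid for pid, _ in ranked[len(ranked) - n_high:]]
    return low, high


# Hypothetical survey results: perceived usefulness on a 1-5 scale.
survey_scores = {
    "cm01": 4.6,
    "cm02": 1.8,
    "cm03": 3.1,
    "cm04": 2.2,
    "cm05": 4.9,
    "cm06": 3.4,
}

most_negative, most_positive = contrast_sample(survey_scores, n_low=2, n_high=2)
print(most_negative)
print(most_positive)
```

Participants in the middle of the distribution are left out on purpose, since the aim of this design is contrast rather than representativeness.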

Table 2 below provides a description of the use of different purposeful sampling strategies in mixed methods implementation studies. Criterion-i sampling was most frequently used in mixed methods implementation studies that employed a simultaneous design where the qualitative method was secondary to the quantitative method or studies that employed a simultaneous structure where the qualitative and quantitative methods were assigned equal priority. These mixed method designs were used to complement the depth of understanding afforded by the qualitative methods with the breadth of understanding afforded by the quantitative methods (n = 13), to explain or elaborate upon the findings of one set of methods (usually quantitative) with the findings from the other set of methods (n = 10), or to seek convergence through triangulation of results or quantifying qualitative data (n = 8). The process of mixing methods in the large majority (n = 18) of these studies involved embedding the qualitative study within the larger quantitative study. In one study (Goia & Dziadosz, 2008), criterion sampling was used in a simultaneous design where quantitative and qualitative data were merged together in a complementary fashion, and in two studies (Aarons et al., 2012; Zazelli et al., 2008), quantitative and qualitative data were connected together, one in a sequential design for the purpose of developing a conceptual model (Zazelli et al., 2008), and one in a simultaneous design for the purpose of complementing one another (Aarons et al., 2012). Three of the six studies that used maximum variation sampling used a simultaneous structure with quantitative methods taking priority over qualitative methods and a process of embedding the qualitative methods in a larger quantitative study (Henke et al., 2008; Palinkas et al., 2010; Slade et al., 2008). Two of the six studies used maximum variation sampling in a sequential design (Aarons et al., 2009; Zazelli et al., 2008) and one in a simultaneous design (Henke et al., 2010) for the purpose of development, and three used it in a simultaneous design for complementarity (Bachman et al., 2009; Henke et al., 2008; Palinkas, Ell, Hansen, Cabassa, & Wells, 2011). The two studies relying upon intensity sampling used a simultaneous structure for the purpose of either convergence or expansion, and both studies involved a qualitative study embedded in a larger quantitative study (Aarons & Palinkas, 2007; Kramer & Burns, 2008). The single typical case study involved a simultaneous design where the qualitative study was embedded in a larger quantitative study for the purpose of complementarity (Hoagwood et al., 2007). The snowball/maximum variation study involved a sequential design where the qualitative study was merged into the quantitative data for the purpose of convergence and conceptual model development (Green & Aarons, 2011). Although not used in any of the 28 implementation studies examined here, another common sequential sampling strategy is using criterion sampling of the larger quantitative sample to produce a second-stage qualitative sample in a manner similar to maximum variation sampling, except that the former narrows the range of variation while the latter expands the range.
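As a rough sketch of that sequential strategy, the example below filters a first-stage quantitative sample down to a second-stage qualitative sample using a criterion. The `Respondent` fields, the `fidelity_score` measure, the role labels, and the cut-off of 4.0 are hypothetical and chosen only to show the mechanics.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Respondent:
    respondent_id: str
    role: str
    fidelity_score: float  # hypothetical survey measure on a 1-5 scale


def criterion_sample(respondents, roles, min_score):
    """Keep respondents who hold one of `roles` and score at least `min_score`."""
    return [
        r for r in respondents
        if r.role in roles and r.fidelity_score >= min_score
    ]


# First stage: a (hypothetical) quantitative survey sample.
survey = [
    Respondent("r1", "clinician", 4.5),
    Respondent("r2", "program director", 4.8),
    Respondent("r3", "clinician", 2.1),
    Respondent("r4", "clinician", 4.1),
    Respondent("r5", "clinical supervisor", 3.9),
]

# Second stage: clinicians meeting the criterion are invited to interview.
interviewees = criterion_sample(survey, roles={"clinician"}, min_score=4.0)
print([r.respondent_id for r in interviewees])
```

Because every selected respondent satisfies the same criterion, the spread of scores among interviewees is smaller than in the full survey sample, which is the narrowing effect noted above.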

Table 2. Purposeful sampling strategies and mixed method designs in implementation research