Artificial Intelligence in Education: A Review


A not-for-profit organization, IEEE is the world's largest technical professional organization dedicated to advancing technology for the benefit of humanity. © Copyright 2024 IEEE - All rights reserved. Use of this web site signifies your agreement to the terms and conditions.



Authors: Lijia Chen, Pingping Chen, and Zhijian Lin

Published in IEEE Xplore 17 April 2020

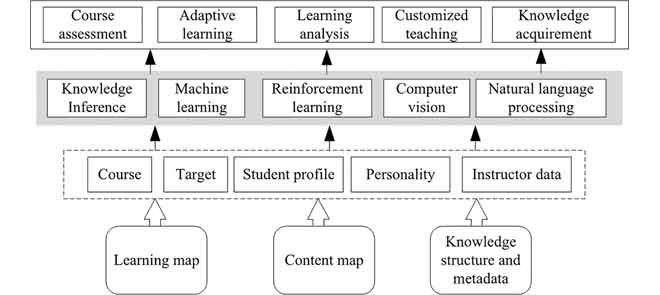

The purpose of this study was to assess the impact of Artificial Intelligence (AI) on education. Premised on a narrative and framework for assessing AI identified from a preliminary analysis, the scope of the study was limited to the application and effects of AI in administration, instruction, and learning. A qualitative research approach, using a literature review as the research design, was adopted and enabled the study to achieve its purpose. Artificial intelligence is a field of study, together with the innovations and developments resulting from it, that has culminated in computers, machines, and other artifacts having human-like intelligence characterized by cognitive abilities, learning, adaptability, and decision-making capabilities. The study ascertained that AI has been extensively adopted and used in education, particularly by education institutions, in different forms. AI initially took the form of computers and computer-related technologies, then transitioned to web-based and online intelligent education systems, and ultimately, through embedded computer systems combined with other technologies, to humanoid robots and web-based chatbots that perform instructors' duties and functions either independently or alongside instructors. Using these platforms, instructors have been able to perform administrative functions, such as reviewing and grading students' assignments, more effectively and efficiently, and to achieve higher quality in their teaching activities. In addition, because the systems leverage machine learning and adaptability, curriculum and content have been customized and personalized in line with students' needs, which has fostered uptake and retention, thereby improving learners' experience and the overall quality of learning.


At a Glance

- Journal: IEEE Access

- Format: Open Access

- Frequency: Continuous

- Submission to Publication: 4-6 weeks (typical)

- Topics: All topics in IEEE

- Average Acceptance Rate: 27%

- Impact Factor: 3.9

- Model: Binary Peer Review

- Article Processing Charge: US $1,995

Featured Articles

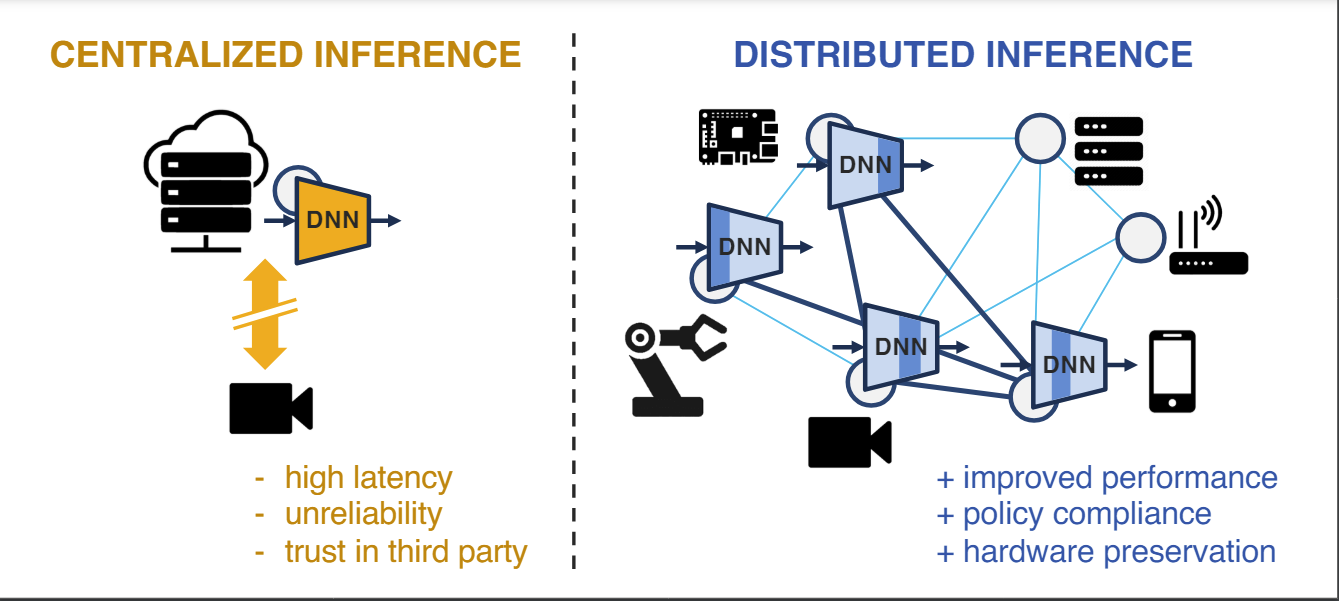

DNN Partitioning for Inference Throughput Acceleration at the Edge

View in IEEE Xplore

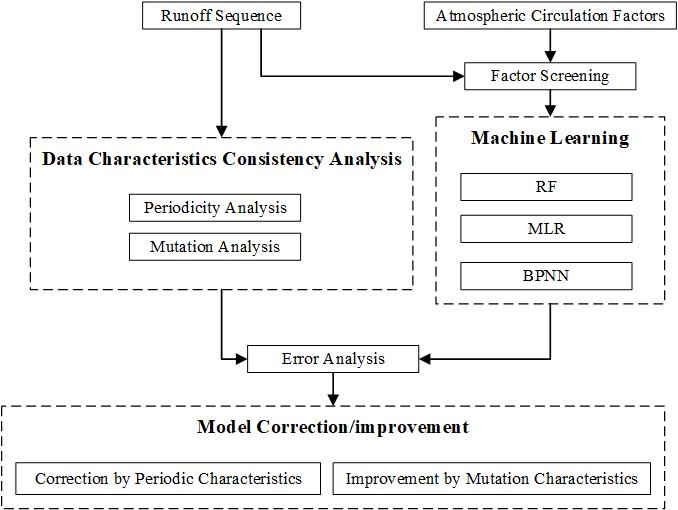

Effect of Data Characteristics Inconsistency on Medium and Long-Term Runoff Forecasting by Machine Learning

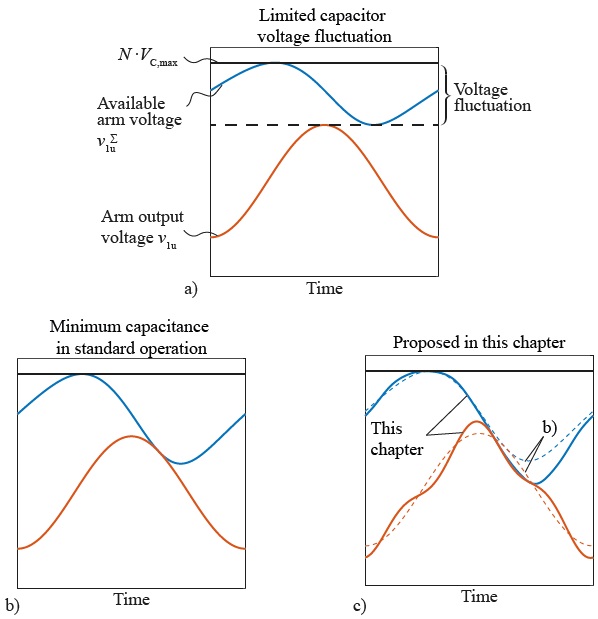

Reducing Losses and Energy Storage Requirements of Modular Multilevel Converters With Optimal Harmonic Injection

Submission guidelines.

© 2024 IEEE - All rights reserved. Use of this website signifies your agreement to the IEEE TERMS AND CONDITIONS.

A not-for-profit organization, IEEE is the world’s largest technical professional organization dedicated to advancing technology for the benefit of humanity.


Artificial Intelligence in Education: A Panoramic Review

K Ahmad, J Qadir, A Al-Fuqaha, W Iqbal, M Ayyash


Motivated by the importance of education in an individual's and a society's development, researchers have been exploring the use of Artificial Intelligence (AI) in the domain and have come up with myriad potential applications. This paper pays particular attention to this issue by highlighting the future scope and market opportunities for AI in education, the existing tools deployed across its applications, research trends, and the current limitations and pitfalls of AI in education. In particular, the paper reviews the various applications of AI in education, including student grading and evaluation, student retention and dropout prediction, sentiment analysis, intelligent tutoring, classroom monitoring, and recommendation systems. The paper also provides a detailed bibliometric analysis to highlight the research trends in the domain over six years (2014–2019). For this study, we analyze research publications in various related sub-domains such as learning analytics, educational data mining (EDM), and big data in education. The paper analyzes educational applications from different perspectives. On the one hand, it provides a detailed description of the tools and platforms developed as the outcome of the research work in these applications. On the other hand, it identifies the potential challenges, current limitations, and hints for further improvement. We also provide important insights into the use and pitfalls of AI in education. We believe such rigorous analysis will provide a baseline for future research in the domain.

10.35542/osf.io/zvu2n


London Review of Education


The use of AI in education: Practicalities and ethical considerations


There is a wide diversity of views on the potential for artificial intelligence (AI), ranging from overenthusiastic pronouncements about how it is imminently going to transform our lives to alarmist predictions about how it is going to cause everything from mass unemployment to the destruction of life as we know it. In this article, I look at the practicalities of AI in education and at the attendant ethical issues it raises. My key conclusion is that AI in the near- to medium-term future has the potential to enrich student learning and complement the work of (human) teachers without dispensing with them. In addition, AI should increasingly enable such traditional divides as ‘school versus home’ to be straddled with regard to learning. AI offers the hope of increasing personalization in education, but it is accompanied by risks of learning becoming less social. There is much that we can learn from previous introductions of new technologies in school to help maximize the likelihood that AI can help students both to flourish and to learn powerful knowledge. Looking further ahead, AI has the potential to be transformative in education, and it may be that such benefits will first be seen for students with special educational needs. This is to be welcomed.


Introduction

The use of computers in education has a history of several decades – with somewhat mixed consequences. Computers have not always helped deliver the results their proponents envisaged (McFarlane, 2019). In their review, Baker et al. (2019) found that examples of educational technology that succeeded in achieving impact at scale and making a desired difference to school systems as a whole (beyond the particular context of a small number of schools) are rarer than might be supposed. More positively, Baker et al. (2019) examined nine examples – three in Italy, three in the rest of Europe and three in the rest of the world – where technology is having beneficial impacts for large numbers of learners. One of the examples is the partnership between the Lemann Foundation and the Khan Academy in Brazil; this has been running since 2012 and has resulted in millions of students registering on the Khan Academy platform. The context is that in most Brazilian schools, students attend for just one of three daily sessions, only receiving about four hours of teaching a day. Evaluations of this partnership have been positive, for example, showing increased mathematics attainment compared to controls (Fundação Lemann, 2018).

Nowadays, talk of artificial intelligence (AI) is widespread – and there have been both overenthusiastic pronouncements about how it is imminently going to transform our lives, particularly for learners (for example, Seldon with Abidoye, 2018), and dire predictions about how it is going to cause everything from mass unemployment to the destruction of life as we know it (for example, Bostrom, 2014).

Precisely what is meant by AI is itself somewhat contentious (Wilks, 2019). To a biologist such as myself, intelligence is not restricted to humans. Indeed, there is an entire academic field, animal cognition, devoted to the study of the mental capacities of non-human animals, including their intelligence (Reader et al., 2011). Members of the species Homo sapiens are the products of something like four thousand million years of evolution. Unless one is a creationist, humans are descended from inorganic matter. If yesterday's inorganic matter gave rise to today's humans, it hardly seems remarkable that humans, acting intentionally, should be able to manufacture inorganic entities with at least the rudiments of intelligence. After all, even single-celled organisms show apparent purposiveness in their lives as they move, using information from chemical gradients, to places where they are more likely to obtain food (or the building blocks of food) and are less likely themselves to be consumed (Cooper, 2000).

Without endorsing the Scala Naturae, still less the Great Chain of Being, it is clear that many species have their own intelligence. This is most obvious to us in the other great apes – gorillas, bonobos, chimpanzees and orangutans – but evolutionary biologists and some philosophers are wary of binary classifications (humans versus all other species, or great apes versus all other species), preferring to see intelligence as an emergent property found in different manifestations and to varying extents (Spencer-Smith, 1995; Kaplan and Robson, 2002). For example, some species have much better spatial memories than we do: in bird species such as chickadees, tits, jays, nutcrackers and nuthatches, individuals scatter-hoard nuts and other edible items as food stores, sometimes thousands of them, each in a different location (Crystal and Shettleworth, 1994). Their memories allow them to retrieve the great majority of such items, sometimes many months later.

None of this is to diminish the exceptional and distinctive nature of human intelligence. To give just one example, the way that we use language, while clearly related to the simple modes of communication used by non-human animals, is of a different order (Scruton, 2017). From birth, before we begin to learn from our parents and others, we have – without going into the nature–nurture debate in detail – an innate capacity to relate to others and to take in information (Nicoglou, 2018). As the newborn infant takes in this information, it begins to process it, just as it takes in milk and emulates walking. As has long been noted, 4-year-olds can do things (recognize faces, manifest a theory of mind, use conditional probabilistic reasoning) that even the most sophisticated AI struggles to do. Furthermore, it is the same 4-year-old who does all this, whereas we still employ different AI systems to cope (or attempt to cope) with each of these tasks, highlighting the point that AI is still quite narrow, whereas human cognition is far broader in comparison (Boden, 2016).

There is no need here to get into a detailed discussion about the relationship between robots and AI – although there are interesting questions about the extent to which the materiality that robots possess, and that software lacks, makes or will make a difference to the capacity to manifest high levels of intelligence (Reiss, 2020). It is worth noting that our criteria for AI seem to change over time (see Wang, 2019). Every time there is a substantial advance in machine performance, the bar for ‘true AI’ gets raised. The reality is that there are now not only machines that can play games such as chess and go better than any of us can, but also machines (admittedly not the same machines) that can make certain diagnoses (for example, breast cancer, diabetic retinopathy) at least as accurately as experienced doctors.

It should be remembered, however, that within every AI system there are the fruits of countless hours of human thinking. When AlphaGo beat 18-times world go champion Lee Sedol in 2016 by four games to one, in a sense it was not AlphaGo alone but also all the programmers and go experts who worked to produce the software. Indeed, the same point holds for all technologies and all human activities. Human intelligence, demonstrated through such things as teaching the next generation and the invention of long-lasting records (writing, for example), has meant that the abilities manifested by each of us or our products (such as software) are the results of a long history of human thought and action.

There are endless debates as to whether or not machines can yet pass the Turing test. The reality is that the internet is filled with bots that regularly convince humans that they are other humans (Ishowo-Oloko et al., 2019). Some of the saddest instances are the bots that appear on dating websites. Worryingly, the standard advice as to how to spot them (messages look scripted, grammar is poor, they ask for money, they respond too rapidly) will presumably soon become dated as technology ‘improves’ and would also disqualify quite a few humans.

So, AI is here – it is already making a huge impact on almost every aspect of manufacturing, and there are sensible predictions that it will be used increasingly in a large number of professions, including medicine, law and social care (Frey and Osborne, 2013; POST, 2018). What are its effects likely to be in education, and should we welcome it or not?

AI and its use in non-teaching aspects of education

The main concern of this article is with the use of AI for teaching. However, schools are complex organizations and there is little doubt that AI will play an increasing role in what might be termed the non-teaching aspects of education. Some of these have little or nothing to do with the classroom teacher – for example, the allocation of students to schools in places where such decisions are still made outside individual schools, improved recruitment procedures for teachers and other staff, better procurement systems for materials used in schools and more accurate registration of students. Other aspects do involve the teacher – for example, improved design and marking of terminal assessments, more valid provision of information about students to their parents/guardians (reports) and so on. The importance of these for the lives of teachers should not be underestimated. Many teachers would be delighted if AI could reduce what they often characterize as bureaucracy that wears them down (see, for example, Towers, 2017; Skinner et al., 2019).

A range of software tools to help with some of these aspects of school life already exists – for example, for timetabling (FET, Lantiv Timetabler, among others) – and there is a burgeoning market for the development of AI for assessment purposes by Pearson and other commercial organizations (Jiao and Lissitz, 2020). Obviously, automated systems can be used (and have been for many years) for ‘objective marking’ (as in a multiple choice test). The deeper questions concern their efficacy, and the occurrence of any unintended consequences, when automated systems are used for more open-ended assignments. The research literature is cautiously optimistic, for both summative and formative assessment purposes (for example, Shute and Rahimi, 2017; van Groen and Eggen, 2020). At the same time, it should not be presumed that the use of AI for such purposes will necessarily be unproblematic. Enough is now known about bias in AI (for example, unintended racial profiling) for us to be cautious (Burbidge et al., 2020).

Some of the benefits that schools can provide for students are not covered by the term ‘teaching’, and AI may prove useful here. For example, a number of schools in England, both independent and state, are using an AI tool which is designed to predict self-harm, drug abuse and eating disorders. It has been claimed that this is already decreasing self-harm incidents (Manthorpe, 2019), although Carly Kind, Director of the Ada Lovelace Institute (a research and deliberative body with a mission to ensure that data and AI work for people and society), points out that ‘With these types of technologies there is a concern that they are implemented for one reason and later used for other reasons’ (Manthorpe, 2019).

AI and the personalization of education

Some of the claims made for AI in education are extremely unlikely to be realized. For example, Nikolas Kairinos, founder and CEO of Fountech.ai, has been quoted as saying that within 20 years, our heads will be boosted with special implants, so ‘you won’t need to memorise anything’ (White, 2019). The reasons why this is unlikely ever to be the case, let alone within 20 years, are discussed by Aldridge (2018), who examines the possibility of such knowledge ‘insertion’ (see Gibson, 1984). Aldridge (2018) draws on a phenomenological account of knowledge to reject such a possibility. Puddifoot and O’Donnell (2018) argue that too great a reliance on technologies to store information for us – information that in previous times we would have had to remember – may be counterproductive, resulting in missed opportunities for the memory systems of students to form abstractions and generate insights from newly learned information.

Moving to a more conceivable, although still very optimistic, instance of the potential of AI for education, Anthony Seldon writes:

Two of the most important variants are the quality of teaching and class sizes. In proverbial terms, AI offers the prospect of ‘an Eton quality teacher for all’. Class sizes for those children fortunate enough to attend a school will be reduced from 30 or more, where the individual student’s needs are often lost, down to 1 on 1 instruction. Students will still be grouped into classes which may well have 10, 20, 30 or more children in them, but each student will enjoy a personalised learning programme. They will spend part of the day in front of a screen or headsets, and in time a surface on to which a hologram will be projected. There will be little need for stand-alone robots for teaching itself. The ‘face’ on the screen or hologram will be that of an individualised teacher, which will know the mind of the student, and will deliver lessons individually to them, moving at the student’s optimal pace, know how to motivate them, understand when they are tired or distracted and be adept at bringing them back onto task. The ‘teacher’ themselves will be as effective as the most gifted teacher in any class in any school in the world, with the added benefit of having a finely honed understanding of each student, their learning difficulties and psychologies whose accumulated knowledge will not evaporate at the end of the school year. (Seldon with Abidoye, 2018: Chapter 9: 2)

For all that this passage seems to have been written in a rush (‘in front of a screen or headsets’, ‘on to which’, ‘onto task’), it is worth examining, both because it manifests some of the hyperbole that attends AI in education and because it is written by someone who is not only a vice chancellor of a university and a former headteacher, but also (according to his website, www.anthonyseldon.co.uk) one of Britain’s leading educationalists.

I agree with Seldon that personalization of teaching is likely to be one of the principal benefits of AI in education, but I do not have quite the unbounded enthusiasm for one-to-one teaching of school students that he does. There are times when one-to-one teaching is ideal – indeed, most of my own teaching since I took up my present post in 2001 has been one-to-one (doctoral students). However, there are two principal reasons why one-to-one teaching, on its own, is less ideal for younger students – one is concerned with the nature of what is to be learnt; the other is concerned with how it is to be learnt (see Baines et al., 2007). With younger students, quite a high proportion of what is to be learnt is not distinctive to the learner, in contrast to doctoral teaching, where most of it is. When what is to be learnt is common to a number of learners, they can learn from each other, as well as from the official teacher. When I spent quite a bit of time giving one-to-one tutorials in mathematics to teenagers desperately trying to pass their school examinations, the experience convinced me that, while there is much to be said for one-to-one tuition, there is also a vital role for group discussion. Indeed, there is no reason to pit AI and group learning in opposition: the two can complement one another (Bursali and Yilmaz, 2019).

Then there is the fact that Seldon seems to have an interesting notion of quality school teaching, in which the teacher does not need to have any individualized knowledge of their students: ‘The “teacher” themselves will be as effective as the most gifted teacher in any class in any school in the world, with the added benefit of having a finely honed understanding of each student’ (Seldon with Abidoye, 2018: Chapter 9: 2, my emphasis). This seems to be an extreme version of transmission (‘banking’) education (Freire, 2017), in which what is to be taught is independent of the learner. Freire argued that it was this notion of transmission education that prevents critical thinking (‘conscientization’) and so enables oppression to continue. A naive assumption that AI can be ‘efficient’ by enabling learners to learn rapidly could therefore lead to the same lack of criticality and ownership of their learning.

I am also a bit more sceptical than Seldon about the presumption that ‘The “face” on the screen or hologram will … know how to motivate them’ (Seldon with Abidoye, 2018: Chapter 9: 2). Perhaps he and I taught in rather different sorts of schools, but my memory of my schoolteaching days was that motivation was all too often about using every ounce of my social skills to know when to be firm and when to banter, when to stay on task and when to make a leap from the subject matter at hand to aspects of the lives of my students (see Wentzel and Miele, 2016). It is not impossible that AI could manage this – but I suspect that this will be a very considerable time in the future.

There is also a somewhat disembodied model of teaching apparent in Seldon’s vision (‘The “face” on the screen or hologram’). To a certain extent, this may work better for some subjects (such as mathematics) than others. As a science teacher, I suspect that the actuality of some ‘thing’ (I grant that this could in principle be a robot) moving around the classroom or school laboratory, interacting with students as it teaches, particularly during practical activities, is valuable (see Abrahams and Reiss, 2012). I also note that there is a growing literature – some, but not all, of it centred on science education – on the importance of gesture and other material manifestations of the teacher (for example, Kress et al., 2001; Roth, 2010).

Finally, the present reality of any learning innovation that makes use of technology, including AI, is that one of its first effects is to widen inequalities, particularly those based on financial capital, but often also with respect to other variables such as gender and geography (for example, differential access to broadband in rural versus urban communities) (Ansong et al., 2020). In addition, for all that AI may promise increasing personalization, Selwyn (2017) points out that digital provision often results in ‘more of the same’. Furthermore, such digital provision is accompanied by increasing commercialization:

Technology is already allowing big businesses and for-profit organisations to provide education, and this trend will increase over the next fifty years. Whatever companies are the equivalent of Pearson and Kaplan in 2065 will be running schools, and we will not think twice about it. (Selwyn, 2017: 178–9)

Nevertheless, personalization does seem likely to represent a major route by which AI will be influential in education. I remember designing, with colleagues (Angela Hall took the lead, with Anne Scott and myself supporting her), software packages (‘interactive tutorials’) for 16–19-year-old biology students in 2002–3 (Hall et al., 2003). The key point of these packages was that, depending on students’ responses to early questions, the students were directed along different paths, in an attempt to ensure that the material with which they were presented was personally suitable. By today’s standards, the packages would seem rather clunky, but they constituted an early version of personalization (that is, ‘differentiation’).

Neil Selwyn (2019) traces this approach back to the beginnings of computer-aided instruction in the 1960s. Many of the systems are based on a ‘mastery’ approach (as in many computer games), where one only progresses to the next level having succeeded at the present one. Selwyn is generally regarded as something of a sceptic about many of the claims for computers in education, so his comment that ‘these software tutors are certainly seen to be as good as the teaching that most people are likely to experience in their lifetime’ (Selwyn, 2019: 56) is notable.
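The mastery rule described above can be expressed in a few lines of Python. This is a minimal sketch under stated assumptions, not a description of any particular tutoring system: the function name `next_level` and the 80 per cent pass mark are both hypothetical, chosen only to make the rule concrete.

```python
# Hypothetical pass mark: the fraction of items a learner must answer
# correctly at the current level before being allowed to move on.
MASTERY_THRESHOLD = 0.8


def next_level(current_level: int, correct: int, attempted: int) -> int:
    """Return the level the learner should work on next.

    Under the mastery rule, the learner advances only after succeeding at
    the present level; otherwise they remain at it and try again.
    """
    if attempted <= 0:
        raise ValueError("attempted must be a positive number of items")
    if not 0 <= correct <= attempted:
        raise ValueError("correct must lie between 0 and attempted")
    score = correct / attempted
    return current_level + 1 if score >= MASTERY_THRESHOLD else current_level


# Usage: 9 out of 10 at level 3 unlocks level 4; 6 out of 10 means another attempt.
print(next_level(3, 9, 10))  # 4
print(next_level(3, 6, 10))  # 3
```

The single threshold keeps the sketch simple; a real system might also weight recent attempts more heavily or require success on several separate occasions before moving a learner on.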

As these systems improve – not least as a result of machine learning, as well as increases in processing capacity – it seems likely that their value in education will increase considerably. For example, the Chinese company Squirrel (which attained ‘unicorn’ status at the end of 2018, with a valuation of US$1 billion) has teams of engineers that break down the subjects it teaches into the smallest possible conceptual units. Middle school mathematics, for example, is broken into a large number of atomic elements or ‘knowledge points’ (Hao, 2019). Once the knowledge points have been determined, how they build on and relate to one another is encoded in a ‘knowledge graph’. Video lectures, notes, worked examples and practice problems are then used to help teach knowledge points through software – Squirrel students do not meet any human teachers:

A student begins a course of study with a short diagnostic test to assess how well she understands key concepts. If she correctly answers an early question, the system will assume she knows related concepts and skip ahead. Within 10 questions, the system has a rough sketch of what she needs to work on, and uses it to build a curriculum. As she studies, the system updates its model of her understanding and adjusts the curriculum accordingly. As more students use the system, it spots previously unrealized connections between concepts. The machine-learning algorithms then update the relationships in the knowledge graph to take these new connections into account. (Hao, 2019: n.p.)
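The diagnostic process Hao describes can be approximated, very roughly, in Python. This sketch assumes a small hypothetical knowledge graph for early algebra and a deliberately simple inference rule (a correct answer is taken to show that a knowledge point and all of its prerequisites are known). It is not Squirrel’s actual method, and it omits the machine-learning step in which the graph itself is revised from many students’ data. It uses the standard-library `graphlib` module (Python 3.9 or later) to order the remaining material so that prerequisites always come first.

```python
from graphlib import TopologicalSorter

# Hypothetical knowledge graph: each knowledge point maps to the points
# that must be understood before it (its prerequisites).
PREREQS: dict[str, list[str]] = {
    "fractions": [],
    "negative numbers": [],
    "linear expressions": ["negative numbers"],
    "linear equations": ["linear expressions", "fractions"],
    "simultaneous equations": ["linear equations"],
}


def prerequisites_of(point: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every point that `point` depends on, directly or indirectly."""
    found: set[str] = set()
    stack = list(graph[point])
    while stack:
        p = stack.pop()
        if p not in found:
            found.add(p)
            stack.extend(graph[p])
    return found


def diagnose(responses: dict[str, bool], graph: dict[str, list[str]]) -> set[str]:
    """Infer which points are already known from a short diagnostic test.

    A correct answer is taken as evidence that the point and all of its
    prerequisites are known, so the system can skip ahead past them.
    Incorrect answers add nothing to the known set in this simplified sketch.
    """
    known: set[str] = set()
    for point, correct in responses.items():
        if correct:
            known.add(point)
            known |= prerequisites_of(point, graph)
    return known


def build_curriculum(known: set[str], graph: dict[str, list[str]]) -> list[str]:
    """List the points still to be taught, prerequisites always first."""
    order = TopologicalSorter(graph).static_order()
    return [p for p in order if p not in known]


# Usage: right on linear expressions, wrong on linear equations.
responses = {"linear expressions": True, "linear equations": False}
known = diagnose(responses, PREREQS)
print(build_curriculum(known, PREREQS))
# ['fractions', 'linear equations', 'simultaneous equations']
```

In this toy example, two answers are enough to skip two knowledge points; with a graph of thousands of points, the same kind of inference is what would let a short diagnostic test sketch out a curriculum quickly.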

What remains unclear is the extent to which such systems will replace teachers. I suspect that what is more likely is that in schools they will increasingly be seen as another pedagogical instrument that is useful to teachers. One area where AI is likely to prove of increasing value is the provision of ‘real-time’ (‘just-in-time’) formative assessment. Luckin et al. (2016: 35) envisage that ‘AIEd [Artificial Intelligence in Education] will enable learning analytics to identify changes in learner confidence and motivation while learning a foreign language, say, or a tricky equation’. Indeed, while some students will no doubt respond better to humans as teachers, there is considerable anecdotal evidence that some prefer software – after all, software is available for us whenever we want it, and it does not get irritated if we take far longer than most students to get to grips with simultaneous equations, the causes of the First World War or irregular French verbs.

It has also been suggested that AI will lead to a time when there is no (well, let us say ‘less’) need for terminal assessment in education, on the grounds that such assessment provides just a snapshot, and typically covers only a small proportion of a curriculum, whereas AI has far more relevant data to hand. It is a bit like very high-quality teacher assessment, but without the problem that teachers often find it difficult to be dispassionate in their assessments of students that they have taught and know.

I will return to the issue of personalized learning in the section on ‘Special educational needs’ below.

AI and the home–school divide in education

Traditionally, schools are places to which adults send children for whom they are responsible, so that the children can learn. One not infrequently reads denouncements of schools on the grounds that their selection of subjects and their model of learning date mainly from the nineteenth century and are outdated for today’s societies (see, for example, White, 2003). Even in the case of science, where there have clearly been substantial changes in what we know about the material world, changes in how science is taught in schools over the last hundred years have been modest (see, for example, Jenkins, 2019). Furthermore, science courses typically assume that there is little or no valid knowledge of the subject that children can learn away from school. Outside-the-classroom learning is generally viewed as a source of misconceptions more than anything else.

Nowadays, however, and even without the benefits of AI, there is a range of ways of learning science away from school. For example, when I type ‘learning astronomy’ into Google, I get a wonderful array of websites. I remember the satisfaction I felt when, in about 2004, a student who was studying three A levels, including an A-level biology course for 16–18-year-olds that I helped develop (Salters-Nuffield Advanced Biology), fell ill and had to spend two terms (eight months) away from school. She was able to continue with her biology course because of its large online component, whereas she had to give up her other two A levels. It seems clear that one of the things that AI in education will do is help to break down the home–school divide in education. The implications for schooling may be profound – for all that a cynical analysis might conclude that schools provide a relatively affordable child-minding system while both parents go out to work.

Having said that, the near-worldwide disruption to conventional schooling caused by COVID-19, including the widespread closure of schools, shows how far any distance-learning educational technology is from supplanting humans, as millions of harassed parents, carers and teachers doing their best at a distance can vouch. Even when the technology works perfectly (and is not overloaded), and there has been plenty of time to prepare, home schooling is demanding (Lees, 2013).

Nor should it be presumed that learners away from school must necessarily work on their own. Most of us are already familiar with online forums that permit (near) real-time conversations. Luckin et al. (2016) argue that AI can be used to facilitate high-quality collaborative learning. For instance, AI can bring together (virtually) individuals with complementary knowledge and skills, and it can identify effective collaborative problem-solving strategies, mediate online student interactions, moderate groups and summarize group discussions.

Ethical issues of AI in education

The aims of education

The use of AI to facilitate learning emphasizes the need to look fundamentally at the aims of education. With John White (Reiss and White, 2013), I have argued that education should aim to promote flourishing – principally human flourishing, although a broader application of the concept would widen the notion to the non-human environment. Such a broadening is especially important at a time when there is increasing realization of the accelerating impact that our species is having on habitat destruction, global climate change and the extinction of species.

Establishing that human flourishing is the aim of education does not contradict the aim of enabling students to acquire powerful knowledge (Young, 2008) – the sort of knowledge that, in the absence of schools, students would not learn – but it is not to be equated with it. Human flourishing is a broader conceptualization of the aim of education (Reiss, 2018). The argument that education should promote human flourishing begins with the assertion that this aim has two sub-aims: to enable each learner to lead a life that is personally flourishing and to enable each learner to help others lead such lives too. Specifically, it can be argued that a central aim of a school should be to prepare students for a life of autonomous, wholehearted and successful engagement in worthwhile relationships, activities and experiences. This aim involves acquainting students with possible options from which to choose, although it needs to be recognized that students vary in the extent to which they are able to make such ‘choices’. With students’ development towards autonomous adulthood in mind, schools should provide their students with increasing opportunities to decide between the pursuits that best suit them. Young children are likely to need greater guidance from their teachers, just as they do from their parents. Part of the function of schooling, and indeed parenting, is to prepare children for the time when they will need, and be able, to make decisions more independently.

The idea that humans should (can) lead flourishing lives is among the oldest of ethical principles, one that is emphasized particularly by Aristotle in his Nicomachean Ethics and Politics. There are many accounts as to what precisely constitutes a flourishing life. A Benthamite hedonist sees it in terms of maximizing pleasurable feelings and minimizing painful ones. More everyday perspectives may tie it to wealth, fame, consumption or, more generally, satisfying one’s major desires, whatever these may be. There are difficulties with all of these accounts. For example, a problem with desire satisfaction is that it allows ways of life that virtually all of us would deny were flourishing – a life wholly devoted to tidying one’s bedroom, for instance.

A richer conceptualization of flourishing in an educational context is provided by the concept of Bildung. This German term refers to a process of maturation in which individuals grow so that they develop their identity and, without surrendering their individuality, learn to be members of society. The extensive literary tradition of the Bildungsroman (sometimes described in English as the ‘coming-of-age’ story), in which an individual grows psychologically and morally from youth to adulthood, illustrates the concept (examples include Candide, The Red and the Black, Jane Eyre, Great Expectations, Sons and Lovers, A Portrait of the Artist as a Young Man and The Magic Mountain).

The relevance of this for a future where AI plays an increasing role in education is that, while any teacher needs to reflect on their aims, there is a greater risk of such reflection not taking place when the teacher lacks self-awareness and the capacity for reflexivity and questioning, as is currently manifestly the case when AI provides the teaching. Furthermore, given the emphasis to date on subjects such as mathematics in computer-based learning, there is a danger that AI education systems will focus on a narrow conceptualization of education in which the acquisition of knowledge or a narrow set of skills comes to dominate. Even without presuming a Dead Poets Society view of the subject, it is likely to be harder to devise AI packages to teach literature well than to teach physics. Looking across the curriculum, we want students to become informed and active citizens. This means encouraging them to take an interest in political affairs at local, national and global levels from the standpoint of a concern for the general good, and to do this with due regard to values such as freedom, individual autonomy, equal consideration and cooperation. Young people also need to possess whatever sorts of understanding these dispositions entail, for example, an understanding of the nature of democracy, of divergences of opinion about it and of its application to the circumstances of their own society (Reiss, 2018).

The possible effect of AI on the lives of teachers and teaching assistants

It is not only students whose lives will increasingly be affected by the use of AI in education. It is difficult to predict what the consequences will be for (human) teachers. It might be that AI leads to more motivated students – something that just about every teacher wants, if only because it means they can spend less time and effort on classroom management issues and more on enabling learning. On the other hand, the same concerns I discuss below about student tracking – with risks to privacy and an increasing culture of surveillance – might apply to teachers too. There was a time when a classroom was a teacher’s sanctuary. The walls have already got thinner, but with increasing data on student performance and attainment, teachers may find that they are observed as much as their students. Even if it transpires that AI has little or no effect on the number of teachers who are needed, teaching might become an even more stressful occupation than it is already.

The position of teaching assistants seems more precarious than that of teachers. In a landmark study that evaluated a major expansion of teaching assistants in classrooms in England – an expansion costed at about £1 billion – Blatchford et al. (2012) reached the surprising conclusion, well supported by statistical analysis, that children who received the most support from teaching assistants made significantly less progress in their learning than did similar children who received less support. Much subsequent work has been undertaken which demonstrates that this finding can be reversed if teaching assistants are given careful support and training (Webster et al., 2013). Nevertheless, the arguments as to why large numbers of teaching assistants will be needed in an AI future seem shakier than the arguments as to why large numbers of teachers will still be needed.

Special educational needs

The potential for AI to tailor the educational offer more precisely to a student’s needs and wishes (the ‘personalization’ argument considered above) should prove to have special benefits for students with special educational needs (SEN) – a broad category that includes attention deficit hyperactivity disorder, autistic spectrum disorder, dyslexia, dyscalculia and specific language impairment, as well as such poorly defined categories as moderate learning difficulties and profound and multiple learning disabilities (see Astle et al., 2019). If we consider a typical class with, say, 25 students, almost by definition, SEN students are likely to find that a smaller percentage of any lesson is directly relevant to them compared to other students. This point, of course, holds as well for students sometimes described as gifted and talented (G&T) as for students who find learning (either in general or for a particular subject) much harder than most, taking substantially longer to make progress.

To clarify, for all that some school students may require a binary determination as to whether they are SEN or not, or G&T or not, in reality these are not dichotomous variables – they lie on continua. Indeed, one of the advantages of the use of AI is precisely that it need not make the sort of crude classifications that conventional education sometimes requires (for reasons of funding decisions and allocation of specialist staff). If it turns out (which is the case) that when learning chemistry, I am well above average in my capacity to use mathematics, but below average in my spatial awareness, any decent educational software should soon be aware of this and adjust itself accordingly – roughly speaking, in the case of chemistry, by going over material that requires spatial awareness (for example, stereoisomers) more slowly and incrementally, but making bigger jumps and going further in such areas as chemical calculations.
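The kind of adjustment described in the chemistry example can be sketched with a very simple learner model in Python. Every detail here is a labelled assumption rather than a description of any real product: the class `LearnerModel`, the starting estimate of 0.5, the exponential moving average used to update it and the step-size cut-offs are all hypothetical. Real adaptive systems often use more sophisticated models, such as Bayesian knowledge tracing or item response theory.

```python
from dataclasses import dataclass, field


@dataclass
class LearnerModel:
    """Hypothetical per-skill estimates of ability, each between 0 and 1."""

    alpha: float = 0.3  # weight given to the most recent answer
    prior: float = 0.5  # estimate for a skill with no evidence yet
    ability: dict[str, float] = field(default_factory=dict)

    def update(self, skill: str, correct: bool) -> None:
        """Move the estimate for one skill towards the latest result
        (an exponential moving average of right and wrong answers)."""
        old = self.ability.get(skill, self.prior)
        outcome = 1.0 if correct else 0.0
        self.ability[skill] = (1 - self.alpha) * old + self.alpha * outcome

    def step_size(self, skill: str) -> int:
        """Units of new material to cover next time this skill is needed."""
        estimate = self.ability.get(skill, self.prior)
        if estimate >= 0.7:
            return 3  # bigger jumps, as for chemical calculations
        if estimate >= 0.4:
            return 2
        return 1  # slower and more incremental, as for stereoisomers


# Usage: a learner strong in mathematics but weaker in spatial awareness.
model = LearnerModel()
for _ in range(4):
    model.update("mathematics", True)
    model.update("spatial awareness", False)
print(model.step_size("mathematics"))        # 3
print(model.step_size("spatial awareness"))  # 1
```

Because each skill is tracked on a continuous scale, the sketch never has to place the learner in a binary category; it simply moves faster where the evidence suggests strength and more slowly where it suggests difficulty.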

Estimates of the percentage of students who have SEN vary. In England, definitions have changed over the years, but a figure of about 15 per cent is typical. The percentage of students who are G&T is usually stated to be considerably smaller – 2 per cent to 5 per cent are the figures sometimes cited – but it is clear that even using this crude classification, about one in five or one in six students fit into the SEN or G&T categories. And there are many other students with what any parent would regard as special needs, even if they do not fit into the official categories. I am a long-standing trustee of Red Balloon, a charity that supports young people who self-exclude from school, and who are missing education because of bullying or other trauma. One of the most successful of our initiatives has been Red Balloon of the Air; teaching is not (yet) done with AI, but it is provided online by qualified teachers, with students working either on their own or in small groups. It is easy to envisage AI coming to play a role in such teaching, without removing the need for humans as teachers. Indeed, AI seems likely to be of particular value when it complements human teachers by providing access to topics (even whole subjects) that individual teachers are not able to teach, thereby broadening the educational offer.

Student tracking

In the West, we often shake our heads at some of the ways in which the confluence of biometrics and AI in some countries is leading to ever tighter tracking of people. Betty Li is a 22-year-old student at a university in north-west China. To enter her dormitory, she needs to get through scanners, and in class, facial recognition cameras above the blackboards keep an eye on her and her fellow students’ attentiveness (Xie, 2019). In some Chinese high schools, such cameras are being used to categorize each student at each moment in time as happy, sad, disappointed, angry, scared, surprised or neutral. At present, it seems that little use is really being made of such data, but that could change, particularly as the technology advances.

Sandra Leaton Gray (2019) has written about how the convergence of AI and biometrics in education keeps her awake at night. She points out that the proliferation of online school textbooks means that publishers already have data on how long students spend on each page and which pages they skip. She goes on:

In the future, they might even be able to watch facial expressions as pupils read the material, or track the relationship between how they answer questions online during their course with their final GCSE or A Level results, especially if the pupil sits an exam produced by the assessment arm of the same parent company. This doesn't happen at the moment, but it is technically possible already. (Leaton Gray, 2019: n.p.)

It is a standard trope of technology studies to maintain that technologies are rarely good or bad in themselves: what matters is how they are used. Leaton Gray (2019) is right to question the confluence of AI and biometrics. While this has the potential to advance learning, it is all too easy to see how a panopticon-like surveillance could have dystopian consequences (see books such as We and Nineteen Eighty-Four and films such as Das Leben der Anderen, Brazil and Minority Report).

Conclusions

There is no doubt that AI is here to stay in education. It is possible that in the short- to medium-term (roughly, the next decade) it will have only modest effects – whereas its effects in many other areas of our lives will almost certainly be very substantial. At some point, however, AI is likely to have profound effects on education. It is possible that these will not all be positive, and it is more than possible that the early days of AI in education will see a widening of educational inequality (in the way that almost any important new technology widens inequality until penetration approaches 100 per cent). In time, though, AI has the potential to make major positive contributions to learning, both in school and out of school. It should increase personalization in learning, for all students, including those not well served by current schooling. The consequences for teachers are harder to predict, although there may be reductions in the number of teaching assistants who work in classrooms.

Acknowledgements

I am very grateful to the editors of this special issue, to the editor of the journal and to two reviewers for extremely helpful feedback which led to considerable improvements to this article.

Notes on the contributor

Michael J. Reiss is Professor of Science Education at UCL Institute of Education, UK, a Fellow of the Academy of Social Sciences and Visiting Professor at the University of York and the Royal Veterinary College. The former Director of Education at the Royal Society, he is a member of the Nuffield Council on Bioethics and has written extensively about curricula, pedagogy and assessment in education. He is currently working on a project on AI and citizenship.

References

Abrahams I, Reiss MJ. 2012. Practical work: Its effectiveness in primary and secondary schools in England. Journal of Research in Science Teaching. Vol. 49:1035–55.

Aldridge D. 2018. Cheating education and the insertion of knowledge. Educational Theory. Vol. 68(6):609–24.

Ansong D, Okumu M, Albritton TJ, Bahnuk EP, Small E. 2020. The role of social support and psychological well-being in STEM performance trends across gender and locality: Evidence from Ghana. Child Indicators Research. Vol. 13:1655–73.

Astle DE, Bathelt J; the CALM Team; Holmes J. 2019. Remapping the cognitive and neural profiles of children who struggle at school. Developmental Science. Vol. 22.

Baines E, Blatchford P, Chowne A. 2007. Improving the effectiveness of collaborative group work in primary schools: Effects on science attainment. British Educational Research Journal. Vol. 33(5):663–80.

Baker T, Tricarico L, Bielli S. 2019. Making the Most of Technology in Education: Lessons from school systems around the world. London: Nesta. Accessed 7 December 2020 https://media.nesta.org.uk/documents/Making_the_Most_of_Technology_in_Education_03-07-19.pdf

Blatchford P, Russell A, Webster R. 2012. Reassessing the Impact of Teaching Assistants: How research challenges practice and policy. Abingdon: Routledge.

Boden MA. 2016. AI: Its nature and future. Oxford: Oxford University Press.

Bostrom N. 2014. Superintelligence: Paths, dangers, strategies. Oxford: Oxford University Press.

Burbidge D, Briggs A, Reiss MJ. 2020. Citizenship in a Networked Age: An agenda for rebuilding our civic ideals. Oxford: University of Oxford. Accessed 7 December 2020 https://citizenshipinanetworkedage.org

Bursali H, Yilmaz RM. 2019. Effect of augmented reality applications on secondary school students' reading comprehension and learning permanency. Computers in Human Behavior. Vol. 95:126–35.

Cooper GM. 2000. The Cell: A molecular approach. 2nd ed. Sunderland, MA: Sinauer Associates.

Crystal JD, Shettleworth SJ. 1994. Spatial list learning in black-capped chickadees. Animal Learning & Behavior. Vol. 22:77–83.

Freire P. 2017. Pedagogy of the Oppressed. Trans. Ramos MB. London: Penguin.

Frey CB, Osborne MA. 2013. The future of employment: How susceptible are jobs to computerisation? Accessed 7 December 2020 www.oxfordmartin.ox.ac.uk/downloads/academic/The_Future_of_Employment.pdf

Fundação Lemann. 2018. Five Years of Khan Academy in Brazil: Impact and lessons learned. São Paulo: Fundação Lemann.

Gibson W. 1984. Neuromancer. New York: Ace.

Hall A, Reiss MJ, Rowell C, Scott C. 2003. Designing and implementing a new advanced level biology course. Journal of Biological Education. Vol. 37:161–7.

Hao K. 2019. China has started a grand experiment in AI education. It could reshape how the world learns. MIT Technology Review. 2 August. Accessed 7 December 2020 www.technologyreview.com/s/614057/china-squirrel-has-started-a-grand-experiment-in-ai-education-it-could-reshape-how-the/

Ishowo-Oloko F, Bonnefon J, Soroye Z, Crandall J, Rahwan I, Rahwan T. 2019. Behavioural evidence for a transparency–efficiency tradeoff in human–machine cooperation. Nature Machine Intelligence. Vol. 1:517–21.

Jenkins E. 2019. Science for All: The struggle to establish school science in England. London: UCL IOE Press.

Jiao H, Lissitz RW. 2020. Application of Artificial Intelligence to Assessment. Charlotte, NC: Information Age Publishing.

Kaplan HS, Robson AJ. 2002. The emergence of humans: The coevolution of intelligence and longevity with intergenerational transfers. Proceedings of the National Academy of Sciences. Vol. 99(15):10221–6.

Kress G, Carey J, Ogborn J, Tsatsarelis C. 2001. Multimodal Teaching and Learning: The rhetorics of the science classroom. London: Continuum.

Leaton Gray S. 2019. What keeps me awake at night? The convergence of AI and biometrics in education. 2 November. Accessed 7 December 2020 https://sandraleatongray.wordpress.com/2019/11/02/what-keeps-me-awake-at-night-the-convergence-of-ai-and-biometrics-in-education/

Lees HE. 2013. Education without Schools: Discovering alternatives. Bristol: Policy Press.

Luckin R, Holmes W, Griffiths M, Forcier LB. 2016. Intelligence Unleashed: An argument for AI in education. London: Pearson. Accessed 7 December 2020 https://static.googleusercontent.com/media/edu.google.com/en//pdfs/Intelligence-Unleashed-Publication.pdf

McFarlane A. 2019. Growing up Digital: What do we really need to know about educating the digital generation? London: Nuffield Foundation. Accessed 7 December 2020 www.nuffieldfoundation.org/sites/default/files/files/Growing%20Up%20Digital%20-%20final.pdf

Manthorpe R. 2019. Artificial intelligence being used in schools to detect self-harm and bullying. Sky News. 21 September. Accessed 7 December 2020 https://news.sky.com/story/artificial-intelligence-being-used-in-schools-to-detect-self-harm-and-bullying-11815865

Nicoglou A. 2018. The concept of plasticity in the history of the nature–nurture debate in the early twentieth century. In: Meloni M, Cromby J, Fitzgerald D, Lloyd S (eds). The Palgrave Handbook of Biology and Society. London: Palgrave Macmillan. p. 97–122.

POST (Parliamentary Office of Science & Technology). 2018. Robotics in Social Care. London: Parliamentary Office of Science & Technology. Accessed 7 December 2020 https://researchbriefings.parliament.uk/ResearchBriefing/Summary/POST-PN-0591#fullreport

Puddifoot K, O'Donnell C. 2018. Human memory and the limits of technology in education. Educational Theory. Vol. 68(6):643–55.

Reader SM, Hager Y, Laland KN. 2011. The evolution of primate general and cultural intelligence. Philosophical Transactions of the Royal Society B: Biological Sciences. Vol. 366(1567):1017–27.

Reiss MJ. 2018. The curriculum arguments of Michael Young and John White. In: Guile D, Lambert D, Reiss MJ (eds). Sociology, Curriculum Studies and Professional Knowledge: New perspectives on the work of Michael Young. Abingdon: Routledge. p. 121–31.

Reiss MJ. 2020. Robots as persons? Implications for moral education. Journal of Moral Education.

Reiss MJ, White J. 2013. An Aims-based Curriculum: The significance of human flourishing for schools. London: IOE Press.

Roth WM. 2010. Language, Learning, Context: Talking the talk. London: Routledge.

Scruton R. 2017. On Human Nature. Princeton, NJ: Princeton University Press.

Seldon A, Abidoye O. 2018. The Fourth Education Revolution: Will artificial intelligence liberate or infantilise humanity? Buckingham: University of Buckingham Press.

Selwyn N. 2017. Education and Technology: Key issues and debates. 2nd ed. London: Bloomsbury Academic.

Selwyn N. 2019. Should Robots Replace Teachers? Cambridge: Polity Press.

Shute VJ, Rahimi S. 2017. Review of computer-based assessment for learning in elementary and secondary education. Journal of Computer Assisted Learning. Vol. 33(1):1–19.

Skinner B, Leavey G, Rothi D. 2019. Managerialism and teacher professional identity: Impact on well-being among teachers in the UK. Educational Review.

Spencer-Smith R. 1995. Reductionism and emergent properties. Proceedings of the Aristotelian Society. Vol. 95:113–29.

Towers E. 2017. 'Stayers': A qualitative study exploring why teachers and headteachers stay in challenging London primary schools. PhD thesis. King's College London.

van Groen MM, Eggen TJHM. 2020. Educational test approaches: The suitability of computer-based test types for assessment and evaluation in formative and summative contexts. Journal of Applied Testing Technology. Vol. 21(1):12–24.

Wang P. 2019. On defining artificial intelligence. Journal of Artificial General Intelligence. Vol. 10(2):1–37.

Webster R, Blatchford P, Russell A. 2013. Challenging and changing how schools use teaching assistants: Findings from the Effective Deployment of Teaching Assistants project. School Leadership & Management: Formerly School Organisation. Vol. 33(1):78–96.

Wentzel KR, Miele DB. 2016. Handbook of Motivation at School. 2nd ed. New York: Routledge.

White D. 2019. MEGAMIND: "Google brain" implants could mean end of school as anyone will be able to learn anything instantly. The Sun. 25 March. Accessed 7 December 2020 www.thesun.co.uk/tech/8710836/google-brain-implants-could-mean-end-of-school-as-anyone-will-be-able-to-learn-anything-instantly/

White J. 2003. Rethinking the School Curriculum: Values, aims and purposes. London: RoutledgeFalmer.

Wilks Y. 2019. Artificial Intelligence: Modern magic or dangerous future? London: Icon Books.

Xie E. 2019. Artificial intelligence is watching China's students but how well can it really see? South China Morning Post. 16 September. Accessed 7 December 2020 www.scmp.com/news/china/politics/article/3027349/artificial-intelligence-watching-chinas-students-how-well-can

Young MFD. 2008. Bringing Knowledge Back In: From social constructivism to social realism in the sociology of knowledge. London: Routledge.


This is an open-access article distributed under the terms of the Creative Commons Attribution Licence (CC BY) 4.0 https://creativecommons.org/licenses/by/4.0/ , which permits unrestricted use, distribution and reproduction in any medium, provided the original author and source are credited.
