
Evaluation Research: Definition, Methods and Examples

Content Index

- What is evaluation research

- Why do evaluation research

- Quantitative methods

- Qualitative methods

- Process evaluation research question examples

- Outcome evaluation research question examples

What is evaluation research?

Evaluation research, also known as program evaluation, refers to a research purpose rather than a specific method. It is the systematic assessment of the worth or merit of the time, money, effort, and resources spent to achieve a goal.

Evaluation research is closely related to, but slightly different from, more conventional social research. It uses many of the same methods, but because it takes place within an organizational context, it also calls for teamwork, interpersonal and management skills, and political astuteness, which conventional social research relies on far less. Evaluation research also requires keeping the interests of stakeholders in mind.

Evaluation research is a type of applied research, so it is intended to have some real-world effect. Many methods, such as surveys and experiments, can be used to carry it out. The process is rigorous and systematic: it involves collecting data about organizations, processes, projects, services, and/or resources, then analyzing and reporting on that data. Evaluation research enhances knowledge and decision-making and leads to practical applications.


Why do evaluation research?

The common goal of most evaluations is to extract meaningful information from the audience and provide valuable insights to evaluators such as sponsors, donors, client groups, administrators, staff, and other relevant constituencies. Feedback is most often perceived as useful if it helps in decision-making. However, evaluation research does not always produce findings that can be applied elsewhere, and it sometimes fails to influence short-term decisions. Equally, an evaluation that initially seems to have no influence can have a delayed impact once the situation is more favorable. Despite this, there is general agreement that the major goal of evaluation research should be to improve decision-making through the systematic use of measurable feedback.

Below are some of the benefits of evaluation research:

- Gain insights about a project or program and its operations

Evaluation research lets you understand what works and what doesn’t, where you were, where you are, and where you are headed. You can find areas for improvement and identify strengths. This helps you figure out what you need to focus on more and whether there are any threats to your business. You can also find out whether there are untapped segments in the market.

- Improve practice

It is essential to gauge your past performance and understand what went wrong in order to deliver better services to your customers. Without two-way communication, there is no way to improve on what you have to offer. Evaluation research gives your employees and customers an opportunity to express how they feel and whether there is anything they would like to change. It also lets you modify or adopt a practice in a way that increases the chances of success.

- Assess the effects

After evaluating your efforts, you can see how well you are meeting objectives and targets. Evaluations let you measure whether the intended benefits are really reaching the target audience and, if so, how effectively.

- Build capacity

Evaluations help you analyze demand patterns and predict whether you will need more funds, upgraded skills, or more efficient operations. They let you find gaps in the production-to-delivery chain and possible ways to fill them.

Methods of evaluation research

All market research methods involve collecting and analyzing data, judging the validity of the information, and deriving relevant inferences from it. Evaluation research comprises planning, conducting, and analyzing the results, which includes using data collection techniques and applying statistical methods.

Some popular evaluation methods are input measurement, output or performance measurement, impact or outcomes assessment, quality assessment, process evaluation, benchmarking, standards, cost analysis, organizational effectiveness, program evaluation methods, and LIS-centered methods. There are also a few types of evaluation that do not always result in a meaningful assessment, such as descriptive studies, formative evaluations, and implementation analysis. Evaluation research is more concerned with the information-processing and feedback functions of evaluation.

These methods can be broadly classified as quantitative and qualitative methods.

Quantitative research methods are used to measure anything tangible. Their outcome is an answer to questions such as:

- Who was involved?

- What were the outcomes?

- What was the price?

The best way to collect quantitative data is through surveys, questionnaires, and polls. You can also create pre-tests and post-tests, review existing documents and databases, or gather clinical data.

Surveys are used to gather the opinions, feedback, or ideas of your employees or customers and consist of various question types. They can be conducted face-to-face, by telephone, by mail, or online. Online surveys do not require an interviewer and are usually more efficient and practical. You can view the results on the dashboard of a research tool and dig deeper using filters based on factors such as age, gender, and location. You can also build survey logic such as branching, quotas, chain surveys, and looping into the questions, reducing the time needed both to create and to respond to the survey. Finally, you can generate reports that apply statistical formulae and present the data in a form that is easy to absorb in meetings.


Quantitative data measure the depth and breadth of an initiative, for instance, the number of people who participated in a non-profit event or the number who enrolled in a new university course. Quantitative data collected before and after a program can show its results and impact.
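A before-and-after comparison can be sketched in a few lines of Python. This is only an illustration: the function name `pre_post_change` and the scores are hypothetical, and it assumes each participant has one pre-test and one post-test score.

```python
from statistics import mean


def pre_post_change(pre_scores, post_scores):
    """Return the mean paired change (post minus pre) and the share who improved."""
    if len(pre_scores) != len(post_scores) or not pre_scores:
        raise ValueError("pre and post scores must be non-empty and paired")
    # One difference per participant, in the same order as the inputs.
    diffs = [post - pre for pre, post in zip(pre_scores, post_scores)]
    improved = sum(1 for d in diffs if d > 0) / len(diffs)
    return mean(diffs), improved


# Hypothetical test scores for five participants (illustrative only).
pre = [52, 60, 47, 70, 65]
post = [61, 66, 50, 69, 75]
avg_change, share_improved = pre_post_change(pre, post)
print(f"mean change: {avg_change:.1f}, share improved: {share_improved:.0%}")
```

A positive change on its own does not show that the program caused it; without a comparison group, other explanations remain possible.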

The accuracy of quantitative data used for evaluation research depends on how well the sample represents the population, how easy the data are to analyze, and how consistent they are. Quantitative methods can fail if the questions are not framed correctly or are not distributed to the right audience. Also, quantitative data do not provide an understanding of context and may not be apt for complex issues.
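One rough way to check how well a sample represents the population is to compare group shares in the sample with known population shares. The sketch below assumes such population figures are available; the age bands, shares, and the helper name `representation_gaps` are made up for illustration.

```python
from collections import Counter


def representation_gaps(sample_groups, population_shares):
    """Return sample share minus population share for each population group."""
    if not sample_groups:
        raise ValueError("sample must not be empty")
    counts = Counter(sample_groups)
    total = len(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }


# Hypothetical respondents by age band, and assumed population shares.
sample = ["18-34"] * 50 + ["35-54"] * 30 + ["55+"] * 20
population = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

gaps = representation_gaps(sample, population)
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

Large positive or negative gaps suggest that a group is over- or under-represented and that results may need weighting or cautious interpretation.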


Qualitative research methods are used where quantitative methods cannot solve the research problem, i.e., they are used to measure intangible values. They answer questions such as:

- What is the value added?

- How satisfied are you with our service?

- How likely are you to recommend us to your friends?

- What will improve your experience?


Qualitative data is collected through observation, interviews, case studies, and focus groups. The steps for creating a qualitative study involve examining, comparing and contrasting, and understanding patterns. Analysts conclude after identification of themes, clustering similar data, and finally reducing to points that make sense.
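The theme-identification step can be approximated with a simple keyword codebook. This is a minimal sketch, not a substitute for careful manual coding: the codebook, the responses, and the function name `code_responses` are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical codebook: each theme maps to keywords that signal it.
CODEBOOK = {
    "price": {"price", "expensive", "cost"},
    "support": {"support", "help", "agent"},
    "usability": {"easy", "confusing", "navigate"},
}


def code_responses(responses, codebook=CODEBOOK):
    """Count how many responses mention each theme (at most once per response)."""
    theme_counts = Counter()
    for text in responses:
        words = set(re.findall(r"[a-z]+", text.lower()))
        for theme, keywords in codebook.items():
            if words & keywords:
                theme_counts[theme] += 1
    return theme_counts


# Illustrative open-ended answers.
responses = [
    "The price is too expensive for small teams.",
    "Support was quick to help, but the menu is confusing.",
    "Easy to navigate.",
]
themes = code_responses(responses)
print(themes.most_common())
```

Keyword matching misses synonyms, sarcasm, and context, so in practice the counts are a starting point for a human analyst rather than a final result.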

Observations may help explain behaviors as well as the social context, which quantitative methods generally do not uncover. Behavior and body language can be observed by watching a participant directly or by recording audio or video. Structured interviews can be conducted with individuals or groups under controlled conditions, or participants may be asked open-ended qualitative research questions. Qualitative research methods are also used to understand a person’s perceptions and motivations.


The strength of group methods such as focus groups is that discussion can provide ideas and stimulate memories, with topics cascading as the discussion unfolds. The accuracy of qualitative data depends on how well contextual data explain complex issues and complement quantitative data. Qualitative data help answer “why” and “how” after the “what” has been answered. The limitations of qualitative data for evaluation research are that they are subjective, time-consuming, costly, and difficult to analyze and interpret.


Survey software can be used for both quantitative and qualitative evaluation research. You can use the sample questions above and send a survey in minutes using research software. Using a research tool simplifies the whole process, from creating a survey and importing contacts to distributing the survey and generating reports that aid in research.

Examples of evaluation research

Evaluation research questions lay the foundation of a successful evaluation. They define the topics that will be evaluated. Keeping evaluation questions ready not only saves time and money, but also makes it easier to decide what data to collect, how to analyze it, and how to report it.

Evaluation research questions must be developed and agreed on in the planning stage; however, ready-made research templates can also be used.

Process evaluation research question examples:

- How often do you use our product in a day?

- Were approvals taken from all stakeholders?

- Can you report the issue from the system?

- Can you submit the feedback from the system?

- Was each task done as per the standard operating procedure?

- What were the barriers to the implementation of each task?

- Were any improvement areas discovered?

Outcome evaluation research question examples:

- How satisfied are you with our product?

- Did the program produce intended outcomes?

- What were the unintended outcomes?

- Has the program increased the knowledge of participants?

- Were the participants of the program employable before the course started?

- Do participants of the program have the skills to find a job after the course ended?

- Is the knowledge of participants better compared to those who did not participate in the program?
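The last question above, comparing participants with non-participants, is often approached by comparing group means. As one possible sketch, the code below computes Welch's t statistic, which does not assume equal variances; the function name `welch_t` and the scores are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Welch's t statistic for the difference in means (group_a minus group_b)."""
    if len(group_a) < 2 or len(group_b) < 2:
        raise ValueError("each group needs at least two observations")
    # Standard error of the difference, using each group's sample variance.
    se = sqrt(variance(group_a) / len(group_a) + variance(group_b) / len(group_b))
    return (mean(group_a) - mean(group_b)) / se


# Illustrative knowledge-test scores.
participants = [78, 85, 90, 72, 88]
non_participants = [70, 75, 80, 68, 74]
t_stat = welch_t(participants, non_participants)
print(f"t = {t_stat:.2f}")
```

The statistic only describes how large the difference is relative to its sampling noise. Turning it into a p-value requires the t distribution, and if people were not randomly assigned to the program, the groups may differ for reasons unrelated to it.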

MORE LIKE THIS

Data Information vs Insight: Essential differences

May 14, 2024

Pricing Analytics Software: Optimize Your Pricing Strategy

May 13, 2024

Relationship Marketing: What It Is, Examples & Top 7 Benefits

May 8, 2024

The Best Email Survey Tool to Boost Your Feedback Game

May 7, 2024

Other categories

- Academic Research

- Artificial Intelligence

- Assessments

- Brand Awareness

- Case Studies

- Communities

- Consumer Insights

- Customer effort score

- Customer Engagement

- Customer Experience

- Customer Loyalty

- Customer Research

- Customer Satisfaction

- Employee Benefits

- Employee Engagement

- Employee Retention

- Friday Five

- General Data Protection Regulation

- Insights Hub

- Life@QuestionPro

- Market Research

- Mobile diaries

- Mobile Surveys

- New Features

- Online Communities

- Question Types

- Questionnaire

- QuestionPro Products

- Release Notes

- Research Tools and Apps

- Revenue at Risk

- Survey Templates

- Training Tips

- Uncategorized

- Video Learning Series

- What’s Coming Up

- Workforce Intelligence

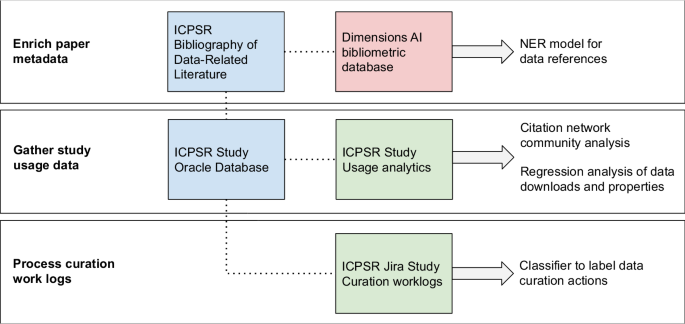

Introduction to finding data and statistics


Evaluate your data


Once you’ve chosen a data set that you believe will work, take care to evaluate it. Why is it important to evaluate our data and ensure that we are using quality data? Data that have been organized and interpreted into sets, phrases, or patterns become information. We use information to identify needs, measure impacts, and inform our decision making. If the data underlying that information are incorrect in some respect, then our decisions and results could also be wrong or misleading.

Ask yourself, does the data cover your Who, What, When, and How requirements? Always read the metadata and documentation to ensure that the analysis you are planning to do really measures what you want it to.

Who collected the data

The “who” factor impacts the data’s reliability and whether or not we ultimately opt to utilize or trust it. Data from sources like professional organizations or government agencies will have a reputation for trustworthiness not commonly associated with data gathered from less credible sources. Consider the extent to which the data producer is perceived as authoritative on the subject matter.

What is the data provider's purpose

It's important to gauge objectivity and intent, especially when examining data from commercial businesses or, say, political parties. Is there an incentive to be biased? The integrity of such research might be compromised, so think critically about the data you find.

When was the data collected

Depending on the nature of your research question, it could be important to find the most accurate and relevant information available. This holds true especially when seeking data about the latest trends in a particular industry, for instance.

How was the data collected

What methods were used to collect the data? What methodology was used? Consider comparing the data to other, similar research to see if any inconsistencies arise.
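The Who, What, When, and How questions can be turned into a simple checklist that is run against a data set's documentation. The sketch below is only one way to do this: the `DatasetMetadata` fields, the five-year age threshold, and the example values are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DatasetMetadata:
    producer: Optional[str]       # who collected the data
    purpose: Optional[str]        # what the provider's purpose is
    collected_on: Optional[date]  # when the data was collected
    methodology: Optional[str]    # how the data was collected


def evaluation_flags(meta, today, max_age_years=5):
    """Return a list of concerns raised by missing or outdated metadata."""
    flags = []
    if not meta.producer:
        flags.append("who: producer unknown")
    if not meta.purpose:
        flags.append("what: purpose not stated")
    if meta.collected_on is None:
        flags.append("when: collection date missing")
    elif (today - meta.collected_on).days > max_age_years * 365:
        flags.append("when: data may be outdated")
    if not meta.methodology:
        flags.append("how: methodology undocumented")
    return flags


# Hypothetical metadata record.
meta = DatasetMetadata("National statistics office", None, date(2010, 1, 1), "census")
flags = evaluation_flags(meta, today=date(2024, 1, 1))
print(flags)
```

An empty list does not prove the data is trustworthy; it only means the documentation answers the four basic questions.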

- TEDNYC: 3 ways to spot a bad statistic (Mona Chalabi). Sometimes it's hard to know what statistics are worthy of trust. But we shouldn't count out stats altogether ... instead, we should learn to look behind them.

Helpful Resources

- Evaluation criteria (uOttawa Library): To look at information critically means you approach it like a “critic”. You must question, analyse and contextualize your sources in order to make a decision about their value and appropriateness. Several factors or “critical lenses” can be used to assess information.

- Statistics Canada's Data quality toolkit: The objective of this toolkit is to raise awareness about data quality assurance practices.

- "Become Data Literate in 3 Simple Steps": Learn how to evaluate data by investigating three simple questions.


Data evaluation

Data is the world’s most valuable resource, so businesses’ investments in analysis are rising. However, many organizations overlook the importance of data evaluation, hindering the accuracy of their artificial intelligence (AI) models and other initiatives.

In today’s environment, every business is becoming a data science company in some capacity. Amid that shift, organizations must make decisions based on accurate, relevant, and high-quality information. Evaluation provides that assurance.

What is data evaluation?

Businesses must define data evaluation before understanding why and how to implement it. Generally speaking, data evaluation includes reviewing information, its format, and sources to ensure it’s accurate, complete and can help companies achieve their goals.

This evaluation is common in healthcare solutions and other processes in scientific industries, as reviewing the reliability of a study’s sources is a crucial step in the scientific method. In these applications, organizations typically review where their information came from, who collected it, their purpose and how they gathered it. However, different analytics use cases may evaluate their data differently.

Data evaluation in accounting will likely hold information to a different standard than evaluation for AI-driven data science. The two fields use different types of information and need different things from it, so each will have a unique evaluation process.

Why is data evaluation important?

Data evaluation is becoming increasingly critical to businesses’ success as companies make more decisions based on data. Organizations employ analytics technologies like predictive performance models and center their strategic decision-making around these processes, so the costs of inaccurate or misleading data rise.


Many companies today rely on AI to inform decisions like targeting a specific niche, responding to demand changes, reorganizing supply chains, and more. However, AI is only as reliable as the data it analyzes.

Businesses that collect and analyze inaccurate, incomplete, irrelevant, or otherwise misleading information will get poor-quality insights. Basing decisions on those inaccuracies could result in significant losses.

Data evaluation methods

Multiple data evaluation methods exist since information and analytics processes come in several forms. The most common way to divide these varying strategies is by quantitative and qualitative data.

Quantitative data evaluation

Quantitative data evaluation centers on what most people call “hard data.” This data evaluation strategy looks at rigid, well-defined figures representing concrete facts, such as percentages and specific measurements.

This information’s structured nature makes it an ideal fit for processes that rely on black-and-white dynamics or specific values. For example, computer vision software solutions typically identify objects by a strict “yes-or-no” dynamic. Consequently, the data these systems train on must be specific and concrete.

Collecting quantitative data involves processes like scientific experiments, multiple-choice surveys and mechanical measurements. Evaluating its validity requires similar approaches. Because the information in question consists of hard facts and figures, data evaluation tools must compare it to known, specific standards.
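Comparing quantitative records to known standards can be as simple as a range check. The following sketch assumes each field has an agreed minimum and maximum; the field names, limits, and the helper `out_of_range` are hypothetical.

```python
def out_of_range(records, limits):
    """Return (record index, field, value) for every missing or out-of-range value."""
    problems = []
    for i, record in enumerate(records):
        for field, (low, high) in limits.items():
            value = record.get(field)
            if value is None or not (low <= value <= high):
                problems.append((i, field, value))
    return problems


# Assumed standards: plausible ages and a 1-5 satisfaction scale.
limits = {"age": (0, 120), "satisfaction": (1, 5)}
records = [
    {"age": 34, "satisfaction": 4},
    {"age": -2, "satisfaction": 5},
    {"age": 51, "satisfaction": 9},
]
problems = out_of_range(records, limits)
print(problems)
```

Flagged values still need a human decision: some are entry errors to correct, others are genuine outliers worth keeping.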

Qualitative data evaluation

By contrast, qualitative data evaluation focuses on non-statistical information that is less rigid and more nuanced than hard numbers. Whereas quantitative analysis answers “what” and “when,” qualitative analysis is better suited for questions about “why” and “how.”

This analysis typically looks at more open-ended, subjective data, including social media interactions, focus group interviews, and expert opinions. The resulting information may be difficult to base mechanical or mathematical processes on, but it can help provide context for decision-making or understanding abstract concepts.

Evaluating qualitative data is a less scientific but still crucial process. It requires a human touch and may involve asking questions about potential biases, limitations of a study, or if some information may be outdated. Raising these questions is important for evaluating clinical report data or other processes that require disclosure about how some information may skew a certain way.

How to analyze and evaluate data

Because different data evaluation techniques fit various use cases, how to analyze and evaluate data best depends on the specific situation. However, the overall process looks similar across all applications.

1. Collect the data

Data collection for evaluation is the first step. Before a business can verify its information’s accuracy, it has to collect it in the first place. The most effective data evaluation examples keep this need for precision in mind when performing this initial gathering.

Gathering contextual information around actionable data is a crucial but often overlooked step. While it’s good to collect only what a specific use case needs, taking too narrow an approach can leave out important context that changes the data’s real-world meaning.

2. Choose the optimal evaluation method

The next step in evaluation planning and data collection is to choose the ideal data evaluation technique. As with the first step, the best approach depends on the company’s data goals. Quantitative data is often some of the most helpful information because it provides specificity and objective results, but some data evaluation examples require qualitative information.

For example, organizations with higher onboarding maturity are four times more likely to see improved employee retention, but how do you measure that maturity? There’s no well-defined, agreed-upon scientific measurement for such an abstract concept, so businesses wanting to measure it need data sources like interviews and expert insights, requiring qualitative evaluation.

3. Organize and clean the data

After data collection, organizations must clean their data. This process is the first round of evaluation and involves parsing for incomplete records, spelling errors, duplicates, and other mistakes.

Cleaning data ensures that records accurately reflect what they claim to represent. Finding and fixing these mistakes makes further evaluation easier and prevents inaccurate results stemming from simple, correctable errors. Many data evaluation software solutions also include cleaning features that automate this process, reducing the risk of human error.

Businesses should organize their data during this process. Putting information into defined categories will make it easier to spot inaccuracies or other issues down the line and enables faster analysis.
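A basic cleaning pass can be sketched with the Python standard library alone. The example below trims whitespace, sets aside incomplete records, and drops case-insensitive duplicates; the field names and the function `clean_records` are illustrative assumptions, not a feature of any particular tool.

```python
def clean_records(records, required_fields):
    """Return (cleaned, rejected): trimmed unique records and incomplete ones."""
    cleaned, rejected, seen = [], [], set()
    for record in records:
        # Trim stray whitespace from every string value.
        normalized = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Incomplete records are set aside for review rather than silently dropped.
        if any(normalized.get(field) in (None, "") for field in required_fields):
            rejected.append(record)
            continue
        # Treat records as duplicates when required fields match ignoring case.
        key = tuple(str(normalized[field]).lower() for field in required_fields)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned, rejected


# Hypothetical contact records.
raw = [
    {"email": " ana@example.com ", "city": "Lisbon"},
    {"email": "ANA@example.com", "city": "Lisbon"},
    {"email": "", "city": "Porto"},
]
cleaned, rejected = clean_records(raw, required_fields=["email", "city"])
print(len(cleaned), "kept,", len(rejected), "rejected")
```

Keeping the rejected records in a separate list makes it possible to see how much data was lost to cleaning, which feeds into the gap analysis in the next step.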

4. Look for gaps and other issues

Next comes the bulk of the data evaluation work. Businesses should look through their cleaned, organized records and ask questions to see whether the data are reliable and relevant to their goals.

Data gaps are one of the most important issues to look for. These include any information businesses don’t have that they may need to get the most accurate answers to their questions. Sometimes, it may be impossible to identify data gaps until after the analysis process, so repeating this step after getting results from an analytics program is essential.

Teams may also use various data evaluation tools like automated programs to compare quantitative information to known standards. They might turn to experts to ask questions about bias or research limitations. Businesses should expect incomplete records or unanswered questions. However, if any of these issues are common throughout the data set or seem particularly substantial, it may be worth revisiting the evaluation planning and data collection process.
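One way to judge whether incomplete records are common enough to matter is to measure the share of missing values per field. The sketch below uses a 20% threshold purely as an assumption; the field names and both helper functions are hypothetical.

```python
def missing_rates(records, fields):
    """Return the share of records in which each field is missing or empty."""
    if not records:
        raise ValueError("records must not be empty")
    total = len(records)
    return {
        field: sum(1 for r in records if r.get(field) in (None, "")) / total
        for field in fields
    }


def substantial_gaps(rates, threshold=0.2):
    """Return the fields whose missing rate exceeds the chosen threshold."""
    return [field for field, rate in rates.items() if rate > threshold]


# Illustrative survey records with some unanswered questions.
records = [
    {"age": 29, "income": 41000},
    {"age": 45, "income": None},
    {"age": 38},
    {"age": 52, "income": 58000},
]
rates = missing_rates(records, ["age", "income"])
print(rates, substantial_gaps(rates))
```

Fields that exceed the threshold are candidates for revisiting data collection rather than for analysis as they stand.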

5. Submit data for analysis and interpretation

After businesses are confident in the validity of their evaluation and the accuracy of their records, they can submit the data for analysis. A thorough evaluation process should streamline the analytics phase, which looks through the verified, organized information to draw actionable conclusions.

While it’s possible to analyze data manually, it’s often best to turn to AI software implementation, as AI is often faster and better at spotting patterns than humans. Businesses with these insights should monitor the results of any projects based on this data. If they fall short of expectations, teams should review their data collection programs and evaluation methods to ensure they use accurate, relevant information.

Data management in monitoring and evaluation

Because data evaluation involves a considerable amount of information, businesses should give a lot of thought to data management in monitoring and evaluation. Poor management techniques could result in breaches or inaccurate results.

Organizations can ensure they don’t miss important contexts by keeping all information in one place. Given rising data volumes, that means using scalable cloud storage solutions to store anything they collect. Similarly, teams should use software that lets them access all this information from a single point, which streamlines the process. Companies can make these large data volumes more manageable by frequently reviewing information and deleting anything that’s no longer relevant.

It’s also essential to ensure a high level of cybersecurity. Data breaches cost $9.44 million on average in the U.S., and storing large volumes of information in one place can make organizations a valuable target. Limiting access permissions, using automated monitoring technologies, requiring strong password management, and implementing up-to-date security software are all necessary.

Evaluation is essential for data-driven organizations

Knowing how to analyze and evaluate data is essential in today’s data-driven environment. Businesses that know why and how to assess their data can rest assured that their AI tools and other analytics processes produce reliable results. They can then get all they can out of their most valuable resource.

April Miller is a senior writer with more than 3 years of experience writing on AI and ML topics.


Data Evaluation and Sensemaking

- First Online: 01 January 2023


- Kathleen Gregory &

- Laura Koesten

Part of the book series: Synthesis Lectures on Information Concepts, Retrieval, and Services ((SLICRS))

This chapter discusses how individuals evaluate and make sense of data which they discover. Evaluating data for potential use includes drawing on different types of information from and about data (Sect. 5.1). Once selected, individuals go “in” the data, as they try to make sense of the data themselves (Sect. 5.2). We emphasize that this process of evaluation and sensemaking is not sequential. It includes different cycles of evaluating, selecting and trying to explore data in more depth. This can in turn lead to refining one's selection criteria and going back to evaluating and selecting different data sources to start the process again. The chapter ends by drawing three conclusions (Sect. 5.3) about how people evaluate and make sense of data for reuse.


Author information

Authors and affiliations.

Faculty of Computer Science, University of Vienna, Vienna, Austria

Kathleen Gregory & Laura Koesten

School of Information Studies, Scholarly Communications Lab, University of Ottawa, Ottawa, Canada

Kathleen Gregory


Corresponding author

Correspondence to Kathleen Gregory.


Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Switzerland AG

About this chapter

Gregory, K., Koesten, L. (2022). Data Evaluation and Sensemaking. In: Human-Centered Data Discovery. Synthesis Lectures on Information Concepts, Retrieval, and Services. Springer, Cham. https://doi.org/10.1007/978-3-031-18223-5_5


DOI: https://doi.org/10.1007/978-3-031-18223-5_5

Published: 01 January 2023

Publisher Name: Springer, Cham

Print ISBN: 978-3-031-18222-8

Online ISBN: 978-3-031-18223-5

eBook Packages: Synthesis Collection of Technology (R0) eBColl Synthesis Collection 11


Evaluating Research – Process, Examples and Methods


Evaluating Research

Definition:

Evaluating Research refers to the process of assessing the quality, credibility, and relevance of a research study or project. This involves examining the methods, data, and results of the research in order to determine its validity, reliability, and usefulness. Evaluating research can be done by both experts and non-experts in the field, and involves critical thinking, analysis, and interpretation of the research findings.

Research Evaluation Process

The process of evaluating research typically involves the following steps:

Identify the Research Question

The first step in evaluating research is to identify the research question or problem that the study is addressing. This will help you to determine whether the study is relevant to your needs.

Assess the Study Design

The study design refers to the methodology used to conduct the research. You should assess whether the study design is appropriate for the research question and whether it is likely to produce reliable and valid results.

Evaluate the Sample

The sample refers to the group of participants or subjects who are included in the study. You should evaluate whether the sample size is adequate and whether the participants are representative of the population under study.
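Whether a sample size is adequate is often judged by the margin of error it implies. The sketch below uses the standard formula for a proportion and assumes a simple random sample from a large population, a 95% confidence level (z = 1.96), and the most conservative proportion of 0.5; the function names are illustrative.

```python
from math import ceil, sqrt


def margin_of_error(n, p=0.5, z=1.96):
    """Margin of error for a proportion estimated from a simple random sample of size n."""
    return z * sqrt(p * (1 - p) / n)


def required_sample_size(margin, p=0.5, z=1.96):
    """Smallest sample size whose margin of error does not exceed the target."""
    return ceil(z**2 * p * (1 - p) / margin**2)


print(f"n=400 gives a margin of about {margin_of_error(400):.3f}")
print(f"a 5-point margin needs n={required_sample_size(0.05)}")
```

These figures say nothing about representativeness: a large sample drawn from the wrong group can still be badly biased.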

Review the Data Collection Methods

You should review the data collection methods used in the study to ensure that they are valid and reliable. This includes assessing the measures used to collect data and the procedures used to collect data.

Examine the Statistical Analysis

Statistical analysis refers to the methods used to analyze the data. You should examine whether the statistical analysis is appropriate for the research question and whether it is likely to produce valid and reliable results.

Assess the Conclusions

You should evaluate whether the data support the conclusions drawn from the study and whether they are relevant to the research question.

Consider the Limitations

Finally, you should consider the limitations of the study, including any potential biases or confounding factors that may have influenced the results.

Evaluating Research Methods

Common methods for evaluating research include the following:

- Peer review: Peer review is a process where experts in the field review a study before it is published. This helps ensure that the study is accurate, valid, and relevant to the field.

- Critical appraisal : Critical appraisal involves systematically evaluating a study based on specific criteria. This helps assess the quality of the study and the reliability of the findings.

- Replication : Replication involves repeating a study to test the validity and reliability of the findings. This can help identify any errors or biases in the original study.

- Meta-analysis : Meta-analysis is a statistical method that combines the results of multiple studies to provide a more comprehensive understanding of a particular topic. This can help identify patterns or inconsistencies across studies.

- Consultation with experts : Consulting with experts in the field can provide valuable insights into the quality and relevance of a study. Experts can also help identify potential limitations or biases in the study.

- Review of funding sources: Examining the funding sources of a study can help identify any potential conflicts of interest or biases that may have influenced the study design or interpretation of results.
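To make the meta-analysis item above more concrete, here is a minimal sketch of one common pooling approach, a fixed-effect inverse-variance average. The effect sizes and standard errors are hypothetical, and the function name `fixed_effect_meta` is an illustrative assumption; real meta-analyses usually also check heterogeneity and often use random-effects models.

```python
import math

def fixed_effect_meta(effects, std_errors):
    """Pool study effects using inverse-variance (fixed-effect) weights."""
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need at least one study and one standard error per effect")
    # More precise studies (smaller standard error) get larger weights.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    # 95% confidence interval using the normal approximation.
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci

# Hypothetical effect sizes and standard errors from three studies.
effects = [0.30, 0.10, 0.25]
std_errors = [0.10, 0.20, 0.10]

pooled, pooled_se, ci = fixed_effect_meta(effects, std_errors)
print(f"pooled effect = {pooled:.3f}, SE = {pooled_se:.3f}")
print(f"95% CI = ({ci[0]:.3f}, {ci[1]:.3f})")
```

The least precise study (standard error 0.20) contributes the least to the pooled estimate, which is the main idea behind weighting by precision.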

Example of Evaluating Research

A sample research evaluation for students:

Title of the Study: The Effects of Social Media Use on Mental Health among College Students

Sample Size: 500 college students

Sampling Technique : Convenience sampling

- Sample Size: A sample of 500 college students is moderate in size and may give reasonable statistical precision. However, size alone does not make a sample representative of the college student population; that depends mainly on how participants were selected, and a random sampling technique would have strengthened the study.

- Sampling Technique : Convenience sampling is a non-probability sampling technique, which means that the sample may not be representative of the population. This technique may introduce bias into the study since participants are chosen because they are easy to reach, and are often self-selected, so they may not reflect the entire college student population. Therefore, the results of this study may not be generalizable to other populations.

- Participant Characteristics: The study does not provide any information about the demographic characteristics of the participants, such as age, gender, race, or socioeconomic status. This information is important because social media use and mental health may vary among different demographic groups.

- Data Collection Method: The study used a self-administered survey to collect data. Self-administered surveys may be subject to response bias and may not accurately reflect participants’ actual behaviors and experiences.

- Data Analysis: The study used descriptive statistics and regression analysis to analyze the data. Descriptive statistics provide a summary of the data, while regression analysis is used to examine the relationship between two or more variables. However, the study did not provide information about the statistical significance of the results or the effect sizes.

Overall, while the study provides some insights into the relationship between social media use and mental health among college students, the use of a convenience sampling technique and the lack of information about participant characteristics limit the generalizability of the findings. In addition, the use of self-administered surveys may introduce bias into the study, and the lack of information about the statistical significance of the results limits the interpretation of the findings.
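As a rough illustration of what a sample of 500 can and cannot tell you, the sketch below computes the approximate 95% margin of error for a proportion. It assumes simple random sampling, which the example study did not use, so the figure describes precision only and says nothing about the selection bias of a convenience sample. The function name `margin_of_error` is an illustrative assumption.

```python
import math

def margin_of_error(n, proportion=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion under simple random sampling."""
    if n <= 0:
        raise ValueError("sample size must be positive")
    # proportion=0.5 is the worst case (widest interval).
    return z * math.sqrt(proportion * (1 - proportion) / n)

moe_500 = margin_of_error(500)
moe_2000 = margin_of_error(2000)
print(f"n=500:  +/- {moe_500:.3f}")
print(f"n=2000: +/- {moe_2000:.3f}")
```

With 500 respondents the worst-case margin is about 4.4 percentage points, and quadrupling the sample only halves it. A larger convenience sample would be more precise but no less biased.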

Note: The example above is only a sample for students. Do not copy and paste it directly into your assignment; do your own research for academic purposes.

Applications of Evaluating Research

Here are some of the applications of evaluating research:

- Identifying reliable sources : By evaluating research, researchers, students, and other professionals can identify the most reliable sources of information to use in their work. They can determine the quality of research studies, including the methodology, sample size, data analysis, and conclusions.

- Validating findings: Evaluating research can help to validate findings from previous studies. By examining the methodology and results of a study, researchers can determine if the findings are reliable and if they can be used to inform future research.

- Identifying knowledge gaps: Evaluating research can also help to identify gaps in current knowledge. By examining the existing literature on a topic, researchers can determine areas where more research is needed, and they can design studies to address these gaps.

- Improving research quality : Evaluating research can help to improve the quality of future research. By examining the strengths and weaknesses of previous studies, researchers can design better studies and avoid common pitfalls.

- Informing policy and decision-making : Evaluating research is crucial in informing policy and decision-making in many fields. By examining the evidence base for a particular issue, policymakers can make informed decisions that are supported by the best available evidence.

- Enhancing education : Evaluating research is essential in enhancing education. Educators can use research findings to improve teaching methods, curriculum development, and student outcomes.

Purpose of Evaluating Research

Here are some of the key purposes of evaluating research:

- Determine the reliability and validity of research findings : By evaluating research, researchers can determine the quality of the study design, data collection, and analysis. They can determine whether the findings are reliable, valid, and generalizable to other populations.

- Identify the strengths and weaknesses of research studies: Evaluating research helps to identify the strengths and weaknesses of research studies, including potential biases, confounding factors, and limitations. This information can help researchers to design better studies in the future.

- Inform evidence-based decision-making: Evaluating research is crucial in informing evidence-based decision-making in many fields, including healthcare, education, and public policy. Policymakers, educators, and clinicians rely on research evidence to make informed decisions.

- Identify research gaps : By evaluating research, researchers can identify gaps in the existing literature and design studies to address these gaps. This process can help to advance knowledge and improve the quality of research in a particular field.

- Ensure research ethics and integrity : Evaluating research helps to ensure that research studies are conducted ethically and with integrity. Researchers must adhere to ethical guidelines to protect the welfare and rights of study participants and to maintain the trust of the public.

Characteristics to Consider When Evaluating Research

The characteristics to examine when evaluating research are as follows:

- Research question/hypothesis: A good research question or hypothesis should be clear, concise, and well-defined. It should address a significant problem or issue in the field and be grounded in relevant theory or prior research.

- Study design: The research design should be appropriate for answering the research question and be clearly described in the study. The study design should also minimize bias and confounding variables.

- Sampling : The sample should be representative of the population of interest and the sampling method should be appropriate for the research question and study design.

- Data collection : The data collection methods should be reliable and valid, and the data should be accurately recorded and analyzed.

- Results : The results should be presented clearly and accurately, and the statistical analysis should be appropriate for the research question and study design.

- Interpretation of results : The interpretation of the results should be based on the data and not influenced by personal biases or preconceptions.

- Generalizability: The study findings should be generalizable to the population of interest and relevant to other settings or contexts.

- Contribution to the field : The study should make a significant contribution to the field and advance our understanding of the research question or issue.

Advantages of Evaluating Research

Evaluating research has several advantages, including:

- Ensuring accuracy and validity : By evaluating research, we can ensure that the research is accurate, valid, and reliable. This ensures that the findings are trustworthy and can be used to inform decision-making.

- Identifying gaps in knowledge : Evaluating research can help identify gaps in knowledge and areas where further research is needed. This can guide future research and help build a stronger evidence base.

- Promoting critical thinking: Evaluating research requires critical thinking skills, which can be applied in other areas of life. By evaluating research, individuals can develop their critical thinking skills and become more discerning consumers of information.

- Improving the quality of research : Evaluating research can help improve the quality of research by identifying areas where improvements can be made. This can lead to more rigorous research methods and better-quality research.

- Informing decision-making: By evaluating research, we can make informed decisions based on the evidence. This is particularly important in fields such as medicine and public health, where decisions can have significant consequences.

- Advancing the field : Evaluating research can help advance the field by identifying new research questions and areas of inquiry. This can lead to the development of new theories and the refinement of existing ones.

Limitations of Evaluating Research

Limitations of Evaluating Research are as follows:

- Time-consuming: Evaluating research can be time-consuming, particularly if the study is complex or requires specialized knowledge. This can be a barrier for individuals who are not experts in the field or who have limited time.

- Subjectivity : Evaluating research can be subjective, as different individuals may have different interpretations of the same study. This can lead to inconsistencies in the evaluation process and make it difficult to compare studies.

- Limited generalizability: The findings of a study may not be generalizable to other populations or contexts. This limits the usefulness of the study and may make it difficult to apply the findings to other settings.

- Publication bias: Research that does not find significant results may be less likely to be published, which can create a bias in the published literature. This can limit the amount of information available for evaluation.

- Lack of transparency: Some studies may not provide enough detail about their methods or results, making it difficult to evaluate their quality or validity.

- Funding bias : Research funded by particular organizations or industries may be biased towards the interests of the funder. This can influence the study design, methods, and interpretation of results.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


Working with Data


Evaluating Data Sources

Remember that all data is gathered by people who make decisions about what to collect. A good way to evaluate a dataset is to look at the data's source. Generally, data from non-profit or governmental organizations is reliable. Data from private sources or data collection firms should be examined to determine its suitability for study. Here are some questions you can ask of a dataset:

- Who gathered it? A group of researchers, a corporation, a government agency?

- For what purpose was it gathered? Was it gathered to answer a specific question? Or perhaps to prove a specific observation? You cannot ask questions of a dataset that it cannot answer, so carefully consider whether the data you have found is relevant to your research question.

- What decisions did they make about the dataset? These could be data cleaning decisions, choices about which data to publish, or something else. Decisions already made will affect what you're able to do with the data.

- Are you allowed to reuse it? If so, are there privacy or ethical considerations? See the Ethics in Data Use section below.

The answers to these questions can often be found in data documentation or by web searching.


Ethics in Data Use

Ethical data use involves keeping an eye on privacy and reuse restrictions and interrogating how and why data was collected.

Privacy and reuse

Data can include information that is potentially harmful if made public. For example, if a social scientist collects information from people addicted to drugs and shares that information without appropriately anonymizing the dataset, that could affect someone's ability to get a loan or a job, or cause family issues. Ethical data use almost always includes anonymizing data or otherwise limiting these risks. Similarly, if reusing data that contains potentially harmful information, think about what you might be able to omit from your analysis to protect privacy.
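As one possible first step toward protecting privacy before sharing or reusing a dataset, the sketch below drops direct identifiers and replaces participant IDs with salted hashes. The field names (`name`, `email`, `phone`, `participant_id`) and the function `pseudonymize` are hypothetical. Note that this is pseudonymization rather than full anonymization: combinations of remaining fields (such as age and postcode) may still identify someone.

```python
import hashlib
import secrets

# Hypothetical field names; adjust to the dataset at hand.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}

def pseudonymize(records, id_field="participant_id", salt=None):
    """Return copies of records without direct identifiers and with hashed IDs."""
    # A secret salt makes it harder to reverse the hash by guessing IDs.
    salt = salt if salt is not None else secrets.token_hex(16)
    cleaned = []
    for record in records:
        kept = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
        raw_id = f"{salt}:{record[id_field]}".encode("utf-8")
        kept[id_field] = hashlib.sha256(raw_id).hexdigest()[:12]
        cleaned.append(kept)
    return cleaned

records = [
    {"participant_id": 1, "name": "A. Example", "email": "a@example.org", "response": "yes"},
    {"participant_id": 2, "name": "B. Example", "email": "b@example.org", "response": "no"},
]

# A fixed salt is used here only so the demo is repeatable.
cleaned = pseudonymize(records, salt="demo-salt")
for row in cleaned:
    print(row)
```

The original records are left untouched, so the identifying version can be stored separately under stricter access controls or destroyed.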

Data collection

Remember that data is only as good as its collection methods, and interrogate why data was collected in a certain way. Do you notice certain groups or factors are conspicuously missing? Could the data collection method have violated privacy?


- Last Updated: Mar 20, 2024 10:13 AM

- URL: https://guides.lib.udel.edu/datalit

Evaluation Research Design: Examples, Methods & Types

As you engage in tasks, you will need to take intermittent breaks to determine how much progress has been made and if any changes need to be effected along the way. This is very similar to what organizations do when they carry out evaluation research.

The evaluation research methodology has become one of the most important approaches for organizations as they strive to create products, services, and processes that speak to the needs of target users. In this article, we will show you how your organization can conduct successful evaluation research using Formplus.

What is Evaluation Research?

Also known as program evaluation, evaluation research is a common research design that entails carrying out a structured assessment of the value of resources committed to a project or specific goal. It often adopts social research methods to gather and analyze useful information about organizational processes and products.

As a type of applied research, evaluation research is typically associated with real-life scenarios within organizational contexts. This means that the researcher will need to leverage common workplace skills, including interpersonal skills and teamwork, to arrive at objective research findings that will be useful to stakeholders.

Characteristics of Evaluation Research

- Research Environment: Evaluation research is conducted in the real world; that is, within the context of an organization.

- Research Focus: Evaluation research is primarily concerned with measuring the outcomes of a process rather than the process itself.

- Research Outcome: Evaluation research is employed for strategic decision making in organizations.

- Research Goal: The goal of program evaluation is to determine whether a process has yielded the desired result(s).

- This type of research protects the interests of stakeholders in the organization.

- It often represents a middle-ground between pure and applied research.

- Evaluation research is both detailed and continuous. It pays attention to performative processes rather than descriptions.

- Research Process: This research design utilizes qualitative and quantitative research methods to gather relevant data about a product or action-based strategy. These methods include observation, tests, and surveys.

Types of Evaluation Research

The Encyclopedia of Evaluation (Mathison, 2004) treats forty-two different evaluation approaches and models ranging from “appreciative inquiry” to “connoisseurship” to “transformative evaluation”. Common types of evaluation research include the following:

- Formative Evaluation

Formative evaluation or baseline survey is a type of evaluation research that involves assessing the needs of the users or target market before embarking on a project. Formative evaluation is the starting point of evaluation research because it sets the tone of the organization’s project and provides useful insights for other types of evaluation.

- Mid-term Evaluation

Mid-term evaluation entails assessing how far a project has come and determining if it is in line with the set goals and objectives. Mid-term reviews allow the organization to determine if a change or modification of the implementation strategy is necessary, and it also serves for tracking the project.

- Summative Evaluation

This type of evaluation is also known as end-term evaluation or project-completion evaluation, and it is conducted immediately after the completion of a project. Here, the researcher examines the value and outputs of the program within the context of the projected results.

Summative evaluation allows the organization to measure the degree of success of a project. Such results can be shared with stakeholders, target markets, and prospective investors.

- Outcome Evaluation

Outcome evaluation is primarily target-audience oriented because it measures the effects of the project, program, or product on the users. This type of evaluation views the outcomes of the project through the lens of the target audience and it often measures changes such as knowledge-improvement, skill acquisition, and increased job efficiency.

- Appreciative Inquiry

Appreciative inquiry is a type of evaluation research that pays attention to result-producing approaches. It is predicated on the belief that an organization will grow in whatever direction its stakeholders pay primary attention to, such that if all the attention is focused on problems, identifying them would be easy.

In carrying out appreciative inquiry, the researcher identifies the factors directly responsible for the positive results realized in the course of a project, analyses the reasons for these results, and intensifies the utilization of these factors.

Evaluation Research Methodology

There are four major evaluation research methods, namely: output measurement, input measurement, impact assessment, and service quality.

- Output/Performance Measurement

Output measurement is a method employed in evaluative research that shows the results of an activity undertaken by an organization. In other words, performance measurement pays attention to the results achieved by the resources invested in a specific activity or organizational process.

More than investing resources in a project, organizations must be able to track the extent to which these resources have yielded results, and this is where performance measurement comes in. Output measurement allows organizations to pay attention to the effectiveness and impact of a process rather than just the process itself.

Other key indicators of performance measurement include user-satisfaction, organizational capacity, market penetration, and facility utilization. In carrying out performance measurement, organizations must identify the parameters that are relevant to the process in question, their industry, and the target markets.

5 Performance Evaluation Research Question Examples

- What is the cost-effectiveness of this project?

- What is the overall reach of this project?

- How would you rate the market penetration of this project?

- How accessible is the project?

- Is this project time-efficient?

- Input Measurement

In evaluation research, input measurement entails assessing the amount of resources committed to a project or goal in any organization. This is one of the most common indicators in evaluation research because it allows organizations to track their investments.

The most common indicator in input measurement is the budget, which allows organizations to evaluate and limit expenditure for a project. It is also important to measure non-monetary investments such as human capital (that is, the number of persons needed for successful project execution) and production capital.

5 Input Evaluation Research Question Examples

- What is the budget for this project?

- What is the timeline of this process?

- How many employees have been assigned to this project?

- Do we need to purchase new machinery for this project?

- How many third-parties are collaborators in this project?

- Impact/Outcomes Assessment

In impact assessment, the evaluation researcher focuses on how the product or project affects target markets, both directly and indirectly. Outcomes assessment is somewhat challenging because many times, it is difficult to measure the real-time value and benefits of a project for the users.

In assessing the impact of a process, the evaluation researcher must pay attention to the improvement recorded by the users as a result of the process or project in question. Hence, it makes sense to focus on cognitive and affective changes, expectation-satisfaction, and similar accomplishments of the users.

5 Impact Evaluation Research Question Examples

- How has this project affected you?

- Has this process affected you positively or negatively?

- What role did this project play in improving your earning power?

- On a scale of 1-10, how excited are you about this project?

- How has this project improved your mental health?

- Service Quality

Service quality is the evaluation research method that accounts for any differences between the expectations of the target markets and their impression of the undertaken project. Hence, it pays attention to the overall service quality assessment carried out by the users.

It is not uncommon for organizations to build the expectations of target markets as they embark on specific projects. Service quality evaluation allows these organizations to track the extent to which the actual product or service delivery fulfils the expectations.
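The difference between expectations and impressions described above can be expressed as a simple gap score: the average perception rating minus the average expectation rating for each service dimension. The sketch below is one way to compute it; the dimension names, the 1-10 ratings, and the function `gap_scores` are hypothetical.

```python
from statistics import mean

def gap_scores(expectations, perceptions):
    """Return, per dimension, mean perception minus mean expectation."""
    return {
        dimension: mean(perceptions[dimension]) - mean(expectations[dimension])
        for dimension in expectations
    }

# Hypothetical 1-10 ratings collected before and after service delivery.
expectations = {"responsiveness": [9, 8, 9], "reliability": [8, 8, 8]}
perceptions = {"responsiveness": [6, 7, 8], "reliability": [9, 8, 10]}

gaps = gap_scores(expectations, perceptions)
for dimension, gap in gaps.items():
    status = "exceeds expectations" if gap > 0 else "falls short" if gap < 0 else "meets expectations"
    print(f"{dimension}: gap = {gap:+.2f} ({status})")
```

A negative gap flags a dimension where delivery fell short of what users expected, which is where follow-up questions are likely to be most useful.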

5 Service Quality Evaluation Questions

- On a scale of 1-10, how satisfied are you with the product?

- How helpful was our customer service representative?

- How satisfied are you with the quality of service?

- How long did it take to resolve the issue at hand?

- How likely are you to recommend us to your network?

Uses of Evaluation Research

- Evaluation research is used by organizations to measure the effectiveness of activities and identify areas needing improvement. Findings from evaluation research are key to project and product advancements and are very influential in helping organizations realize their goals efficiently.

- The findings arrived at from evaluation research serve as evidence of the impact of the project embarked on by an organization. This information can be presented to stakeholders, customers, and can also help your organization secure investments for future projects.

- Evaluation research helps organizations to justify their use of limited resources and choose the best alternatives.

- It is also useful in pragmatic goal setting and realization.

- Evaluation research provides detailed insights into projects embarked on by an organization. Essentially, it allows all stakeholders to understand multiple dimensions of a process, and to determine strengths and weaknesses.

- Evaluation research also plays a major role in helping organizations to improve their overall practice and service delivery. This research design allows organizations to weigh existing processes through feedback provided by stakeholders, and this informs better decision making.

- Evaluation research is also instrumental to sustainable capacity building. It helps you to analyze demand patterns and determine whether your organization requires more funds, upskilling or improved operations.

Data Collection Techniques Used in Evaluation Research

In gathering useful data for evaluation research, the researcher often combines quantitative and qualitative research methods. Qualitative research methods allow the researcher to gather information relating to intangible values such as market satisfaction and perception.

On the other hand, quantitative methods are used by the evaluation researcher to assess numerical patterns, that is, quantifiable data. These methods help you measure impact and results; although they may not serve for understanding the context of the process.

Quantitative Methods for Evaluation Research

- Surveys

A survey is a quantitative method that allows you to gather information about a project from a specific group of people. Surveys are largely context-based and limited to target groups who are asked a set of structured questions in line with the predetermined context.

Surveys usually consist of close-ended questions that allow the evaluative researcher to gain insight into several variables including market coverage and customer preferences. Surveys can be carried out physically using paper forms or online through data-gathering platforms like Formplus.

- Questionnaires

A questionnaire is a common quantitative research instrument deployed in evaluation research. Typically, it is an aggregation of different types of questions or prompts which help the researcher to obtain valuable information from respondents.

- Polls

A poll is a common method of opinion sampling that allows you to gauge how the public perceives issues that affect them. Polls can be conducted conveniently online using platforms like Formplus.

Polls are often structured as Likert questions, and the options provided usually account for neutrality or indecision. Conducting a poll allows the evaluation researcher to understand the extent to which the product or service satisfies the needs of the users.
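Responses to a Likert-style poll like the one described above are ordinal, so a frequency distribution and the median are usually safer summaries than a plain average. The sketch below shows one way to tabulate them; the five-point labels, the sample responses, and the function `summarize_likert` are illustrative assumptions.

```python
from collections import Counter
from statistics import median

# Assumed five-point scale with a neutral midpoint.
LIKERT_LABELS = {
    1: "Strongly disagree",
    2: "Disagree",
    3: "Neutral",
    4: "Agree",
    5: "Strongly agree",
}

def summarize_likert(responses):
    """Return the response count, median score, and share of each option."""
    if not responses:
        raise ValueError("no responses to summarize")
    if any(score not in LIKERT_LABELS for score in responses):
        raise ValueError("responses must be integers from 1 to 5")
    counts = Counter(responses)
    n = len(responses)
    shares = {label: counts.get(score, 0) / n for score, label in LIKERT_LABELS.items()}
    return {"n": n, "median": median(responses), "shares": shares}

# Hypothetical poll responses.
responses = [5, 4, 4, 3, 2, 4, 5, 3, 4, 1]
summary = summarize_likert(responses)

print(f"n = {summary['n']}, median = {summary['median']}")
for label, share in summary["shares"].items():
    print(f"{label:>17}: {share:.0%}")
```

Reporting the share of "Neutral" answers separately keeps indecision visible instead of letting it disappear into an average.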

Qualitative Methods for Evaluation Research

- One-on-One Interview

An interview is a structured conversation involving two participants; usually the researcher and the user or a member of the target market. One-on-One interviews can be conducted physically, via the telephone and through video conferencing apps like Zoom and Google Meet.

- Focus Groups

A focus group is a research method that involves interacting with a limited number of persons within your target market, who can provide insights on market perceptions and new products.

- Qualitative Observation

Qualitative observation is a research method that allows the evaluation researcher to gather useful information from the target audience through a variety of subjective approaches. This method is more in-depth than quantitative observation because it deals with a smaller sample size and relies on inductive analysis.

- Case Studies

A case study is a research method that helps the researcher to gain a better understanding of a subject or process. Case studies involve in-depth research into a given subject, to understand its functionalities and successes.

How to Use Formplus Online Form Builder for an Evaluation Survey

- Sign into Formplus

In the Formplus builder, you can easily create your evaluation survey by dragging and dropping preferred fields into your form. To access the Formplus builder, you will need to create an account on Formplus.

Once you do this, sign in to your account and click on “Create Form” to begin.

- Edit Form Title

Click on the field provided to input your form title, for example, “Evaluation Research Survey”.

Click on the edit button to edit the form.

Add Fields: Drag and drop preferred form fields into your form in the Formplus builder inputs column. There are several field input options for surveys in the Formplus builder.

Edit fields

Click on “Save”

Preview form.

- Form Customization

With the form customization options in the form builder, you can easily change the outlook of your form and make it more unique and personalized. Formplus allows you to change your form theme, add background images, and even change the font according to your needs.

- Multiple Sharing Options

Formplus offers multiple form-sharing options which enable you to easily share your evaluation survey with survey respondents. You can use the direct social media sharing buttons to share your form link to your organization’s social media pages.

You can send out your survey form as email invitations to your research subjects too. If you wish, you can share your form’s QR code or embed it on your organization’s website for easy access.

Conclusion

Conducting evaluation research allows organizations to determine the effectiveness of their activities at different phases. This type of research can be carried out using qualitative and quantitative data collection methods including focus groups, observation, telephone and one-on-one interviews, and surveys.

Online surveys created and administered via data collection platforms like Formplus make it easier for you to gather and process information during evaluation research. With Formplus's multiple form-sharing options, it is even easier for you to gather useful data from target markets.


- busayo.longe


Evaluative Research: What It Is and Why It Matters

Evaluative research can shed light on your product's performance and how well it aligns with users' needs. From the early stages of development until after your product is launched, this research method can help bring user insights to the forefront.

In this comprehensive guide, we will demystify evaluative research, delving into its significance, comparing it with its counterpart, generative research, and highlighting when and how to use it.

We will also explore some of the most widely used tools and methods in evaluative research such as usability testing, A/B testing, tree testing, closed card sorting, and user surveys.

Why is evaluative research important?

Evaluative research, also referred to as evaluation research, primarily focuses on assessing the performance, usability, and effectiveness of your existing product or service. Its importance stems from its capacity to bring the user's voice into the decision-making process.

It allows you to understand how users interact with your product, what their pain points are, and what they like or dislike about it, which makes it a key element of a UX research strategy.

By providing this feedback, evaluative research can help you prevent costly design mistakes, improve user satisfaction, and increase the overall success of a product.

Furthermore, evaluative research is important for understanding the context in which your product or service is used. It offers insights into the environments, motivations, and behaviors of users, all of which can influence the design and functionality of the product.

Evaluative research can also guide decision-making and prioritization by showing which areas require immediate attention, making it an important part of your user research process.

Evaluative vs. generative research

While both evaluative and generative research are integral to product development, they serve different purposes and are conducted at different stages of the product life cycle.

Generative research, also known as exploratory or formative research, is typically conducted in the early stages of product development. Its main purpose is to generate ideas, concepts, and directions for a new product or service.

This type of research seeks to understand user needs, behaviors, and motivations, often using techniques such as user interviews, ethnographic field studies, and contextual inquiry.

On the other hand, evaluative research is usually performed at later stages of product development, often once a product or feature is already in place. It focuses on evaluating the usability and effectiveness of the product or service, identifying potential issues and areas for improvement.

While generative research is more qualitative and exploratory, evaluative research tends to be more quantitative and focused, employing methods such as usability testing, surveys, and A/B testing.

However, both types of research are vital for the success of your product, complementing each other in providing a comprehensive understanding of user behavior and product performance.

When should you conduct evaluative research?

The timing of evaluative research depends on the product life cycle and the specific needs and goals of the project.

However, it's typically conducted at several points:

Prototype stage

You can carry out evaluative research as soon as a functional prototype is available. It can help you assess if the design is moving in the right direction and whether users can accomplish key tasks. The issues you identify at this stage can be relatively inexpensive to fix compared to later stages.

Pre-launch stage

You can conduct evaluative research prior to launching a product or a major update to ensure there are no critical usability issues that might negatively affect user adoption.

Post-launch stage

Even after a product has been launched, you should conduct evaluative research periodically. This allows you to monitor user satisfaction, understand how your product is being used in real-world scenarios, and identify areas for further enhancement.

One factor that is sometimes overlooked post-launch is the language used for labels, features, and descriptions within a product. A short product copy clarity survey can help you assess whether the wording you use makes sense to your users.

Evaluative research tools and methods

There are several tools and methods used in evaluative research, each with its unique benefits and appropriate scenarios for use. Here we discuss five main methods: surveys, usability testing, A/B testing, tree testing, and closed card sorting.

Surveys

Owing to their versatile nature, user surveys can serve multiple functions in evaluative research.

They are used to gather both quantitative and qualitative data, facilitating the analysis of user behaviors, perceptions, and experiences with a product or service, as well as the creation of user personas.

Surveys allow you to collect a large amount of data from a broad spectrum of users, including demographic information, user satisfaction, self-reported usage behaviors, and specific feedback on product features.

The resulting data can inform decisions around product improvement, usability, and the overall user experience.

When using a survey tool, you can opt for standardized surveys, such as the widely used Net Promoter Score (NPS), or create your own templates with questions that suit your needs.
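As a rough illustration of how a standardized survey turns into a number, the sketch below computes a Net Promoter Score from 0 to 10 ratings using the usual convention: the percentage of promoters (9 or 10) minus the percentage of detractors (0 to 6). The function name and the sample ratings are made up for this example and do not come from any particular survey tool.

```python
def net_promoter_score(ratings):
    """Return the NPS (between -100 and 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    promoters = sum(1 for r in ratings if r >= 9)    # scores of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)   # scores of 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses: 5 promoters, 3 passives, 2 detractors.
sample_ratings = [10, 9, 8, 7, 6, 10, 3, 9, 8, 10]
print(net_promoter_score(sample_ratings))  # 30.0
```

Because passives dilute the score without moving it in either direction, two products with the same NPS can still have quite different rating distributions, so it can be worth looking at the raw counts as well.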

You can also use surveys post-interaction to capture immediate user responses and identify potential problems or areas for improvement. A short post-purchase survey, for instance, lets you gauge user satisfaction with your checkout process.

Surveys can also be used to perform longitudinal studies, tracking changes in user responses over time. This can provide valuable insight into how product changes or updates are affecting the user experience.

Usability testing

Usability testing involves observing users as they engage with your product, often while they complete specified tasks.

It allows you to identify any difficulties or stumbling blocks users face, making it a robust tool for uncovering usability issues.

You can apply usability testing at various stages of product development, from early prototypes to released products.

When used early, it can help you catch and rectify issues before a product goes to market, potentially saving time and resources. In the case of existing products, usability testing provides invaluable insights into areas needing improvement, offering a roadmap for updates or new features.

Additionally, usability testing can provide you with context and a deeper understanding of quantitative data.

For example, if analytics data shows users are dropping off at a specific point in a digital product, usability testing could reveal why this is happening.

A/B testing

A/B testing consists, in short, of comparing two versions of a product or feature to determine which performs better.

Each version is randomly shown to a subset of users, and their behavior is tracked. The version that achieves better results—as determined by predefined metrics such as conversion rates, time spent on a page, or click-through rates—is typically considered the more effective design.

The primary advantage of A/B testing is that it produces quantifiable, data-driven results. Unlike methods that can rely on subjective interpretation, A/B testing measures user behavior directly against predefined metrics, which makes decisions simpler and more precise, provided the sample is large enough for the observed difference to be statistically meaningful.

This form of testing is particularly beneficial for fine-tuning product designs and optimizing user experience. Whether it's deciding on a color scheme, the positioning of a call-to-action button, or the content of a landing page, A/B testing gives you direct insight into what design or content resonates best with your user base, leading to more effective design choices and, ultimately, better business outcomes.
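To make the comparison of two versions concrete, here is a minimal sketch of one common way to check whether a difference in conversion rates is likely to be more than noise: a two-sided, two-proportion z-test. This is only one of several reasonable approaches, and the function name and visitor counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of versions A and B.

    conv_*: number of users who converted; n_*: users shown that version.
    Returns (z statistic, two-sided p-value).
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both versions convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("cannot test when no one or everyone converted")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical experiment: 1,000 users per version.
z, p = two_proportion_z_test(conv_a=100, n_a=1000, conv_b=150, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference is unlikely to be due to chance alone. The threshold to act on (0.05 is a common choice) should be set before the test starts, along with the metric and the sample size.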

Watch this short video for tips on how to incorporate A/B testing into your user research:

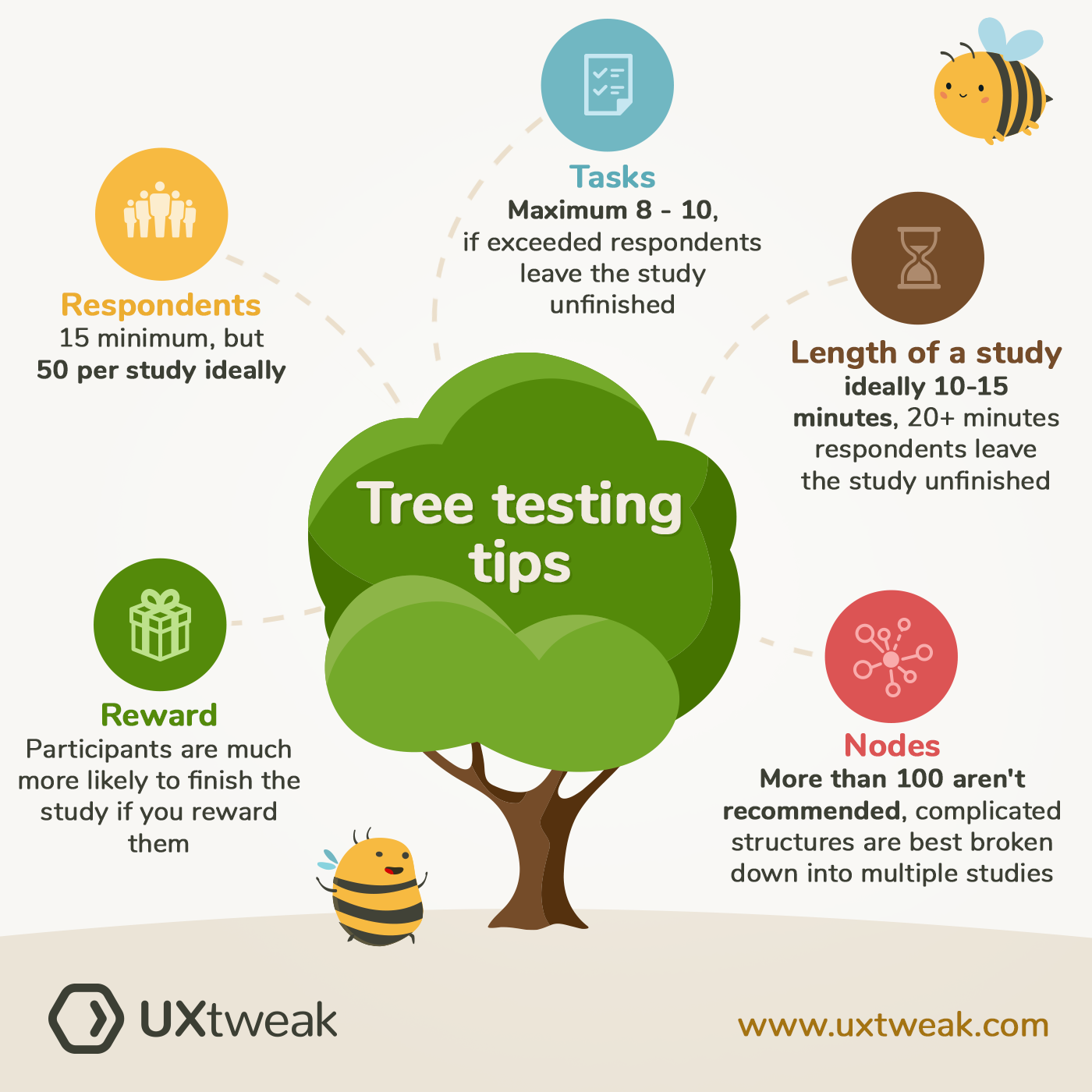

Tree testing

Tree testing can help you understand how well users can find items within a product's structure, essentially testing the "findability" of information.

In a tree test, users are presented with a simplified version of the product's structure, often represented as a text-based tree. They are then given tasks that require them to navigate this tree to find specific items.

Their journey through the tree, including the paths they take and any difficulties encountered, provides valuable insight into the effectiveness of the product's information architecture.

Evaluative research through tree testing can highlight potential issues such as confusing category names, poorly structured paths, or misaligned user expectations about where to find certain items.
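The sketch below shows one way tree test results might be summarized, assuming each attempt is recorded as the ordered list of nodes a participant visited plus the correct target node. The record format, the names, and the definition of a "direct" success (no node visited twice, meaning no backtracking) are assumptions made for illustration, not the output format of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class TreeTestAttempt:
    """One participant's attempt at one task (hypothetical record format)."""
    path: list    # labels of the nodes visited, in order
    target: str   # label of the node that counts as the correct answer


def summarize_attempts(attempts):
    """Return success and direct-success rates for a list of attempts."""
    if not attempts:
        raise ValueError("attempts must not be empty")
    # An attempt succeeds when the last node visited is the target.
    successes = [a for a in attempts if a.path and a.path[-1] == a.target]
    # Treat a success as direct when no node appears twice in the path,
    # i.e. the participant never backtracked.
    direct = [a for a in successes if len(set(a.path)) == len(a.path)]
    total = len(attempts)
    return {
        "success_rate": len(successes) / total,
        "direct_success_rate": len(direct) / total,
    }


attempts = [
    TreeTestAttempt(["Home", "Account", "Billing"], "Billing"),
    TreeTestAttempt(["Home", "Help", "Home", "Account", "Billing"], "Billing"),
    TreeTestAttempt(["Home", "Help", "Contact"], "Billing"),
    TreeTestAttempt(["Home", "Account", "Billing"], "Billing"),
]
print(summarize_attempts(attempts))
```

A large gap between the overall and direct success rates hints that users eventually find the item but only after wandering, which often points to a misleading category name higher up the tree.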

Closed card sorting

Closed card sorting involves users organizing items into pre-existing categories, thereby shedding light on how they perceive and classify information.

In a closed card sort, participants are given a set of cards, each labeled with a topic or feature, and a list of category names. The task for the users is to sort these cards into the provided categories in a way that makes sense to them.

As users engage in this activity, researchers can gather insights into how they group information and understand their logic and reasoning.

This type of research can identify patterns in how users categorize information, highlight inconsistencies or confusion in the current categorization or labeling, and suggest improvements.
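As a minimal sketch of how such patterns could be surfaced, the code below takes each participant's sort as a mapping from card to chosen category and reports, for every card, the most popular category and the share of participants who picked it. The data layout, card names, and category names are hypothetical.

```python
from collections import Counter, defaultdict


def card_agreement(sorts):
    """Summarize a closed card sort.

    sorts: list of dicts, one per participant, mapping card -> category.
    Returns a dict mapping card -> (most common category, agreement share).
    """
    placements = defaultdict(Counter)
    for sort in sorts:
        for card, category in sort.items():
            placements[card][category] += 1

    summary = {}
    for card, counts in placements.items():
        top_category, votes = counts.most_common(1)[0]
        summary[card] = (top_category, votes / sum(counts.values()))
    return summary


# Hypothetical results from three participants.
sorts = [
    {"Invoices": "Billing", "Password reset": "Account"},
    {"Invoices": "Billing", "Password reset": "Support"},
    {"Invoices": "Billing", "Password reset": "Account"},
]
for card, (category, share) in card_agreement(sorts).items():
    print(f"{card}: {category} ({share:.0%} agreement)")
```

Cards with low agreement are the ones worth a closer look: the label may be ambiguous, or the provided categories may not match how users think about that item.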

Boost your evaluative research with surveys

Evaluative research plays a vital role in the product development cycle. While it is typically associated with later stages of product development, it can and should be conducted at various points to ensure the product's continued success.

Surveys, one of the key tools used in evaluative research, can turn a spotlight on areas of your product that users love, as well as highlight those that could benefit from refinement or improvement.

With Survicate, you can keep your finger on the pulse of user feedback and maintain a competitive edge. Simply sign up for free, integrate surveys into your evaluative research, and watch as they provide the fuel you need to drive your product's success to new heights.


What is evaluation? And how does it differ from research? by Dana Wanzer

Hi! I’m Dana Wanzer, doctoral candidate at Claremont Graduate University and an avid #EvalTwitter user!

Many people new to evaluation—students and clients alike—struggle with understanding what evaluation is, and many evaluators struggle with how to communicate evaluation to others. This issue is particularly difficult when evaluation is so similar to related fields like auditing and research.

There are many great resources on what evaluation is and how it differs from research, including John LaVelle’s AEA365 blog post, Sandra Mathison’s book chapter, and Patricia Rogers’ Better Evaluation blog post. I wanted to examine these findings in more depth, so I conducted a study with AEA and AERA members to see how evaluators and researchers defined program evaluation and differentiated evaluation from research.

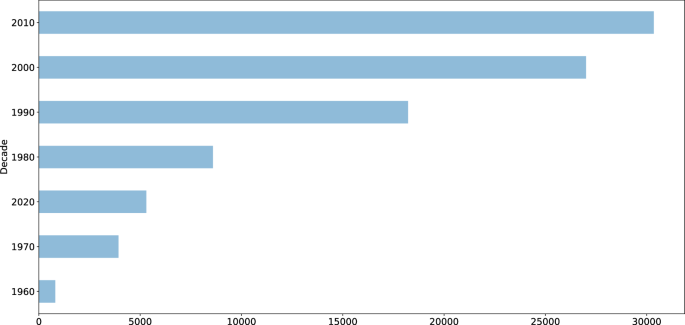

In this study, I recruited members of AEA (who were primarily members of the PreK-12 Educational Evaluation and Youth-Focused Evaluation TIGs) and members of Division H (Research, Evaluation, and Assessment in Schools) of the American Educational Research Association (AERA). A total of 522 participants completed the survey, which, among other questions, asked them to define evaluation, choose which of several diagrams matched their definition of evaluation, and rate how much evaluation and research differ across a variety of study areas (e.g., purpose, audience, design, methods, drawing conclusions, reporting results).

Lesson Learned: Evaluators and researchers alike mostly define evaluation along the lines of Scriven’s definition: determining the merit, significance, or worth of something, which essentially means coming to a value judgment. However, many evaluators also think evaluation is about learning, informing decision making, and improving programming, which points to the purpose of evaluation beyond simply the process.

Lesson Learned: Mathison described five ways in which evaluation and research could be related:

Half of participants thought research and evaluation overlap like a Venn diagram, which is similar to the hourglass model from LaVelle’s blog post, and a third thought evaluation is a sub-component of research. However, evaluators were more likely to think research and evaluation intersect whereas researchers were more likely to think evaluation is a sub-component of research. Evaluators are seeing greater distinction between evaluation and research than researchers are!

Lesson Learned: Participants agreed that evaluation differs most from research in purpose, audience, providing recommendations, disseminating results, and generalizing results, and that the two are most similar in study designs, methods, and analyses. However, compared to researchers, more evaluators thought evaluation and research differed greatly across a multitude of study-related factors like these.