A Framework for Lesson Planning

Using learning intentions and success criteria can help teachers ensure that their activities align with what they want students to know.

As an instructional coach, I collaborate with nearly 65 teachers at an urban high school. My goal is to support teachers of many subjects in embedding literacy in their lessons without disrupting their classroom objectives.

I often work with our novice teachers and student teachers by reviewing their lesson plans and recommending literacy skills that reinforce their learning intentions and success criteria, which are defined by Douglas Fisher and Nancy Frey as “what you want students to know and be able to do by the end of one or more lessons.” Without learning intentions and success criteria, they write, “lessons wander and students become confused and frustrated.”

When I ask new teachers to tell me the purpose of their lessons, they often describe the activities they’ve created. For example, recently I was collaborating with a student teacher who was eager to teach the Bill of Rights to her freshmen. She began our conversation by explaining that she was going to read real-life scenarios with differing perspectives and ask students to move to the front of the classroom if they agreed with a particular scenario or to the back of the classroom if they disagreed. Afterward, she would ask students to explain their decisions.

Her excitement was palpable. She showed me the scenarios she had written, the “Agree” and “Disagree” signs she had created, and the worksheet she had designed so that students could brainstorm their own Bill of Rights.

When she finished, I commended her on the work she had done. Clearly, she had thought about the activity in detail. Next I asked her about the point of the lesson—what she wanted students to get out of it.

What she wanted—for her students to know what the Bill of Rights is, where to find it, why it’s important, and why we still need it today—was not actually conveyed by the activity. She hadn’t written a learning intention and the accompanying success criteria yet because she had been so excited to refine her activity. Without them, however, all she had was an activity—one that was not aligned with her goals for the day.

Learning Intentions and Success Criteria

Crafting a quality learning intention takes planning. Often, teachers will use an activity as their learning intention—but a learning intention goes beyond an activity. It focuses on the goal of the learning—the thing we want our students to know and do. The learning intention helps students stay focused and involved.

It’s important to create the learning intention first, and then determine the success criteria that students can use to assess their understanding—and then create the activity and some open-ended questions that help students learn.

When I was working with the teacher on her Bill of Rights lesson, we took a step back to develop the learning intention and its success criteria. The learning intention was this: “I can explain the Bill of Rights, its purpose, and its relevance to my life.” The success criteria were built around students’ ability to annotate and paraphrase the Bill of Rights, and to explain its importance, both in general and in their own lives. Annotating, paraphrasing, and analyzing are skills that are based on ACT College and Career Readiness Standards and Common Core State Standards, and they could be seamlessly incorporated into the lesson with minimal effort.

Learning intentions and success criteria are valuable across all subjects. In algebra, for example, a learning intention might be “I can understand the structure of a coordinate grid and relate the procedure of plotting points in quadrants to the structure of a coordinate grid.” The success criteria for this intention could be that students can talk and write about that procedure, using the correct vocabulary; that they can plot and label points in each quadrant on a coordinate grid; and that they can create a rule about coordinates for each quadrant.

In environmental science, if the learning intention is “I can recognize the history, interactions, and trends of climate change,” the success criteria could be that students are able to locate credible research about the history of climate change and share their research with their peers, that they can demonstrate the interactions of climate change and explain the value of those interactions, and that they can show the trends of climate change utilizing a graph and explain the value of the trends.

A Way to Focus Lesson Planning

Although engaging students in their learning is certainly necessary, the student teacher I was working with became acutely aware of the value of the skills she was attempting to help students develop and why those skills—not the activity—should drive instruction.

During her next class, she posted the learning intention and success criteria where students could readily see them. Next, she asked her students to paraphrase the success criteria, making sure they understood what they were about to do. She referred to the learning intention and success criteria several times throughout the lesson so that students could determine their own level of understanding and, if necessary, decide which skills they understood and which ones still needed support. She followed up with an exit ticket, asking students what they had learned in the lesson, how they learned it, and why learning it was important.

No matter what subject you teach, as you plan your instruction, ask yourself these questions:

- What do you want your students to know? Why is that important?

- Can they learn this information another way? How?

Only once you’ve thought through your answers should you begin writing your learning intention and success criteria. Keep the activities you’ve created—but don’t make them the center of the lesson or the goal of the lesson. Spend your time designing a learning intention and success criteria that will support your students’ learning and skills that they can apply to all facets of their academic life.

The English Classroom

A GUIDE FOR PRESERVICE AND GRADUATE TEACHERS

Learning Intentions

The Situation

Students need to understand the overall purpose of learning.

The Solution

Students always need to understand why the learning is important.

Learning Intention

A learning intention is a statement that summarises what a student should know, understand or be able to do at the end of a lesson or series of lessons. The purpose of a learning intention is to ensure that students understand the direction and purpose of the lesson. These statements are presented at the start of a lesson (something we call ‘visible learning’) and should be discussed with students throughout the lesson, or when necessary. They are used to summarise the learning; that is, we return to the learning intentions to evaluate whether students understand what they explored.

As mentioned, learning intentions are written with the stem: know, understand or be able to. For example:

- Know the definition of a metaphor.

- Understand how metaphors are used to create imagery in a text.

- Be able to identify three metaphors in a short poem.

As you can see, the stems are associated with levels of thinking. When sequencing your lessons, consider whether the skills students are learning are lower-order, such as knowing a definition, or higher-order, such as applying this knowledge to a text.

How to Present Learning Intention

The learning intentions should be presented on the whiteboard and/or PowerPoint, depending on the context of your classroom. Unpack the language in the statement. If a word is unfamiliar to a student, tell them why. For example: ‘Know the definition of a metaphor.’ This might be the first time students have come across the word metaphor. That is okay. Reinforce that you will be looking at this new vocabulary at the beginning of the lesson and that they can practice understanding the term later.

The language needs to be clear and direct, much like a SMART goal. Furthermore, it needs to be something that they can achieve based on their current skill level. The skill itself cannot be so complicated that they cannot obtain a rudimentary understanding from their prior knowledge.

When introducing the learning intention, quiz students on what they know. You may be surprised at what information they have already come across, or even how accurate their guesses can be. Use this prior knowledge to help guide their understanding of what they will learn.

Stop and reflect

After each activity, stop and pause. Ask students whether they feel they’re working towards the learning intention successfully. This will help you understand whether they are on the right track or you need to adjust your teaching.

Published by The English Classroom

A Step-by-Step Plan for Teaching Narrative Writing

July 29, 2018

Sponsored by Peergrade and Microsoft Class Notebook

This post contains Amazon Affiliate links. When you make a purchase through these links, Cult of Pedagogy gets a small percentage of the sale at no extra cost to you.

“Those who tell the stories rule the world.” This proverb, attributed to the Hopi Indians, is one I wish I’d known a long time ago, because I would have used it when teaching my students the craft of storytelling. With a well-told story we can help a person see things in an entirely new way. We can forge new relationships and strengthen the ones we already have. We can change a law, inspire a movement, make people care fiercely about things they’d never given a passing thought.

But when we study storytelling with our students, we forget all that. Or at least I did. When my students asked why we read novels and stories, and why we wrote personal narratives and fiction, my defense was pretty lame: I probably said something about the importance of having a shared body of knowledge, or about the enjoyment of losing yourself in a book, or about the benefits of having writing skills in general.

I forgot to talk about the power of story. I didn’t bother to tell them that the ability to tell a captivating story is one of the things that makes human beings extraordinary. It’s how we connect to each other. It’s something to celebrate, to study, to perfect. If we’re going to talk about how to teach students to write stories, we should start by thinking about why we tell stories at all. If we can pass that on to our students, then we will be going beyond a school assignment; we will be doing something transcendent.

Now. How do we get them to write those stories? I’m going to share the process I used for teaching narrative writing. I used this process with middle school students, but it would work with most age groups.

A Note About Form: Personal Narrative or Short Story?

When teaching narrative writing, many teachers separate personal narratives from short stories. In my own classroom, I tended to avoid having my students write short stories because personal narratives were more accessible. I could usually get students to write about something that really happened, while it was more challenging to get them to make something up from scratch.

In the “real” world of writers, though, the main thing that separates memoir from fiction is labeling: A writer might base a novel heavily on personal experiences, but write it all in third person and change the names of characters to protect the identities of people in real life. Another writer might create a short story in first person that reads like a personal narrative, but is entirely fictional. Just last weekend my husband and I watched the movie Lion and were glued to the screen the whole time, knowing it was based on a true story. James Frey’s book A Million Little Pieces sold millions of copies as a memoir but was later found to contain more than a little bit of fiction. Then there are unique books like Curtis Sittenfeld’s brilliant novel American Wife, based heavily on the early life of Laura Bush but written in first person, with fictional names and settings, and labeled as a work of fiction. The line between fact and fiction has always been really, really blurry, but the common thread running through all of it is good storytelling.

With that in mind, the process for teaching narrative writing can be exactly the same for writing personal narratives or short stories; it’s the same skill set. So if you think your students can handle the freedom, you might decide to let them choose personal narrative or fiction for a narrative writing assignment, or simply tell them that whether the story is true doesn’t matter, as long as they are telling a good story and they are not trying to pass off a fictional story as fact.

Here are some examples of what that kind of flexibility could allow:

- A student might tell a true story from their own experience, but write it as if it were a fiction piece, with fictional characters, in third person.

- A student might create a completely fictional story, but tell it in first person, which would give it the same feel as a personal narrative.

- A student might tell a true story that happened to someone else, but write it in first person, as if they were that person. For example, I could write about my grandmother’s experience of getting lost as a child, but I might write it in her voice.

If we aren’t too restrictive about what we call these pieces, and we talk about different possibilities with our students, we can end up with lots of interesting outcomes. Meanwhile, we’re still teaching students the craft of narrative writing.

A Note About Process: Write With Your Students

One of the most powerful techniques I used as a writing teacher was to do my students’ writing assignments with them. I would start my own draft at the same time as they did, composing “live” on the classroom projector, and doing a lot of thinking out loud so they could see all the decisions a writer has to make.

The most helpful parts for them to observe were the early drafting stage, where I just scratched out whatever came to me in messy, run-on sentences, and the revision stage, where I crossed things out, rearranged, and made tons of notes on my writing. I have seen over and over again how witnessing that process can really help to unlock a student’s understanding of how writing actually gets made.

A Narrative Writing Unit Plan

Before I get into these steps, I should note that there is no one right way to teach narrative writing, and plenty of accomplished teachers are doing it differently and getting great results. This just happens to be a process that has worked for me.

Step 1: Show Students That Stories Are Everywhere

Getting our students to tell stories should be easy. They hear and tell stories all the time. But when they actually have to put words on paper, they forget their storytelling abilities: They can’t think of a topic. They omit relevant details, but go on and on about irrelevant ones. Their dialogue is bland. They can’t figure out how to start. They can’t figure out how to end.

So the first step in getting good narrative writing from students is to help them see that they are already telling stories every day. They gather at lockers to talk about that thing that happened over the weekend. They sit at lunch and describe an argument they had with a sibling. Without even thinking about it, they begin sentences with “This one time…” and launch into stories about their earlier childhood experiences. Students are natural storytellers; learning how to do it well on paper is simply a matter of studying good models, then imitating what those writers do.

So start off the unit by getting students to tell their stories. In journal quick-writes, think-pair-shares, or by playing a game like Concentric Circles, prompt them to tell some of their own brief stories: A time they were embarrassed. A time they lost something. A time they didn’t get to do something they really wanted to do. By telling their own short anecdotes, they will grow more comfortable and confident in their storytelling abilities. They will also be generating a list of topic ideas. And by listening to the stories of their classmates, they will be adding onto that list and remembering more of their own stories.

And remember to tell some of your own. Besides being a good way to bond with students, sharing your stories will help them see more possibilities for the ones they can tell.

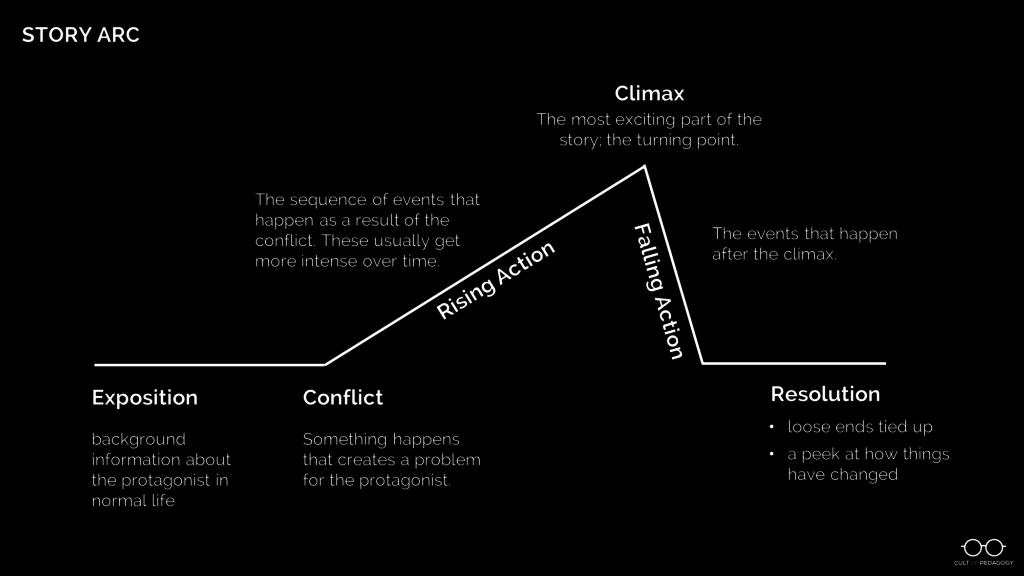

Step 2: Study the Structure of a Story

Now that students have a good library of their own personal stories pulled into short-term memory, shift your focus to a more formal study of what a story looks like.

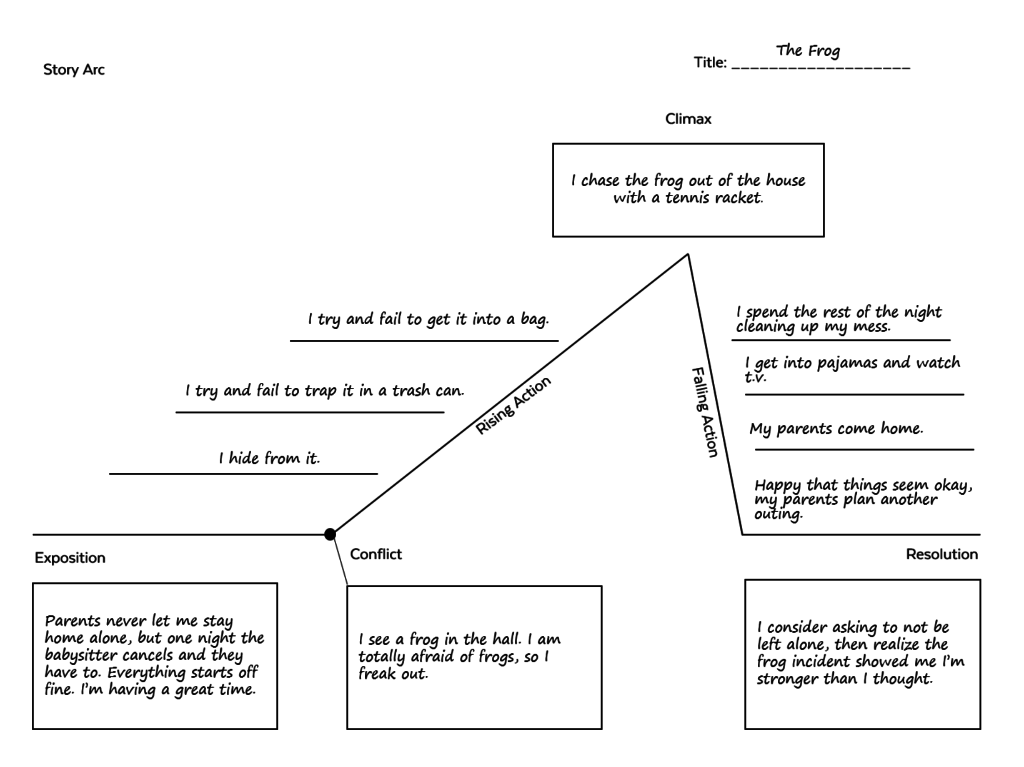

Use a diagram to show students a typical story arc like the one below. Then, using a simple story (try a video like The Present or Room), fill out the story arc with the components from that story. Once students have seen this story mapped out, have them try it with another one, like a story you’ve read in class, a whole novel, or another short video.

Step 3: Introduce the Assignment

Up to this point, students have been immersed in storytelling. Now give them specific instructions for what they are going to do. Share your assignment rubric so they understand the criteria that will be used to evaluate them; it should be ready and transparent right from the beginning of the unit. As always, I recommend using a single point rubric for this.

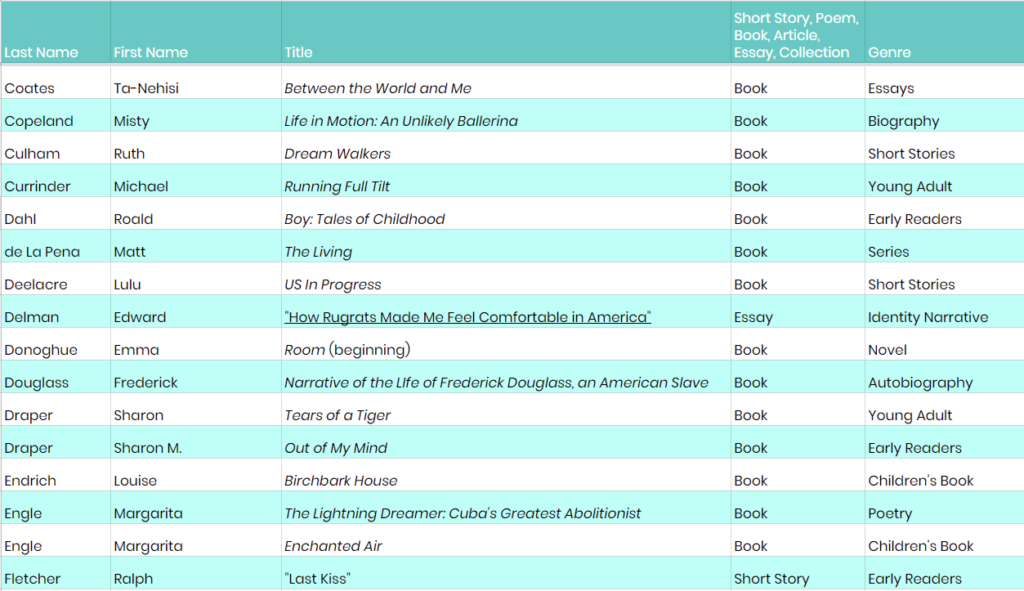

Step 4: Read Models

Once the parameters of the assignment have been explained, have students read at least one model story, a mentor text that exemplifies the qualities you’re looking for. This should be a story on a topic your students can kind of relate to, something they could see themselves writing. For my narrative writing unit (see the end of this post), I wrote a story called “Frog” about a 13-year-old girl who finally gets to stay home alone, then finds a frog in her house and gets completely freaked out, which basically ruins the fun she was planning for the night.

They will be reading this model as writers, looking at how the author shaped the text for a purpose, so that they can use those same strategies in their own writing. Have them look at your rubric and find places in the model that illustrate the qualities listed in the rubric. Then have them complete a story arc for the model so they can see the underlying structure.

Ideally, your students will have already read lots of different stories to look to as models. If that isn’t the case, this list of narrative texts recommended by Cult of Pedagogy followers on Twitter would be a good place to browse for titles that might be right for your students. Keep in mind that we have not read most of these stories, so be sure to read them first before adopting them for classroom use.

Step 5: Story Mapping

At this point, students will need to decide what they are going to write about. If they are stuck for a topic, have them just pick something they can write about, even if it’s not the most captivating story in the world. A skilled writer could tell a great story about deciding what to have for lunch. If they are using the skills of narrative writing, the topic isn’t as important as the execution.

Have students complete a basic story arc for their chosen topic using a diagram like the one below. This will help them make sure that they actually have a story to tell, with an identifiable problem, a sequence of events that build to a climax, and some kind of resolution, where something is different by the end. Again, if you are writing with your students, this would be an important step to model for them with your own story-in-progress.

Step 6: Quick Drafts

Now, have students get their chosen story down on paper as quickly as possible: This could be basically a long paragraph that would read almost like a summary, but it would contain all the major parts of the story. Model this step with your own story, so they can see that you are not shooting for perfection in any way. What you want is a working draft, a starting point, something to build on for later, rather than a blank page (or screen) to stare at.

Step 7: Plan the Pacing

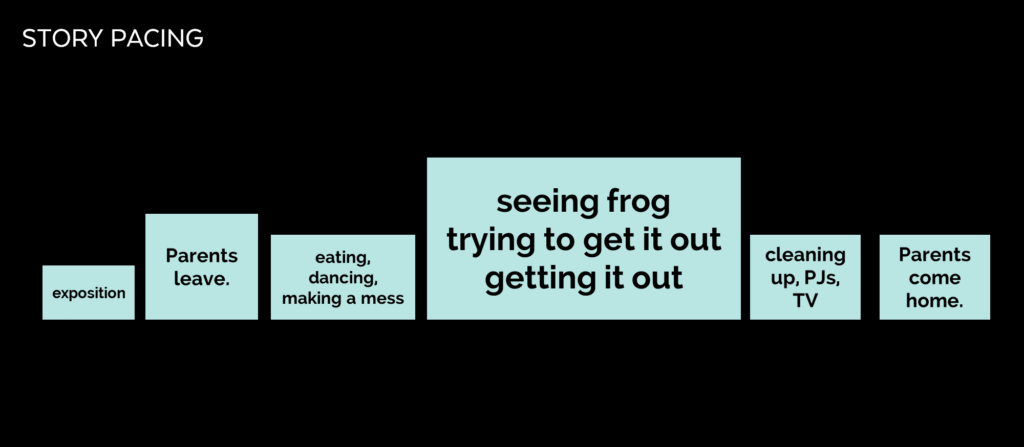

Now that the story has been born in raw form, students can begin to shape it. This would be a good time for a lesson on pacing, where students look at how writers expand some moments to create drama and shrink other moments so that the story doesn’t drag. Creating a diagram like the one below forces a writer to decide how much space to devote to all of the events in the story.

Step 8: Long Drafts

With a good plan in hand, students can now slow down and write a proper draft, expanding the sections of their story that they plan to really draw out and adding in more of the details that they left out in the quick draft.

Step 9: Workshop

Once students have a decent rough draft (something that has a basic beginning, middle, and end, with some discernible rising action, a climax of some kind, and a resolution), you’re ready to shift into full-on workshop mode. I would do this for at least a week: Start class with a short mini-lesson on some aspect of narrative writing craft, then give students the rest of the period to write, conference with you, and collaborate with their peers. During that time, they should focus some of their attention on applying the skill they learned in the mini-lesson to their drafts, so they will improve a little bit every day.

Topics for mini-lessons can include:

- How to weave exposition into your story so you don’t give readers an “information dump”

- How to carefully select dialogue to create good scenes, rather than quoting everything in a conversation

- How to punctuate and format dialogue so that it imitates the natural flow of a conversation

- How to describe things using sensory details and figurative language; also, what to describe…students too often give lots of irrelevant detail

- How to choose precise nouns and vivid verbs, use a variety of sentence lengths and structures, and add transitional words, phrases, and features to help the reader follow along

- How to start, end, and title a story

Step 10: Final Revisions and Edits

As the unit nears its end, students should be shifting away from revision, in which they alter the content of a piece, toward editing, where they make smaller changes to the mechanics of the writing. Make sure students understand the difference between the two: They should not be correcting each other’s spelling and punctuation in the early stages of this process, when the focus should be on shaping a better story.

One of the most effective strategies for revision and editing is to have students read their stories out loud. In the early stages, this will reveal places where information is missing or things get confusing. Later, more read-alouds will help them immediately find missing words, unintentional repetitions, and sentences that just “sound weird.” So get your students to read their work out loud frequently. It also helps to print stories on paper: For some reason, seeing the words in print helps us notice things we didn’t see on the screen.

To get the most from peer review, where students read and comment on each other’s work, more modeling from you is essential: Pull up a sample piece of writing and show students how to give specific feedback that helps, rather than simply writing “good detail” or “needs more detail,” the two comments I saw exchanged most often on students’ peer-reviewed papers.

Step 11: Final Copies and Publication

Once revision and peer review are done, students will hand in their final copies. If you don’t want to get stuck with 100-plus papers to grade, consider using Catlin Tucker’s station rotation model, which keeps all the grading in class. And when you do return stories with your own feedback, try using Kristy Louden’s delayed grade strategy, where students don’t see their final grade until they have read your written feedback.

Beyond the standard hand-in-for-a-grade, consider other ways to have students publish their stories. Here are some options:

- Stories could be published as individual pages on a collaborative website or blog.

- Students could create illustrated e-books out of their stories.

- Students could create a slideshow to accompany their stories and record them as digital storytelling videos. This could be done with a tool like Screencastify or Screencast-O-Matic.

So this is what worked for me. If you’ve struggled to get good stories from your students, try some or all of these techniques next time. I think you’ll find that all of your students have some pretty interesting stories to tell. Helping them tell their stories well is a gift that will serve them for many years after they leave your classroom. ♦

Want this unit ready-made?

If you’re a writing teacher in grades 7-12 and you’d like a classroom-ready unit like the one described above, including slideshow mini-lessons on 14 areas of narrative craft, a sample narrative piece, editable rubrics, and other supplemental materials to guide students through every stage of the process, take a look at my Narrative Writing unit. Just click on the image below and you’ll be taken to a page where you can read more and see a detailed preview of what’s included.

52 Comments

Wow, this is a wonderful guide! If my English teachers had taught this way, I’m sure I would have enjoyed narrative writing instead of dreading it. I’ll be able to use many of these suggestions when writing my blog! BrP

Last year I was so discouraged because the short stories looked like the quick drafts described in this article. I thought I had totally failed until I read this and realized I did not fail; I just needed to complete the process. Thank you!

I feel like you jumped in my head and connected my thoughts. I appreciate the time you took to stop and look closely at form. I really believe that student-writers should see all dimensions of narrative writing and be able to live in whichever style and voice they want for their work.

Can’t thank you enough for this. So well curated that one can just follow it blindly and ace at teaching it. Thanks again!

Great post! I especially liked your comments about reminding kids about the power of storytelling. My favourite podcasts and posts from you are always about how to do things in the classroom and I appreciate the research you do.

On a side note, the ice breakers are really handy. My kids know each other really well (rural community), and can tune out pretty quickly if there is nothing new to learn about their peers, but they like the games (and can remember where we stopped last time weeks later). I’ve started changing them up with ‘life questions’, so the editable version is great!

I love writing with my students and loved this podcast! A fun extension to this narrative is to challenge students to write another story about the same event, but use the perspective of another “character” from the story. Books like Wonder (R.J. Palacio) and Wanderer (Sharon Creech) can model the concept for students.

Thank you for your great efforts to reveal the practical writing strategies in layered details. As English is not my first language, I need listen to your podcast and read the text repeatedly so to fully understand. It’s worthy of the time for some great post like yours. I love sharing so I send the link to my English practice group that it can benefit more. I hope I could be able to give you some feedback later on.

Thank you for helping me get to know better especially the techniques in writing narrative text. I’m an English teacher for 5 years but have little knowledge on writing. I hope you could feature techniques in writing news and feature story. God bless and more power!

Thank you for this! I am very interested in teaching a unit on personal narrative and this was an extremely helpful breakdown. As a current student teacher I am still unsure how to approach breaking down the structures of different genres of writing in a way that is helpful for my students but not too restrictive. The story mapping tools you provided really allowed me to think about this in a new way. Writing is such a powerful way to experience the world and more than anything I want my students to realize its power. Stories are how we make sense of the world and as an English teacher I feel obligated to give my students access to this particular skill.

The power of story is unfathomable. There’s this NGO in India doing some great work in harnessing the power of storytelling and plots to brighten children’s lives and enlighten them with true knowledge. Check out Katha India here: http://bit.ly/KathaIndia

Thank you so much for this. I did not go to college to become a writing professor, but due to restructuring in my department, I indeed am! This is a wonderful guide that I will use when teaching the narrative essay. I wonder if you have a similar guide for other modes such as descriptive, process, argument, etc.?

Hey Melanie, Jenn does have another guide on writing! Check out A Step-by-Step Plan for Teaching Argumentative Writing.

Hi, I am also wondering if there is a similar guide for descriptive writing in particular?

Hey Melanie, unfortunately Jenn doesn’t currently have a guide for descriptive writing. She’s always working on projects though, so she may get around to writing a unit like this in the future. You can always check her Teachers Pay Teachers page for an up-to-date list of materials she has available. Thanks!

I want to write about the new character in my area

That’s great! Let us know if you need any supports during your writing process!

I absolutely adore this unit plan. I teach freshmen English at a low-income high school and wanted to find something to help my students find their voice. It is not often that I borrow material, but I borrowed and adapted all of it in the order that it is presented! It is cohesive, understandable, and fun. Thank you!!

So glad to hear this, Nicole!

Thanks for sharing this post. My students often get confused between personal narratives and short stories. Whenever I ask them to write a short story, they share their own experiences and add a bit of fiction to make it interesting.

Thank you! My students have loved this so far. I do have a question as to where the “Frog” story mentioned in Step 4 is. I could really use it! Thanks again.

This is great to hear, Emily! In Step 4, Jenn mentions that she wrote the “Frog” story for her narrative writing unit. Just scroll down to the bottom of the post and you’ll see a link to the unit.

I also cannot find the link to the short story “Frog”– any chance someone can send it or we can repost it?

This story was written for Jenn’s narrative writing unit. You can find a link to this unit in Step 4 or at the bottom of the article. Hope this helps.

I cannot find the frog story mentioned. Could you please send the link? Thank you

Hi Michelle,

The Frog story was written for Jenn’s narrative writing unit. There’s a link to this unit in Step 4 and at the bottom of the article.

Debbie, thanks for your reply… but there is no link to the story in Step 4 or at the bottom of the page…

Hey Shawn, the frog story is part of Jenn’s narrative writing unit, which is available on her Teachers Pay Teachers site. The link Debbie is referring to at the bottom of this post will take you to her narrative writing unit and you would have to purchase that to gain access to the frog story. I hope this clears things up.

Thank you so much for this resource! I’m a high school English teacher, and am currently teaching creative writing for the first time. I really do value your blog, podcast, and other resources, so I’m excited to use this unit. I’m a cyber school teacher, so clear, organized layout is important; and I spend a lot of time making sure my content is visually accessible for my students to process. Thanks for creating resources that are easy for us teachers to process and use.

Do you have a lesson for Informative writing?

Hey Cari, Jenn has another unit on argumentative writing, but doesn’t have one yet on informative writing. She may develop one in the future, so check back in sometime.

I had the same question. Informational writing is so difficult to have a good strong unit in when you have so many different text structures to meet and need text-dependent writing tasks.

Creating an informational writing unit is still on Jenn’s long list of projects to get to, but in the meantime, if you haven’t already, check out When We All Teach Text Structures, Everyone Wins. It might help you out!

This is a great lesson! It would be helpful to see a finished draft of the frog narrative arc. Students’ greatest challenge is transferring their ideas from the planner to a full draft. To see a full sample of how this arc was transformed into a complete narrative draft would be a powerful learning tool.

Hi Stacey! Jenn goes into more depth with the “Frog” lesson in her narrative writing unit, where you can find a sample of what a completed story arc might look like. Also included is a draft of the narrative. If you're interested in checking out the unit and seeing a preview, just scroll down to the bottom of the post and click on the image. Hope this helps!

Helped me learn for an entrance exam thanks very much

Is the narrative writing lesson you talk about in https://www.cultofpedagogy.com/narrative-writing/ also doable for elementary students, do you think, and if so, for what levels?

Love your work, Sincerely, Zanyar

Hey Zanyar,

It’s possible the unit would work with 4th and 5th graders, but Jenn definitely wouldn’t recommend going any younger. The main reason for this is that some of the mini-lessons in the unit could be challenging for students who are still concrete thinkers. You’d likely need to do some adjusting and scaffolding which could extend the unit beyond the 3 weeks. Having said that, I taught 1st grade and found the steps of the writing process, as described in the post, to be very similar. Of course learning targets/standards were different, but the process itself can be applied to any grade level (modeling writing, using mentor texts to study how stories work, planning the structure of the story, drafting, elaborating, etc.) Hope this helps!

This has made my life so much easier. After teaching in different schools systems, from the American, to British to IB, one needs to identify the anchor standards and concepts, that are common between all these systems, to build well balanced thematic units. Just reading these steps gave me the guidance I needed to satisfy both the conceptual framework the schools ask for and the standards-based practice. Thank you Thank you.

Would this work for teaching a first grader about narrative writing? I am also looking for a great book to use as a model for narrative writing. Veggie Monster is being used by his teacher and he isn’t connecting with this book in the least bit, so it isn’t having a positive impact. My fear is he will associate this with writing and I don’t want a negative association connected to such a beautiful process and experience. Any suggestions would be helpful.

Thank you for any information you can provide!

Although I think the materials in the actual narrative writing unit are really too advanced for a first grader, the general process that’s described in the blog post can still work really well.

I’m sorry your child isn’t connecting with The Night of the Veggie Monster. Try to keep in mind that the main reason this is used as a mentor text is that it models how a small moment story can be told in a big way. It’s filled with all kinds of wonderful text features that impact the meaning of the story: dialogue, description, bold text, speech bubbles, changes in text size, ellipses, zoomed-in images, text placement, text shape, etc. All of these things will become mini-lessons throughout the unit. But there are lots of other wonderful mentor texts that your child might enjoy. My suggestion for an early writer is to look for a small moment text, similar in structure, that zooms in on a problem that a first grader can relate to. In addition to the mentor texts that I found in this article, you might also want to check out Knuffle Bunny, Kitten’s First Full Moon, When Sophie Gets Angry Really Really Angry, and Whistle for Willie. Hope this helps!

I saw this on Pinterest the other day while searching for examples of narrative units/lessons. I clicked on it because I always click on C.o.P stuff 🙂 And I wasn’t disappointed. I was intrigued by the connection of narratives to humanity: even if a student doesn’t identify as a writer, he/she certainly is human, right? I really liked this. THIS clicked with me.

A few days after I read the C.o.P post, I ventured on to YouTube for more ideas to help guide me with my 8th graders’ narrative writing this coming spring. And there was a TEDx video titled, “The Power of Personal Narrative” by J. Christian Jensen. I immediately remembered the line from the article above that associated storytelling with “power” and how it sets humans apart and if introduced and taught as such, it can be “extraordinary.”

I watched the video and, to my surprise, it was FANTASTIC. Together, Jennifer’s post and the TEDx video ignited within me some major motivation and excitement to begin this unit.

Thanks for sharing this with us! So glad that Jenn’s post paired with another text gave you some motivation and excitement. I’ll be sure to pass this on to Jenn!

Thank you very much for this really helpful post! I really love the idea of helping our students understand that storytelling is powerful and then go on to teach them how to harness that power. That is the essence of teaching literature or writing at any level. However, I’m a little worried about telling students that whether a piece of writing is fact or fiction does not matter. It in fact matters a lot precisely because storytelling is powerful. Narratives can shape people’s views and get their emotions involved which would, in turn, motivate them to act on a certain matter, whether for good or for bad. A fictional narrative that is passed as factual could cause a lot of damage in the real world. I believe we should. I can see how helping students focus on writing the story rather than the truth of it all could help refine the needed skills without distractions. Nevertheless, would it not be prudent to teach our students to not just harness the power of storytelling but refrain from misusing it by pushing false narratives as factual? It is true that in reality, memoirs pass as factual while novels do as fictional while the opposite may be true for both cases. I am not too worried about novels passing as fictional. On the other hand, fictional narratives masquerading as factual are disconcerting and part of a phenomenon that needs to be fought against, not enhanced or condoned in education. This is especially true because memoirs are often used by powerful people to write/re-write history. I would really like to hear your opinion on this. Thanks a lot for a great post and a lot of helpful resources!

Thank you so much for this. Jenn and I had a chance to chat and we can see where you’re coming from. Jenn never meant to suggest that a person should pass off a piece of fictional writing as a true story. Good stories can be true, completely fictional, or based on a true story that’s mixed with some fiction. That part doesn’t really matter. However, what does matter is how a student labels their story. We think that could have been stated more clearly in the post, so Jenn decided to add a bit about this at the end of the 3rd paragraph in the section “A Note About Form: Personal Narrative or Short Story?” Thanks again for bringing this to our attention!

You have no idea how much your page has helped me in so many ways. I am currently in my teaching credential program and there are times that I feel lost due to a lack of experience in the classroom. I’m so glad I came across your page! Thank you for sharing!

Thanks so much for letting us know. This means a whole lot!

No, we’re sorry. Jenn actually gets this question fairly often. It’s something she considered doing at one point, but because she has so many other projects she’s working on, she’s just not gotten to it.

I couldn’t find the story

Hi, Duraiya. The “Frog” story is part of Jenn’s narrative writing unit, which is available on her Teachers Pay Teachers site. The link at the bottom of this post will take you to her narrative writing unit, which you can purchase to gain access to the story. I hope this helps!

I am using this step-by-step plan to help me teach personal narrative story writing. I wanted to show the Coca-Cola story, but the link says the video is not available. Do you have a new link or can you tell me the name of the story so I can find it?

Thank you for putting this together.

Hi Corri, sorry about that. The Coca-Cola commercial disappeared, so Jenn just updated the post with links to two videos with good stories. Hope this helps!

Writing an argument - learning intention guide.

- This assessment schedule could be used with the following resources: Persuasive language III, Persuasive speech II, P.E. - is it worth it?, Organ donation, Single Sex Education, School Uniforms, or with teacher-developed assessment tasks.

- This assessment guide could be used for either self- or peer-assessment purposes, or a combination of both.

- After selecting the criteria for the focus of the teaching and learning, teachers can print off a guide without the reflection boxes (which are present on the student's guide).

- The teacher's guide could be enlarged as a chart for sharing, and/or for working up examples in the contexts relevant to students.

- Students should be familiar with how to self- and/or peer-assess before using this guide, and with the features of an argument.

- Ideally, the assessment would be followed up with a teacher conference.

- The 'next time' section of the assessment guide is for students to set their next goals. This section could be glued into the student's work book as a record.

- When explaining to students how to complete the assessment task, teachers could include the following points:

- Use the assessment guide to help you plan and write your argument.

- Write your argument.

- When you have finished, use the guide to assess and reflect on your work.

What Is Learning? Essay about Learning Importance

What Is learning? 👨🎓️ Why is learning important? Find the answers here! 🔤 This essay on learning describes its outcomes and importance in one’s life.

Introduction

Learning is a continuous process that involves the transformation of information and experience into abilities and knowledge. In my view, learning is a two-way process that involves the learner and the educator and leads to knowledge acquisition as well as capability.

This view informs my teaching practice: both the students and the teacher participate in the learning process, which makes it more real and enjoyable and helps the learners understand clearly. Students hold many different concepts of learning, and these views affect teaching and learning in different ways.

What Is Learning? The Key Concepts

One learning concept held by students is the precise presentation of learning material. This means that any material meant for learning should be very clear and put in language that the learners comprehend (Benson & Blackman 2003). The material should also be detailed, with many examples that are relevant to the prior knowledge of the learner.

This means that the learner must have pertinent prior knowledge. The teacher can provide it by explaining the new ideas and words that will be encountered in a certain field or topic, which might take several consecutive lessons. Different examples help the students approach ideas from many perspectives.

The learner is able to identify similarities among the many examples given, which leads to a better understanding of a concept since the ideas are related and linked.

Secondly, new meanings should be incorporated into the students’ prior knowledge, instead of remembering only the definitions or procedures. Therefore, to promote expressive learning, instructional methods that relate new information to the learner’s prior knowledge should be used.

Moreover, significant learning involves the use of evaluation methods that inspire learners to relate their existing knowledge with new ideas. For the students to comprehend complex ideas, they must be combined with the simple ideas they know.

Teaching becomes very easy when a lesson starts with simple concepts that the students are familiar with. The students should start by understanding what they know so that they can use the ideas in comprehending complex concepts. This makes learning smooth and easy for both the learner and the educator (Chermak & Weiss 1999).

Thirdly, acquisition of the basic concepts is essential for the student to understand the threshold concepts. This is because the basic concepts act as a foundation in learning a certain topic or procedure. So, the basic concepts must be comprehended first before proceeding to the incorporation of the threshold concepts.

This gives the student a clear understanding of each stage, owing to the possession of initial knowledge (Felder & Brent 1996). A deeper foundation of the study may also be achieved by clearly grasping the differences between various concepts and by knowing the necessary as well as the unnecessary aspects. Basic concepts are normally taught in the lower classes of each level.

They include defining terms in each discipline. These terms aid in teaching in all the levels because they act as a foundation. The stage of acquiring the basics determines the students’ success in the rest of their studies.

This is because a lack of basics leads to failure: the students cannot understand the rest of the context in that discipline, which depends mostly on the basics. For learning to become effective for the students, the basics must be well understood, as well as their applications.

Learning through models that explain certain procedures or ideas in a discipline is another learning concept held by students. Models are helpful in explaining complex procedures, and they help the students understand better (Benson & Blackman 2003).

For instance, in economics, there are many models that students use to comprehend the essential interrelationships in that discipline. A model known as comparative statics is used by economics students to understand how equilibrium is used in economic reasoning, as well as the forces that restore equilibrium after it has been disturbed.

The students must know the importance of using such models, the main aspect of each model, and its relationship with the visual representation. A model is one of the important devices that a learner must use to acquire knowledge. Models are mainly presented in diagram form using symbols or arrows.

Using models simplifies teaching, especially for slow learners, who get the concept slowly but clearly. It is the easiest and most effective method of learning complex procedures or directions. Most models are in the form of flowcharts.

Learners should get used to learning incomplete ideas so that they can make more complete ideas available to them and enjoy going ahead. This is because, in the process of acquiring the threshold concepts, the prior knowledge acquired previously might be transformed.

So, the students must be ready to admit that, at every stage in the learning process, the understanding they gain is temporary. This problem intensifies when the understanding of an idea acquired currently changes the understanding of an idea that had been taught previously.

This leads to confusion that can make the weak students lose hope. That is why the teacher should always state clear similarities as well as differences between various concepts. On the other hand, the student should be able to compare different concepts and state their similarities as well as differences (Watkins & Regmy 1992).

The student should also be careful when dealing with concepts that seem similar and must always be attentive to get first-hand information from the teacher. Teaching and learning become very hard when learners do not pay attention to what the teacher is explaining. For the serious students, learning becomes enjoyable and they do not get confused.

According to Chermak and Weiss (1999), learners must not just sit down and listen, but they must involve themselves in some other activities such as reading, writing, discussing or solving problems. Basically, they must be very active and concentrate on what they are doing. These techniques are essential because they have a great impact on the learners.

Students always prefer active learning to the traditional lecture methods because they master the content well and because it aids in the development of skills such as writing and reading. So methods that enhance active learning motivate the learners, since they also get more information from their fellow learners through discussions.

Students engage themselves in discussion groups or class presentations to break the monotony of the lecture method of learning. Learning is a two-way process, and so both the teacher and the student must be involved.

Active learning removes boredom in the class and the students get so much involved thus improving understanding. This arouses the mind of the student leading to more concentration. During a lecture, the student should write down some of the important points that can later be expounded on.

Involvement in challenging tasks is very important for learners. The task should not be very difficult; rather, it should be slightly above the learner’s level of mastery. This motivates the learner and instills confidence. It leads to the learner’s success because self-confidence aids in problem solving.

For instance, when a learner tackles a question deemed hard and gets the answer correct, it becomes the best kind of encouragement ever. The learner gets the confidence that he can make it and this motivates him to achieve even more.

This kind of encouragement mostly occurs to the quick learners because the slow learners fail in most cases. This makes the slow learners fear tackling many problems. So, the concept might not apply to all learners, but slow learners who are determined can always seek help in case of such a problem.

Moreover, another concept held by students is repetition, because the most essential factor in learning is efficient time on a task. To study well, a student should consider repetition, that is, looking at the same material over and over again.

For instance, before a teacher comes for the lesson, the student can review notes and then review the same notes after the teacher gets out of class. So, the student reviews the notes many times thus improving the understanding level (Felder & Brent 1996). This simplifies revising for an exam because the student does not need to cram for it.

Reviewing the same material makes teaching very easy since the teacher does not need to go back to the previous material and start explaining again. It becomes very hard for those students who do not review their work at all because they do not understand the teacher well and face a hard time when preparing for examinations.

Basically, learning requires enough time to be effective. It also becomes a very big problem for those who do not sacrifice their time for reviews.

Acquisition of the main points improves the student’s understanding of the material. Not everything that is learnt or taught is of importance. Therefore, the student must be very keen to identify the main points when learning. These points should be written down or underlined because they become useful when reviewing notes before doing an exam. It helps in saving time and leads to success.

For those students who do not pay attention, it becomes very difficult for them to highlight the main points. They read for the sake of it and make the teacher undergo a very hard time during teaching. To overcome this problem, the students must be taught how to study so that learning can be effective.

Cooperative learning is another concept held by the students. It is more detailed than group work because, when used properly, it leads to remarkable results. This is very encouraging in teaching and the learning environment as well.

For learning to be productive, the students should not work with their friends; instead, every group should have at least one top-level student who can assist the weak students. The groups help them achieve academic as well as social abilities through interaction. This learning concept benefits the students more because a fellow student can explain a concept in a better way than the teacher can in class.

Assignments are then given to these groups through a selected group leader (Felder & Brent 1996). Every member must be active in contributing ideas, and respect for each other’s ideas is necessary. It becomes very easy for the teacher to mark such assignments since there are fewer of them than when marking for each individual.

Learning becomes enjoyable because every student is given a chance to express his or her ideas freely and in a constructive manner. Teaching is also easier because the students encounter very many new ideas during the discussions. Some students deem it as time wastage but it is necessary in every discipline.

Every group member should be given a chance to become the group’s facilitator whose work is to distribute and collect assignments. Dormant students are forced to become active because every group member must contribute his or her points. Cooperative learning is a concept that requires proper planning and organization.

Completion of assignments is another student held learning concept. Its main aim is to assist the student in knowing whether the main concepts in a certain topic were understood. This acts as a kind of self evaluation to the student and also assists the teacher to know whether the students understood a certain topic. The assignments must be submitted to the respective teacher for marking.

Those students who are focused follow up with the teacher after the assignments have been marked for clarification purposes. This enhances learning and the student understands better. Many students disagree with this idea because they do not like relating with the teacher (Marton & Beaty 1993). This leads to very poor grades since communication is a very essential factor in learning.

Teaching becomes easier and enjoyable when there is a student-teacher relationship. Assignment corrections are necessary to both the student and the teacher since the student comprehends the right method of solving a certain problem that he or she could not solve before.

Lazy students who do not do corrections make teaching hard for the teacher because they make the other students lag behind. Learning may also become ineffective for them due to low levels of understanding.

Acquisition of facts is still another student held concept that aims at understanding reality. Students capture the essential facts so that they can understand how they suit in another context. Many students fail to obtain the facts because they think that they can get everything taught in class or read from books.

When studying, the student must clearly understand the topic so that he or she can develop a theme. This helps in making short notes by eliminating unnecessary information. So, the facts must always be identified and well understood in order to apply them where necessary. Teaching becomes easier when the facts are well comprehended by the students because it enhances effective learning.

Effective learning occurs when a student possesses strong emotions. A strong memory that lasts for long is linked with the emotional condition of the learner. This means that the learners will always remember well when learning is incorporated with strong emotions. Emotions develop when the students have a positive attitude towards learning (Marton & Beaty 1993).

This is because they will find learning enjoyable and exciting unlike those with a negative attitude who will find learning boring and of no use to them. Emotions affect teaching since a teacher will like to teach those students with a positive attitude towards what he is teaching rather than those with a negative attitude.

The positive attitude leads to effective learning because the students get interested in what they are learning, which eventually leads to success. Learning does not become effective where students portray a negative attitude, since they are not interested, which leads to failure.

Furthermore, learning through hearing is another student held concept. This concept enables them to understand what they hear thus calling for more attention and concentration. They prefer instructions that are given orally and are very keen but they also participate by speaking. Teaching becomes very enjoyable since the students contribute a lot through talking and interviewing.

Learning occurs effectively because the students involve themselves in oral reading as well as listening to recorded information. In this concept, learning is mostly enhanced by debating, presenting reports orally and interviewing people. Those students who do not prefer this concept as a method of learning do not involve themselves in debates or oral discussions but use other learning concepts.

Learners may also use the concept of seeing to understand better. This makes them remember what they saw and most of them prefer using written materials (Van Rossum & Schenk 1984). Unlike the auditory learners who grasp the concept through hearing, visual learners understand better by seeing.

They use their sight to learn and do it quietly. They prefer watching things like videos and learn from what they see. Learning occurs effectively since the memory is usually connected with visual images. Teaching becomes very easy when visual images are incorporated. They include things such as pictures, objects, and graphs.

A teacher can use charts during instruction thus improving the students’ understanding level or present a demonstration for the students to see. Diagrams are also necessary because most students learn through seeing.

Use of visual images makes learning look real, and the student gets the concept better than those who learn through imagination. This concept leads the students to use text that has many pictures, diagrams, graphics, maps and graphs.

In learning, students may also use the tactile concept, whereby they gain knowledge and skills through touching. They gain knowledge mostly through manipulatives. Teaching becomes more effective when students are left to handle equipment for themselves, for instance in a laboratory practical. Students tend to understand better because they are able to follow instructions (Watkins & Regmy 1992).

After applying this concept, the students are able to engage themselves in making perfect drawings, making models and following procedures to make something. Learning may not take place effectively for students who do not like manipulating objects, because manipulation arouses the memory and helps students comprehend the concept in a better way.

Learning through analysis is another concept held by students because they are able to plan their work in an organized manner based on logical ideas only. It requires individual learning, and effective learning occurs when information is given in steps. This requires the teacher to structure the lessons properly, and the goals should be clear.

This method of organizing ideas makes learning effective, thus leading to success and achievement of the objectives. Analysis improves the learners’ understanding of concepts (Watkins & Regmy 1992). They also understand certain procedures used in various topics because they are sequential.

Teaching and learning become very hard for those students who do not know how to analyze their work. Such students learn in a haphazard way, which leads to failure.

If all the learning concepts held by students are incorporated, then remarkable results can be obtained. A lot of information and knowledge can be obtained through learning as long as the learner uses the best concepts for learning. Learners are also different because there are those who understand better by seeing while others understand through listening or touching.

So, it is necessary for each learner to understand the best concept to use in order to improve the understanding level. For the slow learners, extra time should be taken while studying and explanations must be clear to avoid confusion. There are also those who follow written instructions better than those instructions that are given orally. Basically, learners are not the same and so require different techniques.

Reference List

Benson, A., & Blackman, D., 2003. Can research methods ever be interesting? Active Learning in Higher Education, Vol. 4, No. 1, pp. 39-55.

Chermak, S., & Weiss, A., 1999. Activity-based learning of statistics: Using practical applications to improve students’ learning. Journal of Criminal Justice Education, Vol. 10, No. 2, pp. 361-371.

Felder, R., & Brent, R., 1996. Navigating the bumpy road to student-centered instruction. College Teaching, Vol. 44, No. 2, pp. 43-47.

Marton, F., & Beaty, E., 1993. Conceptions of learning. International Journal of Educational Research, Vol. 19, pp. 277-300.

Van Rossum, E., & Schenk, S., 1984. The relationship between learning conception, study strategy and learning outcome. British Journal of Educational Psychology, Vol. 54, No. 1, pp. 73-85.

Watkins, D., & Regmy, M., 1992. How universal are student conceptions of learning? A Nepalese investigation. Psychologia, Vol. 25, No. 2, pp. 101-110.

What Is Learning? FAQ

- Why Is Learning Important? Learning means gaining new knowledge, skills, and values, either in a group or on one’s own. It helps a person to develop, maintain their interest in life, and adapt to changes.

- Why Is Online Learning Good? Online learning has a number of advantages over traditional learning. First, it allows you to collaborate with top experts in your area of interest, no matter where you are located geographically. Secondly, it encourages independence and helps you develop time management skills. Last but not least, it saves time on transport.

- How to Overcome Challenges in Online Learning? The most challenging aspects of distance learning are the lack of face-to-face communication and the lack of feedback. The key to overcoming these challenges is effective communication with teachers and classmates through videoconferencing, email, and chats.

IvyPanda. (2019, May 2). What Is Learning? Essay about Learning Importance. https://ivypanda.com/essays/what-is-learning-essay/

Persuasive Writing Techniques

A short lesson on understanding the techniques of persuasion and getting better at persuasive writing. Children act out persuasion techniques in small groups, then rank them from most powerful to least powerful.

Australian Curriculum Links:

- Understand how authors often innovate on text structures and play with language features to achieve particular aesthetic, humorous and persuasive purposes and effects (ACELA1518)

- Select, navigate and read texts for a range of purposes, applying appropriate text processing strategies and interpreting structural features, for example table of contents, glossary, chapters, headings and subheadings (ACELY1712)

- Plan, draft and publish imaginative, informative and persuasive texts, choosing and experimenting with text structures, language features, images and digital resources appropriate to purpose and audience (ACELY1714)

- Reread and edit students’ own and others’ work using agreed criteria and explaining editing choices (ACELY1715)

- Plan, rehearse and deliver presentations, selecting and sequencing appropriate content and multimodal elements to influence a course of action (ACELY1751)

Lesson Outline:

Timeframe: 45 mins – 1 hour 30 mins

Introduction:

- Set learning intention on board so students are clear on what they are learning and why (Learning how to use persuasive language to persuade people to believe our thoughts, like politicians and lawyers do outside of school)

- Watch a variety of election promises from politicians and discuss their strength in persuading you (children) to believe them.

- Hand out the Persuasive Language Techniques sheet and ask children to get into groups and create 4 examples for 1 of the techniques (e.g. Group 1 has ‘ATTACKS’, so they have to come up with 4 different examples) to be modeled back to the class (role play)

- At the end of the role play, give every child 3 sticky notes and list all the Persuasive Language Techniques on the board. Ask small groups to come up and place their 3 sticky notes next to the techniques that they saw as most powerful.

- Discuss the results when all children have finished, and clarify which techniques are more powerful if some students haven’t recognized this.

Assessment:

- Success criteria (developed by children at the start): I know I will be successful when…

- Anecdotal notes

- Photo of sticky notes (with names on them) showing that they understand which techniques are more powerful than others.

Resources:

- Persuasive Language Techniques (Word Document)

- Sticky notes

10 Ways to Detect AI Writing Without Technology

As more of my students have submitted AI-generated work, I’ve gotten better at recognizing it.

AI-generated papers have become regular but unwelcome guests in the undergraduate college courses I teach. I first noticed an AI paper submitted last summer, and in the months since I’ve come to expect to see several per assignment, at least in 100-level classes.

I’m far from the only teacher dealing with this. Turnitin recently announced that in the year since it debuted its AI detection tool, about 3 percent of papers it reviewed were at least 80 percent AI-generated.

Just as AI has improved and grown more sophisticated over the past 9 months, so have teachers. AI often has a distinct writing style with several tells that have become more and more apparent to me the more frequently I encounter them.

Before we get to these strategies, however, it’s important to remember that suspected AI use isn’t immediate grounds for disciplinary action. These cases should be used as conversation starters with students and even – forgive the cliché – as a teachable moment to explain the problems with using AI-generated work.

To that end, I’ve written previously about how I handled these suspected AI cases, the troubling limitations and discriminatory tendencies of existing AI detectors, and what happens when educators incorrectly accuse students of using AI.

With those caveats firmly in place, here are the signs I look for to detect AI use from my students.

1. How to Detect AI Writing: The Submission is Too Long

When an assignment asks students for one paragraph and a student turns in more than a page, my spidey sense goes off.

Almost every class does have one overachieving student who will do this without AI, but that student usually sends 14 emails the first week and submits every assignment early, and most importantly, while too long, their assignment is often truly well written. A student who suddenly overproduces raises a red flag.

2. The Answer Misses The Mark While Also Being Too Long

Being long in and of itself isn’t enough to identify AI use, but overlong assignments often have additional strange features that make them suspicious.

For instance, the assignment might be four times the required length yet doesn’t include the required citations or cover page. Or it goes on and on about something related to the topic but doesn’t quite get at the specifics of the actual question asked.

3. AI Writing is Emotionless Even When Describing Emotions

If ChatGPT were a musician, it would be Kenny G or Muzak. As it stands now, AI writing is the equivalent of verbal smooth jazz or grey noise. ChatGPT, for instance, has this very peppy, positive vibe that somehow doesn’t convey actual emotion.

One assignment I have asks students to reflect on important memories or favorite hobbies. You immediately sense the hollowness of ChatGPT's response to this kind of prompt. For example, I just told ChatGPT I loved skateboarding as a kid and asked it for an essay describing that. Here’s how ChatGPT started:

As a kid, there was nothing more exhilarating than the feeling of cruising on my skateboard. The rhythmic sound of wheels against pavement, the wind rushing through my hair, and the freedom to explore the world on four wheels – skateboarding was not just a hobby; it was a source of unbridled joy.

You get the point. It’s like an extended elevator jazz sax solo but with words.

4. Cliché Overuse

Part of the reason AI writing is so emotionless is that its cliché use is, well, on steroids.

Take the skateboarding example in the previous entry. Even in the short sample, we see lines such as “the wind rushing through my hair, and the freedom to explore the world on four wheels.” Students, regardless of their writing abilities, always have more original thoughts and ways of seeing the world than that. If a student actually wrote something like that, we’d encourage them to be more authentic and truly descriptive.

Of course, with more prompt adjustments, ChatGPT and other AI tools can do better, but the students using AI for assignments rarely put in this extra time.

5. The Assignment Is Submitted Early

I don’t want to cast aspersions on those true overachievers who get their suitcases packed a week before vacation starts, finish winter holiday shopping in July, and have already started saving for retirement, but an early submission may be the first signal that I’m about to read some robot writing.

For example, several students this semester submitted an assignment the moment it became available. That is unusual, and in all of these cases, their writing also exhibited other stylistic points consistent with AI writing.

Warning: Use this tip with caution as it is also true that many of my best students have submitted assignments early over the years.

6. The Setting Is Out of Time

AI-generated images frequently have little tells that signal that the model that created them doesn’t understand what the world actually looks like: think extra fingers on human hands or buildings that don’t really follow the laws of physics.

When AI is asked to write fiction or describe something from a student’s life, similar mistakes often occur. Recently, a short story assignment in one of my classes resulted in several stories that took place in a nebulous time frame that jumped between modern times and the past with no clear purpose.

If done intentionally this could actually be pretty cool and give the stories a kind of magical realism vibe, but in these instances, it was just wonky and out-of-left-field, and felt kind of alien and strange. Or, you know, like a robot had written it.

7. Excessive Use of Lists and Bullet Points

Here are some reasons that I suspect students are using AI if their papers have many lists or bullet points:

1. ChatGPT and other AI generators frequently present information in list form even though human authors generally know that’s not an effective way to write an essay.

2. Most human writers will not inherently write this way, especially new writers who often struggle with organizing information.

3. While lists can be a good way to organize information, presenting more complex ideas in this manner can be .…

4 … annoying.

5. Do you see what I mean?

6. (Yes, I know, it's ironic that I'm complaining about this here given that this story is also a list.)

8. It’s Mistake-Free

I’ve criticized ChatGPT’s writing here, yet in fairness, it does produce very clean prose that is, on average, more error-free than what is submitted by many of my students. Even experienced writers miss commas, have long and awkward sentences, and make little mistakes – which is why we have editors. ChatGPT’s writing isn’t too “perfect,” but it’s too clean.

9. The Writing Doesn’t Match The Student’s Other Work

Writing instructors know this inherently and have long been on the lookout for changes in voice that could be an indicator that a student is plagiarizing work.

AI writing doesn't really change that. When a student submits new work that is wildly different from previous work, or when their discussion board comments are riddled with errors not found in their formal assignments, it's time to take a closer look.

10. Something Is Just . . . Off

The boundaries between these different AI writing tells blur together and sometimes it's a combination of a few things that gets me to suspect a piece of writing. Other times it’s harder to tell what is off about the writing, and I just get the sense that a human didn’t do the work in front of me.

I’ve learned to trust these gut instincts to a point. When confronted with these more subtle cases, I will often ask a fellow instructor or my department chair to take a quick look (I eliminate identifying student information when necessary). Getting a second opinion helps ensure I’ve not gone down a paranoid “my students are all robots and nothing I read is real” rabbit hole. Once a colleague agrees something is likely up, I’m comfortable going forward with my AI hypothesis based on suspicion alone, in part because, as mentioned previously, I use suspected cases of AI as conversation starters rather than to make accusations.

Again, it is difficult to prove students are using AI, and accusing them of doing so is problematic. Even ChatGPT knows that. When I asked it why it is bad to accuse students of using AI to write papers, the chatbot answered: “Accusing students of using AI without proper evidence or understanding can be problematic for several reasons.”

Then it launched into a list.
