Static Single Assignment (with relevant examples)


Static Single Assignment was presented in 1988 by Barry K. Rosen, Mark N. Wegman, and F. Kenneth Zadeck.

In compiler design, Static Single Assignment (SSA) is a way of structuring the intermediate representation (IR) so that every variable is assigned a value exactly once and every variable is defined before its use. The main benefit of SSA is that it simplifies the properties of variables, which in turn simplifies compiler optimisation algorithms and improves their results. Some algorithms improved by the application of SSA:

- Constant Propagation – Moving calculations on known constants from run time to compile time. E.g. the instruction v = 2*7+13 is treated like v = 27

- Value Range Propagation – Finding the possible range of values a calculation could result in.

- Dead Code Elimination – Removing code that is unreachable or that has no effect on the results.

- Strength Reduction – Replacing computationally expensive calculations by inexpensive ones.

- Register Allocation – Optimising the use of registers for calculations.

Straight-line code can be converted to SSA form by replacing the target variable of each assignment with a new version of that variable and replacing each use of a variable with the version that reaches that point. Versions are created by splitting the original variables in the IR; each one is written as the original name with a subscript, so that every definition gets its own version.
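The renaming described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the input is straight-line code with one `dst = expr` statement per line, and the helper name `to_ssa_straight_line`, the `_N` suffix scheme, and the sample statements are all made up for this sketch.

```python
import re

IDENT = re.compile(r"[A-Za-z_]\w*")

def to_ssa_straight_line(lines):
    """Rename straight-line 'dst = expr' statements into SSA form.

    Hypothetical helper: the first definition of x becomes x_1, the next
    x_2, and so on. Names used before any definition (inputs) are kept.
    """
    version = {}  # variable name -> latest version number
    out = []
    for line in lines:
        dst, expr = (part.strip() for part in line.split("=", 1))

        def current(match):
            name = match.group()
            return f"{name}_{version[name]}" if name in version else name

        # Rewrite the uses first, so 's = x + s' still reads the old s.
        expr = IDENT.sub(current, expr)
        version[dst] = version.get(dst, 0) + 1
        out.append(f"{dst}_{version[dst]} = {expr}")
    return out

ssa = to_ssa_straight_line([
    "x = y - z",
    "s = x + s",
    "x = s + p",
    "s = z * q",
    "s = x * s",
])
for statement in ssa:
    print(statement)
```

Each use refers to the most recent version of its variable, which is exactly the "version reaching that point" in code without branches.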

Example #1:

Convert the following code segment to SSA form:

Here x, y, z, s, p, q are the original variables, and x_2, s_2, s_3, s_4 are versions of x and s.

Example #2:

Here a, b, c, d, e, q, s are the original variables, and a_2, q_2, q_3 are versions of a and q.

Phi function and SSA codes

The three address codes may also contain goto statements, and thus a variable may assume value from two different paths.

Consider the following example:-

Example #3:

When we try to convert the above three address code to SSA form, the output looks like:-

Attempt #3:

We need to be able to decide which value y should take, x_1 or x_2. We thus introduce the notion of phi functions, which resolve the correct value of a variable when two different computation paths meet after a branch.

Hence, the correct SSA codes for the example will be:-

Solution #3:

Thus, whenever three-address code has a branch and control may reach a point along two different paths, we need phi functions for the variables assigned on those paths.


ENOSUCHBLOG

Programming, philosophy, pedaling.

Understanding static single assignment forms

Oct 23, 2020. Tags: llvm, programming.


With thanks to Niki Carroll, winny, and kurufu for their invaluable proofreading and advice.

By popular demand, I’m doing another LLVM post. This time, it’s static single assignment (or SSA) form, a common feature in the intermediate representations of optimizing compilers.

Like the last one, SSA is a topic in compiler and IR design that I mostly understand but could benefit from some self-guided education on. So here we are.

How to represent a program

At the highest level, a compiler’s job is singular: to turn some source language input into some machine language output. Internally, this breaks down into a sequence of clearly delineated [1] tasks:

1. Lexing the source into a sequence of tokens

2. Parsing the token stream into an abstract syntax tree, or AST [2]

3. Validating the AST (e.g., ensuring that all uses of identifiers are consistent with the source language’s scoping and definition rules) [3]

4. Translating the AST into machine code, with all of its complexities (instruction selection, register allocation, frame generation, &c)

In a single-pass compiler, (4) is monolithic: machine code is generated as the compiler walks the AST, with no revisiting of previously generated code. This is extremely fast (in terms of compiler performance) in exchange for a few significant limitations:

Optimization potential: because machine code is generated in a single pass, it can’t be revisited for optimizations. Single-pass compilers tend to generate extremely slow and conservative machine code.

By way of example: the System V ABI for x86-64 (used by Linux and macOS) defines a special 128-byte region beyond the current stack pointer (%rsp), known as the red zone, that can be used by leaf functions whose stack frames fit within it. This, in turn, saves a few stack management instructions in the function prologue and epilogue.

A single-pass compiler will struggle to take advantage of this ABI-supplied optimization: it needs to emit a stack slot for each automatic variable as they’re visited, and cannot revisit its function prologue for erasure if all variables fit within the red zone.

Language limitations: single-pass compilers struggle with common language design decisions, like allowing use of identifiers before their declaration or definition. For example, the following is valid C++:

```cpp
class Rect {
public:
  int area() { return width() * height(); }
  int width() { return 5; }
  int height() { return 5; }
};
```

C and C++ generally require pre-declaration and/or definition for identifiers, but member function bodies may reference the entire class scope. This will frustrate a single-pass compiler, which expects Rect::width and Rect::height to already exist in some symbol lookup table for call generation.

Consequently, (virtually) all modern compilers are multi-pass.

Multi-pass compilers break the translation phase down even more:

- The AST is lowered into an intermediate representation, or IR

- Analyses (or passes) are performed on the IR, refining it according to some optimization profile (code size, performance, &c)

- The IR is either translated to machine code or lowered to another IR, for further target specialization or optimization [4]

So, we want an IR that’s easy to correctly transform and that’s amenable to optimization. Let’s talk about why IRs that have the static single assignment property fill that niche.

At its core, the SSA form of any source program introduces only one new constraint: all variables are assigned (i.e., stored to) exactly once.

By way of example: the following (not actually very helpful) function is not in a valid SSA form with respect to the flags variable:

```c
int helpful_open(char *fname) {
  int flags = O_RDWR;

  if (!access(fname, F_OK)) {
    flags |= O_CREAT;
  }

  int fd = open(fname, flags, 0644);

  return fd;
}
```

Why? Because flags is stored to twice: once for initialization, and (potentially) again inside the conditional body.

As programmers, we could rewrite helpful_open to only ever store once to each automatic variable:

```c
int helpful_open(char *fname) {
  if (!access(fname, F_OK)) {
    int flags = O_RDWR | O_CREAT;
    return open(fname, flags, 0644);
  } else {
    int flags = O_RDWR;
    return open(fname, flags, 0644);
  }
}
```

But this is clumsy and repetitive: we essentially need to duplicate every chain of uses that follow any variable that is stored to more than once. That’s not great for readability, maintainability, or code size.

So, we do what we always do: make the compiler do the hard work for us. Fortunately there exists a transformation from every valid program into an equivalent SSA form, conditioned on two simple rules.

Rule #1: Whenever we see a store to an already-stored variable, we replace it with a brand new “version” of that variable.

Using rule #1 and the example above, we can rewrite flags using _N suffixes to indicate versions:

```c
int helpful_open(char *fname) {
  int flags_0 = O_RDWR;

  // Declared up here to avoid dealing with C scopes.
  int flags_1;
  if (!access(fname, F_OK)) {
    flags_1 = flags_0 | O_CREAT;
  }

  int fd = open(fname, flags_1, 0644);

  return fd;
}
```

But wait a second: we’ve made a mistake!

- open(..., flags_1, ...) is incorrect: it unconditionally assigns O_CREAT, which wasn’t in the original function semantics.

- open(..., flags_0, ...) is also incorrect: it never assigns O_CREAT, and thus is wrong for the same reason.

So, what do we do? We use rule 2!

Rule #2: Whenever we need to choose a variable based on control flow, we use the Phi function (φ) to introduce a new variable based on our choice.

Using our example once more:

```c
int helpful_open(char *fname) {
  int flags_0 = O_RDWR;

  // Declared up here to avoid dealing with C scopes.
  int flags_1;
  if (!access(fname, F_OK)) {
    flags_1 = flags_0 | O_CREAT;
  }

  int flags_2 = φ(flags_0, flags_1);

  int fd = open(fname, flags_2, 0644);

  return fd;
}
```

Our quandary is resolved: open always takes flags_2, where flags_2 is a fresh SSA variable produced by applying φ to flags_0 and flags_1.

Observe, too, that φ is a symbolic function: compilers that use SSA forms internally do not emit real φ functions in generated code [5]. φ exists solely to reconcile rule #1 with the existence of control flow.

As such, it’s a little bit silly to talk about SSA forms with C examples (since C and other high-level languages are what we’re translating from in the first place). Let’s dive into how LLVM’s IR actually represents them.

SSA in LLVM

First of all, let’s see what happens when we run our very first helpful_open through clang with no optimizations:

```llvm
define dso_local i32 @helpful_open(i8* %fname) #0 {
entry:
  %fname.addr = alloca i8*, align 8
  %flags = alloca i32, align 4
  %fd = alloca i32, align 4
  store i8* %fname, i8** %fname.addr, align 8
  store i32 2, i32* %flags, align 4
  %0 = load i8*, i8** %fname.addr, align 8
  %call = call i32 @access(i8* %0, i32 0) #4
  %tobool = icmp ne i32 %call, 0
  br i1 %tobool, label %if.end, label %if.then

if.then:                                          ; preds = %entry
  %1 = load i32, i32* %flags, align 4
  %or = or i32 %1, 64
  store i32 %or, i32* %flags, align 4
  br label %if.end

if.end:                                           ; preds = %if.then, %entry
  %2 = load i8*, i8** %fname.addr, align 8
  %3 = load i32, i32* %flags, align 4
  %call1 = call i32 (i8*, i32, ...) @open(i8* %2, i32 %3, i32 420)
  store i32 %call1, i32* %fd, align 4
  %4 = load i32, i32* %fd, align 4
  ret i32 %4
}
```


So, we call open with %3, which comes from…a load from an i32* named %flags? Where’s the φ?

This is something that consistently trips me up when reading LLVM’s IR: only values, not memory, are in SSA form. Because we’ve compiled with optimizations disabled, %flags is just a stack slot that we can store into as many times as we please, and that’s exactly what LLVM has elected to do above.

As such, LLVM’s SSA-based optimizations aren’t all that useful when passed IR that makes direct use of stack slots. We want to maximize our use of SSA variables, whenever possible, to make future optimization passes as effective as possible.

This is where mem2reg comes in:

This file (optimization pass) promotes memory references to be register references. It promotes alloca instructions which only have loads and stores as uses. An alloca is transformed by using dominator frontiers to place phi nodes, then traversing the function in depth-first order to rewrite loads and stores as appropriate. This is just the standard SSA construction algorithm to construct “pruned” SSA form.

(Parenthetical mine.)

mem2reg gets run at -O1 and higher, so let’s do exactly that:

```llvm
define dso_local i32 @helpful_open(i8* nocapture readonly %fname) local_unnamed_addr #0 {
entry:
  %call = call i32 @access(i8* %fname, i32 0) #4
  %tobool.not = icmp eq i32 %call, 0
  %spec.select = select i1 %tobool.not, i32 66, i32 2
  %call1 = call i32 (i8*, i32, ...) @open(i8* %fname, i32 %spec.select, i32 420) #4, !dbg !22
  ret i32 %call1, !dbg !23
}
```

Foiled again! Our stack slots are gone thanks to mem2reg, but LLVM has actually optimized too far: it figured out that our flags value is wholly dependent on the return value of our access call and erased the conditional entirely.

Instead of a φ node, we got this select:

```llvm
%spec.select = select i1 %tobool.not, i32 66, i32 2
```

which the LLVM Language Reference describes concisely:

The ‘select’ instruction is used to choose one value based on a condition, without IR-level branching.

So we need a better example. Let’s do something that LLVM can’t trivially optimize into a select (or sequence of selects), like adding an else if with a function that we’ve only provided the declaration for:

```c
// Return type assumed here: the IR below calls it as an i64 and
// folds "> 0" into "!= 0", which implies an unsigned 64-bit type.
size_t filesize(char *);

int helpful_open(char *fname) {
  int flags = O_RDWR;

  if (!access(fname, F_OK)) {
    flags |= O_CREAT;
  } else if (filesize(fname) > 0) {
    flags |= O_TRUNC;
  }

  int fd = open(fname, flags, 0644);

  return fd;
}
```

```llvm
define dso_local i32 @helpful_open(i8* %fname) local_unnamed_addr #0 {
entry:
  %call = call i32 @access(i8* %fname, i32 0) #5
  %tobool.not = icmp eq i32 %call, 0
  br i1 %tobool.not, label %if.end4, label %if.else

if.else:                                          ; preds = %entry
  %call1 = call i64 @filesize(i8* %fname) #5
  %cmp.not = icmp eq i64 %call1, 0
  %spec.select = select i1 %cmp.not, i32 2, i32 514
  br label %if.end4

if.end4:                                          ; preds = %if.else, %entry
  %flags.0 = phi i32 [ 66, %entry ], [ %spec.select, %if.else ]
  %call5 = call i32 (i8*, i32, ...) @open(i8* %fname, i32 %flags.0, i32 420) #5
  ret i32 %call5
}
```

That’s more like it! Here’s our magical φ:

```llvm
%flags.0 = phi i32 [ 66, %entry ], [ %spec.select, %if.else ]
```

LLVM’s phi is slightly more complicated than the φ(flags_0, flags_1) that I made up before, but not by much: it takes a list of pairs (two, in this case), with each pair containing a possible value and that value’s originating basic block (which, by construction, is always a predecessor block in the context of the φ node).

The Language Reference backs us up:

The type of the incoming values is specified with the first type field. After this, the ‘phi’ instruction takes a list of pairs as arguments, with one pair for each predecessor basic block of the current block. Only values of first class type may be used as the value arguments to the PHI node. Only labels may be used as the label arguments. There must be no non-phi instructions between the start of a basic block and the PHI instructions: i.e. PHI instructions must be first in a basic block.

Observe, too, that LLVM is still being clever: one of our φ choices is a computed select (%spec.select), so LLVM still managed to partially erase the original control flow.

So that’s cool. But there’s a piece of control flow that we’ve conspicuously ignored.

What about loops?

```c
int do_math(int count, int base) {
  for (int i = 0; i < count; i++) {
    base += base;
  }

  return base;
}
```

```llvm
define dso_local i32 @do_math(i32 %count, i32 %base) local_unnamed_addr #0 {
entry:
  %cmp5 = icmp sgt i32 %count, 0
  br i1 %cmp5, label %for.body, label %for.cond.cleanup

for.cond.cleanup:                                 ; preds = %for.body, %entry
  %base.addr.0.lcssa = phi i32 [ %base, %entry ], [ %add, %for.body ]
  ret i32 %base.addr.0.lcssa

for.body:                                         ; preds = %entry, %for.body
  %i.07 = phi i32 [ %inc, %for.body ], [ 0, %entry ]
  %base.addr.06 = phi i32 [ %add, %for.body ], [ %base, %entry ]
  %add = shl nsw i32 %base.addr.06, 1
  %inc = add nuw nsw i32 %i.07, 1
  %exitcond.not = icmp eq i32 %inc, %count
  br i1 %exitcond.not, label %for.cond.cleanup, label %for.body, !llvm.loop !26
}
```

Not one, not two, but three φs! In order of appearance:

1. Because we supply the loop bounds via count, LLVM has no way to ensure that we actually enter the loop body. Consequently, our very first φ selects between the initial %base and %add. LLVM’s phi syntax helpfully tells us that %base comes from the entry block and %add from the loop, just as we expect. I have no idea why LLVM selected such a hideous name for the resulting value (%base.addr.0.lcssa).

2. Our index variable is initialized once and then updated with each for iteration, so it also needs a φ. Our selections here are %inc (which each iteration of the body computes from %i.07) and the 0 literal (i.e., our initialization value).

3. Finally, the heart of our loop body: we need to get base, where base is either the initial base value (%base) or the value computed as part of the prior loop iteration (%add). One last φ gets us there.

The rest of the IR is bookkeeping: we need separate SSA variables to compute the addition (%add), increment (%inc), and exit check (%exitcond.not) with each loop iteration.

So now we know what an SSA form is, and how LLVM represents them [6]. Why should we care?

As I briefly alluded to early in the post, it comes down to optimization potential: the SSA forms of programs are particularly suited to a number of effective optimizations.

Let’s go through a select few of them.

Dead code elimination

One of the simplest things that an optimizing compiler can do is remove code that cannot possibly be executed. This makes the resulting binary smaller (and usually faster, since more of it can fit in the instruction cache).

“Dead” code falls into several categories [7], but a common one is assignments that cannot affect program behavior, like redundant initialization:

```c
int main(void) {
  int x = 100;

  if (rand() % 2) {
    x = 200;
  } else if (rand() % 2) {
    x = 300;
  } else {
    x = 400;
  }

  return x;
}
```

Without an SSA form, an optimizing compiler would need to check whether any use of x reaches its original definition (x = 100). Tedious. In SSA form, the impossibility of that is obvious:

```c
int main(void) {
  int x_0 = 100;

  // Just ignore the scoping. Computers aren't real life.
  if (rand() % 2) {
    int x_1 = 200;
  } else if (rand() % 2) {
    int x_2 = 300;
  } else {
    int x_3 = 400;
  }

  return φ(x_1, x_2, x_3);
}
```

And sure enough, LLVM eliminates the initial assignment of 100 entirely:

```llvm
define dso_local i32 @main() local_unnamed_addr #0 {
entry:
  %call = call i32 @rand() #3
  %0 = and i32 %call, 1
  %tobool.not = icmp eq i32 %0, 0
  br i1 %tobool.not, label %if.else, label %if.end6

if.else:                                          ; preds = %entry
  %call1 = call i32 @rand() #3
  %1 = and i32 %call1, 1
  %tobool3.not = icmp eq i32 %1, 0
  %. = select i1 %tobool3.not, i32 400, i32 300
  br label %if.end6

if.end6:                                          ; preds = %if.else, %entry
  %x.0 = phi i32 [ 200, %entry ], [ %., %if.else ]
  ret i32 %x.0
}
```
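To make the "obvious in SSA form" claim concrete, here is a minimal Python sketch of dead-definition removal. It is not how LLVM implements the pass; the `(dest, op, args)` tuple encoding, the function name `eliminate_dead_defs`, and the extra `t_0` instruction are assumptions made for illustration, and the sketch assumes that no instruction has side effects.

```python
def eliminate_dead_defs(instrs, live_out):
    """Drop SSA definitions whose results are never used.

    instrs:   list of (dest, op, args) tuples in SSA form (hypothetical encoding).
    live_out: names that must survive, e.g. the returned value.
    Assumes every instruction is free of side effects.
    """
    instrs = list(instrs)
    while True:
        used = set(live_out)
        for _dest, _op, args in instrs:
            used.update(a for a in args if isinstance(a, str))
        kept = [ins for ins in instrs if ins[0] in used]
        if len(kept) == len(instrs):
            return kept
        # Removing one definition may make the definitions it used dead too.
        instrs = kept

program = [
    ("x_0", "const", [100]),
    ("t_0", "add", ["x_0", 1]),   # hypothetical extra instruction, also unused
    ("x_1", "const", [200]),
    ("x_2", "const", [300]),
    ("x_3", "const", [400]),
    ("x_4", "phi", ["x_1", "x_2", "x_3"]),
]
result = eliminate_dead_defs(program, live_out={"x_4"})
print([dest for dest, _op, _args in result])
```

Because every name has exactly one definition, "is this definition used?" reduces to a set-membership test, with no reaching-definitions analysis needed.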

Constant propagation

Compilers can also optimize a program by replacing uses of a constant variable with the constant value itself. Let’s take a look at another blob of C:

```c
int some_math(int x) {
  int y = 7;
  int z = 10;
  int a;

  if (rand() % 2) {
    a = y + z;
  } else if (rand() % 2) {
    a = y + z;
  } else {
    a = y - z;
  }

  return x + a;
}
```

As humans, we can see that y and z are trivially assigned and never modified [8]. For the compiler, however, this is a variant of the reaching definition problem from above: before it can replace y and z with 7 and 10 respectively, it needs to make sure that y and z are never assigned a different value.

Let’s do our SSA reduction:

```c
int some_math(int x) {
  int y_0 = 7;
  int z_0 = 10;
  int a_0;

  if (rand() % 2) {
    int a_1 = y_0 + z_0;
  } else if (rand() % 2) {
    int a_2 = y_0 + z_0;
  } else {
    int a_3 = y_0 - z_0;
  }

  int a_4 = φ(a_1, a_2, a_3);

  return x + a_4;
}
```

This is virtually identical to our original form, but with one critical difference: the compiler can now see that every load of y and z is the original assignment. In other words, they’re all safe to replace!

```c
int some_math(int x) {
  int y = 7;
  int z = 10;
  int a_0;

  if (rand() % 2) {
    int a_1 = 7 + 10;
  } else if (rand() % 2) {
    int a_2 = 7 + 10;
  } else {
    int a_3 = 7 - 10;
  }

  int a_4 = φ(a_1, a_2, a_3);

  return x + a_4;
}
```

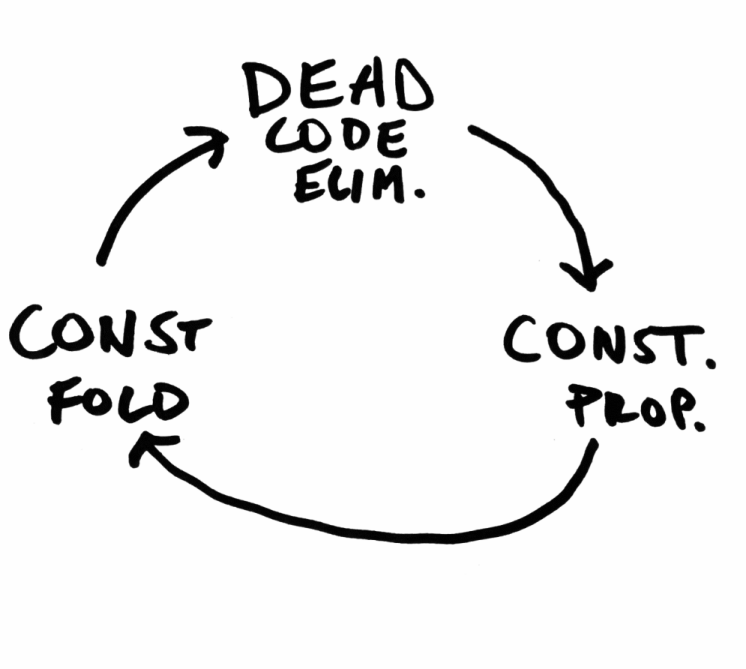

So we’ve gotten rid of a few potential register operations, which is nice. But here’s the really critical part: we’ve set ourselves up for several other optimizations:

Now that we’ve propagated some of our constants, we can do some trivial constant folding: 7 + 10 becomes 17, and so forth.

In SSA form, it’s trivial to observe that only x and a_{1..4} can affect the program’s behavior. So we can apply our dead code elimination from above and delete y and z entirely!

This is the real magic of an optimizing compiler: each individual optimization is simple and largely independent, but together they produce a virtuous cycle that can be repeated until gains diminish.
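The propagate-then-fold step can be sketched in Python as follows. This is a toy under stated assumptions, not LLVM's implementation: instructions use a made-up `(dest, op, args)` encoding, only `add`, `sub`, and `mul` are folded, and the list is assumed to be ordered so that every definition comes before its uses.

```python
import operator

# Hypothetical opcode table for this sketch.
OPS = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}

def propagate_constants(instrs):
    """Propagate and fold constants over SSA instructions.

    Since each name is assigned exactly once, a name found to be constant
    stays constant, so a single dictionary is all the state we need.
    """
    consts = {}
    out = []
    for dest, op, args in instrs:
        # Propagation: replace known-constant names with their values.
        args = [consts.get(a, a) for a in args]
        if op == "const":
            consts[dest] = args[0]
        elif op in OPS and all(isinstance(a, int) for a in args):
            # Folding: every operand is a literal, so compute it now.
            consts[dest] = OPS[op](*args)
            op, args = "const", [consts[dest]]
        out.append((dest, op, args))
    return out

program = [
    ("y_0", "const", [7]),
    ("z_0", "const", [10]),
    ("a_1", "add", ["y_0", "z_0"]),
    ("a_3", "sub", ["y_0", "z_0"]),
    ("r_0", "add", ["x", "a_1"]),   # x is a function parameter, not constant
]
folded = propagate_constants(program)
for ins in folded:
    print(ins)
```

After this pass, `y_0` and `z_0` have no remaining uses, which is what lets a later dead code elimination pass delete them.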

Register allocation

Register allocation (alternatively: register scheduling) is less of an optimization itself, and more of an unavoidable problem in compiler engineering: it’s fun to pretend to have access to an infinite number of addressable variables, but the compiler eventually insists that we boil our operations down to a small, fixed set of CPU registers.

The constraints and complexities of register allocation vary by architecture: x86 (prior to AMD64) is notoriously starved for registers [9] (only 8 full general purpose registers, of which 6 might be usable within a function’s scope [10]), while RISC architectures typically employ larger numbers of registers to compensate for the lack of register-memory operations.

Just as above, reductions to SSA form have both indirect and direct advantages for the register allocator:

Indirectly: Eliminating redundant loads and stores reduces the overall pressure on the register allocator, allowing it to avoid expensive spills (i.e., having to temporarily transfer a live register to main memory to accommodate another instruction).

Directly: Compilers have historically lowered φs into copies before register allocation, meaning that register allocators traditionally haven’t benefited from the SSA form itself [11]. There is, however, (semi-)recent research on direct application of SSA forms to both linear and coloring allocators [12] [13].

A concrete example: modern JavaScript engines use JITs to accelerate program evaluation. These JITs frequently use linear register allocators for their acceptable tradeoff between register selection speed (linear, as the name suggests) and register scheduling quality. Converting out of SSA form is a time-consuming operation of its own, so linear allocation on the SSA representation itself is appealing in JITs and other contexts where compile time is part of execution time.

There are many things about SSA that I didn’t cover in this post: dominance frontiers, tradeoffs between “pruned” and less optimal SSA forms, and feedback mechanisms between the SSA form of a program and the compiler’s decision to cease optimizing, among others. Each of these could be its own blog post, and maybe will be in the future!

[1] In the sense that each task is conceptually isolated and has well-defined inputs and outputs. Individual compilers have some flexibility with respect to whether they combine or further split the tasks.

[2] The distinction between an AST and an intermediate representation is hazy: Rust converts its AST to HIR early in the compilation process, and languages can be designed to have ASTs that are amenable to analyses that would otherwise be best on an IR.

[3] This can be broken up into lexical validation (e.g. use of an undeclared identifier) and semantic validation (e.g. incorrect initialization of a type).

[4] This is what LLVM does: LLVM IR is lowered to MIR (not to be confused with Rust’s MIR), which is subsequently lowered to machine code.

[5] Not because they can’t: the SSA form of a program can be executed by evaluating φ with concrete control flow.

[6] We haven’t talked at all about minimal or pruned SSAs, and I don’t plan on doing so in this post. The TL;DR of them: naïve SSA form generation can lead to lots of unnecessary φ nodes, impeding analyses. LLVM (and GCC, and anything else that uses SSAs probably) will attempt to translate any initial SSA form into one with a minimally viable number of φs. For LLVM, this is tied directly to the rest of mem2reg.

[7] Including removing code that has undefined behavior in it, since “doesn’t run at all” is a valid consequence of invoking UB.

[8] And are also function scoped, meaning that another translation unit can’t address them.

[9] x86 makes up for this by not being a load-store architecture: many instructions can pay the price of a memory round-trip in exchange for saving a register.

[10] Assuming that %esp and %ebp are being used by the compiler to manage the function’s frame.

[11] LLVM, for example, lowers all φs as one of its very first preparations for register allocation. See this 2009 LLVM Developers’ Meeting talk.

[12] Wimmer 2010a: “Linear Scan Register Allocation on SSA Form”

[13] Hack 2005: “Towards Register Allocation for Programs in SSA-form”

Lesson 6: Static Single Assignment

- discussion thread

- static single assignment

- SSA slides from Todd Mowry at CMU: another presentation of the pseudocode for various algorithms herein

- Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency by Boissinot et al., on more sophisticated ways to translate out of SSA form

- tasks due March 8

You have undoubtedly noticed by now that many of the annoying problems in implementing analyses & optimizations stem from variable name conflicts. Wouldn’t it be nice if every assignment in a program used a unique variable name? Of course, people don’t write programs that way, so we’re out of luck. Right?

Wrong! Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location. (Of course, that statement might be executed multiple times, which is why it’s not dynamic single assignment.) In Bril terms, we convert a program like this:

Into a program like this, by renaming all the variables:

Of course, things will get a little more complicated when there is control flow. And because real machines are not SSA, using separate variables (i.e., memory locations and registers) for everything is bound to be inefficient. The idea in SSA is to convert general programs into SSA form, do all our optimization there, and then convert back to a standard mutating form before we generate backend code.

Just renaming assignments willy-nilly will quickly run into problems. Consider this program:

If we start renaming all the occurrences of a, everything goes fine until we try to write that last print a. Which “version” of a should it use?

To match the expressiveness of unrestricted programs, SSA adds a new kind of instruction: a ϕ-node. ϕ-nodes are flow-sensitive copy instructions: they get a value from one of several variables, depending on which incoming CFG edge was most recently taken to get to them.

In Bril, a ϕ-node appears as a phi instruction:

The phi instruction chooses between any number of variables, and it picks between them based on labels. If the program most recently executed a basic block with the given label, then the phi instruction takes its value from the corresponding variable.
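The "most recently executed block" rule is easy to see in a toy interpreter. The sketch below is in Python rather than Bril, and its `(dest, op, args)` instruction encoding, opcode names, and block labels are assumptions made for illustration; it is not the Bril reference interpreter. It assumes every block ends in a terminator and evaluates the ϕ-nodes of a block one after another, which is only safe when they do not read each other's results.

```python
def run(blocks, entry, env):
    """Interpret a tiny SSA program; blocks maps label -> instruction list."""
    env = dict(env)
    prev, label = None, entry
    while True:
        for dest, op, args in blocks[label]:
            if op == "const":
                env[dest] = args[0]
            elif op == "add":
                env[dest] = env[args[0]] + env[args[1]]
            elif op == "phi":
                # args maps predecessor label -> variable; pick by last block.
                env[dest] = env[args[prev]]
            elif op == "br":
                cond, then_label, else_label = args
                prev, label = label, (then_label if env[cond] else else_label)
                break
            elif op == "jmp":
                prev, label = label, args[0]
                break
            elif op == "ret":
                return env[args[0]]
            else:
                raise ValueError(f"unknown op: {op}")

blocks = {
    "entry": [
        ("flags_0", "const", [2]),
        ("creat", "const", [64]),
        (None, "br", ["exists", "then", "end"]),
    ],
    "then": [
        ("flags_1", "add", ["flags_0", "creat"]),
        (None, "jmp", ["end"]),
    ],
    "end": [
        ("flags_2", "phi", {"entry": "flags_0", "then": "flags_1"}),
        (None, "ret", ["flags_2"]),
    ],
}
print(run(blocks, "entry", {"exists": True}))
print(run(blocks, "entry", {"exists": False}))
```

The only state the ϕ-node needs beyond the variable environment is `prev`, the label of the block that control came from.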

You can write the above program in SSA like this:

It can also be useful to see how ϕ-nodes crop up in loops.

(An aside: some recent SSA-form IRs, such as MLIR and Swift’s IR, use an alternative to ϕ-nodes called basic block arguments. Instead of making ϕ-nodes look like weird instructions, these IRs bake the need for ϕ-like conditional copies into the structure of the CFG. Basic blocks have named parameters, and whenever you jump to a block, you must provide arguments for those parameters. With ϕ-nodes, a basic block enumerates all the possible sources for a given variable, one for each in-edge in the CFG; with basic block arguments, the sources are distributed to the “other end” of the CFG edge. Basic block arguments are a nice alternative for “SSA-native” IRs because they avoid messy problems that arise when needing to treat ϕ-nodes differently from every other kind of instruction.)

Bril in SSA

Bril has an SSA extension. It adds support for a phi instruction. Beyond that, SSA form is just a restriction on the normal expressiveness of Bril: if you solemnly promise never to assign statically to the same variable twice, you are writing “SSA Bril.”

The reference interpreter has built-in support for phi, so you can execute your SSA-form Bril programs without fuss.

The SSA Philosophy

In addition to a language form, SSA is also a philosophy! It can fundamentally change the way you think about programs. In the SSA philosophy:

- definitions == variables

- instructions == values

- arguments == data flow graph edges

In LLVM, for example, instructions do not refer to argument variables by name; an argument is a pointer to the defining instruction.

Converting to SSA

To convert to SSA, we want to insert ϕ-nodes whenever there are distinct paths containing distinct definitions of a variable. We don’t need ϕ-nodes in places that are dominated by a definition of the variable. So what’s a way to know when control reachable from a definition is not dominated by that definition? The dominance frontier!
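As a reminder of what the dominance frontier is, here is a small Python sketch that computes it straight from the definition: block B is in the frontier of A when A dominates a predecessor of B but does not strictly dominate B. The function names and the successor-map CFG encoding are assumptions for this sketch, it favours clarity over speed, and it assumes every block is reachable from the entry.

```python
def dominators(succs, entry):
    """Map each block to the set of blocks that dominate it.

    succs: {label: [successor labels]}, with every block present as a key.
    Assumes every non-entry block is reachable (has a predecessor).
    """
    preds = {n: set() for n in succs}
    for n, targets in succs.items():
        for t in targets:
            preds[t].add(n)
    dom = {n: set(succs) for n in succs}
    dom[entry] = {entry}
    changed = True
    while changed:
        changed = False
        for n in succs:
            if n == entry:
                continue
            new = {n} | set.intersection(*(dom[p] for p in preds[n]))
            if new != dom[n]:
                dom[n], changed = new, True
    return dom

def dominance_frontier(succs, entry):
    dom = dominators(succs, entry)
    frontier = {n: set() for n in succs}
    for a in succs:
        for p in succs:
            if a not in dom[p]:
                continue              # a must dominate the predecessor p
            for b in succs[p]:
                if a == b or a not in dom[b]:   # a does not strictly dominate b
                    frontier[a].add(b)
    return frontier

# A loop-shaped CFG: entry may skip the body; the body may repeat or exit.
loop_cfg = {"entry": ["body", "exit"], "body": ["body", "exit"], "exit": []}
loop_df = dominance_frontier(loop_cfg, "entry")
print(loop_df)
```

For the loop, the frontier of `body` contains both `body` itself and `exit`, which are exactly the places where a value assigned in the loop has to be merged with a value from elsewhere.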

We do it in two steps. First, insert ϕ-nodes:
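A minimal Python sketch of the ϕ-insertion step, using the usual worklist formulation, is below. It takes the dominance frontier as an input rather than computing it, and the names `place_phis`, `defs`, and `frontier` as well as the example data are assumptions for illustration. It produces "minimal" rather than "pruned" SSA, so it may place ϕ-nodes for variables that are no longer live.

```python
def place_phis(defs, frontier):
    """Decide which blocks need a phi for which variables.

    defs:     {variable: set of blocks that assign it}
    frontier: {block: set of blocks in its dominance frontier}
    Returns   {block: set of variables needing a phi at the top of it}.
    """
    phis = {block: set() for block in frontier}
    for var, def_blocks in defs.items():
        work = list(def_blocks)
        seen = set(def_blocks)
        while work:
            block = work.pop()
            for join in frontier[block]:
                if var in phis[join]:
                    continue
                phis[join].add(var)
                # The new phi is itself a definition of var, so its block's
                # frontier has to be processed as well.
                if join not in seen:
                    seen.add(join)
                    work.append(join)
    return phis

# Loop-shaped example: both variables are set in entry and again in body.
frontier = {"entry": set(), "body": {"body", "exit"}, "exit": set()}
defs = {"i": {"entry", "body"}, "base": {"entry", "body"}}
phis = place_phis(defs, frontier)
print(phis)
```

In this example `i` gets a ϕ-node at `exit` even if nothing there reads it; pruning that away requires liveness information, which this sketch deliberately leaves out.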

Then, rename variables:
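The renaming step is commonly written as a walk over the dominator tree with one stack of names per variable. The Python sketch below follows that shape under several assumptions: instructions are `(dest, op, args)` tuples, the dominator tree and ϕ placement are supplied as inputs, and all function and variable names are hypothetical. Where a variable has no definition on some incoming path, the sketch simply leaves that ϕ argument out.

```python
from collections import defaultdict

def rename(blocks, succs, dom_children, phi_vars, entry):
    """Rename variables into SSA form.

    blocks:       {label: [(dest, op, args)]} in non-SSA form
    succs:        {label: [successor labels]}
    dom_children: {label: [children in the dominator tree]}
    phi_vars:     {label: set of variables needing a phi there}
    Returns (renamed blocks, {label: {var: {"dest": name, "args": {pred: name}}}}).
    """
    stacks = defaultdict(list)    # variable -> stack of visible SSA names
    counters = defaultdict(int)
    phis = {b: {v: {"dest": None, "args": {}} for v in sorted(vs)}
            for b, vs in phi_vars.items()}
    renamed = {}

    def fresh(var):
        name = f"{var}_{counters[var]}"
        counters[var] += 1
        stacks[var].append(name)
        return name

    def visit(label):
        pushed = []
        for var, phi in phis[label].items():
            phi["dest"] = fresh(var)
            pushed.append(var)
        body = []
        for dest, op, args in blocks[label]:
            # Uses see the newest name on the stack; unknown names are kept.
            args = [stacks[a][-1] if isinstance(a, str) and stacks[a] else a
                    for a in args]
            if dest is not None:
                pushed.append(dest)
                dest = fresh(dest)
            body.append((dest, op, args))
        renamed[label] = body
        for succ in succs[label]:
            for var, phi in phis[succ].items():
                if stacks[var]:   # otherwise var is undefined along this path
                    phi["args"][label] = stacks[var][-1]
        for child in dom_children[label]:
            visit(child)
        for var in pushed:        # names defined here go out of scope
            stacks[var].pop()

    visit(entry)
    return renamed, phis

blocks = {
    "entry": [("flags", "const", [2])],
    "then": [("flags", "or", ["flags", 64])],
    "end": [("fd", "open", ["flags"])],
}
succs = {"entry": ["then", "end"], "then": ["end"], "end": []}
dom_children = {"entry": ["then", "end"], "then": [], "end": []}
renamed, phis = rename(blocks, succs, dom_children,
                       {"entry": set(), "then": set(), "end": {"flags"}}, "entry")
print(renamed)
print(phis["end"])
```

Popping the stacks on the way back up the dominator tree is what keeps a name from leaking into blocks that its definition does not dominate.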

Converting from SSA

Eventually, we need to convert out of SSA form to generate efficient code for real machines that don’t have phi-nodes and do have finite space for variable storage.

The basic algorithm is pretty straightforward. If you have a ϕ-node:

Then there must be assignments to x and y (recursively) preceding this statement in the CFG. The paths from x to the phi-containing block and from y to the same block must “converge” at that block. So insert code into the phi-containing block’s immediate predecessors along each of those two paths: one that does v = id x and one that does v = id y. Then you can delete the phi instruction.
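The basic algorithm can be sketched in Python as below. The `(dest, op, args)` encoding, the set of terminator opcodes, and the name `remove_phis` are assumptions for this sketch. It is the naive version: each copy goes at the end of the predecessor, just before its terminator, with none of the refinements that more careful out-of-SSA translations apply.

```python
TERMINATORS = {"jmp", "br", "ret"}   # hypothetical opcode names

def remove_phis(blocks):
    """Replace each phi with 'id' copies in the predecessor blocks.

    blocks: {label: [(dest, op, args)]}; a phi has args {pred_label: var}.
    Returns a new block map without phi instructions.
    """
    out = {label: [ins for ins in instrs if ins[1] != "phi"]
           for label, instrs in blocks.items()}
    for instrs in blocks.values():
        for dest, op, args in instrs:
            if op != "phi":
                continue
            for pred, var in args.items():
                body = out[pred]
                # Insert before the terminator so the copy actually runs.
                ends_in_jump = bool(body) and body[-1][1] in TERMINATORS
                at = len(body) - 1 if ends_in_jump else len(body)
                body.insert(at, (dest, "id", [var]))
    return out

blocks = {
    "entry": [
        ("flags_0", "const", [2]),
        (None, "br", ["exists", "then", "end"]),
    ],
    "then": [
        ("flags_1", "or", ["flags_0", 64]),
        (None, "jmp", ["end"]),
    ],
    "end": [
        ("flags_2", "phi", {"entry": "flags_0", "then": "flags_1"}),
        (None, "ret", ["flags_2"]),
    ],
}
lowered = remove_phis(blocks)
for label, instrs in lowered.items():
    print(label, instrs)
```

Note that the result is no longer in SSA form: `flags_2` is now assigned in two different blocks, which is the point of the conversion.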

This basic approach can introduce some redundant copying. (Take a look at the code it generates after you implement it!) Non-SSA copy propagation optimization can work well as a post-processing step. For a more extensive take on how to translate out of SSA efficiently, see “Revisiting Out-of-SSA Translation for Correctness, Code Quality, and Efficiency” by Boissinot et al.

- One thing to watch out for: a tricky part of the translation from the pseudocode to the real world is dealing with variables that are undefined along some paths.

- Previous 6120 adventurers have found that it can be surprisingly difficult to get this right. Leave yourself plenty of time, and test thoroughly.

- You will want to make sure the output of your “to SSA” pass is actually in SSA form. There’s a really simple is_ssa.py script that can check that for you.

- You’ll also want to make sure that programs do the same thing when converted to SSA form and back again. Fortunately, brili supports the phi instruction, so you can interpret your SSA-form programs if you want to check the midpoint of that round trip.

- For bonus “points,” implement global value numbering for SSA-form Bril code.
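The SSA-form check mentioned in the list above boils down to "no destination name appears twice." The Python sketch below shows that idea on a made-up `(dest, op, args)` encoding; it is a hypothetical stand-in, not the course's is_ssa.py script, and it does not read Bril JSON.

```python
def is_ssa(blocks):
    """Return True if no variable is statically assigned more than once.

    blocks: {label: [(dest, op, args)]}; dest is None for instructions
    that produce no value (hypothetical encoding for this sketch).
    """
    assigned = set()
    for instrs in blocks.values():
        for dest, _op, _args in instrs:
            if dest is None:
                continue
            if dest in assigned:
                return False
            assigned.add(dest)
    return True

not_ssa = {
    "entry": [("a", "const", [1]), ("a", "const", [2]), (None, "print", ["a"])],
}
in_ssa = {
    "entry": [("a_0", "const", [1]), ("a_1", "const", [2]), (None, "print", ["a_1"])],
}
print(is_ssa(not_ssa), is_ssa(in_ssa))
```

A check like this only validates the static single assignment property; it says nothing about whether the converted program still computes the same result, which is why the round-trip test in the list above is still needed.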

What is the Static Single Assignment Form (SSA) and when to use it?

When coding, we are used to reassigning variables repeatedly. Just take for (int i = 0; i < 100; i++) { } as an example, where the value of i changes a hundred times after its initialization. But what happens when we allow variables to be assigned only once? And why should we even do it?

By applying static single assignment form, or SSA for short, each variable is assigned exactly once. This concept is used, for instance, in the intermediate representations of compilers. To achieve SSA, variables are versioned, usually by adding an index to the variable’s name. For example, let’s translate the following lines of code into SSA:

The variables a_0, a_1 and b_0 are each assigned a value only once, whereas the original a is set twice, resulting in two versions of that variable name in SSA.

Why is SSA important?

In the example above, it is easy for the human eye to see that the first assignment a = 1 is not necessary since this value of the variable is never used. For a computer, however, it is not, as it would need to perform further analysis to spot this. But when SSA is applied to the code, even a computer can immediately recognize that a_0 is not used at all.
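To make the versioning concrete, here is a minimal Python sketch for straight-line code only (no branches, so no merge points are needed). The three-statement program used in the usage note is an assumption chosen to match the description above; the function names and the `live_out` parameter are hypothetical.

```python
def to_ssa(stmts):
    """Version the variables of straight-line code.

    stmts: list of (target, operands); operands are variable names or constants.
    """
    version = {}  # variable name -> latest version number
    out = []
    for target, operands in stmts:
        # Uses refer to the most recent version of each variable.
        renamed = [f"{o}_{version[o]}" if o in version else o for o in operands]
        # Every assignment creates a fresh version of its target.
        version[target] = version.get(target, -1) + 1
        out.append((f"{target}_{version[target]}", renamed))
    return out


def unused_definitions(ssa, live_out=()):
    """Return SSA names that are defined but never read and not a program result."""
    used = {o for _, operands in ssa for o in operands}
    return [t for t, _ in ssa if t not in used and t not in live_out]
```

For the assumed program `a = 1; a = 2; b = a`, `to_ssa` yields `a_0 = 1`, `a_1 = 2`, `b_0 = a_1`, and a single pass over the names reports `a_0` as unused once `b_0` is declared as the result.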

In this manner, SSA enables different kinds of optimization in compilers. It aids tasks such as eliminating dead code (i.e. code that has no effect on the outcome) or determining when two operations are equivalent in order to replace expensive computations with cheaper, equivalent ones.

What does SSA have to do with Symflower’s core technology?

Similarly to a compiler, Symflower’s symbolic execution needs to traverse the given source code, or rather an intermediate representation of it, and translate it into something it can work with. By symbolically executing all parts of a program, Symflower can find all relevant paths through the code and, at the same time, the conditions under which those paths are reached. To assemble these constraints, we translate the code into what you might have already guessed: SSA. This way, a constraint solver can efficiently produce values that satisfy the collected requirements.

The results computed by the symbolic execution are program inputs that trigger certain behavior. Read the first blog post of this series on Symflower’s Core Technology to learn more about symbolic execution.

The example we investigated in the first blog post was the following function:

We applied symbolic execution by hand to the problem of finding a division by zero in the last statement of the function. Let’s see what the collected constraints look like in SSA.

Please note that the variables whose values we want the solver to compute are not given a value; they are only defined, so that the assignment can still be done by the solver. Because of this, the SSA variables in Symflower’s symbolic execution have exactly one value only after we query the results from the solver. Until that point, they have at most one. In these pictures, we represent the definition of variables without assignments with the prefix “var”. Therefore, the first assignments of the variables x and y and their corresponding constraints look as follows:

Please check out the blog post on the topic to find out how the paths and their constraints are collected. The result in SSA is the following:

Along each path, every SSA variable is assigned at most once. In the solvable case, each variable has exactly one value as expected.



19 - Static Single-Assignment Form

Published online by Cambridge University Press: 05 June 2012

dom-i-nate: to exert the supreme determining or guiding influence on

Many dataflow analyses need to find the use-sites of each defined variable or the definition-sites of each variable used in an expression. The def-use chain is a data structure that makes this efficient: for each statement in the flow graph, the compiler can keep a list of pointers to all the use sites of variables defined there, and a list of pointers to all definition sites of the variables used there. In this way the compiler can hop quickly from use to definition to use to definition.

An improvement on the idea of def-use chains is static single-assignment form, or SSA form, an intermediate representation in which each variable has only one definition in the program text. The one (static) definition-site may be in a loop that is executed many (dynamic) times, thus the name static single-assignment form instead of single-assignment form (in which variables are never redefined at all).

The SSA form is useful for several reasons:

Dataflow analysis and optimization algorithms can be made simpler when each variable has only one definition.

If a variable has N uses and M definitions (which occupy about N + M instructions in a program), it takes space (and time) proportional to N · M to represent def-use chains – a quadratic blowup (see Exercise 19.8). For almost all realistic programs, the size of the SSA form is linear in the size of the original program (but see Exercise 19.9).
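The arithmetic behind the quadratic blowup can be illustrated with a small Python sketch. It assumes the worst case for one variable, where all M definitions reach a single merge point and every one of the N uses comes after it; the function names and the `def0`/`use0` labels are hypothetical.

```python
def def_use_chain_edges(num_defs, num_uses):
    """Classic def-use chains: every definition may reach every use."""
    return [(f"def{d}", f"use{u}") for d in range(num_defs) for u in range(num_uses)]


def ssa_edges(num_defs, num_uses):
    """SSA form: each definition feeds one phi argument, each use reads the phi result."""
    phi_args = [(f"def{d}", "phi") for d in range(num_defs)]
    uses = [("phi", f"use{u}") for u in range(num_uses)]
    return phi_args + uses
```

With 4 definitions and 5 uses, the first representation stores 4 · 5 = 20 links while the second stores 4 + 5 = 9, which is the difference between N · M and N + M.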


- Static Single-Assignment Form

- Andrew W. Appel , Princeton University, New Jersey

- With Maia Ginsburg

- Book: Modern Compiler Implementation in C

- Online publication: 05 June 2012

- Chapter DOI: https://doi.org/10.1017/CBO9781139174930.020


13.3 Static Single Assignment

Most of the tree optimizers rely on the data flow information provided by the Static Single Assignment (SSA) form. We implement the SSA form as described in R. Cytron, J. Ferrante, B. Rosen, M. Wegman, and K. Zadeck. Efficiently Computing Static Single Assignment Form and the Control Dependence Graph. ACM Transactions on Programming Languages and Systems, 13(4):451-490, October 1991.

The SSA form is based on the premise that program variables are assigned in exactly one location in the program. Multiple assignments to the same variable create new versions of that variable. Naturally, actual programs are seldom in SSA form initially because variables tend to be assigned multiple times. The compiler modifies the program representation so that every time a variable is assigned in the code, a new version of the variable is created. Different versions of the same variable are distinguished by subscripting the variable name with its version number. Variables used in the right-hand side of expressions are renamed so that their version number matches that of the most recent assignment.

We represent variable versions using SSA_NAME nodes. The renaming process in tree-ssa.cc wraps every real and virtual operand with an SSA_NAME node which contains the version number and the statement that created the SSA_NAME. Only definitions and virtual definitions may create new SSA_NAME nodes.

Sometimes, flow of control makes it impossible to determine the most recent version of a variable. In these cases, the compiler inserts an artificial definition for that variable called a PHI function or PHI node. This new definition merges all the incoming versions of the variable to create a new name for it. For instance,

Since it is not possible to determine which of the three branches will be taken at runtime, we don’t know which of a_1, a_2 or a_3 to use at the return statement. So, the SSA renamer creates a new version a_4 which is assigned the result of “merging” a_1, a_2 and a_3. Hence, PHI nodes mean “one of these operands. I don’t know which”.
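The merge described above can be modeled with a short Python sketch. It is not GCC code: the block names `bb2`, `bb3`, `bb4` and the helper names are hypothetical, and the printed notation simply mirrors the prose rather than any particular dump format.

```python
def make_phi(var, incoming, next_version):
    """Build a PHI for `var` at a merge point.

    incoming: dict mapping a predecessor block to the version of `var` that
    reaches the merge point along that edge.
    """
    args = [(f"{var}_{version}", pred) for pred, version in incoming.items()]
    return {"result": f"{var}_{next_version}", "args": args}


def format_phi(phi):
    """Render the PHI in a readable one-line form."""
    names = ", ".join(name for name, _ in phi["args"])
    return f"{phi['result']} = PHI <{names}>"
```

Calling `make_phi("a", {"bb2": 1, "bb3": 2, "bb4": 3}, 4)` gives a node with one argument per incoming edge, and `format_phi` renders it as `a_4 = PHI <a_1, a_2, a_3>`.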

The following functions can be used to examine PHI nodes:

gimple_phi_result (phi): Returns the SSA_NAME created by PHI node phi (i.e., phi’s LHS).

gimple_phi_num_args (phi): Returns the number of arguments in phi. This number is exactly the number of incoming edges to the basic block holding phi.

gimple_phi_arg (phi, i): Returns the i-th argument of phi.

gimple_phi_arg_edge (phi, i): Returns the incoming edge for the i-th argument of phi.

gimple_phi_arg_def (phi, i): Returns the SSA_NAME for the i-th argument of phi.

- Preserving the SSA form

- Examining SSA_NAME nodes

- Walking the dominator tree

13.3.1 Preserving the SSA form

Some optimization passes make changes to the function that invalidate the SSA property. This can happen when a pass has added new symbols or changed the program so that variables that were previously aliased aren’t anymore. Whenever something like this happens, the affected symbols must be renamed into SSA form again. Transformations that emit new code or replicate existing statements will also need to update the SSA form.

Since GCC implements two different SSA forms for register and virtual variables, keeping the SSA form up to date depends on whether you are updating register or virtual names. In both cases, the general idea behind incremental SSA updates is similar: when new SSA names are created, they typically are meant to replace other existing names in the program.

For instance, given the following code:

Suppose that we insert new names x_10 and x_11 (lines 4 and 8).

We want to replace all the uses of x_1 with the new definitions of x_10 and x_11. Note that the only uses that should be replaced are those at lines 5, 9 and 11. Also, the use of x_7 at line 9 should not be replaced (this is why we cannot just mark symbol x for renaming).

Additionally, we may need to insert a PHI node at line 11 because that is a merge point for x_10 and x_11 . So the use of x_1 at line 11 will be replaced with the new PHI node. The insertion of PHI nodes is optional. They are not strictly necessary to preserve the SSA form, and depending on what the caller inserted, they may not even be useful for the optimizers.

Updating the SSA form is a two-step process. First, the pass has to identify which names need to be updated and/or which symbols need to be renamed into SSA form for the first time. When new names are introduced to replace existing names in the program, the mapping between the old and the new names is registered by calling register_new_name_mapping (note that if your pass creates new code by duplicating basic blocks, the call to tree_duplicate_bb will set up the necessary mappings automatically).

After the replacement mappings have been registered and new symbols marked for renaming, a call to update_ssa makes the registered changes. This can be done with an explicit call or by creating TODO flags in the tree_opt_pass structure for your pass. There are several TODO flags that control the behavior of update_ssa:

- TODO_update_ssa. Update the SSA form inserting PHI nodes for newly exposed symbols and virtual names marked for updating. When updating real names, only insert PHI nodes for a real name O_j in blocks reached by all the new and old definitions for O_j. If the iterated dominance frontier for O_j is not pruned, we may end up inserting PHI nodes in blocks that have one or more edges with no incoming definition for O_j. This would lead to uninitialized warnings for O_j’s symbol.

- TODO_update_ssa_no_phi. Update the SSA form without inserting any new PHI nodes at all. This is used by passes that have either inserted all the PHI nodes themselves or passes that need only to patch use-def and def-def chains for virtuals (e.g., DCE).

- TODO_update_ssa_full_phi. Insert PHI nodes everywhere they are needed. No pruning of the IDF is done.

WARNING: If you need to use this flag, chances are that your pass may be doing something wrong. Inserting PHI nodes for an old name where not all edges carry a new replacement may lead to silent codegen errors or spurious uninitialized warnings.

- TODO_update_ssa_only_virtuals. Passes that update the SSA form on their own may want to delegate the updating of virtual names to the generic updater. Since FUD chains are easier to maintain, this simplifies the work they need to do. NOTE: If this flag is used, any OLD->NEW mappings for real names are explicitly destroyed and only the symbols marked for renaming are processed.

13.3.2 Examining SSA_NAME nodes

The following macros can be used to examine SSA_NAME nodes:

SSA_NAME_DEF_STMT (var): Returns the statement s that creates the SSA_NAME var. If s is an empty statement (i.e., IS_EMPTY_STMT (s) returns true), it means that the first reference to this variable is a USE or a VUSE.

SSA_NAME_VERSION (var): Returns the version number of the SSA_NAME object var.

13.3.3 Walking the dominator tree

This function walks the dominator tree for the current CFG, calling a set of callback functions defined in struct dom_walk_data in domwalk.h. The callback functions you need to define give you hooks to execute custom code at various points during traversal:

1. Once to initialize any local data needed while processing bb and its children. This local data is pushed into an internal stack which is automatically pushed and popped as the walker traverses the dominator tree.

2. Once before traversing all the statements in the bb.

3. Once for every statement inside bb.

4. Once after traversing all the statements and before recursing into bb’s dominator children.

5. It then recurses into all the dominator children of bb.

6. After recursing into all the dominator children of bb it can, optionally, traverse every statement in bb again (i.e., repeating steps 2 and 3).

7. Once after walking the statements in bb and bb’s dominator children. At this stage, the block local data stack is popped.
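The order of the hooks is easier to see in a small model. The following Python sketch is not the GCC walker: `RecordingHooks`, `walk_dom_tree`, and the `dom_children` mapping are hypothetical names, and the optional second pass over the statements is left out.

```python
class RecordingHooks:
    """Hypothetical callback bundle that only records the order of events."""

    def __init__(self, statements):
        self.statements = statements  # block name -> list of statements
        self.events = []

    def init_local_data(self, bb):
        return {"block": bb}

    def before_statements(self, bb, data):
        self.events.append(("before_statements", bb))

    def visit_statement(self, bb, stmt, data):
        self.events.append(("statement", bb, stmt))

    def after_statements(self, bb, data):
        self.events.append(("after_statements", bb))

    def after_children(self, bb, data):
        self.events.append(("after_children", bb))


def walk_dom_tree(dom_children, bb, hooks, stack=None):
    """Walk the dominator tree rooted at bb, firing the hooks in order."""
    stack = [] if stack is None else stack
    stack.append(hooks.init_local_data(bb))   # push block-local data
    data = stack[-1]
    hooks.before_statements(bb, data)
    for stmt in hooks.statements.get(bb, []):
        hooks.visit_statement(bb, stmt, data)
    hooks.after_statements(bb, data)
    for child in dom_children.get(bb, []):    # recurse into dominator children
        walk_dom_tree(dom_children, child, hooks, stack)
    hooks.after_children(bb, data)
    stack.pop()                               # pop block-local data
    return hooks.events
```

Because the local data is pushed on entry and popped after the children are done, anything recorded for a block stays visible while its dominated blocks are processed, which is what renaming-style passes tend to rely on.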


Single-Static Assignment Form and PHI

We’ll take a look at the same very simple max function, as in the previous section.

Translated to LLVM IR:

We can see that the function allocates space on the stack with alloca, where the bigger value is stored. In one branch %a is stored, while in the other branch %b is stored to the stack-allocated memory. However, we want to avoid memory load/store operations and use registers instead whenever possible. So we would like to write something like this:

This is not valid LLVM IR, because it violates the static single assignment (SSA) form of the LLVM IR. SSA form requires that every variable is assigned exactly once. SSA form enables and simplifies a vast number of compiler optimizations, and is the de facto standard for intermediate representations in compilers of imperative programming languages.

Now how would one implement the above code in proper SSA-form LLVM IR? The answer is the magic phi instruction. The phi instruction is named after the φ function used in the theory of SSA. This function magically chooses the right value, depending on the control flow. In LLVM you have to manually specify the name of each value and the previous basic block it arrives from.

Here we instruct the phi instruction to choose %a if the previous basic block was %btrue. If the previous basic block was %bfalse, then %b will be used. The value is then assigned to a new variable %retval. Here you can see the full code listing:
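The selection rule can be mimicked with a tiny Python interpreter for the max function. This is only a model of the semantics described above, not LLVM code: the block names come from the text, while the `entry` label, the `a > b` comparison, and the `run_max` helper are assumptions.

```python
def run_max(a, b):
    """Execute a four-block model of max, tracking the previously executed block."""
    regs = {"a": a, "b": b}
    prev, block = None, "entry"
    while True:
        if block == "entry":
            # Conditional branch on the comparison (assumed to be a > b).
            prev, block = block, ("btrue" if regs["a"] > regs["b"] else "bfalse")
        elif block in ("btrue", "bfalse"):
            # Both branches jump straight to the merge block.
            prev, block = block, "end"
        else:
            # Models: %retval = phi i32 [ %a, %btrue ], [ %b, %bfalse ]
            incoming = {"btrue": "a", "bfalse": "b"}
            regs["retval"] = regs[incoming[prev]]
            return regs["retval"]
```

The only state the phi needs is `prev`, the block control came from, which is why no stack slot is required at the IR level.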

PHI in the Back End

Let’s look at how the @max function now maps to actual machine code, that is, what kind of assembly code the compiler back end generates. In this case we’ll look at the code generated for 64-bit x86, compiled with different optimization levels. We’ll start with a non-optimizing back end (llc -O0 -filetype=asm). We will get something like this assembly:

The parameters %a and %b are passed in %edi and %esi respectively. We can see that the compiler back end generated code that uses the stack to store the bigger value. So the code generated by the compiler back end is not what we had in mind when we wrote the LLVM IR. The reason for this is that the compiler back end needs to implement the phi instruction with real machine instructions. Usually that is done by assigning to one register or storing to one common stack memory location; without optimization, the back end will typically use the stack. However, if we use a little more optimization in the back end (i.e., llc -O1), we can get a more optimized version:

Here the phi function is implemented by using the %edi register. In one branch %edi already contains the desired value, so nothing happens. In the other branch %esi is copied to %edi. At the %end basic block, %edi contains the desired value from both branches. This is more like what we had in mind. We can see that optimization is something that needs to be applied through the whole compilation pipeline.


Static vs. dynamic IP addresses: Why you need to know the difference

An IP address is a way for every device on a network to be seen. Without IP addresses, it would be impossible for those devices to be located.

Think of your computer's IP address like your house's street address. Without a street address, it would be challenging (if not impossible) for others to find you. Unlike a computer's IP address, however, the only time your home address changes is when you move. On the other hand, you can easily change your devices' IP addresses (depending on the device type).

There are two main types of IP addresses -- static and dynamic. I'll explain them both -- and why you would choose one over the other.

Static IP addresses

Simply put, a static IP address does not change automatically. Once you set a static IP address, it remains until you manually change it. (A static IP address is the one most analogous to your home address.)


Static IP addresses are generally assigned for machines where the IP address needs to stay the same. For example, I have a network share on my desktop computer (running Pop!_OS Linux). If I used a dynamic IP address on that machine, the IP address could -- and eventually would -- change on me.

If I were on a different machine within my home network, and I went to save a file to that share, I'd be prevented from doing so because the IP address would no longer be the same. I'd have to go to my desktop, locate the IP address (such as with the command ip a), and then reconnect the other machine to the share.

Had that desktop machine been configured with a static IP address, there would be no need to worry about that IP address changing.

There's an inherent problem with this. Let's say you assign the IP address 192.168.1.100 to your desktop, and it works great. What if, at some point, your router assigns that same address to another machine (because the router doesn't know you've already used that address)? Should that happen, you'd wind up with IP address conflicts -- and problems could occur.

Say you're on your laptop and need to mount the network share. If your router has assigned that same IP address to another machine, your laptop might not know which machine to use and would fail to mount the share.

Here's how to avoid that problem: If you need to assign static IP addresses to machines on your home network, configure your router to assign dynamic IP addresses only within a specific range. For instance, you might set the dynamic range between 192.168.1.1 and 192.168.1.99. That way, you can use IP addresses 192.168.1.100 and up for static usage.
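As a quick sanity check before you hand out a static address, the rule above can be expressed with Python's standard `ipaddress` module. This is a minimal sketch: the function names are hypothetical, and the /24 network and the pool boundaries are assumptions taken from the example range in the text, so adjust them to match your router.

```python
import ipaddress


def in_dhcp_pool(addr, pool_start="192.168.1.1", pool_end="192.168.1.99"):
    """True if addr falls inside the router's dynamic (DHCP) range."""
    a = ipaddress.ip_address(addr)
    return ipaddress.ip_address(pool_start) <= a <= ipaddress.ip_address(pool_end)


def safe_static(addr, network="192.168.1.0/24"):
    """True if addr is a usable host address on the network and outside the pool."""
    a = ipaddress.ip_address(addr)
    net = ipaddress.ip_network(network)
    if a not in net or a in (net.network_address, net.broadcast_address):
        return False
    return not in_dhcp_pool(addr)
```

With these defaults, `safe_static("192.168.1.100")` is accepted, while `safe_static("192.168.1.50")` is rejected because the router could lease that address to another device.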


How you assign a static IP address will vary, depending on your operating system. Generally speaking, you go to your network settings tool, locate the connection to be configured (such as wired or Wi-Fi), open the options for that device, and configure the following details:

- Gateway (usually the address of your router or modem)

- DNS (third-party services, such as Cloudflare's 1.1.1.1 and 1.0.0.1)

Dynamic IP addresses

Dynamic IP addresses are assigned to the devices on your network by your router and DHCP (Dynamic Host Configuration Protocol). They are called dynamic because they can change. The change is defined by what's called a lease. The period of the lease varies, depending on your router. Lease periods can be anywhere from one week to several months.

Here's how this works:

- A machine requests a new lease for an IP address.

- The address is assigned by the router.

- Halfway through the DHCP lease period, the machine attempts to renew the lease (so it can keep the same IP address).

- If the renewal fails, the machine gets a new IP address.
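The lease cycle listed above can be laid out as a simple timeline. The Python sketch below follows the article's simplified description only; real DHCP clients retry and rebind before giving up, and the function name and event strings are hypothetical.

```python
def lease_events(lease_seconds, renew_succeeds):
    """Return one lease cycle as a list of (time_in_seconds, event) tuples."""
    half = lease_seconds / 2
    events = [
        (0, "machine requests a lease"),
        (0, "router assigns an address"),
        (half, "machine attempts to renew"),
    ]
    if renew_succeeds:
        events.append((half, "lease renewed, same address kept"))
    else:
        events.append((lease_seconds, "lease expired, new address requested"))
    return events
```

For a one-day lease (86,400 seconds), the renewal attempt lands at the 43,200-second mark, which is why a machine that stays online usually keeps its address.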

Many routers allow you to configure the lease periods, but most users should stick to the default settings.


For the most part, dynamic IP addresses are easier to use because they ensure you won't have to worry about IP address conflicts. Most devices default to dynamic IP address assignment, so you don't have to configure anything (beyond the possible selection of the network you want to use).

As noted earlier, the only time you'd want to opt for a static IP address is if you have a machine on your network that serves a specific purpose and a change in IP address could disrupt that purpose. Even then, the machine most often will successfully renew its DHCP lease, so there shouldn't be any problems. However, if you do encounter a problem, consider going the static route. Just make sure you can configure your router's dynamic IP address range to avoid IP conflicts.

That's the gist of static and dynamic IP addresses. Chances are high that you'll never have to deal with any of this, but on the occasion that you do, you now understand the difference.


What Is a Static Website? The Absolute Beginner’s Guide

Trying to decide between a static website vs a dynamic website for your next project? Or are you not even sure what a static website is in the first place?

Either way, this article is here to help. It has everything that you need to know about static websites, including what they are, how they work, how they’re different from dynamic websites, and some of the pros and cons of static websites vs dynamic websites.

By the end, you should have a good idea of which approach might be the best fit for your project, as well as how you can get started.

What Is a Static Website?

A static website is a website that serves pages using a fixed number of pre-built files composed of HTML, CSS, and JavaScript.

A static website has no backend server-side processing and no database. Any “dynamic” functionality associated with the static site is performed on the client side, which means the code is executed in visitors’ browsers rather than on the server.

In non-technical terms, this means that your hosting delivers the website files to visitors’ browsers exactly as those files appear on the server.

What’s more, every single visitor gets the same static file delivered to their browsers, which means largely having the exact same experiences and seeing the exact same content.

What Is a Dynamic Website?

A dynamic website is a website that’s controlled on the server side and relies on some type of scripting language (e.g. PHP). Every time someone visits the site, the server processes its code and/or queries the database to generate the finished page.

This allows the server to display different content for each visit. For example, someone who’s logged in to the site might see one version of the page, while someone who’s not logged in might see completely different content when they visit the page.
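In code, the difference comes down to building the page at request time. Here is a minimal Python sketch of that idea; the `render_page` function and its messages are hypothetical and stand in for whatever the server-side language and database would actually do.

```python
from html import escape


def render_page(user=None):
    """Build the HTML for one request; the output depends on who is visiting."""
    if user is not None:
        body = f"Welcome back, {escape(user)}. Here is the full article."
    else:
        body = "Please log in to read the full article."
    return f"<html><body><p>{body}</p></body></html>"
```

A static site has no equivalent of this function on the server: the file that was built ahead of time is sent unchanged to every visitor.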

It also means that any changes you make in your site management dashboard are visible right away because the content management system automatically starts delivering the page with the latest content/changes.

How Do Static Websites Work?

We’ll go over how to make a static website in more depth later on, but the basic approach that most static sites use is as follows:

- Choose how to build your site – while you could just manually create your static HTML, CSS, and JavaScript files for very simple websites, many modern static websites use some type of Static Site Generator (SSG) tool or headless CMS.

- Set up your site – build your site using your chosen tool. Unlike WordPress, you won’t control your site from a live production server.

- Deploy your site – this means that you move your site’s static files onto live hosting so that users can access them.
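To show what the build step boils down to, here is a deliberately tiny static site generator in Python. It is a sketch under stated assumptions, not how any particular SSG works: the page template, the `pages` dictionary layout, and the `build_site` name are all hypothetical.

```python
from html import escape
from pathlib import Path
from string import Template

# Assumed page layout; a real generator would load templates from files.
PAGE = Template(
    "<!doctype html>\n"
    "<html><head><title>$title</title></head>\n"
    "<body><h1>$title</h1>\n<p>$body</p></body></html>\n"
)


def build_site(pages, out_dir):
    """Render every page once, ahead of time, into plain .html files."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for slug, page in pages.items():
        path = out / f"{slug}.html"
        html = PAGE.substitute(title=escape(page["title"]), body=escape(page["body"]))
        path.write_text(html, encoding="utf-8")
        written.append(path)
    return written
```

Deploying then just means copying the output directory to your host; nothing in it needs to run on the server.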

Examples of Static Websites and Tools

When looking at a website, there’s no hard and fast rule to tell whether it’s static or dynamic. Instead, it’s more helpful to look at examples of tools that help you build and manage static websites.

Here are some of the most popular tools for creating static websites:

- Eleventy (11ty)

All of these tools let you create static websites, but they go about it in very different ways. For example, Publii gives you a code-free desktop interface, while some other tools require you to use the command line.

Examples of Dynamic Websites and Tools

To help you compare and contrast with the static website examples above, let’s also take a look at some dynamic website examples.

The most popular example of dynamic websites is WordPress software, which powers over 43% of all websites on the internet.

While you can use WordPress for static websites by installing a static site generator plugin, WordPress functions as a dynamic website builder by default.

Here are some other well-known examples of dynamic websites that use similar approaches:

- Shopify (along with pretty much every other eCommerce platform)

Static vs Dynamic Websites: Pros and Cons

Now that you have a solid understanding of what a static website is and how it compares to a dynamic website, let’s run through some of the pros and cons of static vs dynamic websites.

Static Website Pros

- Easier to optimize for performance – because static websites have fewer “moving parts”, so to speak, they’re a lot easier to optimize for performance. Static websites are generally very lean and quick to load, especially if you build them well.

- Requires fewer server resources – because static websites don’t require any server-side processing, the server needs to do a lot less work for each visit. This improves performance and helps your website perform better at scale. You can even deploy your static website straight to a CDN and skip using a web server altogether (which means, in part, that your site has no static IP address).

- It’s very cheap to host static websites – for simple hobby projects/portfolios, you could host them on our Static Site Hosting for free or use other free hosting services such as GitHub Pages or Cloudflare Pages.

- Easier to secure – because static sites don’t rely on server-side processing or databases, there are fewer attack surfaces for malicious actors. It is still possible for a static website to be hacked – but it’s a lot less likely to happen.

- Very easy to launch simple websites – you can launch static websites very quickly because you don’t need to worry about setting up technical details such as databases.

Static Website Cons

- Can be more complex to apply content/design updates – with a static site, you need to redeploy your website every time you make a change or apply updates – even for something very small like changing an item in your navigation menu. This can add some extra complexity, especially if you’re regularly changing your website. However, when you deploy from a Git repository through a static site hosting service like Kinsta, you can turn on automatic deployment so that any change to the repository triggers a new deployment.

- Can be more technical to add features – with dynamic website tools like WordPress, you can install a new plugin when you want to add functionality to your site. With static sites, it’s usually, but not always, more complex.

- Content management functionality usually isn’t as strong – static website tools generally aren’t as strong at managing content, which can be cumbersome if you have a large website with thousands of pieces of content and lots of taxonomies for organization. Some static site generators are doing a lot to reduce this issue, though.

- Reliant on third-party services for even basic functionality like web forms – for example, most static websites use form endpoint APIs such as FormBold or Getform. Or, you could embed third-party form services such as Google Forms (or one of these Google Forms alternatives). With a dynamic content management system like WordPress, you could just install a form plugin and store everything in your site’s database.

Dynamic Website Pros

- Can create personalized visitor experiences – you’re able to dynamically adjust the content of a page based on the person who’s viewing it. For example, you could create a membership site where paying members are able to see the full content of a page while anonymous visitors are only able to see a small preview.

- Easy to update your site (content and design) – it’s very easy to apply content and design updates on a dynamic site. As soon as you apply the update in your site management dashboard, you should instantly see that change reflected on your live site. There’s no need to redeploy your entire site like you might need to do with a static site.

- Easier to add new features via plugins/apps – for example, you can just install new plugins to add features if you’re using WordPress as your dynamic website content management system.

- Stronger content management features at scale – dynamic website tools are generally better for managing lots of content. For example, if you’re building a directory of thousands of local businesses, a dynamic website tool is usually a better option.

Dynamic Website Cons

- Can be more expensive to host – all things equal, a dynamic website is usually more expensive to host because it requires more server resources for server-side processing.

- Harder to secure – dynamic websites have a larger attack surface than static websites, which makes them a little more complex to secure. For example, you need to worry about SQL injection attacks, which can’t affect static sites because static sites don’t have a database.

- Requires more effort for performance optimization – optimizing a dynamic website is more complex because you need to worry about both frontend and backend performance. For example, you might need to spend time optimizing database queries, which isn’t an issue for static sites.

- Slightly more complex setup process – for example, if you want to make a WordPress website, you need to set up your database and make sure your site has the necessary technologies (e.g. a recent version of PHP, MySQL/MariaDB, and so on). However, managed WordPress hosting like Kinsta can greatly simplify this by letting you launch WordPress sites using a simple interface.
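To make the SQL injection risk from the list above concrete, here’s a minimal sketch using Python’s built-in `sqlite3` module. The `users` table and the two helper functions are hypothetical and only exist for illustration; the point is the difference between pasting input into the query text and passing it as a parameter.

```python
import sqlite3

# In-memory database with a hypothetical users table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
conn.executemany("INSERT INTO users VALUES (?, ?)", [("alice", 1), ("bob", 0)])


def find_user_unsafe(name):
    # Vulnerable: user input is pasted directly into the SQL text.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Parameterized: the input is passed as data, never parsed as SQL.
    return conn.execute(
        "SELECT name FROM users WHERE name = ?", (name,)
    ).fetchall()


malicious = "nobody' OR '1'='1"
unsafe_rows = find_user_unsafe(malicious)  # WHERE clause is always true
safe_rows = find_user_safe(malicious)      # no user has this literal name
```

With the unsafe version, the crafted input matches every row in the table. With the parameterized version, it matches nothing. A static site that serves only prebuilt files has no query like this to attack in the first place.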

How To Build a Static Website

If you think the advantages of building a static website might make it a good fit for your next project, here’s a quick guide on how to create a static website.

We’re intentionally keeping this guide high level so that you can adapt it to your own use case.

1. Choose a Static Site Generator or CMS

To start, choose a static site generator or a content management system that allows you to deploy a static site.

If you have the technical knowledge, you can always create a static site from scratch using your own HTML, CSS, and JavaScript, along with your favorite HTML editor software. However, this can quickly become unwieldy as your site grows, and you may not have the technical knowledge to do everything from scratch.

A static site generator gives you a simpler way to build your static site. It also makes it easier to update your site in the future.

Rather than needing to edit every single HTML file when you make a change (such as adding a new item to your navigation menu), the static site generator can help you deploy new versions of all your site’s files that reflect the changes.
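To illustrate the idea, here’s a toy sketch in Python of what a static site generator does at its core. The template, the `build_site` function, and the page data are all made up for this example; real generators such as Jekyll or Hugo use their own template languages and file layouts.

```python
from string import Template

# Hypothetical page template shared by every page on the site.
PAGE_TEMPLATE = Template(
    "<html><body>\n"
    "<nav>$nav</nav>\n"
    "<main><h1>$title</h1><p>$body</p></main>\n"
    "</body></html>\n"
)


def render_nav(nav_items):
    """Build the shared navigation HTML from (label, url) pairs."""
    return " | ".join(f'<a href="{url}">{label}</a>' for label, url in nav_items)


def build_site(pages, nav_items):
    """Return {filename: html} for every page, all sharing the same nav."""
    nav_html = render_nav(nav_items)
    return {
        filename: PAGE_TEMPLATE.substitute(
            nav=nav_html, title=page["title"], body=page["body"]
        )
        for filename, page in pages.items()
    }


pages = {
    "index.html": {"title": "Home", "body": "Welcome."},
    "about.html": {"title": "About", "body": "Who we are."},
}
nav = [("Home", "/index.html"), ("About", "/about.html")]

site_v1 = build_site(pages, nav)
# Adding one navigation item and rebuilding updates every generated file.
site_v2 = build_site(pages, nav + [("Contact", "/contact.html")])
```

The navigation menu is defined once, so a single change followed by a rebuild produces fresh copies of every page without any manual editing.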

Here are some popular static site generators that you can consider:

- Jekyll – one of the most popular open-source static website generators. Can work for a variety of sites and supports blogging. We have a whole post on how to create a static site with Jekyll.

- Hugo – another popular open-source generator that can work for all different types of sites. We also have a post on how to create a static site with Hugo.

- WordPress + a static site generator plugin – you can use WordPress to build your site but then deploy it as a static HTML site using your preferred static site generator plugin.

For more options, check out our post with the top static site generators.

2. Build Your Website

Once you’ve chosen your preferred tool, use that tool to build your website.

How that works depends on which tool you choose, so there’s no single guide that applies to all static site generators.

If you come from a WordPress background, using WordPress itself as a static site generator offers one of the simplest ways to get your feet wet and create your first static site.

Here’s an example of what it might look like to build a static website with WordPress:

- Create a local WordPress site using DevKinsta. This is not the site that your visitors actually interact with – it’s just where you manage your content and website design.

- Set up your site using your favorite design tool. You could use the native WordPress block editor, or you could use your favorite page builder plugin such as Elementor or Divi.

- Install a static site generator plugin. This is what you use to deploy your static website in the next step. Popular options include Simply Static and WP2Static, though there are also newer alternatives such as Staatic.

Another option is to pair WordPress with Gatsby using GraphQL.

3. Deploy Your Website

Once you’ve built your website, you need to deploy it to your hosting service. Essentially, this means you need a way to get the static HTML files from your computer to the internet.

There are a couple of different routes you can take here.

One approach is to utilize a Static Site Hosting service. With this hosting solution, you can effortlessly set up automatic and continuous deployment through your preferred Git provider. Kinsta offers an exceptional Static Site Hosting service that builds and serves your deployed site within seconds.

For example, pushing new files to GitHub might automatically trigger a process like this:

- When you push a change to GitHub, it automatically notifies your hosting service (for example, Kinsta).

- Your server pulls the latest files from GitHub and runs your static site’s build command.

- Your server moves the files into the live site environment.
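The three steps above can be simulated locally with a short Python sketch. This is not how Kinsta or GitHub implement their pipelines; the `build` and `deploy` functions and the `.txt`-to-HTML "build command" are stand-ins invented for this example. It only shows the general pattern of building into a staging directory and then swapping the result into the live directory.

```python
import shutil
import tempfile
from pathlib import Path


def build(source_dir: Path, output_dir: Path) -> None:
    """Stand-in for a real build command: wrap each .txt file in minimal HTML."""
    output_dir.mkdir(parents=True, exist_ok=True)
    for src in source_dir.glob("*.txt"):
        html = f"<html><body><p>{src.read_text()}</p></body></html>"
        (output_dir / f"{src.stem}.html").write_text(html)


def deploy(source_dir: Path, live_dir: Path) -> None:
    """Build into a staging directory, then replace the live directory."""
    staging = Path(tempfile.mkdtemp(prefix="staging-"))
    build(source_dir, staging)
    if live_dir.exists():
        shutil.rmtree(live_dir)
    shutil.move(str(staging), str(live_dir))


# Simulate two pushes: each one triggers a rebuild and a swap of the live files.
workspace = Path(tempfile.mkdtemp())
repo, live = workspace / "repo", workspace / "live"
repo.mkdir()
(repo / "index.txt").write_text("Hello")
deploy(repo, live)
first = (live / "index.html").read_text()

(repo / "index.txt").write_text("Hello again")
deploy(repo, live)
second = (live / "index.html").read_text()
```

Building into a separate directory first means a failed build never leaves the live site half-updated. A production setup would typically make the final swap atomic as well, which this sketch does not attempt.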

For another Git-based option, you can also use GitHub Pages for static websites.

As an alternative approach, you can deploy directly to a CDN rather than using any type of web server. This approach looks something like this:

- You upload your site’s files to some type of online storage environment. Many tools can do this automatically or via the command line.

- You set up a CDN to pull from that storage.

- When you publish new files to your storage, the CDN automatically starts pulling in those new files.

If your tool doesn’t offer any special features to simplify deploying your site, you need to:

- Generate your site’s static HTML files.

- Manually upload those files to your static website hosting service.

Should You Use a Static Website As Your Next Project?

Whether or not a static website is a good fit for your next project depends on the purpose of your website and your own knowledge level.

As such, there’s no single one-size-fits-all answer here.

Instead, let’s go through some situations when it might or might not make sense to use a static website.

When a Static Website Might Make Sense

A static website works best for websites where all visitors see the same content and the content doesn’t change very often – especially for smaller websites with not a lot of content.

Here are some types of websites that can work very well with the static approach:

- Portfolio websites

- Basic business brochure websites

- Resume websites

- Simple blogs that don’t publish that often

- Documentation content/knowledge bases

- Landing pages

These types of websites are able to achieve all of the benefits of the static approach but with very few tradeoffs.

For example, you’re unlikely to need to personalize a basic business brochure website, so you’re not “losing” any functionality by using the static approach.

Similarly, these types of sites don’t change very often, so you won’t need to worry about constantly redeploying your static pages.

When a Dynamic Website Might Make Sense

To start, dynamic websites are generally the best choice for situations where you need the ability to personalize the site experience, such as ecommerce stores, membership websites, online courses, and so on.

Basically, if you want users to be able to see different content on a page, you typically want to use a dynamic website.

While there are ways to build the aforementioned types of websites using a static website, the tradeoffs that you have to make usually aren’t worth it, which is why a dynamic website might be a better choice.

Dynamic websites can also often make more sense for content-heavy sites, especially sites with lots of taxonomies and other methods of organization.

Similarly, if you’re publishing new content very frequently, that might be a reason to go with a dynamic website.

For example, a personal blog where you only publish one new post every two weeks might be fine as a static website, but a monetization-focused blog that’s publishing five new posts every day is probably better as a dynamic website.

To recap, a static website is a website that serves fixed HTML pages and does all of its processing on the client side. When you create a static site, every single user receives the same static HTML file/content when they visit a page.

This static website approach has been growing in popularity, thanks in large part to static website generator tools such as Hugo, Jekyll, Gatsby, and others.

However, building a static website has both pros and cons, so it won’t be the right approach for all websites.

If you want to get started with your own static website, you can choose a static site generator and launch your website for free with Kinsta’s Static Site Hosting within a few seconds.

As an alternative to Static Site Hosting, you can opt for deploying your static site with Kinsta’s Application Hosting, which provides greater hosting flexibility and access to more robust features, such as scalability, customized deployment using a Dockerfile, and comprehensive analytics covering real-time and historical data.



Static single-assignment form. In compiler design, static single assignment form (often abbreviated as SSA form or simply SSA) is a type of intermediate representation (IR) where each variable is assigned exactly once. SSA is used in most high-quality optimizing compilers for imperative languages, including LLVM, the GNU Compiler Collection ...

• Static Single Assignment (SSA) • CFGs but with immutable variables • Plus a slight "hack" to make graphs work out • Now widely used (e.g., LLVM) • Intra-procedural representation only • An SSA representation for whole program is possible (i.e., each global variable and memory location has static single

SSA form. Static single-assignment form arranges for every value computed by a program to have a unique assignment (aka "definition"). A procedure is in SSA form if every variable has (statically) exactly one definition. SSA form simplifies several important optimizations, including various forms of redundancy elimination. Example.

By popular demand, I'm doing another LLVM post. This time, it's single static assignment (or SSA) form, a common feature in the intermediate representations of optimizing compilers. Like the last one, SSA is a topic in compiler and IR design that I mostly understand but could benefit from some self-guided education on.

Many compilers convert programs into static single assignment (SSA) form, which does exactly what it says: it ensures that, globally, every variable has exactly one static assignment location. (Of course, that statement might be executed multiple times, which is why it's not dynamic single assignment.) In Bril terms, we convert a program like ...

SSA. Static single assignment is an IR where every variable is assigned a value at most once in the program text. Easy for a basic block: assign to a fresh variable at each stmt; each use uses the most recently defined var. (Similar to Value Numbering.) Straight-line SSA. . + y.

Static single assignment is an IR where every variable is assigned a value at most once in the program text. Easy for a basic block (reminiscent of Value Numbering): Visit each instruction in program order: LHS: assign to a fresh version of the variable. RHS: use the most recent version of each variable. = x + y.
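The basic-block procedure described above can be sketched in a few lines of Python. The instruction format used here, a list of `(dest, op, args)` tuples, and the `name_N` versioning scheme are assumptions made for this illustration, not the representation of any particular compiler. The sketch also covers only straight-line code, so it needs no Φ functions.

```python
def to_ssa(block):
    """Rename a straight-line block into SSA form.

    `block` is a list of (dest, op, args) tuples, where args are variable
    names (str) or integer constants. Returns a new list with versioned names.
    """
    version = {}  # variable name -> latest version number

    def use(arg):
        # Constants pass through unchanged.
        if not isinstance(arg, str):
            return arg
        # A variable not yet assigned in this block is a block input: version 0.
        return f"{arg}_{version.get(arg, 0)}"

    ssa = []
    for dest, op, args in block:
        new_args = tuple(use(a) for a in args)    # RHS: most recent versions
        version[dest] = version.get(dest, 0) + 1  # LHS: fresh version
        ssa.append((f"{dest}_{version[dest]}", op, new_args))
    return ssa


block = [
    ("x", "add", ("a", "b")),  # x = a + b
    ("x", "mul", ("x", 2)),    # x = x * 2
    ("y", "add", ("x", "y")),  # y = x + y
]
result = to_ssa(block)
```

Note that the right-hand side is renamed before the destination gets its new version, so `x = x * 2` reads the old `x_1` and defines `x_2`.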

Computing Static Single Assignment (SSA) Form. Overview: • What is SSA? • Advantages of SSA over use-def chains • "Flavors" of SSA • Dominance frontiers revisited • Inserting φ-nodes • Renaming the variables • Translating out of SSA form. R. Cytron, J. Ferrante, B. K. Rosen, M. N. Wegman, and F. K. Zadeck, "Efficiently Computing Static

Static Single Assignment • Induction variables (standard vs. SSA) • Loop Invariant Code Motion with SSA. CS 380C Lecture 7. Cytron et al. Dominance Frontier Algorithm: let $\mathrm{SUCC}(S) = \bigcup_{s \in S} \mathrm{SUCC}(s)$ and $\mathrm{DOM!}^{-1}(v) = \mathrm{DOM}^{-1}(v) - v$, then

• Static Single Assignment form: type of intermediate representation ◦ Each variable is assigned statically (in code) exactly once ◦ Each definition is assigned a unique name • Properties: ◦ Makes def-use chains explicit ◦ Definitions dominate uses (key property) ◦ This makes certain optimizations simpler or more efficient

Static Single Assignment Form (and dominators, post-dominators, dominance frontiers…) CS252r Spring 2011 ... • If node X contains assignment to a, put Φ function for a in dominance frontier of X • Adding Φ fn may require introducing additional Φ fn • Step 2: Rename variables so only one definition ...