Research Methods in Library and Information Science

Submitted: 28 October 2016 Reviewed: 23 March 2017 Published: 28 June 2017

DOI: 10.5772/intechopen.68749

From the Edited Volume

Qualitative versus Quantitative Research

Edited by Sonyel Oflazoglu

Library and information science (LIS) is a very broad discipline, which uses a wide range of constantly evolving research strategies and techniques. The aim of this chapter is to provide an updated view of research issues in library and information science. A stratified random sample of 440 articles published in five prominent journals was analyzed and classified to identify (i) research approach, (ii) research methodology, and (iii) method of data analysis. For each variable, a coding scheme was developed, and the articles were coded accordingly. A total of 78% of the articles reported empirical research. The remaining 22% were classified as non‐empirical research papers. The five most popular topics were “information retrieval,” “information behaviour,” “information literacy,” “library services,” and “organization and management.” An overwhelming majority of the empirical research articles employed a quantitative approach. Although the survey emerged as the most frequently used research strategy, there is evidence that the number and variety of research methodologies have increased. There is also evidence that qualitative approaches are gaining increasing importance and have a role to play in LIS, while mixed methods have not yet gained enough recognition in LIS research.

- library and information science

- research methods

- research strategies

- data analysis techniques

- research articles

Author Information

Aspasia Togia *

- Department of Library Science & Information Systems, Technological Educational Institute (TEI) of Thessaloniki, Greece

Afrodite Malliari

- DataScouting, Thessaloniki, Greece

*Address all correspondence to: [email protected]

1. Introduction

Library and information science (LIS), as its name indicates, is a merging of librarianship and information science that took place in the 1960s [ 1 , 2 ]. LIS is a field of both professional practice and scientific inquiry. As a field of practice, it includes the profession of librarianship as well as a number of other information professions, all of which assume the interplay of the following:

information content,

the people who interact with the content, and

the technology used to facilitate the creation, communication, storage, or transformation of the content [ 3 ].

The disciplinary foundation of LIS, which began in the 1920s, aimed at providing a theoretical foundation for the library profession. LIS has evolved in close relationship with other fields of research, especially computer science, communication studies, and cognitive sciences [ 4 ].

The connection of LIS with professional practice, on one hand, and other research fields on the other has influenced its research orientation and the development of methodological tools and theoretical perspectives [ 5 ]. Research problems are diverse, depending on the research direction, local trends, etc. Most of them relate to professional practice, although there are theoretical research statements as well. LIS research strives to address important information issues, such as those of “ information retrieval, information quality and authenticity, policy for access and preservation, the health and security applications of data mining ” (p. 3) [ 6 ]. The research is multidisciplinary in nature, and it has been heavily influenced by research designs developed in the social, behavioral, and management sciences and to a lesser extent by the theoretical inquiry adopted in the humanities [ 7 ]. Methods used in information retrieval research have been adapted from computer science. The emergence of evidence‐based librarianship in the late 1990s brought a positivist approach to LIS research, since it incorporated many of the research designs and methods used in clinical medicine [ 7 , 8 ]. In addition, LIS has developed its own methodological approaches, a prominent example of which is bibliometrics. Bibliometrics, which can be defined as “ the use of mathematical and statistical methods to study documents and patterns of publication ” (p. 38) [ 9 ], is a native research methodology, which has been extensively used outside the field, especially in science studies [ 10 ].

Library and information science research has been often criticized as being fragmentary, narrowly focused, and oriented to practical problems [ 11 ]. Many authors have noticed limited use of theory in published research and have advocated greater use of theory as a conceptual basis in LIS research [ 4 , 11 – 14 ]. Feehan et al. [ 13 ] claimed that LIS literature has not evolved enough to support a rigid body of its own theoretical basis. Jarvelin and Vakkari [ 15 ] argued that LIS theories are usually vague and conceptually unclear, and that research in LIS has been dominated by a paradigm which “ has made little use of such traditional scientific approaches as foundations and conceptual analysis, or of scientific explanation and theory formulation ” (p. 415). This lack of theoretical contributions may be associated with the fact that LIS emanated from professional practice and is therefore closely linked to practical problems such as the processing and organization of library materials, documentation, and information retrieval [ 15 , 16 ].

In this chapter, after briefly discussing the role of theory in LIS research, we provide an updated view of research issues in the field that will help scholars and students stay informed about topics related to research strategies and methods. To accomplish this, we describe and analyze patterns of LIS research activity as reflected in prominent library journals. The analysis of the articles highlights trends and recurring themes in LIS research regarding the use of multiple methods, the adoption of qualitative approaches, and the employment of advanced techniques for data analysis and interpretation [ 17 ].

2. The role of theory in LIS research

The presence of theory is an indication of research eminence and respectability [ 18 ], as well as a feature of a discipline’s maturity [ 19 , 20 ]. Theory has been defined in many ways. “ Any of the following have been used as the meaning of theory: a law, a hypothesis, group of hypotheses, proposition, supposition, explanation, model, assumption, conjecture, construct, edifice, structure, opinion, speculation, belief, principle, rule, point of view, generalization, scheme, or idea ” (p. 309) [ 21 ]. A theory can be described as “ a set of interrelated concepts, definitions, and propositions that explains or predicts events or situations by specifying relations among variables ” [ 22 ]. According to Babbie [ 23 ], theory is “ a systematic explanation for the observed facts and laws that related to a particular aspect of life ” (p. 49). It is “ a multiple‐level component of the research process, comprising a range of generalizations that move beyond a descriptive level to a more explanatory level ” [ 24 ] (p. 319). The role of theory in social sciences is, among other things, to explain and predict behavior, be usable in practical applications, and guide research [ 25 ]. According to Smiraglia [ 26 ], theory does not exist in a vacuum but in a system that explains the domains of human actions, the phenomena found in these domains, and the ways in which they are affected. He maintains that theory is developed by systematically observing phenomena, either in the positivist empirical research paradigm or in the qualitative hermeneutic paradigm. Theory is used to formulate hypotheses in quantitative research and to confirm observations in qualitative research.

Glazier and Grover [ 24 ] proposed a model for theory‐building in LIS called “circuits of theory.” The model includes a taxonomy of theory, developed earlier by the authors [ 11 ], and the critical social and psychological factors that influence research. The purpose of the taxonomy was to demonstrate the relationships among the concepts of research, theory, paradigms, and phenomena. Phenomena are described as “ events experienced in the empirical world ” (p. 230) [ 11 ]. Researchers assign symbols (digital or iconic representations, usually words or pictures) to phenomena, and meaning to symbols, and then they conceptualize the relationships among phenomena and formulate hypotheses and research questions. “ In the taxonomy, empirical research begins with the formation of research questions to be answered about the concepts or hypotheses for testing the concepts within a narrow set of predetermined parameters ” (p. 323) [ 24 ]. Various levels of theories, with implications for research in library and information science, are described. The first theory level, called substantive theory, is defined as “ a set of propositions which furnish an explanation for an applied area of inquiry ” (p. 233) [ 11 ]. In fact, it may not be viewed as a theory but rather be considered as a research hypothesis that has been tested or even a research finding [ 16 ]. The next level of theory, called formal theory, is defined as “ a set of propositions which furnish an explanation for a formal or conceptual area of inquiry, that is, a discipline ” (p. 234) [ 11 ]. Substantive and formal theories together are usually considered as “middle range” theory in the social sciences. Their difference lies in the ability to structure generalizations and the potential for explanation and prediction. The final level, grand theory, is “ a set of theories or generalizations that transcend the borders of disciplines to explain relationships among phenomena ” (p. 321) [ 24 ].
According to the authors, most research generates substantive level theory, or, alternatively, researchers borrow theory from the appropriate discipline, apply it to the problem under investigation, and reconstruct the theory at the substantive level. Next in the hierarchy of theoretical categories is the paradigm , which is described as “ a framework of basic assumptions with which perceptions are evaluated and relationships are delineated and applied to a discipline or profession ” (p. 234) [ 11 ]. Finally, the most significant theoretical category is the world view , which is defined as “ an individual’s accepted knowledge, including values and assumptions, which provide a ‘filter’ for perception of all phenomena ” (p. 235) [ 11 ]. All the previous categories contribute to shaping the individual’s worldview. In the revised model, which places more emphasis on the impact of social environment on the research process, research and theory building is surrounded by a system of three basic contextual modules: the self, society, and knowledge, both discovered and undiscovered. The interactions and dialectical relationships of these three modules affect the research process and create a dynamic environment that fosters theory creation and development. The authors argue that their model will help researchers build theories that enable generalizations beyond the conclusions drawn from empirical data [ 24 ].

In an effort to propose a framework for a unified theory of librarianship, McGrath [ 27 ] reviewed research articles in the areas of publishing, acquisitions, classification and knowledge organization, storage, preservation and collection management, library collections, and circulations. In his study, he included articles that employed explanatory and predictive statistical methods to explore relationships between variables within and between the above subfields of LIS. For each paper reviewed, he identified the dependent variable, significant independent variables, and the units of analysis. The review displayed explanatory studies “ in nearly every level, with the possible exception of classification, while studies in circulation and use of the library were clearly dominant. A recapitulation showed that a variable at one level may be a unit of analysis at another, a property of explanatory research crucial to the development of theory, which has been either ignored or unrecognized in LIS literature ” (p. 368) [ 27 ]. The author concluded that “explanatory and predictive relationships do exist and that they can be useful in constructing a comprehensive unified theory of librarianship” (p. 368) [ 27 ].

Recent LIS literature provides several analyses of theory development and use in the field. In a longitudinal analysis of the information needs and uses literature, Julien and Duggan [ 28 ] investigated, among other things, to what extent LIS literature was grounded in theory. Articles “ based on a coherent and explicit framework of assumptions, definitions, and propositions that, taken together, have some explanatory power ” (p. 294) were classified as theoretical articles. Results showed that only 18.3% of the research studies identified in the sample of articles examined were theoretically grounded.

Pettigrew and McKechnie [ 29 ] analyzed 1160 journal articles published between 1993 and 1998 to determine the level of theory use in information science research. In the absence of a singular definition of theory that would cover all the different uses of the term in the sample of articles, they operationalized “theory” according to authors’ use of the term. They found that 34.1% of the articles incorporated theory, with the largest percentage of theories drawn from the social sciences. Information science itself was the second most important source of theories. The authors argued that this significant increase in theory use in comparison to earlier studies could be explained by the research‐oriented journals they selected for examination, the sample time, and the broad way in which they defined “theory.” With regard to this last point, that is, their approach of identifying theories only if the author(s) describe them as such in the article, Pettigrew and McKechnie [ 29 ] observed significant differences in how information science researchers perceive theory:

Although it is possible that conceptual differences regarding the nature of theory may be due to the different disciplinary backgrounds of researchers in IS, other themes emerged from our data that suggest a general confusion exists about theory even within subfields. Numerous examples came to light during our analysis in which an author would simultaneously refer to something as a theory and a method, or as a theory and a model, or as a theory and a reported finding. In other words, it seems as though authors, themselves, are sometimes unsure about what constitutes theory. Questions even arose regarding whether the author to whom a theory was credited would him or herself consider his or her work as theory (p. 68).

Kim and Jeong [ 16 ] examined the state and characteristics of theoretical research in LIS journals between 1984 and 2003. They focused on the “theory incident,” which is described as “an event in which the author contributes to the development or the use of theory in his/her paper.” Their study adopted Glazier and Grover’s [ 24 ] model of “circuits of theory.” Substantive level theory was operationalized as a tested hypothesis or an observed relationship, while both formal and grand level theories were identified when they were named as “theory,” “model,” or “law” by authors other than those who had developed them. Results demonstrated that the application of theory was present in 41.4% of the articles examined, indicating a significant increase in the proportion of theoretical articles as compared to previous studies. Moreover, it was evident that both theory development and theory use had increased over the years. Information seeking and use, and information retrieval, were identified as the subfields with the most significant contribution to the development of the theoretical framework.

In a more in‐depth analysis of theory use, Kumasi et al. [ 30 ] qualitatively analyzed the extent to which theory is meaningfully used in scholarly literature. For this purpose, they developed a theory talk coding scheme, which included six analytical categories, describing how theory is discussed in a study. The intensity of theory talk in the articles was described across a continuum from minimal (e.g., theory is discussed in literature review and not mentioned later) through moderate (e.g., multiple theories are introduced but without discussing their relevance to the study) to major (e.g., theory is employed throughout the study). Their findings seem to support the opinion that “ LIS discipline has been focused on the application of specific theoretical frameworks rather than the generation of new theories ” (p. 179) [ 30 ]. Another point the authors made was about the multiple terms used in the articles to describe theory. Words such as “framework,” “model,” or “theory” were used interchangeably by scholars.

It is evident from the above discussion that the treatment of theory in LIS research covers a spectrum of intensity, from marginal mentions to theory revising, expanding, or building. Recent analyses of the published scholarship indicate that the field has not been very successful in contributing to existing theory or producing new theory. In spite of this, one may still assert that LIS research employs theory, and, in fact, there are many theories that have been used or generated by LIS scholars. However, “ calls for additional and novel theory development work in LIS continue, particularly for theories that might help to address the research practice gap ” (p. 12) [ 31 ].

3. Research strategies in LIS

3.1. Surveys of research methods

LIS is a very broad discipline, which uses a wide range of constantly evolving research strategies and techniques [ 32 ]. Various classification schemes have been developed to analyze methods employed in LIS research (e.g., [ 13 , 15 , 17 , 33 – 35 , 38 ]). Back in 1996, in the “research record” column of the Journal of Education for Library and Information Science, Kim [ 36 ] synthesized previous categories and definitions and introduced a list of research strategies, including data collection and analysis methods. The listing included four general research strategies: (i) theoretical/philosophical inquiry (development of conceptual models or frameworks), (ii) bibliographic research (descriptive studies of books and their properties as well as bibliographies of various kinds), (iii) R&D (development of storage and retrieval systems, software, interface, etc.), and (iv) action research (aimed at solving problems and bringing about change in organizations). Strategies are then divided into quantitative‐driven and qualitative‐driven. The first category includes descriptive studies, predictive/explanatory studies, bibliometric studies, content analysis, and operations research studies. The following are considered qualitative‐driven strategies: case study, biographical method, historical method, grounded theory, ethnography, phenomenology, symbolic interactionism/semiotics, sociolinguistics/discourse analysis/ethnographic semantics/ethnography of communication, and hermeneutics/interpretive interactionism (p. 378–380) [ 36 ].

Systematic studies of research methods in LIS started in the 1980s and several reviews of the literature have been conducted over the past years to analyze the topics, methodologies, and quality of research. One of the earliest studies was done by Peritz [ 37 ] who carried out a bibliometric analysis of the articles published in 39 core LIS journals between 1950 and 1975. She examined the methodologies used, the type of library or organization investigated, the type of activity investigated, and the institutional affiliation of the authors. The most important findings were a clear orientation toward library and information service activities, a widespread use of the survey methodology, a considerable increase of research articles after 1960, and a significant increase in theoretical studies after 1965.

Nour [ 38 ] followed up on Peritz’s [ 37 ] work and studied research articles published in 41 selected journals during the year 1980. She found that survey and theoretical/analytic methodologies were the most popular, followed by bibliometrics. Comparing these findings to those made by Peritz [ 37 ], Nour [ 38 ] found that the amount of research continued to increase, but the proportion of research articles to all articles had been decreasing since 1975.

Feehan et al. [ 13 ] described how LIS research published during 1984 was distributed over various topics and what methods had been used to study these topics. Their analysis revealed a predominance of survey and historical methods and a notable percentage of articles using more than one research method. Following a different approach, Enger et al. (1989) focused on the statistical methods used by LIS researchers in articles published during 1985 [ 39 ]. They found that only one out of three of the articles reported any use of statistics. Of those, 21% used descriptive statistics and 11% inferential statistics. In addition, the authors found that researchers from disciplines other than LIS made the highest use of statistics and LIS faculty showed the highest use of inferential statistics.

An influential work, against which later studies have been compared, is that of Jarvelin and Vakkari [ 15 ] who studied LIS articles published in 1985 in order to determine how research was distributed over various subjects, what approaches had been taken by the authors, and what research strategies had been used. The authors replicated their study later to include older research published between 1965 and 1985 [ 40 ]. The main finding of these studies was that the trends and characteristics of LIS research remained more or less the same over the aforementioned period of 20 years. The most common topics were information service activities and information storage and retrieval. Empirical research strategies were predominant, and of them, the most frequent was the survey. Kumpulainen [ 41 ], in an effort to provide a continuum with Jarvelin and Vakkari’s [ 15 ] study, analyzed 632 articles sampled from 30 core LIS journals with respect to various characteristics, including topics, aspect of activity, research method, data selection method, and data analysis techniques. She used the same classification scheme, and she selected the journals based on a slightly modified version of Jarvelin and Vakkari’s [ 15 ] list. Library services and information storage and retrieval emerged again as the most common subjects approached by the authors, and the survey was the most frequently used method.

More recent studies of this nature include those conducted by Koufogiannakis et al. [ 42 ], Hildreth and Aytac [ 43 ], Hider and Pymm [ 32 ], and Chu [ 17 ]. Koufogiannakis et al. [ 42 ] examined research articles published in 2001 and they found that the majority of them were questionnaire‐based descriptive studies. Comparative, bibliometrics, content analysis, and program evaluation studies were also popular. Information storage and retrieval emerged as the predominant subject area, followed by library collections and management. Hildreth and Aytac [ 43 ] presented a review of the 2003–2005 published library research with special focus on methodology issues and the quality of published articles of both practitioners and academic scholars. They found that most research was descriptive and the most frequent method for data collection was the questionnaire, followed by content analysis and interviews. With regard to data analysis, more researchers used quantitative methods, considerably fewer used qualitative‐only methods, whereas 61 out of 206 studies included some kind of qualitative analysis, raising the total percentage of qualitative methods to nearly 50%. With regard to the quality of published research, the authors argued that “ the majority of the reports are detailed, comprehensive, and well‐organized ” (p. 254) [ 43 ]. Still, they noticed that the majority of reports did not mention the critical issues of research validity and reliability, nor did they indicate study limitations or future research recommendations. Hider and Pymm [ 32 ] described content analysis of LIS literature “ which aimed to identify the most common strategies and techniques employed by LIS researchers carrying out high‐profile empirical research ” (p. 109). Their results suggested that while researchers employed a wide variety of strategies, they mostly used surveys and experiments.
They also observed that although quantitative research accounted for more than 50% of the articles, there was an increase in the use of more sophisticated qualitative methods. Chu [ 17 ] analyzed the research articles published between 2001 and 2010 in three major journals and reported the following most frequent research methods: theoretical approach (e.g., conceptual analysis), content analysis, questionnaire, interview, experiment, and bibliometrics. Her study showed an increase in both the number and variety of research methods but a lack of growth in the use of qualitative research or in the adoption of multiple research methods.

In summary, the literature shows a continued interest in the analysis of published LIS research. Approaches include focusing on particular publication years, geographic areas, journal titles, aspects of LIS, and specific characteristics, such as subjects, authorship, and research methods. Despite the abundance of content analyses of LIS literature, the findings are not easily comparable due to differences in the number and titles of journals examined, in the types of the papers selected for analysis, in the periods covered, and in classification schemes developed by the authors to categorize article topics and research strategies. Despite the differences, some findings are consistent among all studies:

Information seeking, information retrieval, and library and information service activities are among the most common subjects studied,

Descriptive research methodologies based on surveys and questionnaires predominate,

Over the years, there has been a considerable increase in the array of research approaches used to explore library issues, and

Data analysis is usually limited to descriptive statistics, including frequencies, means, and standard deviations.

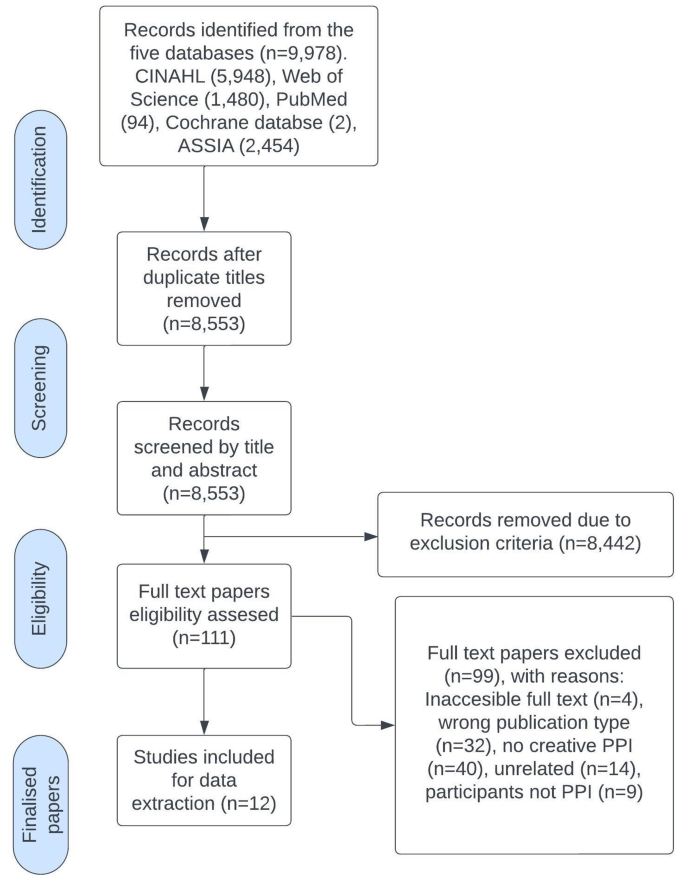

3.2. Data collection and analysis

Articles published between 2011 and 2016 were obtained from the following journals: Library and Information Science Research, College & Research Libraries, Journal of Documentation, Information Processing & Management, and Journal of Academic Librarianship ( Table 1 ). These five titles were selected as data sources because they have the highest 5‐year impact factor of the journals classified in Ulrich’s Serials Directory under the “Library and Information Sciences” subject heading. From the journals selected, only full‐length articles were collected. Editorials, book reviews, letters, interviews, commentaries, and news items were excluded from the analysis. This selection process yielded 1643 articles. A stratified random sample of 440 articles was chosen for in‐depth analysis ( Table 2 ). For the purpose of this study, five strata, corresponding to the five journals, were used. The sample size was determined using a 4% margin of error and a 95% confidence level.
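The reported sample size can be checked with a short calculation. The sketch below assumes Cochran's formula for estimating a proportion, with a finite population correction, the most conservative proportion p = 0.5, and z = 1.96 for 95% confidence. The chapter does not state which formula was used, so these choices and the function name are assumptions made for illustration.

```python
import math


def required_sample_size(population, margin_of_error, z=1.96, p=0.5):
    """Cochran's sample size for a proportion, with finite population correction.

    Assumptions (not stated in the chapter): z = 1.96 for 95% confidence
    and the most conservative proportion p = 0.5.
    """
    # Sample size for an effectively infinite population.
    n0 = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    # Shrink it to account for the finite population of articles.
    n = n0 / (1 + (n0 - 1) / population)
    # Round up so the margin of error is not exceeded.
    return math.ceil(n)


# 1643 full-length articles, 4% margin of error.
sample_size = required_sample_size(1643, 0.04)
print(sample_size)
```

Under these assumptions the calculation yields 440, which agrees with the sample size reported for the study.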

Table 1.

Profile of the journals.

Table 2.

Journal titles.

Each article was classified as either research or theoretical. Articles that employed a specific research methodology and presented specific findings of original studies performed by the author(s) were considered research articles. The kind of study could vary (e.g., it could be an experiment, a survey, etc.), but in all cases, raw data had been collected and analyzed, and conclusions were drawn from the results of that analysis. Articles reporting research in system design or evaluation in the information systems field were also regarded as research articles. On the other hand, works that reviewed theories, theoretical concepts, or principles, discussed topics of interest to researchers and professionals, or described research methodologies were regarded as theoretical articles [ 44 ] and were classified in the non‐empirical research category. This category also included literature reviews and articles describing a project, a situation, a process, etc.

Each article was classified into a topical category according to its main subject. The articles classified as research were then further explored and analyzed to identify (i) research approach, (ii) research methodology, and (iii) method of data analysis. For each variable, a coding scheme was developed, and the articles were coded accordingly. The final list of the analysis codes was extracted inductively from the data itself, using as reference the taxonomies utilized in previous studies [ 15 , 32 , 43 , 45 ]. Research approaches “ are plans and procedures for research ” (p. 3) [ 46 ]. Research approaches can generally be grouped as qualitative, quantitative, and mixed methods studies. Quantitative studies aim at the systematic empirical investigation of quantitative properties or phenomena and their relationships. Qualitative research can be broadly defined as “ any kind of research that produces findings not arrived at by means of statistical procedures or other means of quantification ” (p. 17) [ 47 ]. It is a way to gain insights through discovering meanings and explaining phenomena based on the attributes of the data. In mixed methods research, quantitative and qualitative approaches are combined within or across the stages of the research process. It was beyond the scope of this study to identify in which stages of a study (data collection, data analysis, and data interpretation) the mixing was applied or to reveal the types of mixing. Therefore, studies using both quantitative and qualitative methods, irrespective of whether they describe if and how the methods were integrated, were coded as mixed methods studies.
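The rule for coding the research approach can be sketched in a few lines. This is an illustrative reading of the rule described above, not the authors' actual coding instrument; the function name, the boolean inputs, and the sample observations are all hypothetical.

```python
from collections import Counter


def code_research_approach(uses_quantitative, uses_qualitative):
    """Assign a research-approach code from two yes/no observations."""
    if uses_quantitative and uses_qualitative:
        # Coded as mixed methods regardless of whether or how the article
        # says the two kinds of methods were integrated.
        return "mixed methods"
    if uses_quantitative:
        return "quantitative"
    if uses_qualitative:
        return "qualitative"
    # Assumption: every research article uses at least one kind of method.
    raise ValueError("a research article must use at least one kind of method")


# Hypothetical observations for three articles (not data from the study).
observations = [(True, False), (False, True), (True, True)]
tally = Counter(code_research_approach(quant, qual) for quant, qual in observations)
print(tally)
```

Tallying the codes with `Counter` gives the frequency of each approach, which is the kind of summary a content analysis of this type reports.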

Research methodologies , or strategies of inquiry, are types of research models “ that provide specific direction for procedures in a research design ” (p. 11) [ 46 ] and inform the decisions concerning data collection and analysis. A coding schema of research methodologies was developed by the authors based on the analysis of all research articles included in the sample. The methodology classification included 12 categories ( Table 3 ). Each article was classified into one category for the variable research methodology . If more than one research strategy was mentioned (e.g., experiment and survey), the article was classified according to the main strategy.

Table 3.

Coding schema for research methodologies.

Methods of data analysis refer to the techniques used by the researchers to explore the original data and answer their research problems or questions. Data analysis in quantitative research involves statistical analysis and interpretation of figures and numbers. In qualitative studies, on the other hand, data analysis involves identifying common patterns within the data and interpreting the meanings of the data. The array of data analysis methods included the following categories:

Descriptive statistics,

Inferential statistics,

Qualitative data analysis,

Experimental evaluation, and

Other methods.

Descriptive statistics are used to describe the basic features of the data in a study. Inferential statistics investigate questions, models, and hypotheses. Mathematical analysis refers to mathematical functions, etc. used mainly in bibliometric studies to answer research questions associated with citation data. Qualitative data analysis is the range of processes and procedures used for the exploration of qualitative data, from coding and descriptive analysis to identification of patterns and themes and the testing of emergent findings and hypotheses. It was used in this study as an overarching term encompassing various types of analysis, such as thematic analysis, discourse analysis, or grounded theory analysis. The class experimental evaluation was used for system and software analysis and design studies that assess a newly developed algorithm, tool, method, etc. by performing experiments on selected datasets. In these cases, “experiments” differ from the experimental designs used in the social sciences. Methods that did not fall into one of these categories (e.g., mathematical analysis, visualization, or benchmarking) were classified as other methods. If both descriptive and inferential statistics were used in an article, only the inferential statistics were recorded. In mixed methods studies, each method was recorded in the order in which it was reported in the article.
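The two recording rules stated above (inferential statistics take precedence over descriptive statistics, and methods are kept in the order in which the article reports them) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `record_analysis_methods` and the exact category strings are hypothetical and are not taken from the study's coding instrument.

```python
def record_analysis_methods(reported):
    """Apply the recording rules to the methods an article reports, in order.

    `reported` is a list of category labels in the order the article
    mentions them. The labels used here are hypothetical stand-ins for
    the study's data analysis categories.
    """
    # Drop repeated mentions while preserving the reporting order.
    methods = list(dict.fromkeys(reported))
    # If both kinds of statistics appear, record only the inferential ones.
    if "inferential statistics" in methods and "descriptive statistics" in methods:
        methods.remove("descriptive statistics")
    return methods


# A hypothetical mixed methods article that reports three kinds of analysis.
print(record_analysis_methods([
    "qualitative data analysis",
    "descriptive statistics",
    "inferential statistics",
]))
```

An article that reports only descriptive statistics keeps that code unchanged, because the precedence rule applies only when both kinds of statistics are present.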

Ten percent of the articles were randomly selected and used to establish inter‐rater reliability and provide basic validation of the coding schema. Cohen’s kappa was calculated for each coded variable. The average Cohen’s kappa value was κ = 0.60, p < 0.001 (the highest was 0.63 and the lowest was 0.59). This indicates substantial agreement [ 48 ]. The coding disparities across raters were discussed, and the final codes were determined via consensus.
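For readers unfamiliar with the statistic, Cohen’s kappa compares the observed agreement between two raters with the agreement expected by chance from each rater’s code frequencies: κ = (p_o − p_e) / (1 − p_e). The following is a minimal sketch of that calculation, not the authors’ actual procedure; the function name `cohens_kappa` and the example codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who each assign one nominal code per item."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must code the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same code by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's marginal code frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if p_e == 1.0:
        return 1.0  # both raters used one and the same code throughout
    return (p_o - p_e) / (1 - p_e)


# Hypothetical research-approach codes for ten articles (not the study's data).
rater_a = ["quant", "quant", "quant", "quant", "qual",
           "qual", "qual", "mixed", "mixed", "quant"]
rater_b = ["quant", "quant", "quant", "qual", "qual",
           "qual", "mixed", "mixed", "mixed", "quant"]
print(round(cohens_kappa(rater_a, rater_b), 2))  # prints 0.69
```

In this invented example the raters agree on 8 of 10 articles (p_o = 0.80) and chance agreement is p_e = 0.35, so κ = 0.45 / 0.65 ≈ 0.69.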

3.3. Results

3.3.1. Topic

Table 4 presents the distribution of articles over the various topics, for each of which a detailed description is provided. The five most popular topics of the papers in the total sample of 440 articles were “information retrieval,” “information behavior,” “information literacy,” “library services,” and “organization and management.” These areas cover over 60% of all topics studied in the papers. The least‐studied topics (covered in fewer than eight papers) fall into the categories of “information and knowledge management,” “library information systems,” “LIS theory,” and “infometrics.”

Table 4.

Article topics.

Figure 1 shows how the top five topics are distributed across journals. As expected, the topic “information retrieval” has higher publication frequencies in Information Processing & Management, a journal focusing on system design and issues related to the tools and techniques used in the storage and retrieval of information. “Information literacy,” “information behavior,” “library services,” and “organization and management” appear to be distributed almost proportionately in College & Research Libraries. “Information literacy” seems to be a preferred topic in the Journal of Academic Librarianship, while “information behavior” is more popular in the Journal of Documentation and Library & Information Science Research.

Figure 1.

Distribution of topics across journals.

3.3.2. Research approach and methodology

Of all articles examined, 343 (78% of the sample) reported empirical research. The remaining 22% (N = 97) were classified as non‐empirical research papers. Research articles were coded as quantitative, qualitative, or mixed methods studies. A clear majority (70%) of the empirical research articles employed a quantitative research approach. Qualitative and mixed methods research was reported in 21.6% and 8.5% of the articles, respectively ( Figure 2 ).

Figure 2.

Research approach.

Table 5 presents the distribution of research approaches over the five most popular topics. The quantitative approach clearly prevails in all topics, especially in information retrieval research. However, qualitative designs seem to be gaining acceptance in all topics (except information retrieval), while in information behavior research, quantitative and qualitative approaches are almost evenly distributed. Mixed methods were quite frequent in information literacy and information behavior studies and less popular in the other topics.

Table 5.

Topics across research approach.

The most frequently used research strategy was survey, accounting for almost 37% of all research articles, followed by system and software analysis and design, a strategy used in this study specifically for research in information systems [ 15 ]. This result is influenced by the fact that Information Processing & Management addresses issues at the intersection between LIS and computer science, and the majority of its articles present the development of new tools, algorithms, methods, and systems, and their experimental evaluation. The third‐ and fourth‐ranking strategies were content analysis and bibliometrics. Case study, experiment, and secondary data analysis were represented by 15 articles each, while the rest of the techniques were underrepresented with considerably fewer articles ( Table 6 ).

Table 6.

Research methodologies.

3.3.3. Methods of data analysis

Table 7 displays the frequencies for each type of data analysis.

Table 7.

Method of data analysis.

Almost half of the empirical research papers examined reported some use of statistics. Descriptive statistics, such as frequencies, means, or standard deviations, were used more frequently than inferential statistics, such as ANOVA, regression, or factor analysis. Nearly one‐third of the articles employed some type of qualitative data analysis, either as the only method or, in mixed methods studies, in combination with quantitative techniques.
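The distinction between the two kinds of statistics can be illustrated with a short sketch. Descriptive statistics summarize the sample itself, whereas an inferential procedure uses those summaries to assess a claim about the wider population. The example below is an assumption-laden illustration rather than an analysis from this study: the function names `describe` and `welch_t` and the two sets of weekly library visit counts are hypothetical, and Welch’s t statistic stands in for the broader family of inferential tests mentioned above.

```python
from math import sqrt
from statistics import mean, stdev


def describe(values):
    """Descriptive statistics: summarize the sample itself."""
    return {"n": len(values), "mean": mean(values), "sd": stdev(values)}


def welch_t(sample_a, sample_b):
    """Inferential step: Welch's t statistic for a difference in means."""
    a, b = describe(sample_a), describe(sample_b)
    # Standard error of the difference, without assuming equal variances.
    standard_error = sqrt(a["sd"] ** 2 / a["n"] + b["sd"] ** 2 / b["n"])
    return (a["mean"] - b["mean"]) / standard_error


# Hypothetical weekly library visits for two groups of users.
undergraduates = [2, 4, 6, 3, 5]
postgraduates = [1, 2, 3, 2, 2]

print(describe(undergraduates))
print(describe(postgraduates))
print(welch_t(undergraduates, postgraduates))
```

The t statistic alone does not yield a conclusion; it would still have to be compared against a t distribution with the appropriate degrees of freedom to obtain a p value.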

3.4. Discussions and conclusions

The patterns of LIS research activity as reflected in the articles published between 2011 and 2016 in five well‐established, peer‐reviewed journals were described and analyzed. LIS literature addresses many diverse topics. Information retrieval, information behavior, and library services continue to attract the interest of researchers, as they are core areas in library science. Information retrieval was rated as one of the most popular areas of interest in research articles published between 1965 and 1985 [ 40 ]. According to Dimitroff [ 49 ], information retrieval was the second most popular topic in the articles published in the Bulletin of the Medical Library Association, while Cano [ 50 ] argued that LIS research produced in Spain from 1977 to 1994 was mostly centered on information retrieval and library and information services. In addition, Koufogiannakis et al. [ 42 ] found that information access and retrieval was the domain with the most research, and in Hildreth and Aytac’s [ 43 ] study, most articles dealt with issues related to users (needs, behavior, information seeking, etc.), services, and collections. The present study provides evidence that the amount of research in information literacy is increasing, presumably due to the growing importance of information literacy instruction in libraries. In recent years, librarians have taken on a growing educational role, engaging more and more actively in teaching and learning processes, a trend that is reflected in the research output.

With regard to research methodologies, the present study seems to confirm the well‐documented predominance of survey in LIS research. According to Dimitroff [ 49 ], the share of survey research methods reported in various studies varied between 20.3 and 41.5%. Powell [ 51 ], in a review of the research methods appearing in LIS literature, pointed out that survey had consistently been the most common type of study in both dissertations and journal articles. Survey was reported as the most widely used research design by Jarvelin and Vakkari [ 40 ], Crawford [ 52 ], Hildreth and Aytac [ 43 ], and Hider and Pymm [ 32 ]. The majority of articles examined by Koufogiannakis et al. [ 42 ] were descriptive studies using questionnaires/surveys. In addition, survey methods represented the largest proportion of methods used in information behavior articles analyzed by Julien et al. [ 53 ]. There is no doubt that survey has been used more than any other method in LIS research. As Jarvelin and Vakkari [ 15 ] put it, “it appears that the field is so survey‐oriented that almost all problems are seen through a survey viewpoint” (p. 416). Much of survey’s popularity can be ascribed to its being a well‐known, well‐understood, easily conducted, and inexpensive method whose results are easy to analyze [ 41 , 42 ]. However, our findings suggest that while survey ranks high, a variety of other methods have also been used in the research articles. Content analysis emerged as the third‐most frequent strategy, a finding similar to those of previous studies [ 17 , 32 ]. Although content analysis was not regarded by LIS researchers as a favored research method until recently, its popularity seems to be growing [ 17 ].

Quantitative approaches, which dominate, tend to rely on frequency counts, percentages, and descriptive statistics used to describe the basic features of the data in a study. Fewer studies used advanced statistical analysis techniques, such as t‐tests, correlation, and regression, while there were some examples of more sophisticated methods, such as factor analysis, ANOVA, MANOVA, and structural equation modeling. Researchers engaging in quantitative research designs should consider the use of inferential statistics, which enable generalization from the sample being studied to the population of interest and, if used appropriately, are very useful for hypothesis testing. In addition, multivariate statistics are suitable for examining the relationships among variables, revealing patterns, and understanding complex phenomena.

The findings also suggest that qualitative approaches are gaining increasing importance and have a role to play in LIS studies. These results are comparable to the findings of Hider and Pymm [ 32 ], who observed significant increases for qualitative research strategies in contemporary LIS literature. Qualitative analysis description varied widely, reflecting the diverse perspectives, analysis methods, and levels of depth of analysis. Commonly used terms in the articles included coding, content analysis, thematic analysis, thematic analytical approach, theme, or pattern identification. One could argue that the efforts made to encourage and promote qualitative methods in LIS research [ 54 , 55 ] have made some impact. However, qualitative research methods do not seem to be adequately utilized by library researchers and practitioners, despite their potential to offer far more illuminating ways to study library‐related issues [ 56 ]. LIS research has much to gain from the interpretive paradigm underpinning qualitative methods. This paradigm assumes that social reality is

the product of processes by which social actors together negotiate the meanings for actions and situations; it is a complex of socially constructed meanings. Human experience involves a process of interpretation rather than sensory, material apprehension of the external physical world and human behavior depends on how individuals interpret the conditions in which they find themselves. Social reality is not some ‘thing’ that may be interpreted in different ways, it is those interpretations (p. 96) [ 57 ].

As stated in the introduction of this chapter, library and information science focuses on the interaction between individuals and information. In every area of LIS research, the connection of factors that lead to and influence this interaction is increasingly complex. Qualitative research searches for “ all aspects of that complexity on the grounds that they are essential to understanding the behavior of which they are a part ” (p. 241) [ 59 ]. Qualitative research designs can offer a more in‐depth analysis of library users, their needs, attitudes, and behaviors.

The use of mixed methods designs was found to be rather rare. While Hildreth and Aytac [ 43 ] found higher percentages of studies using combined methods in data analysis, our results are analogous to those shown by Fidel [ 60 ]. In fact, as in her study, only a few of the articles analyzed referred to mixed methods research by name, a finding indicating that “the concept has not yet gained recognition in LIS research” (p. 268). Mixed methods research has become an established research approach in the social sciences, as it minimizes the weaknesses of quantitative and qualitative research alone and allows researchers to investigate phenomena more completely [ 58 ].

In conclusion, there is evidence that LIS researchers employ a large number and wide variety of research methodologies. Each research approach, strategy, and method has its advantages and limitations. If the aim of the study is to confirm hypotheses about phenomena or to measure and analyze the causal relationships between variables, then quantitative methods might be used. If the research seeks to explore, understand, and explain phenomena, then qualitative methods might be used. Researchers can consider the full range of possibilities and make their selection based on the philosophical assumptions they bring to the study, the research problem being addressed, their personal experiences, and the intended audience for the study [ 46 ].

Given the increasing use of qualitative methods in LIS studies, an in‐depth analysis of papers using qualitative methods would be of interest. A future study that presents and analyzes the different research strategies and types of analysis used in qualitative research could help LIS practitioners understand the benefits of qualitative analysis.

Mixed methods used in LIS research papers could be analyzed in future studies in order to identify in which stages of a study (data collection, data analysis, and data interpretation) the mixing was applied and to reveal the types of mixing.

As for the quantitative research methods, which predominate in LIS research, it would be interesting to identify systematic relations between more than two variables, such as authors’ affiliation, topic, and research strategy, and to create homogeneous groups using multivariate data analysis techniques.

- 1. Buckland MK, Liu ZM. History of information science. Annual Review of Information Science and Technology. 1995; 30 :385-416

- 2. Rayward WB. The history and historiography of information science: Some reflections. Information Processing & Management. 1996; 32 (1):3-17

- 3. Wildemuth BM. Applications of Social Research Methods to Questions in Information and Library Science. Westport, CT: Libraries Unlimited; 2009

- 4. Hjørland B. Theory and metatheory of information science: A new interpretation. Journal of Documentation. 1998; 54 (5):606-621. DOI: http://doi.org/10.1108/EUM0000000007183

- 5. Åström F. Heterogeneity and homogeneity in library and information science research. Information Research [Internet]. 2007 [cited 23 April 2017]; 12 (4): poster colisp01 [3 p.]. Available from: http://www.informationr.net/ir/12-4/colis/colisp01.html

- 6. Dillon A. Keynote address: Library and information science as a research domain: Problems and prospects. Information Research [Internet]. 2007 [cited 23 April 2017]; 12 (4): paper colis03 [6 p.]. Available from: http://www.informationr.net/ir/12-4/colis/colis03.html

- 7. Eldredge JD. Evidence‐based librarianship: An overview. Bulletin of the Medical Library Association. 2000; 88 (4):289-302

- 8. Bradley J, Marshall JG. Using scientific evidence to improve information practice. Health Libraries Review. 1995; 12 (3):147-157

- 9. Bibliometrics. In: International Encyclopedia of Information and Library Science. 2nd ed. London, UK: Routledge; 2003. p. 38

- 10. Åström F. Library and Information Science in context: The development of scientific fields, and their relations to professional contexts. In: Rayward WB, editor. Aware and Responsible: Papers of the Nordic‐International Colloquium on Social and Cultural Awareness and Responsibility in Library, Information and Documentation Studies (SCARLID). Oxford, UK: Scarecrow Press; 2004. pp. 1-27

- 11. Grover R, Glazier J. A conceptual framework for theory building in library and information science. Library and Information Science Research. 1986; 8 (3):227-242

- 12. Boyce BR, Kraft DH. Principles and theories in information science. In: W ME, editor. Annual Review of Information Science and Technology. Medford, NJ: Knowledge Industry Publications. 1985; pp. 153-178

- 13. Feehan PE, Gragg WL, Havener WM, Kester DD. Library and information science research: An analysis of the 1984 journal literature. Library and Information Science Research. 1987; 9 (3):173-185

- 14. Spink A. Information science: A third feedback framework. Journal of the American Society for Information Science. 1997; 48 (8):728-740

- 15. Jarvelin K, Vakkari P. Content analysis of research articles in Library and Information Science. Library and Information Science Research. 1990; 12 (4):395-421

- 16. Kim SJ, Jeong DY. An analysis of the development and use of theory in library and information science research articles. Library and Information Science Research. 2006; 28 (4):548-562. DOI: http://doi.org/10.1016/j.lisr.2006.03.018

- 17. Chu H. Research methods in library and information science: A content analysis. Library & Information Science Research. 2015; 37 (1):36-41. DOI: http://doi.org/10.1016/j.lisr.2014.09.003

- 18. Van Maanen J. Different strokes: Qualitative research in the administrative science quarterly from 1956 to 1996. In: Van Maanen J, editor. Qualitative Studies of Organizations. Thousand Oaks, CA: SAGE; 1998. pp. ix‐xxxii

- 19. Brookes BC. The foundations of information science Part I. Philosophical aspects. Journal of Information Science. 1980; 2 (3/4):125-133

- 20. Hauser L. A conceptual analysis of information science. Library and Information Science Research. 1988; 10 (1):3-35

- 21. McGrath WE. Current theory in Library and Information Science. Introduction. Library Trends. 2002; 50 (3):309-316

- 22. Theory and why it is important - Social and behavioral theories - e-Source Book - OBSSR e-Source [Internet]. Esourceresearch.org. 2017 [cited 23 April 2017]. Available from: http://www.esourceresearch.org/eSourceBook/SocialandBehavioralTheories/3TheoryandWhyItisImportant/tabid/727/Default.aspx

- 23. Babbie E. The practice of social research. 7th ed. Belmont, CA: Wadsworth; 1995

- 24. Glazier JD, Grover R. A multidisciplinary framework for theory building. Library Trends. 2002; 50 (3):317-329

- 25. Glaser B, Strauss AL. The discovery of grounded theory: Strategies for qualitative research. New Brunswick: Aldine Transaction; 1999

- 26. Smiraglia RP. The progress of theory in knowledge organization. Library Trends. 2002; 50 :330-349

- 27. McGrath WE. Explanation and prediction: Building a unified theory of librarianship, concept and review. Library Trends. 2002; 50 (3):350-370

- 28. Julien H, Duggan LJ. A longitudinal analysis of the information needs and uses literature. Library & Information Science Research. 2000; 22 (3):291-309. DOI: http://doi.org/10.1016/S0740‐8188(99)00057‐2

- 29. Pettigrew KE, McKechnie LEF. The use of theory in information science research. Journal of the American Society for Information Science and Technology. 2001; 52 (1):62-73. DOI: http://doi.org/10.1002/1532‐2890(2000)52:1<62::AID‐ASI1061>3.0.CO;2‐J

- 30. Kumasi KD, Charbonneau DH, Walster D. Theory talk in the library science scholarly literature: An exploratory analysis. Library & Information Science Research. 2013; 35 (3):175-180. DOI: http://doi.org/10.1016/j.lisr.2013.02.004

- 31. Rawson C, Hughes‐Hassell S. Research by Design: The promise of design‐based research for school library research. School Libraries Worldwide. 2015; 21 (2):11-25

- 32. Hider P, Pymm B. Empirical research methods reported in high‐profile LIS journal literature. Library & Information Science Research. 2008; 30 (2):108-114. DOI: http://doi.org/10.1016/j.lisr.2007.11.007

- 33. Bernhard P. In search of research methods used in information science. Canadian Journal of Information and Library Science. 1993; 18 (3):1-35

- 34. Blake VLP. Since Shaughnessy. Collection Management. 1994; 19 (1‐2):1-42. DOI: http://doi.org/10.1300/J105v19n01_01

- 35. Schlachter GA. Abstracts of library science dissertations. Library Science Annual. 1989; 1 :1988-1996

- 36. Kim MT. Research record. Journal of Education for Library and Information Science. 1996; 37 (4):376-383

- 37. Peritz BC. The methods of library science research: Some results from a bibliometric survey. Library Research. 1980; 2 (3):251-268

- 38. Nour MM. A quantitative analysis of the research articles published in core library journals of 1980. Library and Information Science Research. 1985; 7 (3):261-273

- 39. Enger KB, Quirk G, Stewart JA. Statistical methods used by authors of library and information science journal articles. Library and Information Science Research. 1989; 11 (1):37-46

- 40. Jarvelin K, Vakkari P. The evolution of library and information science 1965-1985: A content analysis of journal articles. Information Processing and Management. 1993; 29 (1):129-144

- 41. Kumpulainen S. Library and information science research in 1975: Content analysis of the journal articles. Libri. 1991; 41 (1):59-76

- 42. Koufogiannakis D, Slater L, Crumley E. A content analysis of librarianship research. Journal of Information Science. 2004; 30 (3):227-239. DOI: http://doi.org/10.1177/0165551504044668

- 43. Hildreth CR, Aytac S. Recent library practitioner research: A methodological analysis and critique on JSTOR. Journal of Education for Library and Information Science. 2007; 48 (3):236-258

- 44. Gonzales‐Teruel A, Abad‐Garcia MF. Information needs and uses: An analysis of the literature published in Spain, 1990‐2004. Library and Information Science Research. 2007; 29 (1):30-46

- 45. Luo L, Mckinney M. JAL in the past decade: A comprehensive analysis of academic library research. The Journal of Academic Librarianship. 2015; 41 :123-129. DOI: http://doi.org/10.1016/j.acalib.2015.01.003

- 46. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 3rd ed. Thousand Oaks, CA: SAGE; 2009

- 47. Strauss A, Corbin J. Basics of Qualitative Research: Grounded Theory Procedures and Techniques. Newbury Park, CA: SAGE Publications; 1990

- 48. Neuendorf KA. The Content Analysis Guidebook. 2nd ed. Thousand Oaks, CA: SAGE Publications; 2016

- 49. Dimitroff A. Research for special libraries: A quantitative analysis of the literature. Special Libraries. 1995; 86 (4):256-264

- 50. Cano V. Bibliometric overview of library and information science research in Spain. Journal of the American Society for Information Science. 1999; 50 (8):675-680. DOI: http://doi.org/10.1002/(SICI)1097‐4571(1999)50:8<675::AID‐ASI5>3.0.CO;2‐B

- 51. Powell RR. Recent trends in research: A methodological essay. Library & Information Science Research. 1999; 21 (1):91-119. DOI: http://doi.org/10.1016/S0740‐8188(99)80007‐3

- 52. Crawford GA. The research literature of academic librarianship: A comparison of College & Research Libraries and Journal of Academic Librarianship. College & Research Libraries. 1999; 60 (3):224-230. DOI: http://doi.org/10.5860/crl.60.3.224

- 53. Julien H, Pecoskie JJL, Reed K. Trends in information behavior research, 1999-2008: A content analysis. Library & Information Science Research. 2011; 33 (1):19-24. DOI: http://doi.org/10.1016/j.lisr.2010.07.014

- 54. Fidel R. Qualitative methods in information retrieval research. Library and Information Science Research. 1993; 15 (3):219-247

- 55. Hernon P, Schwartz C. Reflections (editorial). Library and Information Science Research. 2003; 25 (1):1-2. DOI: http://doi.org/10.1016/S0740‐8188(02)00162‐7

- 56. Priestner A. Going native: Embracing ethnographic research methods in libraries. Revy. 2015; 38 (4):16-17

- 57. Blaikie N. Approaches to social enquiry. Cambridge: Polity; 1993

- 58. Johnson RB, Onwuegbuzie AJ. Mixed methods research: A research paradigm whose time has come. Educational Researcher. 2004; 33 (7):14-26

- 59. Westbrook L. Qualitative research methods: A review of major stages, data analysis techniques, and quality controls. Library & Information Science Research. 1994; 16 (3):241-254

- 60. Fidel R. Are we there yet?: Mixed methods research in library and information science. Library and Information Science Research. 2008; 30 (4):265-272. DOI: http://doi.org/10.1016/j.lisr.2008.04.001

© 2017 The Author(s). Licensee IntechOpen. This chapter is distributed under the terms of the Creative Commons Attribution 3.0 License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


Research Methods in Library and Information Science, 7th Edition

Libraries Unlimited

Cite this eBook

9781440878589

Connaway, Lynn and Radford, Marie. Research Methods in Library and Information Science, 7th Edition. 7, Libraries Unlimited, 2021. ABC-CLIO, publisher.abc-clio.com/9781440878589.

Chicago Manual of Style

Connaway, Lynn, and Marie Radford. Research Methods in Library and Information Science, 7th Edition, 7. Libraries Unlimited, 2021. http://publisher.abc-clio.com/9781440878589

Connaway, L. & Radford, M. (2021). Research Methods in Library and Information Science, 7th Edition. Retrieved from http://publisher.abc-clio.com/9781440878589

The seventh edition of this frequently adopted textbook features new or expanded sections on social justice research, data analysis software, scholarly identity research, social networking, data science, and data visualization, among other topics. It continues to include discipline experts' voices.

The revised seventh edition of this popular text provides instruction and guidance for professionals and students in library and information science who want to conduct research and publish findings, as well as for practicing professionals who want a broad overview of the current literature.

Providing a broad introduction to research design, the authors include principles, data collection techniques, and analyses of quantitative and qualitative methods, as well as advantages and limitations of each method and updated bibliographies. Chapters cover the scientific method, sampling, validity, reliability, and ethical concerns along with quantitative and qualitative methods. LIS students and professionals will consult this text not only for instruction on conducting research but also for guidance in critically reading and evaluating research publications, proposals, and reports.

As in the previous edition, discipline experts provide advice, tips, and strategies for completing research projects, dissertations, and theses; writing grants; overcoming writer's block; collaborating with colleagues; and working with outside consultants. Journal and book editors discuss how to publish and identify best practices and understudied topics, as well as what they look for in submissions.

- Features new or expanded sections on social justice research; virtual collaboration, data collection, and dissemination; scholarly communication; computer-assisted qualitative and quantitative data analysis; scholarly identity research and guidelines; data science; and visualization of quantitative and qualitative data

- Provides a broad and comprehensive overview and update, especially of research published over the past five years

- Highlights school, public, and academic research findings

- Relies on the coauthors' expertise in research design, securing grant funding, and using the latest technology and data analysis software

Table of Contents


- Cover Cover1 1

- Title Page iii 4

- Copyright iv 5

- Contents v 6

- Illustrations xv 16

- Text Boxes xvii 18

- Preface xix 20

- 1—Research and Librarianship 1 24

- Introduction 1 24

- Definition of Research 2 25

- The Assessment Imperative 6 29

- Scholarly Communication 8 31

- Research Data Management and Reuse 11 34

- New Modes for Collaboration 14 37

- Time Management 16 39

- Overview of Previous Library and Information Science Research 19 42

- Current Library and Information Science Research Environment 19 42

- Research Methods in Library and Information Science 19 42

- Recommendations for Future Research in Library and Information Science 22 45

- Summary 25 48

- References 26 49

- 2—Developing the Research Study 35 58

- Planning for Research: Getting Started 35 58

- Philosophical Underpinnings and Assumptions 36 59

- Paradigms That Shape Research Development 37 60

- A General Outline for Research 39 62

- Literature Review of Related Research 39 62

- Identification of the Problem 43 66

- Characteristics of a Problem Suitable for Research 45 68

- Statement of the Problem 47 70

- Identifying Subproblems 48 71

- The Role of Theory in the Design of Research 49 72

- Definition of Theory 49 72

- Research Design 56 79

- Differences in Quantitative and Qualitative Design 57 80

- Mixed Methods 59 82

- Testing or Applying the Theory 66 89

- The Pilot Study 66 89

- Summary 67 90

- References 68 91

- 3—Writing the Research Proposal 73 96

- Organization and Content of a Typical Proposal 74 97

- Title Page 74 97

- Abstract 75 98

- Table of Contents 75 98

- Introduction and Statement of the Problem 75 98

- The Literature Review of Related Research 78 101

- Research Design 79 102

- Institutional Resources 81 104

- Personnel 81 104

- Budget 82 105

- Anticipated Results 84 107

- Indicators of Success 84 107

- Diversity Plan 86 109

- Limitations of the Study 86 109

- Back Matter 87 110

- The Dissertation Proposal: Further Guidance 87 110

- Characteristics of a Good Proposal 89 112

- Features That Detract from a Proposal 89 112

- Obtaining Funding for Library and Information Science Research 90 113

- Summary 95 118

- References 96 119

- 4—Principles of Quantitative Methods

- Formulating Hypotheses

- Definitions of Hypothesis

- Sources of Hypotheses

- Developing the Hypothesis

- Variables

- Concepts

- Desirable Characteristics of Hypotheses

- Testing the Hypothesis

- Validity and Reliability

- Validity of Research Design

- Validity in Measurement

- Logical Validity

- Empirical Validity

- Construct Validity

- Reliability of Research Design

- Reliability in Measurement

- Scales

- Ethics of Research

- General Guidelines

- Guidelines for Library and Information Science Professionals

- Ethics for Research in the Digital Environment

- Research Misconduct

- Summary

- References

- 5—Survey Research and the Questionnaire

- Survey Research

- Major Differences between Survey Research and Other Methods

- Types of Survey Research

- Exploratory Survey Research

- Descriptive Survey Research

- Other Types of Survey Research

- Basic Purposes of Descriptive Survey Research

- Basic Steps of Survey Research: An Overview

- Survey Research Designs

- Survey Research Costs

- The Questionnaire

- Prequestionnaire Planning

- Advantages of the Questionnaire

- Disadvantages of the Questionnaire

- Constructing the Questionnaire

- Type of Question According to Information Needed

- Type of Question According to Form

- Scaled Responses

- Question Content and Selection

- Question Wording

- Sequencing of Questionnaire Items

- Sources of Error

- Preparing the First Draft

- Evaluating the Questionnaire

- The Pretest

- Final Editing

- Cover Email or Letter with Introductory Information

- Distribution of the Questionnaire

- Summary

- References

- 6—Sampling

- Basic Terms and Concepts

- Types of Sampling Methods

- Nonprobability Sampling

- Probability Sampling

- Determining the Sample Size

- Use of Formulas

- Sampling Error

- Other Causes of Sampling Error

- Nonsampling Error

- Summary

- References

- 7—Experimental Research

- Causality

- The Conditions for Causality

- Bases for Inferring Causal Relationships

- Controlling the Variables

- Random Assignment

- Internal Validity

- Threats to Internal Validity

- External Validity

- Threats to External Validity

- Experimental Designs

- True Experimental Designs

- True Experiments and Correlational Studies

- Quasi-Experimental Designs

- Ex Post Facto Designs

- Internet-Based Experiments

- Summary

- References

- 8—Analysis of Quantitative Data

- Statistical Analysis

- Data Mining

- Log Analysis

- Data Science

- Machine Learning and Artificial Intelligence

- Bibliometrics

- Role of Statistics

- Cautions in Using Statistics

- Steps Involved in Statistical Analysis

- The Establishment of Categories

- Coding the Data

- Analyzing the Data: Descriptive Statistics

- Analyzing the Data: Inferential Statistics

- Parametric Statistics

- Nonparametric Statistics

- Selecting the Appropriate Statistical Test

- Cautions in Testing the Hypothesis

- Statistical Analysis Software

- Visualization and Display of Quantitative Data

- Summary

- References

- 9—Principles of Qualitative Methods

- Introduction to Qualitative Methods

- Strengths of a Qualitative Approach

- Role of the Researcher

- The Underlying Assumptions of Naturalistic Work

- Ethical Concerns

- Informed Consent

- Deception

- Confidentiality and Anonymity

- Data-Gathering Techniques

- Research Design

- Establishing Goals

- Developing the Conceptual Framework

- Developing Research Questions

- Research Questions for Focus Group and Individual Interviews in the Public Library Context

- Research Questions for Mixed-Methods Study with Focus Group and Individual Interviews in the Academic Library Context

- Research Questions for Focus Group and Individual Interviews in a High School Context

- Research Questions for a Mixed-Methods Grant Project Using Transcript Analysis, Individual Interviews, and Design Sessions in the Consortial Live Chat Virtual Reference Context

- Research Questions for a Mixed-Methods Study Using a Questionnaire and Individual Interviews Investigating Chat Virtual Reference in the Time of COVID-19

- Research Design in Online Environments

- New Modes for Online Data Collection

- Summary

- References

- 10—Analysis of Qualitative Data

- Data Analysis Tools and Methods

- Stages in Data Analysis

- Preparing and Processing Data for Analysis

- Computer-Assisted Qualitative Data Analysis Software (CAQDAS)

- Deciding Whether to Use Qualitative Software

- Strategies for Data Analysis


Research methods in library and information science: A content analysis

Related Papers

Advances in Library and Information Science

Judith Mavodza

The library and information science (LIS) profession is influenced by multidisciplinary research strategies and techniques (research methods) that in themselves are also evolving. They represent established ways of approaching research questions (e.g., qualitative vs. quantitative methods). This chapter reviews the methods of research as expressed in literature, demonstrating how, where, and if they are inter-connected. Chu concludes that popularly used approaches include the theoretical approach, experiment, content analysis, bibliometrics, questionnaire, and interview. It appears that most empirical research articles in Chu's analysis employed a quantitative approach. Although the survey emerged as the most frequently used research strategy, there is evidence that the number and variety of research methods and methodologies have been increasing. There is also evidence that qualitative approaches are gaining increasing importance and have a role to play in LIS, while mixed meth...

Aspasia Togia, Afrodite Malliari

Library and information science (LIS) is a very broad discipline, which uses a wide range of constantly evolving research strategies and techniques. The aim of this chapter is to provide an updated view of research issues in library and information science. A stratified random sample of 440 articles published in five prominent journals was analyzed and classified to identify (i) research approach, (ii) research methodology, and (iii) method of data analysis. For each variable, a coding scheme was developed, and the articles were coded accordingly. A total of 78% of the articles reported empirical research. The remaining 22% were classified as non-empirical research papers. The five most popular topics were "information retrieval," "information behaviour," "information literacy," "library services," and "organization and management." An overwhelming majority of the empirical research articles employed a quantitative approach. Although the survey emerged as the most frequently used research strategy, there is evidence that the number and variety of research methodologies have been increasing. There is also evidence that qualitative approaches are gaining increasing importance and have a role to play in LIS, while mixed methods have not yet gained enough recognition in LIS research.

Aspasia Togia

The aim of the present study is to investigate the general trends of LIS research, using as source material the articles published in Library & Information Science Research in a five-year period (2005-2010). Library & Information Science Research was chosen because it is a cross-disciplinary and refereed journal, which focuses on the research process in library and information science, covers a wide range of topics within the field, reports research findings and provides work of interest to both academics and practitioners. The authors review the findings from an examination of research articles published in the journal, with emphasis on articles that used quantitative and/or qualitative research methods as an integral part of the author’s work. The paper examines the major topics and problems addressed by LIS researchers, the research approaches and the types of quantitative and qualitative research methods used in articles published during this period, in an effort to understand t...

Veronica Gauchi Risso

Purpose – This paper aims to explore research methods used in Library and Information Science (LIS) during the past four decades. The goal is to compile an annotated bibliography of seminal works of the discipline used in different countries and social contexts. Design/methodology/approach – When comparing areas and types of research, different publication patterns are taken into account. Data indicators and types of studies carried out on scientific activity contribute very little when evaluating the real response potential to identified problems. Therefore, among other things, LIS needs new methodological developments, which should combine qualitative and quantitative approaches and allow a better understanding of the nature and characteristics of science in different countries. Findings – The conclusion is that LIS emerges strictly linked to descriptive methodologies, channeled to meet the challenges of professional practice through empirical strategies of a professional nature. This manifests itself in the preponderance of a professional paradigm, which turns out to be an indicator of the discipline's poor scientific development. Research limitations/implications – This, undoubtedly, reflects the reality of Anglo-Saxon countries, reproduced in most of the recognized journals of the field; this issue, plus the chosen instruments for data collection, certainly slants the results. Originality/value – The development of taxonomies in the discipline cannot be separated from those accepted by the rest of the scientific community, at least if LIS desires to be integrated and recognized as a scientific discipline.

Abubakar Abdulkareem

All research is built on certain underlying philosophical assumptions about what constitutes valid research and which research method is appropriate for the development of knowledge in a given study. The use of research paradigm and theory provides specific direction for procedures in research design. This paper discusses the importance of understanding the use of research paradigm and theory in relation to the concepts of ontology, epistemology and methodology in both quantitative and qualitative approaches. The paper further discusses the connection between research paradigm and theory in library and information science (LIS) research, in both quantitative and qualitative research. The author presents steps using deductive and inductive approaches and provides a guide to what LIS researchers need to consider before designing a research proposal.

Helen Clarke

Rajani Mishra

Research is the means to explore the already existing information in the discipline. It is a continuous and exhaustive study and leads to the development of newer theory or methodology, or to the redefinition of already existing theory in the light of new facts. This rediscovery depends upon the research methods and tools used. The present study is a step in this direction. It tries to identify the various research methods used by researchers in library and information science. Keywords: Research methods, library services, survey, analytical research. Cite this Article: Rajani Mishra. The Data Analysis Tools Used in the Articles of Annals of Library and Information Studies: An Analysis. Journal of Advancements in Library Sciences. 2017; 4(2): 46–49p.

Library Hi Tech

Denise Koufogiannakis

International Journal of …

Chinwe Anunobi

Sidi Masuga
