
Chapter 3 Solving Problems by Searching

When the correct action to take is not immediately obvious, an agent may need to plan ahead : to consider a sequence of actions that form a path to a goal state. Such an agent is called a problem-solving agent , and the computational process it undertakes is called search .

Problem-solving agents use atomic representations, that is, states of the world are considered as wholes, with no internal structure visible to the problem-solving algorithms. Agents that use factored or structured representations of states are called planning agents .

We distinguish between informed algorithms, in which the agent can estimate how far it is from the goal, and uninformed algorithms, where no such estimate is available.

3.1 Problem-Solving Agents

If the agent has no additional information—that is, if the environment is unknown —then the agent can do no better than to execute one of the actions at random. For now, we assume that our agents always have access to information about the world. With that information, the agent can follow this four-phase problem-solving process:

GOAL FORMULATION : Goals organize behavior by limiting the objectives and hence the actions to be considered.

PROBLEM FORMULATION : The agent devises a description of the states and actions necessary to reach the goal—an abstract model of the relevant part of the world.

SEARCH : Before taking any action in the real world, the agent simulates sequences of actions in its model, searching until it finds a sequence of actions that reaches the goal. Such a sequence is called a solution .

EXECUTION : The agent can now execute the actions in the solution, one at a time.

It is an important property that in a fully observable, deterministic, known environment, the solution to any problem is a fixed sequence of actions. If the model is correct, the agent can ignore its percepts while it executes the solution. This is called an open-loop system, because ignoring the percepts breaks the loop between agent and environment. If there is a chance that the model is incorrect, or the environment is nondeterministic, then the agent would be safer using a closed-loop approach that monitors the percepts.

In partially observable or nondeterministic environments, a solution would be a branching strategy that recommends different future actions depending on what percepts arrive.

3.1.1 Search problems and solutions

A search problem can be defined formally as follows:

A set of possible states that the environment can be in. We call this the state space .

The initial state that the agent starts in.

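A set of one or more goal states. Sometimes there is one goal state, sometimes there is a small set of alternative goal states, and sometimes the goal is defined by a property that applies to many states. We can account for all three of these possibilities by specifying an \(Is\-Goal\) method for a problem.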

The actions available to the agent. Given a state \(s\) , \(Actions(s)\) returns a finite set of actions that can be executed in \(s\) . We say that each of these actions is applicable in \(s\) .

A transition model , which describes what each action does. \(Result(s,a)\) returns the state that results from doing action \(a\) in state \(s\) .

An action cost function, denoted by \(Action\-Cost(s,a,s')\) when we are programming or \(c(s,a,s')\) when we are doing math, that gives the numeric cost of applying action \(a\) in state \(s\) to reach state \(s'\).

A sequence of actions forms a path , and a solution is a path from the initial state to a goal state. We assume that action costs are additive; that is, the total cost of a path is the sum of the individual action costs. An optimal solution has the lowest path cost among all solutions.

The state space can be represented as a graph in which the vertices are states and the directed edges between them are actions.
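The components listed above can be sketched as a small Python class. This is only an illustration: the class name `GraphProblem`, the `edges` dictionary layout, and the three-state example graph are assumptions made for this sketch, not part of the original formulation.

```python
from dataclasses import dataclass, field


@dataclass
class GraphProblem:
    """A search problem over an explicit weighted graph (illustrative only)."""
    initial: str
    goals: frozenset
    # edges[state][action] = (next_state, cost); a hypothetical layout
    edges: dict = field(default_factory=dict)

    def is_goal(self, state):
        return state in self.goals

    def actions(self, state):
        # The finite set of actions applicable in `state`
        return list(self.edges.get(state, {}))

    def result(self, state, action):
        # Transition model: the state reached by doing `action` in `state`
        return self.edges[state][action][0]

    def action_cost(self, state, action, next_state):
        return self.edges[state][action][1]


def path_cost(problem, actions):
    """Additive path cost: sum the action costs from the initial state."""
    state, total = problem.initial, 0
    for action in actions:
        nxt = problem.result(state, action)
        total += problem.action_cost(state, action, nxt)
        state = nxt
    return total, state


problem = GraphProblem(
    initial="A",
    goals=frozenset({"C"}),
    edges={"A": {"toB": ("B", 1), "toC": ("C", 5)}, "B": {"toC": ("C", 2)}},
)
```

Here the path `["toB", "toC"]` costs 3 and the direct path `["toC"]` costs 5, so the two-step path is the optimal solution even though it uses more actions.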

3.1.2 Formulating problems

The process of removing detail from a representation is called abstraction . The abstraction is valid if we can elaborate any abstract solution into a solution in the more detailed world. The abstraction is useful if carrying out each of the actions in the solution is easier than the original problem.

3.2 Example Problems

A standardized problem is intended to illustrate or exercise various problem-solving methods. It can be given a concise, exact description and hence is suitable as a benchmark for researchers to compare the performance of algorithms. A real-world problem , such as robot navigation, is one whose solutions people actually use, and whose formulation is idiosyncratic, not standardized, because, for example, each robot has different sensors that produce different data.

3.2.1 Standardized problems

A grid world problem is a two-dimensional rectangular array of square cells in which agents can move from cell to cell.

Vacuum world

Sokoban puzzle

Sliding-tile puzzle

3.2.2 Real-world problems

Route-finding problem

Touring problems

Traveling salesperson problem (TSP)

VLSI layout problem

Robot navigation

Automatic assembly sequencing

3.3 Search Algorithms

A search algorithm takes a search problem as input and returns a solution, or an indication of failure. We consider algorithms that superimpose a search tree over the state-space graph, forming various paths from the initial state, trying to find a path that reaches a goal state. Each node in the search tree corresponds to a state in the state space and the edges in the search tree correspond to actions. The root of the tree corresponds to the initial state of the problem.

The state space describes the (possibly infinite) set of states in the world, and the actions that allow transitions from one state to another. The search tree describes paths between these states, reaching towards the goal. The search tree may have multiple paths to (and thus multiple nodes for) any given state, but each node in the tree has a unique path back to the root (as in all trees).

The frontier separates two regions of the state-space graph: an interior region where every state has been expanded, and an exterior region of states that have not yet been reached.

3.3.1 Best-first search

In best-first search we choose a node, \(n\) , with minimum value of some evaluation function , \(f(n)\) .
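A minimal sketch of this idea in Python follows. The callback-based interface (`is_goal`, `successors`, `f`) and the small example graph are assumptions chosen for brevity; this is not the textbook's pseudocode. The `reached` table keeps the cheapest known path cost to each state.

```python
import heapq
from itertools import count


def best_first_search(start, is_goal, successors, f):
    """successors(state) yields (action, next_state, step_cost) triples.
    f(state, g) gives the priority of a node with path cost g.
    Returns (list_of_actions, path_cost), or None on failure."""
    tie = count()  # unique tie-breaker so the heap never compares states
    frontier = [(f(start, 0), next(tie), start, 0, [])]
    reached = {start: 0}  # cheapest known path cost to each state
    while frontier:
        _, _, state, g, path = heapq.heappop(frontier)
        if g > reached[state]:
            continue  # a cheaper path to this state was found later
        if is_goal(state):
            return path, g
        for action, nxt, cost in successors(state):
            g2 = g + cost
            if nxt not in reached or g2 < reached[nxt]:
                reached[nxt] = g2
                heapq.heappush(
                    frontier, (f(nxt, g2), next(tie), nxt, g2, path + [action])
                )
    return None


# Hypothetical three-state graph used only for this example
GRAPH = {"A": [("toB", "B", 1), ("toC", "C", 5)], "B": [("toC", "C", 2)], "C": []}
plan = best_first_search("A", lambda s: s == "C", lambda s: GRAPH[s],
                         f=lambda s, g: g)
```

With `f` equal to the path cost, `plan` is `(["toB", "toC"], 3)`: the cheaper two-step path is preferred over the direct edge of cost 5.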

3.3.2 Search data structures

A node in the tree is represented by a data structure with four components:

\(node.State\) : the state to which the node corresponds;

\(node.Parent\) : the node in the tree that generated this node;

\(node.Action\) : the action that was applied to the parent’s state to generate this node;

\(node.Path\-Cost\) : the total cost of the path from the initial state to this node. In mathematical formulas, we use \(g(node)\) as a synonym for \(Path\-Cost\) .

Following the \(PARENT\) pointers back from a node allows us to recover the states and actions along the path to that node. Doing this from a goal node gives us the solution.
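The four components and the parent-pointer walk can be sketched as follows. The field names mirror the components above; the helper name `solution` and the example states are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Node:
    state: Any
    parent: Optional["Node"] = None
    action: Any = None
    path_cost: float = 0.0


def solution(node):
    """Follow parent pointers back to the root and return the actions in order."""
    actions = []
    while node.parent is not None:
        actions.append(node.action)
        node = node.parent
    return list(reversed(actions))


root = Node("A")
b = Node("B", parent=root, action="toB", path_cost=1)
c = Node("C", parent=b, action="toC", path_cost=3)
```

Calling `solution(c)` walks from `c` to `b` to `root` and returns `["toB", "toC"]`.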

We need a data structure to store the frontier . The appropriate choice is a queue of some kind, because the operations on a frontier are:

\(Is\-Empty(frontier)\) returns true only if there are no nodes in the frontier.

\(Pop(frontier)\) removes the top node from the frontier and returns it.

\(Top(frontier)\) returns (but does not remove) the top node of the frontier.

\(Add(node, frontier)\) inserts node into its proper place in the queue.

Three kinds of queues are used in search algorithms:

A priority queue first pops the node with the minimum cost according to some evaluation function, \(f\) . It is used in best-first search.

A FIFO queue or first-in-first-out queue first pops the node that was added to the queue first; we shall see it is used in breadth-first search.

A LIFO queue or last-in-first-out queue (also known as a stack ) pops first the most recently added node; we shall see it is used in depth-first search.
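In Python, the three kinds of queue map onto standard-library structures. The sketch below only shows the pop order of each; the item names and priorities are made up.

```python
import heapq
from collections import deque

# Priority queue: pops the entry with the minimum evaluation value first
pq = []
for cost, name in [(3, "c"), (1, "a"), (2, "b")]:
    heapq.heappush(pq, (cost, name))
pq_order = [heapq.heappop(pq)[1] for _ in range(3)]

# FIFO queue: pops the entry that was added first
fifo = deque(["a", "b", "c"])
fifo_order = [fifo.popleft() for _ in range(3)]

# LIFO queue (stack): pops the most recently added entry
lifo = ["a", "b", "c"]
lifo_order = [lifo.pop() for _ in range(3)]
```

`pq_order` and `fifo_order` are both `["a", "b", "c"]`, while `lifo_order` is `["c", "b", "a"]`.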

3.3.3 Redundant paths

A cycle is a special case of a redundant path .

As the saying goes, algorithms that cannot remember the past are doomed to repeat it . There are three approaches to this issue.

First, we can remember all previously reached states (as best-first search does), allowing us to detect all redundant paths, and keep only the best path to each state.

Second, we can not worry about repeating the past. We call a search algorithm a graph search if it checks for redundant paths and a tree-like search if it does not check.

Third, we can compromise and check for cycles, but not for redundant paths in general.

3.3.4 Measuring problem-solving performance

COMPLETENESS : Is the algorithm guaranteed to find a solution when there is one, and to correctly report failure when there is not?

COST OPTIMALITY : Does it find a solution with the lowest path cost of all solutions?

TIME COMPLEXITY : How long does it take to find a solution?

SPACE COMPLEXITY : How much memory is needed to perform the search?

To be complete, a search algorithm must be systematic in the way it explores an infinite state space, making sure it can eventually reach any state that is connected to the initial state.

In theoretical computer science, the typical measure of time and space complexity is the size of the state-space graph, \(|V|+|E|\) , where \(|V|\) is the number of vertices (state nodes) of the graph and \(|E|\) is the number of edges (distinct state/action pairs). For an implicit state space, complexity can be measured in terms of \(d\) , the depth or number of actions in an optimal solution; \(m\) , the maximum number of actions in any path; and \(b\) , the branching factor or number of successors of a node that need to be considered.

3.4 Uninformed Search Strategies

3.4.1 Breadth-first search

When all actions have the same cost, an appropriate strategy is breadth-first search , in which the root node is expanded first, then all the successors of the root node are expanded next, then their successors, and so on.

Breadth-first search always finds a solution with a minimal number of actions, because when it is generating nodes at depth \(d\) , it has already generated all the nodes at depth \(d-1\) , so if one of them were a solution, it would have been found.

All the nodes remain in memory, so both time and space complexity are \(O(b^d)\) . The memory requirements are a bigger problem for breadth-first search than the execution time . In general, exponential-complexity search problems cannot be solved by uninformed search for any but the smallest instances .
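A compact sketch of breadth-first search with a FIFO frontier is shown below. It tests for the goal when a state is generated, and it returns the path as a list of states. The function signature and the example graph are assumptions for illustration.

```python
from collections import deque


def breadth_first_search(start, is_goal, neighbors):
    """Return a shortest path (list of states) from start to a goal, or None."""
    if is_goal(start):
        return [start]
    frontier = deque([start])
    parent = {start: None}  # doubles as the set of reached states
    while frontier:
        state = frontier.popleft()
        for nxt in neighbors(state):
            if nxt not in parent:
                parent[nxt] = state
                if is_goal(nxt):
                    # Rebuild the path by following parent links to the start
                    path = [nxt]
                    while parent[path[-1]] is not None:
                        path.append(parent[path[-1]])
                    return path[::-1]
                frontier.append(nxt)
    return None


# Hypothetical graph: two equally short routes from A to D, then on to E
GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
path = breadth_first_search("A", lambda s: s == "E", lambda s: GRAPH[s])
```

On this graph `path` is `["A", "B", "D", "E"]`, a solution with the minimal number of actions (three).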

3.4.2 Dijkstra’s algorithm or uniform-cost search

When actions have different costs, an obvious choice is to use best-first search where the evaluation function is the cost of the path from the root to the current node. This is called Dijkstra’s algorithm by the theoretical computer science community, and uniform-cost search by the AI community.

The complexity of uniform-cost search is characterized in terms of \(C^*\) , the cost of the optimal solution, and \(\epsilon\) , a lower bound on the cost of each action, with \(\epsilon>0\) . Then the algorithm’s worst-case time and space complexity is \(O(b^{1+\lfloor C^*/\epsilon\rfloor})\) , which can be much greater than \(b^d\) .

When all action costs are equal, \(b^{1+\lfloor C^*/\epsilon\rfloor}\) is just \(b^{d+1}\) , and uniform-cost search is similar to breadth-first search.
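As a quick check of this claim, suppose every action costs exactly \(\epsilon\) and an optimal solution has depth \(d\). Then \(C^* = d\epsilon\), and:

\[ 1 + \lfloor C^*/\epsilon \rfloor = 1 + \lfloor d\epsilon/\epsilon \rfloor = 1 + d, \qquad \text{so} \qquad O(b^{1+\lfloor C^*/\epsilon\rfloor}) = O(b^{d+1}). \]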

3.4.3 Depth-first search and the problem of memory

Depth-first search always expands the deepest node in the frontier first. It could be implemented as a call to \(Best\-First\-Search\) where the evaluation function \(f\) is the negative of the depth.

For problems where a tree-like search is feasible, depth-first search has much smaller needs for memory. A depth-first tree-like search takes time proportional to the number of states, and has memory complexity of only \(O(bm)\) , where \(b\) is the branching factor and \(m\) is the maximum depth of the tree.

A variant of depth-first search called backtracking search uses even less memory.

3.4.4 Depth-limited and iterative deepening search

To keep depth-first search from wandering down an infinite path, we can use depth-limited search, a version of depth-first search in which we supply a depth limit, \(l\), and treat all nodes at depth \(l\) as if they had no successors. The time complexity is \(O(b^l)\) and the space complexity is \(O(bl)\).

Iterative deepening search solves the problem of picking a good value for \(l\) by trying all values: first 0, then 1, then 2, and so on, until either a solution is found, or the depth-limited search returns the failure value rather than the cutoff value.

Its memory requirements are modest: \(O(bd)\) when there is a solution, or \(O(bm)\) on finite state spaces with no solution. The time complexity is \(O(b^d)\) when there is a solution, or \(O(b^m)\) when there is none.

In general, iterative deepening is the preferred uninformed search method when the search state space is larger than can fit in memory and the depth of the solution is not known .
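The distinction between the cutoff value and the failure value can be sketched as follows. This is a simplified tree-like version that checks for cycles along the current path only; the names `CUTOFF`, `max_depth`, and the example graph are assumptions for this sketch, and failure is represented by `None`.

```python
CUTOFF = "cutoff"


def depth_limited_search(state, is_goal, neighbors, limit, path=None):
    """Return a path to a goal, CUTOFF if the limit was hit, or None on failure."""
    path = [state] if path is None else path
    if is_goal(state):
        return path
    if limit == 0:
        return CUTOFF
    cutoff_occurred = False
    for nxt in neighbors(state):
        if nxt in path:  # cycle check along the current path only
            continue
        result = depth_limited_search(nxt, is_goal, neighbors,
                                      limit - 1, path + [nxt])
        if result == CUTOFF:
            cutoff_occurred = True
        elif result is not None:
            return result
    return CUTOFF if cutoff_occurred else None


def iterative_deepening_search(start, is_goal, neighbors, max_depth=50):
    """Try limits 0, 1, 2, ... until a result other than CUTOFF comes back."""
    for limit in range(max_depth + 1):
        result = depth_limited_search(start, is_goal, neighbors, limit)
        if result != CUTOFF:
            return result
    return CUTOFF


GRAPH = {"A": ["B", "C"], "B": ["D"], "C": ["D"], "D": ["E"], "E": []}
found = iterative_deepening_search("A", lambda s: s == "E", lambda s: GRAPH[s])
missing = iterative_deepening_search("A", lambda s: s == "Z", lambda s: GRAPH[s])
```

On this finite graph `found` is `["A", "B", "D", "E"]`, reached at limit 3, and `missing` is `None`, because at limit 4 no branch is cut off and the search can report failure.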

3.4.5 Bidirectional search

An alternative approach called bidirectional search simultaneously searches forward from the initial state and backwards from the goal state(s), hoping that the two searches will meet.

3.4.6 Comparing uninformed search algorithms

3.5 Informed (Heuristic) Search Strategies

An informed search strategy uses domain-specific hints about the location of goals to find solutions more efficiently than an uninformed strategy. The hints come in the form of a heuristic function, denoted \(h(n)\):

\(h(n)\) = estimated cost of the cheapest path from the state at node \(n\) to a goal state.

3.5.1 Greedy best-first search

Greedy best-first search is a form of best-first search that expands first the node with the lowest \(h(n)\) value—the node that appears to be closest to the goal—on the grounds that this is likely to lead to a solution quickly. So the evaluation function \(f(n)=h(n)\) .
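A small sketch of greedy best-first search on an obstacle-free grid is given below, using Manhattan distance as \(h\). The grid size, the start and goal cells, and the helper names are assumptions chosen for illustration.

```python
import heapq


def greedy_best_first(start, goal, neighbors, h):
    """Always expand the frontier state with the lowest h value."""
    frontier = [(h(start), start)]
    parent = {start: None}
    while frontier:
        _, state = heapq.heappop(frontier)
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbors(state):
            if nxt not in parent:
                parent[nxt] = state
                heapq.heappush(frontier, (h(nxt), nxt))
    return None


SIZE = 5  # hypothetical 5 x 5 grid with no obstacles
GOAL = (2, 2)


def grid_neighbors(cell):
    x, y = cell
    steps = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(a, b) for a, b in steps if 0 <= a < SIZE and 0 <= b < SIZE]


def manhattan(cell):
    return abs(cell[0] - GOAL[0]) + abs(cell[1] - GOAL[1])


path = greedy_best_first((0, 0), GOAL, grid_neighbors, manhattan)
```

On an open grid the heuristic leads straight to the goal, so `path` contains five cells (four moves). With obstacles, greedy search can still find a solution but it is not guaranteed to be the cheapest one.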

Problem Solving Agents in Artificial Intelligence

In this post, we will talk about Problem Solving agents in Artificial Intelligence, which are sort of goal-based agents. Because the straight mapping from states to actions of a basic reflex agent is too vast to retain for a complex environment, we utilize goal-based agents that may consider future actions and the desirability of outcomes.


Problem Solving Agents

Problem Solving Agents decide what to do by finding a sequence of actions that leads to a desirable state or solution.

An agent may need to plan when the best course of action is not immediately visible. It may need to think through a series of moves that will lead it to its goal state. Such an agent is known as a problem solving agent, and the computation it does is known as a search.

The problem solving agent follows this four phase problem solving process:

- Goal Formulation: This is the first and most basic phase in problem solving. It arranges specific steps to establish a target/goal that demands some activity to reach it. AI agents are now used to formulate goals.

- Problem Formulation: It is one of the fundamental steps in problem-solving that determines what action should be taken to reach the goal.

- Search: After the Goal and Problem Formulation, the agent simulates sequences of actions and has to look for a sequence of actions that reaches the goal. This process is called search, and the sequence is called a solution . The agent might have to simulate multiple sequences that do not reach the goal, but eventually, it will find a solution, or it will find that no solution is possible. A search algorithm takes a problem as input and outputs a sequence of actions.

- Execution: After the search phase, the agent can now execute the actions that are recommended by the search algorithm, one at a time. This final stage is known as the execution phase.

Problems and Solution

Before we move into the problem formulation phase, we must first define a problem in terms of problem solving agents.

A formal definition of a problem consists of five components:

Initial State: It is the agent’s starting state or initial step towards its goal. For example, if a taxi agent needs to travel to a location (B), but the taxi is currently at location (A), the problem’s initial state would be the location (A).

Actions: It is a description of the possible actions that the agent can take. Given a state s, Actions(s) returns the actions that can be executed in s. Each of these actions is said to be applicable in s.

Transition Model: It describes what each action does. It is specified by a function Result(s, a) that returns the state that results from doing action a in state s.

The initial state, actions, and transition model together define the state space of a problem, the set of all states reachable from the initial state by any sequence of actions. The state space forms a graph in which the nodes are states, and the links between the nodes are actions.

Goal Test: It determines if the given state is a goal state. Sometimes there is an explicit list of potential goal states, and the test merely verifies whether the provided state is one of them. The goal is sometimes expressed via an abstract attribute rather than an explicitly enumerated set of states.

Path Cost: It assigns a numerical cost to each path that leads to the goal. The problem solving agent chooses a cost function that matches its performance measure. Remember that the optimal solution has the lowest path cost of all the solutions.

Example Problems

The problem solving approach has been used in a wide range of work contexts. There are two kinds of problem approaches

- Standardized/ Toy Problem: Its purpose is to demonstrate or practice various problem solving techniques. It can be described concisely and precisely, making it appropriate as a benchmark for academics to compare the performance of algorithms.

- Real-world Problems: It is real-world problems that need solutions. It does not rely on descriptions, unlike a toy problem, yet we can have a basic description of the issue.

Some Standardized/Toy Problems

Vacuum world problem.

Let us take a vacuum cleaner agent that can move left or right and whose job is to suck up the dirt from the floor.

The vacuum world’s problem can be stated as follows:

States: A world state specifies which objects are housed in which cells. The objects in the vacuum world are the agent and any dirt. The agent can be in either of the two cells in the simple two-cell version, and each cell can contain dirt or not, so there are 2×2×2 = 8 states. In general, a vacuum environment with n cells has n×2^n states.

Initial State: Any state can be specified as the starting point.

Actions: We defined three actions in the two-cell world: sucking, moving left, and moving right. More movement activities are required in a two-dimensional multi-cell world.

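Transition Model: Suck removes any dirt from the agent’s cell. In the two-cell world, Left and Right move the agent to the neighboring cell in that direction unless it is already at the wall, in which case the action has no effect. In a multi-cell world with egocentric actions, Forward moves the agent one cell forward in the direction it is facing unless it meets a wall, in which case the action has no effect; Backward moves the agent in the opposite direction, whilst TurnRight and TurnLeft rotate it by 90°.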

Goal States: The states in which every cell is clean.

Action Cost: Each action costs 1.
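The formulation above can be checked with a few lines of Python. The sketch assumes a single row of n cells with the actions Left, Right, and Suck; the state encoding `(agent_position, dirt_tuple)` and the function names are choices made for this example.

```python
from itertools import product


def vacuum_states(n):
    """Enumerate every (agent_position, dirt) state for a row of n cells."""
    return [(pos, dirt)
            for pos in range(n)
            for dirt in product((False, True), repeat=n)]


def result(state, action):
    """Transition model for the actions Left, Right, and Suck."""
    pos, dirt = state
    n = len(dirt)
    if action == "Suck":
        dirt = dirt[:pos] + (False,) + dirt[pos + 1:]
    elif action == "Left":
        pos = max(pos - 1, 0)        # no effect at the left wall
    elif action == "Right":
        pos = min(pos + 1, n - 1)    # no effect at the right wall
    return (pos, dirt)


def is_goal(state):
    """Goal states are those in which every cell is clean."""
    return not any(state[1])
```

`len(vacuum_states(2))` is 8 and `len(vacuum_states(3))` is 24, matching the count of n×2^n states for n cells.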

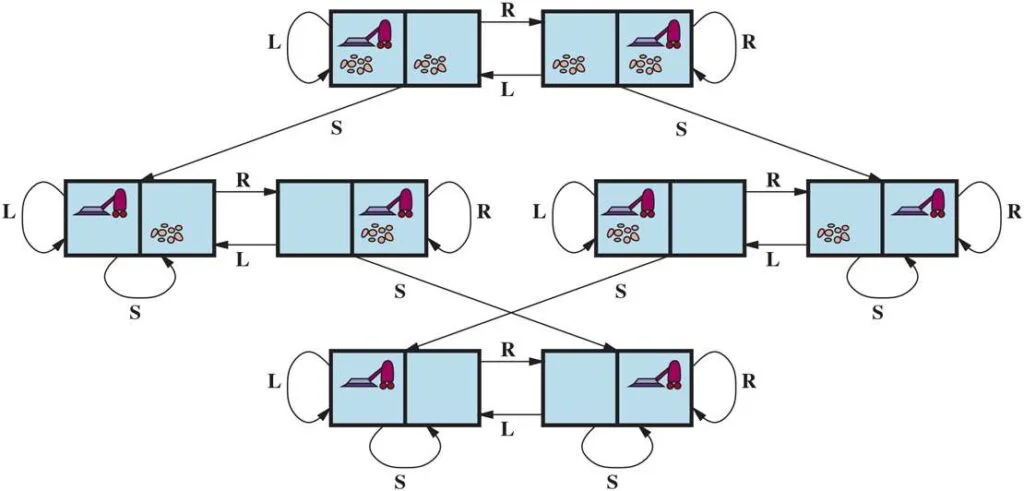

8 Puzzle Problem

In a sliding-tile puzzle, a number of tiles (sometimes called blocks or pieces) are arranged in a grid with one or more blank spaces so that some of the tiles can slide into the blank space. One variant is the Rush Hour puzzle, in which cars and trucks slide around a 6 x 6 grid in an attempt to free a car from the traffic jam. Perhaps the best-known variants are the 8-puzzle (see Figure below), which consists of a 3 x 3 grid with eight numbered tiles and one blank space, and the 15-puzzle on a 4 x 4 grid. The object is to reach a specified goal state, such as the one shown on the right of the figure. The standard formulation of the 8-puzzle is as follows:

STATES : A state description specifies the location of each of the tiles.

INITIAL STATE : Any state can be designated as the initial state. (Note that a parity property partitions the state space—any given goal can be reached from exactly half of the possible initial states.)

ACTIONS : While in the physical world it is a tile that slides, the simplest way of describing an action is to think of the blank space moving Left , Right , Up , or Down . If the blank is at an edge or corner then not all actions will be applicable.

TRANSITION MODEL : Maps a state and action to a resulting state; for example, if we apply Left to the start state in the Figure below, the resulting state has the 5 and the blank switched.

GOAL STATE : It identifies whether we have reached the correct goal state. Although any state could be the goal, we typically specify a state with the numbers in order, as in the Figure above.

ACTION COST : Each action costs 1.
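The actions and transition model of this formulation can be sketched in Python. The state is encoded as a tuple of nine numbers read row by row, with 0 standing for the blank; this encoding and the example start state (chosen so that the 5 sits immediately left of the blank, as in the transition-model example) are assumptions, since the figure is not reproduced here.

```python
# Change in the blank's index for each action on a 3 x 3 board
MOVES = {"Up": -3, "Down": 3, "Left": -1, "Right": 1}


def actions(state):
    """Return the moves of the blank that are applicable in this state."""
    row, col = divmod(state.index(0), 3)
    applicable = []
    if row > 0:
        applicable.append("Up")
    if row < 2:
        applicable.append("Down")
    if col > 0:
        applicable.append("Left")
    if col < 2:
        applicable.append("Right")
    return applicable


def result(state, action):
    """Swap the blank with the neighboring tile in the given direction."""
    i = state.index(0)
    j = i + MOVES[action]
    tiles = list(state)
    tiles[i], tiles[j] = tiles[j], tiles[i]
    return tuple(tiles)


start = (7, 2, 4,
         5, 0, 6,
         8, 3, 1)
after_left = result(start, "Left")
```

Here `after_left` is `(7, 2, 4, 0, 5, 6, 8, 3, 1)`: the 5 and the blank have switched places. With the blank in a corner, `actions` returns only two moves.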


Problem Solving

Definitions.

Searching is one of the classic areas of AI.

A problem is a tuple $(S, s, A, \rho, G, P)$ where

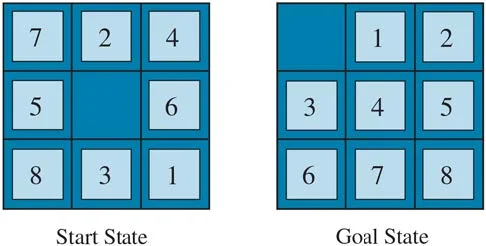

Example: A water jug problem

You have a two-gallon jug and a one-gallon jug; neither has any measuring marks on it at all. Initially both are empty. You need to get exactly one gallon into the two-gallon jug. Formally:

A graphical view of the transition function (initial state shaded, goal states outlined bold):

And a tabular view:

| State | f2 | e2 | f1 | e1 | t21 | t12 |

|---|---|---|---|---|---|---|

| (0,0) | (2,0) | — | (0,1) | — | — | — |

| (1,0) | (2,0) | (0,0) | (1,1) | — | (0,1) | — |

| (2,0) | — | (0,0) | (2,1) | — | (1,1) | — |

| (0,1) | (2,1) | — | — | (0,0) | — | (1,0) |

| (1,1) | (2,1) | (0,1) | — | (1,0) | — | (2,0) |

| (2,1) | — | (0,1) | — | (2,0) | — | — |

To solve this problem, an agent would start at the initial state and explore the state space by following links until it arrived in a goal state. A solution to the water jug problem is a path from the initial state to a goal state .
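The exploration described above can be sketched with a breadth-first search over the same state space. The reading of the action names (f = fill, e = empty, t21 = transfer from the two-gallon jug into the one-gallon jug, t12 = the reverse) is inferred from the table, and the function names are assumptions for this sketch.

```python
from collections import deque


def successors(state):
    """Map each applicable action to the state it produces.
    state = (gallons in the 2-gallon jug, gallons in the 1-gallon jug)."""
    a, b = state
    out = {}
    if a < 2:
        out["f2"] = (2, b)
    if a > 0:
        out["e2"] = (0, b)
    if b < 1:
        out["f1"] = (a, 1)
    if b > 0:
        out["e1"] = (a, 0)
    if a > 0 and b < 1:
        moved = min(a, 1 - b)
        out["t21"] = (a - moved, b + moved)
    if b > 0 and a < 2:
        moved = min(b, 2 - a)
        out["t12"] = (a + moved, b - moved)
    return out


def solve(start=(0, 0)):
    """Breadth-first search for exactly one gallon in the 2-gallon jug."""
    frontier = deque([start])
    plans = {start: []}  # shortest action sequence found for each state
    while frontier:
        state = frontier.popleft()
        for action, nxt in successors(state).items():
            if nxt not in plans:
                plans[nxt] = plans[state] + [action]
                if nxt[0] == 1:
                    return plans[nxt]
                frontier.append(nxt)
    return None


plan = solve()
```

The search returns a two-action plan. Both `["f2", "t21"]` and `["f1", "t12"]` are shortest solutions; which one is found depends on the order in which actions are generated.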

Example solutions

There are an infinite number of solutions. Sometimes we are interested in the solution with the smallest path cost; more on this later.

Awww Man.... Why are we studying this?

Even if they’re not completely right, there are still zillions of problems that can be formulated in problem spaces, e.g.

| Problem | States | Actions |

|---|---|---|

| 8-puzzle | Tile configurations | Up, Down, Left, Right |

| 8-queens (incremental formulation) | Partial board configurations | Add queen, remove queen |

| 8-queens (complete-state formulation) | Board configurations | Move queen |

| TSP | Partial tours | Add next city, pop last city |

| Theorem Proving | Collection of known theorems | Rules of inference |

| Vacuum World | Current Location and status of all rooms | Left, Right, Suck |

| Road Navigation (Route Finding) | Intersections | Road segments |

| Internet Searching | Pages | Follow link |

| Counterfeit Coin Problem | A given weighing | Outcome of the weighing (less, equal, greater) |

Problem Types

State finding vs. action sequence finding.

A fundamental distinction:

| Action Sequence Finding | State Finding |

|---|---|

| We know the state space in advance. We know which states are goals. We have to find the sequence of actions that get us to a goal state. The sequence may be contingent, or expressed as an AND-OR tree, but the actions matter. | We only know the properties that a goal state should have, but we don’t even know if any goal states exist. We just need to find a state that satisfies certain constraints! We don’t care what action sequence gets us there. |

| Optimality is concerned with "cheapest path" | Optimality is concerned with the "best state" |

| Examples: 8-puzzle, water jug, vacuum world, route navigation, games, many robotics problems | Examples: N-queens, integrated circuit layout, factory floor layout, job-shop scheduling, automatic programming, portfolio management, network optimization, most other kinds of optimization problems |

Offline vs. Online Problems

In an online problem, the agent doesn’t even know what the state space is, and has to build a model of it as it acts. In an offline problem, percepts don’t matter at all. An agent can figure out the entire action sequence before doing anything at all .

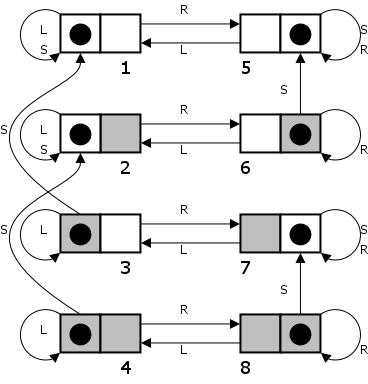

Offline Example : Vacuum World with two rooms, cleaning always works, a square once cleaned stays clean. States are 1 – 8, goal states are 1 and 5.

Sensorless (Conformant) Problems

The agent doesn’t know where it is. We can use belief states (sets of states that the agent might be in). Example from above deterministic, static, single-agent vacuum world:

| In State | Left | Right | Suck |

|---|---|---|---|

| 12345678 | 1234 | 5678 | 1257 |

| 1234 | 1234 | 5678 | 12 |

| 5678 | 1234 | 5678 | 57 |

| 1257 | 123 | 567 | 1257 |

| 12 | 12 | 56 | 12 |

| 57 | 13 | 57 | 57 |

| 123 | 123 | 567 | 12 |

| 567 | 123 | 567 | 57 |

| 56 | 12 | 56 | 5 |

| 13 | 13 | 57 | 1 |

| 5 | 1 | 5 | 5 |

| 1 | 1 | 5 | 1 |

Note the goal states are 1 and 5. If a state 15 was reachable, it would be a goal too.
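The belief-state table can be reproduced by applying the physical transition model to every state in a belief state. The per-state transitions below are inferred from the table itself (the original figure with the state numbering is not available), so treat the three dictionaries as an assumption.

```python
# Physical transitions for states 1-8, inferred from the belief-state table
LEFT = {1: 1, 2: 2, 3: 3, 4: 4, 5: 1, 6: 2, 7: 3, 8: 4}
RIGHT = {1: 5, 2: 6, 3: 7, 4: 8, 5: 5, 6: 6, 7: 7, 8: 8}
SUCK = {1: 1, 2: 2, 3: 1, 4: 2, 5: 5, 6: 5, 7: 7, 8: 7}
MODEL = {"Left": LEFT, "Right": RIGHT, "Suck": SUCK}


def update(belief, action):
    """New belief state: the image of every possible state under the action."""
    return frozenset(MODEL[action][s] for s in belief)


def run(belief, plan):
    """Apply a sequence of actions to a belief state."""
    for action in plan:
        belief = update(belief, action)
    return belief


everything = frozenset(range(1, 9))
final = run(everything, ["Right", "Suck", "Left", "Suck"])
```

Starting from total ignorance, the plan Right, Suck, Left, Suck passes through the belief states 5678, 57, and 13 and ends in the singleton belief state containing only state 1, a goal, without using any percepts.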

Contingency Problems

Contingency Problem: The agent doesn’t know what effect its actions will have. This could be due to the environment being partially observable, or because of another agent. Ways to handle this:

Example: Partially observable vacuum world (meaning you don’t know the status of the other square) in which sucking in a clean square may make it dirty.

We can also model contingency problems with "AND-OR graphs".

Example: find a winning strategy for Nim if there are only five stones in one row left. You are player square. You win if it is player circle’s turn with zero stones left.

In general then, a solution is a subtree in which

If the tree has only OR nodes, then the solution is just a path.

Search Algorithms

Hey, we know what a problem is, what a problem space is, and even what a solution is, but how exactly do we search the space ? Well there are zillions of approaches:

Types of Problem Solving Tasks

Agents may be asked to be

An algorithm is

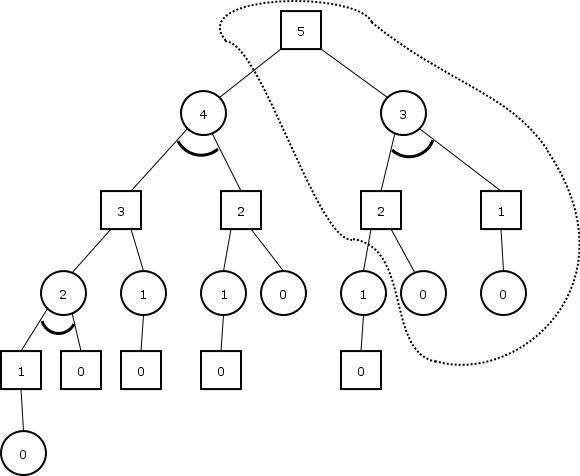

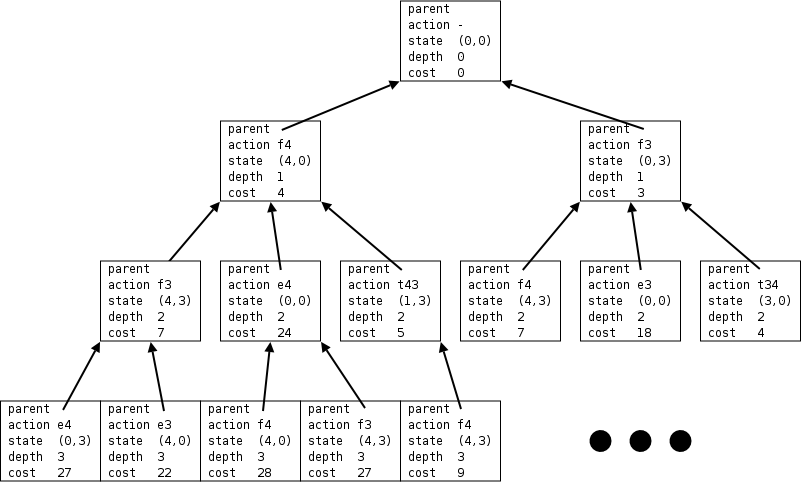

Search Trees

Example: The water jug problem with 4 and 3 gallon jugs. Cost is 1 point per gallon used when filling, 1 point to make a transfer, 5 points per gallon emptied (since it makes a mess). The search tree might start off like this:

Search trees have

The complexity of most search algorithms can be written as a function of one or more of $b$, $d$ and $m$.

In general though there may be more states than there are fundamental particles in the universe. But we need to find a solution. Usually is helpful to


Agents in Artificial Intelligence

In artificial intelligence, an agent is a computer program or system that is designed to perceive its environment, make decisions and take actions to achieve a specific goal or set of goals. The agent operates autonomously, meaning it is not directly controlled by a human operator.

Agents can be classified into different types based on their characteristics, such as whether they are reactive or proactive, whether they have a fixed or dynamic environment, and whether they are single or multi-agent systems.

- Reactive agents are those that respond to immediate stimuli from their environment and take actions based on those stimuli. Proactive agents, on the other hand, take initiative and plan ahead to achieve their goals. The environment in which an agent operates can also be fixed or dynamic. Fixed environments have a static set of rules that do not change, while dynamic environments are constantly changing and require agents to adapt to new situations.

- Multi-agent systems involve multiple agents working together to achieve a common goal. These agents may have to coordinate their actions and communicate with each other to achieve their objectives. Agents are used in a variety of applications, including robotics, gaming, and intelligent systems. They can be implemented using different programming languages and techniques, including machine learning and natural language processing.

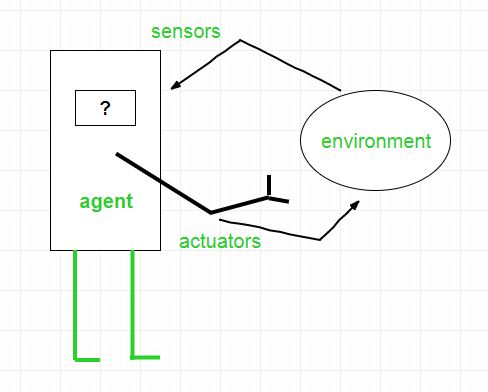

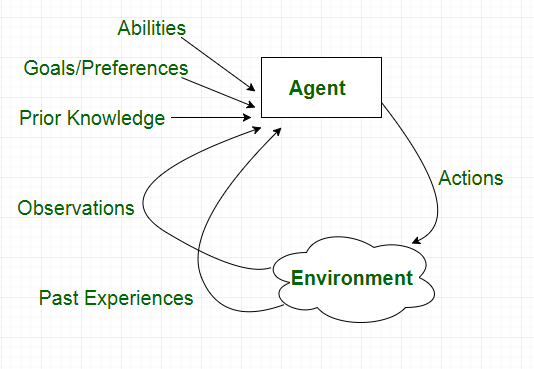

Artificial intelligence is defined as the study of rational agents. A rational agent could be anything that makes decisions, such as a person, firm, machine, or software. It carries out the action with the best outcome after considering past and current percepts (the agent’s perceptual inputs at a given instant). An AI system is composed of an agent and its environment. The agents act in their environment. The environment may contain other agents.

An agent is anything that can be viewed as:

- Perceiving its environment through sensors and

- Acting upon that environment through actuators

Note : Every agent can perceive its own actions (but not always the effects).

Interaction of Agents with the Environment

Structure of an AI Agent

To understand the structure of Intelligent Agents, we should be familiar with Architecture and Agent programs. Architecture is the machinery that the agent executes on. It is a device with sensors and actuators, for example, a robotic car, a camera, and a PC. An agent program is an implementation of an agent function. An agent function is a map from the percept sequence (the history of all that an agent has perceived to date) to an action.

Agent = Architecture + Agent Program

There are many examples of agents in artificial intelligence. Here are a few:

- Intelligent personal assistants: These are agents that are designed to help users with various tasks, such as scheduling appointments, sending messages, and setting reminders. Examples of intelligent personal assistants include Siri, Alexa, and Google Assistant.

- Autonomous robots: These are agents that are designed to operate autonomously in the physical world. They can perform tasks such as cleaning, sorting, and delivering goods. Examples of autonomous robots include the Roomba vacuum cleaner and the Amazon delivery robot.

- Gaming agents: These are agents that are designed to play games, either against human opponents or other agents. Examples of gaming agents include chess-playing agents and poker-playing agents.

- Fraud detection agents: These are agents that are designed to detect fraudulent behavior in financial transactions. They can analyze patterns of behavior to identify suspicious activity and alert authorities. Examples of fraud detection agents include those used by banks and credit card companies.

- Traffic management agents: These are agents that are designed to manage traffic flow in cities. They can monitor traffic patterns, adjust traffic lights, and reroute vehicles to minimize congestion. Examples of traffic management agents include those used in smart cities around the world.

- A software agent has keystrokes, file contents, and received network packets acting as sensors, and screen displays, files, and sent network packets acting as actuators.

- A Human-agent has eyes, ears, and other organs which act as sensors, and hands, legs, mouth, and other body parts act as actuators.

- A Robotic agent has Cameras and infrared range finders which act as sensors and various motors act as actuators.

Characteristics of an Agent

Types of Agents

Agents can be grouped into five classes based on their degree of perceived intelligence and capability :

Simple Reflex Agents

Model-Based Reflex Agents

Goal-Based Agents

Utility-Based Agents

Learning Agent

- Multi-agent systems

- Hierarchical agents

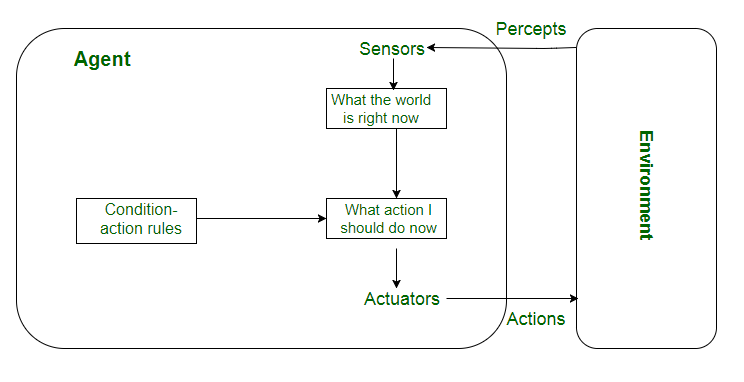

Simple reflex agents ignore the rest of the percept history and act only on the basis of the current percept. The percept history is everything the agent has perceived to date. The agent function is based on condition-action rules: each rule maps a condition (a state) to an action, and the action is taken only if the condition is true. This agent function succeeds only when the environment is fully observable. For simple reflex agents operating in partially observable environments, infinite loops are often unavoidable, although the agent may be able to escape them by randomizing its actions.

Problems with simple reflex agents are:

- Very limited intelligence.

- No knowledge of non-perceptual parts of the state.

- The rule set is usually too big to generate and store.

- If the environment changes, the collection of rules needs to be updated.
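The condition-action idea can be illustrated with a minimal sketch. The two-location vacuum world used here (locations `"A"` and `"B"`, each `"Clean"` or `"Dirty"`), the `RULES` table, and the function name are assumptions made for illustration, not part of the original text.

```python
# Hypothetical two-location vacuum world: a percept is (location, status).
# Condition-action rules are checked in order; the first match fires.
RULES = [
    (lambda location, status: status == "Dirty", "Suck"),
    (lambda location, status: location == "A", "Right"),
    (lambda location, status: location == "B", "Left"),
]


def simple_reflex_agent(percept):
    """Choose an action from the current percept only; no history is kept."""
    location, status = percept
    for condition, action in RULES:
        if condition(location, status):
            return action
    return "NoOp"  # no rule matched


if __name__ == "__main__":
    for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
        print(percept, "->", simple_reflex_agent(percept))
```

Because the agent remembers nothing, it keeps shuttling between clean locations forever, which is the kind of loop described above.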

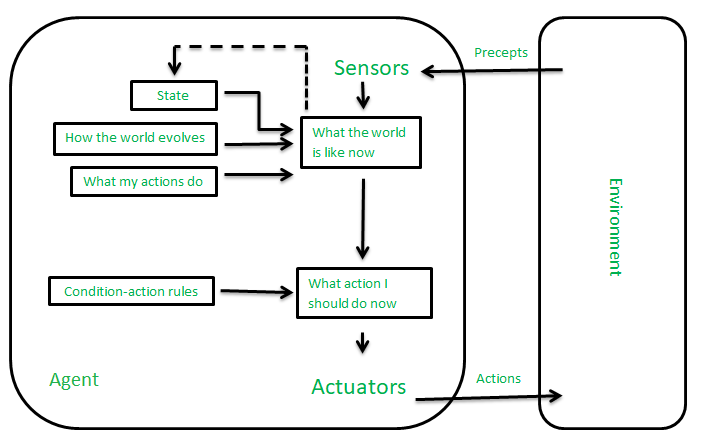

A model-based reflex agent works by finding a rule whose condition matches the current situation. It can handle partially observable environments by using a model of the world. The agent keeps track of an internal state that is updated with each percept and therefore depends on the percept history. This state is stored inside the agent as some kind of structure describing the part of the world that cannot currently be seen.

Updating the state requires information about:

- How the world evolves independently of the agent.

- How the agent's actions affect the world.
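As a rough sketch of internal state, consider a hypothetical two-location vacuum world (locations `"A"` and `"B"`, each `"Clean"` or `"Dirty"`). The class name and the world model below are assumptions for illustration: the model assumes a clean location stays clean (how the world evolves) and that `Suck` cleans the current location (how actions affect the world).

```python
class ModelBasedVacuumAgent:
    """Tracks the last known status of both locations, including the unseen one."""

    def __init__(self):
        # Internal state: None means the location has never been observed.
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        # Update the internal state from the current percept.
        self.model[location] = status
        if status == "Dirty":
            # Assumed effect of the agent's own action: Suck cleans this location.
            self.model[location] = "Clean"
            return "Suck"
        # Assumed world dynamics: clean locations stay clean, so the agent can stop.
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"
        return "Right" if location == "A" else "Left"


if __name__ == "__main__":
    agent = ModelBasedVacuumAgent()
    for percept in [("A", "Dirty"), ("A", "Clean"), ("B", "Clean")]:
        print(percept, "->", agent(percept))
```

Unlike an agent that reacts to the current percept alone, this one can stop once its model says every location is clean.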

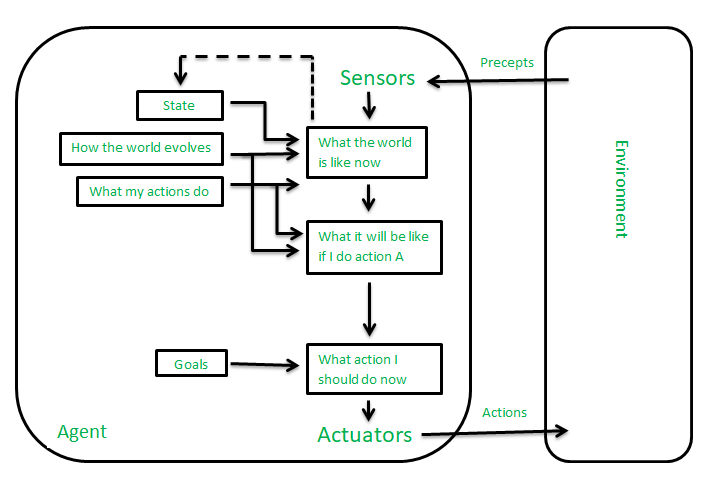

Goal-based agents make decisions based on how far they currently are from their goal (a description of desirable situations). Every action is intended to reduce the distance to the goal. This gives the agent a way to choose among multiple possibilities, selecting the one that reaches a goal state. The knowledge that supports its decisions is represented explicitly and can be modified, which makes these agents more flexible: the agent's behavior can easily be changed. They usually require search and planning.
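The link between goals and search can be sketched with breadth-first search over a small graph. The `ROADS` map, the place names, and both function names are hypothetical; breadth-first search is only one of many search strategies such an agent could use.

```python
from collections import deque

# Hypothetical road map: each state lists the states reachable in one step.
ROADS = {
    "Home": ["Market", "Park"],
    "Market": ["Office"],
    "Park": ["Library"],
    "Library": ["Office"],
    "Office": [],
}


def plan_to_goal(start, goal, neighbors):
    """Breadth-first search: return the list of states from start to goal, or None."""
    frontier = deque([start])
    parent = {start: None}  # also serves as the set of reached states
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None


def goal_based_agent(current, goal):
    """Return the next state to move to, or None if already there or unreachable."""
    path = plan_to_goal(current, goal, lambda s: ROADS.get(s, []))
    if path is None or len(path) < 2:
        return None
    return path[1]


if __name__ == "__main__":
    print(goal_based_agent("Home", "Office"))
    print(goal_based_agent("Home", "Library"))
```

Changing only the `goal` argument changes the chosen action, with no rules rewritten.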

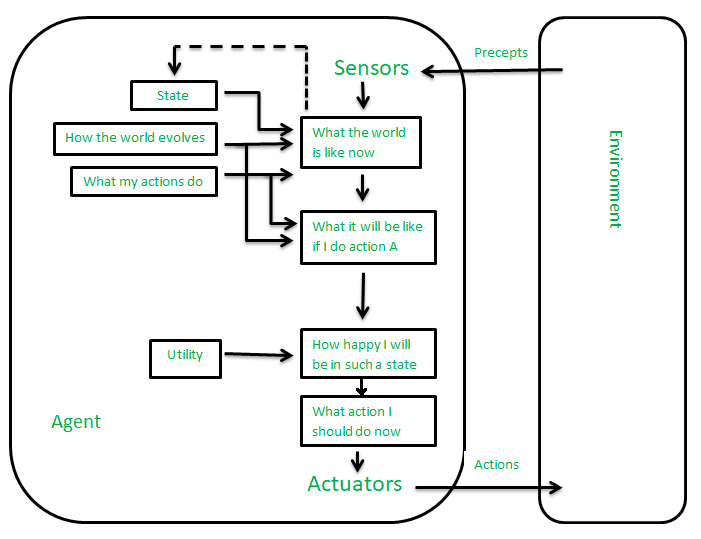

Utility-based agents are used when there are multiple possible alternatives and the agent must decide which one is best. They choose actions based on a preference (utility) for each state. Sometimes achieving the desired goal is not enough: we may want a quicker, safer, or cheaper trip to reach a destination. Utility describes how "happy" the agent is, so a utility function maps a state onto a real number that describes the associated degree of happiness. Because of the uncertainty in the world, a utility-based agent chooses the action that maximizes the expected utility.
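Expected-utility maximization can be sketched in a few lines. The trip scenario, the probabilities, the `ROUTES` table, and the weighting inside `trip_utility` are invented for illustration only.

```python
# Hypothetical trip: each action leads to outcomes given as (probability, state),
# where a state is (travel_minutes, cost).
ROUTES = {
    "highway": [(0.8, (30, 5.0)), (0.2, (90, 5.0))],  # fast unless there is a jam
    "back_roads": [(1.0, (45, 0.0))],                 # slower but predictable and free
}


def trip_utility(state):
    """Map a state to a real number; higher is better (assumed weights)."""
    minutes, cost = state
    return -(minutes + 2.0 * cost)


def expected_utility(outcomes, utility):
    """Probability-weighted average utility of an action's possible outcomes."""
    return sum(p * utility(state) for p, state in outcomes)


def choose_action(actions, utility):
    """Pick the action whose expected utility is highest."""
    return max(actions, key=lambda a: expected_utility(actions[a], utility))


if __name__ == "__main__":
    for name, outcomes in ROUTES.items():
        print(name, expected_utility(outcomes, trip_utility))
    print("chosen:", choose_action(ROUTES, trip_utility))
```

Both routes reach the destination, so a goal test alone cannot separate them; the utility function is what expresses the preference between them.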

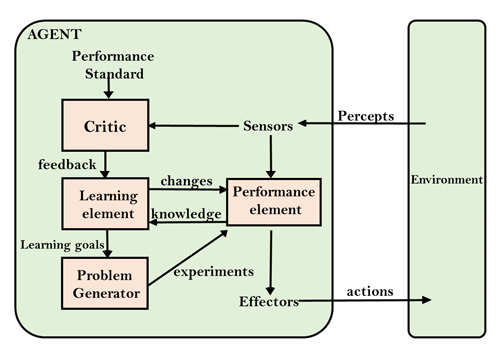

A learning agent is an agent that can learn from its past experiences. It starts out acting with basic knowledge and then adapts automatically through learning. A learning agent has four main conceptual components:

- Learning element: It is responsible for making improvements by learning from the environment.

- Critic: It gives the learning element feedback that describes how well the agent is doing with respect to a fixed performance standard.

- Performance element: It is responsible for selecting external actions.

- Problem generator: It is responsible for suggesting actions that will lead to new and informative experiences.
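One way to picture how the four components fit together is the sketch below. The class, its method names, the running-average update, and the fixed exploration schedule are all assumptions chosen to keep the example small and deterministic; real learning agents use far richer methods.

```python
class LearningAgent:
    """Toy agent that learns which of several actions yields the best feedback."""

    def __init__(self, actions, explore_every=3):
        self.values = {a: 0.0 for a in actions}  # knowledge used to pick actions
        self.counts = {a: 0 for a in actions}    # how often each action was tried
        self.explore_every = explore_every
        self.steps = 0

    def performance_element(self):
        """Select the external action currently believed to be best."""
        return max(self.values, key=self.values.get)

    def problem_generator(self):
        """Suggest the least-tried action to gain new, informative experience."""
        return min(self.counts, key=self.counts.get)

    def critic(self, reward, standard=0.0):
        """Score the outcome against a fixed performance standard."""
        return reward - standard

    def learning_element(self, action, feedback):
        """Improve the value estimate with a running average of the feedback."""
        self.counts[action] += 1
        self.values[action] += (feedback - self.values[action]) / self.counts[action]

    def act(self):
        self.steps += 1
        if self.steps % self.explore_every == 0:
            return self.problem_generator()
        return self.performance_element()

    def observe(self, action, reward):
        self.learning_element(action, self.critic(reward))


if __name__ == "__main__":
    rewards = {"a": 0.2, "b": 1.0}  # hypothetical environment
    agent = LearningAgent(["a", "b"])
    for _ in range(10):
        action = agent.act()
        agent.observe(action, rewards[action])
    print(agent.values, "preferred:", agent.performance_element())
```

Without the problem generator, this agent would keep choosing `"a"` and never discover that `"b"` pays more.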

Multi-Agent Systems

A multi-agent system (MAS) is a system composed of multiple interacting agents, often designed to work together to achieve a common goal. The agents may be autonomous or semi-autonomous; each can perceive its environment, make decisions, and take action, and they may need to coordinate and communicate with one another to achieve their objective.

MAS can be used in a variety of applications, including transportation systems, robotics, and social networks. They can help improve efficiency, reduce costs, and increase flexibility in complex systems. MAS can be classified into different types based on their characteristics, such as whether the agents have the same or different goals, whether the agents are cooperative or competitive, and whether the agents are homogeneous or heterogeneous.

- In a homogeneous MAS, all the agents have the same capabilities, goals, and behaviors.

- In contrast, in a heterogeneous MAS, the agents have different capabilities, goals, and behaviors.

Heterogeneity can make coordination more challenging but can also lead to more flexible and robust systems.

Cooperative MAS involves agents working together to achieve a common goal, while competitive MAS involves agents working against each other to achieve their own goals. In some cases, MAS can also involve both cooperative and competitive behavior, where agents must balance their own interests with the interests of the group.

MAS can be implemented using different techniques, such as game theory, machine learning, and agent-based modeling. Game theory is used to analyze strategic interactions between agents and predict their behavior. Machine learning is used to train agents to improve their decision-making capabilities over time. Agent-based modeling is used to simulate complex systems and study the interactions between agents.

Overall, multi-agent systems are a powerful tool in artificial intelligence that can help solve complex problems and improve efficiency in a variety of applications.

Hierarchical Agents

These agents are organized into a hierarchy, with high-level agents overseeing the behavior of lower-level agents. The high-level agents provide goals and constraints, while the low-level agents carry out specific tasks. Hierarchical agents are useful in complex environments with many tasks and sub-tasks.

- This hierarchical structure allows for more efficient and organized decision-making in complex environments.

- Hierarchical agents can be implemented in a variety of applications, including robotics, manufacturing, and transportation systems. They are particularly useful in environments where there are many tasks and sub-tasks that need to be coordinated and prioritized.

- In a hierarchical agent system, the high-level agents are responsible for setting goals and constraints for the lower-level agents. These goals and constraints are typically based on the overall objective of the system. For example, in a manufacturing system, the high-level agents might set production targets for the lower-level agents based on customer demand.

- The low-level agents are responsible for carrying out specific tasks to achieve the goals set by the high-level agents. These tasks may be relatively simple or more complex, depending on the specific application. For example, in a transportation system, low-level agents might be responsible for managing traffic flow at specific intersections.

- Hierarchical agents can be organized into different levels, depending on the complexity of the system. In a simple system, there may be only two levels: high-level agents and low-level agents. In a more complex system, there may be multiple levels, with intermediate-level agents responsible for coordinating the activities of lower-level agents.

- One advantage of hierarchical agents is that they allow for more efficient use of resources. By organizing agents into a hierarchy, it is possible to allocate tasks to the agents that are best suited to carry them out, while avoiding duplication of effort. This can lead to faster, more efficient decision-making and better overall performance of the system.

Overall, hierarchical agents are a powerful tool in artificial intelligence that can help solve complex problems and improve efficiency in a variety of applications.
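The split between goal-setting and task execution can be sketched with a two-level manufacturing example. The `Supervisor` and `Worker` classes, the capacities, and the greedy allocation rule are hypothetical and only illustrate the idea of a high-level agent handing quotas to low-level agents.

```python
class Worker:
    """Low-level agent: carries out a specific task within its own capacity."""

    def __init__(self, name, capacity):
        self.name = name
        self.capacity = capacity

    def execute(self, quota):
        # A worker can never produce more than its capacity.
        return min(quota, self.capacity)


class Supervisor:
    """High-level agent: turns an overall target into per-worker quotas."""

    def __init__(self, workers):
        self.workers = workers

    def assign(self, target):
        """Greedy allocation (an assumed policy): fill the largest workers first."""
        remaining = target
        plan = {}
        for worker in sorted(self.workers, key=lambda w: w.capacity, reverse=True):
            quota = min(remaining, worker.capacity)
            plan[worker.name] = quota
            remaining -= quota
        return plan, remaining  # remaining > 0 means the target cannot be met

    def run(self, target):
        plan, _ = self.assign(target)
        return sum(w.execute(plan[w.name]) for w in self.workers)


if __name__ == "__main__":
    lines = [Worker("line_1", 50), Worker("line_2", 30), Worker("line_3", 40)]
    boss = Supervisor(lines)
    print(boss.assign(100))
    print("produced:", boss.run(100))
```

A deeper hierarchy would place intermediate agents between `Supervisor` and `Worker`, each splitting the quota it receives among the agents below it.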

Uses of Agents

Agents are used in a wide range of applications in artificial intelligence, including:

- Robotics: Agents can be used to control robots and automate tasks in manufacturing, transportation, and other industries.

- Smart homes and buildings: Agents can be used to control heating, lighting, and other systems in smart homes and buildings, optimizing energy use and improving comfort.

- Transportation systems: Agents can be used to manage traffic flow, optimize routes for autonomous vehicles, and improve logistics and supply chain management.

- Healthcare: Agents can be used to monitor patients, provide personalized treatment plans, and optimize healthcare resource allocation.

- Finance: Agents can be used for automated trading, fraud detection, and risk management in the financial industry.

- Games: Agents can be used to create intelligent opponents in games and simulations, providing a more challenging and realistic experience for players.

- Natural language processing: Agents can be used for language translation, question answering, and chatbots that can communicate with users in natural language.

- Cybersecurity: Agents can be used for intrusion detection, malware analysis, and network security.

- Environmental monitoring: Agents can be used to monitor and manage natural resources, track climate change, and improve environmental sustainability.

- Social media: Agents can be used to analyze social media data, identify trends and patterns, and provide personalized recommendations to users.

Overall, agents are a versatile and powerful tool in artificial intelligence that can help solve a wide range of problems in different fields.

