Hypothesis Testing – A Deep Dive into Hypothesis Testing, The Backbone of Statistical Inference

- September 21, 2023

Explore the intricacies of hypothesis testing, a cornerstone of statistical analysis. Dive into methods, interpretations, and applications for making data-driven decisions.

In this blog post we will learn:

- What is Hypothesis Testing?

- Steps in Hypothesis Testing
  - 2.1. Set up Hypotheses: Null and Alternative
  - 2.2. Choose a Significance Level (α)
  - 2.3. Calculate a test statistic and P-Value
  - 2.4. Make a Decision

- Example : Testing a new drug.

- Example in Python

1. What is Hypothesis Testing?

In simple terms, hypothesis testing is a method used to make decisions or inferences about population parameters based on sample data. Imagine being handed a die and asked if it’s biased. By rolling it a few times and analyzing the outcomes, you’d be engaging in the essence of hypothesis testing.
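To make the die example concrete, here is a minimal sketch using a chi-square goodness-of-fit test from SciPy. The roll counts are hypothetical and chosen only for illustration; the null hypothesis is that all six faces are equally likely.

```python
from scipy.stats import chisquare

# Hypothetical counts of faces 1..6 from 60 rolls (illustrative data only).
observed = [8, 9, 10, 11, 12, 10]

# With no expected frequencies given, chisquare assumes all categories
# are equally likely, which is the null hypothesis for a fair die.
result = chisquare(observed)

print(f"chi-square statistic = {result.statistic:.3f}")
print(f"p-value = {result.pvalue:.3f}")
```

A large p-value here would mean these counts are quite compatible with a fair die; it would not prove that the die is fair.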

Think of hypothesis testing as the scientific method of the statistics world. Suppose you hear claims like “This new drug works wonders!” or “Our new website design boosts sales.” How do you know if these statements hold water? Enter hypothesis testing.

2. Steps in Hypothesis Testing

- Set up Hypotheses : Begin with a null hypothesis (H0) and an alternative hypothesis (H1, also written Ha).

- Choose a Significance Level (α) : Typically 0.05, this is the probability of rejecting the null hypothesis when it’s actually true. Think of it as the chance of accusing an innocent person.

- Calculate Test statistic and P-Value : Gather evidence (data) and calculate a test statistic.

- p-value : This is the probability of observing data at least as extreme as what you actually observed, assuming that the null hypothesis is true. A small p-value (typically ≤ 0.05) suggests the data is inconsistent with the null hypothesis.

- Decision Rule : If the p-value is less than or equal to α, you reject the null hypothesis in favor of the alternative.

2.1. Set up Hypotheses: Null and Alternative

Before diving into testing, we must formulate hypotheses. The null hypothesis (H0) represents the default assumption, while the alternative hypothesis (H1) challenges it.

For instance, in drug testing, H0 : “The new drug is no better than the existing one,” H1 : “The new drug is superior .”

2.2. Choose a Significance Level (α)

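You collect and analyze data to test the hypotheses H0 and H1. Based on your analysis, you decide whether to reject the null hypothesis in favor of the alternative or fail to reject it. Note that failing to reject the null hypothesis is not the same as accepting it: it only means the data did not provide enough evidence against it.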

The significance level, often denoted by $α$, represents the probability of rejecting the null hypothesis when it is actually true.

In other words, it’s the risk you’re willing to take of making a Type I error (false positive).

Type I Error (False Positive) :

- Symbolized by the Greek letter alpha (α).

- Occurs when you incorrectly reject a true null hypothesis . In other words, you conclude that there is an effect or difference when, in reality, there isn’t.

- The probability of making a Type I error is set by the significance level of the test. Commonly, tests are conducted at the 0.05 significance level , which means that when the null hypothesis is true, there’s a 5% chance of making a Type I error .

- Commonly used significance levels are 0.01, 0.05, and 0.10, but the choice depends on the context of the study and the level of risk one is willing to accept.

Example : If a drug is not effective (truth), but a clinical trial incorrectly concludes that it is effective (based on the sample data), then a Type I error has occurred.

Type II Error (False Negative) :

- Symbolized by the Greek letter beta (β).

- Occurs when you fail to reject a false null hypothesis . This means you conclude there is no evidence of an effect or difference when, in reality, there is one.

- The probability of making a Type II error is denoted by β. The power of a test (1 – β) represents the probability of correctly rejecting a false null hypothesis.

Example : If a drug is effective (truth), but a clinical trial incorrectly concludes that it is not effective (based on the sample data), then a Type II error has occurred.

Balancing the Errors :

In practice, there’s a trade-off between Type I and Type II errors. Reducing the risk of one typically increases the risk of the other. For example, if you want to decrease the probability of a Type I error (by setting a lower significance level), you might increase the probability of a Type II error unless you compensate by collecting more data or making other adjustments.

It’s essential to understand the consequences of both types of errors in any given context. In some situations, a Type I error might be more severe, while in others, a Type II error might be of greater concern. This understanding guides researchers in designing their experiments and choosing appropriate significance levels.
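The trade-off can be seen in a small simulation. The sketch below is illustrative only: it assumes normally distributed data with a known standard deviation of 1, a two-sided z-test of H0: mean = 0, and an assumed true effect of 0.4 when H0 is false. The function names are hypothetical.

```python
import random
from statistics import NormalDist, mean

def z_test_p_value(sample, mu0, sigma):
    """Two-sided p-value for a z-test of the mean with known sigma."""
    z = (mean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 2 * (1 - NormalDist().cdf(abs(z)))

def rejection_rate(true_mean, alpha, n=30, trials=2000, seed=0):
    """Fraction of simulated experiments in which H0: mean = 0 is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        if z_test_p_value(sample, mu0=0.0, sigma=1.0) <= alpha:
            rejections += 1
    return rejections / trials

for alpha in (0.10, 0.05, 0.01):
    type_1 = rejection_rate(true_mean=0.0, alpha=alpha)      # H0 is true
    type_2 = 1 - rejection_rate(true_mean=0.4, alpha=alpha)  # H0 is false
    print(f"alpha={alpha:.2f}  Type I rate={type_1:.3f}  Type II rate={type_2:.3f}")
```

As α is lowered, the estimated Type I rate falls while the estimated Type II rate rises. Increasing `n` is one way to bring the Type II rate back down.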

2.3. Calculate a test statistic and P-Value

Test statistic : A test statistic is a single number that helps us understand how far our sample data is from what we’d expect under a null hypothesis (a basic assumption we’re trying to test against). Generally, the larger the test statistic in absolute value, the more evidence we have against our null hypothesis. It helps us decide whether the differences we observe in our data are due to random chance or if there’s an actual effect.

P-value : The P-value tells us how likely we would be to get our observed results (or something more extreme) if the null hypothesis were true. It’s a value between 0 and 1.

- A smaller P-value (typically below 0.05) means that the observation is rare under the null hypothesis, so we might reject the null hypothesis.

- A larger P-value suggests that what we observed could easily happen by random chance, so we might not reject the null hypothesis.

2.4. Make a Decision

Relationship between $α$ and P-Value

When conducting a hypothesis test:

We first choose the significance level $\alpha$, before looking at the data.

We then calculate the test statistic from our sample data and, from it, the p-value.

Finally, we compare the p-value to our chosen $\alpha$:

- If $p\text{-value} \le \alpha$: We reject the null hypothesis in favor of the alternative hypothesis. The result is said to be statistically significant.

- If $p\text{-value} > \alpha$: We fail to reject the null hypothesis. There isn’t enough statistical evidence to support the alternative hypothesis.
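The decision rule is simple enough to write as a small helper. This is only a sketch; the function name `decide` and the returned strings are illustrative choices, not part of any library.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the decision rule: reject H0 when p-value <= alpha."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie between 0 and 1")
    if p_value <= alpha:
        return "reject H0"
    return "fail to reject H0"

print(decide(0.01))              # reject H0
print(decide(0.50))              # fail to reject H0
print(decide(0.03, alpha=0.01))  # fail to reject H0
```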

3. Example : Testing a new drug.

Imagine we are investigating whether a new drug relieves headaches faster than a placebo.

Setting Up the Experiment : You gather 100 people who suffer from headaches. Half of them (50 people) are given the new drug (let’s call this the ‘Drug Group’), and the other half are given a sugar pill, which doesn’t contain any medication (the ‘Placebo Group’).

- Set up Hypotheses : Before starting, you make a prediction:

- Null Hypothesis (H0): The new drug has no effect. Any difference in healing time between the two groups is just due to random chance.

- Alternative Hypothesis (H1): The new drug does have an effect. The difference in healing time between the two groups is significant and not just by chance.

Calculate Test statistic and P-Value : After the experiment, you analyze the data. The “test statistic” is a number that helps you understand the difference between the two groups in terms of standard units.

For instance, let’s say:

- The average healing time in the Drug Group is 2 hours.

- The average healing time in the Placebo Group is 3 hours.

The test statistic helps you understand how significant this 1-hour difference is. If the groups are large and the spread of healing times in each group is small, then this difference might be significant. But if there’s a huge variation in healing times, the 1-hour difference might not be so special.
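To see how the spread changes the picture, the sketch below runs a two-sample t-test on summary statistics with SciPy's `ttest_ind_from_stats`. The group means (2 and 3 hours) and sizes (50 each) come from the example; the two standard deviations, 0.5 and 5 hours, are assumed values chosen for illustration.

```python
from scipy.stats import ttest_ind_from_stats

results = {}
for sd in (0.5, 5.0):  # assumed spread of healing times, in hours
    results[sd] = ttest_ind_from_stats(
        mean1=2.0, std1=sd, nobs1=50,  # Drug Group
        mean2=3.0, std2=sd, nobs2=50,  # Placebo Group
    )
    print(f"sd={sd}: t={results[sd].statistic:.2f}, "
          f"p-value={results[sd].pvalue:.4f}")
```

The same 1-hour difference gives a t-statistic ten times larger in magnitude when the spread is ten times smaller.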

Imagine the P-value as answering this question: “If the new drug had NO real effect, what’s the probability that I’d see a difference as extreme (or more extreme) as the one I found, just by random chance?”

For instance:

- P-value of 0.01 means there’s a 1% chance that the observed difference (or a more extreme difference) would occur if the drug had no effect. That’s pretty rare, so we might consider the drug effective.

- P-value of 0.5 means there’s a 50% chance you’d see this difference just by chance. That’s pretty high, so we might not be convinced the drug is doing much.

- If the P-value is less than or equal to $α$ = 0.05: the results are “statistically significant,” and we reject the null hypothesis , concluding that the new drug has an effect.

- If the P-value is greater than $α$ = 0.05: the results are not statistically significant, and we don’t reject the null hypothesis , remaining unsure if the drug has a genuine effect.

4. Example in python

For simplicity, let’s say we’re using a t-test (common for comparing means). Let’s dive into Python:
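The original listing is not available here, so what follows is a minimal sketch rather than the article's own code. It simulates healing times for the two groups (the means of 2 and 3 hours follow the example above; the standard deviation of 1 hour and the random seed are assumptions) and runs a two-sample t-test with `scipy.stats.ttest_ind`.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(42)

# Simulated healing times in hours (assumed values, not real trial data).
drug_group = rng.normal(loc=2.0, scale=1.0, size=50)
placebo_group = rng.normal(loc=3.0, scale=1.0, size=50)

# Two-sample t-test; H0: both groups have the same mean healing time.
t_stat, p_value = stats.ttest_ind(drug_group, placebo_group)

alpha = 0.05
print(f"t-statistic = {t_stat:.3f}, p-value = {p_value:.4g}")
if p_value <= alpha:
    print("Reject H0: the difference in mean healing time is statistically significant.")
else:
    print("Fail to reject H0: not enough evidence of a difference.")
```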

Making a Decision : If the p-value is at most 0.05, we’d say, “The results are statistically significant! The drug seems to have an effect!” If not, we’d say, “Looks like the drug isn’t as miraculous as we thought.”

5. Conclusion

Hypothesis testing is an indispensable tool in data science, allowing us to make data-driven decisions with confidence. By understanding its principles, conducting tests properly, and considering real-world applications, you can harness the power of hypothesis testing to unlock valuable insights from your data.

How to Write a Strong Hypothesis | Guide & Examples

Published on 6 May 2022 by Shona McCombes .

A hypothesis is a statement that can be tested by scientific research. If you want to test a relationship between two or more variables, you need to write hypotheses before you start your experiment or data collection.

Table of contents

- What is a hypothesis?

- Developing a hypothesis (with example)

- Hypothesis examples

- Frequently asked questions about writing hypotheses

A hypothesis states your predictions about what your research will find. It is a tentative answer to your research question that has not yet been tested. For some research projects, you might have to write several hypotheses that address different aspects of your research question.

A hypothesis is not just a guess – it should be based on existing theories and knowledge. It also has to be testable, which means you can support or refute it through scientific research methods (such as experiments, observations, and statistical analysis of data).

Variables in hypotheses

Hypotheses propose a relationship between two or more variables . An independent variable is something the researcher changes or controls. A dependent variable is something the researcher observes and measures.

In a hypothesis stating that exposure to the sun affects the level of happiness, the independent variable is exposure to the sun – the assumed cause . The dependent variable is the level of happiness – the assumed effect .

Step 1: Ask a question

Writing a hypothesis begins with a research question that you want to answer. The question should be focused, specific, and researchable within the constraints of your project.

Step 2: Do some preliminary research

Your initial answer to the question should be based on what is already known about the topic. Look for theories and previous studies to help you form educated assumptions about what your research will find.

At this stage, you might construct a conceptual framework to identify which variables you will study and what you think the relationships are between them. Sometimes, you’ll have to operationalise more complex constructs.

Step 3: Formulate your hypothesis

Now you should have some idea of what you expect to find. Write your initial answer to the question in a clear, concise sentence.

Step 4: Refine your hypothesis

You need to make sure your hypothesis is specific and testable. There are various ways of phrasing a hypothesis, but all the terms you use should have clear definitions, and the hypothesis should contain:

- The relevant variables

- The specific group being studied

- The predicted outcome of the experiment or analysis

Step 5: Phrase your hypothesis in three ways

To identify the variables, you can write a simple prediction in if … then form. The first part of the sentence states the independent variable and the second part states the dependent variable.

In academic research, hypotheses are more commonly phrased in terms of correlations or effects, where you directly state the predicted relationship between variables.

If you are comparing two groups, the hypothesis can state what difference you expect to find between them.

Step 6: Write a null hypothesis

If your research involves statistical hypothesis testing , you will also have to write a null hypothesis. The null hypothesis is the default position that there is no association between the variables. The null hypothesis is written as H0, while the alternative hypothesis is H1 or Ha.

Hypothesis testing is a formal procedure for investigating our ideas about the world using statistics. It is used by scientists to test specific predictions, called hypotheses , by calculating how likely it is that a pattern or relationship between variables could have arisen by chance.

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘ x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study , the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article

McCombes, S. (2022, May 06). How to Write a Strong Hypothesis | Guide & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/research-methods/hypothesis-writing/

Hypothesis Testing Steps & Examples

What is Hypothesis Testing?

As per the definition from Oxford languages, a hypothesis is a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation. As per the Dictionary page on Hypothesis , Hypothesis means a proposition or set of propositions, set forth as an explanation for the occurrence of some specified group of phenomena, either asserted merely as a provisional conjecture to guide investigation (working hypothesis) or accepted as highly probable in the light of established facts.

A hypothesis can be defined as a claim, either about something that already exists in the world or about something that needs to be established afresh. In simple words, another word for hypothesis is “claim” . Until the claim is proven to be true, it is called a hypothesis. Once the claim is proved, it becomes the new truth or new knowledge about the thing. For example , let’s say that a claim is made that students studying for more than 6 hours a day get more than 90% marks in their examination. Now, this is just a claim or a hypothesis and not the truth in the real world. For the claim to become a truth fit for widespread adoption, it needs to be supported by evidence, e.g., data. To reject this claim or otherwise, one needs to do some empirical analysis by gathering data samples and evaluating the claim.

The process of gathering data and evaluating claims or hypotheses with the goal of rejecting them or otherwise (failing to reject them) is called hypothesis testing . Note the wording: “failing to reject”. It means that we don’t have enough evidence to reject the claim. Thus, until new evidence comes up, the claim continues to be treated as the working truth. There are different techniques for testing a hypothesis in order to conclude whether it can be used to represent the truth of the world.

One must note that hypothesis testing never constitutes proof that a hypothesis is the absolute truth based on the observations. It only provides added support for treating the hypothesis as true until new evidence against it can be gathered. We can never be 100% sure about the truth of a hypothesis based on hypothesis testing.

Simply speaking, hypothesis testing is a framework that can be used to assess whether a claim or hypothesis made about a real-world event can be seen as the truth or otherwise, based on the given data (evidence).

Hypothesis Testing Examples

Before we go ahead and look at the hypothesis testing steps in more detail, let’s take a look at some real-world examples of how to think about hypotheses and hypothesis testing when dealing with real-world problems :

- Customers are churning because they are not getting a response to their complaints or issues.

- Customers are churning because there are other competitive services in the market which are providing these services at lower cost.

- Customers are churning because there are other competitive services which are providing more services at the same cost.

- It is claimed that a 500 gm sugar packet for a particular brand, say XYZA, contains sugar of less than 500 gm, say around 480gm. Can this claim be taken as truth? How do we know that this claim is true? This is a hypothesis until proved.

- A group of doctors claims that quitting smoking increases lifespan. Can this claim be taken as new truth? The hypothesis is that quitting smoking results in an increase in lifespan.

- It is claimed that brisk walking for half an hour every day reverses diabetes. In order to accept this in your lifestyle, you may need evidence that supports this claim or hypothesis.

- It is claimed that doing Pranayama yoga for 30 minutes a day can help in easing stress by 50%. This can be termed as hypothesis and would require testing / validation for it to be established as a truth and recommended for widespread adoption.

- One common real-life example of hypothesis testing is election polling. In order to predict the outcome of an election, pollsters take a sample of the population and ask them who they plan to vote for. They can then use hypothesis testing to assess whether a candidate’s lead in the sample is larger than what sampling variation alone would produce. If the result is statistically significant, the lead is unlikely to be an artifact of the sample, and the poll supports a prediction. If it is not significant, the race is too close to call from that sample. Note that a significance test does not check whether the sample is representative; that depends on how the sample was drawn.

- Machine learning models make predictions based on the input data. Each machine learning model, representing a function approximation, can be taken as a hypothesis. All the different models together constitute what is called the hypothesis space .

- As part of a linear regression machine learning model , it is claimed that there is a relationship between the response variable and the predictor variables. Can this hypothesis or claim be taken as truth? Let’s say the hypothesis is that the housing price depends upon the average income of people already staying in the locality. How true is this hypothesis or claim? The relationship between the response variable and each of the predictor variables can be evaluated using a t-test and its t-statistic .

- For a linear regression model , one of the hypotheses is that there is no relationship between the response variable and any of the predictor variables. Thus, if b1, b2, b3 are the three coefficients, all of them are equal to 0: b1 = b2 = b3 = 0. This is where one performs an F-test and uses the F-statistic to test this hypothesis.
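As an illustration of the last example, the sketch below computes the overall F-statistic for H0: b1 = b2 = b3 = 0 by hand with NumPy and obtains the p-value from SciPy's F distribution. The data are simulated under assumed coefficients (only the first predictor truly matters); nothing here comes from a real housing dataset.

```python
import numpy as np
from scipy.stats import f

rng = np.random.default_rng(0)
n, p = 100, 3  # number of observations and of predictors

# Simulated predictors and response (assumed data; only x1 truly matters).
X = rng.normal(size=(n, p))
y = 1.0 + 2.0 * X[:, 0] + rng.normal(size=n)

# Ordinary least squares fit with an intercept column.
design = np.column_stack([np.ones(n), X])
coef, *_ = np.linalg.lstsq(design, y, rcond=None)

rss = np.sum((y - design @ coef) ** 2)  # residual sum of squares
tss = np.sum((y - y.mean()) ** 2)       # total sum of squares

# F-statistic for H0: b1 = b2 = b3 = 0.
f_stat = ((tss - rss) / p) / (rss / (n - p - 1))
p_value = f.sf(f_stat, p, n - p - 1)
print(f"F = {f_stat:.2f}, p-value = {p_value:.3g}")
```

A small p-value indicates that at least one coefficient is likely non-zero; it does not say which one, which is where the per-coefficient t-tests come in.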

You may note the different hypotheses listed above. The next step would be to validate some of these hypotheses. This is where data scientists come into the picture. One or more data scientists may be asked to work on different hypotheses, which would result in them looking for appropriate data related to the hypothesis they are working on. This section will be detailed out in the near future.

State the Hypothesis to begin Hypothesis Testing

The first step to hypothesis testing is defining or stating a hypothesis. Before the hypothesis can be tested, we need to formulate the hypothesis in terms of mathematical expressions. There are two important aspects to pay attention to, prior to the formulation of the hypothesis. The following represents different types of hypothesis that could be put to hypothesis testing:

- Claim made against the well-established fact : The case in which a fact is well-established, or accepted as truth or “knowledge”, and a new claim is made against it. For example , when you buy a 500 gm packet of sugar, you assume that the packet contains at least 500 gm of sugar and not any less, based on the label on the packet. In this case, the fact is given or assumed to be the truth. A new claim can be made that the 500 gm packet contains less than 500 gm of sugar. This claim needs to be tested before it is accepted as truth. Such cases can be considered for hypothesis testing whenever it is claimed that the assumption or default state of being is not true. The claim to be established as the new truth is stated as the “alternate hypothesis”. The opposite state is stated as the “null hypothesis”. Here, the claim that the 500 gm packet contains less than 500 gm of sugar is the alternate hypothesis. The opposite state, that the packet contains 500 gm of sugar, is the null hypothesis.

- Claim to establish the new truth : The case in which some claim is made about the reality that exists in the world (fact). For example , the statement that the housing price depends upon the average income of people already staying in the locality can be considered a claim and not assumed to be true. Another example could be the claim that running 5 miles a day results in a reduction of 10 kg of weight within a month. There can be many such claims which, when they need to be established as true, have to go through hypothesis testing. The claim to be established as the new truth is stated as the “alternate hypothesis”. The opposite state is stated as the “null hypothesis”. The claim that running 5 miles a day results in a reduction of 10 kg within a month would be stated as the alternate hypothesis.

Based on the above considerations, the following hypotheses can be stated for hypothesis testing.

- The packet of 500 gm of sugar contains sugar of weight less than 500 gm. (Claim made against the established fact). This is new knowledge which requires hypothesis testing to get established and acted upon.

- The housing price depends upon the average income of the people staying in the locality. This is new knowledge which requires hypothesis testing to get established and acted upon.

- Running 5 miles a day results in a reduction of 10 kg of weight within a month. This is new knowledge which requires hypothesis testing to get established for widespread adoption.

Formulate Null & Alternate Hypothesis as Next Step

Once the hypothesis is defined or stated, the next step is to formulate the null and alternate hypothesis in order to begin hypothesis testing as described above.

What is a null hypothesis?

In the case where the given statement is a well-established fact or default state of being in the real world, one can call it a null hypothesis (in simpler words, nothing new). Well-established facts don’t need any hypothesis testing and hence can be called the null hypothesis. When a new claim is made which is not well established in the real world, the null hypothesis can be thought of as the default state or opposite state of that claim. For example , in the previous section, the claim or hypothesis is made that students studying for more than 6 hours a day get more than 90% marks in their examination. The null hypothesis, in this case, will be that the claim is not true or real. The null hypothesis can be stated as: there is no relationship or association between students studying more than 6 hours a day and their getting more than 90% marks. Any occurrence is only a chance occurrence. Another example is a criminal trial: when somebody is alleged to have committed a crime, the null hypothesis is that the person is innocent.

The null hypothesis is denoted by the letter H with subscript 0, e.g., $H_0$

What is an alternate hypothesis?

When the given statement is a claim (unexpected event in the real world) and not yet proven, one can formulate it as an alternate hypothesis and accordingly define a null hypothesis which is the opposite state of the claim. The alternate hypothesis is new knowledge or truth that needs to be established. In simple words, the hypothesis or claim that needs to be tested against reality in the real world can be termed the alternate hypothesis. In order to reach a conclusion that the claim (alternate hypothesis) can be considered the new knowledge or truth (based on the available evidence), it would be important to reject the null hypothesis. It should be noted that null and alternate hypotheses are mutually exclusive and at the same time asymmetric. In the example given in the previous section, the claim that students studying for more than 6 hours get more than 90% marks can be termed the alternate hypothesis.

The alternate hypothesis is denoted by H with subscript a, e.g., $H_a$

Once the hypothesis is formulated as a null ($H_0$) and an alternate hypothesis ($H_a$), there are two possible outcomes that can happen from hypothesis testing. These outcomes are the following:

- Reject the null hypothesis : There is enough evidence based on which one can reject the null hypothesis. Let’s understand this with the help of the example provided earlier in this section. The null hypothesis is that there is no relationship between students studying more than 6 hours a day and getting more than 90% marks. In a sample of 30 students studying more than 6 hours a day, it was found that they scored 91% marks on average. If the null hypothesis were true, this kind of result would be highly unlikely; it is very improbable that it happened by chance. That would mean that the claim can be taken as the new truth or new knowledge in the real world. One can take further samples of 30 students and perform more tests to validate the hypothesis. If similar results show up in other tests, it can be said with very high confidence that there is enough evidence to reject the null hypothesis that there is no relationship between students studying more than 6 hours a day and getting more than 90% marks. In such cases, one can accept the claim that students studying more than 6 hours a day get more than 90% marks as the new truth. The hypothesis can be considered the new truth until new tests provide evidence against this claim.

- Fail to reject the null hypothesis : There is not enough evidence based on which one can reject the null hypothesis (well-established fact or reality). Thus, one would fail to reject the null hypothesis. In a sample of 30 students studying more than 6 hours a day, the students were found to score 75% on average. Given that the null hypothesis is true, this kind of result is fairly likely or expected. With the given sample, one can’t reject the null hypothesis that there is no relationship between students studying more than 6 hours a day and getting more than 90% marks.

Examples of formulating the null and alternate hypothesis

The following are some examples of the null and alternate hypothesis.

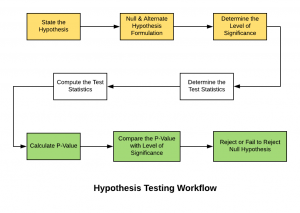

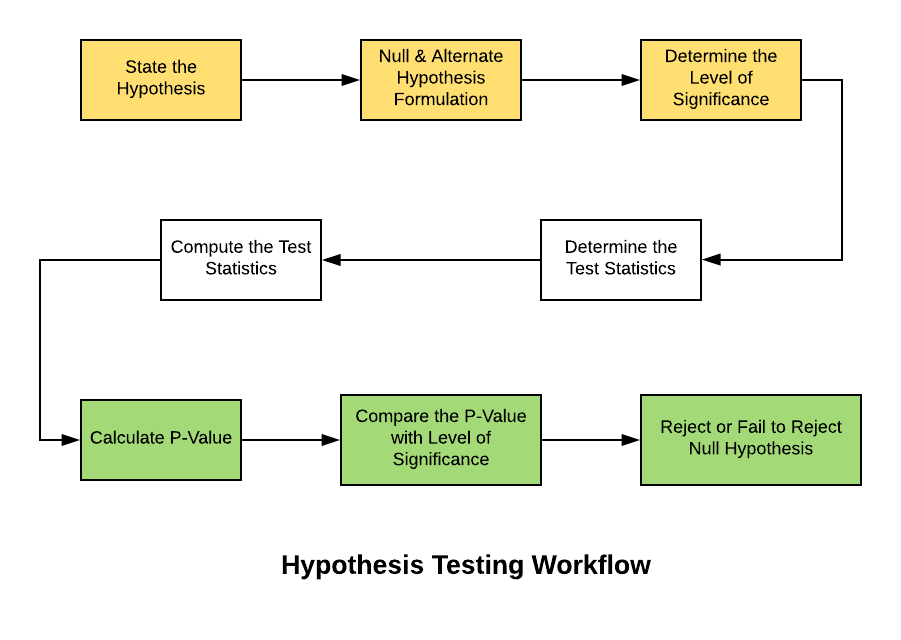

Hypothesis Testing Steps

Here is the diagram which represents the workflow of Hypothesis Testing.

Figure 1. Hypothesis Testing Steps

Based on the above, the following are some of the steps to be taken when doing hypothesis testing:

- State the hypothesis : First and foremost, the hypothesis needs to be stated. The hypothesis could either be the statement that is assumed to be true or the claim which is made to be true.

- Formulate the hypothesis : This step requires one to identify the Null and Alternate hypotheses or in simple words, formulate the hypothesis. Take an example of the canned sauce weighing 500 gm as the Null Hypothesis.

- Set the criteria for a decision : Identify test statistics that could be used to assess the Null Hypothesis. The test statistics with the above example would be the average weight of the sugar packet, and t-statistics would be used to determine the P-value. For different kinds of problems, different kinds of statistics including Z-statistics, T-statistics, F-statistics, etc can be used.

- Identify the level of significance (alpha) : Before starting the hypothesis testing, one would be required to set the significance level (also called as alpha ) which represents the value for which a P-value less than or equal to alpha is considered statistically significant. Typical values of alpha are 0.1, 0.05, and 0.01. In case the P-value is evaluated as statistically significant, the null hypothesis is rejected. In case, the P-value is more than the alpha value, the null hypothesis is failed to be rejected.

- Compute the test statistics : Next step is to calculate the test statistics (z-test, t-test, f-test, etc) to determine the P-value. If the sample size is more than 30, it is recommended to use z-statistics. Otherwise, t-statistics could be used. In the current example where 20 packets of canned sauce is selected for hypothesis testing, t-statistics will be calculated for the mean value of 505 gm (sample mean). The t-statistics would then be calculated as the difference of 505 gm (sample mean) and the population means (500 gm) divided by the sample standard deviation divided by the square root of sample size (20).

- Calculate the P-value of the test statistics : Once the test statistics have been calculated, find the P-value using either of t-table or a z-table. P-value is the probability of obtaining a test statistic (t-score or z-score) equal to or more extreme than the result obtained from the sample data, given that the null hypothesis H0 is true.

- Compare P-value with the level of significance : The significance level defines a rejection region for the test statistic; the remaining range of values, within which one fails to reject the Null Hypothesis, is called the non-rejection region. Equivalently, the P-value is compared with alpha. If the P-value is less than or equal to the significance level, the test is statistically significant and the null hypothesis is rejected; otherwise, one fails to reject it.
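The steps above can be sketched in Python for the canned sauce example. This is a minimal illustration, not a definitive implementation: the text does not give a sample standard deviation, so the value of 10 gm below is an assumption, and the helper names `t_pdf` and `two_sided_p_value` are hypothetical. The P-value is obtained by numerically integrating the Student's t density with Simpson's rule, so only the standard library is needed.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p_value(t_stat, df, steps=2000):
    """Two-sided P-value P(|T| >= |t_stat|), using Simpson's rule on [0, |t_stat|]."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # P(0 <= T <= |t_stat|)
    return 2 * (0.5 - central_area)

# Values taken from the example in the text
mu0 = 500.0          # hypothesized population mean (gm)
sample_mean = 505.0  # observed sample mean (gm)
n = 20               # sample size
# ASSUMPTION: the text gives no sample standard deviation; 10 gm is made up
sample_sd = 10.0
alpha = 0.05

standard_error = sample_sd / math.sqrt(n)
t_stat = (sample_mean - mu0) / standard_error
p_value = two_sided_p_value(t_stat, df=n - 1)
decision = "reject H0" if p_value <= alpha else "fail to reject H0"

print(f"t = {t_stat:.3f}, P-value = {p_value:.4f}, decision: {decision}")
```

With a larger assumed standard deviation the same sample mean would give a smaller t-statistic and a larger P-value, which is why the spread of the sample matters as much as the difference from 500 gm.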

P-Value: Key to Statistical Hypothesis Testing

Once you formulate the hypotheses, they need to be tested. For example, suppose the null hypothesis states that housing price does not depend upon the average income of people living in the locality. It would be tested by taking samples of housing prices and, based on the test results, the null hypothesis would either be rejected or fail to be rejected. In hypothesis testing, the following two are the outcomes:

- Reject the Null hypothesis

- Fail to Reject the Null hypothesis

Take the above example of the canned sauce weighing 500 gm. The Null hypothesis is set as the statement that the can of sauce weighs 500 gm. After taking a sample of 20 cans and weighing them, the average weight was found to be 495 gm. The test statistic (t-statistic) was calculated for this sample and the P-value was determined. Let’s say the P-value was found to be 15%. Assuming that the level of significance is selected to be 5%, the test statistic is not statistically significant (P-value > 5%) and thus, the null hypothesis fails to get rejected. This does not prove that the can weighs 500 gm; it only means that the sample does not provide enough evidence to conclude otherwise. However, if the average weight had been found to be 465 gm, which is far from the hypothesized mean of 500 gm, the P-value would likely have been small enough to reject the Null Hypothesis.

Hypothesis Testing for Problem Analysis & Solution Implementation

Hypothesis testing can be applied in both problem analysis and solution implementation. The following describes how the technique can be applied in the problem space and the solution space:

- Problem Analysis : Hypothesis testing is a systematic way to validate assumptions or educated guesses during problem analysis. It allows for a structured investigation into the nature of a problem and its potential root causes. In this process, a null hypothesis and an alternative hypothesis are defined. The null hypothesis generally asserts that no change or effect exists, while the alternative hypothesis posits the opposite. Through controlled experiments, data collection, or statistical analysis, these hypotheses are then tested. For example, if a software company notices a sudden increase in user churn rate, they might hypothesize that the recent update to their application is the root cause. The null hypothesis could be that the update has no effect on churn rate, while the alternative hypothesis would assert that the update affects the churn rate. By analyzing user behavior and feedback before and after the update, and perhaps running A/B tests where one user group has the update and another doesn’t, the company can test these hypotheses. If the null hypothesis is rejected, the company can then focus on identifying specific issues in the update that may be causing the increased churn, thereby moving closer to a solution.

- Solution Implementation : Hypothesis testing can also be a valuable tool during the solution implementation phase, serving as a method to evaluate the effectiveness of proposed remedies. By setting up a specific hypothesis about the expected outcome of a solution, organizations can create targeted metrics and KPIs to measure success. For example, if a retail business is facing low customer retention rates, they might implement a loyalty program as a solution. The hypothesis could be that introducing a loyalty program will increase customer retention by at least 15% within six months. The null hypothesis would state that the loyalty program has no effect on retention rates. To test this, the company can compare retention metrics from before and after the program’s implementation, possibly even setting up control groups for more robust analysis. By applying statistical tests to this data, the company can determine whether there is enough evidence to reject the null hypothesis, thereby gauging the effectiveness of their solution and making data-driven decisions for future actions.


In this post, you learned about hypothesis testing and related topics such as how to formulate the null and alternate hypotheses and how to carry out a hypothesis test. In data science, one reason to understand hypothesis testing is the need to verify the relationship between the dependent (response) and independent (predictor) variables. One would, thus, need to understand the related concepts such as hypothesis formulation into null and alternate hypotheses, level of significance, test statistic calculation, P-value, etc. Given that the relationship between dependent and independent variables is a sort of hypothesis or claim, the null hypothesis could be set as the scenario where there is no relationship between dependent and independent variables.


Ajitesh Kumar


Data analysis: hypothesis testing

Course description


Making decisions about the world based on data requires a process that bridges the gap between unstructured data and the decision. Statistical hypothesis testing helps decision-making by formulating beliefs about the world, including people, organisations or other objects, and formally testing these beliefs.

In this free course, you will study the principles of hypothesis testing, including the specification of significance levels, as well as one-sided and two-sided tests. Finally, you will learn how to perform a hypothesis test of the mean of a variable, as well as the proportion of individuals in a dataset with a certain characteristic.

You will use spreadsheets throughout the course as the central tool used by professionals for simple data management and analysis.

This OpenLearn course is an adapted extract from the Open University course B126 Business data analytics and decision making.

Course learning outcomes

After studying this course, you should be able to:

- understand the principle of hypothesis testing

- understand the idea of alpha in hypothesis testing

- differentiate between one-tailed and two-tailed tests

- understand hypothesis testing of means and proportions

- report the exact p-value of a test.

First Published: 02/01/2024

Updated: 02/01/2024


1.2 - The 7 Step Process of Statistical Hypothesis Testing

We will cover the seven steps one by one.

Step 1: State the Null Hypothesis

The null hypothesis can be thought of as the opposite of the "guess" the researchers made. In the example presented in the previous section, the biologist "guesses" plant height will be different for the various fertilizers. So the null hypothesis would be that there will be no difference among the groups of plants. Specifically, in more statistical language the null for an ANOVA is that the means are the same. We state the null hypothesis as:

\(H_0 \colon \mu_1 = \mu_2 = \cdots = \mu_T\)

for T levels of an experimental treatment.

Step 2: State the Alternative Hypothesis

\(H_A \colon \text{ treatment level means not all equal}\)

The alternative hypothesis is stated in this way so that if the null is rejected, there are many alternative possibilities.

For example, \(\mu_1\ne \mu_2 = \cdots = \mu_T\) is one possibility, as is \(\mu_1=\mu_2\ne\mu_3= \cdots =\mu_T\). Many people make the mistake of stating the alternative hypothesis as \(\mu_1\ne\mu_2\ne\cdots\ne\mu_T\), which says that every mean differs from every other mean. This is a possibility, but only one of many possibilities. A simple way of thinking about this is that at least one mean is different from at least one of the others. To cover all alternative outcomes, we resort to a verbal statement of "not all equal" and then follow up with mean comparisons to find out where differences among means exist. In our example, a possible outcome would be that fertilizer 1 results in plants that are exceptionally tall, but fertilizers 2, 3, and the control group may not differ from one another.

Step 3: Set \(\alpha\)

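If we look at what can happen in a hypothesis test, there are four possible outcomes:

- \(H_0\) is true and we fail to reject it: a correct decision.

- \(H_0\) is true and we reject it: a Type I error, which occurs with probability \(\alpha\).

- \(H_0\) is false and we fail to reject it: a Type II error, which occurs with probability \(\beta\).

- \(H_0\) is false and we reject it: a correct decision.

You should be familiar with Type I and Type II errors from your introductory courses. It is important to note that we want to set \(\alpha\) before the experiment (a priori) because the Type I error is the more grievous error to make. The typical value of \(\alpha\) is 0.05, establishing a 95% confidence level. For this course, we will assume \(\alpha = 0.05\), unless stated otherwise.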

Step 4: Collect Data

Remember the importance of recognizing whether data is collected through an experimental design or observational study.

Step 5: Calculate a test statistic

For categorical treatment level means, we use an F-statistic, named after R.A. Fisher. We will explore the mechanics of computing the F-statistic beginning in Lesson 2. The F-value we get from the data is labeled \(F_{\text{calculated}}\).

Step 6: Construct Acceptance / Rejection regions

As with all other test statistics, a threshold (critical) value of F is established. This F-value can be obtained from statistical tables or software and is referred to as \(F_{\text{critical}}\) or \(F_\alpha\). As a reminder, this critical value is the minimum value of the test statistic (in this case \(F_{\text{calculated}}\)) for us to reject the null.

[Figure: the F-distribution, with \(F_\alpha\) marking the boundary between the acceptance and rejection regions.]

Step 7: Based on Steps 5 and 6, draw a conclusion about \(H_0\)

If \(F_{\text{calculated}}\) is larger than \(F_\alpha\), then you are in the rejection region and you can reject the null hypothesis with \(\left(1-\alpha \right)\) level of confidence.

Note that modern statistical software condenses Steps 6 and 7 by providing a p-value. The p-value here is the probability of getting an \(F_{\text{calculated}}\) at least as large as the one you observe, assuming the null hypothesis is true. If, by chance, \(F_{\text{calculated}} = F_\alpha\), then the p-value would be exactly equal to \(\alpha\). With larger \(F_{\text{calculated}}\) values, we move further into the rejection region and the p-value becomes less than \(\alpha\). So, the decision rule is as follows:

If the p-value obtained from the ANOVA is less than \(\alpha\), then reject \(H_0\) in favor of \(H_A\).
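Steps 5 to 7 can be sketched in Python as follows. This is only an illustration under stated assumptions: the plant heights are invented (a control group and three fertilizers, five plants each), the function name `one_way_anova_f` is hypothetical, and the critical value 3.24 is the tabled \(F_\alpha\) for \(\alpha = 0.05\) with 3 and 16 degrees of freedom. The sketch compares \(F_{\text{calculated}}\) with \(F_\alpha\) rather than computing a p-value.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F_calculated, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Variation of the group means around the grand mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# ASSUMPTION: hypothetical plant heights, not data from the course
control = [20, 21, 19, 22, 20]
fertilizer_1 = [28, 30, 27, 29, 31]
fertilizer_2 = [21, 20, 22, 23, 21]
fertilizer_3 = [22, 21, 20, 23, 22]

f_value, df_between, df_within = one_way_anova_f(
    [control, fertilizer_1, fertilizer_2, fertilizer_3]
)

F_CRITICAL = 3.24  # tabled F for alpha = 0.05 with (3, 16) degrees of freedom
decision = "reject H0" if f_value > F_CRITICAL else "fail to reject H0"
print(f"F = {f_value:.2f} on ({df_between}, {df_within}) df, decision: {decision}")
```

Rejecting \(H_0\) here says only that the means are not all equal; it does not say which treatment differs, which is why mean comparisons follow.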


The Complete Guide: Hypothesis Testing in Excel

In statistics, a hypothesis test is used to test some assumption about a population parameter.

There are many different types of hypothesis tests you can perform depending on the type of data you’re working with and the goal of your analysis.

This tutorial explains how to perform the following types of hypothesis tests in Excel:

- One sample t-test

- Two sample t-test

- Paired samples t-test

- One proportion z-test

- Two proportion z-test

Let’s jump in!

Example 1: One Sample t-test in Excel

A one sample t-test is used to test whether or not the mean of a population is equal to some value.

For example, suppose a botanist wants to know if the mean height of a certain species of plant is equal to 15 inches.

To test this, she collects a random sample of 12 plants and records each of their heights in inches.

She would write the hypotheses for this particular one sample t-test as follows:

- H0: µ = 15

- HA: µ ≠ 15

Refer to this tutorial for a step-by-step explanation of how to perform this hypothesis test in Excel.

Example 2: Two Sample t-test in Excel

A two sample t-test is used to test whether or not the means of two populations are equal.

For example, suppose researchers want to know whether or not two different species of plants have the same mean height.

To test this, they collect a random sample of 20 plants from each species and measure their heights.

The researchers would write the hypotheses for this particular two sample t-test as follows:

- H0: µ1 = µ2

- HA: µ1 ≠ µ2
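Outside Excel, the same test can be sketched in a few lines of Python. The summary statistics below are invented for illustration, the function name `pooled_t_statistic` is hypothetical, and the sketch assumes equal population variances (the pooled form of the test). The critical value 2.024 is the tabled two-sided t value for a 0.05 significance level with 38 degrees of freedom.

```python
import math

def pooled_t_statistic(mean1, sd1, n1, mean2, sd2, n2):
    """Two-sample t statistic and degrees of freedom, assuming equal variances."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean1 - mean2) / standard_error, df

# ASSUMPTION: hypothetical mean heights (inches) and standard deviations
t_stat, df = pooled_t_statistic(mean1=15.2, sd1=1.8, n1=20,
                                mean2=14.1, sd2=2.0, n2=20)

T_CRITICAL = 2.024  # tabled two-sided value for alpha = 0.05, df = 38
decision = "reject H0" if abs(t_stat) > T_CRITICAL else "fail to reject H0"
print(f"t = {t_stat:.3f}, df = {df}, decision: {decision}")
```

With these made-up numbers the difference in means is not large enough relative to the spread, so the null hypothesis is not rejected.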

Example 3: Paired Samples t-test in Excel

A paired samples t-test is used to compare the means of two samples when each observation in one sample can be paired with an observation in the other sample.

For example, suppose we want to know whether a certain study program significantly impacts student performance on a particular exam.

To test this, we have 20 students in a class take a pre-test. Then, we have each of the students participate in the study program for two weeks. Then, the students retake a post-test of similar difficulty.

We would write the hypotheses for this particular paired samples t-test as follows:

- H0: µ_pre = µ_post

- HA: µ_pre ≠ µ_post
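As a sketch of the underlying calculation in Python, a paired samples t-test is a one-sample t-test on the per-student differences. The 20 pre-test and post-test scores below are invented for illustration, and 2.093 is the tabled two-sided critical t value for a 0.05 significance level with 19 degrees of freedom.

```python
import math
import statistics

# ASSUMPTION: hypothetical scores for 20 students, in the same student order
pre = [70, 65, 80, 75, 60, 85, 90, 55, 72, 68,
       77, 83, 64, 71, 79, 66, 88, 73, 69, 76]
post = [73, 70, 79, 79, 66, 87, 90, 62, 75, 72,
        78, 85, 69, 74, 77, 72, 89, 77, 72, 78]

# Work with the per-student differences rather than the two samples separately
diffs = [after - before for before, after in zip(pre, post)]
n = len(diffs)
mean_diff = statistics.mean(diffs)
sd_diff = statistics.stdev(diffs)  # sample standard deviation of the differences
t_stat = mean_diff / (sd_diff / math.sqrt(n))

T_CRITICAL = 2.093  # tabled two-sided value for alpha = 0.05, df = 19
decision = "reject H0" if abs(t_stat) > T_CRITICAL else "fail to reject H0"
print(f"mean difference = {mean_diff:.2f}, t = {t_stat:.3f}, decision: {decision}")
```

Pairing removes the student-to-student variation in ability, which is why the test uses the standard deviation of the differences instead of the standard deviations of the two sets of scores.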

Example 4: One Proportion z-test in Excel

A one proportion z-test is used to compare an observed proportion to a theoretical one.

For example, suppose a phone company claims that 90% of its customers are satisfied with their service.

To test this claim, an independent researcher gathered a simple random sample of 200 customers and asked them if they are satisfied with their service.

- H0: p = 0.90

- HA: p ≠ 0.90
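The calculation behind this test can be sketched in Python with the standard library. The survey result used here (170 of the 200 customers satisfied) is an assumption made up for illustration, and the function name `one_proportion_z_test` is hypothetical.

```python
import math
from statistics import NormalDist

def one_proportion_z_test(successes, n, p0):
    """Return (z, two-sided p-value) for H0: p = p0."""
    p_hat = successes / n
    # The standard error uses the hypothesized proportion p0, not p_hat
    standard_error = math.sqrt(p0 * (1 - p0) / n)
    z = (p_hat - p0) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# ASSUMPTION: 170 satisfied customers out of 200 is a made-up result
z, p_value = one_proportion_z_test(successes=170, n=200, p0=0.90)

alpha = 0.05
decision = "reject H0" if p_value <= alpha else "fail to reject H0"
print(f"z = {z:.3f}, p-value = {p_value:.4f}, decision: {decision}")
```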

Example 5: Two Proportion z-test in Excel

A two proportion z-test is used to test for a difference between two population proportions.

For example, suppose a superintendent of a school district claims that the percentage of students who prefer chocolate milk over regular milk in school cafeterias is the same for school 1 and school 2.

To test this claim, an independent researcher obtains a simple random sample of 100 students from each school and surveys them about their preferences.

- H0: p1 = p2

- HA: p1 ≠ p2
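A minimal Python sketch of this test follows. The survey counts (70 of 100 students at school 1 and 60 of 100 at school 2 preferring chocolate milk) are assumptions invented for illustration, and the function name `two_proportion_z_test` is hypothetical. Under the null hypothesis the two proportions are equal, so the standard error is based on the pooled proportion.

```python
import math
from statistics import NormalDist

def two_proportion_z_test(x1, n1, x2, n2):
    """Return (z, two-sided p-value) for H0: p1 = p2, using the pooled proportion."""
    p1_hat = x1 / n1
    p2_hat = x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1_hat - p2_hat) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# ASSUMPTION: made-up survey counts for the two schools
z, p_value = two_proportion_z_test(x1=70, n1=100, x2=60, n2=100)

alpha = 0.05
decision = "reject H0" if p_value <= alpha else "fail to reject H0"
print(f"z = {z:.3f}, p-value = {p_value:.4f}, decision: {decision}")
```

With these counts a ten-point difference between the schools is not statistically significant at the 0.05 level, because samples of 100 students leave a fairly wide margin of sampling error.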


Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.


- Can J Hosp Pharm

- v.68(4); Jul-Aug 2015

Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study

There are three kinds of lies: lies, damned lies, and statistics. – Mark Twain 1

INTRODUCTION

Statistics represent an essential part of a study because, regardless of the study design, investigators need to summarize the collected information for interpretation and presentation to others. It is therefore important for us to heed Mr Twain’s concern when creating the data analysis plan. In fact, even before data collection begins, we need to have a clear analysis plan that will guide us from the initial stages of summarizing and describing the data through to testing our hypotheses.

The purpose of this article is to help you create a data analysis plan for a quantitative study. For those interested in conducting qualitative research, previous articles in this Research Primer series have provided information on the design and analysis of such studies. 2 , 3 Information in the current article is divided into 3 main sections: an overview of terms and concepts used in data analysis, a review of common methods used to summarize study data, and a process to help identify relevant statistical tests. My intention here is to introduce the main elements of data analysis and provide a place for you to start when planning this part of your study. Biostatistical experts, textbooks, statistical software packages, and other resources can certainly add more breadth and depth to this topic when you need additional information and advice.

TERMS AND CONCEPTS USED IN DATA ANALYSIS

When analyzing information from a quantitative study, we are often dealing with numbers; therefore, it is important to begin with an understanding of the source of the numbers. Let us start with the term variable, which defines a specific item of information collected in a study. Examples of variables include age, sex or gender, ethnicity, exercise frequency, weight, treatment group, and blood glucose. Each variable will have a group of categories, which are referred to as values, to help describe the characteristic of an individual study participant. For example, the variable “sex” would have values of “male” and “female”.

Although variables can be defined or grouped in various ways, I will focus on 2 methods at this introductory stage. First, variables can be defined according to the level of measurement. The categories in a nominal variable are names, for example, male and female for the variable “sex”; white, Aboriginal, black, Latin American, South Asian, and East Asian for the variable “ethnicity”; and intervention and control for the variable “treatment group”. Nominal variables with only 2 categories are also referred to as dichotomous variables because the study group can be divided into 2 subgroups based on information in the variable. For example, a study sample can be split into 2 groups (patients receiving the intervention and controls) using the dichotomous variable “treatment group”. An ordinal variable implies that the categories can be placed in a meaningful order, as would be the case for exercise frequency (never, sometimes, often, or always). Nominal-level and ordinal-level variables are also referred to as categorical variables, because each category in the variable can be completely separated from the others. The categories for an interval variable can be placed in a meaningful order, with the interval between consecutive categories also having meaning. Age, weight, and blood glucose can be considered as interval variables, but also as ratio variables, because the ratio between values has meaning (e.g., a 15-year-old is half the age of a 30-year-old). Interval-level and ratio-level variables are also referred to as continuous variables because of the underlying continuity among categories.

As we progress through the levels of measurement from nominal to ratio variables, we gather more information about the study participant. The amount of information that a variable provides will become important in the analysis stage, because we lose information when variables are reduced or aggregated—a common practice that is not recommended. 4 For example, if age is reduced from a ratio-level variable (measured in years) to an ordinal variable (categories of < 65 and ≥ 65 years) we lose the ability to make comparisons across the entire age range and introduce error into the data analysis. 4

A second method of defining variables is to consider them as either dependent or independent. As the terms imply, the value of a dependent variable depends on the value of other variables, whereas the value of an independent variable does not rely on other variables. In addition, an investigator can influence the value of an independent variable, such as treatment-group assignment. Independent variables are also referred to as predictors because we can use information from these variables to predict the value of a dependent variable. Building on the group of variables listed in the first paragraph of this section, blood glucose could be considered a dependent variable, because its value may depend on values of the independent variables age, sex, ethnicity, exercise frequency, weight, and treatment group.

Statistics are mathematical formulae that are used to organize and interpret the information that is collected through variables. There are 2 general categories of statistics, descriptive and inferential. Descriptive statistics are used to describe the collected information, such as the range of values, their average, and the most common category. Knowledge gained from descriptive statistics helps investigators learn more about the study sample. Inferential statistics are used to make comparisons and draw conclusions from the study data. Knowledge gained from inferential statistics allows investigators to make inferences and generalize beyond their study sample to other groups.

Before we move on to specific descriptive and inferential statistics, there are 2 more definitions to review. Parametric statistics are generally used when values in an interval-level or ratio-level variable are normally distributed (i.e., the entire group of values has a bell-shaped curve when plotted by frequency). These statistics are used because we can define parameters of the data, such as the centre and width of the normally distributed curve. In contrast, interval-level and ratio-level variables with values that are not normally distributed, as well as nominal-level and ordinal-level variables, are generally analyzed using nonparametric statistics.

METHODS FOR SUMMARIZING STUDY DATA: DESCRIPTIVE STATISTICS

The first step in a data analysis plan is to describe the data collected in the study. This can be done using figures to give a visual presentation of the data and statistics to generate numeric descriptions of the data.

Selection of an appropriate figure to represent a particular set of data depends on the measurement level of the variable. Data for nominal-level and ordinal-level variables may be interpreted using a pie graph or bar graph. Both options allow us to examine the relative number of participants within each category (by reporting the percentages within each category), whereas a bar graph can also be used to examine absolute numbers. For example, we could create a pie graph to illustrate the proportions of men and women in a study sample and a bar graph to illustrate the number of people who report exercising at each level of frequency (never, sometimes, often, or always).

Interval-level and ratio-level variables may also be interpreted using a pie graph or bar graph; however, these types of variables often have too many categories for such graphs to provide meaningful information. Instead, these variables may be better interpreted using a histogram. Unlike a bar graph, which displays the frequency for each distinct category, a histogram displays the frequency within a range of continuous categories. Information from this type of figure allows us to determine whether the data are normally distributed. In addition to pie graphs, bar graphs, and histograms, many other types of figures are available for the visual representation of data. Interested readers can find additional types of figures in the books recommended in the “Further Readings” section.

Figures are also useful for visualizing comparisons between variables or between subgroups within a variable (for example, the distribution of blood glucose according to sex). Box plots are useful for summarizing information for a variable that does not follow a normal distribution. The lower and upper limits of the box identify the interquartile range (or 25th and 75th percentiles), while the midline indicates the median value (or 50th percentile). Scatter plots provide information on how the categories for one continuous variable relate to categories in a second variable; they are often helpful in the analysis of correlations.

In addition to using figures to present a visual description of the data, investigators can use statistics to provide a numeric description. Regardless of the measurement level, we can find the mode by identifying the most frequent category within a variable. When summarizing nominal-level and ordinal-level variables, the simplest method is to report the proportion of participants within each category.

The choice of the most appropriate descriptive statistic for interval-level and ratio-level variables will depend on how the values are distributed. If the values are normally distributed, we can summarize the information using the parametric statistics of mean and standard deviation. The mean is the arithmetic average of all values within the variable, and the standard deviation tells us how widely the values are dispersed around the mean. When values of interval-level and ratio-level variables are not normally distributed, or we are summarizing information from an ordinal-level variable, it may be more appropriate to use the nonparametric statistics of median and range. The first step in identifying these descriptive statistics is to arrange study participants according to the variable categories from lowest value to highest value. The range is used to report the lowest and highest values. The median or 50th percentile is located by dividing the number of participants into 2 groups, such that half (50%) of the participants have values above the median and the other half (50%) have values below the median. Similarly, the 25th percentile is the value with 25% of the participants having values below and 75% of the participants having values above, and the 75th percentile is the value with 75% of participants having values below and 25% of participants having values above. Together, the 25th and 75th percentiles define the interquartile range.
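As a brief illustration, these descriptive statistics can be computed with Python's standard `statistics` module. The blood glucose values below are invented for the example, and the quartiles follow the module's default ("exclusive") method, so other software may report slightly different percentiles for the same data.

```python
import statistics

# ASSUMPTION: hypothetical blood glucose values (mmol/L) for 11 participants
glucose = [5.4, 4.8, 6.2, 5.1, 9.1, 5.6, 5.0, 6.8, 5.3, 7.5, 5.9]

# Parametric summary, appropriate when values are roughly normally distributed
mean_value = statistics.mean(glucose)
sd_value = statistics.stdev(glucose)  # sample standard deviation

# Nonparametric summary, appropriate for skewed or ordinal data
median_value = statistics.median(glucose)
q1, q2, q3 = statistics.quantiles(glucose, n=4)  # 25th, 50th, 75th percentiles
value_range = (min(glucose), max(glucose))

print(f"mean = {mean_value:.2f}, SD = {sd_value:.2f}")
print(f"median = {median_value}, interquartile range = {q1} to {q3}")
print(f"range = {value_range[0]} to {value_range[1]}")
```

In this made-up sample a single high value (9.1) pulls the mean above the median, which is the kind of skew that makes the median and interquartile range the more informative summary.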

PROCESS TO IDENTIFY RELEVANT STATISTICAL TESTS: INFERENTIAL STATISTICS

One caveat about the information provided in this section: selecting the most appropriate inferential statistic for a specific study should be a combination of following these suggestions, seeking advice from experts, and discussing with your co-investigators. My intention here is to give you a place to start a conversation with your colleagues about the options available as you develop your data analysis plan.

There are 3 key questions to consider when selecting an appropriate inferential statistic for a study: What is the research question? What is the study design? and What is the level of measurement? It is important for investigators to carefully consider these questions when developing the study protocol and creating the analysis plan. The figures that accompany these questions show decision trees that will help you to narrow down the list of inferential statistics that would be relevant to a particular study. Appendix 1 provides brief definitions of the inferential statistics named in these figures. Additional information, such as the formulae for various inferential statistics, can be obtained from textbooks, statistical software packages, and biostatisticians.

What Is the Research Question?

The first step in identifying relevant inferential statistics for a study is to consider the type of research question being asked. You can find more details about the different types of research questions in a previous article in this Research Primer series that covered questions and hypotheses. 5 A relational question seeks information about the relationship among variables; in this situation, investigators will be interested in determining whether there is an association (Figure 1). A causal question seeks information about the effect of an intervention on an outcome; in this situation, the investigator will be interested in determining whether there is a difference (Figure 2).

Figure 1. Decision tree to identify inferential statistics for an association.

Figure 2. Decision tree to identify inferential statistics for measuring a difference.

What Is the Study Design?

When considering a question of association, investigators will be interested in measuring the relationship between variables ( Figure 1 ). A study designed to determine whether there is consensus among different raters will be measuring agreement. For example, an investigator may be interested in determining whether 2 raters, using the same assessment tool, arrive at the same score. Correlation analyses examine the strength of a relationship or connection between 2 variables, like age and blood glucose. Regression analyses also examine the strength of a relationship or connection; however, in this type of analysis, one variable is considered an outcome (or dependent variable) and the other variable is considered a predictor (or independent variable). Regression analyses often consider the influence of multiple predictors on an outcome at the same time. For example, an investigator may be interested in examining the association between a treatment and blood glucose, while also considering other factors, like age, sex, ethnicity, exercise frequency, and weight.
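To make the distinction between correlation and regression concrete, here is a minimal sketch using only the Python standard library. The age and blood glucose values are hypothetical, and the function names `pearson_r` and `simple_regression` are illustrative rather than taken from any package. It covers a single predictor only; the multivariable case described above would normally be handled with statistical software.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation: strength of the linear relationship between x and y."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def simple_regression(x, y):
    """Least-squares slope and intercept, treating x as predictor and y as outcome."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    intercept = my - slope * mx
    return slope, intercept


# Hypothetical data: age (years) and blood glucose (mmol/L) for 5 participants
age = [30, 40, 50, 60, 70]
glucose = [5.0, 5.2, 5.6, 5.8, 6.4]

r = pearson_r(age, glucose)
slope, intercept = simple_regression(age, glucose)
print(f"r = {r:.3f}; glucose = {intercept:.2f} + {slope:.3f} * age")
```

The correlation coefficient treats the 2 variables symmetrically, whereas the regression line changes if the roles of predictor and outcome are swapped.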

When considering a question of difference, investigators must first determine how many groups they will be comparing. In some cases, investigators may be interested in comparing the characteristic of one group with that of an external reference group. For example, is the mean age of study participants similar to the mean age of all people in the target group? If more than one group is involved, then investigators must also determine whether there is an underlying connection between the sets of values (or samples ) to be compared. Samples are considered independent or unpaired when the information is taken from different groups. For example, we could use an unpaired t test to compare the mean age between 2 independent samples, such as the intervention and control groups in a study. Samples are considered related or paired if the information is taken from the same group of people, for example, measurement of blood glucose at the beginning and end of a study. Because blood glucose is measured in the same people at both time points, we could use a paired t test to determine whether there has been a significant change in blood glucose.
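The difference between the unpaired and paired t tests can be sketched as follows. This example computes only the test statistic and degrees of freedom (the p value would come from a t distribution table or statistical software). The blood glucose values are hypothetical, the function names are illustrative, and the unpaired version assumes equal variances in the 2 groups.

```python
from math import sqrt
from statistics import mean, stdev, variance


def unpaired_t(a, b):
    """Student t statistic for 2 independent samples, using a pooled variance."""
    na, nb = len(a), len(b)
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    t = (mean(a) - mean(b)) / sqrt(pooled * (1 / na + 1 / nb))
    return t, na + nb - 2


def paired_t(first, second):
    """Paired t statistic, computed on the within-person differences."""
    diffs = [s - f for f, s in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1


# Hypothetical blood glucose (mmol/L) in the same 5 people at 2 time points
baseline = [7.0, 8.0, 9.0, 10.0, 11.0]
follow_up = [6.5, 7.4, 8.6, 9.5, 10.5]

t_paired, df_paired = paired_t(baseline, follow_up)

# Shown for contrast only: treating these related samples as independent
# ignores the pairing and is not the appropriate analysis for this design.
t_unpaired, df_unpaired = unpaired_t(follow_up, baseline)

print(f"paired:   t = {t_paired:.2f}, df = {df_paired}")
print(f"unpaired: t = {t_unpaired:.2f}, df = {df_unpaired}")
```

With these numbers, every person's glucose falls by a similar amount, so the paired statistic is far larger in magnitude than the unpaired one. The pairing removes the between-person variation that otherwise hides the change.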

What Is the Level of Measurement?

As described in the first section of this article, variables can be grouped according to the level of measurement (nominal, ordinal, or interval). In most cases, the independent variable in an inferential statistic will be nominal; therefore, investigators need to know the level of measurement for the dependent variable before they can select the relevant inferential statistic. Two exceptions to this consideration are correlation analyses and regression analyses ( Figure 1 ). Because a correlation analysis measures the strength of association between 2 variables, we need to consider the level of measurement for both variables. Regression analyses can consider multiple independent variables, often with a variety of measurement levels. However, for these analyses, investigators still need to consider the level of measurement for the dependent variable.

Selection of inferential statistics to test interval-level variables must include consideration of how the data are distributed. An underlying assumption for parametric tests is that the data approximate a normal distribution. When the data are not normally distributed, information derived from a parametric test may be wrong. 6 When the assumption of normality is violated (for example, when the data are skewed), then investigators should use a nonparametric test. If the data are normally distributed, then investigators can use a parametric test.
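As a rough sketch of how the questions about number of groups, pairing, and distribution combine for an interval-level dependent variable, the helper below maps the answers to a candidate test. It is not a reproduction of Figure 2. The function name is hypothetical, and the Mann-Whitney U and Wilcoxon signed-rank tests are the usual nonparametric counterparts of the t tests but are not defined in this section, so treat those entries as assumptions to confirm with a biostatistician.

```python
def suggest_difference_test(n_groups, paired, normal):
    """Suggest a candidate test for a question of difference.

    n_groups: number of groups (or sets of measurements) being compared
    paired:   True if the samples are related (same participants)
    normal:   True if the data approximate a normal distribution
    """
    if n_groups < 1:
        raise ValueError("n_groups must be at least 1")
    if n_groups == 1:
        # One group compared with a known or hypothesized reference value
        return "1-sample t test" if normal else "Wilcoxon signed-rank test"
    if n_groups == 2:
        if paired:
            return "paired t test" if normal else "Wilcoxon signed-rank test"
        return "independent-samples t test" if normal else "Mann-Whitney U test"
    # 3 or more groups or repeated measurements
    if paired:
        return "repeated-measures ANOVA" if normal else "Friedman ANOVA"
    return "1-way ANOVA" if normal else "Kruskal-Wallis 1-way ANOVA"


# Example: skewed blood glucose values compared across 3 independent groups
print(suggest_difference_test(n_groups=3, paired=False, normal=False))
```

A lookup like this is only a starting point for discussion; it does not replace checking the assumptions of the chosen test.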

ADDITIONAL CONSIDERATIONS

What Is the Level of Significance?

An inferential statistic is used to calculate a p value: the probability of obtaining data at least as extreme as those observed if there were truly no association or difference (that is, if the null hypothesis were true). Investigators can then compare this p value against a prespecified level of significance, which is often chosen to be 0.05. This level of significance means accepting a 1 in 20 chance of declaring an association or difference when none exists, which is conventionally considered an acceptable level of error.
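The comparison of a p value against the level of significance can be sketched in a few lines. This example assumes a test statistic that follows a standard normal distribution (a z statistic) and a 2-sided test; the value 2.10 is hypothetical and the function name is illustrative.

```python
from statistics import NormalDist


def two_sided_p_from_z(z):
    """Probability, under the null hypothesis, of a z statistic at least this extreme."""
    return 2 * (1 - NormalDist().cdf(abs(z)))


alpha = 0.05   # prespecified level of significance
z = 2.10       # hypothetical test statistic from a study

p = two_sided_p_from_z(z)
reject_null = p <= alpha

print(f"p = {p:.4f}; reject null hypothesis at alpha = {alpha}: {reject_null}")
```

The level of significance is fixed before the data are examined; only the p value depends on the observed data.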

What Are the Most Commonly Used Statistics?

In 1983, Emerson and Colditz 7 reported the first review of statistics used in original research articles published in the New England Journal of Medicine . This review of statistics used in the journal was updated in 1989 and 2005, 8 and this type of analysis has been replicated in many other journals. 9 – 13 Collectively, these reviews have identified 2 important observations. First, the overall sophistication of statistical methodology used and reported in studies has grown over time, with survival analyses and multivariable regression analyses becoming much more common. The second observation is that, despite this trend, 1 in 4 articles describe no statistical methods or report only simple descriptive statistics. When inferential statistics are used, the most common are t tests, contingency table tests (for example, χ 2 test and Fisher exact test), and simple correlation and regression analyses. This information is important for educators, investigators, reviewers, and readers because it suggests that a good foundational knowledge of descriptive statistics and common inferential statistics will enable us to correctly evaluate the majority of research articles. 11 – 13 However, to fully take advantage of all research published in high-impact journals, we need to become acquainted with some of the more complex methods, such as multivariable regression analyses. 8 , 13

What Are Some Additional Resources?

As an investigator and Associate Editor with CJHP , I have often relied on the advice of colleagues to help create my own analysis plans and review the plans of others. Biostatisticians have a wealth of knowledge in the field of statistical analysis and can provide advice on the correct selection, application, and interpretation of these methods. Colleagues who have “been there and done that” with their own data analysis plans are also valuable sources of information. Identify these individuals and consult with them early and often as you develop your analysis plan.

Another important resource to consider when creating your analysis plan is textbooks. Numerous statistical textbooks are available, differing in levels of complexity and scope. The titles listed in the “Further Reading” section are just a few suggestions. I encourage interested readers to look through these and other books to find resources that best fit their needs. However, one crucial book that I highly recommend to anyone wanting to be an investigator or peer reviewer is Lang and Secic’s How to Report Statistics in Medicine (see “Further Reading”). As the title implies, this book covers a wide range of statistics used in medical research and provides numerous examples of how to correctly report the results.

CONCLUSIONS

When it comes to creating an analysis plan for your project, I recommend following the sage advice of Douglas Adams in The Hitchhiker’s Guide to the Galaxy : Don’t panic! 14 Begin with simple methods to summarize and visualize your data, then use the key questions and decision trees provided in this article to identify relevant statistical tests. Information in this article will give you and your co-investigators a place to start discussing the elements necessary for developing an analysis plan. But do not stop there! Use advice from biostatisticians and more experienced colleagues, as well as information in textbooks, to help create your analysis plan and choose the most appropriate statistics for your study. Making careful, informed decisions about the statistics to use in your study should reduce the risk of confirming Mr Twain’s concern.

Appendix 1. Glossary of statistical terms (part 1 of 2)

- 1-way ANOVA: Uses 1 variable to define the groups for comparing means. This is similar to the Student t test when comparing the means of 2 groups.

- Kruskal–Wallis 1-way ANOVA: Nonparametric alternative for the 1-way ANOVA. Used to determine the difference in medians between 3 or more groups.

- n -way ANOVA: Uses 2 or more variables to define groups when comparing means. Also called a “between-subjects factorial ANOVA”.

- Repeated-measures ANOVA: A method for analyzing whether the means of 3 or more measures from the same group of participants are different.

- Friedman ANOVA: Nonparametric alternative for the repeated-measures ANOVA. It is often used to compare rankings and preferences that are measured 3 or more times.

- Fisher exact: Variation of chi-square that accounts for cell counts < 5.

- McNemar: Variation of chi-square that tests statistical significance of changes in 2 paired measurements of dichotomous variables.

- Cochran Q: An extension of the McNemar test that provides a method for testing for differences between 3 or more matched sets of frequencies or proportions. Often used as a measure of heterogeneity in meta-analyses.

- 1-sample t test: Used to determine whether the mean of a sample is significantly different from a known or hypothesized value.

- Independent-samples t test (also referred to as the Student t test): Used when the independent variable is a nominal-level variable that identifies 2 groups and the dependent variable is an interval-level variable.

- Paired t test: Used to compare 2 related sets of scores measured in the same participants (e.g., baseline and follow-up blood pressure within a group).

This article is the 12th in the CJHP Research Primer Series, an initiative of the CJHP Editorial Board and the CSHP Research Committee. The planned 2-year series is intended to appeal to relatively inexperienced researchers, with the goal of building research capacity among practising pharmacists. The articles, presenting simple but rigorous guidance to encourage and support novice researchers, are being solicited from authors with appropriate expertise.

Previous articles in this series:

- Bond CM. The research jigsaw: how to get started. Can J Hosp Pharm. 2014;67(1):28–30.

- Tully MP. Research: articulating questions, generating hypotheses, and choosing study designs. Can J Hosp Pharm. 2014;67(1):31–4.

- Loewen P. Ethical issues in pharmacy practice research: an introductory guide. Can J Hosp Pharm. 2014;67(2):133–7.

- Tsuyuki RT. Designing pharmacy practice research trials. Can J Hosp Pharm. 2014;67(3):226–9.

- Bresee LC. An introduction to developing surveys for pharmacy practice research. Can J Hosp Pharm. 2014;67(4):286–91.

- Gamble JM. An introduction to the fundamentals of cohort and case–control studies. Can J Hosp Pharm. 2014;67(5):366–72.

- Austin Z, Sutton J. Qualitative research: getting started. Can J Hosp Pharm. 2014;67(6):436–40.

- Houle S. An introduction to the fundamentals of randomized controlled trials in pharmacy research. Can J Hosp Pharm. 2015;68(1):28–32.

- Charrois TL. Systematic reviews: What do you need to know to get started? Can J Hosp Pharm. 2015;68(2):144–8.

- Sutton J, Austin Z. Qualitative research: data collection, analysis, and management. Can J Hosp Pharm. 2015;68(3):226–31.

- Cadarette SM, Wong L. An introduction to health care administrative data. Can J Hosp Pharm. 2015;68(3):232–7.

Competing interests: None declared.

Further Reading

- Devore J, Peck R. Statistics: the exploration and analysis of data. 7th ed. Boston (MA): Brooks/Cole Cengage Learning; 2012.

- Lang TA, Secic M. How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. 2nd ed. Philadelphia (PA): American College of Physicians; 2006.

- Mendenhall W, Beaver RJ, Beaver BM. Introduction to probability and statistics. 13th ed. Belmont (CA): Brooks/Cole Cengage Learning; 2009.

- Norman GR, Streiner DL. PDQ statistics. 3rd ed. Hamilton (ON): B.C. Decker; 2003.

- Plichta SB, Kelvin E. Munro’s statistical methods for health care research. 6th ed. Philadelphia (PA): Wolters Kluwer Health/Lippincott, Williams & Wilkins; 2013.

How to Analyze Survey Data for Hypothesis Tests

Traditionally, researchers analyze survey data to estimate population parameters. But very similar analytical techniques can also be applied to test hypotheses.

In this lesson, we describe how to analyze survey data to test statistical hypotheses.

The Logic of the Analysis

In a big-picture sense, the analysis of survey sampling data is easy. When you use sample data to test a hypothesis, the analysis includes the same seven steps:

- Estimate a population parameter.

- Estimate population variance.

- Compute standard error.

- Set the significance level.

- Find the critical value (often a z-score or a t-score).

- Define the upper limit of the region of acceptance.

- Define the lower limit of the region of acceptance.

It doesn't matter whether the sampling method is simple random sampling, stratified sampling, or cluster sampling. And it doesn't matter whether the parameter of interest is a mean score, a proportion, or a total score. The analysis of survey sampling data always includes the same seven steps.
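The seven steps can be sketched in code for one simple case. This example assumes simple random sampling from a large population (no finite population correction), a mean as the parameter of interest, a 2-tailed test, and a z-score as the critical value. The sample values, the hypothesized mean of 75, and the function name are all hypothetical. Other sampling methods would change how the variance and standard error are computed in steps 2 and 3, not the overall sequence.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def region_of_acceptance(sample, hypothesized_mean, alpha=0.05):
    """Walk through the seven steps for a mean under simple random sampling."""
    n = len(sample)
    estimate = mean(sample)                        # 1. estimate the population parameter
    var_estimate = variance(sample)                # 2. estimate the population variance
    std_error = sqrt(var_estimate / n)             # 3. compute the standard error
    # 4. the significance level is the alpha argument
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)   # 5. find the critical value (z-score)
    upper = hypothesized_mean + z_crit * std_error  # 6. upper limit of the region
    lower = hypothesized_mean - z_crit * std_error  # 7. lower limit of the region
    return estimate, lower, upper


# Hypothetical survey scores from a simple random sample of 50 respondents
sample = [70, 77, 84] * 16 + [70, 77]

estimate, lower, upper = region_of_acceptance(sample, hypothesized_mean=75)
inside = lower <= estimate <= upper

print(f"estimate = {estimate:.2f}, region of acceptance = [{lower:.2f}, {upper:.2f}]")
print("fail to reject the null hypothesis" if inside else "reject the null hypothesis")
```

If the sample estimate falls outside the region of acceptance, the null hypothesis is rejected at the chosen significance level; otherwise it is not rejected.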