Create Professional Science Figures in Minutes

Join the largest science communication research community.

THOUSANDS OF ICONS

Browse over 50,000 icons and templates from over 30 fields of life science, curated and vetted by industry professionals.

DRAG AND DROP

Simple drag-and-drop functionality enables you to create scientific figures up to 50 times faster.

CUSTOM ICONS

Find icons you need for even the most specialized fields of life science, or we’ll create it in as little as 48 hours ( conditions apply ).

Figure: During HSV infection, memory CD4-T-cells respond to antigens in neural tissue by secreting IFN-γ and loosening tight junctions between endothelial cells, allowing antibodies to cross the blood-brain-barrier.

Ready to get started?

Get in touch

555-555-5555

Limited time offer: 20% off all templates ➞

How to Make Good Figures for Scientific Papers

Creating good figures for scientific publications requires using design best practices to make each figure clearly show the main point of your data story.

This article reviews important design principles that will help you create effective figures. However, if you want step-by-step tutorials on how to create the scientific illustrations and Excel graphs using Adobe Illustrator and PowerPoint, read these articles instead:

- Free Graphical Abstract Templates and Tutorials

- Free Research Poster Templates and Tutorials

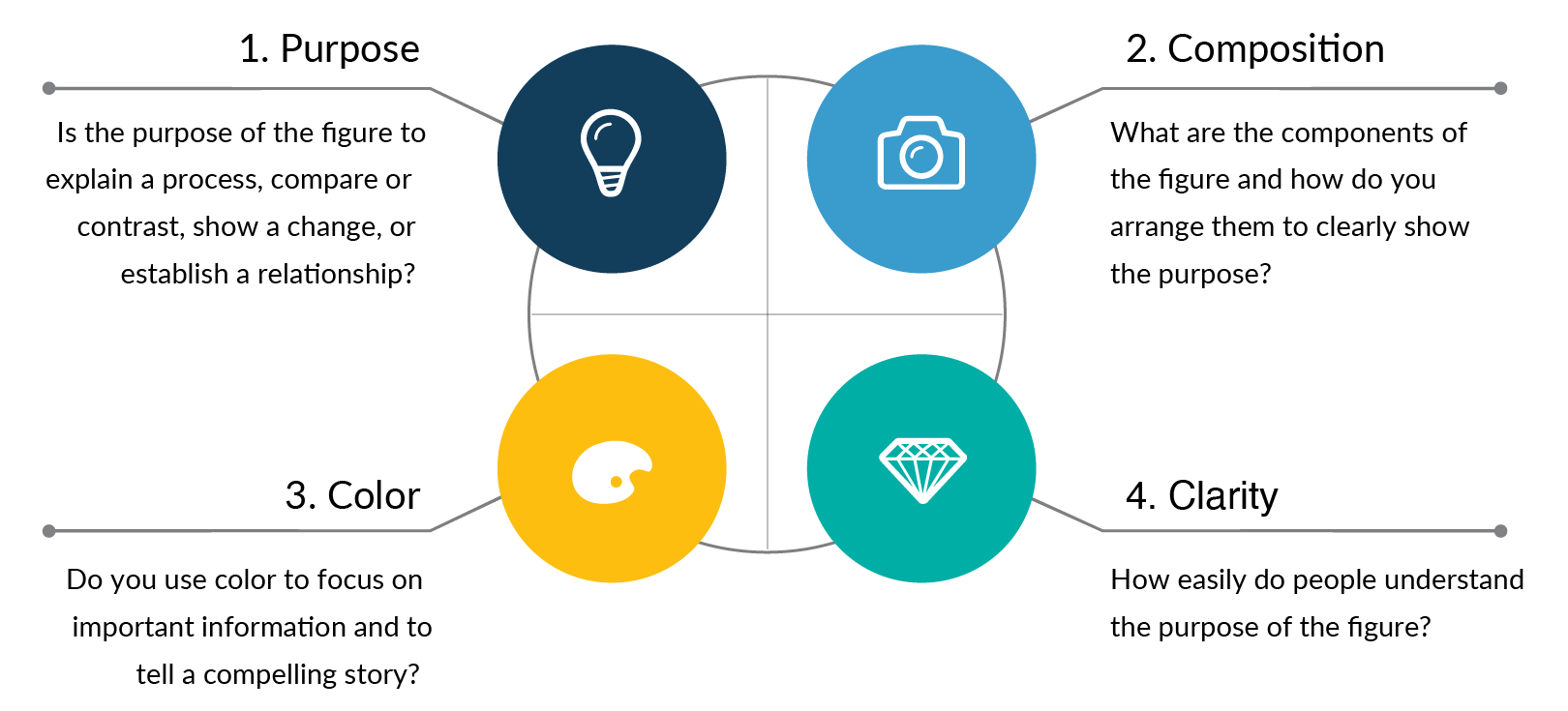

Four Rules to Create High-Quality Figures

The best data visualizations for scientific papers use a combination of good design principles and storytelling that allows the audience to quickly understand the results of a scientific study. Below are four rules that will help you make effective research figures and save you time with the final journal formatting. There are also practical tips on how to find the purpose of your figure and how to apply design best practices to graphs, images, and tables.

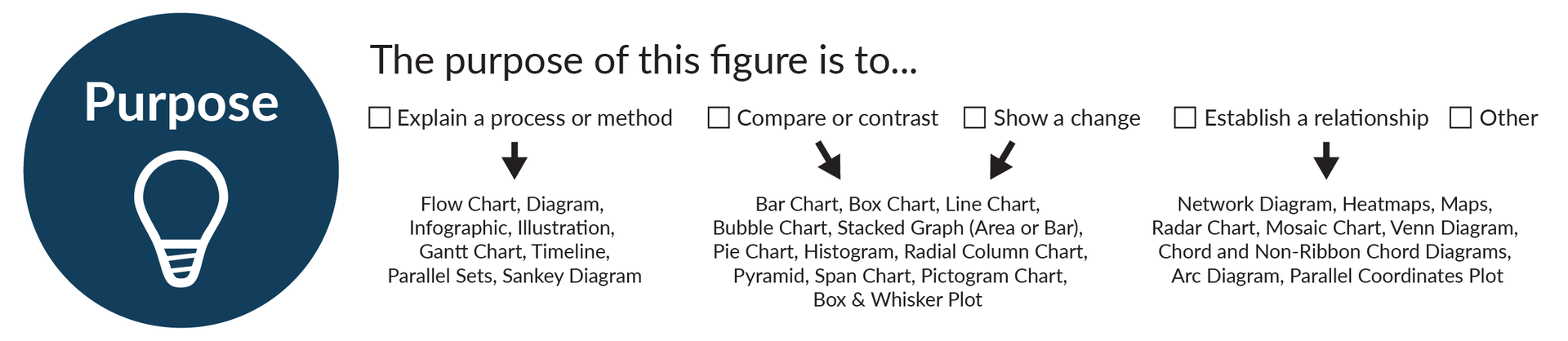

Rule 1: Clearly show the main purpose to your audience

For every graph or figure you create, the first step is to answer the question: what is the purpose of my data? Clearly defining the main purpose of your scientific design is essential so that you can create and format the data in ways that are easy to understand.

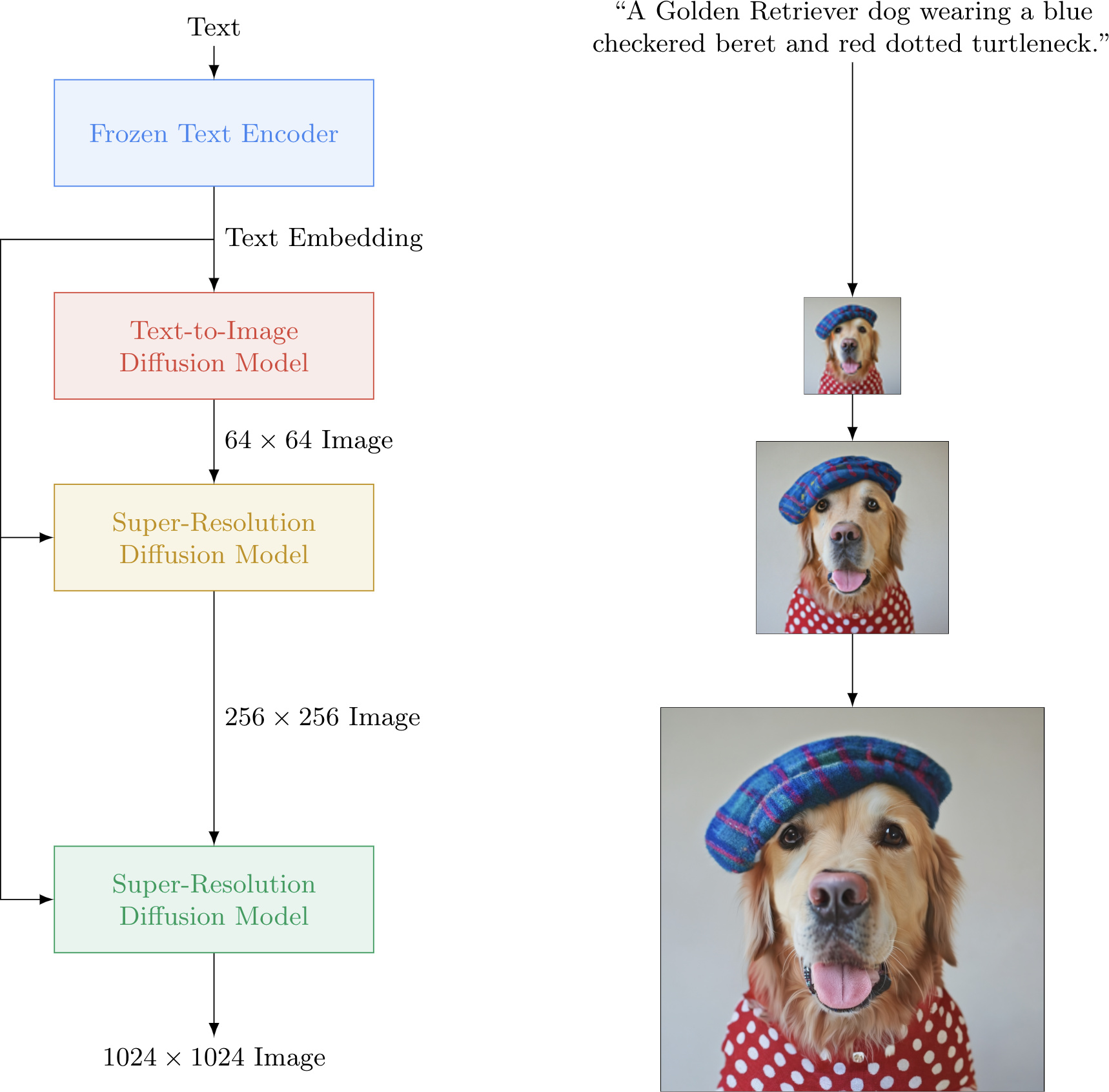

The most common purposes for scientific publications are to explain a process or method, compare or contrast, show a change, or to establish a relationship. Each of these purposes should then lead you to select graph types. For example, if the goal of your figure is to explain a method, you will likely want to choose process-focused graph types such as flow charts, diagrams, infographics, illustrations, gantt charts, timelines, parallel sets, or Sankey diagrams. Below are examples of the most common graph types that you can use for different data purposes. Read more articles to learn how to choose the right data visualizations and data storytelling .

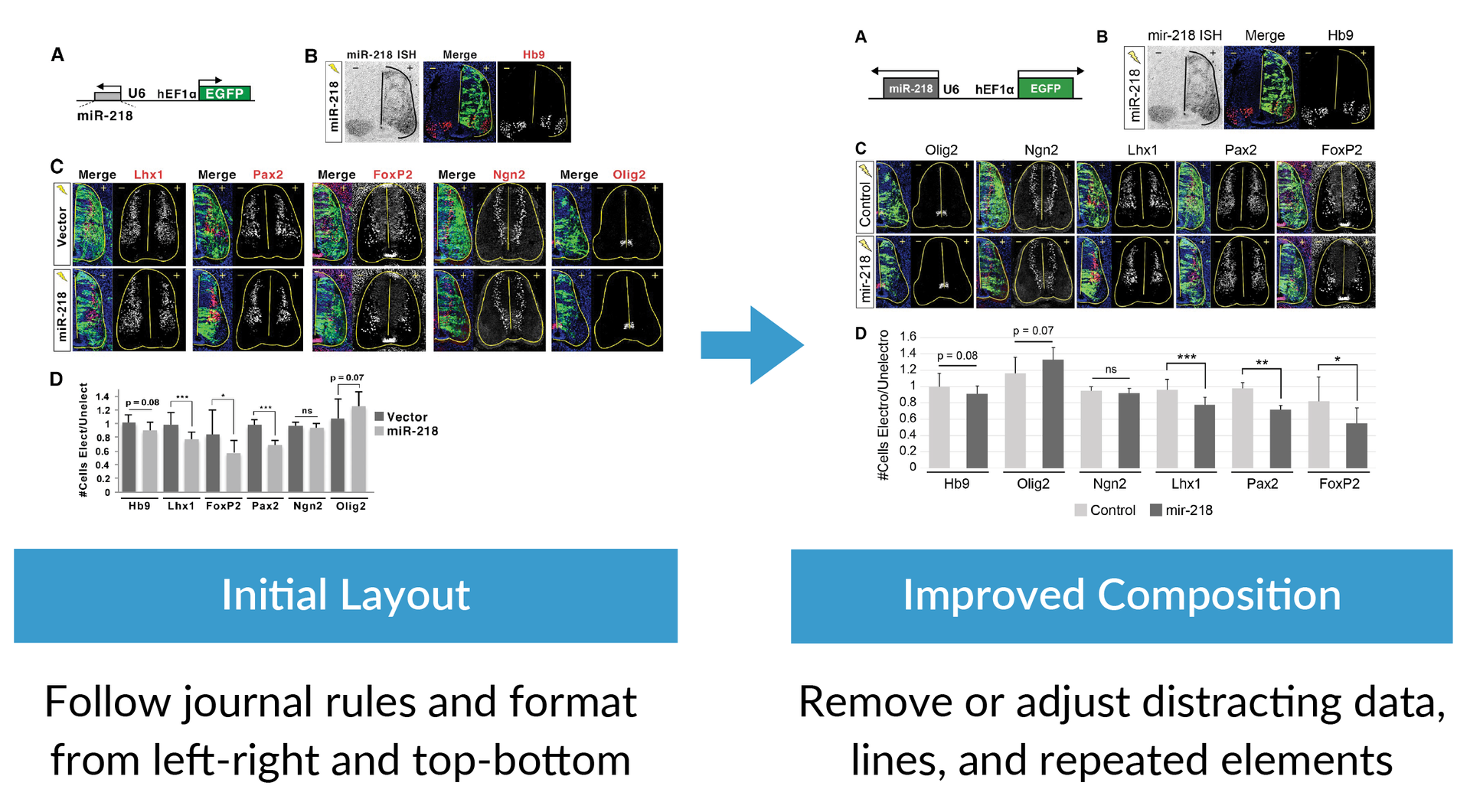

Rule 2: Use composition to simplify the information

After you define the purpose of your graph or figure, the next step is to make sure you follow composition best practices that make the information clear. Composition best practices include following the journal rules and formatting from left to right, top to bottom, or in a circle. You should also review your designs to remove or adjust distracting data, lines, shadows, and repeated elements. Applying good composition means spending time reviewing your layout and simplifying the story using these techniques.

Data Composition Best Practices:

- Design flow should be left to right, top to bottom, or in a circle

- Make sure most important data is the focus of the design

- Remove or adjust excess data and text

- Make text easy to read

- Reduce contrast of bold lines

- Remove repeated elements

- Remove shadows

The example below shows how to design a figure that applies the composition best practices by taking an initial layout of a figure on the left and then use formatting to fill the space, simplify information, and reorder the data to more clearly show the main purpose of the research.

Follow Science Journal Formatting Requirements:

In order to organize the graphs, charts, and figures, you will also need to know the requirements of the scientific journal. You will need to know the limits of the figure sizes, the maximum number of figures, as well as color, fonts, resolution, and file type requirements. You can find different journal requirements by going to the Journal’s homepage and then finding the link to the author’s guidelines from there. If you Google the journal’s formatting requirements, make sure you find the most up-to-date page.

For example, the academic journal Science allows a maximum of 6 figures and requires that they have a width of 55 mm (single column) or 230 mm (double column). In contrast, the journal Nature only allows 3-4 figures or tables with maximum widths of 89 mm (single column) and 183 mm (double column). If you planned to submit your scientific publication to Nature, you would need to carefully plan which graphs and tables will best tell your scientific story within only four figures.

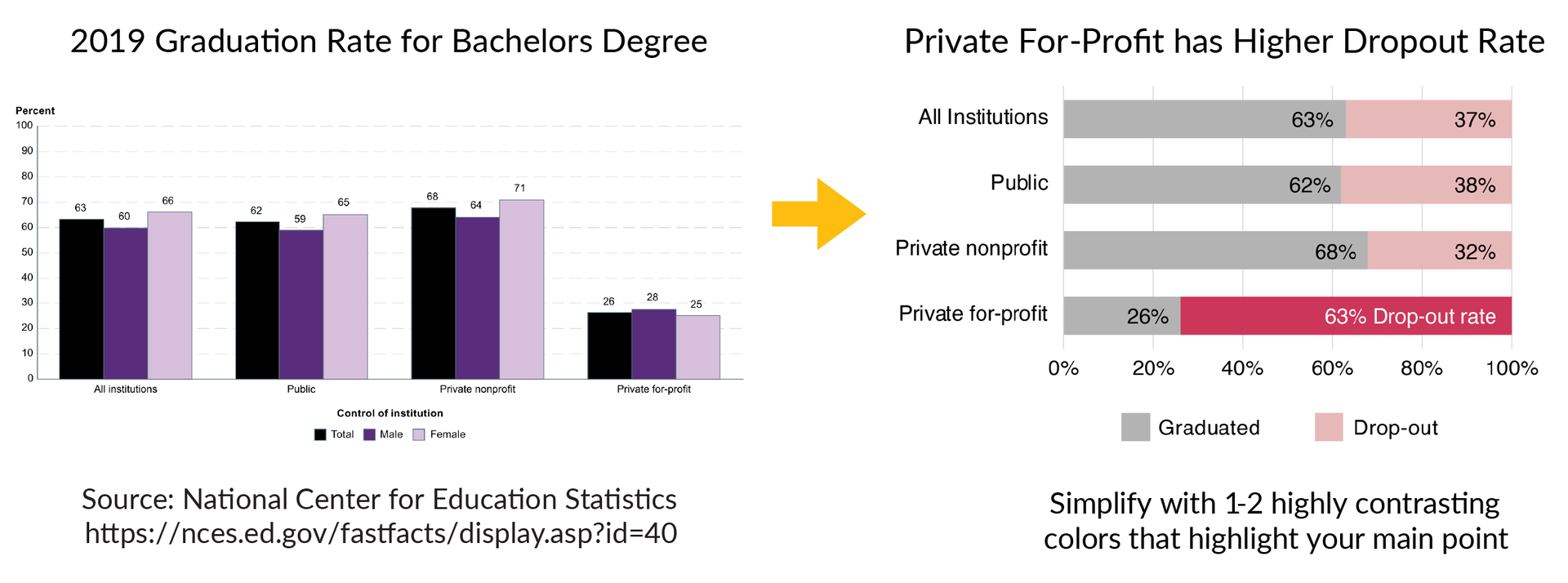

Rule 3: Use colors or grayscale to highlight the purpose

Color is one of the most powerful data storytelling tools. When used properly, color enhances understanding of your graphs and when used poorly, it can be very distracting.

Scientific Color Design Tips:

- If possible, limit your design to 1-2 colors that make the main point of the data stand out from the rest

- Make colors accessible to people with color blindness

The example below shows a graph on the left that has a lot of information about graduation rates for bachelor’s degrees in 2019. The text is small and the color design makes it difficult to understand the main results of the data. One way to improve this figure is to use colors to highlight the main story of the data, which is that private for-profit institutions have a much higher drop-out rate than all other institutions. The figure on the right improves this design using the bold pink color and clearer text to highlight the main point of the dataset.

Rule 4: Refine and repeat until the story is clear

The goal of good figure design is to have your audience clearly understand the main point of your research. That is why the final rule is to spend time refining the figure using the purpose, composition, and color tools so that the final design is clear.

It is normal to make 2-3 versions of a figure before you settle on the final design that works best. I recommend using the three clarity checkpoints below to improve your refinement process.

Design Clarity Checkpoints:

- Checkpoint 1. Does the figure show the overall story or main point when you hide the text? If not, improve the data visualization designs to more clearly show the main purpose.

- Checkpoint 2. Can you remove or adjust unnecessary elements that attract your attention? Remove repetitive elements, bounding boxes, background colors, extra lines, extra colors, repeated text, shadows/shading, either remove or adjust excess data, and consider moving information to supplementary figures.

- Checkpoint 3. Does the color palette enhance or distract from the story? Limit the use of color and pick a color palette that improves audience understanding of the main purpose of the figure. If the color doesn’t serve an obvious purpose, change to grayscale.

Scientific Figure Design Summary

For every scientific publication, follow the four rules of good scientific figure design to help you create effective graphics that engage and impress your audience:

- Clearly show the main purpose to your audience

- Use composition to simplify the information

- Use colors or grayscale to highlight the main points of the figure

- Refine and repeat the process until the story is clear

Related Content:

- Best Color Palettes for Scientific Figures and Data Visualizations

- Graphical Abstract Examples with Free Templates

- Free Research Poster Templates and Tutorials

- BioRender Alternatives: Scientific Illustration Software Comparisons

Create professional science figures with illustration services or use the online courses and templates to quickly learn how to make your own designs.

Interested in free design templates and training.

Explore scientific illustration templates and courses by creating a Simplified Science Publishing Log In. Whether you are new to data visualization design or have some experience, these resources will improve your ability to use both basic and advanced design tools.

Interested in reading more articles on scientific design? Learn more below:

Scientific Presentation Guide: How to Create an Engaging Research Talk

Data Storytelling Techniques: How to Tell a Great Data Story in 4 Steps

Best Science PowerPoint Templates and Slide Design Examples

Content is protected by Copyright license. Website visitors are welcome to share images and articles, however they must include the Simplified Science Publishing URL source link when shared. Thank you!

Online Courses

Stay up-to-date for new simplified science courses, subscribe to our newsletter.

Thank you for signing up!

You have been added to the emailing list and will only recieve updates when there are new courses or templates added to the website.

We use cookies on this site to enhance your user experience and we do not sell data. By using this website, you are giving your consent for us to set cookies: View Privacy Policy

Simplified Science Publishing, LLC

- Aug 16, 2021

Best free and paid software for scientific illustrations

The best things in life come with a free trial.

Posters. Presentations. Grants. Your papers. It’s becoming more and more essential to be able to make scientific illustrations yourself, but which software could you use to make them?

Are there free options? Should you go straight for the high-end subscriptions?

There are a lot of options out there and to make your life easier we compiled this guide.

To start, align yourself with one of these three personas to help you figure out where you might want to try first!

Student, post-doc or lab head?

Persona 1: You’re a grad student. You’ve gotten used to the writing part of academia (as you’d hope). But when it comes down to making a pretty picture for your proposal or presentation, you might be feeling stumped on how to make your figures look professional. You want to start somewhere, but most good software is really expensive (and not within the budget!)

So, a good free software might be your gateway into developing your skills in graphic design. The paid stuff can wait while you learn!

Persona 2: You’re a post doc. You’ve been invited to write an article for a highly prestigious review journal. You reckon this is finally your chance to spell out your name in FULL beyond the et al. AND to be more than just the mysterious 7th author. But the journal is asking for a handful of scientific illustrations as figures. They need high quality ones that’ll sparkle at 300 dpi when they’re printed hot off the press.

Perhaps you could try a free trial of a high-end industry software? Then convince your supervisor that the software is worth fully funding under the lab equipment budget!

Persona 3: You’re a tenured lab head. It’s grant season and don’t want to be rejected this time . You need to make figures to illustrate your point on a 2 page document, but we all know the word count is horridly small. You realise that a good figure speaks volumes - so you try to make them yourself, or end up asking your students to make them for you just before the deadline. Neither you nor they have got a clue what they could use.

Then maybe it’s worth buying a license to a trusty and reliable software for the whole team!

Figured out what you’re after? If not, no worries!

We’ll walk you through an overview of the best free and paid software for making your very own digital scientific illustrations to use anywhere in your research! It’s up to you to try them out to see which fits you best.

We’ve got 6 recommendations (3 free, 3 paid) lined up to get you started.

Let’s have a look, shall we?

Free design software

Recommended for: Persona 1: The Grad Student, and anyone with fundamental IT skills.

Looking to make a figure, but not from scratch? Canva’s got you covered.

Canva’s motto is “ Design for everyone ”. This means that even non-designers can create amazing graphics - including scientific illustrations!

You’ll first need to make an account on Canva, then you can use Canva directly in your browser.

Then you can start straight away with any of their suggested templates, and there’s a lot to choose from in the free version of Canva.

I personally like to use their Poster template, or set my own canvas up at a size I want. Then, it’s all about dragging and dropping free stock images, or “Elements” as Canva calls them, from their menus. You can use any of their provided Elements to compose your picture, and they have plenty of science-themed ones. Here’s a scientific schematic I put together in just a few minutes!

That was really easy!

But note that if you use these Elements, you can’t publish them anywhere that earns you money, so Canva’s more handy for things like presentations. It’s best to have a read of the Canva Terms of Use to be on the safe side.

If you end up loving Canva, you can optionally choose to upgrade to Canva PRO at $18.00 AUD/month (they also have a free 30 day trial of the PRO version!). This gives you access to a lot more Elements and a tonne of nifty features!

If you’re looking to make your own pictures from scratch, read on!

Recommended for: Persona 1: The Grad Student, and Persona 2: The Post Doc.

Looking to try out vector graphics for the first time, without the long-term commitment?

Vectr is completely browser-based and gives you access to the simple tools for making shapes and writing out text. It’s also equipped with basic features like adding drop shadows or making objects transparent.

It’s a great way to start learning how a Pen tool works, one of the most common tools in vector graphic design. Here’s something you can quickly whip up with the tools available to you in Vectr.

Also if you’re looking to upgrade to another vector graphic design software in the future, you can save your Vectr creations as an .svg file to edit elsewhere.

If you’re keen to upgrade your vector game, we’ve got more to discuss.

Recommended for: Everyone!

Welcome to the nitty-gritty of vector graphics, with your new best friend Inkscape. No fancy upgrades, just a full package that’s 100% free .

Inkscape gives you the ability to work with more complex vector shapes to make more detailed illustrations. You now have access to things like adjusting opacity, making 3D shapes in perspective , and a handy function you’ll see in most vector software called “clipping” , where you can combine shapes together to make new shapes.

Inkscape is downloadable for use on both Windows and MacOS. And by now, being able to save as an .svg file is pretty standard - you can take your Inkscape creations wherever you go!

There’s also a large community of Inkscapers out there being one of the more popular free choices. It’s easy enough to find a guide or tutorial to get you started.

Paid design software

Microsoft powerpoint.

Recommended for: Persona 1: The Grad Student

It needs no introductions - it’s the classic, trusty, Microsoft PowerPoint.

The good news is that you likely already own a paid license for this through your institution. Though just in case you don’t, you can purchase it for $160 AUD to keep forever. There’s also a $10 AUD/month plan for the whole Office suite , if you would much rather test out the waters.

PowerPoint is easy to use, intuitive and you’ve likely used it all throughout your education. But did you know that it has fantastic design capabilities? It’s a popular choice for making scientific posters, and we even have free templates just for this .

You’re likely familiar with the Shapes panel, and we’ve discussed previously how you can make just about anything with the right shapes . And, they’re all vector-based!

But did you know about the Icons and SmartArt tools?

The Icons panel is full of symbols and presets that are neatly categorised - and there’s tonnes of science ones. Just like Canva, you can just drag and drop them onto your workspace.

Combine those icons with the ready-made layouts in SmartArt for diagrams and flowcharts.

It’s super easy!

But wait, there’s more. Did you know that PowerPoint could make animated videos ? Surprise your reviewers with an animation for your next graphical abstract!

Hold up, what if you’re looking to upgrade from PowerPoint?

If you’re looking to go pro, we’ve got TWO industry-standard recommendations below.

Affinity Designer

Recommended for: Persona 2: The Post Doc, and Persona 3: The Lab Head

Think you’ve got the hang of the vector tools from Vectr or Inkscape? It’s time to take them to the next level in Affinity Designer.

Affinity Designer is a high-end vector graphics editing software developed by Serif available for MacOS, Windows as well as for iPads for that added portability.

You can access Affinity Designer with a one-off payment of $80 AUD - no subscriptions or anything. It’s incredible value, and there’s even a free trial to get a feel for it .

It features pretty much everything we’ve mentioned so far - a good selection of shapes and the ability to clip them, a fantastic Pen tool , intuitive text formatting. And, so SO much more! You’d just have to try it out!

Definitely, the one thing I particularly love about Affinity Designer is how fluidly it runs as a program, despite being a very intensive graphics machine. It rarely lags! Nobody wants to see program not responding before you’ve saved your work! With Affinity, there’s no worries!

Also, the unique hallmark of Affinity Designer is its handy Persona system. This allows you to preview your art for export on the fly, and is handy for checking whether your work will change depending on RGB/CMYK colour settings , changes in resolution as a raster image, and so much more.

The fewer unexpected surprises during printing the better!

Should you fall in love with Affinity Designer, it also comes as part of a set with Affinity Photo and Publisher for all your photo editing, painting and typesetting needs.

But, there’s one more piece of software we’ve got to show you, and is a top competitor with Affinity Designer.

Let’s roll out the red carpet.

Adobe Illustrator

You’ve reached the Holy Grail, and our favourite vector software here at Animate Your Science, coming straight from the industry giant Adobe .

Welcome to Adobe Illustrator.

Illustrator is an intensive vector graphics editing software package, that if used well, is capable of taking your vector art to new heights. Fully capable of generating crisp 2D art pieces, through to complete 3D renderings - there’s little that Illustrator can’t do. In fact, there’s a million ways to make the same thing in Illustrator.

Here’s something I prepared in Illustrator during my days as a researcher studying malaria parasites.

Adobe can feel overwhelming when you first open it, but there’s ways around that. With Illustrator’s customisable menus, you can help prevent that “ Whoa, this is too complex!” feeling.

You can hide the tools you don’t need, and keep the ones that you’re using to work. This is a mainstay hallmark in Adobe software called Panels - allowing you to fully control the look of your user interface. One day you might be drawing, another day you might be preparing text - there’s different preset Workspaces for different occasions.

And for those of you who loved Canva for its pre-made assets, Adobe still has you covered! With Adobe Stock Assets you have access to a huge collection of licensed vector illustrations and images. Each image comes with its own descriptive license, but chances are you’ll be able to use it anywhere you want! Check out their FAQ for this handy feature.

You can try out Illustrator today through a free trial , and when you’re ready to commit it’s available for $30 AUD/month. It’s available on MacOS, Windows + with added portability on the iPad.

If you’re loving Adobe (like we are!), you can switch to $80 AUD/month if you want access to the entire suite - which includes popular software programs including Photoshop (photo editing and painting), InDesign (document typesetting), Premiere Pro (video editing) and After Effects (animations). That’s fantastic value for such an expansive set of programs!

There’s a lot to tackle with this beast of a program, so we definitely recommend checking out a course on YouTube or SkillShare to learn the basics. Practice makes perfect!

Not looking to make your own illustrations?

You’ve got us at the ays team..

We get it, academic life is hectic and expecting you to design neat graphics might be too much. That’s why we offer design services for busy people like you.

Here at Animate Your Science, we can discuss your ideas and turn them into professional graphics that will turn heads. We’ve got you covered for all your graphical abstract, scientific infographic and scientific poster needs .

Have a custom request? Contact us to find out what we could do for your research.

To keep up to date with our latest tips for merging the worlds of science and graphic design, subscribe to our newsletter !

Until next time!

Dr Juan Miguel Balbin

Dr Tullio Rossi

Related Posts

How to design an effective graphical abstract: the ultimate guide

How to draw your research with simple scientific illustrations

Best examples of scientific posters

- Plasmids Topic Overview

- Plasmids 101

- Molecular Biology Protocols and Tips

- Plasmid Cloning

- Plasmid Elements

- Plasmid Tags

- New Plasmids at Addgene

- Other Plasmid Tools

- CRISPR Topic Overview

- Base Editing

- Cas Proteins

- CRISPR Biosafety

- CRISPR Expression Systems and Delivery Methods

- CRISPR Pooled Libraries

- CRISPR Visualize

- CRISPR gRNAs

- CRISPR Protocols and Tips

- CRISPR Therapeutic Applications

- Other CRISPR Tools

- Viral Vectors Topic Overview

- Viral Vectors 101

- Addgene’s Viral Service

- Adenoviral Vectors

- Chemogenetics

- Cell Tracing

- Neuroscience Biosensors

- Optogenetics

- Retroviral and Lentiviral Vectors

- Viral Vector Protocols and Tips

- Fluorescent Proteins Topic Overview

- Fluorescent Proteins 101

- Fluorescent Biosensors

- Fluorescent Imaging

- Generating Fusions

- Localization with Fluorescent Proteins

- Luminescence

- Non-protein Fluorophores

- Other Fluorescent Protein Tools

- Science Career Topic Overview

- Applying for Jobs

- Conferences

- Early Career Researcher

- Management for Scientists

- Mentoring for Scientists

- Professional development

- Science Career Options

- Download the Science Career Guide

- Microbiology

- Neuroscience

- Plant Biology

- Model Organisms

- Scientific Sharing

- Scientific Publishing

- Science Communication

- Using Addgene's Website

- Addgene News

Early Career Researcher Toolbox: Free Tools for Making Scientific Graphics

By Beth Kenkel

When I started writing for the Addgene blog, I was focused on writing about new scientific techniques and cool plasmids. Creating graphics were usually the last thing I thought about when writing posts. Since then I’ve realized my figures are just as important, if not more important, than my writing. Initially I didn’t have access to professional-grade design software, like Adobe Illustrator, and I didn’t want to pay for these programs either. But with a little Googling and some trial and error, I found some free design software that let me create graphics that better communicated the science in my blog posts. This post highlights several of these free tools which will hopefully also help you communicate your science, whether it’s in presentations, manuscripts, or social media.

If you want to draw your own figures

Google drawings.

Google Drawings is similar to using PowerPoint to draw figures. It’s part of Google Drive so it has a similar interface as Google Docs or other products in the suite. Since it’s web-based, you could access it from anywhere. Its ease of use is one of the reasons why this was the first program I used to create graphics for the Addgene blog. However, it has a limited number of drawing tools, so it’s harder to draw intricate figures, like a brain or a mouse.

Vectr is like a pared down version of Adobe Illustrator. There’s both a web and a desktop version of this software. Vectr has layers, which let you lock and hide individual vectors (shapes defined by 2D points connected by lines and curves) that you’ve drawn. Layers are useful for drawing figures with lots of elements. It took some playing around to figure out all the settings and tool options, but I found Vectr to be fairly intuitive and I was making figures quickly.

Inkscape is the most similar to Adobe Illustrator out of the three options for drawing your own figure discussed in this post. It’s open source and available for desktop use for Windows, Mac OS X, and Linux. Inkscape is versatile and has a wide selection of tools for drawing and editing shapes and like Vectr, Inkscape uses vector graphics. There’s a steep learning curve for Inkscape, but there are lots of resources for learning the software. The Inkscape website has many tutorials and Lynda has a tutorial as well, which you may have access to through your university or your public library.

If you want to use pre-drawn images in your figures

Biorender is like clip art for scientists. The web-based collection of over 20,000 icons is designed by professional medical illustrators with input from life scientists. It’s easy to search the collection and drag and drop an icon onto the workspace. The color and size of icons are adjustable. New icons can be requested, although there’s no guarantee Biorender will create it. Additionally, you can upload your own images to the Biorender workspace. Free Biorender accounts can store 5 workspaces at a time and workspaces are only downloadable in a small file size with a Biorender watermark, which is usually ok for informal settings like lab meeting presentations. You can download larger images without the watermark, which are better suited for publications, if you sign up for a paid account.

I’m proud to announce that @BioRender (a project my team & I have poured our hearts into for 2 yrs) has 200K+ figures by 95K+ scientists 👨🏽🔬👩🔬! We built this so you could stop struggling to make figures in PPT 🔬 Also, the base version is free, forever 💜 https://t.co/KJpyxgxeQt pic.twitter.com/Ho9SQlyUOP — Biotweeps - Maiko Kitaoka (@biotweeps) May 22, 2019

Smart Servier Medical Art

This site has 3,000 free medical images organized into four main categories: anatomy and the human body, cellular biology, medical specialities, and general items. Individual images as well as collections of images are easy to download in a .png file format. Images are under a Creative Commons 3.0 license , which requires users to give appropriate credit, provide a link to the license, and indicate if changes were made to the images. This requirement means the images are probably better suited for presentations and digital articles where it’s easier to give attribution, than for journal publications.

Google Image Search

Google image search is a great tool for searching the entire internet for scientific graphics. The tools settings let you tailor your search to particular types of images. For example, by selecting “Tools” and then clicking the “Usage rights” dropdown menu, you can search for images “Labeled for reuse with modification.” Occasionally Google Image Search results includes images that can’t be reused without attribution, despite filtering for images labeled for reuse with modification. It’s always a good idea to double-check the image’s license information before using it.

Have a tip for using one of the softwares discussed? Or know of a tool or resource not mentioned? Tell us about it in the comments section!

Additional resources on the Addgene blog

- Read our Early Career Researcher Toolbox blog post on social media for scientists

- Find science career blog posts

- Learn about other lab software

Topics: Science Careers , Early Career Researcher

Leave a Comment

Add Comment

Sharing science just got easier... Subscribe to our blog

Follow Addgene on Social

Addgene is a nonprofit plasmid repository.

We store and distribute high-quality plasmids from your colleagues.

- Cookies & Privacy Policy

- Accessibility

- Terms of Use

How to Create Publication-Quality Figures A step-by-step guide (using free software!) Benjamin Nanes

Introduction, data don't lie, follow the formatting rules, transparency, a computer's view of the journal page, raster data vs. vector data, rasterization and resampling, color spaces, hsl, hsv, and hsb, yuv, ycbcr, and ypbpr, indexed, mapped, and named colors, preparing figure components, graphs and charts, exporting vector graphics from r, exporting vector-format charts from any other program, high-bit-depth images, lookup tables, setting the lut range, comparison to photo editing programs, multi-channel images, generating the 8-bit rgb image, ordinary images, figure layout, importing vector files, importing images, clipping masks, calculating scale bars, exporting final figure files, image compression, creating tiff images, creating eps or pdf files, the bottom line, cmyk figures, preparing raster figure components for cmyk, color conversion, author information.

So, after months (years?) of toil in the lab, you're finally ready to share your ground-breaking discovery with the world. You've collected enough data to impress even the harshest reviewers. You've tied it all together in a story so brilliant, it's sure to be one of the most cited papers of all time.

Congratulations!

But before you can submit your magnum opus to Your Favorite Journal , you have one more hurdle to cross. You have to build the figures. And they have to be "publication-quality." Those PowerPoint slides you've been showing at lab meetings? Not going to cut it.

So, what exactly do you need to do for "publication-quality" figures? The journal probably has a long and incomprehensible set of rules. They may suggest software called Photoshop or Illustrator. You may have heard of them. You may be terrified by their price tags.

But here's the good news: It is entirely possible to build publication-quality figures that will satisfy the requirements of most (if not all) journals using only software that is free and open source. This guide describes how to do it. Not only will you save money on software licenses, you'll also be able to set up a workflow that is transparent, maintains the integrity of your data, and is guaranteed to wring every possible picogram of image quality out of the journal's publication format.

Here are the software packages that will make up the core of the figure-building workflow:

R — Charts, graphs, and statistics. A steep learning curve, but absolutely worth the effort. If you're lazy though, the graph-making program that you already use is probably fine.

ImageJ — Prepare your images. Yes, the user interface is a but rough, but this is a much more appropriate tool than Photoshop. For ImageJ bundled with a large collection of useful analysis tools, try the Fiji distribution .

Inkscape — Arrange, crop, and annotate your images; bring in graphs and charts; draw diagrams; and export the final figure in whatever format the journal wants. Illustrator is the non-free alternative. Trying to do this with Photoshop is begging for trouble.

Embed and Crop Images extension for Inkscape and The PDF Shrinker — Control image compression in your final figure files.

The focus on free software is facultative rather than ideological. All of these programs are available for Windows, Mac, and Linux, which is not always the case for commercial software. Furthermore, the fact that they are non-commercial avoids both monetary and bureaucratic hassles, so you can build your figures with the same computer you use to store and analyze your data, rather than relying on shared workstations (keep backups!) . Most importantly, these tools are often better than their commercial alternatives for building figures.

First of all, this guide is not intended to be a commentary on figure design. It's an introduction to the technical issues involved in turning your experimental data into something that can be displayed on a computer monitor, smart-phone, or dead tree while preserving as much information as possible. You will still be able to produce ugly and uninformative figures, even if they are technically perfect.

So, before we dive into the details of the figure-building workflow, let's take a moment to consider what we want to accomplish. Generally speaking, we have four goals: accurately present the data, conform to the journal's formatting requirements, preserve image quality, and maintain transparency.

And neither should your figures, even unintentionally. So it's important that you understand every step that stands between your raw data and the final figure. One way to think of this is that your data undergoes a series of transformations to get from what you measure to what ends up in the journal. For example, you might start with a set of mouse weight measurements. These numbers get 'transformed' into the figure as the vertical position of points on a chart, arranged in such a way that 500g is twice as far from the chart baseline as 250g. Or, a raw immunofluorescence image (a grid of photon counts) gets transformed by the application of a lookup table into a grayscale image. Either way, exactly what each transformation entails should be clear and reproducible. Nothing in the workflow should be a magic "black box."

Following one set of formatting rules shouldn't be too hard, at least when the journal is clear about what it expects, which isn't always the case. But the trick is developing a workflow that is sufficiently flexible to handle a wide variety of formatting rules — 300dpi or 600dpi, Tiff or PostScript, margins or no margins. The general approach should be to push decisions affecting the final figure format as far back in the workflow as possible so that switching does not require rebuilding the entire figure from scratch.

Unfortunately, making sure your figures look just the way you like is one of the most difficult goals of the figure-building process. Why? Because what you give the journal is not the same thing that will end up on the website or in the PDF. Or in print, but who reads print journals these days? The final figure files you hand over to the editor will be further processed — generally through some of those magic "black boxes." Though you can't control journal-induced figure quality loss, you can make sure the files you give them are as high-quality as possible going in.

If Reviewer #3 — or some guy in a bad mood who reads your paper five years after it gets published — doesn't like what he sees, you are going to have to prove that you prepared the figure appropriately. That means the figure-building workflow must be transparent. Every intermediate step from the raw data to the final figure should be saved, and it must be clear how each step is linked. Another reason to avoid black boxes.

This workflow should accomplish each of these goals. That being said, it's not really a matter of follow-the-checklist and get perfect figures. Rather, it's about understanding exactly what you're doing to get your data from its raw form to the (electronic) journal page.

In order to understand how to get data into a presentable form, we need to consider a few details of how visual information gets represented on a computer.

There are two fundamentally different ways that visual information can be described digitally. The first is by dividing an image into a grid, and representing the color of each cell in the grid — called a pixel — with a numeric value. This is raster data , and you're probably already familiar with it. Nearly all digital pictures, from artsy landscapes captured with high-end cameras to snapshots taken by cell phones, are represented as raster data. Raster data is also called bitmap data.

The second way computers can represent images is with a set of instructions. Kind of like "draw a thin dashed red line from point A to point B, then draw a blue circle with radius r centered at point C," but with more computer-readable syntax. This is called vector data, and it's usually used for images that can be decomposed into simple lines, curves, and shapes. For example, the text you're reading right now is represented as a set of curves .

Storing visual information as raster or vector data has an important impact on how that image gets displayed at different sizes. Raster data is resolution dependent . Because there are a finite number of pixels in the image, displaying the image at a particular size results in an image with a particular resolution, usually described as dots per inch (dpi) or pixels per inch (ppi). If a raster image is displayed at too large a size for the number of pixels it contains, the resolution will be too low, and the individual pixels will be easily visible, giving the image a blocky or "pixelated" appearance.

In contrast, vector data is resolution independent . Vector images can be enlarged to any size without appearing pixelated. This is because the drawing instructions that make up the vector image do not depend on the final image size. Given the vector image instruction to draw a curve between two points, the computer will calculate as many intermediate points as are necessary for the curve to appear smooth. In a raster image a curve must be divided into pixels when the image is created, and it isn't easy to add more pixels if the image is enlarged later.

Often, raster images have a specified resolution stored separately from the pixel values (a.k.a. metadata ). This resolution metadata isn't really an integral part of the raster image, though it can be useful for conveying important information, such as the scale factor of a microscope or the physical size at which an image is intended to be printed. Similarly, vector images may use a physical coordinate system, such as inches or centimeters. However, the coordinates can be scaled by multiplication with a constant, so, as with raster images, the image data is independent of the physical units.

So, if vector data is resolution independent, why use raster data at all? It's often a question of efficiency. Vector data is great for visual data that can be broken down into simple shapes and patterns. For something like a graph or a simple line drawing, a vector-based representation is probably going to be higher quality and smaller (in terms of file size) than a raster image. However, as images get more complex, the vector representation becomes progressively less efficient. Think of it this way: As you add more shapes to an image, the number of drawing instructions needed for the vector representation also increases, while the number of pixels in the corresponding raster image can stay the same. At some point, resolution independence is no longer worth the cost in file size and processing time.

There's a second very important reason why raster data may be preferable to vector data. Many images are so complex that the simplest shapes into which they can be divided are, effectively, pixels. Consider a photograph. One could create a vector image based on outlines or simple shapes in the picture, but this would be a cartoon approximation — shading and textural details would be lost. The only way to create a vector image capturing all the data in the photograph is to create many small shapes to represent the smallest details present — pixels.

Another way to think about this is that some visual data is natively raster. In raster images from digital cameras, each pixel corresponds to the signal captured by a single photosite on the detector. (This is literally true for the camera attached to your microscope, but the full story is a bit more complicated for consumer cameras.) The camera output is pixels, not lines and curves, so it makes sense to represent the image with raster, rather than vector data.

At some point, almost all vector data gets converted into raster data through a process called rasterization . Usually this happens just before the image is sent to a display or printer, because these devices are built to display and print pixels. That's why your monitor has a screen resolution, which specifies the pixel dimensions of the display area. Because vector-format images are resolution independent, they can be rasterized onto pixel grids of any size, but once rasterized, the image is then tied to that particular pixel grid. In other words, the rasterized image contains less information than the original vector image — rasterization causes a loss of image quality.

A similar loss of image information can occur when raster images are redrawn onto a new pixel grid. This process, called resampling , almost always results in an image that is lower quality, even if the pixel dimensions of the resampled image are increased. Why? Consider an image that is originally 100px × 100px, but is resampled to 150px × 150px. The problem is that many of the pixels in the new image do not directly correspond to pixels in the old image — they lie somewhere between the locations of the old pixels. We could assign them values based on the average of the neighboring pixels, but this will tend to blur sharp edges. Alternatively, we could just duplicate some of the old pixels, but this will shift boundaries and change shapes. There are fancier algorithms too, but the point is, there is no way to exactly represent the original raster data on the new pixel grid.

The takeaway from all this is that rasterization and resampling are to be avoided whenever possible . And when, as is often the case, rasterization and resampling are required to produce an image with a particular size and resolution, rasterization and resampling should only be done once — and as the very last steps in the workflow. Once vector information has been rasterized and raster images have been resampled, any further manipulation risks unnecessary quality loss.

Whether an image is represented by raster or vector data, there are a variety of ways to store color information. Every unit of the image — pixels in raster images and shapes/lines/curves in vector images — has an associated color value. There isn't any practical way to represent the more or less infinite light wavelengths (and combinations thereof) perceived as different colors in the real world, so in the digital world, we take shortcuts. These shortcuts mean that only a finite, though generally large, number of colors are available. Different shortcuts make available slightly different sets of colors, called color spaces .

More precisely, color spaces are sets of colors, while the types of numerical color descriptions discussed below are color models . Color models are mapped onto color spaces, ideally based on widely agreed upon standards so that a particular color model value actually appears the same in all situations. Of course things are generally more complicated than that. Rarely do different computer monitors, for example, display colors exactly the same way.

The simplest color representation has no color at all, just black, white, and shades of gray. A grayscale color is just a single number. Usually, lower numbers are closer to black and higher numbers are closer to white. The range of possible numbers (shades) is determined by the bit depth , discussed later. Another name for this color model is single-channel , which comes from raster images, where each pixel stores one number per image channel .

Adding actual color means adding more numbers (a.k.a., more channels). The most common system uses three channels, and is named after the colors each of them represents: red , green , and blue . RGB is an additive color model — the desired color is created by adding together different amounts of red, green, and blue light. Red and green make yellow; red and blue make purple; green and blue make aqua; and all three together make white. Computers use RGB color almost exclusively. It's also the color model journals want to see in your final figures, the better for displaying them on readers' digital devices. This workflow builds figures using RGB color.

Another way to add color to an image is to subtract it. In subtractive color models , each channel represents a pigment absorbing a certain color. CMYK color represents a common color printing process, with cyan , magenta , yellow , and black inks (the K stands for "key"). Once upon a time, journals would ask for CMYK figures to facilitate printing, but now, when there is a print edition, the journal's production department usually handles the conversion from RGB to CMYK. If, for some reason, Your Favorite Journal insists on CMYK figures, you'll need to take a look at the appendix, which discusses some possible solutions (none very good, unfortunately). Note that since CMYK color has four channels, a CMYK raster image will be 1/3 larger than the equivalent RGB raster image. The CMYK color space also contains 1/3 more possible unique colors than the RGB color space, although in practice, RGB models usually represent a broader range of perceived colors than CMYK models.

Several related models specify colors not by adding or subtracting primary colors, but with parameters related to color perception. These generally include hue (sort of like wavelength), saturation (the precise definition varies, but some measure of color intensity), and lightness , value , or brightness (different kinds of dark/light scales). You're most likely to encounter one of these models in a color-picker dialog box, since the maps of these spaces tend to be more intuitive than RGB or CMYK. However, the colors are usually mapped directly to an RGB model.

Similar to the HSL family of color models, these models include separate brightness and hue components. The Y channel is called the luminance value, and it is basically the grayscale version of the color. The other two channels are chrominance values, different systems for specifying hue. These color models are associated with old-fashioned analog video (think pre-2009 television) and various video compression formats where some color information is discarded to reduce the video size (loss of chrominance information is less noticeable than loss of luminance information).

If an image contains relatively few colors, it's sometimes possible to save space by indexing them in a color table . Each color in the table can then be identified with a single index value or label, such as "SaddleBrown" , which your browser probably maps to RGB (139,69,19) . Spot colors are named colors used to refer to specific inks for printing rather than for subsetting the RGB color space.

The range of numbers available in a particular channel is determined by the channel's bit depth , named for the number of bits (0s and 1s) used to store each value. Images with higher bit depth can describe finer shades and colors, though at the cost of increased file size. Pixels of a 1-bit single-channel raster image can hold one of two values, 0 or 1, so the image is only black and white. Pixels of an 8-bit image hold values from 0 to 255, so the image can include black, white, and 254 shades of gray in between. Pixels of a 16-bit image hold values from 0 to 65,535. However, the 8-bit image will be eight-times the file size of the 1-bit image, and the 16-bit image will be twice the file size of the 8-bit image, assuming they all have the same pixel dimensions.

Nearly all computer monitors are built to display 3-channel 8-bit images using the RGB color model. That's (2 8 ) 3 ~ 16.77 million possible colors and shades, if you're counting. 8-bit RGB is so deeply ingrained in computer graphics, that you're relatively unlikely to encounter anything else in ordinary computer use, with the exception of 8-bit grayscale or an 8-bit single-channel color table mapped to 8-bit RGB values. 8-bit RGB is sometimes called 24-bit RGB, because 8-bits per channel × 3 channels = 24-bits total per pixel.

When a larger than 8-bit image does get produced — even the sensors in most cheap digital cameras capture images that are 10-bits per channel — it is often automatically down-sampled to 8-bit. This is fine for ordinary photos, but potentially problematic for microscopy images. That fancy camera attached to your microscope probably captures 12- to 16-bit images. One of the major challenges of building figures with these images is creating the necessary 8-bit representations of them without inadvertently hiding important information. Information will inevitably be lost, but it's important that the transformation to 8-bit is fully under your control.

You'll often see 8-bit RGB values in base-16 or hexadecimal notation for compactness. This is usually a string of 6 digits/letters, often preceded by "#" or "0x", with each character pair representing one channel. The letters "a" through "f" are used to represent "digits" 10 through 15. For example "6c" equals (16×6)+12 = 108 in base-10. " #ff9933 " is RGB (255,153,51) .

Now that we've covered the basics of how computers represent visual information, let's move on to the nuts and bolts of building a figure. We'll consider a three-step workflow: preparing individual figure components from your data, combining multiple components together in a figure, and exporting the final figure file in Your Favorite Journal 's preferred format.

Graphs and charts are obvious candidates for vector data. They're easily decomposed into shapes ( bar -chart, dot -plot), and if you have to resize them, you want all those lines and curves to stay sharp and un-pixelated. Even if you will need to submit your final figures as raster images, it makes sense to keep charts as vector drawings as long as possible to avoid quality loss from resampling.

Lots of software packages can be used to draw charts and export them as vector data, but my personal favorite is R . R is a scripting language focused on statistical computations and graphics. It's free, open-source, and has a large variety of add-on packages, including the Bioconductor packages for bioinformatics. Plus, because R is a scripting language, it's easy to customize charts, keep a complete record of how you made them, and automate otherwise repetitive tasks. I even used several R scripts to help build this website, although that's not one of its more common uses.

The downside of R's power and flexibility is a substantial helping of complexity. If you're on a deadline, you might want to skip down to the part about saving vector-format charts from other programs . Know too that the steepness of the learning curve is inversely proportional to your programming experience. That said, the ultimate payoff is well worth the initial effort. There are lots of books and websites about R — UCLA has a very nice introduction — so here we'll restrict our focus to how to take a chart you've created in R and export it in a format that can be placed into your final figures.

This section assumes a basic familiarity with R. If you want to put off learning R until later, skip down to the next section .

In R, objects called devices mediate the translation of graphical commands. Different devices are used to create on-screen displays, vector image files, and raster image files. In an R console, type ?Devices to see a list of what's available. If you don't explicitly start a device, R will start one automatically when it receives a graphics command. The default device is operating system-dependent, but it is usually an on-screen display.

The easiest device to use for exporting charts in vector format is pdf , which, as you might guess, makes PDF files. Other vector-format devices are also possible, including postscript , cairo_ps , svg , cairo_pdf , and win.metafile . They all have their strengths and weaknesses, but I've found that pdf reliably produces PDFs that are both consistently viewable on many computers and easily imported into Inkscape for layout of the final figure.

All you need to do to get PDF files of your figures is to wrap your plotting code in commands to open and close a pdf device:

And that's it. There are just a few bits to keep in mind:

Setting useDingbats = FALSE on the pdf device makes larger files, but it also prevents issues when importing some charts into Inkscape.

By default, pdf measures fonts in points (1/72 in.), but everything else in 1/96 in.

The default color space is RGB. It's possible to create a CMYK-formatted PDF, but the conversion process is not well documented.

The default page size is 7 in. × 7 in. If you need to change this, set width = X, height = Y when you open the device.

If you want to try out a different device, just replace pdf with your device of choice. Keep in mind that some devices produce raster images instead of vector images.

Don't forget to call dev.off() to close the device, or you won't be able to open your PDF.

Not all chart-making programs give you an explicit option to export charts as vector-format files such as PDF, PostScript, or EPS. If one of those options is available, use it (of the three, PDF is usually the best choice for importing into Inkscape for layout of the final figures). If not, printing the chart and setting a PDF maker as the printer will often do the trick. Don't worry if there's more on the page than just your chart, since it will be possible to pull out the chart by itself when you import it into Inkscape. To check if the resulting PDF really does contain vector data (PDFs can also contain raster images), open the file and zoom in as much as you can. If you don't see any pixels, you're all set. This method works for charts created in Excel or PowerPoint — just save the whole spreadsheet or presentation as a PDF.

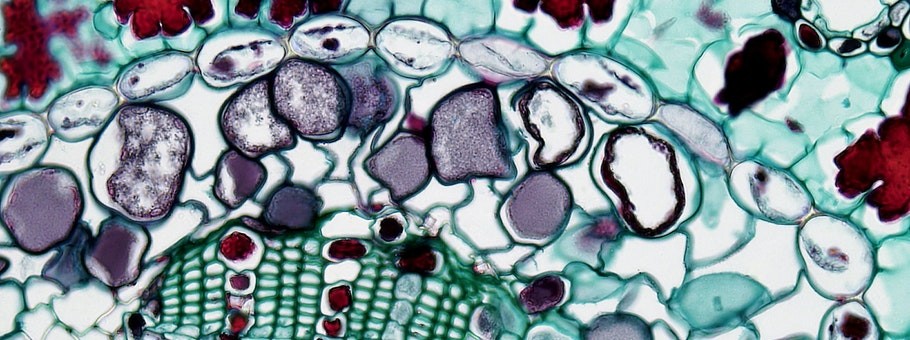

Most measurement tools that produce raster data — from cameras used for immunofluorescence microscopy to X-ray detectors — don't produce images that are directly displayable on a computer screen. They produce high-bit-depth images, and including these images in figures often presents a challenge. On the one hand, the images are natively composed of raster data, so the actual pixel values have important meaning which we want to preserve. However, because they are not directly displayable, they must be downsampled before they can be included in a figure. Our goal is to transform high-resolution, high-bit-depth images to 8-bit RGB in a way that is reproducible and does not hide important information from the original data.

The process of preparing a raster image for display in a figure should be kept completely separate from image analysis and quantification, which should always be based on the original, unaltered image data . Figure preparation should also be kept separate from and downstream of processing steps intended to apply to actual measurements, such as deconvolution algorithms. It is important to save original image data along with a record of every transformation applied to derive the image displayed in a figure.

The most useful program for preparing high-bit-depth images for publication is ImageJ . It can open a very large variety of original high-bit-depth image formats which is both convenient and important for maintaining the integrity of your data. It also has useful analysis tools (many contained in the Fiji distribution), is open-source and easy to extend, and gives you complete control of the transformation to an 8-bit RGB image. While many popular photo editing programs, including Photoshop, can be used to open high-bit-depth images and convert them to 8-bit RGB, none offer the transparency and degree of control provided by ImageJ. That flexibility is important, both for preparing the highest quality presentation of your data and for ensuring that important information from your data is not inadvertently hidden.

The key to creating a figure-ready image from high-bit-depth raster data is a lookup table, or LUT for short. The LUT is a function mapping each potential value in the high-bit-depth image to a corresponding 8-bit RGB value. Suppose, for example, you have a 12-bit image, which can contain pixel values from 0 to 4,095. One LUT might map 0 to RGB (0,0,0) , 4,095 to RGB (255,255,255) , and every value in between 0 and 4,095 to the linearly interpolated RGB value between black and white. This LUT would produce a simple grayscale image. However, it's not the only possible LUT. Another LUT might map values from the 0-1,000 range specifically to the red channel – RGB (0,0,0) to RGB (255,0,0) – and values from the 1,001-4,095 range to grayscale values. The advantage of a LUT such as this is that it increases the ability to discriminate between original data values in the final figure. After all, there is no way to map 4,095 shades of gray onto 255 shades of gray without loosing some detail.

It's worth noting that whenever a high-bit-depth image is displayed on a computer monitor, there is an implicit LUT which automatically generates an 8-bit RGB image. This is because both monitors and the software controlling them are built to display 8-bit RGB values — they don't know what to do with raster data using other bit depths or color models. ImageJ is such a useful program because it deals with the LUT explicitly.

To try out different LUTs in ImageJ, open up an image – stick with a single-channel image for now – and click on the LUT button in the toolbar (alternatively, choose Image > Lookup Tables from the menu). This will show a rather large list ranging from grayscale to primary colors to plenty of more fanciful options. Just stay away from the Apply LUT button, which has the totally unhelpful function of downsampling the image to single-channel 8-bit, rather than what we want to eventually get to, 8-bit RGB. For now, just pick a LUT you like.

If for some reason you're not happy with the available choices, it is possible to create a custom LUT ( Image > Color > Edit LUT... ). Note that LUTs in ImageJ are limited to 256 possible values, with everything else determined by interpolation.

Once you've decided on a LUT, the next step is to determine the range of values on which you want it applied. It will often be the case that the interesting information in your high-bit-depth raster data is concentrated in the middle of range — in other words, very few pixels have values that are very close to zero or very close to the maximum value. Remember that it usually isn't possible to assign a unique color for every value, so when this is the case, it makes sense to focus your attention on the range containing most of the pixels.

To set the LUT range in ImageJ, you can use either of two tools: Image > Adjust > Brightness/Contrast... ( Shift-C ) or Image > Adjust > Window/Level... . The Brightness/Contrast tool lets you set the minimum and maximum pixel values which will be mapped to the extremes of the LUT. Pixels between the minimum and maximum values are assigned RGB values based on the LUT. Any pixels below the minimum or above the maximum don't disappear, but they are forced to the LUT extremes, and won't be distinguishable from each other.

The Brightness/Contrast tool also lets you set properties called "brightness" and "contrast," which are just parameters used to set the minimum and maximum pixel values indirectly. Adjusting the brightness shifts the minimum and maximum together, while adjusting contrast brings the minimum and maximum closer together or farther apart. The Window/Level tool does exactly the same thing — window is the equivalent of contrast, and level is the equivalent of brightness.

Both tools conveniently display a histogram of your image, which is a good quick check to make sure you're not hiding too much of your data below the minimum or above the maximum (to see a larger histogram, click on your image and press H ). Also with both tools, if you want to set values by typing them in rather than with sliders, click on the Set button. Avoid the Apply button, which will downsample your image and prevent further changes.

If you're familiar with photo editing programs, all of this might sound a bit familiar. These programs also let you adjust brightness and contrast, and they do accomplish more or less the same thing. The main difference is that in most photo editing programs, these commands actually transform the underlying image data. In ImageJ, they just alter the mapping function for the LUT, and no actual changes are made to the raster data until you create an 8-bit RGB image. That means that in photo editing programs, adjusting the brightness and contrast causes the loss of image information — i.e. a reduction in image quality. This loss of information will occur during the creation of the RGB image in ImageJ too, but in photo editing programs, each adjustment results in the loss of more information. Unless you are extremely disciplined and make only one adjustment, the quality of the final image will suffer. Since changing the LUT in ImageJ does not affect the original raster data, it's much easier to preserve image quality, even if you want to test out lots of different LUT settings.

Some photo editing programs also allow you to make other adjustments affecting images, such as gamma corrections or curves to transform color values. These adjustments basically just define implicit LUTs — if the input value is plotted on one axis and the output value is plotted on the other, the LUT can be visualized as a line or curve defining how the different input values are mapped to outputs. Gamma is just a way to specify a simple curve, but in principle, all sorts of funny shapes are possible. Many journals explicitly prohibit these types of image adjustments because they can sometimes hide important details from the data. The grayscale and single-color LUTs in ImageJ won't violate these prohibitions — they look like straight lines — but that doesn't mean they can't hide data if you're not careful. Remember that it simply isn't possible to show all the data in a high-bit-depth image, so set the LUT with care.

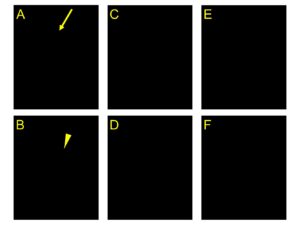

It's quite likely that many of your high-bit-depth images have more than one channel. One particularly common source of multi-channel raster data comes from immunofluorescence microscopy, where signals from multiple fluors are captured and recorded on separate channels. In the final figure, each channel can be presented as a separate RGB image, or multiple channels can be combined together in a single RGB image. Either way, each channel will need its own LUT. Note that if you want to present separate panels of each channel along with a combined "overlay" panel, it's easiest to prepare 8-bit RGB images for each individual channel and a totally separate RGB image for the combined panel, rather than trying to create the combined panel from the individual channel RGB images.

To separate a multi-channel image into several single-channel images in ImageJ, use the Image > Color > Split Channels command. Each resulting single-channel image can then be assigned a LUT and range as described above. To set LUTs and ranges on a multi-channel image, just use the c slider along the bottom of the image to select which channel you want to deal with. Changes from the LUT menu or the Brightness/Contrast tool will apply to that channel. A helpful tool accessible from Images > Color > Channels Tool... or pressing Shift-Z can be used to temporarily hide certain channels — choose Color from the drop-down menu to view only the currently selected channel or Grayscale to view it using a generic grayscale LUT. If you want to combine several single-channel images into a multi-channel image, use the Image > Color > Merge Channels... command.

When setting LUTs for a multi-channel image, keep in mind that the resulting RGB value for any given pixel will be the sum of the RGB values assigned to that pixel by the LUTs for each channel. So, for example, in a two-channel image, if a pixel gets RGB (100,50,0) from one LUT and RGB (50,75,10) from the other LUT, the final value will be RGB (150,125,10) . Remember that the maximum value in 8-bit RGB is 255. If adding values from multiple LUTs exceeds that, the result will still be stuck at 255.

A good way to avoid the possibility of exceeding the maximum 8-bit value of 255 in two- or three- channel images is to make sure that each LUT is orthogonal , or restricted to separate RGB color components. For a three-channel image, this means one LUT assigning shades of red, the second assigning shades of green, and the third assigning shades of blue. For two-channel images there are many possibilities. A good choice is to use shades of green ( RGB (0,255,0) ) and shades of magenta ( RGB (255,0,255) ), since green tends to be perceived as brighter than blue or red individually. It's also helpful for the not-insignificant number of people who are red-green colorblind.

Strictly speaking, LUTs are orthogonal if (1) they can be defined as vectors in the color model coordinate space; and (2) the dot products of each pair of LUTs equal zero. Under this definition, orthogonal LUTs don't necessarily guarantee that final RGB component values larger than 255 can be avoided. Consider three LUTs mapping minimum values to RGB (0,0,0) and maximum values to RGB (0,255,255) , RGB (255,0,255) , and RGB (255,255,0) . These vectors are at right-angles in RGB space, but it's easy to see that sums on any of the RGB coordinates could exceed 255. However, if the LUTs are orthogonal and the sum of their maxima does not exceed 255 on any axis, then any set of LUT coordinates specifies a unique point in RGB space. If these conditions are not met, some RGB colors may result from multiple different combinations of LUT axis coordinates, introducing ambiguity. As you may have guessed, it is not possible to have more than three orthogonal LUTs in an RGB color model.

Once you have assigned LUTs and set their ranges to your satisfaction, generating an 8-bit RGB image is easy. Just choose Image > Type > RGB Color from the menu. This will generate a brand new 8-bit RGB image representation of your original high-bit-depth raster data. If you have a single-channel image and used a grayscale LUT, you can save file space by making a single-channel 8-bit image instead of an RGB image: Image > Type > 8-bit . Be careful with this option though, since it changes the current file rather than creating a new one. Just use Save As instead of Save , and you'll be fine. For both RGB and grayscale images, be sure to avoid quality-degrading image compression when you save the file. Avoid Jpeg at all costs. Both Tiff and PNG are safe choices. Note that there's no need to worry about cropping the image at this stage. It's easier to do that later, when preparing the figure layout.

Be careful not to overwrite your original high-bit-depth image file with the 8-bit RGB image. It's best to think of this as creating a totally new representation of your original data, not applying an adjustment on top of the original image.

If you used a LUT other than grayscale or shades of a simple color, your readers might find it helpful to see a LUT scale bar in the final figure. To make a scale image that can be included in the figure layout, choose File > New > Image... from the menu. Set Type: to 8-bit , Fill With: to Ramp , Width to 256, and height to 1. Clicking Ok will give you a long, thin gradient image. Don't worry that it's only one pixel thick — you'll be able to stretch it later. Select the LUT for which you want to create a scale, set the image type to RGB Color , save the image, and you've got your LUT scale bar.

Some pictures are just pictures — for example, pictures taken with ordinary digital cameras. There's no direct quantitative relationship between the pixel values and your measurements, and the images are 8-bit RGB format to begin with. These images can be included in figures as they are, without the process of setting LUTs. And generally, that's exactly the best thing to do. However, if you decide that the image does need some sort of processing, such as conversion to grayscale to save money on page charges or color correction to compensate for poorly set white-balance, try to do all the adjustment you need in one transformation, since each individual transformation can reduce image quality. Also, keep a copy of the original image file, both because it's the original data, and so if (when) you later decide you don't like the transformed image, you can apply a different transformation to the original image and avoid more quality loss than is absolutely necessary. As with high-bit-depth images, there's no need to worry about cropping ordinary images just yet.

Now that we have the individual components for a figure, it's time to put them all together. The workflow discussed here uses Inkscape , a very flexible (and free) vector graphics editor. The most commonly used non-free alternative to Inkscape is Adobe Illustrator. While it is sometimes possible to create figures using Photoshop, it's generally a bad idea. Why? Because Photoshop is designed to deal primarily with raster data. While it does have limited support for some types of vector data, everything is still tied to a single pixel grid. This means that, unless you are extremely careful, every image component imported into the figure will be resampled, probably multiple times, and most vector components will be rasterized, potentially resulting in significant quality loss. Every manipulation, including scaling, rotating, and even just moving figure components in Photoshop requires resampling. While the changes can be subtle, quality loss from resampling operations is additive — the more operations, the worse the final image will look.

Inkscape, on the other hand, is geared toward vector data and has no document-defined pixel grid. Raster images can be imported into Inkscape as objects that can be positioned, stretched, rotated, and cropped repeatedly, all without resampling. This makes Inkscape a great tool for combining both vector and raster components together in one document — exactly what we need to create a figure layout. There are plenty of general tutorials available on the Inkscape website , so we'll restrict our focus to important tasks related to the figure-building workflow.

Before starting on the figure layout, it's helpful to set a few basic document properties ( File > Document Properties... ). Note that all of these settings can be changed later without affecting your figure:

The Page tab sets page size and default units. Page size is mostly a convenience feature — the page boundaries won't actually show up in the final figure file — but it can be matched to your journal's figure size limits.

Default units sets the units shown on the page rulers as well as the default units choice in most option panels. Inches and centimeters are probably self-explanatory. pt means PostScript points (1/72 in.), and pc means picas (12 points). px isn't really pixels — this isn't a raster document — it means 1/90 in.

The Grid tab can be used to create a grid for aligning objects on the page. Toggle display of the grid by pressing # . Snapping to the grid or other objects can be controlled by the buttons on the snapping toolbar, usually displayed at the right of the window.

The file format used by Inkscape is called SVG, which is short for scalable vector graphics, a perfectly accurate, if generic, description of what the file format contains. SVG is a text-based markup language for representing vector graphics. That means you can open up an SVG file in a text editor and see the individual instructions describing how to draw the image, or even write an SVG file entirely by hand. It also means that developing software to manipulate SVG files is pretty easy. Additionally, SVG is a Web standard , so most modern browsers can be used to view SVG files — many of the figures on this page are SVG. When displayed in the browser, one SVG pixel (1/90 in.) does equal one HTML pixel.

Inkscape is able to import many vector-format file types, but the most reliable is PDF. For some file types, such as PostScript (.ps), EPS, WMF, EMF, and Adobe Illustrator (.ai), Inkscape can correctly recognize most, but not all, features of the file. Inkscape can open SVG files, of course, but SVG files created by other programs sometimes cause problems. PDF import usually goes smoothly, which is all the more useful since many programs can save PDF files. Multi-page PDFs can also be imported, though only one page at a time.

The easiest way to import a vector-format file is just to open it ( File > Open... ). Some imported files can be difficult to work with because their objects are bound together in redundant groups. To undo these, do Edit > Select All followed by a few repetitions of Object > Ungroup . Then just copy the imported vector objects, or a subset of them, and paste them into your figure. Note that the imported objects become part of the figure SVG file. Changing the imported file later won't affect the figure, so if you regenerate a chart PDF, you'll have to delete the old version in the figure SVG and import the chart PDF again.

The upside to having the imported vector data included as objects in the SVG file is that they're completely editable. That means it's possible to change things like fill colors and line widths, which can go a long way to creating a unified look for your figures, even if you're including charts created in several different programs. Editing imported text, however, may not be possible, especially if the imported file used a font which is not available on your computer.

To import an image file into your figure, choose File > Import... from the menu, or just drag in the file from a file manager. This should be either an 8-bit grayscale image or an 8-bit RGB image. Inkscape will let you choose whether to embed the image or to link it. Selecting embed will write the actual image data into the SVG file. On the other hand, selecting link will store only a reference to the location of the image file on your computer. Linking the image is a better option for two reasons. First, it will keep your SVG file nice and small, even if it contains many large images. Second, if the linked image is changed — if, for example, you go back and generate a new 8-bit RGB file using different LUTs — the changes are automatically reflected in the SVG. The downside is that if the location of the image file is changed, the link will need to be updated (which can be done by right-clicking on the image and selecting Image Properties ).

When first imported, the image is likely to be quite large, since Inkscape will size the image to be 90dpi by default. The image can be scaled to a more appropriate size, of course, though take care not to inadvertently scale the width and height separately. Some journals have rules stipulating a minimum resolution for images. To calculate the resolution of an image within the figure, just divide the width or height of the image in pixels (the real pixels in the raster image, not Inkscape "pixels" – opening the image in ImageJ is a good way to get the dimensions) by the width or height of the image in Inkscape. Alternatively, if you've scaled the image by a certain percentage after importing it, divide 9,000 by that percentage to get the resulting resolution.

To crop an image (or any object) in Inkscape, add a clipping mask , which is any other path or shape used to define the displayable boundaries of the image. The clipping mask just hides the parts of the image outside its boundaries — it won't actually remove any data. So if you decide you want to go back and change how you've cropped an image, it's easy to do so.

To create a clipping mask, first draw a shape to define the clipping mask's boundaries. A rectangle is usually most convenient, but any closed path will do. Position the shape on top of the image that should be cropped. Don't worry about the color and line style of the shape — it will be made invisible. Then select both the image and the clip path (hold Shift and click on both), right-click on the path, and choose Set Clip from the menu. The parts of the image outside the path should disappear. To remove a clipping mask from and image, just right-click on it and choose Release Clip from the menu.

Use the widget below to calculate scale bar lengths for a microscopy image. Use the width or height of the entire image before the addition of a clipping path. The scale factor will depend on your microscope, objective, camera, as well as any post-acquisition processing, such as deconvolution. Once you have determined the appropriate size for the scale bar, draw a horizontal line starting at the left edge of the page — enable snapping to the page boundaries, use the Beizer curve tool ( Shift-F6 ), and hold Ctrl to keep the line straight. Then switch to the Edit paths by nodes tool ( F2 ) and select the node away from the page boundary. Move this node to the correct position by entering the appropriate bar size in the X position field in the toolbar at the top of the screen. Be sure that the units drop-down box is set correctly. Now the line will be exactly the right length for a scale bar, and it can be styled (thickness, color, etc.) and positioned however you like.