Problem-Solving Method in Teaching

The problem-solving method is a teaching strategy designed to help students develop critical thinking and problem-solving skills. It presents students with real-world problems and challenges that require them to apply their knowledge, skills, and creativity to find solutions. This method encourages active learning, promotes collaboration, and allows students to take ownership of their learning.


Definition of Problem-Solving Method

Problem-solving is a process of identifying, analyzing, and resolving problems. The problem-solving method in teaching involves providing students with real-world problems that they must solve through collaboration and critical thinking. This method encourages students to apply their knowledge and creativity to develop solutions that are effective and practical.

Meaning of Problem-Solving Method

Different scholars have defined problem-solving as follows:

Woodworth and Marquis (1948): Problem-solving behavior occurs in novel or difficult situations in which a solution is not obtainable by the habitual methods of applying concepts and principles derived from past experience in very similar situations.

Skinner (1968): Problem-solving is a process of overcoming difficulties that appear to interfere with the attainment of a goal. It is the procedure of making adjustments in spite of interference.

Benefits of Problem-Solving Method

The problem-solving method has several benefits for both students and teachers. These benefits include:

- Encourages active learning: The problem-solving method encourages students to actively participate in their own learning by engaging them in real-world problems that require critical thinking and collaboration.

- Promotes collaboration: Problem-solving requires students to work together to find solutions. This promotes teamwork, communication, and cooperation.

- Builds critical thinking skills: The problem-solving method helps students develop critical thinking skills by providing them with opportunities to analyze and evaluate problems.

- Increases motivation: When students are engaged in solving real-world problems, they are more motivated to learn and apply their knowledge.

- Enhances creativity: The problem-solving method encourages students to be creative in finding solutions to problems.

Steps in Problem-Solving Method

The problem-solving method involves several steps that teachers can use to guide their students. These steps include:

- Identifying the problem: The first step in problem-solving is identifying the problem that needs to be solved. Teachers can present students with a real-world problem or challenge that requires critical thinking and collaboration.

- Analyzing the problem: Once the problem is identified, students should analyze it to determine its scope and underlying causes.

- Generating solutions: After analyzing the problem, students should generate possible solutions. This step requires creativity and critical thinking.

- Evaluating solutions: The next step is to evaluate each solution based on its effectiveness and practicality.

- Selecting the best solution: Students then select the best solution and implement it.

Verification of the concluded solution or Hypothesis

The solution arrived at, or the conclusion drawn, must be further verified by applying it to other, similar problems. Only if the derived solution also helps solve these problems is one justified in accepting the finding. The verified solution then becomes a useful product of the problem-solving process that can be applied to further problems. Used in this way, the steps above help foster creative thinking in the individual.
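To make the verification step concrete, here is a small classroom-style illustration of our own (it is not from the source): a student notices that 1 = 1, 1 + 3 = 4 and 1 + 3 + 5 = 9 and forms a hypothesis about the sum of the first n odd numbers. The sketch checks two candidate hypotheses, both of which fit those first three cases, against further similar cases. The function names are hypothetical, and passing a finite set of checks only supports a hypothesis; it does not prove it.

```python
def sum_of_first_odds(n: int) -> int:
    """Solve the problem directly: 1 + 3 + ... + (2n - 1)."""
    return sum(2 * k - 1 for k in range(1, n + 1))


def hypothesis_a(n: int) -> int:
    """Candidate rule A: the sum is n squared."""
    return n * n


def hypothesis_b(n: int) -> int:
    """Candidate rule B: also matches n = 1, 2, 3, because the extra term is 0 there."""
    return n * n + (n - 1) * (n - 2) * (n - 3)


def failures(hypothesis, cases) -> list[int]:
    """Verification: every case where the hypothesis disagrees with the direct answer."""
    return [n for n in cases if hypothesis(n) != sum_of_first_odds(n)]


# Both rules fit the cases the student started from (n = 1, 2, 3),
# but only rule A survives when applied to new, similar problems.
new_cases = range(4, 21)
print("A fails for:", failures(hypothesis_a, new_cases))             # A fails for: []
print("B first fails at n =", failures(hypothesis_b, new_cases)[0])  # B first fails at n = 4
```

Rule B shows why the source insists on checking the solution against other problems: a hypothesis can fit every case it was built from and still be wrong.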

The problem-solving method is an effective teaching strategy that promotes critical thinking, creativity, and collaboration. It provides students with real-world problems that require them to apply their knowledge and skills to find solutions. By using the problem-solving method, teachers can help their students develop the skills they need to succeed in school and in life.



Principles Of Teaching MCQs Quiz With Answers

Are you ready to take the Principles of Teaching MCQs quiz? This quiz lets you test and extend your knowledge of the principles of teaching. Do you understand them well enough to score 80 percent or above? Let's find out. It is not only a test but also a way to deepen your understanding of the concepts. Prepare yourself, and let's go!

The class of IV-kalikasan is tasked to analyze the population of the different cities and municipalities of the National Capital Region over the last five years. How can they best present their analysis?

By means of tables

By looking for a pattern

By means of a graph

By guessing and checking


In Math, Teacher G presents various examples of plane figures to her class. Afterward, she asks the students to give the definition of each. What method did she use?

Teaching tinikling to I-Maliksi becomes possible through the use of:

Inductive method

Expository method

Demonstration method

Laboratory method

What is the implication of using a method that focuses on the why rather than how?

There is one best method.

A typical one will be good for any subject.

These methods should be standardized for different subjects.

Teaching methods should favor inquiry and problem solving.

Which of the following characterizes a well-motivated lesson?

The class is quiet.

The children have something to do.

The teacher can leave the pupils to attend to some activities.

There are varied procedures and activities undertaken by the pupils.

To ensure that the lesson goes smoothly, Teacher A lists the steps she will undertake together with those of her students. This practice relates to:

Teaching style

Teaching method

Teaching strategy

Teaching Technique

The teaching strategy that makes use of the old "each-one-teach-one" concept of the sixties is similar to:

Peer learning

Independent learning

Partner learning

Cooperative learning

Which of the following is NOT true?

The lesson should be in a constant state of revision.

A good daily lesson plan ensures a better discussion.

Students should never see a teacher using a lesson plan.

All teachers, regardless of their experience, should have a daily lesson plan.

The class of Grade 6-Einstein is scheduled to perform an experiment that day. However, the chemicals are insufficient. What method may then be used?

Demonstration

Pictures, models, and the like arouse students' interest in the day's topic. In what part of the lesson should the given materials be presented?

Initiating activities

Culminating activities

Evaluation activities

Developmental activities

Quiz Review Timeline


- Mar 22, 2023 Quiz Edited by ProProfs Editorial Team

- Sep 06, 2017 Quiz Created by Eldee Balolong


Problem-Solving Method of Teaching: All You Need to Know

Ever wondered about the problem-solving method of teaching? We’ve got you covered, from its core principles to practical tips, benefits, and real-world examples.

The problem-solving method of teaching is a student-centered approach to learning that focuses on developing students’ problem-solving skills. In this method, students are presented with real-world problems to solve, and they are encouraged to use their own knowledge and skills to come up with solutions. The teacher acts as a facilitator, providing guidance and support as needed, but ultimately the students are responsible for finding their own solutions.


5 Most Important Benefits of Problem-Solving Method of Teaching

This approach primarily helps students develop critical thinking skills and the ability to apply what they learn to real-world situations. It also promotes independence and self-confidence in problem-solving.

The problem-solving method of teaching has a number of benefits:

1. Enhances critical thinking: By presenting students with real-world problems to solve, the problem-solving method of teaching forces them to think critically about the situation and to come up with their own solutions. This process helps students to develop their critical thinking skills, which are essential for success in school and in life.

2. Fosters creativity: The problem-solving method of teaching encourages students to be creative in their approach to solving problems. There is often no one right answer to a problem, so students are free to come up with their own unique solutions. This process helps students to develop their creativity, which is an important skill in all areas of life.

3. Encourages real-world application: The problem-solving method of teaching helps students learn how to apply their knowledge to real-world situations. By solving real-world problems, students are able to see how their knowledge is relevant to their lives and to the world around them. This helps students to become more motivated and engaged learners.

4. Builds student confidence: When students are able to successfully solve problems, they gain confidence in their abilities. This confidence is essential for success in all areas of life, both academic and personal.

5. Promotes collaborative learning: The problem-solving method of teaching often involves students working together to solve problems. This collaborative learning process helps students to develop their teamwork skills and to learn from each other.

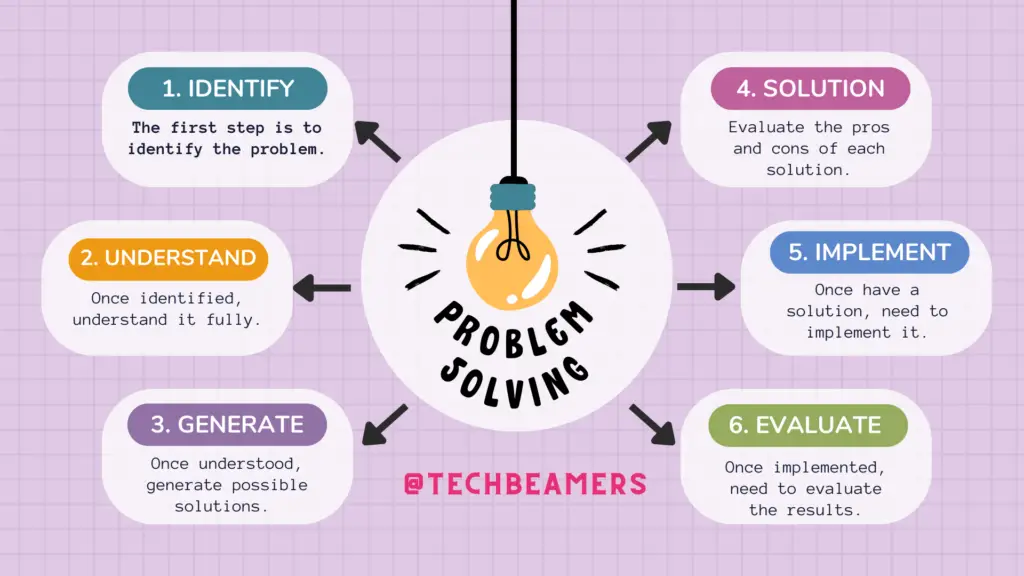

Know 6 Steps in the Problem-Solving Method of Teaching


The problem-solving method of teaching typically involves the following steps:

- Identifying the problem. The first step is to identify the problem that students will be working on. This can be done by presenting students with a real-world problem, or by asking them to come up with their own problems.

- Understanding the problem. Once students have identified the problem, they need to understand it fully. This may involve breaking the problem down into smaller parts or gathering more information about the problem.

- Generating solutions. Once students understand the problem, they need to generate possible solutions. This can be done by brainstorming, or by using problem-solving techniques such as root cause analysis or a decision matrix.

- Evaluating solutions. Students need to evaluate the pros and cons of each solution before choosing one to implement.

- Implementing the solution. Once students have chosen a solution, they need to implement it. This may involve taking action or developing a plan.

- Evaluating the results. Once students have implemented the solution, they need to evaluate the results to see whether it was successful. If it was not, students may need to return to the third step, generating solutions, and come up with new ones.
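The cycle above, including the loop back to generating solutions, can be sketched in a few lines. This is a minimal illustration under stated assumptions: the criteria, weights, 1-to-5 ratings and the cafeteria-waste scenario are all hypothetical, the decision matrix is taken to be a simple weighted-sum matrix, and the `worked` callback stands in for the class actually implementing a solution and reviewing the results.

```python
from typing import Callable, Iterable, Optional

# Hypothetical criteria the class agrees on, with weights that sum to 1.0.
WEIGHTS = {"effectiveness": 0.5, "cost": 0.3, "ease": 0.2}

Ratings = dict[str, int]  # criterion -> rating from 1 (poor) to 5 (excellent)


def matrix_score(ratings: Ratings) -> float:
    """Decision-matrix score: the weighted sum of one solution's ratings."""
    return sum(weight * ratings[criterion] for criterion, weight in WEIGHTS.items())


def choose(candidates: dict[str, Ratings]) -> str:
    """Evaluating solutions: pick the candidate with the highest weighted score."""
    return max(candidates, key=lambda name: matrix_score(candidates[name]))


def problem_solving_cycle(
    rounds: Iterable[dict[str, Ratings]],
    worked: Callable[[str], bool],
) -> Optional[str]:
    """Generate, evaluate, implement, review; go back to generating if the result fails."""
    for candidates in rounds:          # each round is one pass of "generating solutions"
        choice = choose(candidates)    # "evaluating solutions" with the decision matrix
        if worked(choice):             # "implementing" it and "evaluating the results"
            return choice
    return None                        # every round's best option failed in practice


# Hypothetical problem: reducing food waste in the school cafeteria.
round_1 = {
    "smaller portions": {"effectiveness": 4, "cost": 5, "ease": 4},  # score 4.3
    "compost bins": {"effectiveness": 3, "cost": 2, "ease": 3},      # score 2.7
}
round_2 = {
    "share table": {"effectiveness": 3, "cost": 5, "ease": 5},       # score 4.0
    "waste chart": {"effectiveness": 4, "cost": 4, "ease": 3},       # score 3.8
}

# Suppose the class finds that smaller portions did not reduce waste.
result = problem_solving_cycle([round_1, round_2], worked=lambda s: s != "smaller portions")
print(result)  # share table
```

In a real class the second round would only be brainstormed after the first solution failed; listing both rounds up front just keeps the sketch short.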

Find Out Examples of the Problem-Solving Method of Teaching

Here are a few examples of how the problem-solving method of teaching can be used in different subjects:

- Math: Students could be presented with a real-world problem such as budgeting for a family or designing a new product. Students would then need to use their math skills to solve the problem.

- Science: Students could be presented with a science experiment, or asked to research a scientific topic and come up with a solution to a problem. Students would then need to use their science knowledge and skills to solve the problem.

- Social studies: Students could be presented with a historical event or current social issue, and asked to come up with a solution. Students would then need to use their social studies knowledge and skills to solve the problem.

5 How Tos For Using The Problem-Solving Method Of Teaching

Here are a few tips for using the problem-solving method of teaching effectively:

- Choose problems that are relevant to students’ lives and interests.

- Make sure that the problems are challenging but achievable.

- Provide students with the resources they need to solve the problems, such as books, websites, or experts.

- Encourage students to work collaboratively and to share their ideas.

- Be patient and supportive. Problem-solving can be a challenging process, but it is also a rewarding one.


How to Choose: Let’s Draw a Comparison

The following table compares the different problem-solving methods:

Which Method is the Most Suitable?

The most suitable method of teaching will depend on a number of factors, such as the subject matter, the students’ age and ability level, and the teacher’s own preferences. However, the problem-solving method of teaching is a valuable approach that can be used in any subject area and with students of all ages.

Here are some additional tips for using the problem-solving method of teaching effectively:

- Differentiate instruction. Not all students learn at the same pace or in the same way. Teachers can differentiate instruction to meet the needs of all learners by providing different levels of support and scaffolding.

- Use formative assessment. Formative assessment can be used to monitor students’ progress and to identify areas where they need additional support. Teachers can then use this information to provide students with targeted instruction.

- Create a positive learning environment. Students need to feel safe and supported in order to learn effectively. Teachers can create a positive learning environment by providing students with opportunities for collaboration, celebrating their successes, and creating a classroom culture where mistakes are seen as learning opportunities.


Some Unique Examples to Refer to Before We Conclude

Here are a few unique examples of how the problem-solving method of teaching can be used in different subjects:

- English: Students could be presented with a challenging text, such as a poem or a short story, and asked to analyze the text and come up with their own interpretation.

- Art: Students could be asked to design a new product or to create a piece of art that addresses a social issue.

- Music: Students could be asked to write a song about a current event or to create a new piece of music that reflects their cultural heritage.

The problem-solving method of teaching is a powerful tool that can be used to help students develop the skills they need to succeed in school and in life. By creating a learning environment where students are encouraged to think critically and solve problems, teachers can help students to become lifelong learners.


Center for Teaching

Teaching Problem Solving


Tips and Techniques

Communicate

- Have students identify specific problems, difficulties, or confusions. Don’t waste time working through problems that students already understand.

- If students are unable to articulate their concerns, determine where they are having trouble by asking them to identify the specific concepts or principles associated with the problem.

- In a one-on-one tutoring session, ask the student to work his/her problem out loud. This slows down the thinking process, making it more accurate and giving you access to the student’s understanding.

- When working with larger groups, you can ask students to provide a written “two-column solution.” Have students write up their solution to a problem by putting all their calculations in one column and all of their reasoning (in complete sentences) in the other column. This helps them think critically about their own problem solving and helps you more easily identify where they may be having problems.

Encourage Independence

- Model the problem solving process rather than just giving students the answer. As you work through the problem, consider how a novice might struggle with the concepts and make your thinking clear.

- Have students work through problems on their own. Ask directing questions or give helpful suggestions, but provide only minimal assistance and only when needed to overcome obstacles.

- Don’t fear group work! Students can frequently help each other, and talking about a problem helps them think more critically about the steps needed to solve it. Additionally, group work helps students realize that problems often have multiple solution strategies, some more effective than others.

Be sensitive

- Frequently, when working problems, students are unsure of themselves. This lack of confidence may hamper their learning. It is important to recognize this when students come to us for help, and to give each student some feeling of mastery. Do this by providing positive reinforcement to let students know when they have mastered a new concept or skill.

Encourage Thoroughness and Patience

- Try to communicate that the process is more important than the answer, so that the student learns that it is OK not to have an instant solution. This is learned through your acceptance of his/her pace of doing things, through your refusal to let anxiety pressure you into giving the right answer, and through your example of problem solving through a step-by-step process.

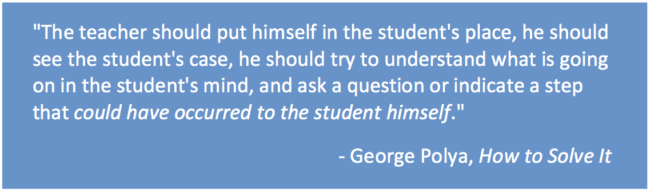

Experts (teachers) in a particular field are often so fluent in solving problems from that field that they can find it difficult to articulate the problem solving principles and strategies they use to novices (students), because these principles and strategies are second nature to the expert. To teach students problem solving skills, a teacher should be aware of the principles and strategies of good problem solving in his or her discipline.

The mathematician George Polya captured the problem solving principles and strategies he used in his discipline in the book How to Solve It: A New Aspect of Mathematical Method (Princeton University Press, 1957). The book includes a summary of Polya’s problem solving heuristic as well as advice on the teaching of problem solving.


Assessing by Multiple Choice Questions

Multiple choice question (MCQ) tests can be useful for formative assessment and to stimulate students' active and self-managed learning. They improve students' learning performance and their perceptions of the quality of their learning experience (Velan et al., 2008). When using MCQ tests for formative learning, you might still want to assign a small weight to them in the overall assessment plan for the course, to indicate to students that their grasp of the material tested is important.

MCQ tests are strongly associated with assessing lower-order cognition such as the recall of discrete facts. Because of this, assessors have questioned their use in higher education. It is possible to design MCQ tests to assess higher-order cognition (such as synthesis, creative thinking and problem-solving), but questions must be drafted with considerable skill if such tests are to be valid and reliable. This takes time and entails significant subjective judgement.

When determining whether an MCQ test is at the appropriate cognitive level, you may want to compare it with the levels as set out in Krathwohl's (2002) revision to Bloom's Taxonomy of Educational Objectives. If an MCQ test is, in fact, appropriate to the learning outcomes you want to assess and the level of cognition they involve, the next question is whether it would be best used for formative assessment (to support students' self-management of their learning), or for summative assessment (to determine the extent of students' learning at a particular point). When MCQ tests are being used for summative assessment, it's important to ask whether this is because of the ease of making them or for solid educational reasons. Where MCQ tests are appropriate, ensure that you integrate them effectively into assessment design. MCQ tests should never constitute the only or major form of summative assessment in university-level courses.

When to use

MCQ tests are useful for assessing lower-order cognitive processes, such as the recall of factual information, although this is often at the expense of higher-level critical and creative reasoning processes. Use MCQ tests when, for example, you want to:

- assess lower-order cognition such as the recall of discrete facts, particularly if they will be essential to higher-order learning later in the course

- gather information about students' pre-course understanding, knowledge gaps and misconceptions, to help plan learning and teaching approaches.

- provide students with an accessible way to review course material, check that they understand key concepts and obtain timely feedback to help them manage their own learning

- test students' broad knowledge of the curriculum and learning outcomes.

It should be noted that the closed-ended nature of MCQ tests makes them particularly inappropriate for assessing originality and creativity in thinking.

MCQ tests are readily automated, with numerous systems available to compile, administer, mark and provide feedback on tests according to a wide range of parameters. Marking can be done by human markers with little increase in marking load; or automatically, with no additional demands on teachers or tutors.
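As a rough illustration of automated marking and feedback, the sketch below marks one student's responses against an answer key covering both single-answer and multiple-answer items. The question IDs and key are hypothetical, and the all-or-nothing rule (a question earns its mark only when the selected options exactly match the key, with no negative marking) is an assumption; many marking systems offer other options, such as partial credit.

```python
# Hypothetical answer key: each question maps to the set of correct options.
ANSWER_KEY = {
    "Q1": {"C"},       # single correct answer
    "Q2": {"A", "D"},  # more than one correct answer
    "Q3": {"B"},
}


def mark(responses: dict[str, set[str]], key: dict[str, set[str]]) -> tuple[int, dict[str, str]]:
    """Score one student's responses and build per-question feedback.

    Assumed rule: 1 mark per question, awarded only for an exact match with
    the key; no partial credit and no penalty for wrong answers.
    """
    feedback = {}
    for question, correct in key.items():
        chosen = responses.get(question, set())  # unanswered counts as an empty selection
        if chosen == correct:
            feedback[question] = "correct"
        else:
            feedback[question] = f"expected {sorted(correct)}, got {sorted(chosen)}"
    score = sum(result == "correct" for result in feedback.values())
    return score, feedback


score, feedback = mark({"Q1": {"C"}, "Q2": {"A"}, "Q3": {"B"}}, ANSWER_KEY)
print(score)           # 2
print(feedback["Q2"])  # expected ['A', 'D'], got ['A']
```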

Objectivity

Although MCQ tests can lack nuance in that the answers are either right or wrong (provided the questions are well-designed), this has the advantage of reducing markers' bias and increasing overall objectivity. Moreover, because the tests do not require the students to formulate and write their own answers, the students' writing ability (which can vary widely even within a homogeneous cohort) becomes much less of a subjective factor in determining their grasp of the material. It should be noted, however, that MCQ tests are highly prone to cultural bias (Bazemore-James et al., 2016).

The development of question banks

MCQ tests can be refined and enhanced over time to incorporate new questions into an ever-growing question pool that can be used in different settings.

While MCQ tests are quick and straightforward to administer and mark, they require a large amount of up-front design effort to ensure that they are valid, relevant, fair and as free as possible from cultural, gender or racial bias. It's also challenging to write MCQs that resist mere guesswork. This is particularly the case if the questions are intended to test higher-order cognition such as synthesis, creative thinking and problem-solving.

The use of MCQ tests as summative assessments can encourage students to adopt superficial approaches to learning. This superficiality is exacerbated by the lack of in-depth, critical feedback inherent in a highly standardised assessment.

MCQ tests can disadvantage students with reading or English-language difficulties, regardless of how well they understand the content being assessed.

Plan a summative MCQ test

If you decide that an MCQ test is appropriate for summative assessment according to the objectives and outcomes for a course, let students know in the course outline that you'll be using it.

Table 1 sets out a number of factors to consider in the test planning stage.

Table 1: Factors in planning MCQ test design

Construct an MCQ test

Constructing effective MCQ tests and items takes considerable time and requires scrupulous care in the design, review and validation stages. Constructing MCQ tests for high-stakes summative assessment is a specialist task.

For this reason, rather than constructing a test from scratch, it may be more efficient for you to see what other validated tests already exist, and incorporate one into any course for which numerous decisions need to be made.

In some circumstances it may be worth the effort to create a new test. If you can undertake test development collaboratively within your department or discipline group, or as a larger project across institutional boundaries, you will increase the test's potential longevity and sustainability.

By progressively developing a multiple-choice question bank or pool, you can support benchmarking processes and establish assessment standards that have long-term effects on assuring course quality.

Use a design framework to see how individual MCQ questions will assess particular topic areas and types of learning objectives, across a spectrum of cognitive demand, to contribute to the test's overall balance. As an example, the "design blueprint" in Table 2 provides a structural framework for planning.

Table 2: Design blueprint for multiple choice test design (from the Instructional Assessment Resources at the University of Texas at Austin)
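Because Table 2 itself is not reproduced here, the following is only a hypothetical stand-in for how a blueprint can be checked: each item in a draft test is tagged with a topic and a cognitive level from Krathwohl's (2002) revision of Bloom's taxonomy, and the items are tallied into a topic-by-level grid so that gaps and imbalances become visible. The item IDs, topics and tags are invented for illustration.

```python
from collections import Counter

# Cognitive process levels from Krathwohl's (2002) revision of Bloom's taxonomy.
LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]
HIGHER_ORDER = {"analyze", "evaluate", "create"}

# Hypothetical draft test: (item id, topic, cognitive level).
ITEMS = [
    ("i1", "cells", "remember"),
    ("i2", "cells", "understand"),
    ("i3", "cells", "apply"),
    ("i4", "genetics", "remember"),
    ("i5", "genetics", "analyze"),
    ("i6", "genetics", "evaluate"),
]


def blueprint(items):
    """Count how many items fall in each (topic, level) cell."""
    return Counter((topic, level) for _, topic, level in items)


def print_grid(items):
    """Print the blueprint as a topic-by-level table (empty cells show 0)."""
    grid = blueprint(items)
    print("topic".ljust(10) + "".join(level[:5].rjust(8) for level in LEVELS))
    for topic in sorted({topic for _, topic, _ in items}):
        print(topic.ljust(10) + "".join(str(grid[(topic, level)]).rjust(8) for level in LEVELS))


def higher_order_share(items) -> float:
    """Fraction of items targeting analysis, evaluation or creation."""
    return sum(level in HIGHER_ORDER for _, _, level in items) / len(items)


print_grid(ITEMS)
print(f"higher-order items: {higher_order_share(ITEMS):.0%}")  # higher-order items: 33%
```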

Use the most appropriate format for each question posed. Ask yourself, is it best to use:

- a single correct answer

- more than one correct answer

- a true/false choice (with single or multiple correct answers)

- matching (e.g. a term with the appropriate definition, or a cause with the most likely effect)

- sentence completion, or

- questions relating to some given prompt material?

To assess higher-order thinking and reasoning, consider basing a cluster of MCQ items on some prompt material, such as:

- a brief outline of a problem, case or scenario

- a visual representation (picture, diagram or table) of the interrelationships among pieces of information or concepts

- an excerpt from published material.

You can present the associated MCQ items in a sequence from basic understanding through to higher-order reasoning, including:

- identifying the effect of changing a parameter

- selecting the solution to a given problem

- nominating the optimum application of a principle.

You may wish to add some short-answer questions to a substantially MCQ test to minimise the effect of guessing by requiring students to express in their own words their understanding and analysis of problems.

Well in advance of an MCQ test, explain to students:

- the purposes of the test (and whether it is formative or summative)

- the topics being covered

- the structure of the test

- whether aids can be taken into the test (for example, calculators, notes, textbooks, dictionaries)

- how it will be marked

- how the mark will contribute to their overall grade.

Compose clear instructions on the test itself, explaining:

- the components of the test

- their relative weighting

- how much time you expect students to spend on each section, so that they can optimise their time.

Quality assurance of MCQ tests

Whether you use MCQ tests to support learning in a formative way or for summative assessment, ensure that the overall test and each of its individual items are well aligned with the course learning objectives. When using MCQ tests for summative assessment, it's all the more critical that you assure their validity.

The following strategies will help you assure quality:

- Use a basic quality checklist (such as the one from Knapp & Associates International) when designing and reviewing the test.

- Take the test yourself. Calculate student completion time as being four times longer than your completion time.

- Work collaboratively across your discipline to develop an MCQ item bank as a dynamic (and growing) repository that everyone can use for formative or summative assessments, and that enables peer review, evaluation and validation.
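The completion-time rule of thumb above is simple arithmetic, but working it out per section also gives the time guidance that the test instructions should state for each component. A minimal sketch, assuming you have timed yourself on each section; the section names and minutes are hypothetical, and the factor of four is the rule of thumb quoted above rather than a fixed standard.

```python
def student_time_plan(author_minutes: dict[str, float], factor: float = 4.0):
    """Scale the test author's own time on each section by the rule-of-thumb factor.

    Returns the expected student time per section and the total, in minutes.
    """
    per_section = {section: minutes * factor for section, minutes in author_minutes.items()}
    return per_section, sum(per_section.values())


# Hypothetical timings from taking the draft test yourself.
plan, total = student_time_plan({"Part A (single answer)": 6, "Part B (scenario cluster)": 9})
for section, minutes in plan.items():
    print(f"{section}: about {minutes:.0f} minutes")
print(f"Allow at least {total:.0f} minutes in total.")  # Allow at least 60 minutes in total.
```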

Use peer review to:

- consider whether MCQ tests are educationally justified in your discipline

- critically evaluate MCQ test and item design

- examine the effects of using MCQs in the context of the learning setting

- record and disseminate the peer review outcomes to students and colleagues.

Engage students in active learning with MCQ tests

Used formatively, MCQ tests can:

- engage students in actively reviewing their own learning progress, identifying gaps and weaknesses in their understanding, and consolidating their learning through rehearsal

- provide a trigger for collaborative learning activities, such as discussion and debate about the answers to questions.

- become collaborative through the use of technologies such as electronic voting systems

- through peer assessment, help students identify common areas of misconception within the class.

You can also create activities that disrupt the traditional agency of assessment. You might, for example, require students to indicate the learning outcomes with which individual questions are aligned, or to construct their own MCQs and prepare explanatory feedback on the right and wrong answers (Fellenz, 2004).

Ensure fairness

Construct MCQ tests according to inclusive-design principles to ensure equal chances of success for all students. Take into account any diversity of ability, cultural background or learning styles and needs.

- Avoid sexual, racial, cultural or linguistic stereotyping in individual MCQ test items, to ensure that no groups of students are unfairly advantaged or disadvantaged.

- Provide alternative formats for MCQ-type exams for any students with disabilities. They may, for example, need more time to take a test, or to be provided with assistive technology or readers.

- Set up contingency plans for timed online MCQ tests, as computers can malfunction or system outages occur at any time. Also address any issues arising from students being in different time zones.

- Develop processes in advance so that you have time to inform students about the objectives, formats and delivery of MCQ tests and, for a summative test, the marking scheme being used and the test's effect on overall marks.

- To reduce the opportunity for plagiarism, randomise the order in which MCQ questions are presented, so that different students see the same content in a different order.
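Most quiz platforms offer question randomisation as a setting. If you need to generate orders yourself, one option, sketched below under stated assumptions, is to seed a shuffle from a hash of the exam and student identifiers: each student then always receives the same order (for example, after reconnecting following an outage), while different students almost always receive different orders. The identifiers and the function name are hypothetical.

```python
import hashlib
import random
from typing import List, Sequence


def question_order_for(student_id: str, exam_id: str, question_ids: Sequence[str]) -> List[str]:
    """Return a per-student question order that is stable across sessions."""
    # Seed from a SHA-256 digest rather than Python's built-in hash(), whose
    # output for strings changes between interpreter runs.
    digest = hashlib.sha256(f"{exam_id}:{student_id}".encode("utf-8")).digest()
    rng = random.Random(int.from_bytes(digest[:8], "big"))
    order = list(question_ids)  # copy so the master list is not modified
    rng.shuffle(order)
    return order


if __name__ == "__main__":
    questions = [f"Q{i}" for i in range(1, 11)]
    print(question_order_for("student-001", "midterm-1", questions))
    print(question_order_for("student-002", "midterm-1", questions))
```

Reproducible orders also make it easier to review a particular student's paper afterwards, since the order can be regenerated from the two identifiers.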

Writing Good Multiple Choice Test Questions (Vanderbilt University).

Bazemore-James, C. M., Shinaprayoon, T., & Martin, J. (2016). Understanding and supporting students who experience cultural bias in standardized tests. Trends and Issues in Academic Support: 2016-2017.

Fellenz, M. R. (2004). Using assessment to support higher level learning: The multiple choice item development assignment. Assessment and Evaluation in Higher Education, 29(6), 703-719.

Krathwohl, D. R. (2002). A revision of Bloom's Taxonomy: An overview. Theory into Practice, 41(4), 212-218.

LeJeune, J. (2023). A multiple-choice study: The impact of transparent question design on student performance. Perspectives In Learning, 20(1), 75-89.

Velan, G. M., Jones, P., McNeil, H. P., & Kumar, R. K. (2008). Integrated online formative assessments in the biomedical sciences for medical students: Benefits for learning. BMC Medical Education, 8(52).


Mathematics Teaching Strategies MCQs


Welcome to the Mathematics Teaching Strategies section on MCQss.com. Here, you will find a wide range of MCQs designed to enhance your mathematics teaching strategies and approaches, enabling you to engage students, foster conceptual understanding, and promote problem-solving skills in mathematics education.

Teaching mathematics requires a deep understanding of both the subject matter and effective instructional methods. The MCQs provided in this section cover various teaching strategies, including inquiry-based learning, hands-on activities, problem-solving approaches, differentiation techniques, and the use of technology in mathematics instruction.

Whether you are a mathematics teacher, educator, or aspiring educator, the Mathematics Teaching Strategies MCQs offer a platform to enhance your teaching effectiveness and inspire your students' love for mathematics. Explore these MCQs, apply the strategies in your classroom, and witness the positive impact on student learning and achievement.

Join us in exploring the MCQs on Mathematics Teaching Strategies and unlock your potential to become a more effective mathematics educator.

1: The use of symbols, including numeric, algebraic, statistical and geometric symbols, is called abstract instruction.

A. True

B. False

2: Knowing the number of objects in a set is called _____.

A. Cardinality

B. Modality

C. Ordinality

D. Degree

3: Manipulating objects to represent numerals and operations is called learned helplessness.

4: The ability to understand numbers, numeric relationships, and how to use numeric information to solve mathematical problems is called _____.

A. Number sense

B. Numeration

C. Fluency

D. Operations

5: Students who use procedural strategies accurately and know how to make sense of numerical and quantitative situations are called _____ powerful students.

A. Numerically

B. Analytically

C. Algebraically

D. Chronologically

6: When the teacher uses _____ representations such as tally marks, dots, pictures and so forth, this is called representational instruction.

A. Visual

B. Audible

C. Verbal

D. None of these

7: Breaking a task into smaller manageable parts or steps, teaching steps as separate objectives, and then combining all steps to complete the entire task is called _____.

A. Task analysis

B. Behavior analysis

C. Education analysis

8: Ability to perceive and understand accurately what you see is called ______ perception.

B. Audio

C. Code

D. Verbal

9: Kita has difficulty remembering the definitions of mathematical terms. A strategy most likely to help her is:

A. Self-regulation

B. Peer tutoring

C. A mnemonic device

D. An advance organizer

10: Mr. Robinson’s general education classroom is made up primarily of culturally diverse students, some of whom have learning disabilities. He does not believe that the problems in the textbook are relevant to their lives. In order to engage his students more effectively, Mr. Robinson should consider:

A. Focusing solely on the textbook problems to expose them to new ideas

B. Allowing students to solve problems independently without teacher direction

C. Writing math problems that are culturally relevant and authentic

D. Allowing students to create their own problem-solving strategies

11: The first step in developing mathematics instruction is to:

A. Write the short-term lesson objectives

B. Sequence the skills

C. Determine prerequisites

D. Select a teaching procedure

12: Maria refuses to attempt to solve math problems in class. She cries and says, “I can’t do it.” Maria may be experiencing problems related to:

A. Memory difficulties

B. Attention problems

C. Language

D. Learned helplessness

13: Researchers have found that the more effective approach for teaching mathematics to students with high-incidence disabilities is:

A. Implicit instruction

B. Discovery learning

C. Social interaction

D. Explicit instruction

14: Selective attention can affect a student’s math performance.

15: A disadvantage of a scripted lesson plan in mathematics is that too much emphasis is placed on devising additional examples.

16: Keywords but not pegwords can be used to teach mathematics.

17: Graphic representation is not an appropriate strategy to help students solve word problems.

18: The conversion of words into math symbols is an example of how language can affect mathematical performance.


© copyright 2024 by mcqss.com


Problem Solving Techniques MCQs with Answers

Welcome to the Problem Solving Techniques MCQs with Answers. In this post, we share a Problem Solving Techniques online test for various competitive exams, with practice questions and answers of the kind found in aptitude tests. Each question offers a chance to enhance your knowledge of problem-solving techniques.

Problem-solving entails several steps, including defining the issue, pinpointing its underlying cause, prioritizing and evaluating potential solutions, and finally implementing the chosen resolution. It’s important to note that there isn’t a universal problem-solving approach that applies to all situations.

Problem Solving Techniques Online Quiz

This Problem Solving Techniques quiz presents three options per question and covers a wide range of topics and levels of difficulty, making it adaptable to various learning objectives and preferences. If you are a student looking to reinforce your understanding, our Student MCQs Online Quiz platform has something for you. Read all the answer options for each question and click the correct one.

- Test Name: Problem Solving Techniques MCQ Quiz Practice

- Type: MCQ’s

- Total Questions: 40

- Total Marks: 40

- Time: 40 minutes

Note: Questions will be shuffled each time you start the test. Any question you have not answered will be marked incorrect. Once you are finished, click the View Results button. You will encounter Multiple Choice Questions (MCQs) related to Problem Solving Techniques, each with three options. Choose the most appropriate answer and move on to the next question within the allotted time.


- Research article

- Open access

- Published: 02 February 2016

Case-based learning and multiple choice questioning methods favored by students

Magalie Chéron¹, Mirlinda Ademi², Felix Kraft³ & Henriette Löffler-Stastka¹

BMC Medical Education, volume 16, Article number: 41 (2016)


Investigating and understanding how students learn on their own is essential to effective teaching, but studies are rarely conducted in this context. A major aim within medical education is to foster procedural knowledge. It is known that case-based questioning exercises drive the learning process, but the way students deal with these exercises has been little explored.

This study examined how medical students deal with case-based questioning by evaluating 426 case-related questions created by 79 fourth-year medical students. The subjects covered by the questions, the level of the questions (equivalent to United States Medical Licensing Examination Steps 1 and 2), and the proportion of positively and negatively formulated questions were examined, as well as the number of right and wrong answer choices in relation to how each question was formulated.

The level of the evaluated case-based questions matched the United States Medical Licensing Examination Step 1 level. The students were more confident with items aimed at diagnosis, did not reject negatively formulated questions, and tended to prefer handling right content while keeping wrong content to a minimum.

These results should be taken into consideration for the formulation of case-based questioning exercises in the future and encourage the development of bedside teaching in order to foster the acquisition of associative and procedural knowledge, especially clinical reasoning and therapy-oriented thinking.


Trying to understand how students learn on their own, aside from lectures, is essential to effective teaching. It is known that assessment and case-based questioning drive the learning process. Studies have shown that the way assessment is conducted critically influences students’ approach to learning [1]. Several written methods are used for the assessment of medical competence: Multiple Choice Questions (MCQs), Key Feature Questions, Short Answer Questions, Essay Questions and Modified Essay Questions [2]. Based upon their structure and quality, examination questions can be subdivided into (1) open-ended or multiple choice and (2) context rich or context poor ones [3, 4].

Well-formulated MCQs assess cognitive, affective and psychomotor domains and are preferred over other methods because they ensure objective assessment, minimize the effect of examiner bias, allow comparability and cover a wide range of subjects [5]. Context rich MCQs encourage complex cognitive clinical thinking, while context poor or context free questions mainly test declarative knowledge (facts, “what” information), which involves pure recall of isolated pieces of information such as definitions or terminologies. In contrast, procedural knowledge (“why” and “how” information) requires different skills: students are encouraged to understand concepts and to gather information from various disciplines in order to apply their knowledge in a clinically oriented context. Notably, prior clinical experience has been suggested to be a strong factor influencing students’ performance in procedural knowledge tasks [6, 7]. If the aim is to teach students to think critically, test items must require a high level of cognitive processing [3]. A successful approach is using Extended Matching Items (EMIs), consisting of clinical vignettes [2]. This format is characteristic of examination questions in Step 2 of the United States Medical Licensing Examination (USMLE) [8]. Step 2 items test the application of clinical knowledge required of a general physician and encourage examinees to make clinical decisions rather than simply recall isolated facts [6]. Because the USMLE is a well-established examination format introduced by the National Board of Medical Examiners (NBME), its question criteria served as the basis for comparison in our study.

Case-based learning (CBL) has gained importance in recent years. This well-established pedagogical method has been used by the Harvard Business School since 1920 [9]. Nevertheless, there is no international consensus on its definition. CBL, as introduced to students of the Medical University of Vienna (MUV, Austria) in Block 20, is inquiry-based learning that requires students to develop clinical reasoning by solving authentic clinical cases presented as context rich MCQs. Generally, exposing students to complex clinical cases promotes (1) self-directed learning, (2) clinical reasoning, (3) clinical problem-solving and (4) decision making [9]. In contrast to other testing formats, CBL facilitates deeper conceptual understanding. As students see the direct relevance of the information to be learnt, their motivation increases and they are more likely to remember facts. Studies showed that CBL fosters more active and collaborative learners and that students enjoy CBL as a teaching method [9]. This is in line with the improving results of the students’ evaluations of the course Psychic Functions in Health and Illness and Medical Communication Skills-C (Block 20/ÄGF-C), performed in 2013 [10] and 2014 [11] at the MUV. A Likert scale ranging from 1 (very bad) to 4 (very good) was used to evaluate the quality of the lectures: the mean grade improved from 1.6 in 2013 to 3 one year later, after the introduction of online case-based exercises related to the lectures. Therefore, based upon the assumption that students tend to prefer practical learning, the development of case-based, question-driven blended learning will be further encouraged at the MUV, in order to provide effective training that fosters the procedural knowledge necessary for clinical reasoning processes and authentic clinical care.

There are no published studies analyzing the way medical students construct MCQs with regard to their level of clinical reasoning. To learn more about how students deal with case-based questioning, we analyzed student-generated MCQs. The study gives important insights by examining students’ way of reasoning, from formal reasoning using only declarative knowledge to clinical and procedural reasoning based on patients’ cases. Moreover, this study allows us to observe to what extent negatively formulated questions, a format rarely used in exams, actually pose a problem for students.

This analysis is based on the evaluation of a compensatory exercise for missed seminars, completed by students in their fourth year of medical studies at the MUV after attending their first course on psychic functioning (Block 20/ÄGF-C). The 5-week-long Block 20 [12] focused on the fundamentals of psychic functions, the presentation of the most important psychological schools and the significance of genetic, biological, gender-related and social factors, as well as on the presentation of psychotherapeutic options and the prevention of psychic burden [13]. The basics of doctor-patient communication and of psychological exploration techniques were also offered [12]. To pass Block 20, all students had to take part in the related online CBL [14] exercise. This exercise presented patient cases, including detailed information on diagnosis and therapy, the latter subdivided into psychotherapy and pharmacology. The students had to answer MCQs concerning each diagnostic and therapeutic step.

The current study was approved by the ethics committee of the Medical University of Vienna; students gave informed consent to take part, and the data were deposited in publicly available repositories (online CBL exercise) after the study was completed. The students were instructed to create MCQs with 4–5 answer possibilities per question, related to cases of patients with psychopathological disorders presented in the online CBL exercise and in the lectures’ textbook of Block 20 [15]. The “One-Best Answer” format was recommended. Additionally, the students were required to explain why the answers were right or wrong. MCQ examples were offered to the students in the online CBL exercise.

The authors assessed and classified the students’ MCQs after group briefings. A final review was done by MC to ensure inter-rater reliability, which was stable at κ = .73 between MC and HLS.
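The article does not name the agreement coefficient behind the reported value of .73. Assuming it is Cohen's kappa for two raters, the statistic compares the observed agreement p_o with the agreement p_e expected by chance from each rater's label frequencies, κ = (p_o − p_e) / (1 − p_e). A minimal sketch follows; the example labels are hypothetical and are not the study's data.

```python
from collections import Counter
from typing import Hashable, Sequence


def cohens_kappa(rater_a: Sequence[Hashable], rater_b: Sequence[Hashable]) -> float:
    """Cohen's kappa for two raters who labelled the same items."""
    if len(rater_a) != len(rater_b) or len(rater_a) == 0:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal proportions, summed over labels.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical Step 1 / Step 2 classifications by two raters.
    rater_1 = ["step1", "step1", "step2", "step2"]
    rater_2 = ["step1", "step2", "step2", "step2"]
    print(cohens_kappa(rater_1, rater_2))  # 0.5
```

Here the raters agree on 3 of 4 items (p_o = 0.75), chance agreement is 0.5, so κ = 0.5: kappa discounts the agreement two raters would reach by guessing in proportion to their own label frequencies.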

Subjects covered by the questions

The proportions of epidemiology, etiology/pathogenesis, diagnosis and therapy oriented items were examined. In order to simplify the classification, etiology and pathogenesis items were gathered into one group. Items asking for symptoms, classifications (e.g. ICD 10 criteria) as well as necessary questions in the anamnesis were gathered as diagnosis items. Among the therapy items, the frequency of items concerning psychotherapy methods and pharmacology was also compared. The proportion of exercises including at least one diagnosis item and one therapy item was observed. These subjects were chosen according to the patient cases of the CBL exercises and the lectures’ textbook.

Level of the questions

The level of the questions was evaluated in comparison to USMLE equivalent Steps 1 and 2, as described by the NBME. While Step 1 questions (called recall items) test basic science knowledge, “every item on Step 2 provides a patient vignette” and tests higher skills. Step 2 questions are necessarily application of knowledge items and require interpretation from the student [6].

To assess the level of the items, 2 further groups were created, distinguishing items from the others. Examples of Step 1 and Step 2 Items’ stems offered by the students:

Step 1: “What is the pharmacological first line therapy of borderline patients?” (Item 31)

Step 2: “M. Schlüssel presents himself with several medical reports from 5 specialists for neurology, orthopedics, trauma surgery, neuroradiology and anesthesia, as well as from 4 different general practitioners. Diagnostic findings showed no evidence for any pathology. Which therapy options could help the patient?” (Item 86)

Further, “Elaborate Items” were defined by the authors as well thought-out questions with detailed answer possibilities and/or extensive explanations of the answers.

Finally, “One-Step questions” and “n-steps questions” were differentiated. This categorization reflects the number of cognitive processes needed to answer a question and estimates the complexity of association of an MCQ. Recall items are necessarily one-step questions, whereas application of knowledge items may be one-step questions or multiple (n) steps questions. Because the evaluation of the cognitive processes depends on the knowledge of the examinee, the NBME no longer prioritizes this categorization, although it gives information on the level and quality of the questions [6]. The previous example of a Step 2 question (Item 86) is an application of knowledge (Step 2) item categorized as an n-Step item, because answering the question requires an association with the diagnosis, which is not explicitly given by the question. An example of a Step 2 question requiring only one cognitive process would be: “A patient complains about tremor and excessive sweating. Which anamnestic questions are necessary to ask to diagnose an alcohol withdrawal syndrome?” (Item 232)

Formulation of the questions

The proportion of positively and negatively formulated questions created by the students was examined, as well as the number of right and wrong answer choices, in correlation to the formulation of the question.

Descriptive statistics were computed using SPSS 22.0 to analyze the subjects covered by the questions, their level and formulation, and the number of answer possibilities offered. The significance of the differences was tested using the Chi-square Test or the Mann–Whitney U Test, depending on the examined variable, after testing for normal distribution. A p-value < .05 was considered statistically significant in all calculations.
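The Mann–Whitney U test ranks all observations from both groups together and asks whether one group's ranks are systematically higher than the other's. The sketch below is an independent illustration, not SPSS's procedure: it assigns average ranks to ties and uses the large-sample normal approximation with a tie correction but no continuity correction, so its p-values may differ slightly from packaged implementations. The example data (numbers of right answers per question) are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def _average_ranks(values: Sequence[float]) -> Tuple[List[float], List[int]]:
    """Return 1-based ranks (tied values share the mean of their ranks)
    together with the size of each group of tied values."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    tie_sizes: List[int] = []
    i = 0
    while i < len(order):
        j = i
        # Extend j over every position holding the same value as position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        tie_sizes.append(j - i + 1)
        i = j + 1
    return ranks, tie_sizes


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (U for x, z, two-sided p) using the normal approximation
    with a tie correction and no continuity correction."""
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both groups must be non-empty")
    ranks, tie_sizes = _average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    tie_term = sum(t ** 3 - t for t in tie_sizes) / (n * (n - 1))
    variance = n1 * n2 / 12 * ((n + 1) - tie_term)
    if variance <= 0:
        raise ValueError("all observations are tied; the test is undefined")
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))
    return u1, z, p


if __name__ == "__main__":
    # Hypothetical numbers of right answers per question, by formulation.
    positive = [1, 2, 1, 3, 2, 1, 2, 1]
    negative = [1, 1, 1, 1, 2, 1, 1, 1]
    u, z, p = mann_whitney_u(positive, negative)
    print(f"U = {u}, z = {z:.2f}, p = {p:.3f}")
```

A rank-based test suits data such as "number of right answers per question", which take only a few discrete values and need not be normally distributed; the tie correction matters because such data contain many ties.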

The study included 105 compensation exercises, performed by 79 students, representing 428 MCQs. After review by the examiner (HLS), who is responsible for pass/fail decisions on the completion and graduation concerning the curriculum element Block 20/ÄGF-C, and subsequent corrections by the students, two questions were excluded because the answers offered did not correspond to the MCQ’s stem. Finally, 426 questions remained and were analyzed.

The subjects covered by the 426 questions concerned the diagnosis of psychiatric diseases (49.1 %), their therapies (29.6 %) and their etiology and pathogenesis (21.4 %). Eighteen questions covered two subjects (Table 1).

Significantly more items concerned the diagnosis of psychiatric diseases than their therapies ( p < .001, Chi-Square Test); 63.3 % of the students offered at least one item regarding diagnosis and one item regarding therapy in their exercise.

Among the therapy items, significantly more pharmacology items were offered than psychotherapy items (59 % versus 41 %; p = .043, Chi-Square Test).
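The article reports the 59 % versus 41 % split but not the raw counts or the expected distribution used. Assuming the test compared the observed counts with an equal split, the chi-square goodness-of-fit statistic is χ² = Σ (O − E)² / E, and with two categories (one degree of freedom) its p-value can be obtained from the complementary error function. The sketch below uses hypothetical counts, not the study's data.

```python
import math
from typing import Sequence


def chi_square_equal_split(observed: Sequence[int]) -> float:
    """Pearson chi-square statistic for observed counts against an equal split."""
    if len(observed) < 2 or sum(observed) <= 0:
        raise ValueError("need at least two categories and a positive total")
    expected = sum(observed) / len(observed)
    return sum((o - expected) ** 2 / expected for o in observed)


def p_value_one_df(chi2: float) -> float:
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom.

    With 1 df the statistic is the square of a standard normal variable, so
    P(X >= chi2) = P(|Z| >= sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    """
    return math.erfc(math.sqrt(chi2 / 2))


if __name__ == "__main__":
    # Hypothetical counts: 60 pharmacology items vs 40 psychotherapy items.
    chi2 = chi_square_equal_split([60, 40])
    print(chi2)                  # 4.0
    print(p_value_one_df(chi2))  # about 0.0455
```

`p_value_one_df` is only appropriate for two categories; with more categories the degrees of freedom rise and a general chi-square distribution function is needed.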

Of the questions, 395 (92.7 %) were classified as Step 1 questions. Nevertheless, 199 (46.7 %) of the questions were elaborate. According to USMLE criteria, 421 (98.8 %) of the 426 questions were One-Step questions (Table 1). Of the 18 questions covering two subjects, 16 were Step 1 questions.

Of the questions, 72.5 % were positively formulated and 27.5 % negatively. A significant difference was observed between the positively and negatively formulated questions regarding the number of right answers: Table 2 shows the distribution of the number of right answers depending on the questions’ formulation. The students offered significantly more right answer possibilities per positively formulated question than per negatively formulated question (p < .001, Mann–Whitney U Test).

The positively formulated questions more often had two or more right answers than the negatively formulated questions (p < .001, Chi-square value = 44.2).
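This comparison can be read as a 2 × 2 contingency table: positively versus negatively formulated questions, by whether they have two or more right answers or only one. Assuming a Pearson chi-square test without continuity correction, the statistic has the closed form χ² = n(ad − bc)² / ((a + b)(c + d)(a + c)(b + d)) with one degree of freedom. The cell counts in the sketch below are hypothetical, not taken from Table 2.

```python
import math
from typing import Tuple


def chi_square_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the 2 x 2 table

                     >= 2 right answers   1 right answer
        positive             a                  b
        negative             c                  d

    Returns (chi2, p) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df, P(X >= chi2) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


if __name__ == "__main__":
    # Hypothetical cell counts.
    chi2, p = chi_square_2x2(30, 10, 10, 30)
    print(chi2)  # 20.0
    print(p < 0.001)  # True
```

The closed form gives the same value as summing (O − E)² / E over the four cells, and makes clear that the statistic is zero exactly when ad = bc, that is, when formulation and number of right answers are unrelated in the sample.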

The students also offered fewer answer possibilities per positively formulated question than per negatively formulated question (p < .001, Mann–Whitney U Test). Table 2 also presents the distribution of the number of answers offered, depending on the questions’ formulation. The proportion of questions with 4 answer possibilities instead of 5 is higher within the group of positively formulated questions (p < .001, Chi-square value = 16.56). Regarding the proportion of elaborate questions depending on their formulation, there was no significant difference.

Twenty-nine (36.7 %) students offered only positively formulated questions. The students who formulated at least one question negatively (63.3 %) formulated 41.1 ± 22.4 % of their questions negatively.

Many more questions aimed at diagnosis

At the end of year 4, students of the MUV had attended various lectures but had hardly any actual experience with therapies. This may explain why significantly more items concerned the diagnosis of psychiatric diseases than their therapies.

Among questions aimed at therapy, significantly more concerned pharmacotherapy than psychotherapy

Before Block 20, the seminars concerning therapies in the MUV curriculum were almost exclusively pharmacological. After successfully completing Block 20, most students who did not have any personal experience of psychotherapy had only little insight into how psychotherapy develops over the long term and what it can really provide to the patient. Their associations with psychotherapy were still loaded with old stereotypes [13, 16]. This could explain why significantly more therapy questions addressed pharmacology than psychotherapy.

A huge majority of Step 1 questions

The students mainly offered Step 1 questions. It can be asked whether the lack of case-oriented questions was an indication of insufficient clinical thinking by the students. An essential explanation could be that students lacked adequate patient contact until the end of year four. Indeed, MUV students were allowed to begin their practical experience after year two, and eight compulsory clerkship weeks were scheduled before the beginning of year five [17]. Thus, Austrian medical students gained consistent clinical experience only after year four, with rotations in year five and the newly introduced Clinical Practical Year in year six. A European comparison of medical universities’ curricula showed that students in other countries spent more time with patients, and earlier: Dutch, French and German medical students began with nursing training in year one and had 40, 10 and 4 months more clerkship experience, respectively, than Austrian students before entering year five [18–21]. French and Dutch universities are strongly centered on clinical thinking, with a total of 36 clerkship months in France and, in Groningen, the weekly presence of patients from the first lectures onward [22]. Thus, it would be interesting to repeat a similar case-based exercise in these countries to explore whether medical students at the same educational stage but with more practical experience are more likely to offer patient vignette items.

Students preferred to work with right facts and did not reject negatively worded questions

As negatively worded questions are usually banished from MCQ exams, it was interesting to observe that medical students did not reject them. Negatively formulated questions are indeed more likely to be misunderstood: understanding them correlates with reading ability [23] and concentration. Although many guidelines [6, 24] clearly advise avoiding negative items, 27.5 % of the questions the students generated were negatively formulated. Pick N format questions with several right answers were also offered by the students, despite the recommendations for this exercise: they offered significantly fewer total answer possibilities but significantly more right answers to positively worded questions than to negatively worded questions. These results supported the hypothesis that the students preferred handling right content while keeping wrong content to a minimum.

Several possible reasons can be considered. When students lack confidence with a theme and try to avoid unsuitable answer possibilities, it can be more difficult to find four wrong answers to a positively worded question than several right answers, which may be listed in a book. Furthermore, some students may avoid thinking up wrong facts for fear of learning wrong content. Indeed, among positively worded items, 26.6 % were offered with 3 or more right answers, which never happened for negatively worded items (Table 2).

Notably, the “right answer possibilities” of negatively worded items’ stems, as well as the “wrong answer possibilities” of positively worded items’ stems, are actually “wrong facts”. For example, the right answer of the item “Which of the following symptoms does NOT belong to ICD-10 criteria of depression?” (Item 177) is the only “wrong fact” of the 5 answer possibilities. Writing the 4 “wrong answers” of this question, which are actually the ICD-10 criteria for depression, can help the students learn these diagnostic criteria. By contrast, the “right answers” to a positively worded item such as “Which vegetative symptoms are related to panic attacks?” (Item 121) are the true facts.

Finally, the students’ interest in right facts supports the theory that a positive approach, positive emotions and curiosity are favorable to learning processes. Indeed, asking for right content is a natural way of learning, used by children from a very early age. Their inborn curiosity (the urge to explain the unexpected [25], the need to resolve uncertainty [26] or the urge to know more [27]) is shown by the number of questions children ask [28, 29]. The students’ way of asking for right content appears very close to this original learning process.

Findings from developmental psychology, cognitive psychology and neuroscience support this hypothesis. Bower described the influence of affect on cognitive processes: he showed a powerful effect of people’s mood on their free associations to neutral words, and better learning of incidents congruent with their mood [30]. Growing neurophysiological knowledge has confirmed the close relation between concentration, learning and emotions, basic psychic functions that rely on the same brain structures. The amygdala, connected to major limbic structures (e.g. prefrontal cortex, hippocampus, ventral striatum), plays a major role in affect regulation as well as in learning processes [15], and the hippocampus, essential to explicit learning, is highly influenced by stress, as it has one of the highest concentrations of glucocorticoid receptors in the brain [31]. Stress diminishes synaptic plasticity within the hippocampus [32], and this plasticity is necessary for long-term memory.

Neuroscientific research has also underlined the interdependence of cognitive ability and affect regulation. In a patient with prefrontal cortex damage after an ischemic stroke, Salas et al. showed that, because of executive impairment and increased emotional reactivity, the patient’s cognitive resources no longer allowed self-modulation and reappraisal of negative affect [ 33 ].

Given this interdependence, the preference for right content might be related to a positive attitude and positive affect among the students. Further research on this relation, and on the students’ motivations when formulating questions, would be worthwhile.

Together, these factors probably explain why the students offered significantly more wrong answers to negatively worded items and more right answers to positively worded items, both of which result in the use of more right facts. All of the students’ assessment questions and the associated feedback were used to create a new database at the MUV, with the aim of integrating more right facts into future case-based learning exercises.

The main limitations are the small sample size and the focus on a single curriculum element. Further studies with convenient sampling should include other medical fields and bridge the gap to learning outcome research.

The evaluation of the questions written by fourth-year medical students at the MUV showed that the students were much more confident with items aimed at diagnosis. Among therapy-oriented items, they were more confident with pharmacotherapy than with psychotherapy. These results, together with the improved evaluation of Block 20 after CBL exercises were introduced and the international awareness that case-based questioning has a positive steering effect on the learning process and fosters the acquisition of associative and procedural knowledge, should encourage the further development of case-based exercises that engage students in an affectively positive way, especially with a focus on clinical reasoning and therapy-oriented thinking.

The development of bedside teaching and the implementation of clerkships from the first year of study (e.g. a 4-week practical nursing training) could also be considered to stimulate earlier patient-centered thinking among MUV students. A comparison with the clinical reasoning level of medical students from countries where more practical experience is scheduled during the first year of study would be interesting.

Concerning assessment methods, and particularly the formulation of case-based questions, the students did not reject negatively formulated questions but tended to prefer working with right content while keeping wrong content to a minimum. This preference could be explored further and taken into account when formulating MCQs for future case-based exercises.

Availability of supporting data

The patient case data on which the students’ MCQs were based can be found in the textbook and lectures of the curriculum element [ 15 ] and on the Moodle website of the Medical University of Vienna [ 34 ]. The Moodle website is accessible to students and teachers of the Medical University of Vienna with their username and password. The anonymized datasets analyzed, including the MCQs [ 34 ], are available from the authors on request.

Abbreviations

CBL: Case-based learning

EMI: Extended Matching Items

MCQ: Multiple Choice Question

MUV: Medical University of Vienna

NBME: National Board of Medical Examiners

USMLE: United States Medical Licensing Examination

References

1. Reid WA, Duvall E, Evans P. Relationship between assessment results and approaches to learning and studying in Year Two medical students. Med Educ. 2007;41:754–62. doi:10.1111/j.1365-2923.2007.02801.x.

2. Al-Wardy NM. Assessment methods in undergraduate medical education. Sultan Qaboos Univ Med J. 2010;10:203–9.

3. Epstein RM. Assessment in Medical Education. N Engl J Med. 2007;356:387–96. doi:10.1056/NEJMra054784.

4. Schuwirth LWT, van der Vleuten CPM. Different written assessment methods: what can be said about their strengths and weaknesses? Med Educ. 2004;38:974–9. doi:10.1111/j.1365-2929.2004.01916.x.

5. Gajjar S, Sharma R, Kumar P, Rana M. Item and Test Analysis to Identify Quality Multiple Choice Questions (MCQs) from an Assessment of Medical Students of Ahmedabad, Gujarat. Indian J Community Med. 2014;39:17–20. doi:10.4103/0970-0218.126347.

6. Case SM, Swanson DB. Constructing Written Test Questions For the Basic and Clinical Sciences. 3rd ed. (revised). Philadelphia, USA: National Board of Medical Examiners (NBME); 2002.

7. Schmidmaier R, Eiber S, Ebersbach R, Schiller M, Hege I, Holzer M, et al. Learning the facts in medical school is not enough: which factors predict successful application of procedural knowledge in a laboratory setting? BMC Med Educ. 2013;13:28. doi:10.1186/1472-6920-13-28.

8. United States Medical Licensing Examination (USMLE). 2015. http://www.usmle.org/. Accessed 29 Jan 2016.

9. Thistlethwaite JE, Davies D, Ekeocha S, Kidd JM, MacDougall C, Matthews P, et al. The effectiveness of case-based learning in health professional education. A BEME systematic review: BEME Guide No. 23. Med Teach. 2012;34:e421–44. doi:10.3109/0142159X.2012.680939.

10. Medizinische Universität Wien. Management Summary, Curriculumelementevaluation SS 2013. 2013. http://www.meduniwien.ac.at/homepage/content/organisation/dienstleistungseinrichtungen-und-stabstellen/evaluation-und-qualitaetsmanagement/evaluation/curriculumelementevaluation-online/. Accessed 29 Jan 2016.

11. Medizinische Universität Wien. Management Summary, Curriculumelementevaluation SS 2014. 2014. http://www.meduniwien.ac.at/homepage/content/organisation/dienstleistungseinrichtungen-und-stabstellen/evaluation-und-qualitaetsmanagement/evaluation/curriculumelementevaluation-online/. Accessed 29 Jan 2016.

12. Medizinische Universität Wien. 2015. https://studyguide.meduniwien.ac.at/curriculum/n202-2014/?state=0-65824-3725/block-20-Psychische-Funktionen-in-Gesundheit-Und-Krankheit-Aerztliche-Gespraechsfuehrung-C. Accessed 29 Jan 2016.

13. Löffler-Stastka H, Blüml V, Ponocny-Seliger E, Hodal M, Jandl-Jager E, Springer-Kremser M. Students’ Attitudes Towards Psychotherapy: Changes After a Course on Psychic Functions in Health and Illness. Psychother Psych Med. 2010;60(3-4):118–25.

14. Turk BR, Krexner R, Otto F, Wrba T, Löffler-Stastka H. Not the ghost in the machine: transforming patient data into e-learning cases within a case-based blended learning framework for medical education. Procedia Soc Behav Sci. 2015;186:713–25.

15. Löffler-Stastka H, Doering S. Psychische Funktionen in Gesundheit und Krankheit. 10th ed. Wien: Facultas Universitätsverlag; 2014.

16. Löffler-Stastka H, Blüml V, Hodal M, Ponocny-Seliger E. Attitude of Austrian students toward psychotherapy. Int J Med I. 2008;1(3):110–5.

17. Medizinische Universität Wien. Curriculum für das Diplomstudium Humanmedizin, Konsolidierte Fassung: Stand Oktober 2013. 2013.

18. Maastricht University, Faculty of Health, Medicine and Life Sciences. Maastricht Medical Curriculum, 2014–2015 Bachelor. The Netherlands; 2015.

19. Ministre des affaires sociales et de la santé, Ministre de la défense, Ministre de l’enseignement supérieur et de la recherche. Arrêté du 8 avril 2013 relatif au régime des études en vue du premier et du deuxième cycle des études médicales. JORF n°0095; 2013.

20. Medizinische Universität Heidelberg. Approbationsordnung für Ärzte, Stand 2013. 2013.

21. University of Groningen. 2015. http://www.rug.nl/masters/medicine/programme. Accessed 29 Jan 2016.

22. Kuks JBM. The bachelor-master structure (two-cycle curriculum) according to the Bologna agreement: a Dutch experience. GMS Z Med Ausbild. 2010;27:Doc33. doi:10.3205/zma000670.

23. Weems GH, Onwuegbuzie AJ, Collins KMT. The Role of Reading Comprehension in Responses to Positively and Negatively Worded Items on Rating Scales. Eval Res Educ. 2006;19:3–20. doi:10.1080/09500790608668322.

24. Krebs R. Anleitung zur Herstellung von MC-Fragen und MC-Prüfungen für die ärztliche Ausbildung. 2004.

25. Piaget J. The psychology of intelligence. Totowa, NJ: Littlefield, Adams; 1960.

26. Kagan J. Motives and development. J Pers Soc Psychol. 1972;22(1):51–66. doi:10.1037/h0032356.

27. Engel S. Children’s Need to Know: Curiosity in Schools. Harv Educ Rev. 2011;81:625–46.

28. Labov W, Labov T. Learning the syntax of questions. In: Recent Advances in the Psychology of Language. Springer US; 1978. p. 1–44. doi:10.1007/978-1-4684-2532-1.

29. Tizard B, Hughes M. Young Children Learning. Cambridge, Massachusetts, USA: Harvard University Press; 1985.

30. Bower GH. Mood and Memory. Am Psychol. 1981;36(2):129–48.

31. McEwen BS. Stress and hippocampal plasticity. Annu Rev Neurosci. 1999;22:105–22. doi:10.1146/annurev.neuro.22.1.105.

32. McEwen BS. Sex, stress and the hippocampus: allostasis, allostatic load and the aging process. Neurobiol Aging. 2002;23:921–39.

33. Salas CE, Radovic D, Yuen KSL, Yeates GN, Castro O, Turnbull OH. “Opening an emotional dimension in me”: changes in emotional reactivity and emotion regulation in a case of executive impairment after left fronto-parietal damage. Bull Menninger Clin. 2014;78:301–34. doi:10.1521/bumc.2014.78.4.301.

34. Medizinische Universität Wien. https://moodle.meduniwien.ac.at/course/view.php?id=260&section=1 (login at https://moodle.meduniwien.ac.at/login/index.php). 2015. Accessed 29 Jan 2016.

Acknowledgment

Prof. Dr. Michel Slama.

Author information

Authors and affiliations

Department for Psychoanalysis and Psychotherapy, Advanced Postgraduate Program for Psychotherapy Research, Medical University of Vienna, Waehringer Guertel 18-20, 1090, Vienna, Austria

Magalie Chéron & Henriette Löffler-Stastka

Research Center for Molecular Medicine of the Austrian Academy of Sciences, Vienna, Austria

Mirlinda Ademi

Department of Anaesthesia, General Intensive Care and Pain Therapy, Medical University Vienna, Vienna, Austria

Felix Kraft

Corresponding author

Correspondence to Henriette Löffler-Stastka.

Additional information

Competing interests

The authors declare that they have no competing interests.

The authors declare that they have no financial competing interests.

The authors declare that they have no non-financial competing interests.

Authors’ contributions

MC carried out the study, performed the statistical analysis and drafted the manuscript. MA performed the statistical analysis and helped to draft the manuscript. FK participated in the design of the study and statistical analysis. HLS conceived of the study, participated in its design and coordination and helped to draft the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver ( http://creativecommons.org/publicdomain/zero/1.0/ ) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Chéron, M., Ademi, M., Kraft, F. et al. Case-based learning and multiple choice questioning methods favored by students. BMC Med Educ 16, 41 (2016). https://doi.org/10.1186/s12909-016-0564-x

Received: 01 August 2015

Accepted: 26 January 2016

Published: 02 February 2016

DOI: https://doi.org/10.1186/s12909-016-0564-x

- Case-based questioning

- Learning process

- Questions’ formulation

- Medical education

BMC Medical Education

ISSN: 1472-6920
