
Journal of Database Management

Latest Issue

Volume 35, Issue 1


Narrativization in Information Systems Development

People see the world and convey their perception of it with narratives. In an information system context, stories are told and collected when the systems are developed. Requirements elicitation is largely dependent on communication between systems ...

A Sample-Aware Database Tuning System With Deep Reinforcement Learning

Based on the relationship between client load and overall system performance, the authors propose a sample-aware deep deterministic policy gradient model. Specifically, they improve sample quality by filtering out sample noise caused by the fluctuations ...

RDF(S) Store in Object-Relational Databases

The Resource Description Framework (RDF) and RDF Schema (RDFS) recommended by World Wide Web Consortium (W3C) provide a flexible model for semantically representing data on the web. With the widespread acceptance of RDF(S) (RDF and RDFS for short), a ...

Handling Imbalanced Data With Weighted Logistic Regression and Propensity Score Matching Methods: The Case of P2P Money Transfers

The adoption of empirical methods for secondary data analysis has witnessed a significant surge in IS research. However, the secondary data is often incomplete, skewed, and imbalanced at best. Consequently, there is a growing recognition of the ...

Optimal Information Acquisition and Sharing Decisions: Joint Reviews on Crowdsourcing Product Design

The acquisition and sharing of reviews have significant ramifications for the selection of crowdsourcing designs before mass production. This article studies the optimal decision of a brand enterprise regarding the acquisition/sharing of crowdsourcing ...

Intrusion Detection System: A Comparative Study of Machine Learning-Based IDS

The use of encrypted data, the diversity of new protocols, and the surge in the number of malicious activities worldwide have posed new challenges for intrusion detection systems (IDS). In this scenario, existing signature-based IDS are not performing ...

An Efficient NoSQL-Based Storage Schema for Large-Scale Time Series Data

In IoT (internet of things), most data from the connected devices change with time and have sampling intervals, which are called time-series data. It is challenging to design a time series storage model that can write massive time-series data in a short ...

Examining the Usefulness of Customer Reviews for Mobile Applications: The Role of Developer Responsiveness

Zhiying Jiang, Vanessa Liu, Miriam Erne

In the context of mobile applications (apps), the role of customers has been transformed from mere passive adopters to active co-creators through contribution of user reviews. However, customers might not always possess the required technical expertise ...



How I do it: A Practical Database Management System to Assist Clinical Research Teams with Data Collecting, Organization, and Reporting

1 Russell H. Morgan Department of Radiology and Radiological Science, Division of Vascular and Interventional Radiology, The Johns Hopkins Hospital, Sheikh Zayed Tower, Ste 7203, 1800 Orleans St, Baltimore, MD, USA 21287

Julius Chapiro, Rüdiger Schernthaner, Rafael Duran, Zhijun Wang, Boris Gorodetski, Jean-François Geschwind

2 U/S Imaging and Interventions (UII), Philips Research North America, 345 Scarborough Road, Briarcliff Manor, New York 10510

Introduction

With the growing number of clinical research studies in the field of interventional oncology, patient data selected for research are becoming more difficult to store and organize effectively. Existing hospital EMR (electronic medical record) systems store patient data in the form of reports and data tables. At our institution, the EMR system forced researchers to use time-consuming methods to search for patients suitable for clinical studies: they had to read through reports and data tables manually to filter patients and gather data. For most studies, spreadsheet programs such as Microsoft Excel ® (Microsoft, Washington, USA) are used as a makeshift database to record and organize patient data for research. Once the spreadsheet is populated, it is filtered manually by the study parameters and then passed to statistical analysis software for further analysis. For statistical analysis, columns containing text are converted into binary values (1 or 0), a format that statistical software accepts. For example, each tumor entity is assigned its own column. Researchers read each patient's histology report and enter a 1 in a tumor entity column if the patient has that tumor and a 0 if not.
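The manual 1/0 coding described above amounts to building one indicator column per tumor entity. The following is a minimal sketch of that step, assuming hypothetical patient records with an `mrn` field and a set of tumor labels already extracted from the histology report; the function and field names are illustrative, not part of the study's system.

```python
def indicator_columns(patients, entities):
    """Return one row per patient with a 1/0 column per tumor entity.

    `patients` is a list of dicts with hypothetical keys "mrn" and "tumors"
    (a set of tumor-entity labels taken from the histology report).
    """
    rows = []
    for patient in patients:
        row = {"mrn": patient["mrn"]}
        for entity in entities:
            # 1 if the report lists this entity, 0 otherwise
            row[entity] = 1 if entity in patient["tumors"] else 0
        rows.append(row)
    return rows


patients = [
    {"mrn": "001", "tumors": {"HCC"}},
    {"mrn": "002", "tumors": {"CRC", "NET"}},
]
table = indicator_columns(patients, ["HCC", "CRC", "NET"])
```

Done by a program, this conversion is repeatable and does not depend on someone re-reading each report for every new column.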

This method of data storage limits both the organization and the quality of the data. Entering and analyzing data without a database carries a higher risk of incorrect data entry, unintended patient exclusion, and duplicate records. Furthermore, data selection and calculation are time-consuming. An alternative could be the clinical research database proposed by Meineke et al. ( 1 ). However, it is too generic for interventional oncology research and would need additional optimization, for example, the ability to calculate variables such as tumor staging systems automatically and to record information about multiple treatment sessions.

The purpose of this study was to improve workflow efficiency by providing a clinical research database management system (DBMS) optimized for interventional oncology clinical research.

Materials and Methods

This was a single-institution prospective study. The study was compliant with the Health Insurance Portability and Accountability Act (HIPAA), and the requirement for Institutional Review Board approval was waived.

Database and Query Interface Design

The presented database management system has two distinct parts, the database server and the client interface, illustrated in Figure 1 . The database runs on software (MySQL, Oracle Corporation, California, USA, administered with phpMyAdmin, The phpMyAdmin Project, California, USA) on a central computer server within the department ( 2 , 3 ). Authorized users were granted access to this password-protected, encrypted server (HIPAA compliant). Multiple users can concurrently add, edit, and query data remotely through a customized graphical user interface (GUI) built in Microsoft Access ® (Microsoft, Washington, USA). Data changes are logged immediately and are visible to other users. The database performs automatic calculations using queries, that is, saved sets of user-defined search criteria. Queries can be saved, rerun, and exported to spreadsheets. They are powerful tools for filtering and sorting datasets, aid data analysis, and increase study productivity ( 4 ). Figure 2 illustrates the query interface and an example request to the database.

This chart shows the general layout of the database server and its clients, including how the database management system performs queries (orange circle) such as statistical analysis. Multiple computers are granted access to the database. The blue rectangles represent the database management system software. Researchers use the database client graphical user interface (GUI) to import data without having to reformat it and to manage their data. Queries are usually run through the GUI to obtain the desired results, which researchers then export to a spreadsheet (green rectangle).

This figure illustrates the query interface. In this example query, the user requests a list of male patients over the age of 40 with hepatocellular carcinoma (HCC) by entering “>40”, “m”, and “HCC” as the search criteria for age, gender, and tumor type, respectively. MRN: medical record number.
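The Figure 2 request corresponds to a filtered SELECT. The sketch below is a minimal illustration only. It uses Python's built-in `sqlite3` in-memory database in place of the study's MySQL server, and the `patients` table and its columns are hypothetical. The criteria are passed as `?` parameters rather than pasted into the SQL string, which keeps user-typed values from being interpreted as SQL.

```python
import sqlite3

# In-memory SQLite stands in for the study's MySQL server (an assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE patients (mrn TEXT PRIMARY KEY, age INTEGER, gender TEXT, tumor TEXT)"
)
conn.executemany(
    "INSERT INTO patients VALUES (?, ?, ?, ?)",
    [
        ("001", 55, "m", "HCC"),
        ("002", 38, "m", "HCC"),
        ("003", 62, "f", "HCC"),
        ("004", 71, "m", "CRC"),
    ],
)


def find_patients(conn, min_age, gender, tumor):
    """Return MRNs with age > min_age and the given gender and tumor type."""
    cur = conn.execute(
        "SELECT mrn FROM patients WHERE age > ? AND gender = ? AND tumor = ? "
        "ORDER BY mrn",
        (min_age, gender, tumor),
    )
    return [row[0] for row in cur.fetchall()]


matches = find_patients(conn, 40, "m", "HCC")  # the ">40", "m", "HCC" criteria
```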

Graphical User Interface Design and Utility

In our research environment, the database GUI was created to facilitate patient data entry. It consists of custom, user-friendly forms with textboxes and labels for demographic data, treatment information (e.g. conventional transarterial chemoembolization (TACE)), tumor types, dates and types of radiological exams, etc. The GUI is used to view patient data and to add or edit data ( Figure 3 ). The interface is not limited to one form: it can have multiple forms, shown as tabs, to group related medical data. Figure 4 shows an example of multiple tabs for groups of related data.

This form illustrates how users enter data into the database. The form is divided into three parts:

(a) Patient Form – Data consist of basic patient information. Patient Identification (PID) is a unique number generated by the database to identify each patient. LAST MODIFIED is a timestamp of when the data were most recently updated or added. MODIFIED BY is a text box that records who updated or added the data. (a1) shows the total number of patients in the database.

(b) Tumor – Data consist of a patient’s primary and secondary liver tumors. The dropdown allows users to select a tumor type or add a new one (e.g. metastatic disease). (b1) shows how many tumor types the patient has in the liver.

(c) Embolization Procedures – Data consist of intra-arterial therapy (IAT) sessions. (c1) shows how many IAT sessions a patient has undergone.
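The three parts of this form suggest a one-to-many layout: one patient row with many tumor rows and many embolization rows. The schema below is a hypothetical sketch of that layout, with SQLite standing in for MySQL. The column names are assumptions modeled on the form labels, and the study's actual tables are not described. The `UNIQUE` constraint on the medical record number is one way a shared database can reject the duplicate patients discussed earlier.

```python
import sqlite3

# Hypothetical schema mirroring the form: one patient, many tumors and sessions.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when enabled
conn.executescript(
    """
    CREATE TABLE patient (
        pid INTEGER PRIMARY KEY AUTOINCREMENT,          -- database-generated PID
        mrn TEXT NOT NULL UNIQUE,                       -- rejects duplicate patients
        last_modified TEXT DEFAULT CURRENT_TIMESTAMP,   -- LAST MODIFIED
        modified_by TEXT                                -- MODIFIED BY
    );
    CREATE TABLE tumor (
        tumor_id INTEGER PRIMARY KEY,
        pid INTEGER NOT NULL REFERENCES patient(pid),
        tumor_type TEXT NOT NULL
    );
    CREATE TABLE embolization (
        embo_id INTEGER PRIMARY KEY,
        pid INTEGER NOT NULL REFERENCES patient(pid),
        embo_date TEXT NOT NULL,
        therapy TEXT NOT NULL
    );
    """
)

pid = conn.execute(
    "INSERT INTO patient (mrn, modified_by) VALUES (?, ?)", ("001", "jdoe")
).lastrowid
conn.executemany(
    "INSERT INTO tumor (pid, tumor_type) VALUES (?, ?)",
    [(pid, "HCC"), (pid, "metastatic disease")],
)
conn.executemany(
    "INSERT INTO embolization (pid, embo_date, therapy) VALUES (?, ?, ?)",
    [(pid, "2006-03-01", "cTACE"), (pid, "2006-05-10", "cTACE")],
)

# (a1) total patients, (b1) tumor types for this patient, (c1) IAT sessions
total_patients = conn.execute("SELECT COUNT(*) FROM patient").fetchone()[0]
tumor_types = conn.execute(
    "SELECT COUNT(DISTINCT tumor_type) FROM tumor WHERE pid = ?", (pid,)
).fetchone()[0]
iat_sessions = conn.execute(
    "SELECT COUNT(*) FROM embolization WHERE pid = ?", (pid,)
).fetchone()[0]
```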

This figure illustrates the tabbed form, in which each group of related data is shown as a separate tab to assist navigation. The patient identification information and comments stay on screen while the user moves between tabs, so the user always knows which patient is being viewed.

Automatic Calculations

Automatic calculations can be run between values such as dates. For example, the database can calculate the time between baseline imaging, follow-up imaging, treatment dates, pre- and post-treatment dates, date of diagnosis, and the patient’s date of death in relation to a particular treatment or event (e.g. randomization), which is essential for survival studies. Using these queries, the database can also calculate the median overall survival automatically. The database also automatically calculates clinical scores such as the Child-Pugh score and the Barcelona Clinic Liver Cancer (BCLC) stage, as shown in Figure 5 ( 5 ). For our purposes, the Child-Pugh score and BCLC stage were calculated from baseline data before a patient’s first embolization, as is typical for staging. The calculators shown can be revised as needed. Once a patient's blood test results are available, queries are run to produce a list of all patients with their Child-Pugh scores, which researchers can then retrieve quickly.
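The text does not say how the database computes median overall survival. A common approach is the Kaplan-Meier estimator, which also handles patients who are still alive at their last contact (censored). The sketch below assumes that approach. It uses the convention that the median is the first time at which estimated survival falls to 0.5 or below. Both function names are hypothetical.

```python
from datetime import date
from fractions import Fraction


def days_between(start, end):
    """Days from one event (e.g. first embolization) to another (e.g. death)."""
    return (end - start).days


def km_median(durations, observed):
    """Median survival from a Kaplan-Meier estimate.

    durations: follow-up time per patient (e.g. days from first embolization)
    observed:  True if death was observed, False if censored (alive at last contact)
    Returns the first time at which estimated survival falls to 0.5 or below,
    or None if the median is not reached.
    """
    data = sorted(zip(durations, observed))
    at_risk = len(data)
    survival = Fraction(1)  # exact arithmetic avoids rounding at the 0.5 boundary
    i = 0
    while i < len(data):
        t = data[i][0]
        deaths = removed = 0
        # group all patients whose follow-up ends at time t
        while i < len(data) and data[i][0] == t:
            deaths += 1 if data[i][1] else 0
            removed += 1
            i += 1
        if deaths:
            survival *= 1 - Fraction(deaths, at_risk)
            if survival <= Fraction(1, 2):
                return t
        at_risk -= removed
    return None


median_os = km_median([100, 150, 200, 300], [True, False, True, True])  # 200
```

Taking the plain median of the observed death dates would ignore censored patients and bias the estimate, which is why the censoring flag is carried alongside each duration.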

This form shows a patient’s Child-Pugh score and Barcelona Clinic Liver Cancer (BCLC) stage. They are calculated automatically when the relevant patient data are provided. The “Calculate” buttons refresh the form if any patient data value changes.

PT/INR: Prothrombin Time/International Normalized Ratio; PS: Performance Status.
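A Child-Pugh calculator like the one in Figure 5 can be sketched as a lookup over five parameters. This sketch assumes the widely published thresholds: bilirubin in mg/dL, albumin in g/dL, and INR rather than prothrombin time in seconds. The figure lists PT/INR, but the study's exact cut-offs and input encodings are not given, so the category strings below are hypothetical.

```python
def child_pugh(bilirubin_mg_dl, albumin_g_dl, inr, ascites, encephalopathy):
    """Return (score, class) using commonly cited Child-Pugh thresholds.

    ascites: "none", "mild", or "moderate-severe"
    encephalopathy: "none", "grade 1-2", or "grade 3-4"
    """
    points = 0
    # Total bilirubin (mg/dL): <2 -> 1, 2-3 -> 2, >3 -> 3
    points += 1 if bilirubin_mg_dl < 2 else 2 if bilirubin_mg_dl <= 3 else 3
    # Serum albumin (g/dL): >3.5 -> 1, 2.8-3.5 -> 2, <2.8 -> 3
    points += 1 if albumin_g_dl > 3.5 else 2 if albumin_g_dl >= 2.8 else 3
    # INR: <1.7 -> 1, 1.7-2.3 -> 2, >2.3 -> 3
    points += 1 if inr < 1.7 else 2 if inr <= 2.3 else 3
    points += {"none": 1, "mild": 2, "moderate-severe": 3}[ascites]
    points += {"none": 1, "grade 1-2": 2, "grade 3-4": 3}[encephalopathy]
    # Class A: 5-6 points, B: 7-9, C: 10-15
    cls = "A" if points <= 6 else "B" if points <= 9 else "C"
    return points, cls
```

Keeping the thresholds in one function mirrors the paper's note that the calculators can be revised as needed. Changing a cut-off then updates every patient on the next query run.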

Statistical Output

Another powerful feature of the database is its ability to provide first-tier statistical information. Using the GUI, the user defines search criteria and runs queries to obtain immediate statistics for a particular set of parameters. With this feature, the database can quickly output an accurate summary of patient data, for example, how many patients have colorectal carcinoma and underwent conventional TACE.
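A group summary of this kind is typically a `GROUP BY` aggregate. The sketch below is illustrative only. It uses SQLite in place of MySQL, and the flat `treatment` table and its values (including `DEB-TACE`) are hypothetical. `COUNT(DISTINCT pid)` counts patients rather than sessions, so a patient treated twice is counted once.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE treatment (pid INTEGER, tumor_type TEXT, therapy TEXT)")
conn.executemany(
    "INSERT INTO treatment VALUES (?, ?, ?)",
    [
        (1, "CRC", "cTACE"),
        (2, "CRC", "cTACE"),
        (2, "CRC", "cTACE"),  # second session of patient 2
        (3, "HCC", "cTACE"),
        (4, "CRC", "DEB-TACE"),
    ],
)

# Number of distinct patients per tumor type and therapy
summary = conn.execute(
    """
    SELECT tumor_type, therapy, COUNT(DISTINCT pid) AS n_patients
    FROM treatment
    GROUP BY tumor_type, therapy
    """
).fetchall()
counts = {(tumor, therapy): n for tumor, therapy, n in summary}
```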

Questionnaire Assessment

A questionnaire (15 questions) was designed and distributed to 21 board-certified interventional radiologists at our academic hospital who conduct clinical research, including Phase I, II, and III clinical trials and retrospective studies. The questionnaire assessed how data are handled in retrospective studies and how likely respondents were to use the database. The questionnaire is shown in Table 1 . Its purpose was 1) to identify where researchers were having problems with Excel and data organization, such as wasted effort on duplicate patients and unintentional failure to include eligible patients, and 2) to gauge how receptive they would be to a database system. The database system was built using this information, with weekly progress updates to the clinical research team to ensure that the identified deficiencies of Excel were being resolved.

| Question |
|---|
| I searched and filtered data manually |
| I inputted formulas and Excel functions to calculate scores, response rates, or statistics in my Excel spreadsheet |
| I summarized my Excel data in a report |
| I converted non-binary data into binary data (0/1) by defining a cut-off point to differentiate |
| I have done statistical analysis myself |
| I unknowingly produced duplicate data that I later found out was already collected by another colleague |
| From the beginning of data collection to finishing analysis, about what percentage of the total time spent for a single retrospective study did you spend on: |
| Querying/filtering/categorizing data? |
| Calculating data? |
| If given the opportunity, how likely will you use software that: |
| Produces group summaries with minimal effort? |
| Calculates clinical staging and score systems automatically? |
| Allows multiple users to add and edit data into the same database so that redundant collection of the same patients by different colleagues can be avoided? |
| Allows users to track data modifications? |
| Stores data in a centralized location with remote access? |

Questionnaire Results

All 21 interventional radiologists completed the questionnaire. Self-evaluation results are shown in Figure 6 . In data collection and analysis, over 50% (11/21) spent most of their time searching, filtering, and/or categorizing data, whereas about 50% (10/21) spent little to no time calculating data. Of the respondents, 67% (14/21) realized at some point that they had erroneously included patients who should have been excluded and had erroneously omitted patients who should have been included. Over 85% (18/21) were very receptive to software that produces group summaries (such as totals for each tumor type) with minimal effort, calculates clinical staging and score systems automatically, and allows multiple users to add and edit data remotely on a central server with data modification logs.

The self-evaluation results are based on the questionnaire in Table 1 .

Query Interface Output

In Figure 7 , the query for male patients over 40 years old with HCC is run. Figure 8 shows the result of a query for patients who underwent TACE and have Child-Pugh class A as calculated by the database. Figure 9 illustrates how the interval between two events (e.g. the time elapsed between two embolization procedures) can be calculated automatically as a query. The output of these queries is a structured, concise list that can be exported for further study-specific analysis.

This figure illustrates the output of a query for male patients with hepatocellular carcinoma (HCC). The interface outputs a list of all patients matching the search criteria.

This is the output of a query for patients who underwent TACE in 2006 (P_PROC_DATE column) with Child-Pugh class A, labeled here as “Classification”. The automatically calculated Child-Pugh class can also be used for querying.

The database automatically calculates the number of days between TACE sessions for each patient as a query (red circle). The date of the current treatment (“EMBODate”) is subtracted from the date of the next treatment (“Next_EMBO”). Empty fields indicate that the patient has undergone only one treatment or that the session is the most recent one. Because the query is saved, double-clicking it (red circle) reruns the calculation for all patients in the database.
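The EMBODate/Next_EMBO calculation pairs each session with the same patient's next session. Here is a minimal sketch in plain Python, assuming sessions arrive as hypothetical `(pid, date)` tuples. The study implemented this as a saved Access/MySQL query, which is not reproduced here. Each patient's last session has no successor, so its interval is `None`, which corresponds to the empty fields in Figure 9.

```python
from datetime import date
from itertools import groupby


def days_to_next_session(sessions):
    """For each (pid, embo_date) return (pid, embo_date, next_embo, days).

    `days` is None for a patient's most recent session (no next session).
    """
    out = []
    ordered = sorted(sessions)  # by patient, then by date
    for pid, group in groupby(ordered, key=lambda s: s[0]):
        dates = [d for _, d in group]
        for current, nxt in zip(dates, dates[1:] + [None]):
            days = (nxt - current).days if nxt is not None else None
            out.append((pid, current, nxt, days))
    return out


sessions = [(1, date(2006, 5, 10)), (1, date(2006, 3, 1)), (2, date(2006, 4, 2))]
intervals = days_to_next_session(sessions)
```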

The main finding of this study is that there is a need for a much more time-efficient and accurate way to store, retrieve, and analyze patient data for clinical research studies. The database management system presented here meets this need through automatic calculations, interface forms, and queries. With a personalized interface, data access, entry, organization, queries, calculations, and export are performed seamlessly to support data and statistical analysis in clinical research. Furthermore, the database is a unified repository of clinical research information and a resource shared among the clinical research team. This creates a multi-user environment in which the data can be accessed simultaneously and each individual's additions are available to the entire team.

With the presented database in use, the effort for clinical studies can focus on statistical analysis and data interpretation rather than on preparing data for analysis ( 6 ). All retrospective data can be merged into this database, creating a centrally maintained, shared resource. Our clinical research team now has access to a customized database of patients with a large number of clinical parameters, allowing a vast combination of queries to form or support study hypotheses. The customized GUI is invaluable for anyone collecting data, as it facilitates data entry and minimizes data entry errors.

Previously, converting spreadsheet data to binary/numeric format was time-consuming and impractical. The database presented in this study removes the need to search, organize, and calculate data manually. Calculations, especially complex ones such as clinical staging scores, can now be performed automatically. Before the database system was implemented, a typical Excel spreadsheet for clinical studies at our institution had over 100 columns, including patient demographics, repeated treatment dates and types (new columns for each TACE session), and repeated pre-/post-treatment imaging dates and types (new columns for each scan, across imaging modalities). Tracking medical data is often difficult with so many columns. Compared with such a spreadsheet, browsing and adding prospective data through the database interface is more organized and practical, with ten defined tabs for data groups ranging from a patient’s basic information to treatments to survival status. In addition, the database interface lists all repeated treatments and imaging studies per patient as rows instead of columns, which makes it easier to compare a patient's treatments. By combining the database’s statistical summaries with automatic calculation queries, reports can be generated for virtually any parameter. This is useful not only in radiology but also for other studies and hospital information systems.
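Moving repeated treatments from columns to rows is a wide-to-long reshaping step. This sketch assumes a hypothetical spreadsheet row with columns named `TACE1_DATE`, `TACE2_DATE`, and so on. Real column names at the institution are not given. An empty cell is treated as "no further session".

```python
import re


def wide_to_long(row):
    """Turn columns like TACE1_DATE, TACE2_DATE ... into one record per session.

    Column names are hypothetical; empty cells (None or "") are skipped.
    """
    pattern = re.compile(r"^TACE(\d+)_DATE$")
    sessions = []
    for column, value in row.items():
        match = pattern.match(column)
        if match and value not in (None, ""):
            sessions.append(
                {"mrn": row["MRN"], "session": int(match.group(1)), "date": value}
            )
    # numeric sort keeps TACE10 after TACE9
    return sorted(sessions, key=lambda s: s["session"])


row = {
    "MRN": "001",
    "AGE": 55,
    "TACE1_DATE": "2006-03-01",
    "TACE2_DATE": "2006-05-10",
    "TACE3_DATE": "",
}
long_rows = wide_to_long(row)
```

In long form, a fourth TACE session is a new row rather than a new column, so the table structure does not change as patients accumulate treatments.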

The database management system in this study has some limitations. A database system may not be suitable for every research team, and several factors determine whether one is needed. A previous report on data collection provided examples and guidelines for deciding whether implementing a database is feasible in a given environment ( 7 ). Depending on the environment and context, a database may not be deployable right away because it needs additional testing. Furthermore, the database needs a dedicated server to host it along with the data. Training is required to use the database interface: someone who specializes in databases, such as a database administrator, needs to teach researchers and other users how to use the database and query interfaces to filter patients and obtain statistics. This is especially necessary for more advanced queries and for developing additional GUIs. Note that Microsoft Access is used in this work as a “front-end” interface that communicates with the SQL database to query (filter) data and to enter or append data. Other software such as FileMaker Pro (FileMaker, Santa Clara, CA) and REDCap would serve a similar function ( 8 ). The SQL database is needed to allow multiple users to access the stored data at the same time, to provide a higher level of security, stability, and performance, and to serve as a unified repository of clinical research information that can be shared by the research team ( 9 , 10 ). In addition, the database administrator must not only build the database on a server with input from clinicians and other end users but also maintain it ( 11 , 12 ). Typical maintenance includes routine backups, changing the database structure and interface for new data types, and updating database and client software.
The server can be hosted on a local PC or online; in either case, all parties can access it locally on the same network or remotely. Furthermore, databases can be configured to communicate with other databases. Although the initial setup effort is substantial and the learning curve is steep, the database allows fluid, organized data entry, queries that include calculations, and data storage with simultaneous multi-user access. Given the variety of research teams and departments, ideally each suitable team should have its own database. This applies not only to interventional oncology but to any specific area of research, for example, studies of patients undergoing ablation or percutaneous abscess drainage (PAD). These databases can be connected for interdisciplinary research to provide a broader scope of data and facilitate data search ( 13 ).

The current database implementation and interface allow a much faster and more detailed retrospective analysis of patient cohorts. In addition, they facilitate data management and a standardized information output for ongoing prospective clinical trials. The database management system with an interface is a work-efficient and robust tool that provides a significant advantage over manual retrieval of patient records by filtering data and assisting statistical analysis in a study-relevant fashion.

Acknowledgments

Funding and support have been provided by NIH/NCI R01 CA160771, P30 CA006973, and Philips Research North America, Briarcliff Manor, NY, USA.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.


Advances on Data Management and Information Systems

- Published: 02 March 2022

- Volume 24 , pages 1–10, ( 2022 )


- Jérôme Darmont 1 ,

- Boris Novikov 2 ,

- Robert Wrembel 3 &

- Ladjel Bellatreche 4


1 Introduction

The research and technological area of data management encompasses various concepts, techniques, algorithms and technologies, including data modeling, data integration and ingestion, transactional data management, query languages, query optimization, physical data storage, data structures, analytical techniques (including On-Line Analytical Processing – OLAP), as well as service creation and orchestration (Garcia-Molina et al., 2009 ). Data management technologies are core components of every information system, either centralized or distributed, deployed in an on-premises hardware architecture or in a cloud ecosystem. Data management technologies have been used in mature commercial products for decades. They were originally developed for managing structured data (mainly expressed in the relational data model).

Yet the ubiquity of big data (Azzini et al., 2021 ) requires the development of new data management techniques suited to the variety of data formats (from structured, through semi-structured, to unstructured), the overwhelming data volumes, and the velocity of big data generation. These new techniques draw upon the concepts applied to managing relational data.

One of the most frequently used data formats for big data is based on graphs, which are a natural way of representing relationships between entities, e.g., knowledge, social connections and components of a complex system. Such data need to be not only stored efficiently but also analyzed efficiently. Therefore, several OLAP-like analysis approaches for graph data have recently been proposed, e.g., Chen et al. ( 2020 ), Ghrab et al. ( 2018 ), Ghrab et al. ( 2021 ), and Schuetz et al. ( 2021 ). Combining graph and OLAP technologies thus offers ways of analyzing graphs in a manner already well accepted by the industry (Richardson et al., 2021 ).
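To make "OLAP-like analysis of graphs" concrete, here is one simple operation in that spirit: a roll-up that groups vertices by a dimension attribute and aggregates both vertices and edges per group. This is a hypothetical minimal sketch, not the method of any of the cited papers. The attribute (a city) and the data are illustrative.

```python
from collections import Counter


def roll_up(vertex_attr, edges):
    """Aggregate a graph by a vertex attribute (a graph OLAP-style roll-up).

    vertex_attr: dict mapping vertex -> attribute value (e.g. a person's city)
    edges: iterable of (u, v) pairs
    Returns (vertex count per group, edge count per pair of groups).
    """
    group_sizes = Counter(vertex_attr.values())
    group_edges = Counter((vertex_attr[u], vertex_attr[v]) for u, v in edges)
    return group_sizes, group_edges


people = {"ann": "Lyon", "bob": "Lyon", "eve": "Oslo", "ivan": "Oslo", "li": "Rome"}
knows = [("ann", "bob"), ("ann", "eve"), ("bob", "ivan"), ("eve", "li")]
sizes, links = roll_up(people, knows)
```

The result is itself a small graph whose vertices are cities and whose weighted edges count the underlying links, the graph analogue of summarizing a fact table along one dimension.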

The complexity of ecosystems for managing big data makes orchestrating their components and optimizing their performance challenging, as each system has too many parameters for a human administrator to tune manually. Thus, machine learning techniques are increasingly applied to performance optimization, e.g., Hernández et al. ( 2018 ) and Witt et al. ( 2019 ). Conversely, data management techniques are used to solve challenges in machine learning, such as building end-to-end data processing pipelines (Romero and Wrembel, 2020 ).

In this editorial to the special section of Information Systems Frontiers, we outline research problems in graph processing, OLAP and machine learning. These problems are addressed by the papers in this special section.

2 Selected Research Problems in Data Management and Information Systems

2.1 Graph Processing

Graph processing algorithms have attracted researchers' attention since the 1950s. Several knowledge representation techniques studied in the 1970s (such as semantic networks) use graph structures, including applications to rule-based systems (Griffith, 1982 ), data structures for efficient processing (Moldovan, 1984 ), and several other aspects.

The concepts of semantic networks served as a base for the Semantic Web and evolved into knowledge graphs, also known as knowledge bases. Processing of large distributed RDF knowledge bases with the SPARQL language is addressed in Peng et al. (2016).

Graph-based models became a natural choice for representing semi-structured data (McHugh et al., 1997). Graph representations proved useful for modeling hypertexts, including the World Wide Web (Meusel et al., 2014): documents (e.g., Web pages) are mapped to vertices, while directed edges represent links. Graph representations of the WWW enabled several measures (such as PageRank and SimRank) for in-depth analysis of its structure. Sources of large graphs also include social networks, bioinformatics, road networks, and other application domains.
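As an illustration of how such a link-based measure is computed over a Web graph, the following minimal Python sketch runs PageRank by power iteration on a toy graph in which pages are vertices and links are directed edges. The graph, the damping factor of 0.85, the fixed number of iterations, and the function name `pagerank` are illustrative assumptions, not taken from the cited works.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Power-iteration PageRank over a dict mapping each page to its out-links."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(iterations):
        # Every page receives the "random jump" share (1 - d) / n.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its damped rank evenly among its out-links.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no out-links): spread its rank uniformly.
                for t in pages:
                    new_rank[t] += damping * rank[page] / n
        rank = new_rank
    return rank

# Hypothetical Web graph: page -> pages it links to.
web = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(page, round(score, 3))
```

Page C, which receives links from all other pages, obtains the highest score, whereas D, which no page links to, keeps only the random-jump share.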

The need to store and process large graphs drives a growing interest in graph databases. An overview of several aspects of graph databases can be found in Deutsch and Papakonstantinou (2018). Typically, such databases store graph nodes (vertices) and edges, labeled with sets of attributes. A widespread opinion holds that graph databases provide more powerful modeling features than the relational model used in traditional relational databases. This is doubtful, as a relational database schema (represented, for example, as an ER model) is also a graph (Pokorný, 2016). Rather, the actual advantage of graph databases is that expensive and time-consuming modeling can be postponed to later phases of the information system lifecycle, providing more options for rapid prototyping and similar application development methodologies (Brdjanin et al., 2018).

The need for highly expressive tools for specifying graph processing triggered a number of efforts in the design of declarative query languages. A step toward the standardization of graph query languages (Angles et al., 2018) focuses on balancing high expressiveness and computational performance, avoiding constructs that may result in unacceptable computational complexity. The GSQL graph query language (Deutsch et al., 2020) supports the specification of complex analytical queries over graphs, including pattern matching and aggregations. A comparison of different graph processing techniques available in the Neo4j graph database management system can be found in Holzschuher and Peinl (2013).

Several similarities can be found between relational and graph declarative query languages: as soon as sets of labeled nodes or edges are produced as intermediate results, the remaining processing is typically expressed in terms of relational operations. The most significant differences between graph and relational query languages are the following.

Graph traversal (implicit and rarely used in relational languages) requires the intensive use of recursion and an efficient implementation in graph databases.

Graph query languages provide support for computationally complex processing, such as weighted shortest path search, potentially with additional constraints.

Locality of data placement is essential for high performance of relational systems. Data placement in graph databases is much more complex and often results in poor performance when the size of the database exceeds the available main memory of a single server.
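The role of recursion in graph traversal can be made concrete with a toy reachability query evaluated twice: once relationally, with a recursive common table expression in SQLite (used here only as a self-contained stand-in for recursive SQL in general), and once as an explicit breadth-first traversal over an adjacency list. The edge list and the function names `reachable_sql` and `reachable_bfs` are hypothetical.

```python
import sqlite3
from collections import deque

# Hypothetical directed graph containing a cycle a -> b -> c -> a.
edges = [("a", "b"), ("b", "c"), ("c", "a"), ("c", "d"), ("e", "f")]

def reachable_sql(edge_list, start):
    """Reachability expressed relationally: a recursive CTE re-joins the edge table."""
    con = sqlite3.connect(":memory:")
    con.execute("CREATE TABLE edge(src TEXT, dst TEXT)")
    con.executemany("INSERT INTO edge VALUES (?, ?)", edge_list)
    # UNION (rather than UNION ALL) discards duplicate rows, so the cycle terminates.
    rows = con.execute(
        """
        WITH RECURSIVE reach(node) AS (
            SELECT dst FROM edge WHERE src = ?
            UNION
            SELECT e.dst FROM edge e JOIN reach r ON e.src = r.node
        )
        SELECT node FROM reach
        """,
        (start,),
    ).fetchall()
    con.close()
    return {r[0] for r in rows}

def reachable_bfs(edge_list, start):
    """The same query as an explicit breadth-first traversal over an adjacency list."""
    adjacency = {}
    for src, dst in edge_list:
        adjacency.setdefault(src, []).append(dst)
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adjacency.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

print(sorted(reachable_sql(edges, "a")))  # ['a', 'b', 'c', 'd']
print(sorted(reachable_bfs(edges, "a")))  # ['a', 'b', 'c', 'd']
```

Both formulations return the same set; the relational version, however, repeatedly joins intermediate results with the whole edge table, while the traversal follows adjacency lists directly, which is one reason why efficient recursion matters in graph databases.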

The items listed above are inter-related: the performance of graph processing depends on the data locality needed for efficient graph traversal. However, the traversal pattern depends on the problem being solved. A generic approach, namely graph partitioning, relies on certain graph properties to optimize the placement of graph nodes and edges.
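Why partitioning affects locality can be seen from the edge cut, i.e., the number of edges whose endpoints are placed on different servers, since every such edge forces a traversal to leave local storage. The sketch below is only an illustration under that simplifying assumption: it compares two hand-made placements of a toy graph and does not implement any of the partitioning algorithms discussed in the literature.

```python
def edge_cut(edges, partition):
    """Count edges whose endpoints are assigned to different partitions."""
    return sum(1 for u, v in edges if partition[u] != partition[v])

# Hypothetical graph: two triangles joined by a single bridge edge (3, 4).
edges = [(1, 2), (2, 3), (1, 3), (3, 4), (4, 5), (5, 6), (4, 6)]

# Placement that ignores the graph structure: alternate nodes between two servers.
round_robin = {n: n % 2 for n in range(1, 7)}
# Structure-aware placement: one triangle per server.
by_community = {1: 0, 2: 0, 3: 0, 4: 1, 5: 1, 6: 1}

print(edge_cut(edges, round_robin))   # 5
print(edge_cut(edges, by_community))  # 1
```

Both placements balance the nodes evenly, yet only the structure-aware one keeps almost every traversal step on a single server.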

2.2 On-Line Analytical Processing

The term “On-Line Analytical Processing” (OLAP) was coined by Edgar F. Codd in 1993 (Codd et al., 1993). OLAP is defined in contrast to operational database systems that run On-Line Transactional Processing (OLTP). In OLTP, data representing the current state of information may be frequently modified and are queried through relatively simple queries. OLAP data are typically sourced from one or several OLTP databases, then consolidated and historicized for decision-support purposes. They are seldom modified and are queried by complex analytical queries that run over large data volumes.

Conceptually, OLAP rests on a metaphor that is easy for business users to grasp: the (hyper)cube. Facts, which consist of numerical Key Performance Indicators (KPIs), e.g., product sales, are the subjects of analysis. They are viewed as points in a multidimensional space whose dimensions are analysis axes, e.g., time, store, salesperson, etc. Dimensions may also have hierarchies, e.g., \(store \rightarrow city \rightarrow state\). Thus, dimensions represent the coordinates of facts in the multidimensional space.
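The cube metaphor can be sketched in a few lines: facts are points with dimension coordinates and a KPI, and rolling up along the \(store \rightarrow city \rightarrow state\) hierarchy replaces a coordinate with a coarser member and sums the KPI. The store, city, and state members and the function name `roll_up` below are hypothetical.

```python
from collections import defaultdict

# Hypothetical members of the store -> city -> state hierarchy.
store_to_city = {"S1": "Seattle", "S2": "Tacoma", "S3": "Portland"}
city_to_state = {"Seattle": "WA", "Tacoma": "WA", "Portland": "OR"}

# Each fact is a point with coordinates (quarter, store) and one KPI (sales).
facts = [
    ("2021-Q1", "S1", 100.0),
    ("2021-Q1", "S2", 50.0),
    ("2021-Q1", "S3", 70.0),
    ("2021-Q2", "S1", 30.0),
]

def roll_up(points, to_level):
    """Replace the store coordinate by a coarser member and sum the KPI per cell."""
    totals = defaultdict(float)
    for quarter, store, sales in points:
        totals[(quarter, to_level(store))] += sales
    return dict(totals)

by_city = roll_up(facts, lambda s: store_to_city[s])
by_state = roll_up(facts, lambda s: city_to_state[store_to_city[s]])
print(by_state)  # {('2021-Q1', 'WA'): 150.0, ('2021-Q1', 'OR'): 70.0, ('2021-Q2', 'WA'): 30.0}
```

Drilling down is the inverse navigation, from state totals back to city or store cells.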

In the 1990s, OLAP research mainly focused on designing efficient logical and physical models, concisely surveyed by Vassiliadis and Sellis (1999). Relational OLAP (ROLAP) relies on storing data in time-tested relational Database Management Systems (DBMSs), complemented with the new OLAP-specific operators and queries available in SQL:1999. ROLAP is cheap and easy to implement, can handle large data volumes, and makes schema evolution relatively easy. However, ROLAP induces numerous costly joins that hinder query performance, and its query results are not presented in a form suited to end users, i.e., business users, and thus must be reformatted.
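The join cost of ROLAP is visible in a minimal star schema: a fact table referencing dimension tables, queried with one join per dimension. The sketch below uses SQLite through Python's `sqlite3` module purely for self-containment; the table and column names are hypothetical, and a plain GROUP BY is used because SQLite does not offer the SQL:1999 ROLLUP/CUBE extensions.

```python
import sqlite3

con = sqlite3.connect(":memory:")
con.executescript(
    """
    -- Dimension tables (store hierarchy store -> city -> state kept in one table).
    CREATE TABLE dim_store(store_id INTEGER PRIMARY KEY, city TEXT, state TEXT);
    CREATE TABLE dim_time(time_id INTEGER PRIMARY KEY, quarter TEXT);
    -- Fact table: foreign keys to the dimensions plus a numerical measure.
    CREATE TABLE fact_sales(store_id INTEGER, time_id INTEGER, amount REAL);

    INSERT INTO dim_store VALUES (1, 'Seattle', 'WA'), (2, 'Tacoma', 'WA'), (3, 'Portland', 'OR');
    INSERT INTO dim_time VALUES (1, '2021-Q1'), (2, '2021-Q2');
    INSERT INTO fact_sales VALUES (1, 1, 100), (2, 1, 50), (3, 1, 70), (1, 2, 30);
    """
)

# An OLAP-style aggregation in ROLAP: every dimension used in the query costs a join.
rows = con.execute(
    """
    SELECT s.state, t.quarter, SUM(f.amount) AS total
    FROM fact_sales f
    JOIN dim_store s ON f.store_id = s.store_id
    JOIN dim_time t ON f.time_id = t.time_id
    GROUP BY s.state, t.quarter
    ORDER BY s.state, t.quarter
    """
).fetchall()
for row in rows:
    print(row)
```

The result is a flat list of tuples rather than a cross-tabulated cube, which illustrates why ROLAP output usually has to be reformatted for business users.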

In contrast, Multidimensional OLAP (MOLAP) sticks to the cube metaphor. Hypercubes are natively stored in multidimensional arrays, allowing quick aggregate computations. However, MOLAP systems and languages (e.g., MDX) turned out to be mostly proprietary and difficult to implement. Moreover, data volume is limited by RAM size, and a cube can be quite sparse, wasting memory. Finally, refreshing is limited, requiring full and costly periodic reconstructions.
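Both the speed and the sparsity problem of MOLAP follow from its storage model, which the sketch below approximates with a flat Python list addressed in row-major order: a cell is located by arithmetic on member indexes (no join is needed), but every combination of members occupies memory whether or not a fact exists for it. Dimension members, sales, and the helper `offset` are hypothetical.

```python
from itertools import product

# Hypothetical dimension members; each member is mapped to an array index.
stores = ["S1", "S2", "S3"]
quarters = ["Q1", "Q2", "Q3", "Q4"]
products = ["P1", "P2"]
index = {
    "store": {m: i for i, m in enumerate(stores)},
    "quarter": {m: i for i, m in enumerate(quarters)},
    "product": {m: i for i, m in enumerate(products)},
}

# Dense 3-D array stored as a flat list in row-major order.
shape = (len(stores), len(quarters), len(products))
cube = [0.0] * (shape[0] * shape[1] * shape[2])

def offset(s, q, p):
    """Row-major position of cell (s, q, p): direct addressing instead of joins."""
    return (index["store"][s] * shape[1] + index["quarter"][q]) * shape[2] + index["product"][p]

sales = [("S1", "Q1", "P1", 100.0), ("S2", "Q1", "P2", 50.0), ("S3", "Q4", "P1", 70.0)]
for s, q, p, amount in sales:
    cube[offset(s, q, p)] += amount

non_empty = sum(1 for v in cube if v != 0.0)
print(f"{non_empty} of {len(cube)} cells used; sparsity = {1 - non_empty / len(cube):.2%}")
# -> 3 of 24 cells used; sparsity = 87.50%

# Aggregating a slice (all stores and products in Q1) is a scan over computed positions.
q1_total = sum(cube[offset(s, "Q1", p)] for s, p in product(stores, products))
print(q1_total)  # 150.0
```

With realistic dimension cardinalities, the number of allocated cells grows multiplicatively while the number of facts usually does not, hence the memory waste mentioned above.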

Subsequently, Hybrid OLAP (HOLAP) was proposed as the best of both worlds (Salka, 1998), storing atomic data in a relational DBMS and aggregated data in MOLAP cubes, thus achieving a good cost/performance tradeoff on large data volumes. However, HOLAP is difficult to implement and is neither as fast as MOLAP nor as scalable as ROLAP. Later on, in 2014, Gartner introduced Hybrid Transaction/Analytical Processing (HTAP; see footnote 1), where an in-memory DBMS processes OLTP and OLAP workloads simultaneously. This makes transactional data quickly available for analytics and enables fast, distributed query computation while avoiding data redundancy. However, it requires a complex and drastic change in decision-support architectures.

After the pioneering work on OLAP, research continued along many lines for more than fifteen years, which can be classified into two trends. In the first trend, OLAP is adapted to particular data formats. One of the most prominent of such adaptations is probably Spatial OLAP (SOLAP; Han, 2017), where OLAP is applied to spatial (and even spatio-temporal) data, allowing, for example, zooming in and out of spatial representations such as maps (i.e., drilling down and rolling up, in terms of OLAP operations). Another well-researched adaptation was XML-OLAP (also called XOLAP), which enables OLAP on semi-structured data. Related approaches are surveyed in Mahboubi et al. (2009). Other examples include OLAP on trajectory data (Marketos and Theodoridis, 2010) and mobile OLAP (Maniatis, 2004).

In the second trend, OLAP is hybridized with other techniques for specific purposes. Early on, OLAP was associated with data mining: OLAP provides data navigation and identifies a subset of a cube, while data mining provides association, classification, prediction, clustering, and sequencing on this data subset (Han, 1997).

With the Web becoming an important source of data, OLAP systems could no longer rely solely on internal data and had to discover external Web data, as well as their semantics. This issue was addressed with the help of Semantic Web (SW) technologies, which support inference and reasoning on data. An extensive survey covers this research trend (Abelló et al., 2015). OLAP was also combined with information networks akin to social media, in the sense that both can be represented by very similar graphs. A comprehensive survey of the so-called Graph OLAP, with a focus on bibliographic data analysis, is provided in Loudcher et al. (2015).

Then, the Big Data era confronted OLAP with new challenges, such as: (1) design methods that handle high complexity, which tends to cause an explosion in the number of dimensions; (2) computing methodologies that leverage the cloud computing paradigm for scaling and performance; and (3) query languages that can manage data variety (Cuzzocrea, 2015).

Big Data also pushed forward the exploitation of textual documents, which are acknowledged to represent the majority of the information stored worldwide. In the context of OLAP, i.e., Text or Textual OLAP, the key issue is to find ways of aggregating textual documents instead of numerical KPIs. Two trends emerge, based on the hypercube structure and on text mining, respectively. They are thoroughly surveyed and discussed in Bouakkaz et al. (2017).

Finally, with the emergence of Data Lakes (DLs) in the 2010s, the concept of Data Warehouse (DW), on which OLAP typically rests, has been challenged in terms of data integration complexity, data siloing, data variety management, and even scaling. However, DLs and DWs are actually synergistic: a DL can be the source of a DW, and DWs can be components, among others, of DLs. Hence, OLAP remains very useful as an analytical tool in both cases. Two recent and complementary surveys cover DL, DW, and OLAP-related issues (Sawadogo and Darmont, 2021; Hai et al., 2021).

2.3 Machine Learning

Artificial intelligence (AI) has been a hot research and technological topic for several years. AI refers to computing techniques that allow machines to simulate human-like intelligence. AI is a broad area of research and technology that includes a sub-area, Machine Learning (ML), which enables a computer system to learn models from data.

The most frequently used ML techniques include regression, clustering, and classification, all of which are supported by multiple software tools (Krensky & Idoine, 2021). Regression aims at building statistical models that predict continuous values (e.g., electrical or thermal energy usage at a given point in time or over a given time period). Clustering aims at dividing data items into groups that are not predefined, such that instances in the same group have similar values of some features (e.g., grouping customers by their purchase behaviour). Classification aims at predicting the predefined class to which a given data item belongs (e.g., classifying patients into a high-blood-pressure-risk class or a no-risk class).
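As a minimal instance of regression in the sense described above, the sketch below fits a straight line by ordinary least squares to predict energy usage (a continuous value) from outdoor temperature. The data and the function name `fit_line` are hypothetical; real tools fit many predictors and more flexible models.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x with a single predictor."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    b = sxy / sxx            # slope
    a = mean_y - b * mean_x  # intercept
    return a, b

# Hypothetical data: outdoor temperature (°C) vs. heating energy usage (kWh).
temperature = [0.0, 5.0, 10.0, 15.0]
energy = [40.0, 30.0, 20.0, 10.0]
a, b = fit_line(temperature, energy)
print(a, b)          # 40.0 -2.0
print(a + b * 7.5)   # predicted usage at 7.5 °C: 25.0
```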

ML, in turn, includes a sub-area, Artificial Neural Networks (ANNs). ANNs are based on a statistical model loosely inspired by the way a human brain is built, i.e., they mathematically model (in a simplified way) how the brain works. ANNs are the foundation of Deep Learning (DL) (Bengio et al., 2021). DL applies algorithms that allow a machine to train itself on large volumes of data in order to learn new models from new input data. DL has turned out to be especially effective in image and speech recognition.

In order to build prediction models with ML algorithms, massive amounts of pre-processed data are needed. Pre-processing consists of a workflow of tasks (a.k.a. data wrangling (Bogatu et al., 2019), a data processing/preparation pipeline (Konstantinou & Paton, 2020; Romero et al., 2020), or ETL (Ali & Wrembel, 2017)). The workflow includes the following tasks: data integration and transformation, data cleaning and homogenization, and data preparation for a particular ML algorithm. Based on the pre-processed data, ML models are built (i.e., trained, validated, and tuned; Quemy, 2020). Since the whole workflow is very complex, constructing it requires deep knowledge in multiple areas, including software engineering, data engineering, performance optimization, and ML. Thus, multiple works focus on automating the construction of such workflows. This research area, commonly called AutoML, has become a hot research topic in recent years (Bilalli et al., 2019; Giovanelli et al., 2021; Koehler et al., 2021; Quemy, 2019). Kedziora et al. (2020) provide an excellent state-of-the-art overview of this research area.
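The three stages of such a workflow can be sketched as plain Python functions chained together. The sketch assumes two hypothetical sources with different schemas and deliberately simple choices (mean imputation, min-max scaling, one-hot encoding); it is meant only to show how integration, cleaning, and algorithm-specific preparation follow each other, not how any cited system works.

```python
# Hypothetical raw records from two sources with different conventions.
source_a = [{"id": 1, "age": 34, "country": "PL"}, {"id": 2, "age": None, "country": "FR"}]
source_b = [{"ID": 3, "Age": "51", "Country": "pl"}]

def integrate(a_rows, b_rows):
    """Integration and transformation: map both sources onto one schema."""
    unified = [dict(r) for r in a_rows]
    for r in b_rows:
        unified.append({"id": r["ID"], "age": int(r["Age"]), "country": r["Country"]})
    return unified

def clean(rows):
    """Cleaning and homogenization: normalize codes, impute missing ages with the mean."""
    known = [r["age"] for r in rows if r["age"] is not None]
    mean_age = sum(known) / len(known)
    for r in rows:
        r["country"] = r["country"].upper()
        if r["age"] is None:
            r["age"] = mean_age
    return rows

def prepare(rows):
    """Preparation for an ML algorithm: min-max scale age, one-hot encode country."""
    ages = [r["age"] for r in rows]
    lo, hi = min(ages), max(ages)
    countries = sorted({r["country"] for r in rows})
    features = []
    for r in rows:
        scaled = (r["age"] - lo) / (hi - lo) if hi > lo else 0.0
        one_hot = [1.0 if r["country"] == c else 0.0 for c in countries]
        features.append([scaled] + one_hot)
    return countries, features

countries, X = prepare(clean(integrate(source_a, source_b)))
print(countries)  # ['FR', 'PL']
for row in X:
    print(row)
```

Each stage involves choices (e.g., which imputation or scaling to apply) that influence the resulting model, which is precisely the search space that AutoML approaches try to explore automatically.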

Other major trends in ML are identified by the Gartner report on strategic technology trends for 2021 (Panetta, 2020). Among these trends is AI engineering, defined as a means to “facilitate the performance, scalability, interpretability and reliability of AI models”. Interpretability and reliability are crucial, since AI systems are typically applied to support decision making by means of prediction models and recommendations, which by definition must be reliable. Moreover, a decision maker must be able to figure out and understand how a decision was reached by a given model.

Unfortunately, models built by ML algorithms may be difficult for a user to understand, for two main reasons. First, a model may be too complex to be understood. Second, a user typically has access only to the inputs and outputs of a model, i.e., the internals of the model are hidden. Such models are typically referred to as black-box models. They typically include ensemble models produced by classification techniques (e.g., Random Forest, Bagging, AdaBoost) and ANN models. Even a simple classification model may be difficult to understand if it is a large decision tree. ANN models are by their nature non-interpretable (e.g., an ANN with a hundred inputs and several hidden layers). As a consequence, a user is not able to fully understand how decisions are reached by such complex models (Du et al., 2019).

Yet, in a decision making process, it is necessary to understand how a given decision was reached by an ML model. Therefore, there is a need for methods that explain how black-box ML models work internally. In response to this need, Explainable Artificial Intelligence (EAI) (Biggio et al., 2021; Goebel et al., 2018; Liang et al., 2021; Langer et al., 2021; Miller, 2019) and Interpretable Machine Learning (IML) (Du et al., 2019) techniques are being developed. This research problem is defined as “investigating methods to produce or complement AI to make accessible and interpretable the internal logic and the outcome of the model, making such process human understandable” (Bodria et al., 2021). Explaining ML models has turned out to be crucial in multiple business and engineering domains, such as system security (Mahbooba et al., 2021), health care (Danso et al., 2021), chemistry (Karimi et al., 2021), text processing (Moradi & Samwald, 2021), finance (Ohana et al., 2021), energy management (Sardianos et al., 2021), and IoT (García-Magariño et al., 2019).

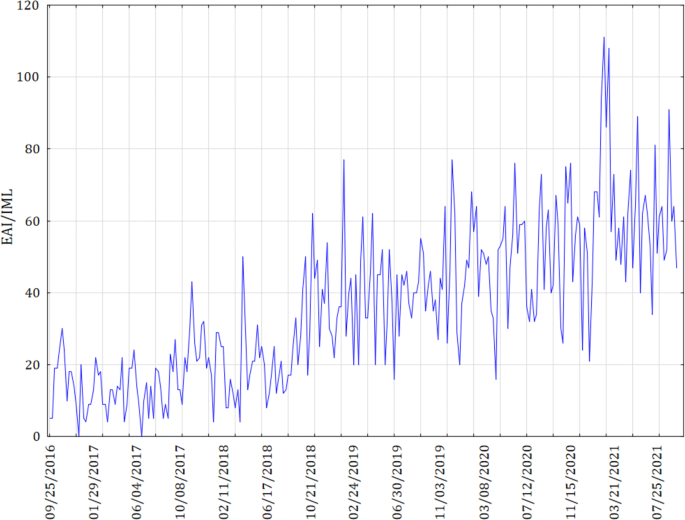

This research topic grew substantially in 2018-2019. The DBLP service (see footnote 2) includes a total of 215 papers on EAI and 163 papers on IML (as of September 25, 2021). Google Trends (see footnote 3) shows an increasing popularity trend for this research topic. Figure 1 shows an aggregated trend for EAI and IML.

Aggregated popularity trend of EAI and IML topics (Google Trends)

Techniques for building interpretable ML models can be divided into two categories, namely intrinsic and post-hoc. An orthogonal classification divides the techniques into global and local interpretable models (Du et al., 2019 ).

Intrinsically interpretable models incorporate interpretability directly into their structure, making them self-interpretable. Examples of such models include decision trees, rule-based models, and linear models. Post-hoc interpretability, in contrast, requires constructing an additional model that provides explanations for the main model.

A globally interpretable model means that a user is able to understand how a model works globally, i.e., in a generalized way. A locally interpretable model allows a user to understand how an individual prediction was made by the model.

Multiple approaches to explaining models have been proposed. They may be specific to a type of data used to build a model, i.e., there are specific approaches for table-like data, for images, and for texts.

For explaining models that use table-like data, the most popular methods are based either on rules or on feature importance. A rule-based explanation uses decision rules understandable by a user, which explain the reasoning that produced the final prediction (decision). A feature importance explanation assigns a value to each input feature, representing the importance of that feature in the produced model.
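Permutation feature importance is one widely used, model-agnostic way of computing such values (it is not claimed to be the method of any work cited here). The sketch below shuffles one feature column at a time and measures the resulting drop in accuracy of a stand-in black-box model; the model, the random data, and all function names are hypothetical.

```python
import random

def black_box(row):
    """Stand-in for an opaque classifier: uses features 0 and 1, ignores feature 2."""
    return 1 if 2 * row[0] + row[1] > 1.5 else 0

def accuracy(model, X, y):
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=20, seed=0):
    """Importance of feature j = mean accuracy drop after shuffling column j."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances

data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random(), data_rng.random()] for _ in range(200)]
y = [black_box(x) for x in X]
importance = permutation_importance(black_box, X, y)
print([round(v, 3) for v in importance])
```

Feature 0, which has the largest weight in the stand-in model, receives the highest importance, while the ignored feature 2 receives exactly zero; the explanation is obtained from inputs and outputs only, without looking inside the model.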

For explaining models that work on images, the most frequently used technique is the Saliency Map (SM). An SM is an image in which the brightness and/or color of a pixel reflects how important the pixel is (it is typically visualized as a divergent color map). This way, one can visualize whether and how strongly a given pixel of an image contributes to a given output of a model. An SM is typically modeled as a matrix whose dimensions match those of the image being analyzed.
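A gradient-based saliency map assigns each pixel the magnitude of the derivative of the model output with respect to that pixel. For neural networks, this gradient is usually obtained by automatic differentiation in a deep learning framework; to stay self-contained, the sketch below approximates it with central finite differences on a hypothetical scoring function over a 3x3 grayscale image. The function names and the toy model are assumptions made for illustration.

```python
def toy_model(image):
    """Hypothetical scoring function over a 3x3 grayscale image: it depends mostly
    on the center pixel (quadratically) and slightly on the top-left pixel."""
    return 3.0 * image[1][1] ** 2 + 0.5 * image[0][0]

def saliency_map(model, image, eps=1e-4):
    """Saliency = |d score / d pixel|, estimated with central finite differences.
    The result is a matrix with the same dimensions as the image."""
    rows, cols = len(image), len(image[0])
    saliency = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            plus = [row[:] for row in image]
            minus = [row[:] for row in image]
            plus[i][j] += eps
            minus[i][j] -= eps
            saliency[i][j] = abs(model(plus) - model(minus)) / (2 * eps)
    return saliency

image = [[0.2, 0.1, 0.0],
         [0.3, 0.5, 0.4],
         [0.0, 0.1, 0.2]]
sal = saliency_map(toy_model, image)
for row in sal:
    print([round(v, 3) for v in row])
```

Rendering `sal` as brightness values would highlight the center pixel (about 3.0), show the top-left pixel faintly (about 0.5), and leave all other pixels dark.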

A concept similar to SM can be used to explain models that work on text data. When the SM is applied to a text, then every word in the text is assigned a color, which reflects the importance of a given word in the final output of a model.
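A simple way of obtaining such per-word importance values without access to gradients is occlusion (leave-one-out): remove each word in turn and record how much the model output changes. The sketch below applies this to a hypothetical bag-of-words sentiment scorer; the word weights and function names are assumptions, and occlusion is swapped in here as a stand-in for gradient-based text saliency.

```python
import math

# Hypothetical sentiment "model": weighted bag of words squashed into [0, 1].
WEIGHTS = {"excellent": 2.0, "good": 1.0, "boring": -1.5, "not": -0.5}

def sentiment_score(words):
    z = sum(WEIGHTS.get(w, 0.0) for w in words)
    return 1.0 / (1.0 + math.exp(-z))

def word_importance(model, text):
    """Leave-one-out importance: change in score when a single word is removed."""
    words = text.lower().split()
    full = model(words)
    return [(w, full - model(words[:i] + words[i + 1:])) for i, w in enumerate(words)]

scores = word_importance(sentiment_score, "The plot was excellent but boring")
for word, importance in scores:
    print(f"{word:>10} {importance:+.3f}")
```

Positive values (here for "excellent") would be colored toward one end of a divergent color map, negative values (here for "boring") toward the other, and neutral words stay uncolored.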

An excellent overview of explanation methods in ML for various types of data is available in Bodria et al. (2021).

3 Special Section Content

This editorial paper overviews research topics covered in this special section of the Information Systems Frontiers journal. The special section contains papers invited from the 24th European Conference on Advances in Databases and Information Systems (ADBIS).

3.1 ADBIS Research Topics

The ADBIS conference has been running continuously since 1993. An overview of past and present ADBIS activities can be found in Tsikrika and Manolopoulos (2016) and at http://adbis.eu. ADBIS is considered one of the core European conferences on practical and theoretical aspects of databases, data engineering, and data management, as well as information systems development and management. In this context, the research topics most frequently addressed by papers submitted to ADBIS within the last ten years include: Data streams, Data models and modeling, Data cleaning and quality, Graph processing, Reasoning and intelligent systems, On-Line Analytical Processing, Software and systems, Ontologies and RDF, Algorithms, Indexing, Spatio-temporal data processing, Data integration, Query language and processing, and Machine Learning.

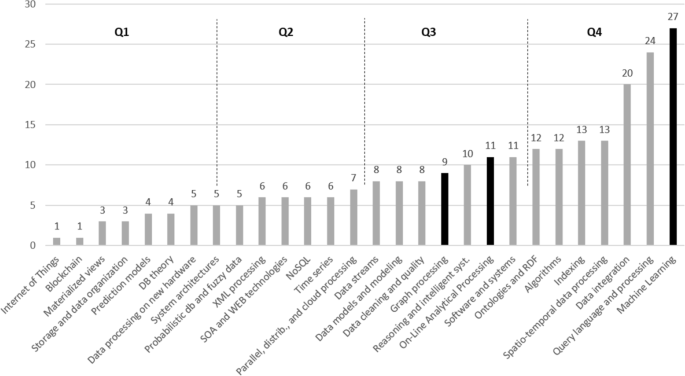

All research topics covered within the last 10 editions (2012-2021) of ADBIS are visualized in Fig. 2. We constrained the analysis to this 10-year period in order to reflect recent research interests. Moreover, we analyzed only papers published in the LNCS volumes, so as to include only the highest-quality papers. In Fig. 2, the Y axis shows the total number of papers addressing a given topic (the median is equal to 7). Q1-Q4 represent the first, second, third, and fourth quartiles, respectively.

Research topics within the last 10 years of ADBIS (based on papers published only in LNCS volumes)

The papers included in this special section address topics from Q3 and Q4, and thus represent frequent ADBIS topics. These three papers cover Graph processing, OLAP, and Machine Learning (marked in black in Fig. 2). These three topics are outlined in Section 2, whereas the papers included in this special section are summarized in Section 3.2. It is worth noting that the most frequent ADBIS topics reflect world research trends and follow the research topics of the top database and data engineering conferences, including SIGMOD, VLDB, and ICDE (Wrembel et al., 2019).

3.2 Papers in this Special Section

The first paper (Belayneh et al., 2022), Speeding Up Reachability Queries in Public Transport Networks Using Graph Partitioning, authored by Bezaye Tesfaye Belayneh, Nikolaus Augsten, Mateusz Pawlik, Michael H. Böhlen, and Christian S. Jensen, addresses the challenges discussed in Section 2.1 for the special case of temporal transport network graphs and a special class of queries, namely reachability queries over public transport networks.

Evaluating such queries involves multiple computations of shortest paths with additional temporal constraints. Specifically, the connection time, calculated as the difference between the departure of the outgoing vehicle and the arrival of the previous incoming vehicle, is added to the length of a path. The problem is, in general, NP-hard. Therefore, an approximate algorithm is needed to solve it efficiently. To this end, the authors propose an algorithm based on graph partitioning, which splits the problem into smaller subproblems. A set of boundary nodes is pre-calculated for each partition (called a cell in the paper). Shortest paths are computed inside each cell, and a path between cells is then chosen. The pre-calculated paths inside cells constitute an index that significantly speeds up the search. In the proposed evaluation, the search is limited to the start and end cells and to finding chains of cells, as the paths inside cells and between boundary nodes are pre-calculated. Partitioning provides locality, but actual performance naturally depends on the choice of the partitioning algorithm. The paper contains an in-depth performance analysis and a comparison of different partitioning algorithms.
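The effect of connection times on reachability can be illustrated with a much simpler query than the one studied in the paper: an earliest-arrival search that scans a timetable's connections in order of departure and only allows a change of vehicle if a minimum change time is respected. The sketch below follows this connection-scan idea; it does not implement the authors' partition-based index or the general constrained problem, and the timetable, the 5-minute change time, and the function name `earliest_arrival` are hypothetical.

```python
import math

def earliest_arrival(connections, source, target, start_time, min_change=5):
    """Earliest arrival time at target, scanning connections by departure time.

    connections: (dep_stop, arr_stop, dep_time, arr_time, trip_id), times in minutes.
    Changing vehicles requires min_change minutes; staying on the same trip does not."""
    ready = {source: start_time}    # earliest time a vehicle can be boarded at a stop
    arrival = {source: start_time}  # earliest known arrival time at a stop
    boarded = set()                 # trips that can be reached so far
    for dep, arr, t_dep, t_arr, trip in sorted(connections, key=lambda c: c[2]):
        if trip in boarded or t_dep >= ready.get(dep, math.inf):
            boarded.add(trip)
            if t_arr < arrival.get(arr, math.inf):
                arrival[arr] = t_arr
                ready[arr] = t_arr + min_change
    return arrival.get(target, math.inf)

# Hypothetical timetable.
timetable = [
    ("A", "B", 0, 10, "T1"),
    ("B", "C", 12, 20, "T1"),  # T1 continues: no change needed
    ("B", "D", 12, 18, "T2"),  # leaves B 2 minutes after T1 arrives
    ("B", "D", 16, 25, "T3"),  # leaves B 6 minutes after T1 arrives
]
print(earliest_arrival(timetable, "A", "D", 0, min_change=5))  # 25: T2 is missed
print(earliest_arrival(timetable, "A", "D", 0, min_change=0))  # 18
```

Ignoring the connection time would report an arrival at D at minute 18, which is not actually achievable when a 5-minute change is required.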

The second paper (Francia et al., 2022 ), entitled Enhancing Cubes with Models to Describe Multidimensional Data , by Matteo Francia, Patrick Marcel, Veronika Peralta, and Stefano Rizzi, presents a first step toward a proof of concept of the Intentional Analytics Model (IAM).

IAM combines On-Line Analytical Processing (OLAP) with various machine learning methods to allow users to express so-called analysis intentions and obtain a so-called enhanced (annotated) data cube. Analysis intentions are expressed with five operators. The paper focuses on formalizing and implementing the describe operator, which describes cube measures. Enhanced cube cells are associated with interesting components of models (e.g., clustering models) that are automatically extracted from cubes. For example, cells containing outliers can be highlighted.

Moreover, the authors propose a measure to assess the interestingness of model components in terms of novelty, peculiarity, and surprise during the user’s data navigation. A data visualization is also automatically produced by a heuristic to depict enhanced cubes, coupling text-based representations (a pivot table and a ranked component list) with graphical representations, i.e., various possible charts. Finally, the whole approach is evaluated through experiments that target efficiency, scalability, effectiveness, and formulation complexity.
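The idea of an enhanced cube, where cells carry annotations derived from a model, can be sketched with a deliberately simple model: a z-score rule that flags outlier cells. This is a stand-in for illustration only; the paper's describe operator relies on richer models (e.g., clustering) and interestingness measures, and the cube slice, the threshold of 2.0, and the function name `describe_outliers` are hypothetical.

```python
import statistics

# Hypothetical 2-D cube slice: (month, store) -> sales measure.
cube = {
    ("Jan", "S1"): 100.0, ("Jan", "S2"): 104.0, ("Jan", "S3"): 98.0,
    ("Feb", "S1"): 101.0, ("Feb", "S2"): 250.0, ("Feb", "S3"): 99.0,
}

def describe_outliers(cells, threshold=2.0):
    """Enhance each cell with its z-score and an 'outlier' flag (|z| > threshold)."""
    values = list(cells.values())
    mean = statistics.mean(values)
    spread = statistics.pstdev(values)
    enhanced = {}
    for coord, value in cells.items():
        z = (value - mean) / spread
        enhanced[coord] = {"value": value, "z": round(z, 2), "outlier": abs(z) > threshold}
    return enhanced

enhanced = describe_outliers(cube)
print([coord for coord, info in enhanced.items() if info["outlier"]])  # [('Feb', 'S2')]
```

In a pivot-table rendering, the flagged cell would be highlighted next to its value.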

The third paper (Ferrettini et al., 2022), entitled Coalitional Strategies for Efficient Individual Prediction Explanation, by Gabriel Ferrettini, Elodie Escriva, Julien Aligon, Jean-Baptiste Excoffier, and Chantal Soulé-Dupuy, addresses the problem of explaining machine learning models. The goal of this work was to develop a general method for facilitating the understanding of how a machine learning model works, with a particular focus on identifying groups of attributes that affect an ML model, i.e., the quality of the predictions provided by the model.

The starting point of the investigation is the observation that attributes cannot be considered independent of each other; therefore, the influence of all possible combinations of attributes on model quality has to be verified. The influence of an attribute is measured according to its importance in each group the attribute can belong to, and its complete influence takes into consideration its importance across all possible attribute combinations. Computing the complete influence is of exponential complexity, since \(n\) attributes yield \(2^n\) possible combinations. For this reason, efficient methods for finding influential groups are needed.
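The exponential cost can be seen in the classical Shapley value, a well-known attribution that, like the complete influence described above, averages an attribute's contribution over every subset of the other attributes (this sketch is not claimed to be the paper's exact formula). The value function `quality`, which assigns a model quality to each attribute subset, is hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(value, features):
    """Exact Shapley values: each feature's marginal contribution averaged over
    all coalitions of the other features (2^(n-1) subsets per feature)."""
    n = len(features)
    result = {}
    for f in features:
        others = [g for g in features if g != f]
        total = 0.0
        for k in range(n):
            for coalition in combinations(others, k):
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                s = frozenset(coalition)
                total += weight * (value(s | {f}) - value(s))
        result[f] = total
    return result

# Hypothetical model quality reached with each subset of attributes:
# a and b only help together (an interaction), while c helps on its own.
def quality(subset):
    score = 0.5
    if {"a", "b"} <= subset:
        score += 0.3
    if "c" in subset:
        score += 0.1
    return score

phi = shapley_values(quality, ["a", "b", "c"])
print({f: round(v, 3) for f, v in phi.items()})
```

The interacting attributes a and b share the 0.3 gain (0.15 each) and c receives 0.1, but obtaining these numbers required evaluating every subset, which is infeasible for many attributes; grouping attributes into a few coalitions reduces the number of evaluations.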

In this context, the paper describes a method for identifying groups of attributes that are crucial for the quality of an ML model. To this end, the authors propose the so-called coalitions. A coalition is a group of attributes that jointly influence an ML model. In order to identify coalitions, the authors propose the following techniques:

Model-based coalition, where interactions between attributes are detected by analyzing how the model uses them. To this end, the values of attributes in an input data set are modified, and the resulting variations in model predictions are observed.

PCA-based coalition, where principal component analysis (PCA) is applied to combine attributes into new attributes (principal components). The set of attributes combined into such a new attribute is considered an influential group of attributes.

Variance inflation factor-based coalition, where the standard variance inflation factor (VIF) estimates the multicollinearity of the attributes in a dataset with respect to a given target attribute. VIF is based on the \(R^2\) coefficient of determination of a linear regression. Since VIF is computed by means of linear regression, this method is suitable for coalitions where linear correlations between attributes exist.

Spearman correlation coefficient-based coalition, which takes into account non-linear (monotonic) correlations between attributes. Correlations are computed between all pairs of attributes and are represented by the Spearman coefficient.
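The statistics behind the last two strategies can be illustrated with a small sketch that is not the authors' implementation. `pairwise_vif` covers only the two-attribute special case of VIF, where the \(R^2\) of regressing one attribute on another equals the squared Pearson coefficient (the general \(VIF_j = 1/(1 - R_j^2)\) regresses attribute \(j\) on all other attributes). The grouping rule in the hypothetical `spearman_coalitions` function, including its 0.8 threshold, and the toy data are assumptions.

```python
import math

def pearson(x, y):
    """Pearson (linear) correlation coefficient."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def ranks(values):
    """1-based ranks; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            result[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return result

def spearman(x, y):
    """Spearman's rho: Pearson correlation of the ranks (captures monotonic relations)."""
    return pearson(ranks(x), ranks(y))

def pairwise_vif(x, y):
    """VIF of x regressed on a single other attribute y: 1 / (1 - R^2), with R^2 = r^2."""
    r = pearson(x, y)
    return 1.0 / (1.0 - r * r)

def spearman_coalitions(columns, threshold=0.8):
    """Hypothetical rule: link attributes with |rho| >= threshold and return
    the connected groups of two or more attributes."""
    names = list(columns)
    parent = {n: n for n in names}

    def find(n):
        while parent[n] != n:
            n = parent[n]
        return n

    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if abs(spearman(columns[a], columns[b])) >= threshold:
                parent[find(a)] = find(b)
    groups = {}
    for n in names:
        groups.setdefault(find(n), []).append(n)
    return [sorted(g) for g in groups.values() if len(g) > 1]

x = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
columns = {
    "x": x,
    "y_lin": [2.1, 3.9, 6.2, 7.8, 10.1, 12.0],  # roughly linear in x
    "y_exp": [math.exp(v) for v in x],         # monotonic but non-linear in x
    "z": [3.0, 1.0, 4.0, 1.0, 5.0, 9.0],        # weakly related, with a tie
}
print(round(pairwise_vif(x, columns["y_lin"]), 1))
print(round(pearson(x, columns["y_exp"]), 3), round(spearman(x, columns["y_exp"]), 3))
print(spearman_coalitions(columns))  # [['x', 'y_exp', 'y_lin']]
```

On this toy data, the nearly linear pair x/y_lin yields a very large VIF, and Pearson correlation understates the monotonic but non-linear x/y_exp relation, which Spearman's rho captures fully; z (rho of about 0.67 with the others) stays outside the coalition.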

These methods were evaluated in extensive experiments on multiple data sets provided by openml.org, for two classification algorithms, namely Random Forest and Support Vector Machine. The so-called complete method was selected as the baseline. The results show that the proposed methods offer promising performance in terms of computation time and model accuracy.

Footnote 1: https://www.gartner.com/imagesrv/media-products/pdf/Kx/KX-1-3CZ44RH.pdf

Footnote 2: https://dblp.uni-trier.de/

Footnote 3: https://trends.google.com/trends/

Abelló, A., Romero, O., Pedersen, T.B., Llavori, R.B., Nebot, V., Cabo, M.J.A., & Simitsis, A. (2015). Using semantic web technologies for exploratory OLAP: a survey. IEEE Transactions on Knowledge and Data Engineering, 27(2), 571–588.


Ali, S.M.F., & Wrembel, R. (2017). From conceptual design to performance optimization of ETL workflows: current state of research and open problems. The VLDB Journal, 26(6), 777–801.

Angles, R., Arenas, M., Barcelo, P., Boncz, P., Fletcher, G., Gutierrez, C., Lindaaker, T., Paradies, M., Plantikow, S., Sequeda, J., van Rest, O., & Voigt, H. (2018). G-core: a core for future graph query languages. In ACM SIGMOD Int. Conf. on management of data (pp. 1421–1432).

Azzini, A., Barbon Jr., S., Bellandi, V., Catarci, T., Ceravolo, P., Cudré-Mauroux, P., Maghool, S., Pokorný, J., Scannapieco, M., Sèdes, F., Tavares, G.M., & Wrembel, R. (2021). Advances in data management in the big data era. In Advancing research in information and communication technology, IFIP AICT (Vol. 600, pp. 99–126). Springer.

Belayneh, B.T., Augsten, N., Pawlik, M., Böhlen, M.H., & Jensen, C.S. (2022). Speeding up reachability queries in public transport networks using graph partitioning. Inf. Syst. Frontiers, 24(1). https://doi.org/10.1007/s10796-021-10164-2.

Bengio, Y., LeCun, Y., & Hinton, G. (2021). Deep learning for AI. Communications of the ACM, 64(7), 58–65.

Biggio, B., Diaz, C., Paulheim, H., & Saukh, O. (2021). Big minds sharing their vision on the future of AI (panel). In Database and expert systems applications (DEXA), LNCS, Vol. 12923. Springer.

Bilalli, B., Abelló, A., Aluja-Banet, T., & Wrembel, R. (2019). PRESISTANT: learning based assistant for data pre-processing. Data & Knowledge Engineering, 123.

Bodria, F., Giannotti, F., Guidotti, R., Naretto, F., Pedreschi, D., & Rinzivillo, S. (2021). Benchmarking and survey of explanation methods for black box models. arXiv:2102.13076.

Bogatu, A., Paton, N.W., Fernandes, A.A.A., & Koehler, M. (2019). Towards automatic data format transformations: data wrangling at scale. The Computer Journal, 62(7), 1044–1060.

Bouakkaz, M., Ouinten, Y., Loudcher, S., & Strekalova, Y.A. (2017). Textual aggregation approaches in OLAP context: a survey. Int. Journal of Information Management, 37(6), 684–692.

Brdjanin, D., Banjac, D., Banjac, G., & Maric, S. (2018). An online business process model-driven generator of the conceptual database model. In Int. Conf. on web intelligence, mining and semantics.

Chen, H., Wu, B., Deng, S., Huang, C., Li, C., Li, Y., & Cheng, J. (2020). High performance distributed OLAP on property graphs with Grasper. In Int. Conf. on management of data, SIGMOD (pp. 2705–2708). ACM.

Codd, E., Codd, S., & Salley, C. (1993). Providing OLAP to user-analysts: an IT mandate. E.F. Codd & Associates.

Cuzzocrea, A. (2015). Data warehousing and OLAP over Big Data: a survey of the state-of-the-art, open problems and future challenges. Int. Journal of Business Process Integration and Management, 7(4), 372–377.

Danso, S.O., Zeng, Z., Muniz, G.T., & Ritchie, C. (2021). Developing an explainable machine learning-based personalised dementia risk prediction model: a transfer learning approach with ensemble learning algorithms. Frontiers in Big Data, 4, 613047.


Deutsch, A., & Papakonstantinou, Y. (2018). Graph data models, query languages and programming paradigms. Proc. VLDB Endow., 11(12), 2106–2109.

Deutsch, A., Xu, Y., Wu, M., & Lee, V.E. (2020). Aggregation support for modern graph analytics in TigerGraph. In ACM SIGMOD Int. Conf. on management of data (pp. 377–392).

Du, M., Liu, N., & Hu, X. (2019). Techniques for interpretable machine learning. Communications of the ACM, 63(1), 68–77.

Ferrettini, G., Escriva, E., Aligon, J., Excoffier, J.B., & Soulé-Dupuy, C. (2022). Coalitional strategies for efficient individual prediction explanation. Inf. Syst. Frontiers, 24(1). https://doi.org/10.1007/s10796-021-10141-9.

Francia, M., Marcel, P., Peralta, V., & Rizzi, S. (2022). Enhancing cubes with models to describe multidimensional data. Inf. Syst. Frontiers, 24(1). https://doi.org/10.1007/s10796-021-10147-3.

García-Magariño, I., Rajarajan, M., & Lloret, J. (2019). Human-centric AI for trustworthy IoT systems with explainable multilayer perceptrons. IEEE Access, 7, 125562–125574.

Garcia-Molina, H., Ullman, J.D., & Widom, J. (2009). Database systems - the complete book . London: Pearson Education.

Ghrab, A., Romero, O., Jouili, S., & Skhiri, S. (2018). Graph BI & analytics: current state and future challenges. In Int. Conf. on big data analytics and knowledge discovery DAWAK, LNCS (Vol. 11031, pp. 3–18). Springer.

Ghrab, A., Romero, O., Skhiri, S., & Zimányi, E. (2021). Topograph: an end-to-end framework to build and analyze graph cubes. Information Systems Frontiers, 23(1), 203–226.

Giovanelli, J., Bilalli, B., & Abelló, A. (2021). Effective data pre-processing for AutoML. In Int. Workshop on design, optimization, languages and analytical processing of big data (DOLAP), CEUR workshop proceedings (Vol. 2840, pp. 1–10).

Goebel, R., Chander, A., Holzinger, K., Lécué, F., Akata, Z., Stumpf, S., Kieseberg, P., & Holzinger, A. (2018). Explainable AI: the new 42? In IFIP TC 5 Int. Cross-domain conf. on machine learning and knowledge extraction CD-MAKE, LNCS (Vol. 11015, pp. 295–303). Springer.

Griffith, R.L. (1982). Three principles of representation for semantic networks. ACM Transactions on Database Systems 417–442.

Hai, R., Quix, C., & Jarke, M. (2021). Data lake concept and systems: a survey arXiv: 2106.09592 .

Han, J. (1997). OLAP Mining: Integration of OLAP with data mining. In Conf. on database semantics (DS), IFIP conference proceedings , (Vol. 124 pp. 3–20).

Han, J. (2017). OLAP, Spatial , (pp. 809–812). Berlin: Encyclopedia of GIS Springer.

Hernández, A.́B., Pérez, M.S., Gupta, S., & Muntés-mulero, V. (2018). Using machine learning to optimize parallelism in big data applications. Future Gener. Comput. Syst. , 86 , 1076–1092.

Holzschuher, F., & Peinl, R. (2013). Performance of graph query languages: Comparison of cypher, gremlin and native access in neo4j. In Joint EDBT/ICDT workshops (pp. 195–204).

Karimi, M., Wu, D., Wang, Z., & Shen, Y. (2021). Explainable deep relational networks for predicting compound-protein affinities and contacts. Journal of Chemical Information and Modeling , 61 (1), 46–66.

Kedziora, D.J., Musial, K., & Gabrys, B. (2020). Autonoml: Towards an integrated framework for autonomous machine learning. arXiv: 2012.12600 .

Koehler, M., Abel, E., Bogatu, A., Civili, C., Mazilu, L., Konstantinou, N., Fernandes, A.A.A., Keane, J.A., Libkin, L., & Paton, N.W. (2021). Incorporating data context to cost-effectively automate end-to-end data wrangling. IEEE Transactions on Big Data , 7 (1), 169–186.

Konstantinou, N., & Paton, N.W. (2020). Feedback driven improvement of data preparation pipelines. Information Systems , 92 , 101480.

Krensky, P., & Idoine, C. (2021). Magic quadrant for data science and machine learning platforms. https://www.gartner.com/doc/reprints?id=1-25D1UI0O&ct=210302&st=sb . Gartner.

Langer, M., Oster, D., Speith, T., Hermanns, H., Kästner, L., Schmidt, E., Sesing, A., & Baum, K. (2021). What do we want from explainable artificial intelligence (xai)? - a stakeholder perspective on XAI and a conceptual model guiding interdisciplinary XAI research. Artifitial Intelligence , 296 , 103473.

Liang, Y., Li, S., Yan, C., Li, M., & Jiang, C. (2021). Explaining the black-box model: a survey of local interpretation methods for deep neural networks. Neurocomputing , 419 , 168–182.

Loudcher, S., Jakawat, W., Soriano-Morales, E.P., & Favre, C. (2015). Combining OLAP and information networks for bibliographic data analysis: a survey. Scientometrics , 103 (2), 471–487.

Mahbooba, B., Timilsina, M., Sahal, R., & Serrano, M. (2021). Explainable artificial intelligence (XAI) to enhance trust management in intrusion detection systems using decision tree model. Complexity , 2021 , 6634811:1–6634811:11.

Mahboubi, H., Hachicha, M., & Darmont, J. (2009). XML Warehousing And OLAP, Encyclopedia of Data Warehousing and Mining, Second Edition, vol. IV, pp. 2109–2116 IGI Publishing.

Maniatis, A.S. (2004). The case for mobile OLAP. In Current trends in database technology – EDBT workshops, LNCS , (Vol. 3268 pp. 405–414).

Marketos, G., & Theodoridis, Y. (2010). Ad-hoc OLAP on Trajectory Data. In Int. Conf. on mobile data management (MDM) (pp. 189–198).

McHugh, J., Abiteboul, S., Goldman, R., Quass, D., & Widom, J. (1997). Lore: a database management system for semistructured data. SIGMOD Record , 26 (3), 54–66.

Meusel, R., Vigna, S., Lehmberg, O., & Bizer, C. (2014). Graph structure in the web — revisited: a trick of the heavy tail. In Int. Conf. on world wide web (pp. 427–432).

Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1–38.

Moldovan, D.I. (1984). An associative array architecture intended for semantic network processing. In Annual conf. of the ACM on the fifth generation challenge (pp. 212–221). ACM.

Moradi, M., & Samwald, M. (2021). Explaining black-box models for biomedical text classification. IEEE Journal of Biomedical and Health Informatics, 25(8), 3112–3120.

Ohana, J., Ohana, S., Benhamou, E., Saltiel, D., & Guez, B. (2021). Explainable AI (XAI) models applied to the multi-agent environment of financial markets. In Explainable and transparent AI and multi-agent systems, LNCS (Vol. 12688, pp. 189–207). Springer.

Panetta, K. (2020). Gartner top strategic technology trends for 2021. Gartner. https://www.gartner.com/smarterwithgartner/gartner-top-strategic-technology-trends-for-2021

Peng, P., Zou, L., Özsu, M.T., Chen, L., & Zhao, D. (2016). Processing SPARQL queries over distributed RDF graphs. The VLDB Journal, 25, 243–268.

Pokorný, J. (2016). Conceptual and database modelling of graph databases. In Int. Symp. on database engineering and application systems (IDEAS) (pp. 370–377).

Quemy, A. (2019). Data pipeline selection and optimization. In Int. Workshop on design, optimization, languages and analytical processing of big data, CEUR workshop proceedings (Vol. 2324).

Quemy, A. (2020). Two-stage optimization for machine learning workflow. Information Systems, 92, 101483.

Richardson, J., Schlegel, K., Sallam, R., Kronz, A., & Sun, J. (2021). Magic quadrant for analytics and business intelligence platforms. Gartner. https://www.gartner.com/doc/reprints?id=1-1YOXON7Q&ct=200330&st=sb

Romero, O., & Wrembel, R. (2020). Data engineering for data science: Two sides of the same coin. In Int. Conf. on big data analytics and knowledge discovery (DaWaK), LNCS (Vol. 12393, pp. 157–166). Springer.

Romero, O., Wrembel, R., & Song, I. (2020). An alternative view on data processing pipelines from the DOLAP 2019 perspective. Information Systems, 92.

Salka, C. (1998). Ending the MOLAP/ROLAP debate: Usage based aggregation and flexible HOLAP. In Int. Conf. on data engineering (ICDE) (p. 180).

Sardianos, C., Varlamis, I., Chronis, C., Dimitrakopoulos, G., Alsalemi, A., Himeur, Y., Bensaali, F., & Amira, A. (2021). The emergence of explainability of intelligent systems: Delivering explainable and personalized recommendations for energy efficiency. International Journal of Intelligent Systems, 36(2), 656–680.

Sawadogo, P.N., & Darmont, J. (2021). On data lake architectures and metadata management. Journal of Intelligent Information Systems, 56(1), 97–120.

Schuetz, C.G., Bozzato, L., Neumayr, B., Schrefl, M., & Serafini, L. (2021). Knowledge graph OLAP. Semantic Web, 12(4), 649–683.

Tsikrika, T., & Manolopoulos, Y. (2016). A retrospective study on the 20 years of the ADBIS conference. In New trends in databases and information systems, Communications in Computer and Information Science (Vol. 637, pp. 1–15). Springer.

Vassiliadis, P., & Sellis, T.K. (1999). A survey of logical models for OLAP databases. SIGMOD Record, 28(4), 64–69.

Witt, C., Bux, M., Gusew, W., & Leser, U. (2019). Predictive performance modeling for distributed batch processing using black box monitoring and machine learning. Information Systems, 82, 33–52.

Wrembel, R., Abelló, A., & Song, I. (2019). DOLAP data warehouse research over two decades: Trends and challenges. Information Systems, 85, 44–47.


Acknowledgements

The Guest Editors thank all friends and colleagues who contributed to the success of this special section. We appreciate the effort of all the authors who were attracted by the topics of the ADBIS conference and submitted scientific contributions.

Special thanks go to the Editors-in-Chief, Prof. Ram Ramesh and Prof. H. Raghav Rao, for offering this special section to the ADBIS conference, and to the Springer staff, namely Kristine Kay Canaleja and Aila O. Asejo-Nuique, for their efficient cooperation.

We appreciate the work of the reviewers, who offered their expertise in assessing the quality of the submitted papers and provided constructive comments to the authors. The list of reviewers includes:

–Andras Benczur (Eötvös Loránd University, Hungary)

–Paweł Boiński (Poznan University of Technology, Poland)

–Omar Boussaid (Université Lyon 2, France)

–Theo Härder (Technical University Kaiserslautern, Germany)

–Petar Jovanovic (Universitat Politècnica de Catalunya, Spain)

–Sebastian Link (University of Auckland, New Zealand)

–Angelo Montanari (University of Udine, Italy)

–Kestutis Normantas (Vilnius Gediminas Technical University, Lithuania)

–Carlos Ordonez (University of Houston, USA)

–Szymon Wilk (Poznan University of Technology, Poland)

–Vladimir Zadorozhny (University of Pittsburgh, USA)

Author information

Authors and affiliations.

Université Lumière Lyon 2, Lyon, France

Jérôme Darmont

HSE University, Saint Petersburg, Russia

Boris Novikov

Poznan University of Technology, Poznan, Poland

Robert Wrembel

École Nationale Supérieure de Mécanique et d’Aérotechnique, Poitiers, France

Ladjel Bellatreche


Corresponding author

Correspondence to Robert Wrembel.

Additional information

Publisher’s note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Darmont, J., Novikov, B., Wrembel, R. et al. Advances on Data Management and Information Systems. Inf Syst Front 24, 1–10 (2022). https://doi.org/10.1007/s10796-021-10235-4


Accepted: 22 December 2021

Published: 02 March 2022

Issue Date: February 2022

DOI: https://doi.org/10.1007/s10796-021-10235-4



Fig. 1. A simplified view of a database system and the end-user with the emphasis on components relevant to this study; the arrows represent the flow of information from the end-user's device to the database residing in persistent storage; the flow of information back to the software application is not illustrated ...

the database is used by one or several software applications via a DBMS. Collectively, the database, the DBMS, and the software application are referred to as a database system [31, p.7][17, p.65]. The separation of the database and the DBMS, especially in the realm of relational databases, is typically impossible without exporting the database ...

Systems administrators and computer science textbooks may expect databases to be instantiated in a small number of technologies (e.g., relational or graph-based database management systems), but there are numerous examples of databases in non-conventional or unexpected technologies, such as spreadsheets or other assemblages of files linked ...

Definition. A database management system is a software-based system to provide application access to data in a controlled and managed fashion. By allowing separate definition of the structure of the data, the database management system frees the application from many of the onerous details in the care and feeding of its data.
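The definition says the DBMS lets the structure of the data be defined separately from the application. The sketch below illustrates this under stated assumptions. It uses Python's built-in `sqlite3` module as the DBMS. The `play` table and the helpers `open_database`, `add_play` and `plays_by` are hypothetical. The schema is declared once in SQL, and the application code refers only to column names. Adding an index, a storage-level change made through the DBMS, leaves the application's results unchanged.

```python
import sqlite3

# Hypothetical example schema; SQLite stands in for "the database management system".
SCHEMA = "CREATE TABLE play (id INTEGER PRIMARY KEY, title TEXT NOT NULL, author TEXT NOT NULL)"


def open_database() -> sqlite3.Connection:
    """Open an in-memory database and declare the structure of the data once."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    return conn


def add_play(conn: sqlite3.Connection, title: str, author: str) -> int:
    """Insert a row; record layout and file placement are left to the DBMS."""
    with conn:  # commits on success, rolls back if an exception is raised
        cur = conn.execute(
            "INSERT INTO play (title, author) VALUES (?, ?)", (title, author)
        )
    return cur.lastrowid


def plays_by(conn: sqlite3.Connection, author: str) -> list[str]:
    """Query by column name only; the application never touches storage details."""
    rows = conn.execute(
        "SELECT title FROM play WHERE author = ? ORDER BY title", (author,)
    )
    return [title for (title,) in rows]


if __name__ == "__main__":
    conn = open_database()
    for title, author in [("Medea", "Euripides"), ("Antigone", "Sophocles"), ("Electra", "Euripides")]:
        add_play(conn, title, author)
    before = plays_by(conn, "Euripides")
    # A physical change (a new access path) is made through the DBMS, not the application.
    conn.execute("CREATE INDEX idx_play_author ON play (author)")
    after = plays_by(conn, "Euripides")
    print(before, after == before)  # ['Electra', 'Medea'] True
```

In this sketch, the application functions never change when the index is added. That is the kind of "care and feeding" the definition says the DBMS takes over.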


Abstract. A database management system (DBMS) is software that implements functions to organize the storage and retrieval of data in databases according to a specific data model. Relational DBMS are the most mature and popular systems, but especially for the needs of big data applications, new categories of DBMS, such as NoSQL DBMS or Complex ...

For instance, a number of research articles have been published focusing on developing tools for teaching database systems courses (Abut & Ozturk, 1997; Connolly et al., 2005; ... It is mainly because of its ability to handle data in a relational database management system and direct implementation of database theoretical concepts. Also, other ...

This research paper provides a comprehensive review of advancements in database management systems (DBMS) over the years, encompassing both relational and non-relational databases. By analyzing ...

2 Database Systems

2.1 Database System Overview

A database is a collection of interrelated data, typically stored according to a data model. Typically, the data is used by one or several software applications via a DBMS. Collectively, the database, the DBMS, and the software application are referred to as a database system [31, p.7][17, p.65].


A database management system (DBMS) is a software application that manages databases, handles user queries, and interacts with the computer hardware (Rodney Hebels et al., 2003). It is an improvement over file management systems because it reduces data redundancy, allows related records to be retrieved, and provides a simple query language for ...
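The excerpt credits the DBMS with reducing redundancy and with retrieving related records through a query language. The sketch below again assumes Python's built-in `sqlite3` module. The two-table schema and the helpers `build_catalog`, `plays_with_authors` and `rename_author` are hypothetical. Each author's name is stored once and referenced by key, and a SQL join retrieves the related records. A single `UPDATE` is then visible in every related row.

```python
import sqlite3

# Hypothetical normalized schema: author names are stored once, plays refer to them by key.
SCHEMA = """
CREATE TABLE author (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE play (
    id INTEGER PRIMARY KEY,
    title TEXT NOT NULL,
    author_id INTEGER NOT NULL REFERENCES author (id)
);
"""


def build_catalog() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces REFERENCES only when enabled
    conn.executescript(SCHEMA)
    with conn:
        conn.executemany(
            "INSERT INTO author (id, name) VALUES (?, ?)",
            [(1, "Sophocles"), (2, "Euripides")],
        )
        conn.executemany(
            "INSERT INTO play (title, author_id) VALUES (?, ?)",
            [("Antigone", 1), ("Oedipus Rex", 1), ("Medea", 2)],
        )
    return conn


def plays_with_authors(conn: sqlite3.Connection) -> list[tuple[str, str]]:
    """Retrieve related records from both tables with one declarative join."""
    return conn.execute(
        "SELECT p.title, a.name FROM play AS p "
        "JOIN author AS a ON a.id = p.author_id ORDER BY p.title"
    ).fetchall()


def rename_author(conn: sqlite3.Connection, old: str, new: str) -> None:
    """Because the name is stored once, one UPDATE corrects it for every play."""
    with conn:
        conn.execute("UPDATE author SET name = ? WHERE name = ?", (new, old))


if __name__ == "__main__":
    conn = build_catalog()
    rename_author(conn, "Sophocles", "Sophokles")
    for title, name in plays_with_authors(conn):
        print(f"{title}: {name}")
```

A flat file that repeated the author's name on every play record would need one edit per record for the same rename. In this schema the DBMS keeps the single copy consistent.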

A database system consists of one or several database management systems, databases, and software applications. Fig. 2 shows a simplified flow of information from traditional data sources (depicted on the left-hand side, e.g., relational databases) to the vector database (gray). Continuing with the example of Greek plays, the human-readable texts of the plays are vectorized, i.e., transformed ...
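The excerpt is cut off before it says how the plays are vectorized, so the sketch below does not reproduce the cited system. It assumes a deliberately simple stand-in with three parts:

- term-frequency vectors;
- cosine similarity;
- an exhaustive scan over hypothetical one-line synopses.

`ToyVectorStore`, `vectorize` and `cosine` are illustrative names, not an existing library's API. Production vector databases typically use learned embeddings and approximate nearest-neighbour indexes instead.

```python
import math
import re
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy stand-in for an embedding model (an assumption): term-frequency bag of words."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two sparse vectors; 0.0 if either vector is empty."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory 'vector database' with exact (brute-force) search."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, key: str, text: str) -> None:
        # Vectorize once at insertion time and keep only the vector.
        self._items.append((key, vectorize(text)))

    def search(self, query: str, k: int = 1) -> list[str]:
        # Score every stored vector against the query and return the k best keys.
        q = vectorize(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [key for key, _ in ranked[:k]]


if __name__ == "__main__":
    store = ToyVectorStore()
    # Hypothetical one-line synopses standing in for the full texts of the plays.
    store.add("Antigone", "Antigone buries her brother Polynices against the decree of King Creon")
    store.add("Medea", "Medea takes revenge on Jason who abandons her for a new wife in Corinth")
    store.add("Oedipus Rex", "Oedipus, King of Thebes, seeks the murderer of the former king Laius")
    print(store.search("Which play is about burying a brother against the king?"))  # ['Antigone']
```

The exhaustive scan keeps the sketch short and exact. Its cost grows linearly with the number of stored vectors, which is why dedicated vector databases add specialised indexes.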