Qualitative vs. Quantitative Research | Differences, Examples & Methods

Published on April 12, 2019 by Raimo Streefkerk. Revised on June 22, 2023.

When collecting and analyzing data, quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings. Both are important for gaining different kinds of knowledge.

Common quantitative methods include experiments, observations recorded as numbers, and surveys with closed-ended questions.

Quantitative research is at risk of research biases such as information bias, omitted variable bias, sampling bias, and selection bias.

Qualitative research

Qualitative research is expressed in words. It is used to understand concepts, thoughts or experiences. This type of research enables you to gather in-depth insights on topics that are not well understood.

Common qualitative methods include interviews with open-ended questions, observations described in words, and literature reviews that explore concepts and theories.

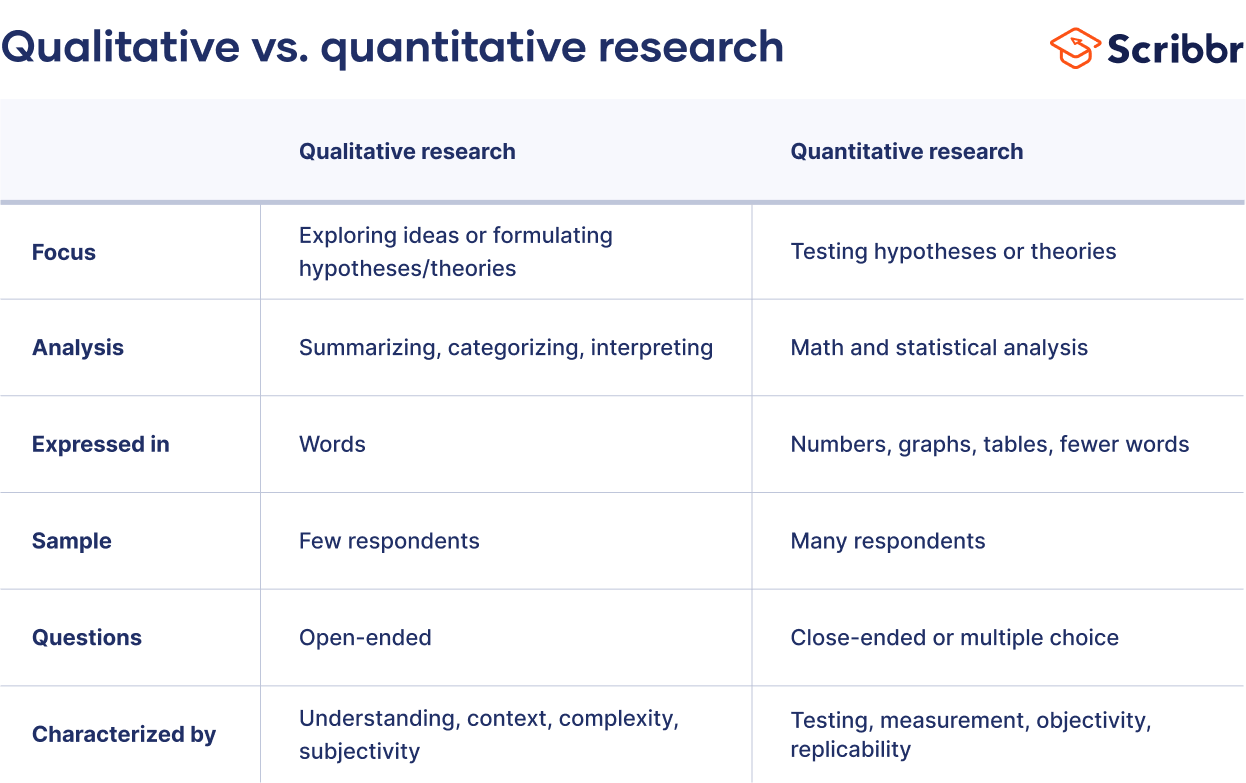

Quantitative and qualitative research use different research methods to collect and analyze data, and they allow you to answer different kinds of research questions.

Quantitative and qualitative data can be collected using various methods. It is important to use a data collection method that will help answer your research question(s).

Many data collection methods can be either qualitative or quantitative. For example, in surveys, observational studies or case studies, your data can be represented as numbers (e.g., using rating scales or counting frequencies) or as words (e.g., with open-ended questions or descriptions of what you observe).

However, some methods are more commonly used in one type or the other.

Quantitative data collection methods

- Surveys: List of closed or multiple choice questions that is distributed to a sample (online, in person, or over the phone).

- Experiments: Situation in which different types of variables are controlled and manipulated to establish cause-and-effect relationships.

- Observations: Observing subjects in a natural environment where variables can’t be controlled.

Qualitative data collection methods

- Interviews: Asking open-ended questions verbally to respondents.

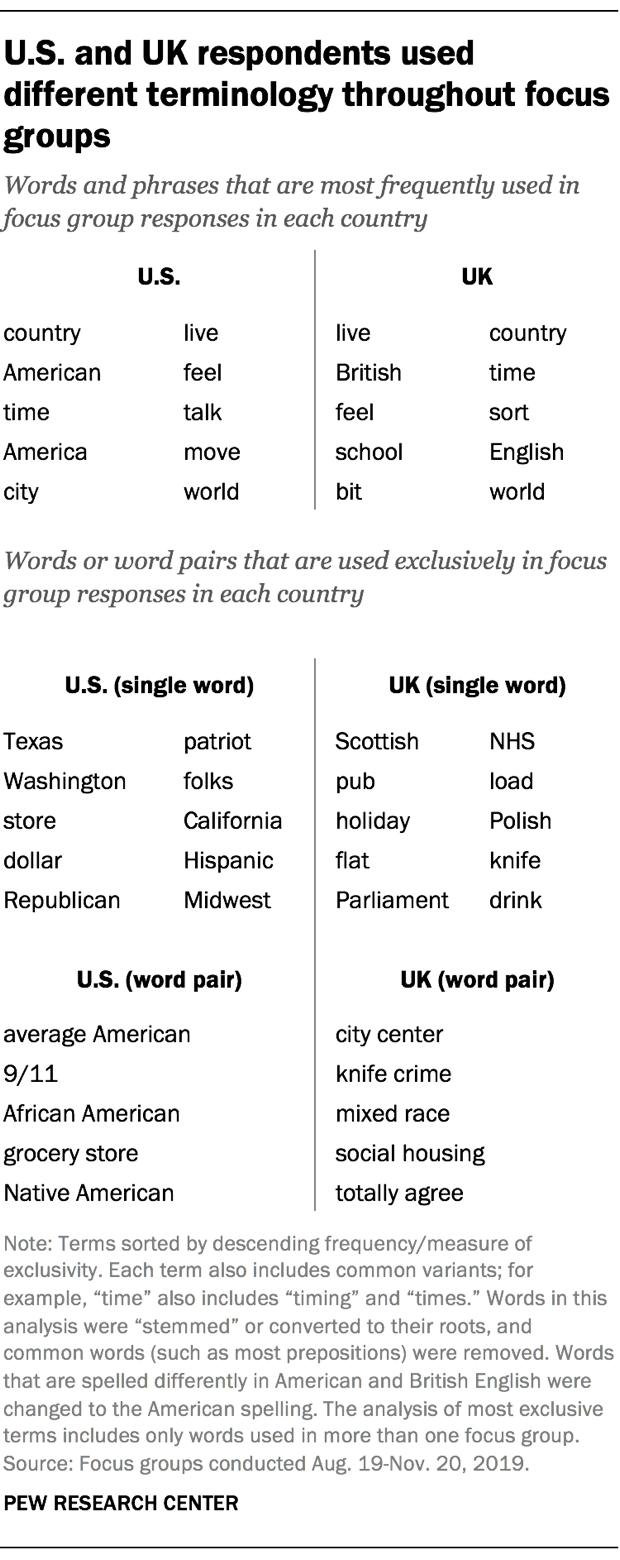

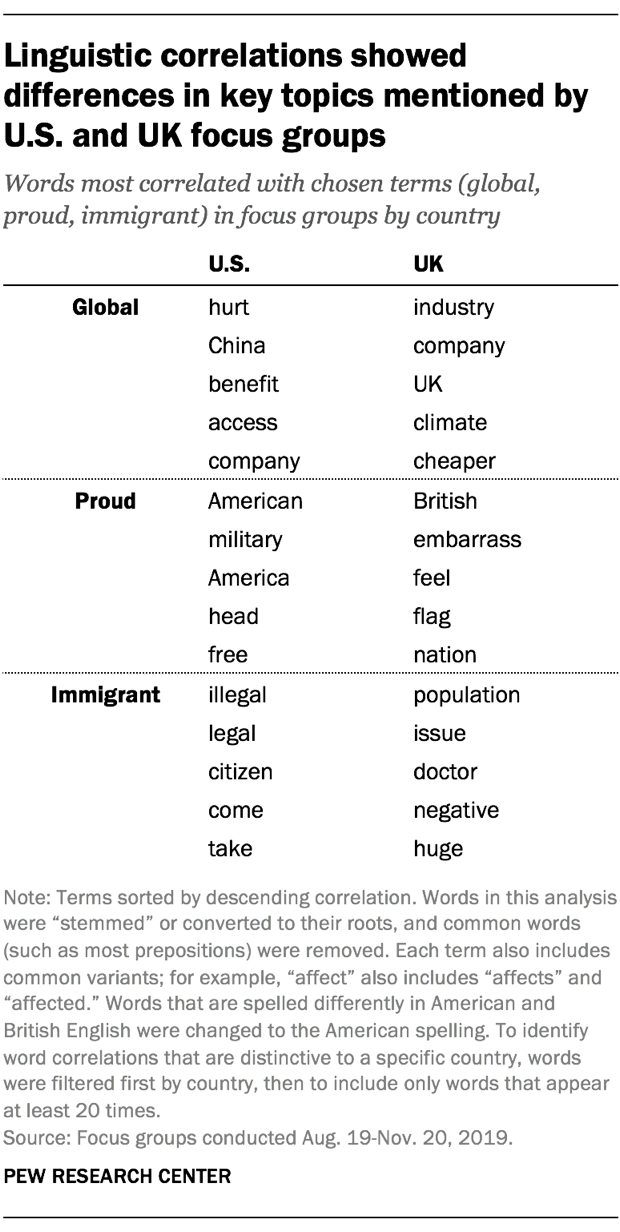

- Focus groups: Discussion among a group of people about a topic to gather opinions that can be used for further research.

- Ethnography: Participating in a community or organization for an extended period of time to closely observe culture and behavior.

- Literature review: Survey of published works by other authors.

A rule of thumb for deciding whether to use qualitative or quantitative data is:

- Use quantitative research if you want to confirm or test something (a theory or hypothesis)

- Use qualitative research if you want to understand something (concepts, thoughts, experiences)

For most research topics you can choose a qualitative, quantitative or mixed methods approach. Which type you choose depends on, among other things, whether you’re taking an inductive vs. deductive research approach; your research question(s); whether you’re doing experimental, correlational, or descriptive research; and practical considerations such as time, money, availability of data, and access to respondents.

Quantitative research approach

You survey 300 students at your university and ask them questions such as: “On a scale from 1 to 5, how satisfied are you with your professors?”

You can perform statistical analysis on the data and draw conclusions such as: “on average students rated their professors 4.4”.

Qualitative research approach

You conduct in-depth interviews with 15 students and ask them open-ended questions such as: “How satisfied are you with your studies?”, “What is the most positive aspect of your study program?” and “What can be done to improve the study program?”

Based on the answers you get you can ask follow-up questions to clarify things. You transcribe all interviews using transcription software and try to find commonalities and patterns.

Mixed methods approach

You conduct interviews to find out how satisfied students are with their studies. Through open-ended questions you learn things you never thought about before and gain new insights. Later, you use a survey to test these insights on a larger scale.

It’s also possible to start with a survey to find out the overall trends, followed by interviews to better understand the reasons behind the trends.

Qualitative or quantitative data by itself can’t prove or demonstrate anything, but has to be analyzed to show its meaning in relation to the research questions. The method of analysis differs for each type of data.

Analyzing quantitative data

Quantitative data is based on numbers. Simple math or more advanced statistical analysis is used to discover commonalities or patterns in the data. The results are often reported in graphs and tables.

Applications such as Excel, SPSS, or R can be used to calculate things like:

- Average scores (means)

- The number of times a particular answer was given

- The correlation between two or more variables (and, with a suitable study design, evidence of causation)

- The reliability and validity of the results
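As a rough illustration of the first three calculations in the list above, the sketch below uses only the Python standard library rather than Excel, SPSS, or R. The response data, the second variable (weekly contact hours), and the helper `pearson_r` are all invented for this example. Note that a correlation coefficient measures association only; it does not by itself show causation.

```python
from collections import Counter
from math import sqrt
from statistics import mean

# Hypothetical survey responses (illustrative only, not real data):
# each tuple is (satisfaction rating 1-5, weekly contact hours with professors).
responses = [(5, 6), (4, 5), (3, 3), (4, 4), (2, 1), (5, 7)]

ratings = [rating for rating, _ in responses]
hours = [h for _, h in responses]


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


average = mean(ratings)          # average score (mean)
frequencies = Counter(ratings)   # number of times each answer was given
r = pearson_r(ratings, hours)    # strength of the linear association

print(f"mean rating: {average:.2f}")
print(f"answer counts: {dict(sorted(frequencies.items()))}")
print(f"correlation (rating vs. hours): {r:.2f}")
```

In practice a dedicated statistics package would also report significance tests and confidence intervals, which this sketch leaves out.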

Analyzing qualitative data

Qualitative data is more difficult to analyze than quantitative data. It consists of text, images or videos instead of numbers.

Some common approaches to analyzing qualitative data include:

- Qualitative content analysis: Tracking the occurrence, position and meaning of words or phrases

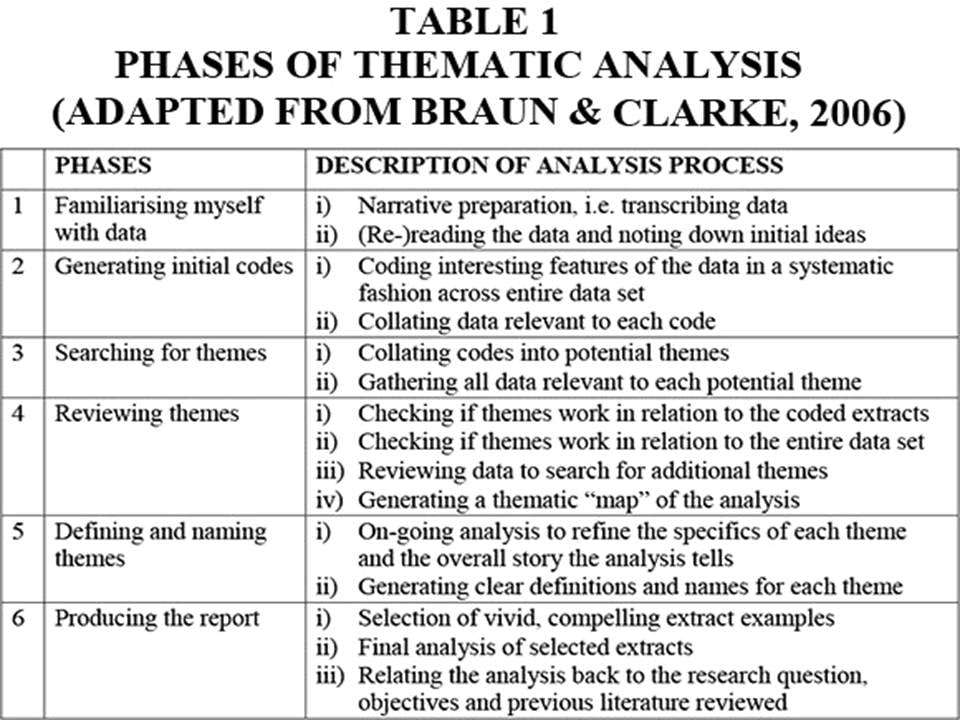

- Thematic analysis: Closely examining the data to identify the main themes and patterns

- Discourse analysis: Studying how communication works in social contexts
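The mechanical part of content analysis, tracking where and how often words occur, can be sketched in a few lines. The excerpt and the function `word_positions` below are hypothetical, and the sketch covers only occurrence and position; interpreting the meaning of those words remains a task for the researcher.

```python
import re
from collections import defaultdict


def word_positions(text, terms):
    """Map each term of interest to the 0-based word positions where it occurs."""
    tokens = re.findall(r"[a-z']+", text.lower())
    wanted = {term.lower() for term in terms}
    positions = defaultdict(list)
    for index, token in enumerate(tokens):
        if token in wanted:
            positions[token].append(index)
    return dict(positions)


# Hypothetical interview excerpt (invented for illustration).
excerpt = (
    "The workload is heavy, but the support from staff "
    "makes the workload manageable."
)
result = word_positions(excerpt, ["workload", "support"])

for term, where in result.items():
    print(f"{term}: {len(where)} occurrence(s) at word positions {where}")
```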

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to systematically measure variables and test hypotheses. Qualitative methods allow you to explore concepts and experiences in more detail.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts and meanings, use qualitative methods.

- If you want to analyze a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how it is generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organizations.

There are various approaches to qualitative data analysis, but they all share five steps in common:

- Prepare and organize your data.

- Review and explore your data.

- Develop a data coding system.

- Assign codes to the data.

- Identify recurring themes.

The specifics of each step depend on the focus of the analysis. Some common approaches include textual analysis, thematic analysis, and discourse analysis.
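The five steps listed above can be sketched as a small script. Everything here is hypothetical: the interview snippets, the `codebook`, and the keyword matching in `assign_codes`, which is a crude stand-in for the judgement a human coder applies when reviewing data and assigning codes.

```python
from collections import Counter

# Step 1: prepare and organize the data (hypothetical interview snippets).
segments = [
    "I never have enough time to finish the readings.",
    "My tutor gives really helpful feedback.",
    "Deadlines pile up and there is no time to rest.",
    "Feedback on essays arrives too late to be useful.",
]

# Step 2 (review and explore) is done by reading the material; it informs
# Step 3: a hypothetical coding system mapping codes to indicator keywords.
codebook = {
    "time_pressure": ["time", "deadlines"],
    "feedback": ["feedback"],
}


def assign_codes(segment, codebook):
    """Step 4: return the set of codes whose keywords appear in the segment."""
    lowered = segment.lower()
    return {
        code
        for code, keywords in codebook.items()
        if any(keyword in lowered for keyword in keywords)
    }


coded = [(segment, assign_codes(segment, codebook)) for segment in segments]

# Step 5: count how often each code recurs to surface candidate themes.
theme_counts = Counter(code for _, codes in coded for code in codes)

for code, count in sorted(theme_counts.items()):
    print(f"{code}: {count} segment(s)")
```

Simple substring matching will miscode some segments (for example, "sometimes" contains "time"), so a sketch like this is better suited to a first pass that a researcher then checks by hand.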

A research project is an academic, scientific, or professional undertaking to answer a research question. Research projects can take many forms, such as qualitative or quantitative, descriptive, longitudinal, experimental, or correlational. What kind of research approach you choose will depend on your topic.

Cite this Scribbr article

Streefkerk, R. (2023, June 22). Qualitative vs. Quantitative Research | Differences, Examples & Methods. Scribbr. Retrieved June 28, 2024, from https://www.scribbr.com/methodology/qualitative-quantitative-research/

Three techniques for integrating data in mixed methods studies

- Alicia O’Cathain, professor 1

- Elizabeth Murphy, professor 2

- Jon Nicholl, professor 1

- 1 Medical Care Research Unit, School of Health and Related Research, University of Sheffield, Sheffield S1 4DA, UK

- 2 University of Leicester, Leicester, UK

- Correspondence to: A O’Cathain a.ocathain{at}sheffield.ac.uk

- Accepted 8 June 2010

Techniques designed to combine the results of qualitative and quantitative studies can provide researchers with more knowledge than separate analysis

Health researchers are increasingly using designs that combine qualitative and quantitative methods, and this is often called mixed methods research. 1 Integration—the interaction or conversation between the qualitative and quantitative components of a study—is an important aspect of mixed methods research, and, indeed, is essential to some definitions. 2 Recent empirical studies of mixed methods research in health show, however, a lack of integration between components, 3 4 which limits the amount of knowledge that these types of studies generate. Without integration, the knowledge yield is equivalent to that from a qualitative study and a quantitative study undertaken independently, rather than achieving a “whole greater than the sum of the parts.” 5

Barriers to integration have been identified in both health and social research. 6 7 One barrier is the absence of formal education in mixed methods research. Fortunately, literature is rapidly expanding to fill this educational gap, including descriptions of how to integrate data and findings from qualitative and quantitative methods. 8 9 In this article we outline three techniques that may help health researchers to integrate data or findings in their mixed methods studies and show how these might enhance knowledge generated from this approach.

Triangulation protocol

Researchers will often use qualitative and quantitative methods to examine different aspects of an overall research question. For example, they might use a randomised controlled trial to assess the effectiveness of a healthcare intervention and semistructured interviews with patients and health professionals to consider the way in which the intervention was used in the real world. Alternatively, they might use a survey of service users to measure satisfaction with a service and focus groups to explore views of care in more depth. Data are collected and analysed separately for each component to produce two sets of findings. Researchers will then attempt to combine these findings, sometimes calling this process triangulation. The term triangulation can be confusing because it has two meanings. 10 It can be used to describe corroboration between two sets of findings or to describe a process of studying a problem using different methods to gain a more complete picture. The latter meaning is commonly used in mixed methods research and is the meaning used here.

The process of triangulating findings from different methods takes place at the interpretation stage of a study when both data sets have been analysed separately (figure). Several techniques have been described for triangulating findings. They require researchers to list the findings from each component of a study on the same page and consider where findings from each method agree (convergence), offer complementary information on the same issue (complementarity), or appear to contradict each other (discrepancy or dissonance). 11 12 13 Explicitly looking for disagreements between findings from different methods is an important part of this process. Disagreement is not a sign that something is wrong with a study. Exploration of any apparent “inter-method discrepancy” may lead to a better understanding of the research question, 14 and a range of approaches have been used within health services research to explore inter-method discrepancy. 15

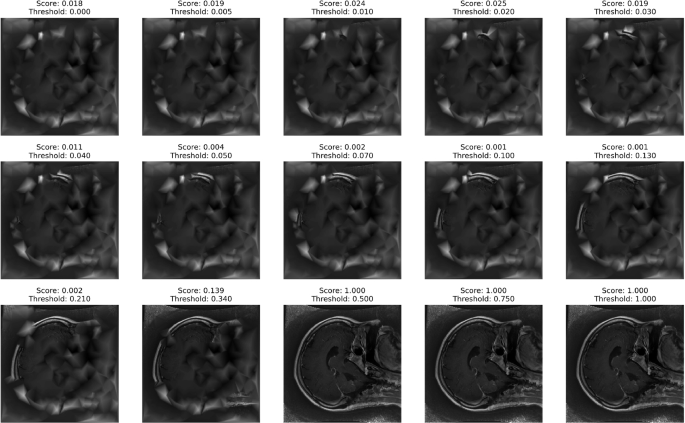

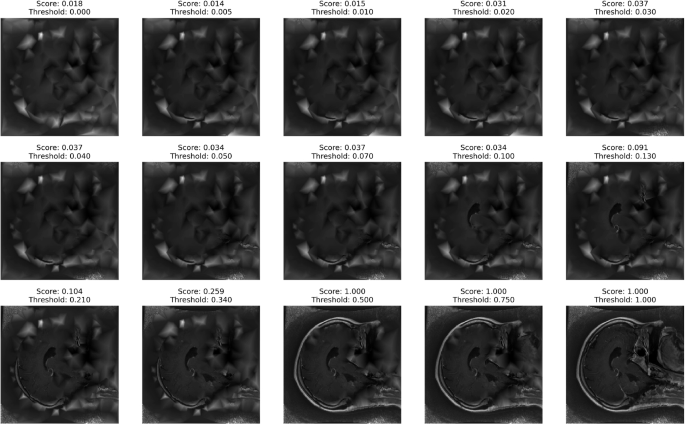

Point of application for three techniques for integrating data in mixed methods research

The most detailed description of how to carry out triangulation is the triangulation protocol, 11 which although developed for multiple qualitative methods, is relevant to mixed methods studies. This technique involves producing a “convergence coding matrix” to display findings emerging from each component of a study on the same page. This is followed by consideration of where there is agreement, partial agreement, silence, or dissonance between findings from different components. This technique for triangulation is the only one to include silence—where a theme or finding arises from one data set and not another. Silence might be expected because of the strengths of different methods to examine different aspects of a phenomenon, but surprise silences might also arise that help to increase understanding or lead to further investigations.
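A convergence coding matrix of the kind described above can be represented as a simple table of themes against components. The sketch below is only one possible layout: the themes, findings, and the `build_matrix` helper are invented for illustration, and the codes for agreement, partial agreement, and dissonance are assumed to come from the researchers' own judgement. Only silence is derived automatically, because it follows directly from a theme being absent from one data set.

```python
from enum import Enum


class Convergence(Enum):
    AGREEMENT = "agreement"
    PARTIAL_AGREEMENT = "partial agreement"
    SILENCE = "silence"
    DISSONANCE = "dissonance"


def build_matrix(qualitative, quantitative, judgements):
    """Return rows of (theme, qualitative finding, quantitative finding, code).

    Themes found in only one component are coded as silence; every other
    code is taken from the researchers' recorded judgements.
    """
    rows = []
    for theme in sorted(set(qualitative) | set(quantitative)):
        qual_finding = qualitative.get(theme)
        quant_finding = quantitative.get(theme)
        if qual_finding is None or quant_finding is None:
            code = Convergence.SILENCE
        else:
            code = judgements[theme]
        rows.append((theme, qual_finding, quant_finding, code))
    return rows


# Hypothetical findings from each component (invented for illustration).
interview_findings = {
    "waiting times": "patients describe long waits",
    "staff communication": "explanations felt rushed",
    "parking": "parking is hard to find",
}
survey_findings = {
    "waiting times": "most respondents rate waits as poor",
    "staff communication": "most respondents rate communication as good",
}
researcher_judgements = {
    "waiting times": Convergence.AGREEMENT,
    "staff communication": Convergence.DISSONANCE,
}

matrix = build_matrix(interview_findings, survey_findings, researcher_judgements)
for theme, qual, quant, code in matrix:
    print(f"{theme:20} | {qual or '-':32} | {quant or '-':45} | {code.value}")
```

Rows coded as silence or dissonance are the ones most likely to prompt further investigation.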

The triangulation protocol moves researchers from thinking about the findings related to each method, to what Farmer and colleagues call meta-themes that cut across the findings from different methods. 11 They show a worked example of triangulation protocol, but we could find no other published example. However, similar principles were used in an iterative mixed methods study to understand patient and carer satisfaction with a new primary angioplasty service. 16 Researchers conducted semistructured interviews with 16 users and carers to explore their experiences and views of the new service. These were used to develop a questionnaire for a survey of 595 patients (and 418 of their carers) receiving either the new service or usual care. Finally, 17 of the patients who expressed dissatisfaction with aftercare and rehabilitation were followed up to explore this further in semistructured interviews. A shift of thinking to meta-themes led the researchers away from reporting the findings from the interviews, survey, and follow-up interviews sequentially to consider the meta-themes of speed and efficiency, convenience of care, and discharge and after care. The survey identified that a higher percentage of carers of patients using the new service rated the convenience of visiting the hospital as poor than those using usual care. The interviews supported this concern about the new service, but also identified that the weight carers gave to this concern was low in the context of their family member’s life being saved.

Morgan describes this move as the “third effort” because it occurs after analysis of the qualitative and the quantitative components. 17 It requires time and energy that must be planned into the study timetable. It is also useful to consider who will carry out the integration process. Farmer and colleagues require two researchers to work together during triangulation, which can be particularly important in mixed methods studies if different researchers take responsibility for the qualitative and quantitative components. 11

Following a thread

Moran-Ellis and colleagues describe a different technique for integrating the findings from the qualitative and quantitative components of a study, called following a thread. 18 They state that this takes place at the analysis stage of the research process (figure). It begins with an initial analysis of each component to identify key themes and questions requiring further exploration. Then the researchers select a question or theme from one component and follow it across the other components; they call this the thread. The authors do not specify steps in this technique but offer a visual model for working between datasets. An approach similar to this has been undertaken in health services research, although the researchers did not label it as such, probably because the technique has not been used frequently in the literature (box).

An example of following a thread 19

Adamson and colleagues explored the effect of patient views on the appropriate use of services and help seeking using a survey of people registered at a general practice and semistructured interviews. The qualitative (22 interviews) and quantitative components (survey with 911 respondents) took place concurrently.

The researchers describe what they call an iterative or cyclical approach to analysis. Firstly, the preliminary findings from the interviews generated a hypothesis for testing in the survey data. A key theme from the interviews concerned the self rationing of services as a responsible way of using scarce health care. This theme was then explored in the survey data by testing the hypothesis that people’s views of the appropriate use of services would explain their help seeking behaviour. However, there was no support for this hypothesis in the quantitative analysis because the half of survey respondents who felt that health services were used inappropriately were as likely to report help seeking for a series of symptoms presented in standardised vignettes as were respondents who thought that services were not used inappropriately. The researchers then followed the thread back to the interview data to help interpret this finding.

After further analysis of the interview data the researchers understood that people considered the help seeking of other people to be inappropriate, rather than their own. They also noted that feeling anxious about symptoms was considered to be a good justification for seeking care. The researchers followed this thread back into the survey data and tested whether anxiety levels about the symptoms in the standardised vignettes predicted help seeking behaviour. This second hypothesis was supported by the survey data. Following a thread led the researchers to conclude that patients who seek health care for seemingly minor problems have exceeded their thresholds for the trade-off between not using services inappropriately and any anxiety caused by their symptoms.
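The quantitative half of following a thread amounts to turning a theme from the interviews into a comparison that can be run on the survey data. The sketch below shows the general shape of such a check. The records, field names, and the `rate_by_group` helper are invented for illustration and are not the data or the analysis used by Adamson and colleagues; a real analysis would use a formal statistical test on the full sample.

```python
def rate_by_group(records, group_key, outcome_key):
    """Proportion of records with a truthy outcome, for each value of group_key."""
    totals, positives = {}, {}
    for record in records:
        group = record[group_key]
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + bool(record[outcome_key])
    return {group: positives[group] / totals[group] for group in totals}


# Hypothetical survey records (toy data, deliberately clear-cut).
records = [
    {"thinks_services_misused": True, "anxious": True, "would_seek_help": True},
    {"thinks_services_misused": True, "anxious": False, "would_seek_help": False},
    {"thinks_services_misused": True, "anxious": True, "would_seek_help": True},
    {"thinks_services_misused": True, "anxious": False, "would_seek_help": False},
    {"thinks_services_misused": False, "anxious": True, "would_seek_help": True},
    {"thinks_services_misused": False, "anxious": False, "would_seek_help": False},
    {"thinks_services_misused": False, "anxious": True, "would_seek_help": True},
    {"thinks_services_misused": False, "anxious": False, "would_seek_help": False},
]

# Thread 1: do views about appropriate use relate to help seeking?
by_view = rate_by_group(records, "thinks_services_misused", "would_seek_help")

# Thread 2 (after returning to the interviews): does anxiety relate to help seeking?
by_anxiety = rate_by_group(records, "anxious", "would_seek_help")

print("help seeking by view of misuse:", by_view)
print("help seeking by anxiety:", by_anxiety)
```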

Mixed methods matrix

A unique aspect of some mixed methods studies is the availability of both qualitative and quantitative data on the same cases. Data from the qualitative and quantitative components can be integrated at the analysis stage of a mixed methods study (figure). For example, in-depth interviews might be carried out with a sample of survey respondents, creating a subset of cases for which there is both a completed questionnaire and a transcript. Cases may be individuals, groups, organisations, or geographical areas. 9 All the data collected on a single case can be studied together, focusing attention on cases, rather than variables or themes, within a study. The data can be examined in detail for each case, for example by comparing people’s responses to a questionnaire with their interview transcript. Alternatively, data on each case can be summarised and displayed in a matrix 8 9 20 along the lines of Miles and Huberman’s meta-matrix. 21 Within a mixed methods matrix, the rows represent the cases for which there is both qualitative and quantitative data, and the columns display different data collected on each case. This allows researchers to pay attention to surprises and paradoxes between types of data on a single case and then look for patterns across all cases 20 in a qualitative cross case analysis. 21

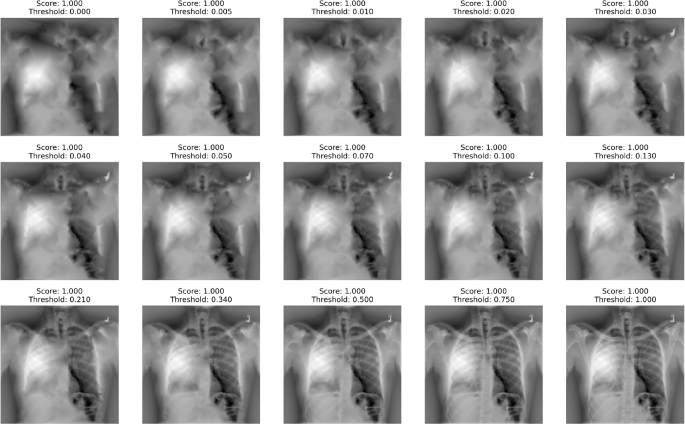

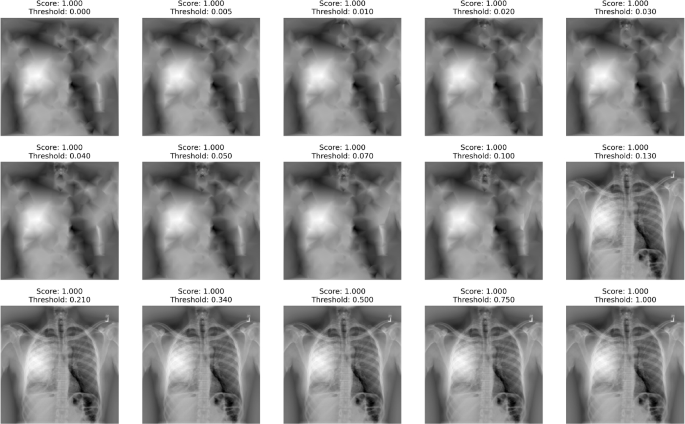

We used a mixed methods matrix to study the relation between types of team working and the extent of integration in mixed methods studies in health services research (table). 22 Quantitative data were extracted from the proposals, reports, and peer reviewed publications of 75 mixed methods studies, and these were analysed to describe the proportion of studies with integrated outputs such as mixed methods journal articles. Two key variables in the quantitative component were whether the study was assessed as attempting to integrate qualitative or quantitative data or findings and the type of publications produced. We conducted qualitative interviews with 20 researchers who had worked on some of these studies to explore how mixed methods research was practised, including how the team worked together.

Example of a mixed methods matrix for a study exploring the relationship between types of teams and integration between qualitative and quantitative components of studies* 22

The shared cases between the qualitative and quantitative components were 21 mixed methods studies (because one interviewee had worked on two studies in the quantitative component). A matrix was formed with each of the 21 studies as a row. The first column of the matrix contained the study identification, the second column indicated whether integration had occurred in that project, and the third column the score for integration of publications emerging from the study. The rows were then ordered to show the most integrated cases first. This ordering of rows helped us to see patterns across rows.

The next columns were themes from the qualitative interview with a researcher from that project. For example, the first theme was about the expertise in qualitative research within the team and whether the interviewee reported this as adequate for the study. The matrix was then used in the context of the qualitative analysis to explore the issues that affected integration. In particular, it helped to identify negative cases (cases that do not fit the conclusions the analysis is reaching) within the qualitative analysis to facilitate understanding. Interviewees reported the need for experienced qualitative researchers on mixed methods studies to ensure that the qualitative component was published, yet two cases showed that this was neither necessary nor sufficient. This pushed us to explore other factors in a research team that helped generate outputs, and integrated outputs, from a mixed methods study.
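The layout described above, one row per case with quantitative columns first and summarised themes after, can be sketched as follows. The study identifiers, scores, and theme summaries are invented for illustration and are not taken from the published table; the `CaseRow` class and `order_by_integration` helper are likewise hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class CaseRow:
    study_id: str
    integration_attempted: bool   # from the quantitative component
    publication_score: int        # from the quantitative component (higher = more integrated)
    qualitative_expertise: str    # theme summarised from the interview


# Hypothetical cases (invented for illustration).
rows = [
    CaseRow("S03", False, 0, "adequate"),
    CaseRow("S01", True, 2, "adequate"),
    CaseRow("S02", True, 1, "not adequate"),
]


def order_by_integration(rows):
    """Order rows so that the most integrated cases come first."""
    return sorted(
        rows,
        key=lambda row: (row.integration_attempted, row.publication_score),
        reverse=True,
    )


ordered = order_by_integration(rows)
for row in ordered:
    print(
        f"{row.study_id} | integrated: {row.integration_attempted!s:5} | "
        f"score: {row.publication_score} | expertise: {row.qualitative_expertise}"
    )
```

Reading down the ordered rows makes it easier to spot cases where the qualitative theme does not line up with the quantitative columns, which is how negative cases surface.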

Themes from a qualitative study can be summarised to the point where they are coded into quantitative data. In the matrix (table), the interviewee’s perception of the adequacy of qualitative expertise on the team could have been coded as adequate=1 or not=2. This is called “quantitising” of qualitative data; 23 coded data can then be analysed with data from the quantitative component. This technique has been used to great effect in healthcare research to identify the discrepancy between health improvement assessed using quantitative measures and that assessed with in-depth interviews in a randomised controlled trial. 24
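Quantitising can be sketched as a lookup from a summarised theme to a numeric code, followed by a cross-tabulation against a variable from the quantitative component. The coding adequate=1, not=2 follows the text; the case data, the `ADEQUACY_CODES` table, and the `quantitise` function are invented for illustration.

```python
from collections import Counter

# Coding scheme from the text: adequate = 1, not adequate = 2.
ADEQUACY_CODES = {"adequate": 1, "not adequate": 2}


def quantitise(label):
    """Convert a summarised qualitative theme into its numeric code."""
    return ADEQUACY_CODES[label.strip().lower()]


# Hypothetical cases: (study id, interviewee's view of expertise, integration occurred).
cases = [
    ("S01", "Adequate", True),
    ("S02", "not adequate", True),
    ("S03", "adequate", False),
    ("S04", "Not adequate", False),
]

# Cross-tabulate the quantitised theme against the quantitative variable.
crosstab = Counter(
    (quantitise(label), integrated) for _, label, integrated in cases
)

for (code, integrated), count in sorted(crosstab.items()):
    print(f"expertise code {code}, integrated={integrated}: {count} case(s)")
```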

We have presented three techniques for integration in mixed methods research in the hope that they will inspire researchers to explore what can be learnt from bringing together data from the qualitative and quantitative components of their studies. Using these techniques may give the process of integration credibility rather than leaving researchers feeling that they have “made things up.” It may also encourage researchers to describe their approaches to integration, allowing them to be transparent and helping them to develop, critique, and improve on these techniques. Most importantly, we believe it may help researchers to generate further understanding from their research.

We have presented integration as unproblematic, but it is not. It may be easier for single researchers to use these techniques than a large research team. Large teams will need to pay attention to team dynamics, considering who will take responsibility for integration and who will be taking part in the process. In addition, we have taken a technical stance here rather than paying attention to different philosophical beliefs that may shape approaches to integration. We consider that these techniques would work in the context of a pragmatic or subtle realist stance adopted by some mixed methods researchers. 25 Finally, it is important to remember that these techniques are aids to integration and are helpful only when applied with expertise.

Summary points

- Health researchers are increasingly using designs which combine qualitative and quantitative methods

- However, there is often lack of integration between methods

- Three techniques are described that can help researchers to integrate data from different components of a study: triangulation protocol, following a thread, and the mixed methods matrix

- Use of these methods will allow researchers to learn more from the information they have collected

Cite this as: BMJ 2010;341:c4587

Funding: Medical Research Council grant reference G106/1116

Competing interests: All authors have completed the unified competing interest form at www.icmje.org/coi_disclosure.pdf (available on request from the corresponding author) and declare financial support for the submitted work from the Medical Research Council; no financial relationships with commercial entities that might have an interest in the submitted work; no spouses, partners, or children with relationships with commercial entities that might have an interest in the submitted work; and no non-financial interests that may be relevant to the submitted work.

Contributors: AOC wrote the paper. JN and EM contributed to drafts and all authors agreed the final version. AOC is guarantor.

Provenance and peer review: Not commissioned; externally peer reviewed.

- 1 Lingard L, Albert M, Levinson W. Grounded theory, mixed methods and action research. BMJ 2008;337:a567.

- 2 Creswell JW, Fetters MD, Ivankova NV. Designing a mixed methods study in primary care. Ann Fam Med 2004;2:7-12.

- 3 Lewin S, Glenton C, Oxman AD. Use of qualitative methods alongside randomised controlled trials of complex healthcare interventions: methodological study. BMJ 2009;339:b3496.

- 4 O’Cathain A, Murphy E, Nicholl J. Integration and publications as indicators of ‘yield’ from mixed methods studies. J Mix Methods Res 2007;1:147-63.

- 5 Barbour RS. The case for combining qualitative and quantitative approaches in health services research. J Health Serv Res Policy 1999;4:39-43.

- 6 O’Cathain A, Nicholl J, Murphy E. Structural issues affecting mixed methods studies in health research: a qualitative study. BMC Med Res Methodol 2009;9:82.

- 7 Bryman A. Barriers to integrating quantitative and qualitative research. J Mix Methods Res 2007;1:8-22.

- 8 Creswell JW, Plano-Clark V. Designing and conducting mixed methods research. Sage, 2007.

- 9 Bazeley P. Analysing mixed methods data. In: Andrew S, Halcomb EJ, eds. Mixed methods research for nursing and the health sciences. Wiley-Blackwell, 2009:84-118.

- 10 Sandelowski M. Triangles and crystals: on the geometry of qualitative research. Res Nurs Health 1995;18:569-74.

- 11 Farmer T, Robinson K, Elliott SJ, Eyles J. Developing and implementing a triangulation protocol for qualitative health research. Qual Health Res 2006;16:377-94.

- 12 Foster RL. Addressing the epistemologic and practical issues in multimethod research: a procedure for conceptual triangulation. Adv Nurs Sci 1997;20:1-12.

- 13 Erzerberger C, Prein G. Triangulation: validity and empirically based hypothesis construction. Qual Quant 1997;31:141-54.

- 14 Fielding NG, Fielding JL. Linking data. Sage, 1986.

- 15 Moffatt S, White M, Mackintosh J, Howel D. Using quantitative and qualitative data in health services research—what happens when mixed method findings conflict? BMC Health Serv Res 2006;6:28.

- 16 Sampson FC, O’Cathain A, Goodacre S. Is primary angioplasty an acceptable alternative to thrombolysis? Quantitative and qualitative study of patient and carer satisfaction. Health Expectations (forthcoming).

- 17 Morgan DL. Practical strategies for combining qualitative and quantitative methods: applications to health research. Qual Health Res 1998;8:362-76.

- 18 Moran-Ellis J, Alexander VD, Cronin A, Dickinson M, Fielding J, Sleney J, et al. Triangulation and integration: processes, claims and implications. Qualitative Research 2006;6:45-59.

- 19 Adamson J, Ben-Shlomo Y, Chaturvedi N, Donovan J. Exploring the impact of patient views on ‘appropriate’ use of services and help seeking: a mixed method study. Br J Gen Pract 2009;59:496-502.

- 20 Wendler MC. Triangulation using a meta-matrix. J Adv Nurs 2001;35:521-5.

- 21 Miles M, Huberman A. Qualitative data analysis: an expanded sourcebook. Sage, 1994.

- 22 O’Cathain A, Murphy E, Nicholl J. Multidisciplinary, interdisciplinary or dysfunctional? Team working in mixed methods research. Qual Health Res 2008;18:1574-85.

- 23 Sandelowski M. Combining qualitative and quantitative sampling, data collection, and analysis techniques in mixed-method studies. Res Nurs Health 2000;23:246-55.

- 24 Campbell R, Quilty B, Dieppe P. Discrepancies between patients’ assessments of outcome: qualitative study nested within a randomised controlled trial. BMJ 2003;326:252-3.

- 25 Mays N, Pope C. Assessing quality in qualitative research. BMJ 2000;320:50-2.

Research methods--quantitative, qualitative, and more: overview.

About Research Methods

This guide provides an overview of research methods, how to choose and use them, and supports and resources at UC Berkeley.

As Patten and Newhart note in the book Understanding Research Methods, "Research methods are the building blocks of the scientific enterprise. They are the 'how' for building systematic knowledge. The accumulation of knowledge through research is by its nature a collective endeavor. Each well-designed study provides evidence that may support, amend, refute, or deepen the understanding of existing knowledge...Decisions are important throughout the practice of research and are designed to help researchers collect evidence that includes the full spectrum of the phenomenon under study, to maintain logical rules, and to mitigate or account for possible sources of bias. In many ways, learning research methods is learning how to see and make these decisions."

The choice of methods varies by discipline, by the kind of phenomenon being studied and the data being used to study it, by the technology available, and more. This guide is an introduction, but if you don't see what you need here, always contact your subject librarian, and/or take a look to see if there's a library research guide that will answer your question.

Suggestions for changes and additions to this guide are welcome!

START HERE: SAGE Research Methods

Without question, the most comprehensive resource available from the library is SAGE Research Methods. HERE IS THE ONLINE GUIDE to this one-stop shopping collection, and some helpful links are below:

- SAGE Research Methods

- Little Green Books (Quantitative Methods)

- Little Blue Books (Qualitative Methods)

- Dictionaries and Encyclopedias

- Case studies of real research projects

- Sample datasets for hands-on practice

- Streaming video--see methods come to life

- Methodspace--a community for researchers

- SAGE Research Methods Course Mapping

Library Data Services at UC Berkeley

Library Data Services Program and Digital Scholarship Services

The LDSP offers a variety of services and tools! From this link, check out pages for each of the following topics: discovering data, managing data, collecting data, GIS data, text data mining, publishing data, digital scholarship, open science, and the Research Data Management Program.

Be sure also to check out the visual guide to where to seek assistance on campus with any research question you may have!

Library GIS Services

Other Data Services at Berkeley

- D-Lab: Supports Berkeley faculty, staff, and graduate students with research in data-intensive social science, including a wide range of training and workshop offerings.

- Dryad: A simple self-service tool for researchers to use in publishing their datasets. It provides tools for the effective publication of and access to research data.

- Geospatial Innovation Facility (GIF): Provides leadership and training across a broad array of integrated mapping technologies on campus.

- Research Data Management: A UC Berkeley guide and consulting service for research data management issues.

General Research Methods Resources

Here are some general resources for assistance:

- Assistance from ICPSR (must create an account to access): Getting Help with Data, and Resources for Students

- Wiley Stats Ref for background information on statistics topics

- Survey Documentation and Analysis (SDA). Program for easy web-based analysis of survey data.

Consultants

- D-Lab/Data Science Discovery Consultants Request help with your research project from peer consultants.

- Research data (RDM) consulting Meet with RDM consultants before designing the data security, storage, and sharing aspects of your qualitative project.

- Statistics Department Consulting Services A service in which advanced graduate students, under faculty supervision, are available to consult during specified hours in the Fall and Spring semesters.

Related Resources

- IRB / CPHS Qualitative research projects with human subjects often require that you go through an ethics review.

- OURS (Office of Undergraduate Research and Scholarships) OURS supports undergraduates who want to embark on research projects and assistantships. In particular, check out their "Getting Started in Research" workshops

- Sponsored Projects Sponsored projects works with researchers applying for major external grants.

- Last Updated: Apr 25, 2024 11:09 AM

- URL: https://guides.lib.berkeley.edu/researchmethods

Qualitative vs Quantitative Research | Examples & Methods

Published on 4 April 2022 by Raimo Streefkerk. Revised on 8 May 2023.

When collecting and analysing data, quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings. Both are important for gaining different kinds of knowledge.

Common quantitative methods include experiments, observations recorded as numbers, and surveys with closed-ended questions.

Qualitative research

Qualitative research is expressed in words. It is used to understand concepts, thoughts or experiences. This type of research enables you to gather in-depth insights on topics that are not well understood.

Table of contents

- The differences between quantitative and qualitative research

- Data collection methods

- When to use qualitative vs quantitative research

- How to analyse qualitative and quantitative data

- Frequently asked questions about qualitative and quantitative research

Quantitative and qualitative research use different research methods to collect and analyse data, and they allow you to answer different kinds of research questions.

Quantitative and qualitative data can be collected using various methods. It is important to use a data collection method that will help answer your research question(s).

Many data collection methods can be either qualitative or quantitative. For example, in surveys, observations or case studies, your data can be represented as numbers (e.g. using rating scales or counting frequencies) or as words (e.g. with open-ended questions or descriptions of what you observe).

However, some methods are more commonly used in one type or the other.

Quantitative data collection methods

- Surveys: List of closed or multiple choice questions that is distributed to a sample (online, in person, or over the phone).

- Experiments: Situation in which variables are controlled and manipulated to establish cause-and-effect relationships.

- Observations: Observing subjects in a natural environment where variables can’t be controlled.

Qualitative data collection methods

- Interviews: Asking open-ended questions verbally to respondents.

- Focus groups: Discussion among a group of people about a topic to gather opinions that can be used for further research.

- Ethnography: Participating in a community or organisation for an extended period of time to closely observe culture and behaviour.

- Literature review: Survey of published works by other authors.

A rule of thumb for deciding whether to use qualitative or quantitative data is:

- Use quantitative research if you want to confirm or test something (a theory or hypothesis)

- Use qualitative research if you want to understand something (concepts, thoughts, experiences)

For most research topics you can choose a qualitative, quantitative or mixed methods approach. Which type you choose depends on, among other things, whether you’re taking an inductive vs deductive research approach; your research question(s); whether you’re doing experimental, correlational, or descriptive research; and practical considerations such as time, money, availability of data, and access to respondents.

Quantitative research approach

You survey 300 students at your university and ask them questions such as: ‘on a scale from 1-5, how satisfied are you with your professors?’

You can perform statistical analysis on the data and draw conclusions such as: ‘on average students rated their professors 4.4’.

Qualitative research approach

You conduct in-depth interviews with 15 students and ask them open-ended questions such as: ‘How satisfied are you with your studies?’, ‘What is the most positive aspect of your study program?’ and ‘What can be done to improve the study program?’

Based on the answers you get you can ask follow-up questions to clarify things. You transcribe all interviews using transcription software and try to find commonalities and patterns.

Mixed methods approach

You conduct interviews to find out how satisfied students are with their studies. Through open-ended questions you learn things you never thought about before and gain new insights. Later, you use a survey to test these insights on a larger scale.

It’s also possible to start with a survey to find out the overall trends, followed by interviews to better understand the reasons behind the trends.

Qualitative or quantitative data by itself can’t prove or demonstrate anything, but has to be analysed to show its meaning in relation to the research questions. The method of analysis differs for each type of data.

Analysing quantitative data

Quantitative data is based on numbers. Simple maths or more advanced statistical analysis is used to discover commonalities or patterns in the data. The results are often reported in graphs and tables.

Applications such as Excel, SPSS, or R can be used to calculate things like:

- Average scores

- The number of times a particular answer was given

- The correlation or causation between two or more variables

- The reliability and validity of the results
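As a rough illustration of the first three calculations in this list, the sketch below uses only Python's standard library. The ratings and study-hours values are invented for the example, and `pearson_r` is a hypothetical helper name rather than a function from any of the applications named above.

```python
from collections import Counter
from math import sqrt
from statistics import mean

# Hypothetical survey data: satisfaction ratings (1-5) and weekly study hours
ratings = [4, 5, 3, 4, 5, 2, 4, 5]
study_hours = [10, 14, 6, 9, 15, 4, 11, 13]

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

average_score = mean(ratings)          # average score
answer_counts = Counter(ratings)       # how often each answer was given
r = pearson_r(ratings, study_hours)    # strength of the linear association

print(f"Average rating: {average_score}")
print(f"Answer counts: {dict(answer_counts)}")
print(f"Correlation: {r:.2f}")
```

A correlation coefficient only measures how closely two variables move together. On its own it does not show that one causes the other, which usually takes an experimental design.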

Analysing qualitative data

Qualitative data is more difficult to analyse than quantitative data. It consists of text, images or videos instead of numbers.

Some common approaches to analysing qualitative data include:

- Qualitative content analysis: Tracking the occurrence, position and meaning of words or phrases

- Thematic analysis: Closely examining the data to identify the main themes and patterns

- Discourse analysis: Studying how communication works in social contexts
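The "occurrence of words" part of content analysis can be sketched in a few lines. This is a minimal example under stated assumptions: the interview excerpts and the stop-word list are invented, and `word_frequencies` is a hypothetical helper. Counting words covers only occurrence; position and meaning still need a human reader.

```python
import re
from collections import Counter

# Hypothetical interview excerpts (invented for illustration)
responses = [
    "The workload is heavy but the lecturers are supportive.",
    "Supportive lecturers make the heavy workload manageable.",
    "I wish the workload was spread more evenly.",
]

# Very small, hand-picked stop-word list; a real study would use a fuller one
STOP_WORDS = {"the", "is", "but", "are", "i", "was", "more", "make"}

def word_frequencies(texts):
    """Count how often each non-stop-word occurs across all texts."""
    counts = Counter()
    for text in texts:
        words = re.findall(r"[a-z']+", text.lower())
        counts.update(w for w in words if w not in STOP_WORDS)
    return counts

freqs = word_frequencies(responses)
print(freqs.most_common(3))
```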

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

In mixed methods research, you use both qualitative and quantitative data collection and analysis methods to answer your research question.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Data collection is the systematic process by which observations or measurements are gathered in research. It is used in many different contexts by academics, governments, businesses, and other organisations.

There are various approaches to qualitative data analysis, but they all share five steps in common:

- Prepare and organise your data.

- Review and explore your data.

- Develop a data coding system.

- Assign codes to the data.

- Identify recurring themes.

The specifics of each step depend on the focus of the analysis. Some common approaches include textual analysis, thematic analysis, and discourse analysis.
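To make the five steps more concrete, here is a toy sketch of steps 3 to 5. Everything in it is an assumption for illustration: the excerpts are invented, the `codebook` is a hypothetical two-code system, and keyword matching is a crude stand-in for the judgement a human coder would apply.

```python
from collections import Counter

# Step 1: prepared data (hypothetical interview excerpts)
excerpts = [
    "My tutor always answers emails quickly.",
    "Tuition fees are too high for what we get.",
    "I can't afford the fees without a part-time job.",
    "The tutor feedback helped me improve my essays.",
]

# Step 3: a toy coding system mapping each code to trigger keywords
codebook = {
    "staff_support": ["tutor", "feedback"],
    "cost": ["fees", "afford"],
}

def assign_codes(text, codebook):
    """Step 4: return every code whose keywords appear in the text."""
    lowered = text.lower()
    return [code for code, keywords in codebook.items()
            if any(k in lowered for k in keywords)]

coded = {text: assign_codes(text, codebook) for text in excerpts}

# Step 5: codes that recur across excerpts point to candidate themes
theme_counts = Counter(code for codes in coded.values() for code in codes)
print(theme_counts)
```

In practice the coding system is usually revised as the data are reviewed, so the codebook would change between passes instead of being fixed up front.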

Cite this Scribbr article

Streefkerk, R. (2023, May 08). Qualitative vs Quantitative Research | Examples & Methods. Scribbr. Retrieved 24 June 2024, from https://www.scribbr.co.uk/research-methods/quantitative-qualitative-research/

Qualitative and Quantitative Research: Differences and Similarities

Qualitative research and quantitative research are two complementary approaches for understanding the world around us.

Qualitative research collects non-numerical data, and the results are typically presented as written descriptions, photographs, videos, and/or sound recordings.

In contrast, quantitative research collects numerical data, and the results are typically presented in tables, graphs, and charts.

Debates about whether to use qualitative or quantitative research methods are common in the social sciences (e.g. anthropology, archaeology, economics, geography, history, law, linguistics, politics, psychology, sociology), which aim to understand a broad range of human conditions. Qualitative observations may be used to gain an understanding of unique situations, which may lead to quantitative research that aims to find commonalities.

Within the natural and physical sciences (e.g. physics, chemistry, geology, biology), qualitative observations often lead to a plethora of quantitative studies. For example, unusual observations through a microscope or telescope can immediately lead to counting and measuring. In other situations, meaningful numbers cannot immediately be obtained, and the qualitative research must stand on its own (e.g. "The patient presented with an abnormally enlarged spleen (Figure 1), and complained of pain in the left shoulder.").

For both qualitative and quantitative research, the researcher's assumptions shape the direction of the study and thereby influence the results that can be obtained. Let's consider some prominent examples of qualitative and quantitative research, and how these two methods can complement each other.

Qualitative research example

In 1960, Jane Goodall started her decades-long study of chimpanzees in the wild at Gombe Stream National Park in Tanzania. Her work is an example of qualitative research that has fundamentally changed our understanding of non-human primates, and has influenced our understanding of other animals, their abilities, and their social interactions.

Dr. Goodall was by no means the first person to study non-human primates, but she took a highly unusual approach in her research. For example, she named individual chimpanzees instead of numbering them, and used terms such as "childhood", "adolescence", "motivation", "excitement", and "mood". She also described the distinct "personalities" of individual chimpanzees. Dr. Goodall was heavily criticized for describing chimpanzees in ways that are regularly used to describe humans, which perfectly illustrates how the assumptions of the researcher can heavily influence their work.

The quality of qualitative research is largely determined by the researcher's ability, knowledge, creativity, and interpretation of the results. One of the hallmarks of good qualitative research is that nothing is predefined or taken for granted, and that the study subjects teach the researcher about their lives. As a result, qualitative research studies evolve over time, and the focus or techniques used can shift as the study progresses.

Qualitative research methods

Dr. Goodall immersed herself in the chimpanzees' natural surroundings, and used direct observation to learn about their daily life. She used photographs, videos, sound recordings, and written descriptions to present her data. These are all well-established methods of qualitative research, with direct observation within the natural setting considered a gold standard. These methods are time-intensive for the researcher (and therefore monetarily expensive) and limit the number of individuals that can be studied at one time.

When studying humans, a wider variety of research methods are available to understand how people perceive and navigate their world—past or present. These techniques include: in-depth interviews (e.g. Can you discuss your experience of growing up in the Deep South in the 1950s?), open-ended survey questions (e.g. What do you enjoy most about being part of the Church of Latter Day Saints?), focus group discussions, researcher participation (e.g. in military training), review of written documents (e.g. social media accounts, diaries, school records, etc), and analysis of cultural records (e.g. anything left behind including trash, clothing, buildings, etc).

Qualitative research can lead to quantitative research

Qualitative research is largely exploratory. The goal is to gain a better understanding of an unknown situation. Qualitative research in humans may lead to a better understanding of underlying reasons, opinions, motivations, experiences, etc. The information generated through qualitative research can provide new hypotheses to test through quantitative research. Quantitative research studies are typically more focused and less exploratory, involve a larger sample size, and by definition produce numerical data.

Dr. Goodall's qualitative research clearly established periods of childhood and adolescence in chimpanzees. Quantitative studies could better characterize these time periods, for example by recording the amount of time individual chimpanzees spend with their mothers, with peers, or alone each day during childhood compared to adolescence.

For studies involving humans, quantitative data might be collected through a questionnaire with a limited number of answers (e.g. If you were being bullied, what is the likelihood that you would tell at least one parent? A) Very likely, B) Somewhat likely, C) Somewhat unlikely, D) Unlikely).

Quantitative research example

One of the most influential examples of quantitative research began with a simple qualitative observation: Some peas are round, and other peas are wrinkled. Gregor Mendel was not the first to make this observation, but he was the first to carry out rigorous quantitative experiments to better understand this characteristic of garden peas.

As described in his 1865 research paper, Mendel carried out carefully controlled genetic crosses and counted thousands of resulting peas. He discovered that the ratio of round peas to wrinkled peas matched the ratio expected if pea shape were determined by two copies of a gene for pea shape, one inherited from each parent. These experiments and calculations became the foundation of modern genetics, and Mendel's ratios became the default hypothesis for experiments involving thousands of different genes in hundreds of different organisms.
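The comparison between counted peas and an expected ratio can be sketched as a chi-square goodness-of-fit calculation. Several things here are assumptions not stated in the text above: the counts are the figures commonly quoted for Mendel's seed-shape cross and should be checked against the original paper, the expected ratio is taken to be 3:1 (dominant to recessive in the second generation), and the chi-square test itself was developed decades after Mendel, so this is a modern check, not his method.

```python
# Counts commonly quoted for Mendel's seed-shape cross (assumption: verify
# against the original paper before reuse)
observed = {"round": 5474, "wrinkled": 1850}

# Expected proportions under a 3:1 dominant-to-recessive ratio (assumption)
expected_share = {"round": 0.75, "wrinkled": 0.25}

total = sum(observed.values())
chi_square = sum(
    (observed[k] - expected_share[k] * total) ** 2 / (expected_share[k] * total)
    for k in observed
)

# Critical value of the chi-square distribution, 1 degree of freedom, alpha = 0.05
CRITICAL_VALUE = 3.841

ratio = observed["round"] / observed["wrinkled"]
consistent_with_3_to_1 = chi_square < CRITICAL_VALUE

print(f"Observed ratio: {ratio:.2f}:1")
print(f"Chi-square statistic: {chi_square:.3f}")
print(f"Consistent with 3:1 at the 5% level: {consistent_with_3_to_1}")
```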

The quality of quantitative research is largely determined by the researcher's ability to design a feasible experiment that will provide clear evidence to support or refute the working hypothesis. The hallmarks of good quantitative research include: a study that can be replicated by an independent group and produce similar results, a sample population that is representative of the population under study, and a sample size that is large enough to reveal any expected statistical significance.

Quantitative research methods

The basic methods of quantitative research involve measuring or counting things (size, weight, distance, offspring, light intensity, participants, number of times a specific phrase is used, etc). In the social sciences especially, responses are often split into somewhat arbitrary categories (e.g. How much time do you spend on social media during a typical weekday? A) 0-15 min, B) 15-30 min, C) 30-60 min, D) 1-2 hrs, E) more than 2 hrs).

These quantitative data can be displayed in a table, graph, or chart, and grouped in ways that highlight patterns and relationships. The quantitative data should also be subjected to mathematical and statistical analysis. To reveal overall trends, the average (or most common survey answer) and standard deviation can be determined for different groups (e.g. with treatment A and without treatment B).
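A minimal sketch of the per-group summary described above, using Python's standard library. The measurements are invented for illustration, and `stdev` here is the sample standard deviation (dividing by n - 1).

```python
from statistics import mean, stdev

# Hypothetical measurements for two groups (values invented for illustration)
groups = {
    "with treatment": [12.1, 13.4, 11.8, 14.0, 12.7],
    "without treatment": [10.2, 9.8, 11.1, 10.5, 9.9],
}

summary = {
    name: {"mean": mean(values), "sd": stdev(values), "n": len(values)}
    for name, values in groups.items()
}

for name, stats in summary.items():
    print(f"{name}: mean={stats['mean']:.2f}, sd={stats['sd']:.2f}, n={stats['n']}")
```

A difference between the two means is only a starting point. Whether it is larger than chance variation would produce is a separate question for a significance test.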

Typically, the most important result from a quantitative experiment is the test of statistical significance. There are many different methods for determining statistical significance (e.g. t-test, chi square test, ANOVA, etc.), and the appropriate method will depend on the specific experiment.

Statistical significance provides an answer to the question: What is the probability that the difference observed between two groups is due to chance alone, and the two groups are actually the same? For example, your initial results might show that 32% of Friday grocery shoppers buy alcohol, while only 16% of Monday grocery shoppers buy alcohol. If this result reflects a true difference between Friday shoppers and Monday shoppers, grocery store managers might want to offer Friday specials to increase sales.

After the appropriate statistical test is conducted (which incorporates sample size and other variables), the probability that the observed difference is due to chance alone might be more than 5%, or less than 5%. If the probability is less than 5%, the convention is that the result is considered statistically significant. (The researcher is also likely to cheer and have at least a small celebration.) Otherwise, the result is considered not statistically significant. (If the value is close to 5%, the researcher may try to group the data in different ways to achieve statistical significance, for example by comparing alcohol sales after 5pm on Friday and Monday.) While it is important to reveal differences that may not be immediately obvious, the desire to manipulate information until it becomes statistically significant can also contribute to bias in research.
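The grocery example can be worked through with a two-proportion z-test, chosen here for brevity; it is one reasonable option, not the only appropriate test. The text gives only the percentages, so the sample sizes below are assumptions, and `two_proportion_z_test` is a hypothetical helper name.

```python
from math import erfc, sqrt

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two proportions."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail area of the normal curve
    return z, p_value

# Assumed sample sizes: the text gives only the percentages (32% vs 16%)
z_large, p_large = two_proportion_z_test(32, 100, 16, 100)
z_small, p_small = two_proportion_z_test(8, 25, 4, 25)

print(f"100 shoppers per day: z={z_large:.2f}, p={p_large:.4f}")
print(f"25 shoppers per day:  z={z_small:.2f}, p={p_small:.4f}")
```

Under these assumed sample sizes, the same 32% versus 16% split falls below the 5% threshold with 100 shoppers per day but not with 25, which is why the test has to incorporate sample size.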

So how often do results from two groups that are actually the same give a probability of less than 5%? A bit less than 5% of the time (by definition). This is one of the reasons why it is so important that quantitative research can be replicated by different groups.
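This claim can be checked with a small simulation: draw two groups from the same underlying rate many times and count how often a test reports p < 0.05. The sketch below is illustrative only. The true rate, group size, number of experiments, and seed are arbitrary assumptions, and the two-proportion z-test is again just one possible choice of test.

```python
import random
from math import erfc, sqrt

def p_value_two_proportions(x_a, x_b, n):
    """Two-sided p-value from a two-proportion z-test with equal group sizes."""
    pooled = (x_a + x_b) / (2 * n)
    se = sqrt(pooled * (1 - pooled) * 2 / n)
    if se == 0:  # both groups all-yes or all-no: no evidence of a difference
        return 1.0
    z = (x_a - x_b) / n / se
    return erfc(abs(z) / sqrt(2))

def false_positive_rate(true_rate=0.24, n=200, experiments=2000, seed=1):
    """Share of experiments with p < 0.05 when both groups share one true rate."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(experiments):
        x_a = sum(rng.random() < true_rate for _ in range(n))
        x_b = sum(rng.random() < true_rate for _ in range(n))
        if p_value_two_proportions(x_a, x_b, n) < 0.05:
            hits += 1
    return hits / experiments

rate = false_positive_rate()
print(f"False-positive rate: {rate:.3f}")
```

The printed rate should land in the neighbourhood of 5%, with some run-to-run variation if the seed is changed.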

Which research method should I choose?

Choose the research methods that will allow you to produce the best results for a meaningful question, while acknowledging any unknowns and controlling for any bias. In many situations, this will involve a mixed methods approach. Qualitative research may allow you to learn about a poorly understood topic, and then quantitative research may allow you to obtain results that can be subjected to rigorous statistical tests to find true and meaningful patterns. Many different approaches are required to understand the complex world around us.

The differences between qualitative and quantitative research methods

Last updated: 15 January 2023

Two approaches to systematic information gathering are qualitative and quantitative research. Each has its place in data collection, but they approach the task from different directions. Here's what you need to know about qualitative and quantitative research.

- The differences between quantitative and qualitative research

The main difference between these two approaches is the type of data you collect and how you interpret it. Qualitative research focuses on word-based data, aiming to define and understand ideas. This study allows researchers to collect information in an open-ended way through interviews, ethnography, and observation. You’ll study this information to determine patterns and the interplay of variables.

On the other hand, quantitative research focuses on numerical data and using it to determine relationships between variables. Researchers use easily quantifiable forms of data collection, such as experiments that measure the effect of one or several variables on one another.

- Qualitative vs. quantitative data collection

Focusing on different types of data means that the data collection methods vary.

Quantitative data collection methods

As previously stated, quantitative data collection focuses on numbers. You gather information through experiments, database reports, or surveys with multiple-choice answers. The goal is to have data you can use in numerical analysis to determine relationships.

Qualitative data collection methods

On the other hand, the data collected for qualitative research is an exploration of a subject's attributes, thoughts, actions, or viewpoints. Researchers will typically conduct interviews, hold focus groups, or observe behavior in a natural setting to assemble this information. Other options include studying personal accounts or cultural records.

- Qualitative vs. quantitative outcomes

The two approaches naturally produce different types of outcomes. Qualitative research gains a better understanding of the reason something happens. For example, researchers may comb through feedback and statements to ascertain the reasoning behind certain behaviors or actions.

On the other hand, quantitative research focuses on the numerical analysis of data, which may show cause-and-effect relationships. Put another way, qualitative research investigates why something happens, while quantitative research looks at what happens.

- How to analyze qualitative and quantitative data

Because the two research methods focus on different types of information, analyzing the data you've collected will look different, depending on your approach.

Analyzing quantitative data

As this data is often numerical, you’ll likely use statistical analysis to identify patterns. Researchers may use computer programs to generate data such as averages or rate changes, illustrating the results in tables or graphs.

Analyzing qualitative data

Qualitative data is more complex and time-consuming to process as it may include written texts, videos, or images to study. Finding patterns in thinking, actions, and beliefs is more nuanced and subject to interpretation.

Researchers may use techniques such as thematic analysis, combing through the data to identify core themes or patterns. Another tool is discourse analysis, which studies how communication functions in different contexts.

- When to use qualitative vs. quantitative research

Choosing between the two approaches comes down to understanding what your goal is with the research.

Qualitative research approach

Qualitative research is useful for understanding a concept, such as what people think about certain experiences or how cultural beliefs affect perceptions of events. It can help you formulate a hypothesis or clarify general questions about the topic.

Quantitative research approach

On the other hand, quantitative research verifies or tests a hypothesis you've developed, or you can use it to find answers to those questions.

Mixed methods approach

Often, researchers use elements of both types of research to provide complex and targeted information. This may look like a survey with multiple-choice and open-ended questions.

- Benefits and limitations

Of course, each type of research has drawbacks and strengths. It's essential to be aware of the pros and cons.

Qualitative studies: Pros and cons

This approach lets you consider your subject creatively and examine big-picture questions. It can advance your global understanding of topics that are challenging to quantify.

On the other hand, the wide-open possibilities of qualitative research can make it tricky to focus effectively on your subject of inquiry. It makes it easier for researchers to skew the data with social biases and personal assumptions. There’s also the tendency for people to behave differently under observation.

It can also be more difficult to get a large sample size because it's generally more complex and expensive to conduct qualitative research. The process usually takes longer, as well.

Quantitative studies: Pros and cons

The quantitative methodology produces data you can communicate and present without bias. The methods are direct and generally easier to reproduce on a larger scale, enabling researchers to get accurate results. It can be instrumental in pinning down precise facts about a topic.

It is also a restrictive form of inquiry. Researchers cannot add context to this type of data collection or expand their focus in a different direction within a single study. They must be alert for biases. Quantitative research is more susceptible to selection bias and omitting or incorrectly measuring variables.

- How to balance qualitative and quantitative research

Although people tend to gravitate to one form of inquiry over another, each has its place in studying a subject. Both approaches can identify patterns illustrating the connection between multiple elements, and they can each advance your understanding of subjects in important ways.

Understanding how each option will serve you will help you decide how and when to use each. Generally, qualitative research can help you develop and refine questions, while quantitative research helps you get targeted answers to those questions. Which element do you need to advance your study of the subject? Can both of them hone your knowledge?

Open-ended vs. close-ended questions

One way to use techniques from both approaches is with open-ended and close-ended questions in surveys. Because quantitative analysis requires defined sets of data that you can represent numerically, the questions must be close-ended. On the other hand, qualitative inquiry is naturally open-ended, allowing room for complex ideas.

An example of this is a survey on the impact of inflation. You could include both multiple-choice questions and open-response questions:

1. How do you compensate for higher prices at the grocery store? (Select all that apply)

A. Purchase fewer items

B. Opt for less expensive choices

C. Take money from other parts of the budget

D. Use a food bank or other charity to fill the gaps

E. Make more food from scratch

2. How do rising prices affect your grocery shopping habits? (Write your answer)
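One way to handle responses to a mixed survey like this is to tally the close-ended answers and keep the open-ended answers as text for later reading. The sketch below assumes a simple list-of-dictionaries layout and three invented respondents; the field names `q1` and `q2` are hypothetical.

```python
from collections import Counter

# Hypothetical responses: question 1 is "select all that apply" (letters A-E),
# question 2 is free text
responses = [
    {"q1": ["A", "B"], "q2": "I buy store brands and skip snacks."},
    {"q1": ["B", "E"], "q2": "We cook from scratch far more often now."},
    {"q1": ["B"], "q2": "I compare prices across two shops every week."},
]

# Quantitative side: tally how many respondents chose each option
option_counts = Counter(choice for r in responses for choice in r["q1"])
share_choosing_b = option_counts["B"] / len(responses)

# Qualitative side: keep the written answers intact for later thematic analysis
open_answers = [r["q2"] for r in responses]

print(option_counts)
print(f"Share choosing B: {share_choosing_b:.0%}")
print(open_answers)
```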

We need qualitative and quantitative forms of research to advance our understanding of the world. Neither is the "right" way to go, but one may be better for you depending on your needs.

Qualitative and Quantitative Methods in Research

- Living reference work entry

- First Online: 10 August 2022

- Christina Mazzola Nicols

1 Definition

Qualitative research: Research that aims to gather an in-depth understanding of human behavior and the factors contributing to that behavior. Frequent methods of qualitative data collection include observation, in-depth interviews, and focus groups. Words, pictures, or objects comprise the resulting data.

Observation: A qualitative research method used to understand behaviors in a natural setting. The researcher relies on their observations of the subject to collect and analyze data.

In-depth interview: A qualitative method in which an interviewer directs a series of questions to the person being interviewed, typically either in person or by telephone. As an interview progresses, questions tend to move from the general to the specific.

Focus groups: Interviews conducted in small groups of participants instead of individuals. Typically, a trained moderator leads a focused discussion among eight to ten participants over the course of 1–2 hours. Focus groups can be conducted in...

Author information

Authors and affiliations.

Hager Sharp, Washington, DC, USA

Christina Mazzola Nicols

Corresponding author

Correspondence to Christina Mazzola Nicols .

Section Editor information

Milken Institute School of Public Health, George Washington University, Washington, D. C., USA

American University of Beirut, Beirut, Lebanon

Marco Bardus

Copyright information

© 2021 The Author(s), under exclusive licence to Springer Nature Switzerland AG

About this entry

Cite this entry.

Nicols, C.M. (2021). Qualitative and Quantitative Methods in Research. In: The Palgrave Encyclopedia of Social Marketing. Palgrave Macmillan, Cham. https://doi.org/10.1007/978-3-030-14449-4_154-1

DOI : https://doi.org/10.1007/978-3-030-14449-4_154-1

Received : 11 May 2020

Accepted : 07 July 2021

Published : 10 August 2022

Publisher Name : Palgrave Macmillan, Cham

Print ISBN : 978-3-030-14449-4

Online ISBN : 978-3-030-14449-4

eBook Packages : Springer Reference Business and Management Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences

Qualitative vs Quantitative Research Methods & Data Analysis

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

What is the difference between quantitative and qualitative?

The main difference between quantitative and qualitative research is the type of data they collect and analyze.

Quantitative research collects numerical data and analyzes it using statistical methods. The aim is to produce objective, empirical data that can be measured and expressed in numerical terms. Quantitative research is often used to test hypotheses, identify patterns, and make predictions.

Qualitative research, on the other hand, collects non-numerical data such as words, images, and sounds. The focus is on exploring subjective experiences, opinions, and attitudes, often through observation and interviews.

Qualitative research aims to produce rich and detailed descriptions of the phenomenon being studied, and to uncover new insights and meanings.

Quantitative data is information about quantities, and therefore numbers, whereas qualitative data is descriptive and concerns phenomena that can be observed but not measured, such as language.

What Is Qualitative Research?

Qualitative research is the process of collecting, analyzing, and interpreting non-numerical data, such as language. Qualitative research can be used to understand how an individual subjectively perceives and gives meaning to their social reality.

Qualitative data is non-numerical data, such as text, video, photographs, or audio recordings. This type of data can be collected using diary accounts or in-depth interviews and analyzed using grounded theory or thematic analysis.

"Qualitative research is multimethod in focus, involving an interpretive, naturalistic approach to its subject matter. This means that qualitative researchers study things in their natural settings, attempting to make sense of, or interpret, phenomena in terms of the meanings people bring to them." (Denzin and Lincoln, 1994, p. 2)

Interest in qualitative data arose from the dissatisfaction of some psychologists (e.g., Carl Rogers) with the scientific approach taken by others, such as the behaviorists (e.g., Skinner).

In this view, because psychologists study people, the traditional approach to science is not an appropriate way of carrying out research: it fails to capture the totality of human experience and the essence of being human. Exploring participants’ experiences is known as a phenomenological approach (see Humanism).

Qualitative research is primarily concerned with meaning, subjectivity, and lived experience. The goal is to understand the quality and texture of people’s experiences, how they make sense of them, and the implications for their lives.

Qualitative research aims to understand the social reality of individuals, groups, and cultures as nearly as possible as participants feel or live it. Thus, people and groups are studied in their natural setting.

Examples of qualitative research questions include what an experience feels like, how people talk about something, how they make sense of an experience, and how events unfold for people.

Research following a qualitative approach is exploratory and seeks to explain ‘how’ and ‘why’ a particular phenomenon, or behavior, operates as it does in a particular context. It can be used to generate hypotheses and theories from the data.

Qualitative Methods

There are different types of qualitative research methods, including diary accounts, in-depth interviews, documents, focus groups, case study research, and ethnography.

The results of qualitative methods provide a deep understanding of how people perceive their social realities and, in consequence, how they act within the social world.

"The researcher has several methods for collecting empirical materials, ranging from the interview to direct observation, to the analysis of artifacts, documents, and cultural records, to the use of visual materials or personal experience." (Denzin and Lincoln, 1994, p. 14)

Here are some examples of qualitative data:

Interview transcripts: Verbatim records of what participants said during an interview or focus group. They allow researchers to identify common themes and patterns, and draw conclusions based on the data. Interview transcripts can also be useful in providing direct quotes and examples to support research findings.

Observations: The researcher typically takes detailed notes on what they observe, including any contextual information, nonverbal cues, or other relevant details. The resulting observational data can be analyzed to gain insights into social phenomena, such as human behavior, social interactions, and cultural practices.

Unstructured interviews: These generate qualitative data through the use of open questions. This allows the respondent to talk in some depth, choosing their own words, and helps the researcher develop a real sense of a person’s understanding of a situation.

Diaries or journals: Written accounts of personal experiences or reflections.

Notice that qualitative data could be much more than just words or text. Photographs, videos, sound recordings, and so on, can be considered qualitative data. Visual data can be used to understand behaviors, environments, and social interactions.

Qualitative Data Analysis

Qualitative research is endlessly creative and interpretive. The researcher does not just leave the field with mountains of empirical data and then easily write up his or her findings.

Qualitative interpretations are constructed, and various techniques can be used to make sense of the data, such as content analysis, grounded theory (Glaser & Strauss, 1967), thematic analysis (Braun & Clarke, 2006), or discourse analysis.

For example, thematic analysis is a qualitative approach that involves identifying implicit or explicit ideas within the data. Themes will often emerge once the data has been coded.
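The bookkeeping side of moving from codes to themes can be sketched in a few lines. This is only an illustration under stated assumptions: the excerpts, the code labels, and the code-to-theme mapping are all invented, and the interpretive work of assigning codes and naming themes is assumed to have been done by the researcher beforehand. The function `group_by_theme` is a hypothetical helper, not part of any analysis package.

```python
from collections import defaultdict

# Hypothetical interview excerpts, each already coded by a researcher.
coded_segments = [
    ("I buy store brands now.", ["switching brands"]),
    ("We stopped eating out.", ["cutting extras"]),
    ("I skip snacks and treats.", ["cutting extras", "buying less"]),
]

# Hypothetical mapping from codes to broader themes.
code_to_theme = {
    "switching brands": "Substitution",
    "buying less": "Reduction",
    "cutting extras": "Reduction",
}


def group_by_theme(coded_segments, code_to_theme):
    """Collect excerpts under each theme that their codes map to."""
    themes = defaultdict(list)
    for text, codes in coded_segments:
        # A set ensures an excerpt appears once per theme, even when
        # several of its codes belong to the same theme.
        for theme in sorted({code_to_theme[code] for code in codes}):
            themes[theme].append(text)
    return dict(themes)


if __name__ == "__main__":
    for theme, excerpts in group_by_theme(coded_segments, code_to_theme).items():
        print(f"{theme} ({len(excerpts)} excerpts)")
        for excerpt in excerpts:
            print("  -", excerpt)
```

Grouping excerpts this way makes it easier to review the evidence for each theme, but it does not replace the researcher's judgment about what the themes mean.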

Key Features

- Events can be understood adequately only if they are seen in context. Therefore, a qualitative researcher immerses her/himself in the field, in natural surroundings. The contexts of inquiry are not contrived; they are natural. Nothing is predefined or taken for granted.

- Qualitative researchers want those who are studied to speak for themselves, to provide their perspectives in words and other actions. Therefore, qualitative research is an interactive process in which the persons studied teach the researcher about their lives.

- The qualitative researcher is an integral part of the data; without the active participation of the researcher, no data exists.

- The study’s design evolves during the research and can be adjusted or changed as it progresses. For the qualitative researcher, there is no single reality: reality is subjective and exists only in reference to the observer.

- The theory is data-driven and emerges as part of the research process, evolving from the data as they are collected.

Limitations of Qualitative Research

- Because of the time and costs involved, qualitative designs do not generally draw samples from large-scale data sets.

- The problem of adequate validity or reliability is a major criticism. Because of the subjective nature of qualitative data and its origin in single contexts, it is difficult to apply conventional standards of reliability and validity. For example, because of the central role played by the researcher in the generation of data, it is not possible to replicate qualitative studies.

- Also, contexts, situations, events, conditions, and interactions cannot be replicated to any extent, nor can generalizations be made with confidence to a wider context than the one studied.

- The time required for data collection, analysis, and interpretation is lengthy. Analysis of qualitative data is difficult, and expert knowledge of an area is necessary to interpret it. Great care must be taken when doing so, for example, when looking for symptoms of mental illness.

Advantages of Qualitative Research

- Because of close researcher involvement, the researcher gains an insider’s view of the field. This allows the researcher to find issues that are often missed (such as subtleties and complexities) by the scientific, more positivistic inquiries.