ORIGINAL RESEARCH article

Statistical Analysis of Complex Problem-Solving Process Data: An Event History Analysis Approach

- 1 Department of Statistics, London School of Economics and Political Science, London, United Kingdom

- 2 School of Statistics, University of Minnesota, Minneapolis, MN, United States

- 3 Department of Statistics, Columbia University, New York, NY, United States

Complex problem-solving (CPS) ability has been recognized as a central 21st century skill. Individuals' processes of solving crucial complex problems may contain substantial information about their CPS ability. In this paper, we consider the prediction of the duration and the final outcome (i.e., success/failure) of solving a complex problem during the task completion process, by making use of process data recorded in computer log files. Solving this problem may help answer questions like “how much information about an individual's CPS ability is contained in the process data?”, “what CPS patterns will yield a higher chance of success?”, and “what CPS patterns predict the remaining time for task completion?” We propose an event history analysis model for this prediction problem. The trained prediction model may give us a better understanding of individuals' problem-solving patterns, which may eventually lead to better design of automated interventions (e.g., providing hints) for the training of CPS ability. A real data example from the 2012 Programme for International Student Assessment (PISA) is provided for illustration.

1. Introduction

Complex problem-solving (CPS) ability has been recognized as a central 21st century skill of high importance for several outcomes, including academic achievement (Wüstenberg et al., 2012) and workplace performance (Danner et al., 2011). It encompasses a set of higher-order thinking skills that require strategic planning, carrying out multi-step sequences of actions, reacting to a dynamically changing system, testing hypotheses, and, if necessary, adaptively coming up with new hypotheses. Thus, there is little doubt that an individual's problem-solving process data contain a substantial amount of information about his/her CPS ability and are therefore worth analyzing. Meaningful information extracted from CPS process data may lead to better understanding, measurement, and even training of individuals' CPS ability.

Problem-solving process data typically have a more complex structure than panel data, which are more commonly encountered in traditional statistics. Specifically, individuals may take different strategies toward solving the same problem. Even for individuals who take the same strategy, their actions and the time stamps of those actions may be very different. Due to such heterogeneity and complexity, classical regression and multivariate data analysis methods cannot be straightforwardly applied to CPS process data.

Possibly due to the lack of suitable analytic tools, research on CPS process data is limited. Among the existing works, none has taken a prediction perspective. For example, Greiff et al. (2015) presented a case study, showcasing the strong association between a specific strategic behavior (identified by expert knowledge) in a CPS task from the 2012 Programme for International Student Assessment (PISA) and performance both in this specific task and in the overall PISA problem-solving score. He and von Davier (2015, 2016) proposed an N-gram method from natural language processing for analyzing problem-solving items in technology-rich environments, focusing on identifying feature sequences that are important to task completion. Vista et al. (2017) developed methods for the visualization and exploratory analysis of students' behavioral pathways, aiming to detect action sequences that are potentially relevant for establishing particular paths as meaningful markers of complex behaviors. Halpin and De Boeck (2013) and Halpin et al. (2017) adopted a Hawkes process approach to analyzing collaborative problem-solving items, focusing on the psychological measurement of collaboration. Xu et al. (2018) proposed a latent class model that analyzes CPS patterns by classifying individuals into latent classes based on their problem-solving processes.

In this paper, we propose to analyze CPS process data from a prediction perspective. As suggested in Yarkoni and Westfall (2017), an increased focus on prediction can ultimately lead to a greater understanding of human behavior. Specifically, we consider the simultaneous prediction of the duration and the final outcome (i.e., success/failure) of solving a complex problem based on CPS process data. Instead of making a single prediction, we aim to predict at any time during the problem-solving process. Such a data-driven prediction model may give us insights into individuals' CPS behavioral patterns. First, features that contribute most to the prediction may correspond to important strategic behaviors that are key to succeeding in a task. In this sense, the proposed method can be used as an exploratory data analysis tool for extracting important features from process data. Second, the prediction accuracy may also serve as a measure of the strength of the signal in process data that reflects one's CPS ability, which reflects the reliability of CPS tasks from a prediction perspective. Third, for low-stakes assessments, the predicted chance of success may be used to give partial credit when scoring task takers. Fourth, speed is another important dimension of complex problem solving that is closely associated with the final outcome of task completion (MacKay, 1982). Predicting the duration throughout the problem-solving process may give us insights into the relationship between CPS behavioral patterns and CPS speed. Finally, the prediction model also enables us to design suitable interventions during individuals' problem-solving processes. For example, a hint may be provided when a student is predicted to have a high chance of failing after sufficient effort.

More precisely, we model the conditional distribution of duration time and final outcome given the event history up to any time point. This model can be viewed as a special event history analysis model, event history analysis being a general statistical framework for analyzing the expected duration of time until one or more events happen (see, e.g., Allison, 2014). The proposed model can be regarded as an extension of the classical regression approach. The major difference is that the current model is specified over a continuous-time domain: it consists of a family of conditional models indexed by time, whereas the classical regression approach does not deal with continuous-time information. As a result, the proposed model supports prediction at any time during one's problem-solving process, while the classical regression approach does not. The proposed model is also related to, but substantially different from, response time models (e.g., van der Linden, 2007), which have received much attention in psychometrics in recent years. Specifically, response time models describe the joint distribution of response times and responses to test items, while the proposed model focuses on the conditional distribution of CPS duration and final outcome given the event history.

Although the proposed method learns regression-type models from data, it is worth emphasizing that we do not try to make statistical inference, such as testing whether a specific regression coefficient is significantly different from zero. Rather, the selection and interpretation of the model are mainly justified from a prediction perspective. This is because statistical inference tends to draw strong conclusions based on strong assumptions about the data generation mechanism. Due to the complexity of CPS process data, a statistical model may be severely misspecified, making valid statistical inference a big challenge. On the other hand, the prediction framework requires fewer assumptions and is thus more suitable for exploratory analysis. More precisely, the prediction framework admits a discrepancy between the underlying complex data generation mechanism and the prediction model (Yarkoni and Westfall, 2017). A prediction model aims to balance the bias due to this discrepancy against the variance due to a limited sample size. As a price, findings from the predictive framework are preliminary and only suggest hypotheses for future confirmatory studies.

The rest of the paper is organized as follows. In Section 2, we describe the structure of complex problem-solving process data and then motivate our research questions, using a CPS item from PISA 2012 as an example. In Section 3, we formulate the research questions under a statistical framework, propose a model, and then provide details of estimation and prediction. The introduced model is illustrated through an application to an example item from PISA 2012 in Section 4. We discuss limitations and future directions in Section 5.

2. Complex Problem-Solving Process Data

2.1. A Motivating Example

We use a specific CPS item, CLIMATE CONTROL (CC)¹, to demonstrate the data structure and to motivate our research questions. It is part of a CPS unit in PISA 2012 that was designed under the “MicroDYN” framework (Greiff et al., 2012; Wüstenberg et al., 2012), a framework for the development of small dynamic systems of causal relationships for assessing CPS.

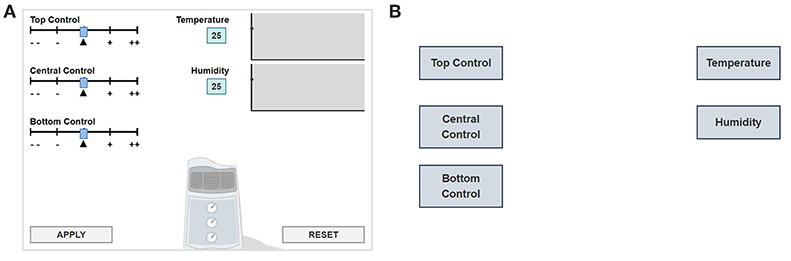

In this item, students are instructed to manipulate the panel (i.e., to move the top, central, and bottom control sliders; left side of Figure 1A) and to find out how the input variables (control sliders) are related to the output variables (temperature and humidity). The initial position of each control slider is indicated by a triangle “▴.” By clicking “APPLY,” students see the corresponding changes in temperature and humidity. After exploration, the students are asked to draw lines in a diagram (Figure 1B) to indicate what each slider controls. The item is considered correctly answered if the diagram is correctly completed. To solve this item, students must experiment to determine which controls affect temperature and which affect humidity, and then represent the causal relations by drawing arrows between the three inputs (top, central, and bottom control sliders) and the two outputs (temperature and humidity).

Figure 1. (A) Simulation environment of CC item. (B) Answer diagram of CC item.

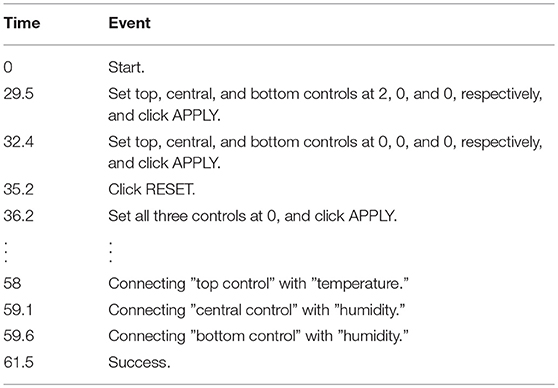

PISA 2012 collected students' problem-solving process data in computer log files, in the form of sequences of time-stamped events. We illustrate the structure of the data in Table 1 and Figure 2, where Table 1 tabulates a sequence of time-stamped events from one student and Figure 2 visualizes the corresponding event time points on a timeline. According to the data, 14 events were recorded between time 0 (start) and 61.5 s (success). The first event, at 29.5 s, was clicking “APPLY” after the top, central, and bottom controls had been set to 2, 0, and 0, respectively. A sequence of actions followed, and finally, at 58, 59.1, and 59.6 s, a correct final answer was given using the diagram. Note that this log file does not record all interactions between a student and the simulated system: the status of the control sliders is recorded only when the “APPLY” button is clicked.
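To make the data structure concrete, the sketch below stores a log as a list of time-stamped events and truncates it at a time point t. It is a minimal illustration under stated assumptions: the `Event` fields and the action labels (`"APPLY"`, `"DIAGRAM"`) are hypothetical rather than the PISA log schema, and the excerpt keeps only the events described in the text (the first APPLY at 29.5 s with sliders at 2, 0, 0 and the three diagram actions at 58, 59.1, and 59.6 s), not all 14 events of Table 1.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Event:
    """One time-stamped log entry (field names are illustrative assumptions)."""
    time: float                                     # seconds since the item started
    action: str                                     # e.g. "APPLY", "RESET", "DIAGRAM"
    sliders: Optional[Tuple[int, int, int]] = None  # (top, central, bottom); logged only on APPLY


def history_up_to(events: List[Event], t: float) -> List[Event]:
    """Return the part of the event history observed by time t (events with time <= t)."""
    return [e for e in events if e.time <= t]


# Partial, hypothetical excerpt: only the events described in the text are included.
log = [
    Event(29.5, "APPLY", (2, 0, 0)),
    Event(58.0, "DIAGRAM"),
    Event(59.1, "DIAGRAM"),
    Event(59.6, "DIAGRAM"),
]

print(len(history_up_to(log, 30.0)))  # 1: only the first APPLY has happened by t = 30 s
print(len(history_up_to(log, 61.5)))  # 4: every event in the excerpt
```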

Table 1. An example of computer log file data from the CC item in PISA 2012.

Figure 2. Visualization of the structure of process data from the CC item in PISA 2012.

The process data for solving a CPS item typically have two components: knowledge acquisition and knowledge application. This CC item focuses mainly on the former, which includes learning the causal relationships between the inputs and the outputs and representing such relationships by drawing the diagram. Since the data on representing the causal relationships are relatively straightforward, in the rest of the paper we focus on the process data related to knowledge acquisition, and we use a student's problem-solving process to refer only to his/her process of exploring the air conditioner, excluding the actions involving the answer diagram.

Intuitively, students' problem-solving processes contain information about their complex problem-solving ability, whether in the context of the CC item or in a more general sense of dealing with complex tasks in practice. However, it remains a challenge to extract meaningful information from their process data, due to the complex data structure. In particular, the occurrences of events are heterogeneous (i.e., different people can have very different event histories) and unstructured (i.e., there is little restriction on the order and time of the occurrences). Different students tend to have different problem-solving trajectories, with different actions taken at different time points. Consequently, time series models, which are standard statistical tools for analyzing dynamic systems, are not suitable here.

2.2. Research Questions

We focus on two specific research questions. Consider an individual solving a complex problem. Given that the individual has spent $t$ units of time and has not yet completed the task, we would like to ask the following two questions based on the information at time $t$: How much additional time does the individual need? And will the individual succeed or fail upon task completion?

Suppose we index the individual by $i$, and let $T_i$ be the total time of task completion and $Y_i$ the final outcome. Moreover, we denote by $H_i(t) = (h_{i1}(t), \ldots, h_{ip}(t))^\top$ a $p$-vector function of time $t$ summarizing the event history of individual $i$ from the beginning of the task to time $t$. Each component of $H_i(t)$ is a feature constructed from the event history up to time $t$. Taking the above CC item as an example, components of $H_i(t)$ may be the number of actions a student has taken, whether all three control sliders have been explored, the frequency of using the RESET button, etc., up to time $t$. We refer to $H_i(t)$ as the event history process of individual $i$. The dimension $p$ may be high, depending on the complexity of the log file.
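The sketch below shows how a few components of $H_i(t)$ might be computed from a log. It is an illustration under assumptions, not the feature code used in the paper: events are hypothetical `(time, action, sliders)` tuples, only APPLY and RESET clicks count as actions (diagram actions are excluded, as described in Section 2.1), and an APPLY counts as a simple action when exactly one slider is away from 0. That definition of "simple" and the particular feature list are our assumptions; the returned components loosely mirror $N_i(t)$, $1\{N_i(t) > 0\}$, $N_i(t)/t$, and $I_i(t)$.

```python
from typing import List, Optional, Sequence, Tuple

# Hypothetical event format: (time in seconds, action name, slider setting or None).
Event = Tuple[float, str, Optional[Tuple[int, int, int]]]


def is_simple(sliders: Optional[Sequence[int]]) -> bool:
    """Assumption: an APPLY is 'simple' if exactly one slider is away from 0."""
    return sliders is not None and sum(1 for s in sliders if s != 0) == 1


def features(events: List[Event], t: float) -> List[float]:
    """Illustrative H_i(t): [N_i(t), 1{N_i(t) > 0}, N_i(t)/t, I_i(t)]."""
    if t <= 0:
        raise ValueError("t must be positive")
    # Exploration actions observed by time t; diagram actions are excluded.
    past = [e for e in events if e[0] <= t and e[1] in ("APPLY", "RESET")]
    n = len(past)
    explored = set()  # indices of sliders explored by a simple action
    for _, action, sliders in past:
        if action == "APPLY" and is_simple(sliders):
            explored.add(next(k for k, s in enumerate(sliders) if s != 0))
    votat = 1.0 if explored == {0, 1, 2} else 0.0  # all three sliders varied one at a time
    return [float(n), float(n > 0), n / t, votat]


# Made-up log for illustration only.
log: List[Event] = [
    (10.0, "APPLY", (2, 0, 0)),
    (20.0, "APPLY", (0, 1, 0)),
    (30.0, "APPLY", (0, 0, -1)),
    (35.0, "RESET", None),
    (50.0, "DIAGRAM", None),
]
print(features(log, 25.0))  # two actions so far; only two sliders explored
print(features(log, 40.0))  # four actions; all three sliders explored, so I = 1
```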

With the above notation, the two questions amount to simultaneously predicting $T_i$ and $Y_i$ based on $H_i(t)$. Throughout this paper, we focus on the analysis of data from a single CPS item. Extensions of the current framework to multiple-item analysis are discussed in Section 5.

3. Proposed Method

3.1. A Regression Model

We now propose a regression model to answer the two questions raised in Section 2.2. We specify the marginal conditional models of $Y_i$ and $T_i$ given $H_i(t)$ and $T_i > t$, respectively. Specifically, we assume

$$P\big(Y_i = 1 \mid H_i(t), T_i > t\big) = \Phi\big(b_{11} h_{i1}(t) + \cdots + b_{1p} h_{ip}(t)\big), \tag{1}$$

$$E\big[\log(T_i - t) \mid H_i(t), T_i > t\big] = b_{21} h_{i1}(t) + \cdots + b_{2p} h_{ip}(t), \tag{2}$$

$$\operatorname{Var}\big[\log(T_i - t) \mid H_i(t), T_i > t\big] = \sigma^2, \tag{3}$$

where Φ is the cumulative distribution function of the standard normal distribution. That is, $Y_i$ is assumed to marginally follow a probit regression model. In addition, only the conditional mean and variance are specified for $\log(T_i - t)$. Our model parameters include the regression coefficients $B = (b_{jk})_{2 \times p}$ and the conditional variance $\sigma^2$. Based on the above model specification, a pseudo-likelihood function is derived in Section 3.3 for parameter estimation.

Although only marginal models are specified, we point out that the model specifications (1) through (3) impose quite strong assumptions. As a result, the model may not closely approximate the data-generating process, and a bias is likely to exist. On the other hand, it is a working model that leads to reasonable prediction and can serve as a benchmark for this prediction problem in future investigations.

We further remark that the conditional variance of $\log(T_i - t)$ is time-invariant under the current specification; this assumption can be relaxed to allow time dependence. In addition, the regression model for $\log(T_i - t)$ is closely related to the log-normal model for response time analysis in psychometrics (e.g., van der Linden, 2007). The major difference is that the proposed model is not a measurement model disentangling item and person effects on $T_i$ and $Y_i$.

3.2. Prediction

Under the model in Section 3.1, given the event history, we predict the final outcome based on the success probability $\Phi(b_{11} h_{i1}(t) + \cdots + b_{1p} h_{ip}(t))$. In addition, based on the conditional mean of $\log(T_i - t)$, we predict the total time at time $t$ by $t + \exp(b_{21} h_{i1}(t) + \cdots + b_{2p} h_{ip}(t))$. Given estimates of $B$ from training data, we can predict the problem-solving duration and final outcome at any $t$ for an individual in the testing sample, throughout his/her entire problem-solving process.
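A minimal sketch of the two prediction rules, assuming the coefficient vectors $b_1$ and $b_2$ have already been estimated. The function names are hypothetical and the coefficient values in the usage line are made up for illustration; they are not estimates from this paper. Φ is computed from `math.erf`.

```python
import math
from typing import Sequence, Tuple


def std_normal_cdf(x: float) -> float:
    """Phi(x), the standard normal CDF, via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def predict(t: float, h: Sequence[float], b1: Sequence[float],
            b2: Sequence[float]) -> Tuple[float, float]:
    """Return (predicted success probability, predicted total time) at time t."""
    if not (len(h) == len(b1) == len(b2)):
        raise ValueError("h, b1 and b2 must have the same length p")
    eta1 = sum(b * x for b, x in zip(b1, h))  # b_11 h_i1(t) + ... + b_1p h_ip(t)
    eta2 = sum(b * x for b, x in zip(b2, h))  # predicted mean of log(T_i - t)
    return std_normal_cdf(eta1), t + math.exp(eta2)


# Made-up coefficients: at t = 60 s the linear predictors are 0 and log(30).
prob, total = predict(60.0, [1.0, 0.0], [0.0, 0.5], [math.log(30.0), 0.2])
print(prob, total)  # success probability 0.5; predicted total time about 90 s
```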

3.3. Parameter Estimation

It remains to estimate the model parameters based on a training dataset. Let our data be $(\tau_i, y_i)$ and $\{H_i(t): t \geq 0\}$, $i = 1, \ldots, N$, where $\tau_i$ and $y_i$ are realizations of $T_i$ and $Y_i$, and $\{H_i(t): t \geq 0\}$ is the entire event history.

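We develop estimating equations based on a pseudo-likelihood function. Specifically, the conditional distribution of $Y_i$ given $H_i(t)$ and $T_i > t$ can be written as

$$P\big(Y_i = y_i \mid H_i(t), T_i > t\big) = \Phi\big(b_1^\top H_i(t)\big)^{y_i}\big[1 - \Phi\big(b_1^\top H_i(t)\big)\big]^{1 - y_i}, \tag{4}$$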

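where $b_1 = (b_{11}, \ldots, b_{1p})^\top$. In addition, using the log-normal model as a working model for $T_i - t$, the corresponding conditional density of $T_i$ can be written as

$$f\big(\tau_i \mid H_i(t), T_i > t\big) = \frac{1}{\sigma(\tau_i - t)\sqrt{2\pi}} \exp\left\{-\frac{\big[\log(\tau_i - t) - b_2^\top H_i(t)\big]^2}{2\sigma^2}\right\}, \quad \tau_i > t,$$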

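where $b_2 = (b_{21}, \ldots, b_{2p})^\top$. The pseudo-likelihood is then written as

$$L(B, \sigma) = \prod_{j=1}^{J} \prod_{i:\, \tau_i > t_j} P\big(Y_i = y_i \mid H_i(t_j), T_i > t_j\big)\, f\big(\tau_i \mid H_i(t_j), T_i > t_j\big), \tag{5}$$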

where $t_1, \ldots, t_J$ are $J$ prespecified grid points spread over the entire time spectrum. The choice of the grid points is discussed in Section 3.4. By specifying the pseudo-likelihood over a sequence of time points, predictions at different times are taken into account in the estimation. We estimate the model parameters by maximizing the pseudo-likelihood function $L(B, \sigma)$.
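Because (5) is a product over grid points $t_j$ and over the individuals with $\tau_i > t_j$, it can be assembled by stacking one pseudo-observation per such pair. The sketch below is one way to do this under assumptions: `feature_fn(i, t)` is a hypothetical callback returning $H_i(t)$, and each stacked row holds $H_i(t_j)$, $y_i$, and $\log(\tau_i - t_j)$. The example also shows why later grid points contribute fewer rows.

```python
import math
from typing import Callable, List, Sequence, Tuple

Row = Tuple[List[float], int, float]  # (H_i(t_j), y_i, log(tau_i - t_j))


def stack_pseudo_observations(
    taus: Sequence[float],
    ys: Sequence[int],
    feature_fn: Callable[[int, float], List[float]],  # hypothetical: returns H_i(t)
    grid: Sequence[float],
) -> List[Row]:
    """One row per (individual i, grid point t_j) with tau_i > t_j."""
    rows: List[Row] = []
    for t_j in grid:
        for i, (tau, y) in enumerate(zip(taus, ys)):
            if tau > t_j:  # only individuals still working at t_j contribute
                rows.append((feature_fn(i, t_j), y, math.log(tau - t_j)))
    return rows


# Toy data: three individuals, two grid points; features are just [1, t].
rows = stack_pseudo_observations([50.0, 100.0, 150.0], [1, 0, 1],
                                 lambda i, t: [1.0, t], [64.0, 118.0])
print(len(rows))  # 3: two individuals at t = 64 s, one at t = 118 s
```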

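In fact, (5) can be factorized into

$$L(B, \sigma) = L_1(b_1)\, L_2(b_2, \sigma), \tag{6}$$

where

$$L_1(b_1) = \prod_{j=1}^{J} \prod_{i:\, \tau_i > t_j} P\big(Y_i = y_i \mid H_i(t_j), T_i > t_j\big), \qquad L_2(b_2, \sigma) = \prod_{j=1}^{J} \prod_{i:\, \tau_i > t_j} f\big(\tau_i \mid H_i(t_j), T_i > t_j\big). \tag{7}$$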

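Therefore, $b_1$ is estimated by maximizing $L_1(b_1)$, which takes the form of a likelihood function for probit regression. Similarly, $b_2$ and $\sigma$ are estimated by maximizing $L_2(b_2, \sigma)$, which is equivalent to solving the following estimating equations:

$$\sum_{j=1}^{J} \sum_{i:\, \tau_i > t_j} \big[\log(\tau_i - t_j) - b_2^\top H_i(t_j)\big] H_i(t_j) = 0, \tag{8}$$

$$\sum_{j=1}^{J} \sum_{i:\, \tau_i > t_j} \Big\{\big[\log(\tau_i - t_j) - b_2^\top H_i(t_j)\big]^2 - \sigma^2\Big\} = 0. \tag{9}$$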

The estimating equations (8) and (9) can also be derived directly from the conditional mean and variance specifications of $\log(T_i - t)$. Solving these equations is equivalent to solving a linear regression problem and is thus computationally easy.
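Because the pseudo-likelihood factorizes, the two parts can be fitted separately on the stacked pseudo-observations. The sketch below is one way to do this with NumPy, under our own choices: Fisher scoring for the probit part $L_1(b_1)$ (the text does not say which optimizer was used), and least squares for (8) with $\sigma^2$ set to the mean squared residual, which is what (9) gives. Stacked rows are treated as independent, exactly as in the product form of the pseudo-likelihood. The function names are hypothetical.

```python
import math

import numpy as np

_erf = np.vectorize(math.erf)


def _norm_cdf(x):
    return 0.5 * (1.0 + _erf(x / math.sqrt(2.0)))


def _norm_pdf(x):
    return np.exp(-0.5 * x ** 2) / math.sqrt(2.0 * math.pi)


def fit_probit(X, y, max_iter=50, tol=1e-8):
    """Maximize the probit pseudo-likelihood L_1(b_1) by Fisher scoring."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    b = np.zeros(X.shape[1])
    for _ in range(max_iter):
        eta = X @ b
        p = np.clip(_norm_cdf(eta), 1e-10, 1.0 - 1e-10)
        d = _norm_pdf(eta)
        v = p * (1.0 - p)
        score = X.T @ (d * (y - p) / v)           # gradient of log L_1
        info = X.T @ (X * (d ** 2 / v)[:, None])  # expected information matrix
        step = np.linalg.solve(info, score)
        b = b + step
        if np.max(np.abs(step)) < tol:
            break
    return b


def fit_log_duration(X, z):
    """Solve (8)-(9): regress z = log(tau_i - t_j) on the stacked H_i(t_j)."""
    X = np.asarray(X, dtype=float)
    z = np.asarray(z, dtype=float)
    b2, *_ = np.linalg.lstsq(X, z, rcond=None)    # normal equations = (8)
    resid = z - X @ b2
    sigma2 = float(np.mean(resid ** 2))           # (9): mean squared residual
    return b2, sigma2
```

With simulated probit data, `fit_probit` should recover the generating coefficients up to sampling error. `fit_log_duration` divides by the number of stacked rows, matching (9), rather than applying a degrees-of-freedom correction.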

3.4. Some Remarks

We provide a few remarks. First, choosing suitable features to include in $H_i(t)$ is important. The inclusion of suitable features not only improves the prediction accuracy but also facilitates the exploratory analysis and interpretation of how behavioral patterns affect CPS results. If substantive knowledge about a CPS task is available from cognitive theory, one may choose features that indicate different strategies toward solving the task. Otherwise, a data-driven approach may be taken. That is, one may select a model from a candidate list based on certain cross-validation criteria, where, if possible, all reasonable features should be considered as candidates. Even when a set of features has been suggested by cognitive theory, one can still take the data-driven approach to find additional features, which may lead to new findings.

Second, one possible extension of the proposed model is to allow the regression coefficients to be functions of time $t$, $b_{jk}(t)$, whereas they are independent of time under the current model. The current model can be regarded as a special case of this more general model. In particular, if $b_{jk}(t)$ varies considerably over time in the best predictive model, then simply applying the current model may yield a high bias. Specifically, in the current estimation procedure, a larger grid point tends to have a smaller sample size and thus contributes less to the pseudo-likelihood function. As a result, a larger bias may occur in the prediction at a larger time point. However, the estimation of time-dependent coefficients is nontrivial. In particular, constraints should be imposed on the functional form of $b_{jk}(t)$ to ensure a certain level of smoothness over time, so that $b_{jk}(t)$ can be accurately estimated using information from a finite number of time points. Otherwise, without any smoothness assumptions, predicting at any time during one's problem-solving process would require estimating an infinite number of parameters. Moreover, when a regression coefficient is time-dependent, its interpretation becomes more difficult, especially if its sign changes over time.
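One simple way to impose such a smoothness constraint, offered as an illustration rather than as part of the proposed method, is to expand each coefficient in a fixed basis, for example a polynomial $b_{jk}(t) = \sum_{m=0}^{M} c_{jkm} t^m$. Then $\sum_k b_{jk}(t) h_{ik}(t) = \sum_k \sum_m c_{jkm}\, t^m h_{ik}(t)$ is linear in the expanded features $t^m h_{ik}(t)$, so the same probit and least-squares fits apply with a finite number of parameters $c_{jkm}$. The polynomial basis and the function name are assumptions.

```python
import numpy as np


def expand_with_time_basis(h, t, degree=2):
    """Map H_i(t) to [h_k(t) * t**m for each feature k, for m = 0..degree].

    If b_jk(t) = sum_m c_jkm * t**m, then sum_k b_jk(t) * h_k(t) equals the dot
    product of this expanded vector with the stacked coefficients c_jkm.
    """
    h = np.asarray(h, dtype=float)
    powers = t ** np.arange(degree + 1)   # [1, t, ..., t**degree]
    return np.outer(h, powers).ravel()    # ordered by feature, then by power


e = expand_with_time_basis([1.0, 2.0], 3.0, degree=2)
print(e)  # [1, 3, 9, 2, 6, 18]
```

As a check, with made-up coefficients $c = (0.5, 0.1, 0, -1, 0, 0.01)$ the implied coefficients at $t = 3$ are $0.8$ and $-0.91$, so $0.8 \cdot 1 - 0.91 \cdot 2 = -1.02$, which equals `e @ c`.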

Third, we remark on the selection of grid points in the estimation procedure. Our model is specified in a continuous-time domain that supports prediction at any time point in a continuum during an individual's problem-solving process. The use of discretized grid points is a way to approximate the continuous-time system, so that the estimating equations can be written down. In practice, we suggest placing the grid points at quantiles of the empirical distribution of duration in the training set. See the analysis in Section 4 for an illustration. The number of grid points may be further selected by cross validation. We also point out that prediction can be made at any time point on the continuum, not only at the grid points used for parameter estimation.
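A minimal sketch of the suggested placement, using NumPy's default (linear-interpolation) quantiles; with `n_points=9` it returns the 10% through 90% empirical quantiles, the choice used in Section 4. The function name is hypothetical, and other quantile definitions would give slightly different grid points.

```python
import numpy as np


def quantile_grid(durations, n_points=9):
    """Grid points at the 1/(n+1), ..., n/(n+1) empirical quantiles of duration."""
    probs = np.arange(1, n_points + 1) / (n_points + 1)  # 0.1, ..., 0.9 for n_points = 9
    return np.quantile(np.asarray(durations, dtype=float), probs)


print(quantile_grid(np.arange(1, 102)))  # 11, 21, ..., 91 for durations 1, ..., 101
```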

4. An Example from PISA 2012

4.1. Background

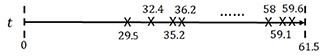

In what follows, we illustrate the proposed method via an application to the above CC item². This item was also analyzed in Greiff et al. (2015) and Xu et al. (2018). The dataset was obtained by cleaning the entire released PISA 2012 dataset. It contains the problem-solving processes of 16,872 15-year-old students from 42 countries and economies. Among these students, 54.5% answered correctly. On average, each student took 129.9 s and 17 actions to solve the problem. Histograms of the students' problem-solving duration and number of actions are presented in Figure 3.

Figure 3. (A) Histogram of problem-solving duration of the CC item. (B) Histogram of the number of actions for solving the CC item.

4.2. Analyses

The entire dataset was randomly split into training and testing sets, where the training set contains data from 13,498 students and the testing set contains data from 3,374 students. A predictive model was built solely on the training set, and its performance was then evaluated on the testing set. We used $J = 9$ grid points for parameter estimation, with $t_1$ through $t_9$ set to 64, 81, 94, 106, 118, 132, 149, 170, and 208 s, respectively, which are the 10% through 90% quantiles of the empirical distribution of duration. As discussed earlier, the number of grid points and their locations may be further tuned by cross validation.

4.2.1. Model Selection

We first build a model based on the training data, using a data-driven stepwise forward selection procedure. In each step, we add to $H_i(t)$ the feature that leads to the largest increase in the cross-validated log-pseudo-likelihood, computed by five-fold cross validation. We stop adding features to $H_i(t)$ when the cross-validated log-pseudo-likelihood stops increasing. The order in which the features are added may serve as a measure of their contribution to predicting the CPS duration and final outcome.
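The selection loop can be sketched generically. In the sketch, `cv_score` is a hypothetical callback that would refit the model on each training fold for a given feature list and return the cross-validated log-pseudo-likelihood. The feature labels and the toy score in the usage lines are made up; the toy score simply adds a fixed gain per feature.

```python
from typing import Callable, List, Sequence, Tuple


def forward_select(
    initial: Sequence[str],
    candidates: Sequence[str],
    cv_score: Callable[[List[str]], float],  # hypothetical CV log-pseudo-likelihood
) -> Tuple[List[str], List[str]]:
    """Greedy forward selection; returns (selected features, order of entry)."""
    selected = list(initial)
    remaining = list(candidates)
    order: List[str] = []
    best = cv_score(selected)
    while remaining:
        scores = {f: cv_score(selected + [f]) for f in remaining}
        f_best = max(scores, key=scores.get)
        if scores[f_best] <= best:  # stop once the CV criterion stops increasing
            break
        best = scores[f_best]
        selected.append(f_best)
        remaining.remove(f_best)
        order.append(f_best)
    return selected, order


# Toy score: each feature adds a fixed, made-up gain.
gains = {"I": 5.0, "S>0": 3.0, "noise": -1.0}
selected, order = forward_select(["1", "t"], list(gains),
                                 lambda fs: sum(gains.get(f, 0.0) for f in fs))
print(order)  # ['I', 'S>0']; adding 'noise' would lower the score, so the loop stops
```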

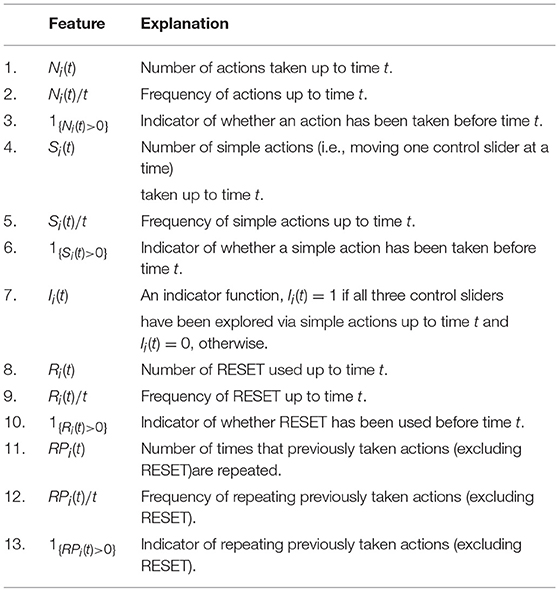

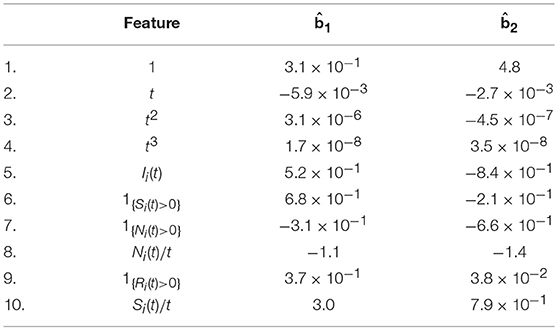

The candidate features considered for model selection are listed in Table 2. These candidate features were chosen to reflect different aspects of students' CPS behavioral patterns. In what follows, we discuss some of them. For example, the feature $I_i(t)$ indicates whether all three control sliders have been explored by simple actions (i.e., moving one control slider at a time) up to time $t$. That is, $I_i(t) = 1$ means that the vary-one-thing-at-a-time (VOTAT) strategy (Greiff et al., 2015) has been taken. According to the design of the CC item, the VOTAT strategy is expected to be a strong predictor of task success. In addition, the feature $N_i(t)/t$ records a student's average number of actions per unit time. It may serve as a measure of the student's speed of taking actions. In experimental psychology, response time, or equivalently speed, has been a central source for inferences about the organization and structure of cognitive processes (e.g., Luce, 1986), and in educational psychology, joint analysis of the speed and accuracy of item responses has also received much attention in recent years (e.g., van der Linden, 2007; Klein Entink et al., 2009). However, little is known about the role of speed in CPS tasks. The current analysis may provide some initial results on the relation between a student's speed and his/her CPS performance. Moreover, features based on repeating previously taken actions may reflect students' need to verify hypotheses derived from previous actions, or may be related to students' attention if the same actions are repeated many times. We also include $1$, $t$, $t^2$, and $t^3$ in $H_i(t)$ as the initial set of features to capture the time effect. For simplicity, country information is not taken into account in the current analysis.

Table 2. The list of candidate features to be incorporated into the model.

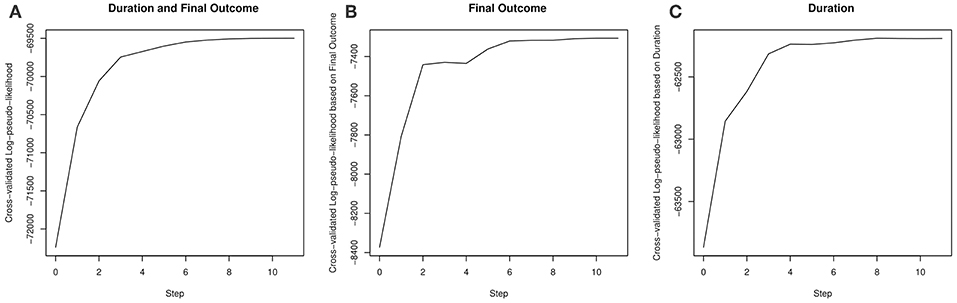

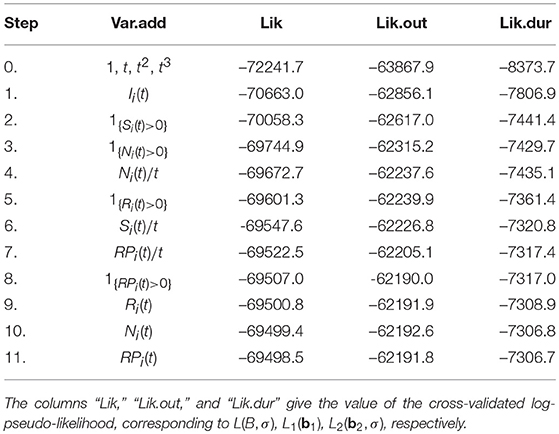

Our results on model selection are summarized in Figure 4 and Table 3. The pseudo-likelihood stopped increasing after 11 steps, resulting in a final model with 15 components in $H_i(t)$. As Figure 4 shows, the increase in the cross-validated log-pseudo-likelihood comes mainly from the features added in the first six steps, after which the increment is quite marginal. The first, second, and sixth features entering the model are all related to taking simple actions, a strategy known to be important to this task (e.g., Greiff et al., 2015). In particular, the first feature selected is $I_i(t)$, which confirms the strong effect of the VOTAT strategy. In addition, the third and fourth features are both based on $N_i(t)$, the number of actions taken before time $t$. Roughly, the feature $1\{N_i(t) > 0\}$ reflects the initial planning behavior (Eichmann et al., 2019); thus, this feature tends to measure students' speed of reading the instructions of the item. As discussed earlier, the feature $N_i(t)/t$ measures students' speed of taking actions. Finally, the fifth feature is related to the use of the RESET button.

Figure 4. The increase in the cross-validated log-pseudo-likelihood based on a stepwise forward selection procedure. (A–C) plot the cross-validated log-pseudo-likelihood corresponding to $L(B, \sigma)$, $L_1(b_1)$, and $L_2(b_2, \sigma)$, respectively.

Table 3. Results on model selection based on a stepwise forward selection procedure.

4.2.2. Prediction Performance on Testing Set

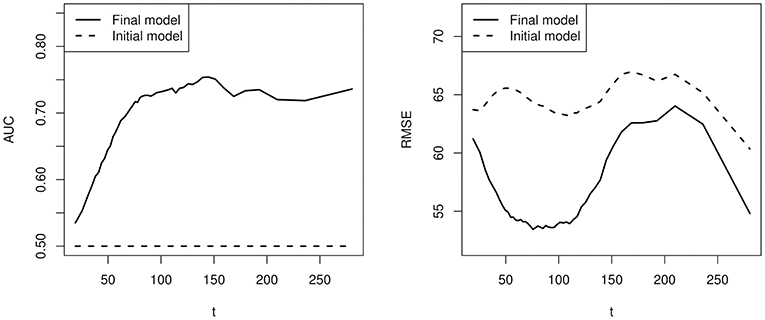

We now look at the prediction performance of the above model on the testing set. The prediction performance was evaluated at a larger set of time points, from 19 to 281 s. Instead of reporting the pseudo-likelihood function, we adopted two measures that are more straightforward to interpret. Specifically, we measured the prediction of the final outcome by the Area Under the Curve (AUC) of the predicted Receiver Operating Characteristic (ROC) curve. The value of AUC lies between 0 and 1. A larger AUC value indicates better prediction of the binary final outcome, with AUC = 1 indicating perfect prediction. In addition, at each time point $t$, we measured the prediction of duration by the root mean squared error (RMSE), defined as

$$\mathrm{RMSE}(t) = \sqrt{\frac{\sum_{i=N+1}^{N+n} 1\{\tau_i > t\}\,\big[\hat{\tau}_i(t) - \tau_i\big]^2}{\sum_{i=N+1}^{N+n} 1\{\tau_i > t\}}},$$

where $\tau_i$, $i = N + 1, \ldots, N + n$, denotes the durations of the students in the testing set, and $\hat{\tau}_i(t)$ denotes the prediction based on information up to time $t$ according to the trained model.
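Both measures are straightforward to compute. The sketch below relies on the standard fact that the AUC equals the probability that a randomly chosen success receives a higher predicted probability than a randomly chosen failure (ties counted as 1/2). It computes the RMSE at time $t$ over test students still working at $t$ ($\tau_i > t$), which we assume is the population on which $\hat{\tau}_i(t)$ is evaluated; the AUC at time $t$ would be computed on the same subset. Constant predictions give an AUC of 0.5, which is how the initial model behaves. The function names are hypothetical, and the pairwise comparison uses memory quadratic in the number of students.

```python
import numpy as np


def auc(scores, labels):
    """AUC as P(random success outranks random failure), ties counted as 1/2."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    if len(pos) == 0 or len(neg) == 0:
        raise ValueError("need both successes and failures")
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))


def rmse_at(t, taus, tau_hat):
    """RMSE at time t over test-set individuals with tau_i > t."""
    taus = np.asarray(taus, dtype=float)
    tau_hat = np.asarray(tau_hat, dtype=float)
    active = taus > t  # students still working at time t
    return float(np.sqrt(np.mean((tau_hat[active] - taus[active]) ** 2)))


print(auc([0.9, 0.8, 0.3, 0.1], [1, 1, 0, 0]))                 # 1.0: perfect ranking
print(rmse_at(60.0, [50.0, 100.0, 130.0], [70.0, 110.0, 120.0]))  # 10.0
```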

Results are presented in Figure 5, which shows the testing AUC for the final outcome and the testing RMSE for duration. In particular, results based on the model selected by cross validation ($p = 15$) and the initial model ($p = 4$, containing the initial covariates $1$, $t$, $t^2$, and $t^3$) are compared. First, under the selected model, the AUC is never above 0.8 and the RMSE is between 53 and 64 s, indicating a low signal-to-noise ratio. Second, the students' event history does improve the prediction of the final outcome and duration over the initial model. Specifically, since the initial model does not take the event history into account, it assigns the same success probability to all students whose duration is longer than $t$. Consequently, its test AUC is 0.5 at every value of $t$, which is always worse than the performance of the selected model. Moreover, the selected model always outperforms the initial model in terms of the prediction of duration. Third, the AUC for the prediction of the final outcome is low when $t$ is small. It keeps increasing as time goes on and fluctuates around 0.72 after about 120 s.

Figure 5. A comparison of prediction accuracy between the model selected by cross validation and a baseline model without using individual-specific event history.

4.2.3. Interpretation of Parameter Estimates

To gain more insight into how the event history affects the final outcome and duration, we further look at the parameter estimates. We focus on a model whose event history $H_i(t)$ includes the initial features and the top six features selected by cross validation. According to the cross-validation results in Figure 4, this model has prediction accuracy similar to that of the selected model, but it contains fewer features in the event history and is thus easier to interpret. Moreover, the parameter estimates under this model are close to those under the cross-validation selected model, and the signs of the regression coefficients remain the same.

The estimated regression coefficients are presented in Table 4. First, the first selected feature, $I_i(t)$, which indicates whether all three control sliders have been explored via simple actions, has a positive regression coefficient on the final outcome and a negative coefficient on duration. This means that, controlling for the other features, a student who has taken the VOTAT strategy tends to be more likely to give a correct answer and to complete the task in a shorter period of time. This confirms the strong effect of the VOTAT strategy in solving the current task.

Table 4. Estimated regression coefficients for a model whose event history process contains the initial features based on polynomials of $t$ and the top six features selected by cross validation.

Second, besides $I_i(t)$, there are two features related to taking simple actions, $1\{S_i(t) > 0\}$ and $S_i(t)/t$, which are the indicator of taking at least one simple action and the frequency of taking simple actions, respectively. Both features have positive regression coefficients on the final outcome, implying that larger values of both features lead to a higher success rate. In addition, $1\{S_i(t) > 0\}$ has a negative coefficient on duration and $S_i(t)/t$ has a positive one. Under this estimated model, the overall simple action effect on duration is $\hat{b}_{25} I_i(t) + \hat{b}_{26} 1\{S_i(t) > 0\} + \hat{b}_{2,10} S_i(t)/t$, which is negative for most students. It implies that, overall, taking simple actions leads to a shorter predicted duration. However, once all three types of simple actions have been taken, a higher frequency of taking simple actions leads to a weaker but still negative simple action effect on the duration.

Third, as discussed earlier, $1\{N_i(t) > 0\}$ tends to measure the student's speed of reading the instructions of the task, and $N_i(t)/t$ can be regarded as a measure of the student's speed of taking actions. According to the estimated regression coefficients, the data suggest that a student who reads and acts faster tends to complete the task in a shorter period of time but with lower accuracy. Similar results have been reported in the response time literature in educational psychology (e.g., Klein Entink et al., 2009; Fox and Marianti, 2016; Zhan et al., 2018), where the speed of item responses was found to be negatively correlated with accuracy. In particular, Zhan et al. (2018) found a moderate negative correlation between students' general mathematics ability and speed under a psychometric model for PISA 2012 computer-based mathematics data.

Finally, $1\{R_i(t)>0\}$, which indicates use of the RESET button, has positive regression coefficients on both final outcome and duration. This implies that using the RESET button is associated with a higher predicted success probability and a longer predicted duration, holding the other features fixed. The connection between the use of the RESET button and the underlying cognitive process of complex problem solving, if it exists, remains to be investigated.
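All of the features discussed above are functions of the events a student has produced up to time $t$. The Python sketch below shows one way to compute them from a time-stamped log. The log format is hypothetical and is not the PISA code book format. This includes the `Event` record, the `"action"`/`"reset"` labels, the pre-coded `simple` flag, and the `slider` index. The sketch also assumes that RESET clicks are not counted in $N_i(t)$.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Event:
    time: float                   # seconds since the task started
    kind: str                     # hypothetical labels: "action" or "reset"
    simple: bool = False          # whether an action was pre-coded as a simple action
    slider: Optional[int] = None  # slider (0, 1, or 2) explored by a simple action


def features_at(events: List[Event], t: float) -> dict:
    """Illustrative features at time t > 0, using only events observed up to t."""
    past = [e for e in events if e.time <= t]
    actions = [e for e in past if e.kind == "action"]  # counted in N_i(t); resets excluded (assumption)
    simple = [e for e in actions if e.simple]          # counted in S_i(t)
    n_reset = sum(1 for e in past if e.kind == "reset")  # R_i(t)
    explored = {e.slider for e in simple}
    return {
        "I": int({0, 1, 2} <= explored),     # all three sliders explored via simple actions
        "any_simple": int(len(simple) > 0),  # 1{S_i(t) > 0}
        "simple_rate": len(simple) / t,      # S_i(t) / t
        "any_action": int(len(actions) > 0), # 1{N_i(t) > 0}
        "action_rate": len(actions) / t,     # N_i(t) / t
        "any_reset": int(n_reset > 0),       # 1{R_i(t) > 0}
    }


# A hypothetical log for one student.
log = [
    Event(5.0, "action", True, 0),
    Event(9.0, "action", True, 1),
    Event(12.0, "reset"),
    Event(20.0, "action", True, 2),
    Event(25.0, "action"),
]
print(features_at(log, 10.0))
print(features_at(log, 30.0))
```

Because each feature only looks at events with time at most $t$, the same function can be called at any point during the task, as the event history formulation requires.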

5. Discussions

5.1. Summary

As an early step toward understanding individuals' complex problem-solving processes, we proposed an event history analysis method for predicting the duration and the final outcome of solving a complex problem based on process data. This approach can make predictions at any time $t$ during an individual's problem-solving process, which may be useful in dynamic assessment/learning systems (e.g., in a game-based assessment system). An illustrative example based on a CPS item from PISA 2012 is provided.

5.2. Inference, Prediction, and Interpretability

As articulated previously, this paper focuses on a prediction problem rather than a statistical inference problem. Compared with a prediction framework, statistical inference tends to draw stronger conclusions under stronger assumptions on the data-generating mechanism. Unfortunately, due to the complexity of CPS process data, such assumptions are not only unlikely to be satisfied but also difficult to verify. On the other hand, a prediction framework requires fewer assumptions and is thus more suitable for exploratory analysis. The price is that findings from the predictive framework are preliminary and can only be used to generate hypotheses for future studies.

It may be useful to provide uncertainty measures for both the prediction performance and the parameter estimates. The former indicates the replicability of the prediction performance, and the latter reflects the stability of the prediction model. In particular, patterns from a prediction model with low replicability and low stability should not be over-interpreted. Such uncertainty measures may be obtained from cross validation and bootstrapping (see Chapter 7, Friedman et al., 2001).
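Both ideas can be sketched in a few lines. The minimal Python example below uses a one-feature least-squares line on synthetic data as a stand-in for the paper's event history model. This substitution is an assumption made purely for illustration. The example computes per-fold cross-validated prediction errors, whose spread reflects the replicability of prediction performance. It also computes a bootstrap standard error for the slope, which reflects the stability of the estimate. The helpers `fit_line`, `cv_fold_errors`, and `bootstrap_slope_se` are our own illustrative names.

```python
import random
import statistics


def fit_line(xs, ys):
    """Ordinary least-squares intercept and slope for a single feature."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def cv_fold_errors(xs, ys, k=5, seed=0):
    """Held-out mean squared error for each of k folds; their spread indicates replicability."""
    idx = list(range(len(xs)))
    random.Random(seed).shuffle(idx)
    errors = []
    for f in range(k):
        test = set(idx[f::k])
        train = [i for i in idx if i not in test]
        a, b = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors.append(statistics.fmean([(ys[i] - a - b * xs[i]) ** 2 for i in test]))
    return errors


def bootstrap_slope_se(xs, ys, n_boot=500, seed=0):
    """Standard deviation of the slope across bootstrap resamples; indicates stability."""
    rng = random.Random(seed)
    n = len(xs)
    slopes = []
    for _ in range(n_boot):
        draw = [rng.randrange(n) for _ in range(n)]  # resample rows with replacement
        slopes.append(fit_line([xs[i] for i in draw], [ys[i] for i in draw])[1])
    return statistics.stdev(slopes)


# Synthetic data (not PISA data): y = 1 + 2x + noise.
rng = random.Random(42)
xs = [rng.gauss(0, 1) for _ in range(200)]
ys = [1 + 2 * x + rng.gauss(0, 0.5) for x in xs]
print(fit_line(xs, ys), cv_fold_errors(xs, ys), bootstrap_slope_se(xs, ys))
```

For the actual model, the same loops would refit the event history model on each training fold or bootstrap resample and record the chosen prediction metric or coefficient estimates.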

It is also worth distinguishing between prediction methods based on a simple model, like the one proposed above, and those based on black-box machine learning algorithms (e.g., random forests). Decisions made by black-box algorithms can be very difficult for humans to understand and thus provide little insight into the data, even though they may achieve high prediction accuracy. A simple model, on the other hand, can be regarded as a dimension reduction tool that extracts interpretable information from the data, which may facilitate our understanding of complex problem solving.

5.3. Extending the Current Model

The proposed model can be extended in multiple directions. First, as discussed earlier, we may extend the model by allowing the regression coefficients $b_{jk}$ to be time-dependent. In that case, nonparametric methods (e.g., splines) would need to be developed for parameter estimation. In fact, time-varying coefficients have been intensively investigated in the event history analysis literature (e.g., Fan et al., 1997). This extension will be useful if the effects of the features in $H_i(t)$ change substantially over time.
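One standard way to make this concrete is a basis expansion. The sketch assumes that feature $k$ of $H_i(t)$ (written $H_{ik}(t)$, our notation) enters the model for outcome $j$ through a term $b_{jk} H_{ik}(t)$. Each coefficient is then expanded in a fixed spline basis $B_1(t), \dots, B_L(t)$ (e.g., B-splines with chosen knots). The model remains linear in the new parameters $\theta_{jkl}$, with expanded features $B_l(t) H_{ik}(t)$:

$$
b_{jk}(t) = \sum_{l=1}^{L} \theta_{jkl} B_l(t)
\quad\Longrightarrow\quad
b_{jk}(t)\, H_{ik}(t) = \sum_{l=1}^{L} \theta_{jkl}\,\bigl[B_l(t)\, H_{ik}(t)\bigr].
$$

Taking $L = 1$ and $B_1(t) \equiv 1$ recovers the constant-coefficient model. The number and placement of knots control the trade-off between flexibility and variance.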

Second, when the dimension $p$ of $H_i(t)$ is high, better interpretability and higher prediction power may be achieved by using Lasso-type sparse estimators (see, e.g., Chapter 3 of Friedman et al., 2001). These estimators perform feature selection and regularization simultaneously to enhance prediction accuracy and interpretability.
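The following sketch illustrates the sparse-estimation idea. It is not the authors' model, whose loss is a likelihood rather than squared error. The sketch fits a Lasso-penalized least-squares regression by cyclic coordinate descent with soft-thresholding, in plain Python. The data are synthetic, and the function names are our own.

```python
import random


def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma; values within [-gamma, gamma] become exactly 0."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso_cd(X, y, lam, n_sweeps=200):
    """Minimize (1/(2n)) * ||y - X b||^2 + lam * ||b||_1 by cyclic coordinate descent.

    X is a list of n rows with p features each. No intercept is fitted, so y
    (and ideally each column of X) should be centered beforehand.
    """
    n, p = len(X), len(X[0])
    b = [0.0] * p
    r = [float(v) for v in y]  # residual y - X b, starting from b = 0
    col_sq = [sum(row[k] ** 2 for row in X) / n for k in range(p)]
    for _ in range(n_sweeps):
        for k in range(p):
            # Correlation of feature k with the partial residual that leaves feature k out.
            rho = sum(row[k] * (ri + row[k] * b[k]) for row, ri in zip(X, r)) / n
            new_bk = soft_threshold(rho, lam) / col_sq[k]
            delta = new_bk - b[k]
            if delta != 0.0:
                for i, row in enumerate(X):
                    r[i] -= row[k] * delta  # keep the residual in sync with b
            b[k] = new_bk
    return b


# Synthetic example (not PISA data): only the first two of six features matter.
rng = random.Random(1)
X = [[rng.gauss(0, 1) for _ in range(6)] for _ in range(150)]
y = [3 * row[0] - 2 * row[1] + rng.gauss(0, 0.1) for row in X]
b_hat = lasso_cd(X, y, lam=0.1)

# Smallest penalty at which every coefficient is zero.
lam_max = max(abs(sum(row[k] * yi for row, yi in zip(X, y))) / len(X) for k in range(6))
b_null = lasso_cd(X, y, lam=lam_max)
print([round(v, 3) for v in b_hat])
```

A coefficient whose correlation with the partial residual does not exceed `lam` is set exactly to zero. This is how Lasso-type estimators combine feature selection with shrinkage. At `lam_max`, every coefficient is zero.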

Finally, outliers are likely to occur in the data due to the abnormal behavioral patterns of a small proportion of people. A better treatment of outliers will lead to better prediction performance. Thus, a more robust objective function will be developed for parameter estimation, by borrowing ideas from the literature of robust statistics (see e.g., Huber and Ronchetti, 2009 ).
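A classic building block from the robust statistics literature is Huber's loss, which is quadratic for small residuals and linear for large ones. The Python sketch below is our own illustration, not the authors' planned objective. It shows the loss and the simplest resulting estimator: a Huber M-estimate of location, computed by iteratively reweighted averaging with a fixed scale based on the median absolute deviation (MAD). The tuning constant `c = 1.345` is a conventional default, and the data are hypothetical.

```python
import statistics


def huber_loss(r, c=1.345):
    """Quadratic for |r| <= c and linear beyond, so large residuals count less than in least squares."""
    a = abs(r)
    return 0.5 * r * r if a <= c else c * a - 0.5 * c * c


def huber_location(xs, c=1.345, n_iter=50):
    """Huber M-estimate of location via iteratively reweighted averaging (IRLS).

    Residuals are scaled by a fixed robust scale (normalized MAD around the median).
    """
    mu = statistics.median(xs)
    scale = 1.4826 * statistics.median([abs(x - mu) for x in xs])
    if scale == 0:
        return mu
    for _ in range(n_iter):
        # Weight psi(u)/u for Huber's psi: 1 inside [-c, c], c/|u| outside.
        weights = [
            1.0 if abs((x - mu) / scale) <= c else c / abs((x - mu) / scale)
            for x in xs
        ]
        mu = sum(w * x for w, x in zip(weights, xs)) / sum(weights)
    return mu


# Hypothetical completion times (minutes) with one abnormal record.
times = [1.0, 1.2, 0.9, 1.1, 1.0, 0.8, 50.0]
print(statistics.fmean(times), huber_location(times))
```

The ordinary mean is pulled to about 8 by the single abnormal record, while the Huber estimate stays near the bulk of the data. In a regression setting, the weighted mean would be replaced by a weighted least-squares (or weighted likelihood) step, but the reweighting logic is the same.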

5.4. Multiple-Task Analysis

The current analysis focuses on data from a single task. To study individuals' CPS ability, it may be of more interest to analyze multiple CPS tasks simultaneously and to investigate how an individual's process data from one or multiple tasks predict his/her performance on other tasks. Generally speaking, one's CPS ability may be better measured by the information in the process data that generalizes across a representative set of CPS tasks than by his/her final outcomes on these tasks alone. In this sense, this cross-task prediction problem is closely related to the measurement of CPS ability, and it is also worth future investigation.

Author Contributions

All authors listed have made a substantial, direct and intellectual contribution to the work, and approved it for publication.

This research was funded by a NAEd/Spencer postdoctoral fellowship, NSF grants DMS-1712657, SES-1826540, and IIS-1633360, and NIH grant R01GM047845.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

1. The item can be found on the OECD website (http://www.oecd.org/pisa/test-2012/testquestions/question3/).

2. The log file data and code book for the CC item can be found online: http://www.oecd.org/pisa/pisaproducts/database-cbapisa2012.htm.

Allison, P. D. (2014). Event history analysis: Regression for longitudinal event data. London: Sage.


Danner, D., Hagemann, D., Schankin, A., Hager, M., and Funke, J. (2011). Beyond IQ: a latent state-trait analysis of general intelligence, dynamic decision making, and implicit learning. Intelligence 39, 323–334. doi: 10.1016/j.intell.2011.06.004


Eichmann, B., Goldhammer, F., Greiff, S., Pucite, L., and Naumann, J. (2019). The role of planning in complex problem solving. Comput. Educ. 128, 1–12. doi: 10.1016/j.compedu.2018.08.004

Fan, J., Gijbels, I., and King, M. (1997). Local likelihood and local partial likelihood in hazard regression. Ann. Statist. 25, 1661–1690. doi: 10.1214/aos/1031594736

Fox, J.-P., and Marianti, S. (2016). Joint modeling of ability and differential speed using responses and response times. Multivar. Behav. Res. 51, 540–553. doi: 10.1080/00273171.2016.1171128


Friedman, J., Hastie, T., and Tibshirani, R. (2001). The Elements of Statistical Learning. New York, NY: Springer.

Greiff, S., Wüstenberg, S., and Avvisati, F. (2015). Computer-generated log-file analyses as a window into students' minds? A showcase study based on the PISA 2012 assessment of problem solving. Comput. Educ. 91, 92–105. doi: 10.1016/j.compedu.2015.10.018

Greiff, S., Wüstenberg, S., and Funke, J. (2012). Dynamic problem solving: a new assessment perspective. Appl. Psychol. Measur. 36, 189–213. doi: 10.1177/0146621612439620

Halpin, P. F., and De Boeck, P. (2013). Modelling dyadic interaction with Hawkes processes. Psychometrika 78, 793–814. doi: 10.1007/s11336-013-9329-1

Halpin, P. F., von Davier, A. A., Hao, J., and Liu, L. (2017). Measuring student engagement during collaboration. J. Educ. Measur. 54, 70–84. doi: 10.1111/jedm.12133

He, Q., and von Davier, M. (2015). “Identifying feature sequences from process data in problem-solving items with N-grams,” in Quantitative Psychology Research, eds L. van der Ark, D. Bolt, W. Wang, J. Douglas, and M. Wiberg (New York, NY: Springer), 173–190.

He, Q., and von Davier, M. (2016). “Analyzing process data from problem-solving items with n-grams: insights from a computer-based large-scale assessment,” in Handbook of Research on Technology Tools for Real-World Skill Development, eds Y. Rosen, S. Ferrara, and M. Mosharraf (Hershey, PA: IGI Global), 750–777.

Huber, P. J., and Ronchetti, E. (2009). Robust Statistics. Hoboken, NJ: John Wiley & Sons.

Klein Entink, R. H., Kuhn, J.-T., Hornke, L. F., and Fox, J.-P. (2009). Evaluating cognitive theory: A joint modeling approach using responses and response times. Psychol. Methods 14, 54–75. doi: 10.1037/a0014877

Luce, R. D. (1986). Response Times: Their Role in Inferring Elementary Mental Organization. New York, NY: Oxford University Press.

MacKay, D. G. (1982). The problems of flexibility, fluency, and speed–accuracy trade-off in skilled behavior. Psychol. Rev. 89, 483–506. doi: 10.1037/0033-295X.89.5.483

van der Linden, W. J. (2007). A hierarchical framework for modeling speed and accuracy on test items. Psychometrika 72, 287–308. doi: 10.1007/s11336-006-1478-z

Vista, A., Care, E., and Awwal, N. (2017). Visualising and examining sequential actions as behavioural paths that can be interpreted as markers of complex behaviours. Comput. Hum. Behav. 76, 656–671. doi: 10.1016/j.chb.2017.01.027

Wüstenberg, S., Greiff, S., and Funke, J. (2012). Complex problem solving–More than reasoning? Intelligence 40, 1–14. doi: 10.1016/j.intell.2011.11.003

Xu, H., Fang, G., Chen, Y., Liu, J., and Ying, Z. (2018). Latent class analysis of recurrent events in problem-solving items. Appl. Psychol. Measur. 42, 478–498. doi: 10.1177/0146621617748325

Yarkoni, T., and Westfall, J. (2017). Choosing prediction over explanation in psychology: lessons from machine learning. Perspect. Psychol. Sci. 12, 1100–1122. doi: 10.1177/1745691617693393

Zhan, P., Jiao, H., and Liao, D. (2018). Cognitive diagnosis modelling incorporating item response times. Br. J. Math. Statist. Psychol. 71, 262–286. doi: 10.1111/bmsp.12114

Keywords: process data, complex problem solving, PISA data, response time, event history analysis

Citation: Chen Y, Li X, Liu J and Ying Z (2019) Statistical Analysis of Complex Problem-Solving Process Data: An Event History Analysis Approach. Front. Psychol. 10:486. doi: 10.3389/fpsyg.2019.00486

Received: 31 August 2018; Accepted: 19 February 2019; Published: 18 March 2019.


Copyright © 2019 Chen, Li, Liu and Ying. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY) . The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yunxiao Chen

Disclaimer: All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.


An IERI – International Educational Research Institute Journal

- Open access

- Published: 13 November 2023

Behavioral patterns in collaborative problem solving: a latent profile analysis based on response times and actions in PISA 2015

- Areum Han ORCID: orcid.org/0000-0001-6974-521X 1 ,

- Florian Krieger ORCID: orcid.org/0000-0001-9981-8432 2 ,

- Francesca Borgonovi ORCID: orcid.org/0000-0002-6759-4515 3 &

- Samuel Greiff ORCID: orcid.org/0000-0003-2900-3734 1

Large-scale Assessments in Education volume 11, Article number: 35 (2023)


Process data are becoming increasingly popular in education research. In the field of computer-based assessments of collaborative problem solving (ColPS), process data have been used to identify students’ test-taking strategies while working on the assessment, and such data can complement data collected on accuracy and overall performance. Such information can be used to understand, for example, whether students are able to use a range of styles and strategies to solve different problems, given evidence that such cognitive flexibility may be important in labor markets and societies. In addition, process information might help researchers better identify the determinants of poor performance and interventions that can help students succeed. However, this line of research, particularly research that uses these data to profile students, is still in its infancy and has mostly centered on small- to medium-scale collaboration settings between people (i.e., the human-to-human approach). Only a few studies have involved large-scale assessments of ColPS between a respondent and computer agents (i.e., the human-to-agent approach), where problem spaces are more standardized and fewer biases and confounds exist. In this study, we investigated students’ ColPS behavioral patterns using latent profile analyses (LPA) based on two types of process data (i.e., response times and the number of actions) collected from the Program for International Student Assessment (PISA) 2015 ColPS assessment, a large-scale international assessment using the human-to-agent approach. Analyses were conducted on test-takers who (a) were administered the assessment in English and (b) were assigned the Xandar unit at the beginning of the test. The total sample size was N = 2,520. Analyses revealed two profiles (i.e., Profile 1 [95%] vs. Profile 2 [5%]) showing different behavioral characteristics across the four parts of the assessment unit. Significant differences were also found in overall performance between the profiles.

Collaborative problem-solving (ColPS) skills are considered crucial 21st century skills (Graesser et al., 2018; Greiff & Borgonovi, 2022). They are a combination of cognitive and social skill sets (Organization for Economic Co-operation and Development [OECD], 2017a), involving “an anchoring skill—a skill upon which other skills are built” (Popov et al., 2019, p. 100). It is therefore unsurprising that the importance of ColPS has been continually emphasized in research and policy spheres. Modern workplaces and societies require individuals to work in teams to solve ill-structured problems. As a result, a sufficient level of these skills and the ability to execute them effectively are expected and required in many contexts in people’s lives (Gottschling et al., 2022; Rosen & Tager, 2013, as cited in Herborn et al., 2017; Sun et al., 2022). Consequently, interest in research and policies on ColPS has grown in the past few years.

In 2015, the Program for International Student Assessment (PISA), managed by the OECD, administered an additional computer-based assessment of ColPS alongside the core assessment domains of mathematics, reading, and science. The PISA 2015 ColPS assessment was administered in 52 education systems, targeting 15-year-old students (OECD, 2017a, 2017b). It has provided a substantial body of theory and evidence related to computer-based assessments of the skills involved in the human-to-agent approach (i.e., H-A approach), in which test-takers collaborate with computer agents to tackle simulated problems. Many subsequent theoretical and empirical studies on ColPS have followed, drawing on the established framework of the PISA 2015 ColPS assessment and the data that were generated (e.g., Chang et al., 2017; Child & Shaw, 2019; Graesser et al., 2018; Herborn et al., 2017; Kang et al., 2019; Rojas et al., 2021; Swiecki et al., 2020; Tang et al., 2021; Wu et al., 2022).

Despite a growing body of research on ColPS, one unexplained aspect of ColPS revolves around the question, “What particular [ColPS] behaviors give rise to successful problem-solving outcomes?” (Sun et al., 2022, p. 1). To address this question, a few studies have used students’ process data (e.g., response times) to profile students and investigate behavioral patterns that go beyond performance. Indeed, analyzing test-takers’ process data makes it possible to understand the characteristics of performance in depth. For instance, such analyses can show how 15-year-old students interacted in problem spaces, revealing cases such as incorrect responses despite overall effective strategies or correct responses that relied on guessing (He et al., 2022; Teig et al., 2020). However, such studies are still at an embryonic stage and have mostly revolved around relatively small- to medium-scale assessments with the human-to-human approach (i.e., H-H approach), which entails naturalistic collaboration with people (e.g., Andrews-Todd et al., 2018; Dowell et al., 2018; Han & Wilson, 2022; Hao & Mislevy, 2019; Hu & Chen, 2022). Little research has been carried out on the process data from large-scale assessments that have used the H-A approach, such as the one employed in PISA 2015.

Therefore, in this research, we aimed to investigate test-takers’ profiles to address the aforementioned question about the behaviors that lead to successful collaborative problem solving. To do so, we conducted an exploratory latent profile analysis (LPA), a profiling methodology, based on two types of process data collected in PISA 2015: (a) response time (i.e., the sum of “the time spent on the last visit to an item” per part; OECD, 2019, p. 3) and (b) the number of actions (e.g., “posting a chat log” or “conducting a search on a map tool”; De Boeck & Scalise, 2019, p. 1). As described in the previous literature, PISA 2015 has several advantages, including automated scoring and easier and more valid comparisons in standardized settings. It also has drawbacks (e.g., it is limited in its ability to deliver an authentic collaboration experience; Han et al., 2023; Siddiq & Scherer, 2017). It should be noted that PISA 2015 is just one of many (large-scale) H-A assessments of ColPS, so there are likely many other ways to identify behavioral patterns. As a stepping stone, we hope the results of this study will help clarify the behaviors of (un)successful participants in ColPS and will thus be conducive to the development of appropriate interventions (Greiff et al., 2018; Hao & Mislevy, 2019; Hickendorff et al., 2018; Teig et al., 2020). Furthermore, because we identified subgroups on the basis of the process data, these subgroups can inform the future design of task situations and assessment tools in terms of validity and statistical scoring rules (AERA, APA, & NCME, 2014; Goldhammer et al., 2020, 2021; Herborn et al., 2017; Hubley & Zumbo, 2017; Li et al., 2017; Maddox, 2023; von Davier & Halpin, 2013).

Literature review

The ColPS assessment in PISA 2015 and the Xandar unit

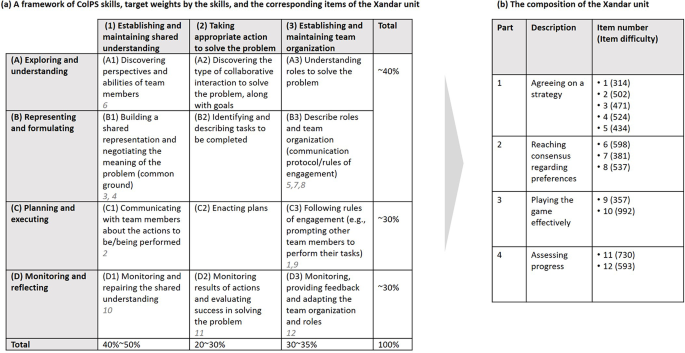

ColPS in PISA 2015 is defined as “the capacity of an individual to effectively engage in a process whereby two or more agents attempt to solve a problem by sharing the understanding and effort required to come to a solution and pooling their knowledge, skills and efforts to reach that solution” (OECD, 2017a , p. 6). To design and implement the assessment, the OECD defined a matrix of four individual problem-solving processes and three collaboration processes, for a total of 12 different skills (OECD, 2017a ; see Fig. 1 ). The four individual problem-solving processes came from PISA 2012 and entail (a) Exploring and understanding, (b) Representing and formulating, (c) Planning and executing, and (d) Monitoring and reflecting, whereas the three collaborative competencies are (a) Establishing and maintaining shared understanding, (b) Taking appropriate action to solve the problem, and (c) Establishing and maintaining team organization.

Fig. 1 The overall composition of the Xandar unit and a general guideline of weighting. Note. The number in Figure 1(a), highlighted in grey and italics, indicates the item number of the Xandar unit corresponding to each subskill. The item difficulty values in Figure 1(b) are reported on the PISA scale. The framework of Figure 1 is based on the OECD (2016, 2017b). Adapted from the PISA 2015 collaborative problem-solving framework, by the OECD, 2017 (https://www.oecd.org/pisa/pisaproducts/Draft%20PISA%202015%20Collaborative%20Problem%20Solving%20Framework%20.pdf). Copyright 2017 by the OECD. The data for the descriptions of the Xandar unit are from OECD (2016). Adapted from Description of the released unit from the 2015 PISA collaborative problem-solving assessment, collaborative problem-solving skills, and proficiency levels, by the OECD, 2016 (https://www.oecd.org/pisa/test/CPS-Xandar-scoring-guide.pdf). Copyright 2016 by the OECD

With the matrix of four problem-solving and three collaboration processes in mind, the assessment was designed to consist of various items. Each item is a single communicative turn between the test-taker and agent(s), an action, a product, or a response during ColPS (OECD, 2016). With difficulty ranging from 314 to 992, each item measured one (or sometimes more than one) of the 12 skills and was scored 0, 1, or 2 (Li et al., 2021; OECD, 2016, 2017a). Sets of items made up each task (e.g., consensus building), and each task covered one component of a (problem scenario) unit. Each unit had a predefined between-unit dimension (e.g., school context vs. non-school context) and various within-unit dimensions (e.g., types of tasks, including jigsaw or negotiation; see details in OECD, 2017a).

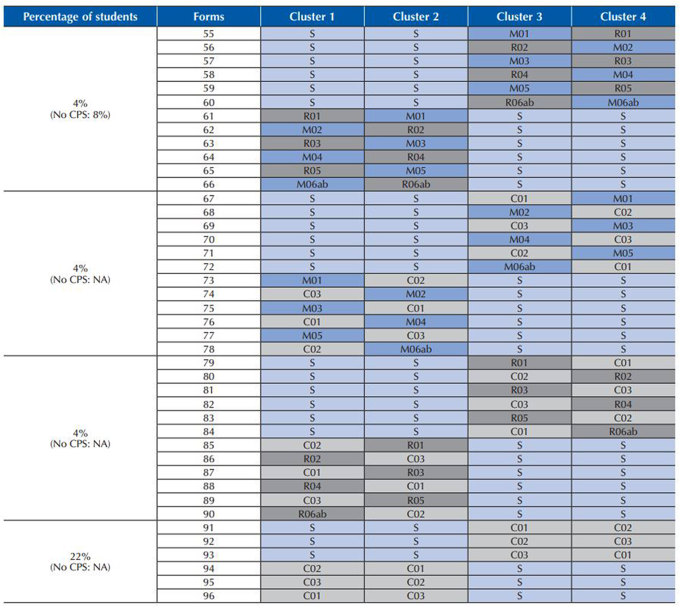

In the computer-based assessment mode of PISA 2015 (Footnote 1), each test-taker worked on four 30-min clusters (i.e., 2 h in total). Two of these clusters were in the domain of science, whereas the other two involved reading, mathematics, or ColPS (OECD, 2017a; see Fig. 2). Thus, a test-taker could have had one or two ColPS units, in different positions depending on the assessment form, if their country or economy was participating in the ColPS assessment (see OECD, 2017c). Among the ColPS units in the main PISA 2015 study, only one unit, called Xandar, was released in the official OECD reports. The release included contextual information (e.g., the unit structure or item difficulty) to help interpret the findings beyond the raw data, which included actions, response times, and performance levels (e.g., OECD, 2016). Consequently, we used this unit in the current study, because valid interpretation of the behavioral patterns we identified relied on each item’s specific contextual information (Goldhammer et al., 2021).

Fig. 2 The computer-based assessment design of the PISA 2015 main study, including the domain of ColPS. Note. R01-R06 = Reading clusters; M01-M06 = Mathematics clusters; S = Science clusters; C01-C03 = ColPS clusters. From PISA 2015 Technical Report (p. 39), by the OECD, 2017 (https://www.oecd.org/pisa/data/2015-technical-report/PISA2015_TechRep_Final.pdf). Copyright 2017 by the OECD

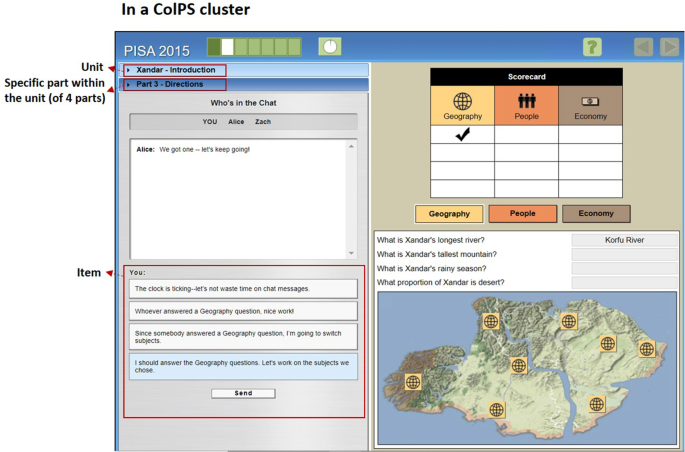

In the Xandar unit, each test-taker worked with two computer agents to solve problems on the geography, people, and economy of an imaginary country named Xandar (OECD, 2017b ; see Fig. 3 ). It should be noted that performance in the Xandar unit was assessed as correct actions or responses in the ColPS process, not as the quality of group output. According to the OECD, this unit is “in-school, private, non-technology” context-based and is composed of four separate parts of “decision-making and coordination tasks” in the scenario of a contest (OECD, 2017b ; p. 53). The detailed composition of the unit is described in Fig. 1 (b).

Fig. 3 An example screenshot of the PISA 2015 Xandar unit. Note. Adapted from Description of the released unit from the 2015 PISA collaborative problem-solving assessment, collaborative problem-solving skills, and proficiency levels (p. 11), by the OECD, 2016 (https://www.oecd.org/pisa/test/CPS-Xandar-scoring-guide.pdf). Copyright 2016 by the OECD

As Fig. 1 shows, the unit did not cover all 12 skills from the competency framework but covered the skills only partially across the four parts of the assessment. More information about each part is as follows.

In Part 1 (i.e., the stage for agreeing on a strategy as a team), participants get accustomed to the assessment unit, including the chat interface and task space (De Boeck & Scalise, 2019 ; OECD, 2017b ). The stage aims to establish common strategies for ColPS under the contest rules (De Boeck & Scalise, 2019 ). Part 1 contains five items, whose item difficulty ranges from 314 to 524 (OECD, 2016 ).

In Part 2 (i.e., reaching a consensus regarding preferences), participants and the computer agents each allocate a topic to themselves (i.e., the geography, people, or economy of Xandar; OECD, 2017b ). In this process, they should reach a consensus by resolving disagreements within their team (OECD, 2017b ). The purpose of this stage is to establish a mutual understanding (De Boeck & Scalise, 2019 ). There are three items in Part 2, with a difficulty of 381, 537, and 598, respectively (OECD, 2016 ).

In Part 3 (i.e., playing the game effectively), participants respond to the questions about the geography of Xandar (OECD, 2017b ), regardless of their choice in Part 2. In this part, they proceed with the contest and should respond appropriately to the agents who violate common rules and raise issues (De Boeck & Scalise, 2019 ). Part 3 consists of two items (i.e., one with a difficulty of 357 and the other with a difficulty of 992; OECD, 2016 ).

In Part 4 (i.e., assessing progress), participants are required to monitor and assess their team’s progress (OECD, 2017b ). In this part, the computer agents pose challenges to the progress evaluation and ask for extra help for the team to solve problems on the economy of Xandar (De Boeck & Scalise, 2019 ). Part 4 is composed of two items (i.e., one with a difficulty of 593 and the other with a difficulty of 730; OECD, 2016 ).

Process data and profiling students on the basis of response times and actions

Process data refer to “empirical information about the cognitive (as well as meta-cognitive, motivational, and affective) states and related behavior that mediate the effect of the measured construct(s) on the task product (i.e., item score)” (Goldhammer & Zehner, 2017, p. 128). These data can thus indicate “traces of processes” (e.g., strategy use or engagement; Ercikan et al., 2020, p. 181; Goldhammer et al., 2021; Zhu et al., 2016). Such information is recorded and collected via external instruments and encompasses diverse types of data, such as eye-tracking data, paradata (e.g., mouse clicks), or anthropological data (e.g., gestures; Hubley & Zumbo, 2017). Process data have recently attracted growing attention. Technology-based assessments have advanced with the growth of data science and computational psychometrics, increasing the opportunities to exploit process data across the entire assessment cycle (Goldhammer & Zehner, 2017; Maddox, 2023).

A substantial number of studies on response times and the number of clicks (i.e., defined as actions in this study) along with test scores have been published, specifically in the field of cognitive ability testing (e.g., studies on complex problem-solving). For instance, according to Goldhammer et al. ( 2014 ), response times and task correctness have a positive relationship when controlled reasoning-related constructs (e.g., computer-based problem-solving) are being measured, in contrast to repetitive and automatic reasoning (e.g., basic reading; Greiff et al., 2018 ; Scherer et al., 2015 ). Greiff et al. ( 2018 ) also argued that the number of interventions employed across the investigation stages can be used as a way to gauge the thoroughness of task exploration because they indicate in-depth and longer commitments to the complex problem-solving task.

ColPS assessments are related to, and not mutually exclusive from, the domain of complex problem-solving. In this sphere, there is also currently active research on these data, particularly in the context of assessments that employ the H-H approach. One such research topic involves profiling students on the basis of their data to examine the behavioral patterns that occur during ColPS. For instance, Hao and Mislevy (2019) found four clusters via a hierarchical clustering analysis of communication data. One of their results was that participants’ performance (i.e., their scores on the questions about the factors related to volcanic eruption) tended to improve with more negotiation after the team had relayed information. Andrews-Todd et al. (2017) also discovered four profiles by applying Andersen/Rasch multivariate item response modeling to chat logs: cooperative, collaborative, fake collaboration, and dominant/dominant interaction patterns. They reported that the propensities for displaying the cooperative and collaborative interaction patterns were positively correlated with performance outcomes (r = .28 and .11, ps < .05). In contrast, the propensity for the dominant/dominant interaction pattern was negatively correlated with performance outcomes (r = −.21, p < .01). However, there was no significant correlation between outcomes and the inclination to exhibit the fake collaboration pattern (r = −.02, p = .64). Such results cannot be directly applied to assessments that use the H-A approach because of the differences in interactions.

Compared with studies that have employed the H-H approach, little research has attempted to identify behavioral patterns on the basis of the process data collected in ColPS assessments that employ the H-A approach. One of the few studies is De Boeck and Scalise (2019). They applied structural equation modeling to data on United States students’ actions, response times, and performance in each part of the PISA 2015 Xandar unit. They found a general correlation between the number of actions and response times, a finding that suggests that “an impulsive and fast trial-and-error style” was not the most successful strategy for this unit (p. 6). They also demonstrated specific associations for each part of the unit. For example, performance was related to more actions and more time in Part 4 (i.e., the last part, about assessing progress). In contrast, in Part 1 on understanding the contest, the association between actions and performance was negative (De Boeck & Scalise, 2019; OECD, 2016). Notably, these findings resonate with earlier studies in other settings employing different tools. For instance, albeit in an H-H setting, Chung et al. (1999) reported that low-performing teams exchanged more predefined messages than high-performing teams during their knowledge mapping tasks. However, De Boeck and Scalise (2019) described the general patterns of their entire sample of students and did not examine distinct patterns among subgroups. The current study, in contrast, was designed to explore unobserved student groups and their behavioral characteristics via LPA. Furthermore, the patterns they identified were associated with performance in each part, making it difficult to determine the relationship between the patterns and the overall level of performance. Their participants were also limited to individuals from the United States. Therefore, there is still a need to examine in detail behavioral patterns derived from process data and their relationships with overall performance. Such work should draw on more diverse populations in more standardized settings and take advantage of the H-A approach.

Research questions

The objective of this research was to investigate test-takers’ behavioral profiles using the two types of process data available from the PISA 2015 ColPS assessment (i.e., response time and the number of actions), specifically across the four parts of the Xandar unit. To this end, we posed two research questions: (a) Which profiles can be identified on the basis of students’ response times and numbers of actions in the Xandar unit? (b) How do the profiles differ in terms of overall performance?

Methodology

Participants and sampling

The current study examined PISA 2015 ColPS assessment participants who (a) took the assessment in English and (b) had the Xandar unit as their first cluster; these criteria were intended to control for potential sources of bias (i.e., language, item position, and fatigue). Of the 3,065 students from 11 education systems (i.e., Australia, Canada, Hong Kong, Luxembourg, Macao, New Zealand, Singapore, Sweden, the United Arab Emirates, the United Kingdom, and the United States) Footnote 2, 539 outliers were excluded via robust Mahalanobis distance estimation with a 0.01 cutoff for the p-value (see Leys et al., 2018) to prevent outliers from influencing the profile solution (Spurk et al., 2020). Footnote 3 Six inactive students (i.e., those who did not exhibit any activity across the indicators) were subsequently excluded. Hence, the final sample consisted of 2,520 students (see Table 1). The students were sampled through the two-stage sampling procedure employed by the OECD (De Boeck & Scalise, 2019; OECD, 2009), which results in unequal probabilities of participation across students (Asparouhov, 2005; Burns et al., 2022; Scherer et al., 2017). Following the OECD’s official guidelines and previous literature related to PISA and LPA (e.g., Burns et al., 2022; OECD, 2017c; Wilson & Urick, 2022), we included the sampling hierarchy and the students’ sampling weights in the analyses (see also the Statistical Analyses section).

Table 1 Participants’ characteristics
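The outlier screening step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors’ code or Leys et al.’s exact procedure: it uses a simplified minimum covariance determinant (MCD) estimator (random subsets refined by concentration steps, a 75% support fraction chosen only for illustration, no reweighting step), a median-based consistency correction, and a chi-square reference distribution whose degrees of freedom equal the number of indicators. The closed-form chi-square tail is valid only for an even number of indicators, which holds for the eight indicators here. All function names are hypothetical.

```python
import math
import numpy as np

def chi2_sf_even(x, df):
    """P(X > x) for a chi-square variable; this closed form is valid for even df only."""
    if df % 2 != 0:
        raise ValueError("this closed form requires an even number of degrees of freedom")
    half, term, total = x / 2.0, 1.0, 1.0
    for i in range(1, df // 2):
        term *= half / i  # accumulates (x/2)^i / i!
        total += term
    return math.exp(-half) * total

def chi2_median_even(df):
    """Median of a chi-square(df) variable, found by bisection on the survival function."""
    lo, hi = 0.0, 10.0 * df + 10.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if chi2_sf_even(mid, df) > 0.5:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0

def sq_mahalanobis(X, center, cov):
    """Squared Mahalanobis distance of every row of X."""
    diff = X - center
    return np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)

def robust_outliers(X, alpha=0.01, support_fraction=0.75, n_starts=50, seed=0):
    """Flag rows whose robust squared distance has a chi-square p-value below alpha."""
    rng = np.random.default_rng(seed)
    n, p = X.shape
    h = int(math.ceil(support_fraction * n))
    best_det, best_center, best_cov = np.inf, None, None
    for _ in range(n_starts):
        subset = rng.choice(n, size=h, replace=False)
        for _ in range(30):  # concentration steps: refit on the h closest rows
            center, cov = X[subset].mean(axis=0), np.cov(X[subset], rowvar=False)
            new_subset = np.argsort(sq_mahalanobis(X, center, cov))[:h]
            if set(new_subset.tolist()) == set(subset.tolist()):
                break
            subset = new_subset
        center, cov = X[subset].mean(axis=0), np.cov(X[subset], rowvar=False)
        det = np.linalg.det(cov)
        if det < best_det:  # keep the subset with the smallest covariance determinant
            best_det, best_center, best_cov = det, center, cov
    d2 = sq_mahalanobis(X, best_center, best_cov)
    d2 = d2 * chi2_median_even(p) / np.median(d2)  # median-based consistency correction
    pvals = np.array([chi2_sf_even(v, p) for v in d2])
    return pvals < alpha, pvals
```

In practice, a dedicated robust-statistics implementation would be preferable to this toy version; the sketch only shows how a robust center and scatter, squared distances, and a p-value cutoff of .01 fit together.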

Materials and indicators

We employed a total of eight indicators for the analyses: (a) the total response time per part (four indicators, each defined as the sum of “the time spent on the last visit to an item” within that part; OECD, 2019) and (b) the total number of actions per part (four indicators). As distal outcome variables, we used the 10 plausible ColPS values (i.e., PV1CLPS–PV10CLPS), which have “a weighted mean of 500 and a weighted standard deviation of 100” (OECD, 2017c, p. 234). Plausible values are “multiple imputed proficiency values” derived from test-takers’ response patterns; they therefore include a probabilistic component and indicate each test-taker’s possible level of ability (i.e., a latent construct; Khorramdel et al., 2020, p. 44). To analyze the plausible values, we followed the recommendations in the OECD’s official guidelines (e.g., OECD, 2017c) and in previous literature on large-scale assessments (e.g., Asparouhov & Muthén, 2010; Rutkowski et al., 2010; Scherer, 2020; Yamashita et al., 2020; see also the Statistical Analyses section). All measures used in this study are publicly available in the PISA 2015 database (https://www.oecd.org/pisa/data/2015database/).

Data cleaning and preparation

We used R 4.2.1 to prepare the data (R Core Team, 2022). As described above, we extracted the sample on the basis of two conditions: (a) whether students took the assessment in English and (b) whether they had the Xandar unit as the first cluster of the assessment. We then used Mplus version 8.8 (Muthén & Muthén, 1998–2017) to conduct exploratory analyses for all indicators. Because the variances of the response time indicators were very large, the analyses did not converge. Thus, we applied a logarithmic transformation to the response time indicators to reduce their variance in subsequent steps. Note that the action indicators could not be log-transformed because one student had no actions (i.e., 0 actions) in Part 4, and the logarithm of zero is undefined.
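The two selection conditions and the log transformation can be sketched in Python for illustration (the study itself used R). The record layout and the field names `language`, `first_cluster`, `time`, and `actions` are hypothetical and do not correspond to actual PISA variable names. The helper `can_log_transform` shows why the action counts were left untransformed: a single zero makes the logarithm undefined.

```python
import math

def select_sample(students):
    """Keep students who took the test in English and saw Xandar first (hypothetical fields)."""
    return [
        s for s in students
        if s["language"] == "English" and s["first_cluster"] == "Xandar"
    ]

def can_log_transform(values):
    """The natural logarithm is defined only for strictly positive values."""
    return all(v > 0 for v in values)

def log_response_times(students):
    """Add log-transformed per-part response times; raw times must be positive."""
    for s in students:
        if not can_log_transform(s["time"]):
            raise ValueError("response times must be positive to log-transform")
        s["log_time"] = [math.log(t) for t in s["time"]]
    return students
```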

Statistical analyses

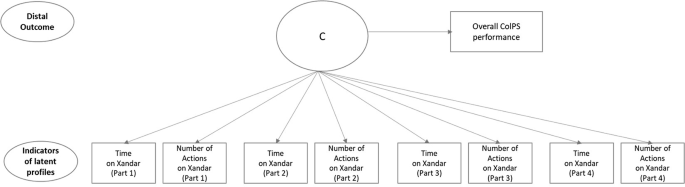

LPA was used to identify latent profiles of students on the basis of the response time and action data measured in the Xandar unit (Morin et al., 2011; see the model in Fig. 4). As a person-centered, model-based approach, LPA has many advantages over traditional clustering methods such as k-means clustering (Magidson & Vermunt, 2002; Morin et al., 2011). In particular, it classifies individuals into profiles on the basis of their estimated probabilities of profile membership, and covariates such as demographics can also be included (Magidson & Vermunt, 2002; Pastor et al., 2007; Spurk et al., 2020). It also allows alternative models to be specified and compared on the basis of various fit statistics (Morin et al., 2011).

Fig. 4 Full LPA model in this research. Note. C denotes the categorical latent variable describing the latent profiles.

Relying on these strengths of LPA, we conducted the statistical analyses with reference to syntax written by Burns et al. (2022) and Song (2021). We followed the default assumption of traditional LPA that the correlations between the indicators can be explained solely by profile membership (Morin et al., 2011; Vermunt & Magidson, 2002). There was insufficient empirical and theoretical evidence to justify relaxing assumptions about the relationship between the two types of process data from the PISA 2015 ColPS assessment (Collie et al., 2020; Meyer & Morin, 2016; Morin et al., 2011). Therefore, we fixed (a) the covariances between the latent profile indicators to zero and (b) the variances to be equal across profiles (i.e., the default options; Asparouhov & Muthén, 2015; Muthén & Muthén, 1998–2017).
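To make the default specification concrete, the following is a minimal expectation-maximization (EM) sketch of a latent profile model with the two constraints described above: within-profile covariances fixed to zero (local independence) and indicator variances held equal across profiles, while profile means and proportions are free. It is an illustration under these assumptions, not the Mplus estimator: it ignores sampling weights, clustering, robust standard errors, and missing data, and the function names are hypothetical. Several random starts are run and the solution with the highest log-likelihood is kept.

```python
import numpy as np

def e_step(Y, pi, mu, var):
    """Posterior profile probabilities and log-likelihood under local independence."""
    # log N(y_ij | mu_kj, var_j), summed over indicators j -> shape (n, k)
    diff2 = (Y[:, None, :] - mu[None, :, :]) ** 2
    log_dens = -0.5 * (diff2 / var + np.log(2.0 * np.pi * var)).sum(axis=2)
    log_joint = np.log(pi) + log_dens
    top = log_joint.max(axis=1, keepdims=True)  # log-sum-exp for numerical stability
    log_marg = top[:, 0] + np.log(np.exp(log_joint - top).sum(axis=1))
    post = np.exp(log_joint - log_marg[:, None])
    return post, log_marg.sum()

def fit_lpa(Y, k, n_iter=500, tol=1e-8, seed=0):
    """EM for k profiles: free means, variances equal across profiles, zero covariances."""
    rng = np.random.default_rng(seed)
    n = Y.shape[0]
    pi = np.full(k, 1.0 / k)
    mu = Y[rng.choice(n, size=k, replace=False)].astype(float)  # random rows as start means
    var = Y.var(axis=0)
    post, ll = e_step(Y, pi, mu, var)
    for _ in range(n_iter):
        nk = post.sum(axis=0)
        pi = nk / n
        mu = (post.T @ Y) / nk[:, None]
        # pooled within-profile variance: one value per indicator, shared by all profiles
        var = (post[:, :, None] * (Y[:, None, :] - mu[None, :, :]) ** 2).sum(axis=(0, 1)) / n
        post, new_ll = e_step(Y, pi, mu, var)
        converged = new_ll - ll < tol
        ll = new_ll
        if converged:
            break
    return pi, mu, var, post, ll

def fit_lpa_best(Y, k, n_starts=20):
    """Run several random starts and keep the solution with the highest log-likelihood."""
    fits = [fit_lpa(Y, k, seed=s) for s in range(n_starts)]
    return max(fits, key=lambda fit: fit[-1])
```

Relaxing assumption (b) would amount to estimating a separate variance vector per profile in the M-step; relaxing (a) would replace the product of univariate normal densities with a full multivariate normal density.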

At the same time, because correlation coefficients greater than 0.50 were found between some indicators (see Table 2), we separately tested models that relaxed some of these assumptions (i.e., allowing some indicators to be correlated within profiles). According to Sinha et al. (2021), correlations above 0.50 may affect the modeling and the fit statistics, so such cases should be examined carefully. We therefore tried to formally check the level of local dependence between the indicators, but because of constraints of the statistical program, we could obtain only partial evidence from the factor loadings. Using this evidence and drawing on Sinha et al. (2021), we conducted separate sensitivity analyses in which we either relaxed the assumption (i.e., allowed local dependence between two specific indicators within profiles) or removed one of the two indicators. However, not all of these models terminated normally under the relaxed assumptions. When some indicators were removed (e.g., C100Q01T and C100Q02A), the relative model fit statistics improved in some trials, but the overall profile membership did not change substantially. Therefore, we retained the original model with all the indicators and the most conservative assumptions.
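The screening rule taken from Sinha et al. (2021), which flags indicator pairs whose correlation exceeds 0.50 for closer inspection, can be sketched as below. Only the 0.50 threshold comes from the text; the indicator names are hypothetical, and the sketch computes unweighted Pearson correlations. Whether a flagged pair then calls for relaxing local independence or dropping an indicator remains a modeling decision, as discussed above.

```python
import itertools
import math

def pearson_r(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def flag_correlated_pairs(indicators, threshold=0.50):
    """Return (name_a, name_b, r) for every indicator pair with |r| > threshold."""
    flagged = []
    for (name_a, xa), (name_b, xb) in itertools.combinations(indicators.items(), 2):
        r = pearson_r(xa, xb)
        if abs(r) > threshold:
            flagged.append((name_a, name_b, r))
    return flagged
```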

In this study, we inspected models with one to 10 latent profiles, employing the standard three-step approach in line with best practices (Asparouhov & Muthén, 2014; Bakk & Vermunt, 2016; Dziak et al., 2016; Nylund-Gibson & Choi, 2018; Nylund-Gibson et al., 2019; Wang & Wang, 2019). Footnote 4 In this approach, (a) an unconditional LPA model is first specified on the basis of the indicator variables; (b) each student is then assigned to his or her most likely profile, and the classification (measurement) error of this assignment for the latent profile variable C is incorporated into the model; finally, (c) the relationship between profile membership and the distal outcomes is estimated (Dziak et al., 2016). For Step 3 specifically, 10 data sets were prepared, each containing one of the 10 plausible values with all other variables unchanged, so that the PISA plausible values could be used in Mplus (Asparouhov & Muthén, 2010; Yamashita et al., 2020). Ten analyses, one per plausible value, were thus conducted, and the final estimates were derived according to Rubin’s (1987) rules (Baraldi & Enders, 2013; Burns et al., 2022; Muthén & Muthén, 1998–2017; Rohatgi & Scherer, 2020; Mplus TYPE = IMPUTATION option).
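Rubin’s rules for a single parameter (e.g., one profile’s mean on the ColPS scale) can be sketched as follows. The sketch assumes that each of the 10 analyses returns a point estimate and its standard error; the function name is hypothetical, and in the study itself Mplus performs this pooling through TYPE = IMPUTATION. The pooled estimate is the average of the estimates, and the total variance adds the average within-analysis variance to the between-analysis variance inflated by a factor of (1 + 1/m).

```python
import math
from statistics import mean, variance

def pool_rubin(estimates, standard_errors):
    """Combine m estimates (one per plausible value) with Rubin's rules."""
    m = len(estimates)
    if m < 2 or len(standard_errors) != m:
        raise ValueError("need at least two estimates with matching standard errors")
    q_bar = mean(estimates)                             # pooled point estimate
    within = mean([se ** 2 for se in standard_errors])  # average sampling variance
    between = variance(estimates)                       # spread across plausible values (m - 1 denominator)
    total = within + (1.0 + 1.0 / m) * between
    return q_bar, math.sqrt(total)
```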

Given the PISA sampling design described earlier, we applied the final student weights (i.e., W_FSTUWT; Mplus WEIGHT option) and accounted for the hierarchical sampling structure (i.e., schools were sampled first; cluster = CNTSCHID) in the models (Mplus TYPE = COMPLEX MIXTURE option). As the kurtosis and skewness values in Table 2 show, the raw data were not normally distributed. Therefore, maximum likelihood estimation with robust standard errors (MLR) was used to address nonnormality and possible nonindependence in the data (Spurk et al., 2020; Teig et al., 2020). Of the 2,520 students in the sample, four did not fully respond to the test unit (missing rate = 0.1%; 20 of the 20,160 records across all eight indicators). Although few, these missing data were handled with full information maximum likelihood estimation (the default in Mplus; Collie et al., 2020; Rohatgi & Scherer, 2020). Following the recommendations of earlier studies (e.g., Berlin et al., 2014; Nylund-Gibson & Choi, 2018; Spurk et al., 2020), we also used multiple starting values to avoid converging on local solutions: the models were estimated with at least 5,000 random start values, and the best 500 were retained for final optimization (Geiser, 2012; Meyer & Morin, 2016; Morin et al., 2011). We report the results from models that “converged on a replicated solution” (Morin et al., 2011, p. 65).

Model evaluation and selection

We examined multiple criteria, drawing on the prior literature, to evaluate the candidate models and select the best profile solution. First, we checked whether an error message occurred (Berlin et al., 2014; Spurk et al., 2020). Second, we compared relative information criteria, such as the Bayesian information criterion (BIC), across the candidate models; lower values indicate better fit (Berlin et al., 2014; Morin et al., 2011; Spurk et al., 2020). Third, we reviewed the entropy and the average posterior classification probabilities of the models, both of which reflect confidence in the classification; values closer to 1 indicate greater classification accuracy (Berlin et al., 2014; Morin et al., 2011). Fourth, we considered profile sizes. According to Berlin et al. (2014) and Spurk et al. (2020), an additional profile should be retained if it comprises (a) at least 1% of the total sample or (b) at least 25 cases. Fifth, we examined whether a “salsa effect” existed (i.e., “the coercion of [profiles] to fit a population that may not have been latent [profiles]”; Sinha et al., 2021, p. 26). Such an effect appears when the profiles differ only in level, so that their indicator means form merely parallel lines (Sinha et al., 2021); it therefore signals an unreliable profile solution. Finally, we validated the profile solution by testing mean differences in overall performance across the profile groups, which also answered the second research question (Sinha et al., 2021; Spurk et al., 2020). We further tested mean differences in each indicator across the profile groups using the Wald test (Burns et al., 2022).
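Three of these criteria can be computed directly from a fitted model, as in the sketch below: the BIC (minus two times the log-likelihood plus the number of free parameters times the natural log of the sample size), the relative entropy statistic commonly reported for mixture models, and the profile-size rule as stated above. The parameter count assumes the specification used here (free profile means, variances equal across profiles, zero covariances). The function names are hypothetical, and the sketch does not reproduce Mplus output.

```python
import math

def lpa_n_params(k, p):
    """Free parameters: (k - 1) proportions, k * p means, p shared variances."""
    return (k - 1) + k * p + p

def bic(log_likelihood, n_params, n_obs):
    """Bayesian information criterion; lower is better."""
    return -2.0 * log_likelihood + n_params * math.log(n_obs)

def relative_entropy(posteriors):
    """1 - sum(-p log p) / (N log K); values near 1 indicate clear classification."""
    n, k = len(posteriors), len(posteriors[0])
    if k < 2:
        raise ValueError("entropy is only defined for two or more profiles")
    total = sum(-p * math.log(p) for row in posteriors for p in row if p > 0)
    return 1.0 - total / (n * math.log(k))

def smallest_profile_acceptable(profile_sizes, min_share=0.01, min_cases=25):
    """Size rule as stated in the text: at least 1% of the sample or at least 25 cases."""
    smallest = min(profile_sizes)
    return smallest / sum(profile_sizes) >= min_share or smallest >= min_cases
```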

Owing to limitations of the statistical program, we could not implement the bootstrapped likelihood ratio test (BLRT) to determine the number of profiles. Moreover, the alternative tests (e.g., the Lo-Mendell-Rubin test) were not statistically significant, but these results may be unreliable because our raw data deviated from the default assumption of normality (Guerra-Peña & Steinley, 2016; Spurk et al., 2020). Indeed, the behavior of such tests with large-scale complex data, such as those in the current research, has yet to be thoroughly scrutinized (Scherer et al., 2017).

Descriptive statistics and correlations for the behavioral indicators: response times and the number of actions

Before identifying the profiles, we checked the descriptive statistics for the indicators, as presented in Table 2. Overall, the correlations between the indicators were low to moderate in size (Guilford, 1942). However, relatively high correlations were found between the response times in Parts 1 and 2 (r = .63, p < .01) and between the numbers of actions in Parts 2 and 4 (r = .55, p < .01).

The number of profiles based on the behavioral indicators and their descriptions

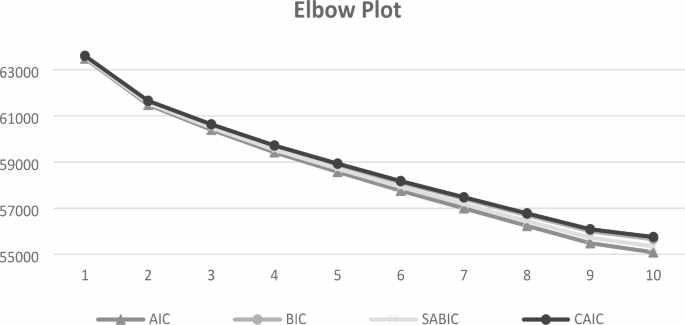

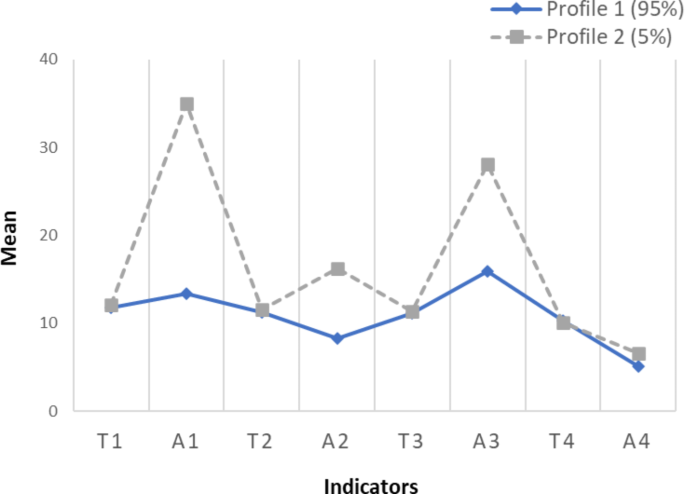

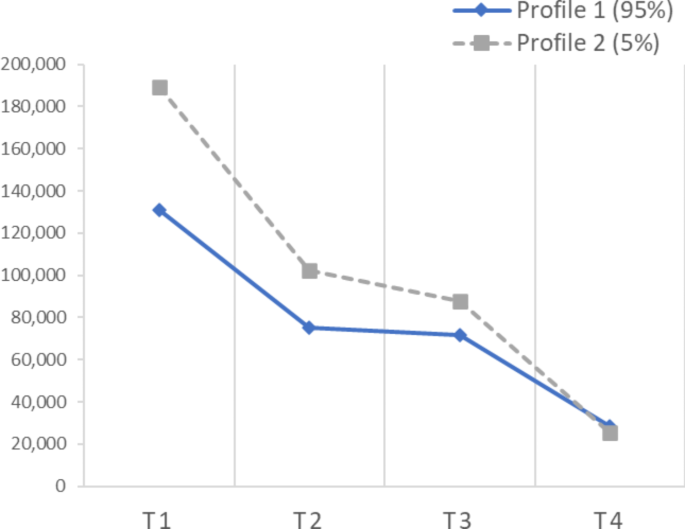

The number of latent profiles based on the behavioral indicators.