
Chapter 11: Presenting Your Research

Writing a Research Report in American Psychological Association (APA) Style

Learning Objectives

- Identify the major sections of an APA-style research report and the basic contents of each section.

- Plan and write an effective APA-style research report.

In this section, we look at how to write an APA-style empirical research report, an article that presents the results of one or more new studies. Recall that the standard sections of an empirical research report provide a kind of outline. Here we consider each of these sections in detail, including what information it contains, how that information is formatted and organized, and tips for writing each section. At the end of this section is a sample APA-style research report that illustrates many of these principles.

Sections of a Research Report

Title Page and Abstract

An APA-style research report begins with a title page. The title is centred in the upper half of the page, with each important word capitalized. The title should clearly and concisely (in about 12 words or fewer) communicate the primary variables and research questions. This sometimes requires a main title followed by a subtitle that elaborates on the main title, in which case the main title and subtitle are separated by a colon. Here are some titles from recent issues of professional journals published by the American Psychological Association.

- Sex Differences in Coping Styles and Implications for Depressed Mood

- Effects of Aging and Divided Attention on Memory for Items and Their Contexts

- Computer-Assisted Cognitive Behavioural Therapy for Child Anxiety: Results of a Randomized Clinical Trial

- Virtual Driving and Risk Taking: Do Racing Games Increase Risk-Taking Cognitions, Affect, and Behaviour?

Below the title are the authors’ names and, on the next line, their institutional affiliation—the university or other institution where the authors worked when they conducted the research. As we have already seen, the authors are listed in an order that reflects their contribution to the research. When multiple authors have made equal contributions to the research, they often list their names alphabetically or in a randomly determined order.

In some areas of psychology, the titles of many empirical research reports are informal in a way that is perhaps best described as “cute.” They usually take the form of a play on words or a well-known expression that relates to the topic under study. Here are some examples from recent issues of the journal Psychological Science.

- “Smells Like Clean Spirit: Nonconscious Effects of Scent on Cognition and Behavior”

- “Time Crawls: The Temporal Resolution of Infants’ Visual Attention”

- “Scent of a Woman: Men’s Testosterone Responses to Olfactory Ovulation Cues”

- “Apocalypse Soon?: Dire Messages Reduce Belief in Global Warming by Contradicting Just-World Beliefs”

- “Serial vs. Parallel Processing: Sometimes They Look Like Tweedledum and Tweedledee but They Can (and Should) Be Distinguished”

- “How Do I Love Thee? Let Me Count the Words: The Social Effects of Expressive Writing”

Individual researchers differ quite a bit in their preference for such titles. Some use them regularly, while others never use them. What might be some of the pros and cons of using cute article titles?

For articles that are being submitted for publication, the title page also includes an author note that lists the authors’ full institutional affiliations, any acknowledgments the authors wish to make to agencies that funded the research or to colleagues who commented on it, and contact information for the authors. For student papers that are not being submitted for publication—including theses—author notes are generally not necessary.

The abstract is a summary of the study. It is the second page of the manuscript and is headed with the word Abstract. The first line is not indented. The abstract presents the research question, a summary of the method, the basic results, and the most important conclusions. Because the abstract is usually limited to about 200 words, it can be a challenge to write a good one.

Introduction

The introduction begins on the third page of the manuscript. The heading at the top of this page is the full title of the manuscript, with each important word capitalized as on the title page. The introduction includes three distinct subsections, although these are typically not identified by separate headings. The opening introduces the research question and explains why it is interesting, the literature review discusses relevant previous research, and the closing restates the research question and comments on the method used to answer it.

The Opening

The opening, which is usually a paragraph or two in length, introduces the research question and explains why it is interesting. To capture the reader’s attention, researcher Daryl Bem recommends starting with general observations about the topic under study, expressed in ordinary language (not technical jargon)—observations that are about people and their behaviour (not about researchers or their research; Bem, 2003 [1]). Concrete examples are often very useful here. According to Bem, this would be a poor way to begin a research report:

Festinger’s theory of cognitive dissonance received a great deal of attention during the latter part of the 20th century (p. 191).

The following would be much better:

The individual who holds two beliefs that are inconsistent with one another may feel uncomfortable. For example, the person who knows that he or she enjoys smoking but believes it to be unhealthy may experience discomfort arising from the inconsistency or disharmony between these two thoughts or cognitions. This feeling of discomfort was called cognitive dissonance by social psychologist Leon Festinger (1957), who suggested that individuals will be motivated to remove this dissonance in whatever way they can (p. 191).

After capturing the reader’s attention, the opening should go on to introduce the research question and explain why it is interesting. Will the answer fill a gap in the literature? Will it provide a test of an important theory? Does it have practical implications? Giving readers a clear sense of what the research is about and why they should care about it will motivate them to continue reading the literature review—and will help them make sense of it.

Breaking the Rules

Researcher Larry Jacoby reported several studies showing that a word that people see or hear repeatedly can seem more familiar even when they do not recall the repetitions—and that this tendency is especially pronounced among older adults. He opened his article with the following humorous anecdote:

A friend whose mother is suffering symptoms of Alzheimer’s disease (AD) tells the story of taking her mother to visit a nursing home, preliminary to her mother’s moving there. During an orientation meeting at the nursing home, the rules and regulations were explained, one of which regarded the dining room. The dining room was described as similar to a fine restaurant except that tipping was not required. The absence of tipping was a central theme in the orientation lecture, mentioned frequently to emphasize the quality of care along with the advantages of having paid in advance. At the end of the meeting, the friend’s mother was asked whether she had any questions. She replied that she only had one question: “Should I tip?” (Jacoby, 1999, p. 3)

Although both humour and personal anecdotes are generally discouraged in APA-style writing, this example is a highly effective way to start because it both engages the reader and provides an excellent real-world example of the topic under study.

The Literature Review

Immediately after the opening comes the literature review, which describes relevant previous research on the topic and can be anywhere from several paragraphs to several pages in length. However, the literature review is not simply a list of past studies. Instead, it constitutes a kind of argument for why the research question is worth addressing. By the end of the literature review, readers should be convinced that the research question makes sense and that the present study is a logical next step in the ongoing research process.

Like any effective argument, the literature review must have some kind of structure. For example, it might begin by describing a phenomenon in a general way along with several studies that demonstrate it, then describing two or more competing theories of the phenomenon, and finally presenting a hypothesis to test one or more of the theories. Or it might describe one phenomenon, then describe another phenomenon that seems inconsistent with the first one, then propose a theory that resolves the inconsistency, and finally present a hypothesis to test that theory. In applied research, it might describe a phenomenon or theory, then describe how that phenomenon or theory applies to some important real-world situation, and finally suggest a way to test whether it does, in fact, apply to that situation.

Looking at the literature review in this way emphasizes a few things. First, it is extremely important to start with an outline of the main points that you want to make, organized in the order that you want to make them. The basic structure of your argument, then, should be apparent from the outline itself. Second, it is important to emphasize the structure of your argument in your writing. One way to do this is to begin the literature review by summarizing your argument even before you begin to make it. “In this article, I will describe two apparently contradictory phenomena, present a new theory that has the potential to resolve the apparent contradiction, and finally present a novel hypothesis to test the theory.” Another way is to open each paragraph with a sentence that summarizes the main point of the paragraph and links it to the preceding points. These opening sentences provide the “transitions” that many beginning researchers have difficulty with. Instead of beginning a paragraph by launching into a description of a previous study, such as “Williams (2004) found that…,” it is better to start by indicating something about why you are describing this particular study. Here are some simple examples:

Another example of this phenomenon comes from the work of Williams (2004).

Williams (2004) offers one explanation of this phenomenon.

An alternative perspective has been provided by Williams (2004).

We used a method based on the one used by Williams (2004).

Finally, remember that your goal is to construct an argument for why your research question is interesting and worth addressing—not necessarily why your favourite answer to it is correct. In other words, your literature review must be balanced. If you want to emphasize the generality of a phenomenon, then of course you should discuss various studies that have demonstrated it. However, if there are other studies that have failed to demonstrate it, you should discuss them too. Or if you are proposing a new theory, then of course you should discuss findings that are consistent with that theory. However, if there are other findings that are inconsistent with it, again, you should discuss them too. It is acceptable to argue that the balance of the research supports the existence of a phenomenon or is consistent with a theory (and that is usually the best that researchers in psychology can hope for), but it is not acceptable to ignore contradictory evidence. Besides, a large part of what makes a research question interesting is uncertainty about its answer.

The Closing

The closing of the introduction—typically the final paragraph or two—usually includes two important elements. The first is a clear statement of the main research question or hypothesis. This statement tends to be more formal and precise than in the opening and is often expressed in terms of operational definitions of the key variables. The second is a brief overview of the method and some comment on its appropriateness. Here, for example, is how Darley and Latané (1968) [2] concluded the introduction to their classic article on the bystander effect:

These considerations lead to the hypothesis that the more bystanders to an emergency, the less likely, or the more slowly, any one bystander will intervene to provide aid. To test this proposition it would be necessary to create a situation in which a realistic “emergency” could plausibly occur. Each subject should also be blocked from communicating with others to prevent his getting information about their behaviour during the emergency. Finally, the experimental situation should allow for the assessment of the speed and frequency of the subjects’ reaction to the emergency. The experiment reported below attempted to fulfill these conditions. (p. 378)

Thus the introduction leads smoothly into the next major section of the article—the method section.

Method

The method section is where you describe how you conducted your study. An important principle for writing a method section is that it should be clear and detailed enough that other researchers could replicate the study by following your “recipe.” This means that it must describe all the important elements of the study—basic demographic characteristics of the participants, how they were recruited, whether they were randomly assigned, how the variables were manipulated or measured, how counterbalancing was accomplished, and so on. At the same time, it should avoid irrelevant details such as the fact that the study was conducted in Classroom 37B of the Industrial Technology Building or that the questionnaire was double-sided and completed using pencils.

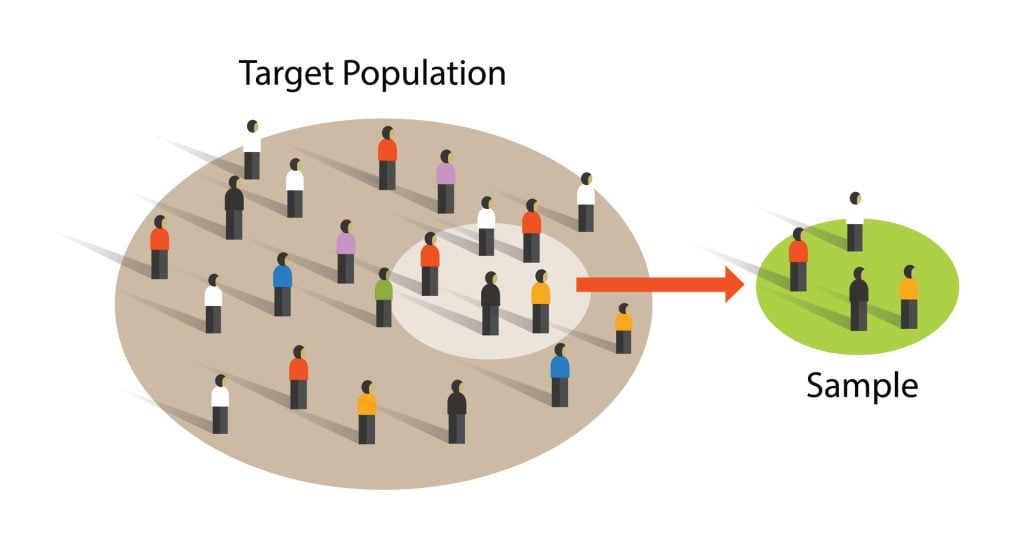

The method section begins immediately after the introduction ends with the heading “Method” (not “Methods”) centred on the page. Immediately after this is the subheading “Participants,” left justified and in italics. The participants subsection indicates how many participants there were, the number of women and men, some indication of their age, other demographics that may be relevant to the study, and how they were recruited, including any incentives given for participation.

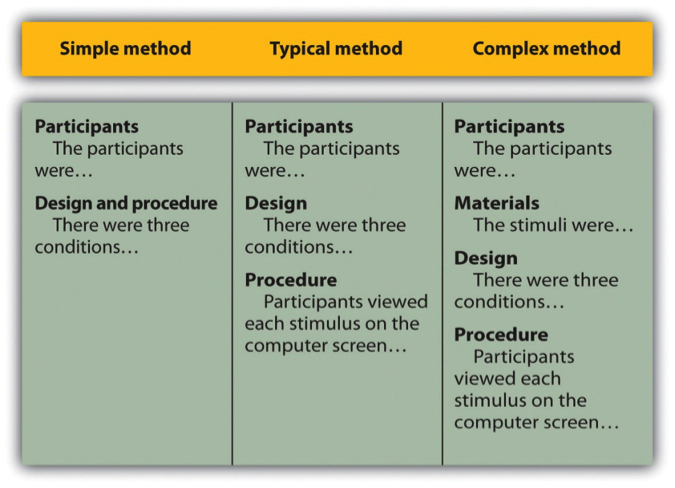

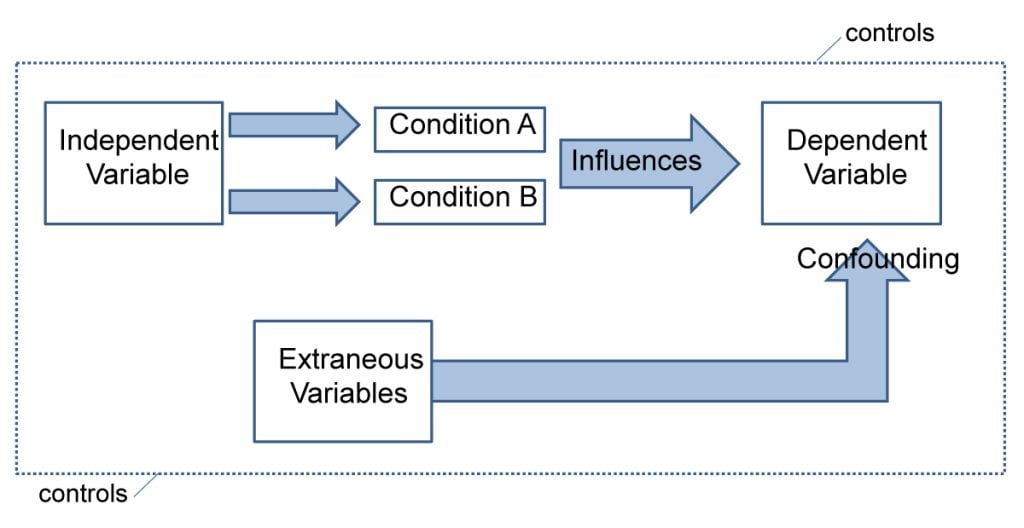

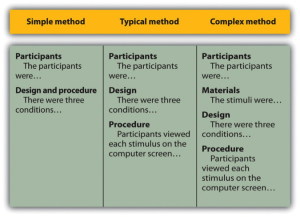

After the participants section, the structure can vary a bit. Figure 11.1 shows three common approaches. In the first, the participants section is followed by a design and procedure subsection, which describes the rest of the method. This works well for methods that are relatively simple and can be described adequately in a few paragraphs. In the second approach, the participants section is followed by separate design and procedure subsections. This works well when both the design and the procedure are relatively complicated and each requires multiple paragraphs.

What is the difference between design and procedure? The design of a study is its overall structure. What were the independent and dependent variables? Was the independent variable manipulated, and if so, was it manipulated between or within subjects? How were the variables operationally defined? The procedure is how the study was carried out. It often works well to describe the procedure in terms of what the participants did rather than what the researchers did. For example, the participants gave their informed consent, read a set of instructions, completed a block of four practice trials, completed a block of 20 test trials, completed two questionnaires, and were debriefed and excused.

In the third basic way to organize a method section, the participants subsection is followed by a materials subsection before the design and procedure subsections. This works well when there are complicated materials to describe. This might mean multiple questionnaires, written vignettes that participants read and respond to, perceptual stimuli, and so on. The heading of this subsection can be modified to reflect its content. Instead of “Materials,” it can be “Questionnaires,” “Stimuli,” and so on.

Results

The results section is where you present the main results of the study, including the results of the statistical analyses. Although it does not include the raw data—individual participants’ responses or scores—researchers should save their raw data and make them available to other researchers who request them. Several journals now encourage the open sharing of raw data online.

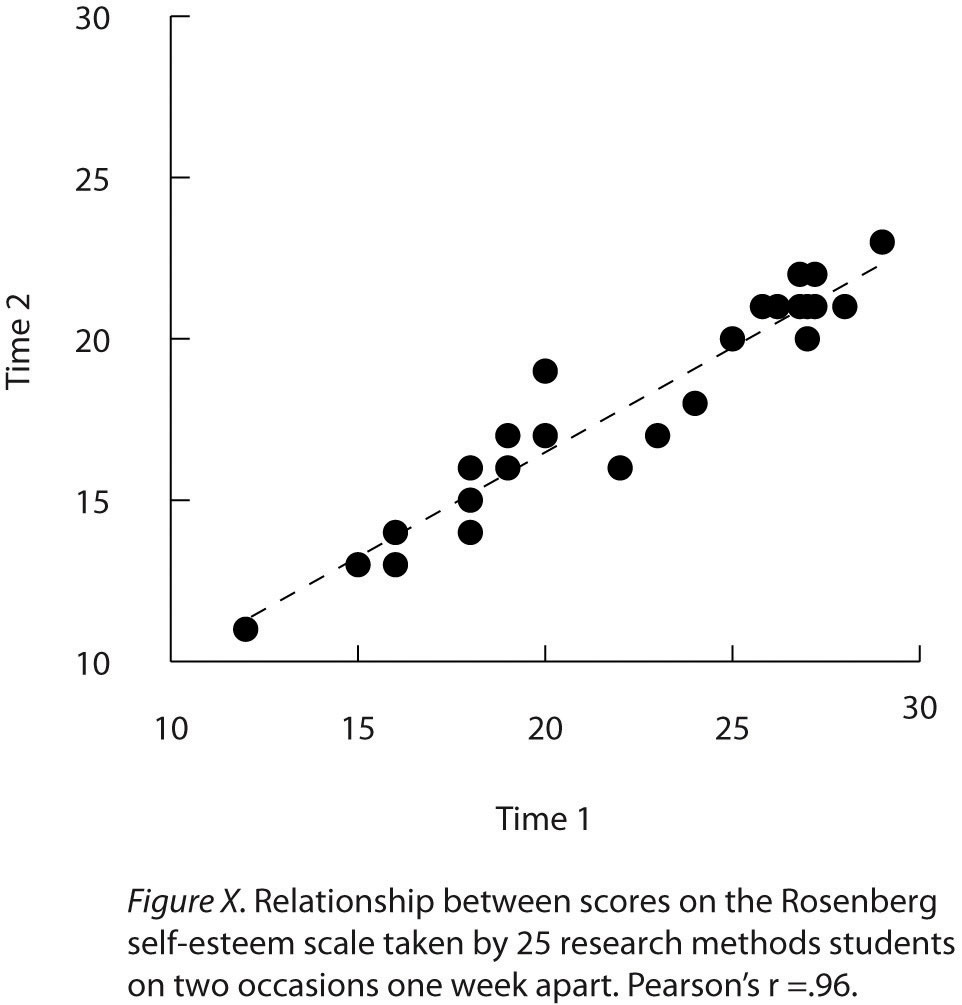

Although there are no standard subsections, it is still important for the results section to be logically organized. Typically it begins with certain preliminary issues. One is whether any participants or responses were excluded from the analyses and why. The rationale for excluding data should be described clearly so that other researchers can decide whether it is appropriate. A second preliminary issue is how multiple responses were combined to produce the primary variables in the analyses. For example, if participants rated the attractiveness of 20 stimulus people, you might have to explain that you began by computing the mean attractiveness rating for each participant. Or if they recalled as many items as they could from a study list of 20 words, did you count the number correctly recalled, compute the percentage correctly recalled, or perhaps compute the number correct minus the number incorrect? A third preliminary issue is the reliability of the measures. This is where you would present test-retest correlations, Cronbach’s α, or other statistics to show that the measures are consistent across time and across items. A final preliminary issue is whether the manipulation was successful. This is where you would report the results of any manipulation checks.
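The preliminary computations described above can be sketched in code. The following is a minimal Python illustration rather than a prescribed procedure: the function names (`participant_means`, `recall_scores`, `cronbach_alpha`) and all data values are hypothetical, and the reliability calculation assumes the standard Cronbach's α formula based on the item variances and the variance of participants' total scores.

```python
from statistics import mean, variance


def participant_means(ratings):
    """Return one mean rating per participant (each row holds one participant's ratings)."""
    return [mean(row) for row in ratings]


def recall_scores(n_correct, n_incorrect, list_length=20):
    """Return three alternative ways of scoring recall from a study list."""
    return {
        "number_correct": n_correct,
        "percent_correct": 100 * n_correct / list_length,
        "correct_minus_incorrect": n_correct - n_incorrect,
    }


def cronbach_alpha(item_scores):
    """Compute Cronbach's alpha from rows of item scores (one row per participant)."""
    k = len(item_scores[0])  # number of items
    # Sample variance of each item across participants
    item_variances = [variance([row[i] for row in item_scores]) for i in range(k)]
    # Sample variance of each participant's total score
    total_variance = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: three participants, four ratings each
ratings = [[4, 5, 3, 4], [2, 3, 2, 1], [5, 5, 4, 4]]
print(participant_means(ratings))
print(recall_scores(n_correct=12, n_incorrect=3))
print(round(cronbach_alpha(ratings), 2))
```

Whichever scoring rule is used, the results section should state it explicitly so that readers know exactly how the primary variables were produced.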

The results section should then tackle the primary research questions, one at a time. Again, there should be a clear organization. One approach would be to answer the most general questions and then proceed to answer more specific ones. Another would be to answer the main question first and then to answer secondary ones. Regardless, Bem (2003) [3] suggests the following basic structure for discussing each new result:

1. Remind the reader of the research question.

2. Give the answer to the research question in words.

3. Present the relevant statistics.

4. Qualify the answer if necessary.

5. Summarize the result.

Notice that only Step 3 necessarily involves numbers. The rest of the steps involve presenting the research question and the answer to it in words. In fact, the basic results should be clear even to a reader who skips over the numbers.

Discussion

The discussion is the last major section of the research report. Discussions usually consist of some combination of the following elements:

- Summary of the research

- Theoretical implications

- Practical implications

- Limitations

- Suggestions for future research

The discussion typically begins with a summary of the study that provides a clear answer to the research question. In a short report with a single study, this might require no more than a sentence. In a longer report with multiple studies, it might require a paragraph or even two. The summary is often followed by a discussion of the theoretical implications of the research. Do the results provide support for any existing theories? If not, how can they be explained? Although you do not have to provide a definitive explanation or detailed theory for your results, you at least need to outline one or more possible explanations. In applied research—and often in basic research—there is also some discussion of the practical implications of the research. How can the results be used, and by whom, to accomplish some real-world goal?

The theoretical and practical implications are often followed by a discussion of the study’s limitations. Perhaps there are problems with its internal or external validity. Perhaps the manipulation was not very effective or the measures not very reliable. Perhaps there is some evidence that participants did not fully understand their task or that they were suspicious of the intent of the researchers. Now is the time to discuss these issues and how they might have affected the results. But do not overdo it. All studies have limitations, and most readers will understand that a different sample or different measures might have produced different results. Unless there is good reason to think they would have, however, there is no reason to mention these routine issues. Instead, pick two or three limitations that seem like they could have influenced the results, explain how they could have influenced the results, and suggest ways to deal with them.

Most discussions end with some suggestions for future research. If the study did not satisfactorily answer the original research question, what will it take to do so? What new research questions has the study raised? This part of the discussion, however, is not just a list of new questions. It is a discussion of two or three of the most important unresolved issues. This means identifying and clarifying each question, suggesting some alternative answers, and even suggesting ways they could be studied.

Finally, some researchers are quite good at ending their articles with a sweeping or thought-provoking conclusion. Darley and Latané (1968) [4], for example, ended their article on the bystander effect by discussing the idea that whether people help others may depend more on the situation than on their personalities. Their final sentence is, “If people understand the situational forces that can make them hesitate to intervene, they may better overcome them” (p. 383). However, this kind of ending can be difficult to pull off. It can sound overreaching or just banal and end up detracting from the overall impact of the article. It is often better simply to end when you have made your final point (although you should avoid ending on a limitation).

References

The references section begins on a new page with the heading “References” centred at the top of the page. All references cited in the text are then listed in the format presented earlier. They are listed alphabetically by the last name of the first author. If two sources have the same first author, they are listed alphabetically by the last name of the second author. If all the authors are the same, then they are listed chronologically by the year of publication. Everything in the reference list is double-spaced both within and between references.
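The ordering rules above amount to a multi-level sort, which can be sketched in Python. This is an illustrative sketch under stated assumptions: the dictionary layout and the `reference_sort_key` function are hypothetical, two of the entries are invented solely to show the tie-breaking rules, and the comparison is a plain lowercase string comparison that ignores the finer points of APA alphabetization.

```python
def reference_sort_key(ref):
    """Sort by authors' last names in order, then by year of publication."""
    # Comparing the lists element by element orders entries by first author,
    # then by second author, and so on; the year breaks remaining ties.
    return ([name.lower() for name in ref["authors"]], ref["year"])


references = [
    {"authors": ["Darley", "Latané"], "year": 1968},
    {"authors": ["Bem"], "year": 2003},
    {"authors": ["Darley", "Adams"], "year": 1970},  # hypothetical entry
    {"authors": ["Bem"], "year": 1999},              # hypothetical entry
]

sorted_references = sorted(references, key=reference_sort_key)
for ref in sorted_references:
    print(" & ".join(ref["authors"]), ref["year"])
```

Here the two Bem entries are ordered by year, and the two Darley entries are ordered by the second author's last name.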

Appendices, Tables, and Figures

Appendices, tables, and figures come after the references. An appendix is appropriate for supplemental material that would interrupt the flow of the research report if it were presented within any of the major sections. An appendix could be used to present lists of stimulus words, questionnaire items, detailed descriptions of special equipment or unusual statistical analyses, or references to the studies that are included in a meta-analysis. Each appendix begins on a new page. If there is only one, the heading is “Appendix,” centred at the top of the page. If there is more than one, the headings are “Appendix A,” “Appendix B,” and so on, and they appear in the order they were first mentioned in the text of the report.

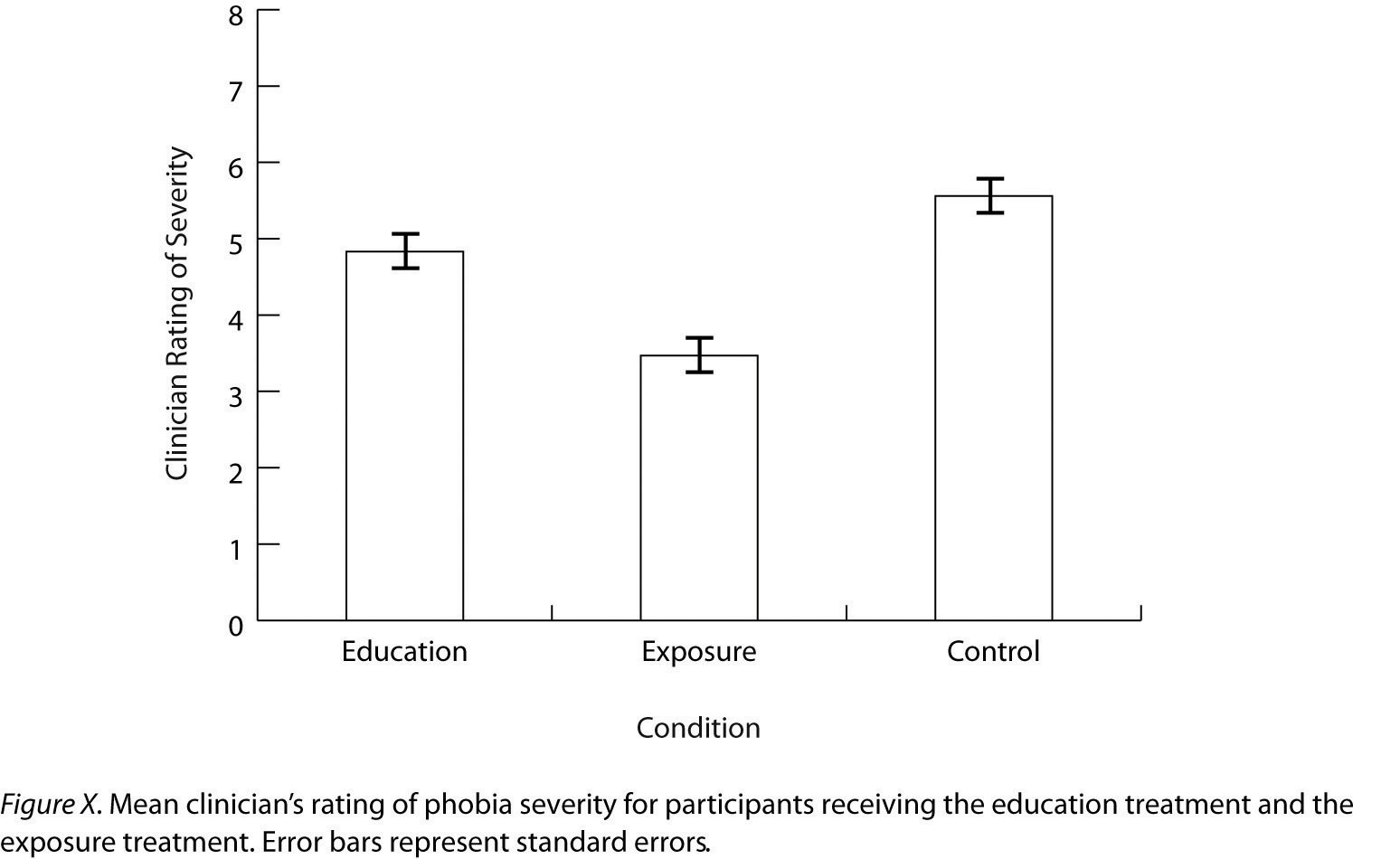

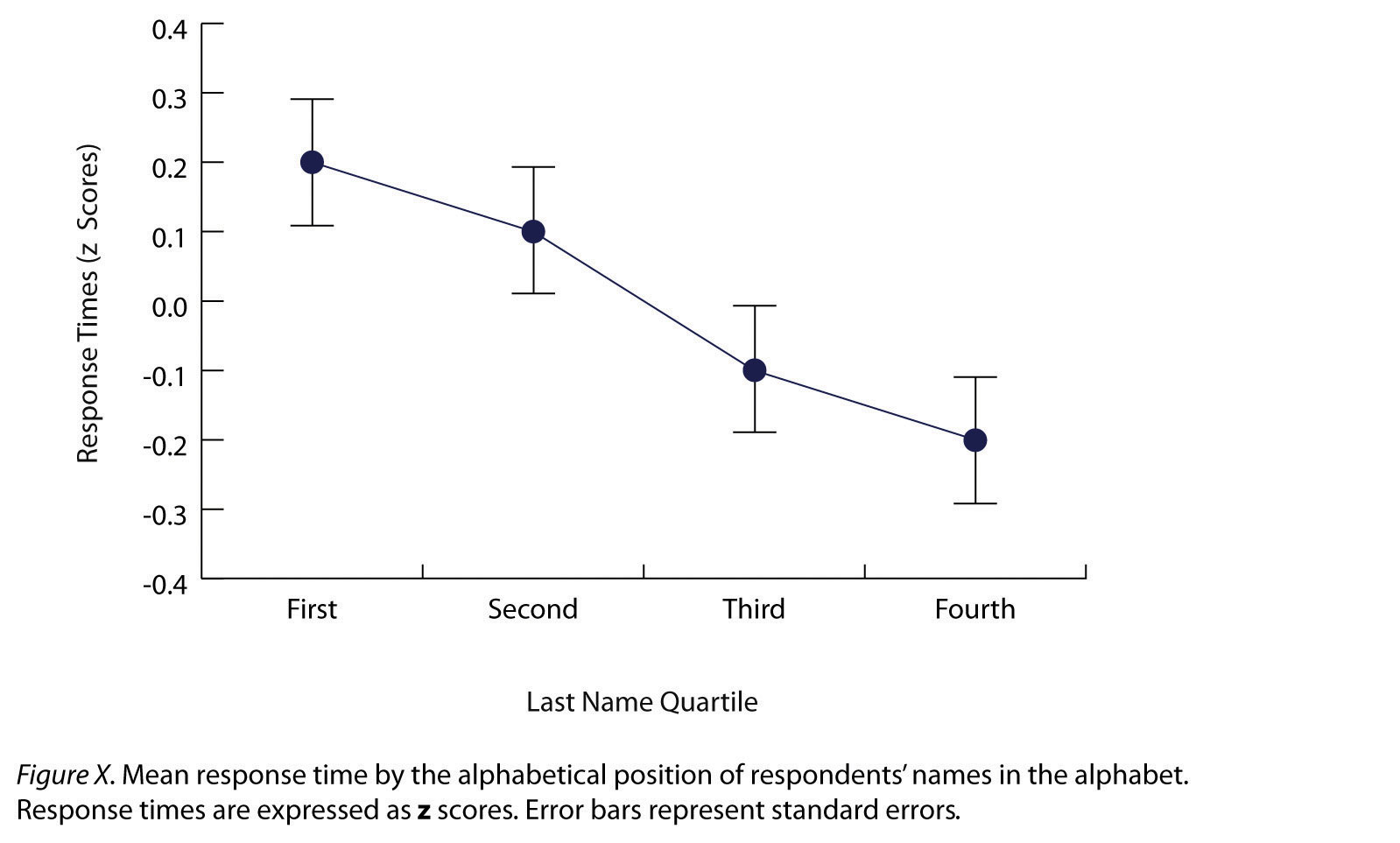

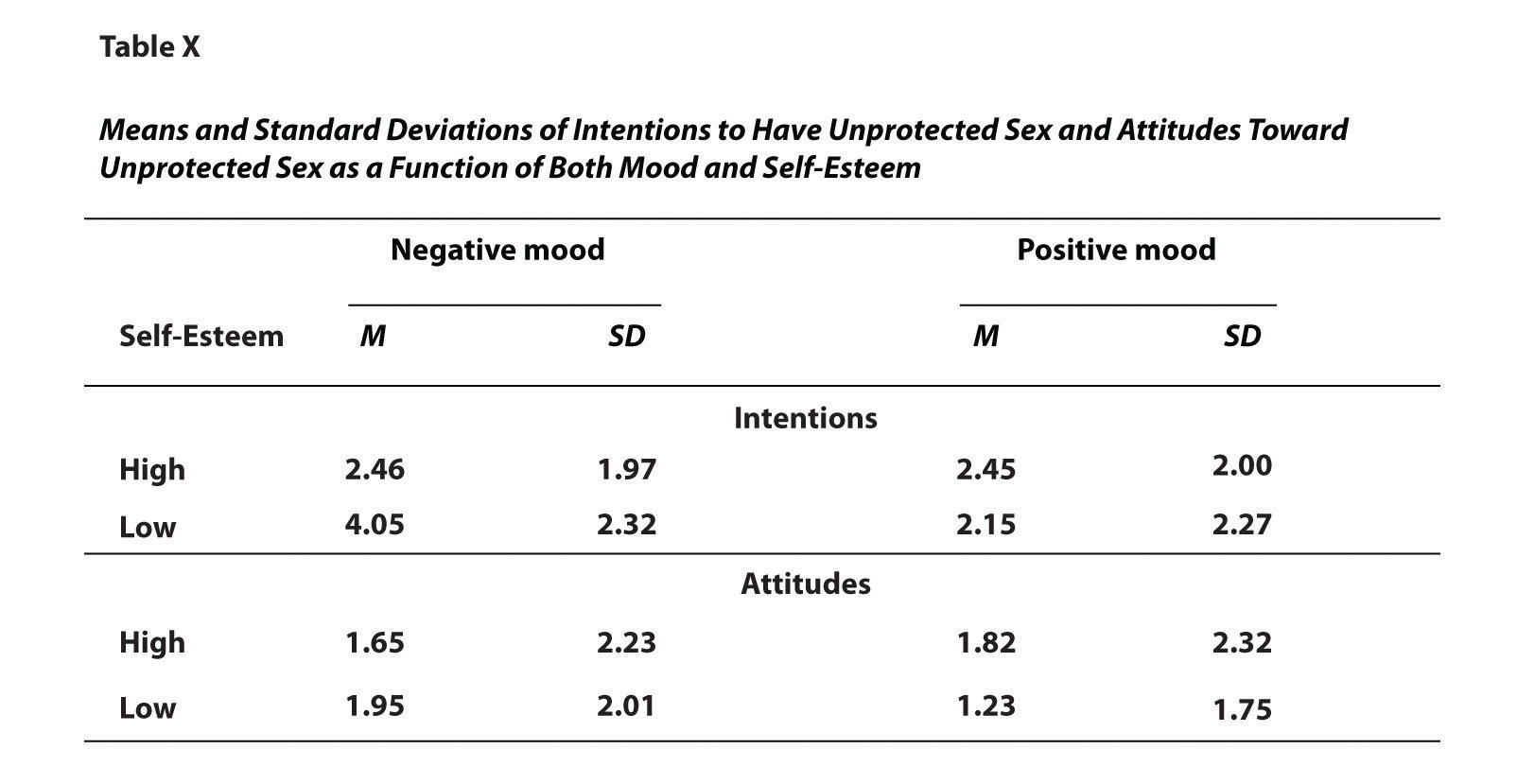

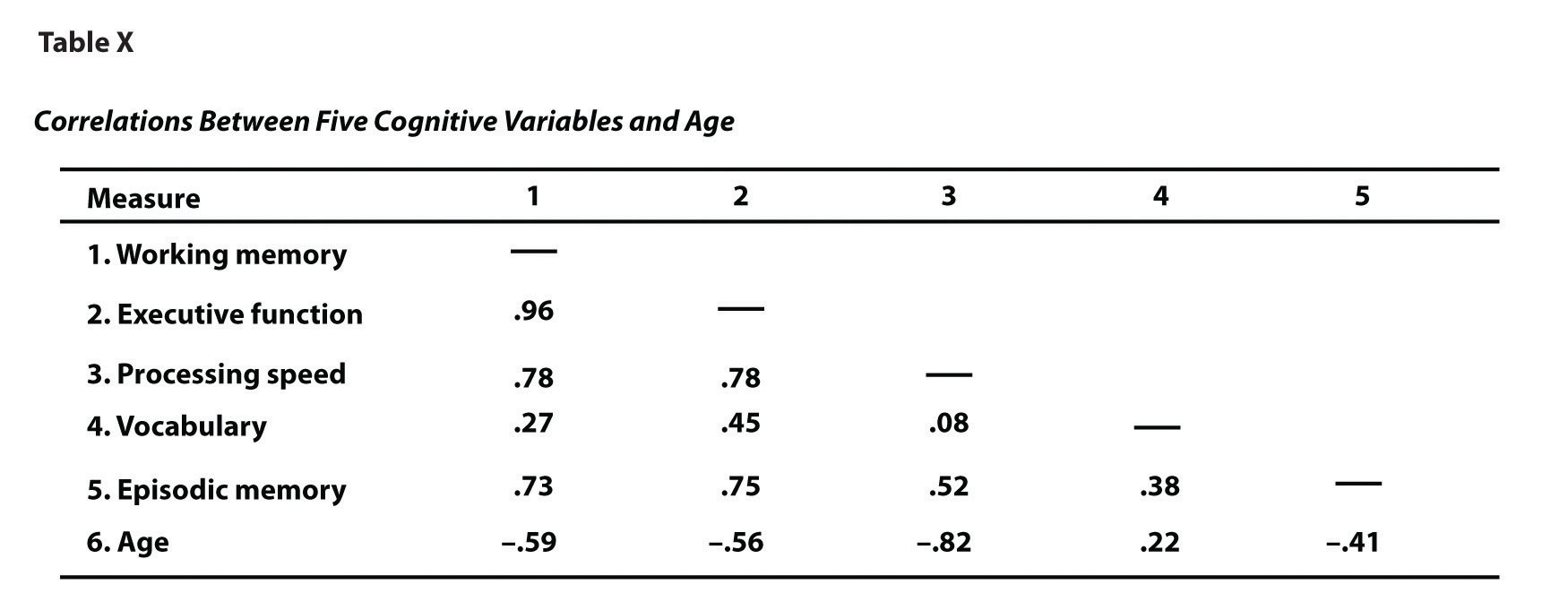

After any appendices come tables and then figures. Tables and figures are both used to present results. Figures can also be used to illustrate theories (e.g., in the form of a flowchart), display stimuli, outline procedures, and present many other kinds of information. Each table and figure appears on its own page. Tables are numbered in the order that they are first mentioned in the text (“Table 1,” “Table 2,” and so on). Figures are numbered the same way (“Figure 1,” “Figure 2,” and so on). A brief explanatory title, with the important words capitalized, appears above each table. Each figure is given a brief explanatory caption, where (aside from proper nouns or names) only the first word of each sentence is capitalized. More details on preparing APA-style tables and figures are presented later in the book.

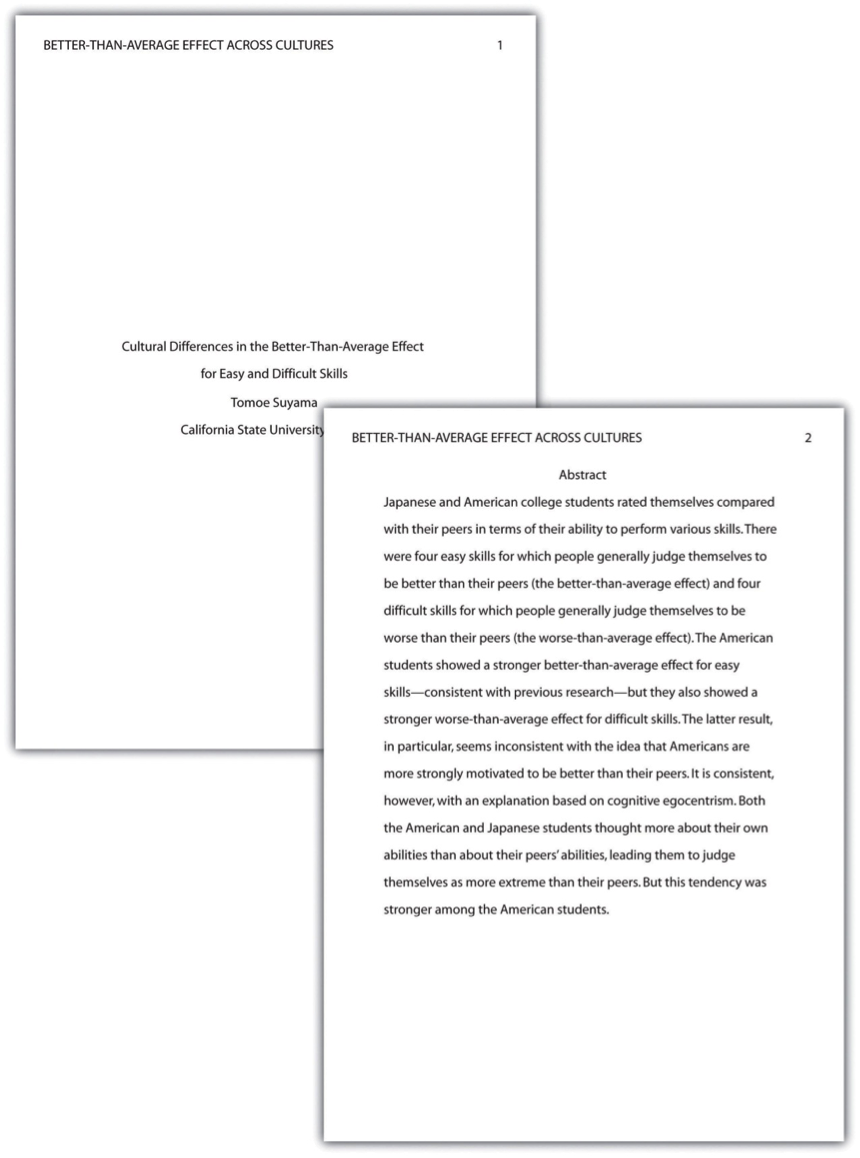

Sample APA-Style Research Report

Figures 11.2, 11.3, 11.4, and 11.5 show some sample pages from an APA-style empirical research report originally written by undergraduate student Tomoe Suyama at California State University, Fresno. The main purpose of these figures is to illustrate the basic organization and formatting of an APA-style empirical research report, although many high-level and low-level style conventions can be seen here too.

Key Takeaways

- An APA-style empirical research report consists of several standard sections. The main ones are the abstract, introduction, method, results, discussion, and references.

- The introduction consists of an opening that presents the research question, a literature review that describes previous research on the topic, and a closing that restates the research question and comments on the method. The literature review constitutes an argument for why the current study is worth doing.

- The method section describes the method in enough detail that another researcher could replicate the study. At a minimum, it consists of a participants subsection and a design and procedure subsection.

- The results section describes the results in an organized fashion. Each primary result is presented in terms of statistical results but also explained in words.

- The discussion typically summarizes the study, discusses theoretical and practical implications and limitations of the study, and offers suggestions for further research.

- Practice: Look through an issue of a general interest professional journal (e.g., Psychological Science). Read the opening of the first five articles and rate the effectiveness of each one from 1 (very ineffective) to 5 (very effective). Write a sentence or two explaining each rating.

- Practice: Find a recent article in a professional journal and identify where the opening, literature review, and closing of the introduction begin and end.

- Practice: Find a recent article in a professional journal and highlight in a different colour each of the following elements in the discussion: summary, theoretical implications, practical implications, limitations, and suggestions for future research.

Long Descriptions

Figure 11.1 long description: Table showing three ways of organizing an APA-style method section.

In the simple method, there are two subheadings: “Participants” (which might begin “The participants were…”) and “Design and procedure” (which might begin “There were three conditions…”).

In the typical method, there are three subheadings: “Participants” (“The participants were…”), “Design” (“There were three conditions…”), and “Procedure” (“Participants viewed each stimulus on the computer screen…”).

In the complex method, there are four subheadings: “Participants” (“The participants were…”), “Materials” (“The stimuli were…”), “Design” (“There were three conditions…”), and “Procedure” (“Participants viewed each stimulus on the computer screen…”). [Return to Figure 11.1]

- Bem, D. J. (2003). Writing the empirical journal article. In J. M. Darley, M. P. Zanna, & H. R. Roediger III (Eds.), The compleat academic: A practical guide for the beginning social scientist (2nd ed.). Washington, DC: American Psychological Association.

- Darley, J. M., & Latané, B. (1968). Bystander intervention in emergencies: Diffusion of responsibility. Journal of Personality and Social Psychology, 4, 377–383.

Empirical research report: A type of research article that describes one or more new empirical studies conducted by the authors.

Title page: The page at the beginning of an APA-style research report containing the title of the article, the authors’ names, and their institutional affiliation.

Abstract: A summary of a research study.

Introduction: The section beginning on the third page of a manuscript, containing the research question, the literature review, and comments about how to answer the research question.

Opening: An introduction to the research question and an explanation of why this question is interesting.

Literature review: A description of relevant previous research on the topic being discussed and an argument for why the research question is worth addressing.

Closing: The end of the introduction, where the research question is reiterated and the method is commented upon.

Method section: The section of a research report where the method used to conduct the study is described.

Results section: The section of a research report where the main results of the study, including the results from statistical analyses, are presented.

Discussion: The section of a research report that summarizes the study's results and interprets them by referring back to the study's theoretical background.

Appendix: The part of a research report that contains supplemental material.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Psychological Report Writing

March 8, 2021 - paper 2 psychology in context | research methods.

Through using this website, you have learned about, referred to, and evaluated research studies. These research studies are generally presented to the scientific community as a journal article. Most journal articles follow a standard format. This is similar to the way you may have written up experiments in other sciences.

(2) Introduction:

This tells everyone why the study is being carried out and the commentary should form a ‘funnel’ of information. First, there is broad coverage of all the background research with appropriate evaluative comments: “Asch (1951) found…but Crutchfield (1955) showed…” Once the general research has been covered, the focus becomes much narrower finishing with the main researcher/research area you are hoping to support/refute. This then leads to the aims and hypothesis/hypotheses (i.e. experimental and null hypotheses) being stated.

(1) Design:

(4) Results:

All studies have flaws, so anything that went wrong or the limitations of the study are discussed together with suggestions for how it could be improved if it were to be repeated. Suggestions for alternative studies and future research are also explored. The discussion ends with a paragraph summing up what was found and assessing the implications of the study and any conclusions that can be drawn from it.

Look through your report and include a reference for every researcher mentioned. A reference should include: the name of the researcher, the date the research was published, the title of the book/journal, where the book was published (or what journal the article was published in), the edition number of the book/volume of the journal article, and the page numbers used.

Exam Tip: In the exam, questions on report writing could ask you to define what information you would find in each section of the report. On the old specification, questions linked to report writing also included writing up a method section, writing up a results section, and designing a piece of research.

In addition, in the exam, you may be asked to write a consent form, a debriefing sheet, or a set of standardised instructions.

(2) Aim of the study?

(3) Procedure – What will I be asked to do if I take part?

(5) Do I Have to take part?

Explain to the participant that they don’t have to take part in the study, explain about their right to withdraw.

Have you received enough information about the study? YES/NO

Do you consent for your data to be used in this study and retained for use in other studies? YES/NO

When writing a set of standardised instructions, it is essential that you include:

5. Explain to the participants what will happen in the study, what they will be expected to do (step by step), how long the task/specific parts of the task will take to complete.

8. Check that the participants are still happy to proceed with the study.

This is the form that you should complete with your participants at the end of the study to ensure that they are happy with the way the study has been conducted, to explain to them the true nature of the study, to confirm consent and to give them the researcher’s contact details in case they want to ask any further questions.

(2) Participants:


Psychological Science

Prospective submitters of manuscripts are encouraged to read Editor-in-Chief Simine Vazire’s editorial, as well as the editorial by Tom Hardwicke, Senior Editor for Statistics, Transparency, & Rigor, and Simine Vazire.

Psychological Science, the flagship journal of the Association for Psychological Science, is the leading peer-reviewed journal publishing empirical research spanning the entire spectrum of the science of psychology. The journal publishes high-quality research articles of general interest and on important topics spanning the entire spectrum of the science of psychology. Replication studies are welcome and evaluated on the same criteria as novel studies. Articles are published in OnlineFirst before they are assigned to an issue. This journal is a member of the Committee on Publication Ethics (COPE).

Quick Facts

| Editor-in-Chief | Simine Vazire |

| ISSN | Print: 0956-7976; Online: 1467-9280 |

| Frequency | 12 issues per year |

Read the February 2022 editorial by former Editor-in-Chief Patricia Bauer, “Psychological Science Stepping Up a Level.”

Read the January 2020 editorial by former Editor Patricia Bauer on her vision for the future of Psychological Science.

Read the December 2015 editorial on replication by former Editor Steve Lindsay, as well as his April 2017 editorial on sharing data and materials during the review process.

Watch Geoff Cumming’s video workshop on the new statistics.


Featured research from psychological science.

Teens Who View Their Homes as More Chaotic Than Their Siblings Have Poorer Mental Health in Adulthood

Many parents ponder why one of their children seems more emotionally troubled than the others. A new study in the United Kingdom reveals a possible basis for those differences.

Rewatching Videos of People Shifts How We Judge Them, Study Indicates

Rewatching recorded behavior, whether on a Tik-Tok video or police body-camera footage, makes even the most spontaneous actions seem more rehearsed or deliberate, new research shows.

Loneliness Bookends Adulthood, Study Shows

Loneliness in adulthood follows a U-shaped pattern: It’s higher in younger and older adulthood, and lowest during middle adulthood, according to new research that examined nine longitudinal studies from around the world.



Psychology articles from across Nature Portfolio

Psychology is a scientific discipline that focuses on understanding mental functions and the behaviour of individuals and groups.

The neuroscience of turning heads

Measuring neural activity in moving humans has been a longstanding challenge in neuroscience, which limits what we know about our navigational neural codes. Leveraging mobile EEG and motion capture, Griffiths et al. overcome this challenge to elucidate neural representations of direction and highlight key cross-species similarities.

- Sergio A. Pecirno

- Alexandra T. Keinath

Child brains respond to climate change

Maternal exposure to ambient heat during pregnancy has been shown to increase the risk for several adverse birth outcomes. Now research reveals that variations of ambient temperature during pregnancy and childhood could have a long-term impact on a child’s brain development.

- Johanna Lepeule

Boosting children’s cognitive control does not result in behavioral or neural changes

Cognitive control is crucial for present and future success and therefore is a frequent target of interventions. This study showed that training cognitive control in a large sample of 6–13-year-old children did not lead to any behavioral or neural changes, either immediately or 1 year after training.

Related Subjects

- Human behaviour

Latest Research and Reviews

Distilling the concept of authenticity

Authenticity is promoted by cultural norms, institutions and folk wisdom, but there is disagreement about what exactly authenticity is. In this Review, Sedikides and Schlegel describe major conceptualizations of the subjective experience of authenticity and discuss its relevance for psychological functioning.

- Constantine Sedikides

- Rebecca J. Schlegel

Follow-up of telemedicine mental health interventions amid COVID-19 pandemic

- Carlos Roncero

- Sara Díaz-Trejo

- Armando González-Sánchez

Sleep quality and mental disorder symptoms among correctional workers in Ontario, Canada

- Rosemary Ricciardelli

- Tamara L. Taillieu

- R. Nicholas Carleton

Humans flexibly use visual priors to optimize their haptic exploratory behavior

- Michaela Jeschke

- Aaron C. Zoeller

- Knut Drewing

Reliable, rapid, and remote measurement of metacognitive bias

- Celine A. Fox

- Abbie McDonogh

- Claire M. Gillan

Exploring the dynamics of prefrontal cortex in the interaction between orienteering experience and cognitive performance by fNIRS

News and Comment

Premature call for implementation of Tetris in clinical practice: a commentary on Deforges et al. (2023)

- Joar Øveraas Halvorsen

- Ineke Wessel

- Ioana A. Cristea

Exploring brain representations through the lens of similarity structures

- Stefania Mattioni

Puerto Rico’s energy transition

- Silvana Lakeman

Causal prominence for neuroscience

- Philip Tseng

Ethical principles and practices for using naturally occurring data

Naturally occurring data are not always covered by today’s ethical regulations. However, scientists can adapt the foundational ethical principles of research using human subjects to meet their obligations to science and society.

- Alexandra Paxton

The shortage of child psychiatrists in mainland China

This Comment was conducted to clarify the current number of child psychiatrists in mainland China, to analyze the reasons for the shortages and to provide constructive suggestions for solving the current shortage.

- Zhongliang Jiang




9 - Writing a Psychological Report Using Evidence-Based Psychological Assessment Methods

from Part I - General Issues in Clinical Assessment and Diagnosis

Published online by Cambridge University Press: 06 December 2019

Psychological assessment and report writing are arguably two of the more important tasks of clinical psychologists. The overall purpose of this chapter is to provide some recommendations and guidelines on how to write a psychological report using evidence-based assessment methods. Principles on psychological report writing derived from seminal papers in the field of psychological assessment were adapted and used as an organizing tool to create a template on how to write all varieties of psychological reports that incorporate evidence-based assessment methods. Report writers who share similar approaches to evidence-based assessment methods may find this template helpful when formatting their psychological reports.


- Writing a Psychological Report Using Evidence-Based Psychological Assessment Methods

- By R. Michael Bagby, Shauna Solomon-Krakus

- Edited by Martin Sellbom, University of Otago, New Zealand, and Julie A. Suhr, Ohio University

- Book: The Cambridge Handbook of Clinical Assessment and Diagnosis

- Online publication: 06 December 2019

- Chapter DOI: https://doi.org/10.1017/9781108235433.009


Purdue Online Writing Lab Purdue OWL® College of Liberal Arts

Writing in Psychology Overview

Welcome to the Purdue OWL

This page is brought to you by the OWL at Purdue University. When printing this page, you must include the entire legal notice.

Copyright ©1995-2018 by The Writing Lab & The OWL at Purdue and Purdue University. All rights reserved. This material may not be published, reproduced, broadcast, rewritten, or redistributed without permission. Use of this site constitutes acceptance of our terms and conditions of fair use.

Psychology is based on the study of human behaviors. As a social science, experimental psychology uses empirical inquiry to help understand human behavior. According to Thrass and Sanford (2000), psychology writing has three elements: describing, explaining, and understanding concepts from a standpoint of empirical investigation.

Discipline-specific writing, such as writing done in psychology, can be similar to other types of writing you have done in its use of the writing process, writing techniques, and locating and integrating sources. However, the field of psychology also has its own rules and expectations for writing; not everything that you have learned about writing in the past works for the field of psychology.

Writing in psychology includes the following principles:

- Using plain language: Psychology writing is formal scientific writing that is plain and straightforward. Literary devices such as metaphors, alliteration, or anecdotes are not appropriate for writing in psychology.

- Conciseness and clarity of language: The field of psychology stresses clear, concise prose. You should be able to make connections between empirical evidence, theories, and conclusions. See our OWL handout on conciseness for more information.

- Evidence-based reasoning: Psychology bases its arguments on empirical evidence. Personal examples, narratives, or opinions are not appropriate for psychology.

- Use of APA format: Psychologists use the American Psychological Association (APA) format for publications. While most student writing follows this format, some instructors may provide you with specific formatting requirements that differ from APA format.

Types of writing

Most major writing assignments in psychology courses consist of one of the following two types.

Experimental reports: Experimental reports detail the results of experimental research projects and are most often written in experimental psychology (lab) courses. Experimental reports are write-ups of your results after you have conducted research with participants. This handout provides a description of how to write an experimental report.

Critical analyses or reviews of research: Often called "term papers," a critical analysis of research narrowly examines and draws conclusions from existing literature on a topic of interest. These are frequently written in upper-division survey courses. Our research paper handouts provide a detailed overview of how to write these types of research papers.


Reporting Standards for Research in Psychology

In anticipation of the impending revision of the Publication Manual of the American Psychological Association, APA’s Publications and Communications Board formed the Working Group on Journal Article Reporting Standards (JARS) and charged it to provide the board with background and recommendations on information that should be included in manuscripts submitted to APA journals that report (a) new data collections and (b) meta-analyses. The JARS Group reviewed efforts in related fields to develop standards and sought input from other knowledgeable groups. The resulting recommendations contain (a) standards for all journal articles, (b) more specific standards for reports of studies with experimental manipulations or evaluations of interventions using research designs involving random or nonrandom assignment, and (c) standards for articles reporting meta-analyses. The JARS Group anticipated that standards for reporting other research designs (e.g., observational studies, longitudinal studies) would emerge over time. This report also (a) examines societal developments that have encouraged researchers to provide more details when reporting their studies, (b) notes important differences between requirements, standards, and recommendations for reporting, and (c) examines benefits and obstacles to the development and implementation of reporting standards.

The American Psychological Association (APA) Working Group on Journal Article Reporting Standards (the JARS Group) arose out of a request for information from the APA Publications and Communications Board. The Publications and Communications Board had previously allowed any APA journal editor to require that a submission labeled by an author as describing a randomized clinical trial conform to the CONSORT (Consolidated Standards of Reporting Trials) reporting guidelines (Altman et al., 2001; Moher, Schulz, & Altman, 2001). In this context, and recognizing that APA was about to initiate a revision of its Publication Manual (American Psychological Association, 2001), the Publications and Communications Board formed the JARS Group to provide itself with input on how the newly developed reporting standards related to the material currently in its Publication Manual and to propose some related recommendations for the new edition.

The JARS Group was formed of five current and previous editors of APA journals. It divided its work into six stages:

- establishing the need for more well-defined reporting standards,

- gathering the standards developed by other related groups and professional organizations relating to both new data collections and meta-analyses,

- drafting a set of standards for APA journals,

- sharing the drafted standards with cognizant others,

- refining the standards yet again, and

- addressing additional and unresolved issues.

This article is the report of the JARS Group’s findings and recommendations. It was approved by the Publications and Communications Board in the summer of 2007 and again in the spring of 2008 and was transmitted to the task force charged with revising the Publication Manual for consideration as it did its work. The content of the report roughly follows the stages of the group’s work. Those wishing to move directly to the reporting standards can go to the sections titled Information for Inclusion in Manuscripts That Report New Data Collections and Information for Inclusion in Manuscripts That Report Meta-Analyses.

Why Are More Well-Defined Reporting Standards Needed?

The JARS Group members began their work by sharing with each other documents they knew of that related to reporting standards. The group found that the past decade had witnessed two developments in the social, behavioral, and medical sciences that encouraged researchers to provide more details when they reported their investigations. The first impetus for more detail came from the worlds of policy and practice. In these realms, the call for use of “evidence-based” decision making had placed a new emphasis on the importance of understanding how research was conducted and what it found. For example, in 2006, the APA Presidential Task Force on Evidence-Based Practice defined the term evidence-based practice to mean “the integration of the best available research with clinical expertise” (p. 273; italics added). The report went on to say that “evidence-based practice requires that psychologists recognize the strengths and limitations of evidence obtained from different types of research” (p. 275).

In medicine, the movement toward evidence-based practice is now so pervasive (see Sackett, Rosenberg, Muir Grey, Hayes & Richardson, 1996) that there exists an international consortium of researchers (the Cochrane Collaboration; http://www.cochrane.org/index.htm) producing thousands of papers examining the cumulative evidence on everything from public health initiatives to surgical procedures. Another example of accountability in medicine, and the importance of relating medical practice to solid medical science, comes from the member journals of the International Committee of Medical Journal Editors (2007), who adopted a policy requiring registration of all clinical trials in a public trials registry as a condition of consideration for publication.

In education, the No Child Left Behind Act of 2001 (2002) required that the policies and practices adopted by schools and school districts be “scientifically based,” a term that appears over 100 times in the legislation. In public policy, a consortium similar to that in medicine now exists (the Campbell Collaboration; http://www.campbellcollaboration.org), as do organizations meant to promote government policymaking based on rigorous evidence of program effectiveness (e.g., the Coalition for Evidence-Based Policy; http://www.excelgov.org/index.php?keyword=a432fbc34d71c7). Each of these efforts operates with a definition of what constitutes sound scientific evidence. The developers of previous reporting standards argued that new transparency in reporting is needed so that judgments can be made by users of evidence about the appropriate inferences and applications derivable from research findings.

The second impetus for more detail in research reporting has come from within the social and behavioral science disciplines. As evidence about specific hypotheses and theories accumulates, greater reliance is being placed on syntheses of research, especially meta-analyses (Cooper, 2009; Cooper, Hedges, & Valentine, 2009), to tell us what we know about the workings of the mind and the laws of behavior. Different findings relating to a specific question examined with various research designs are now mined by second users of the data for clues to the mediation of basic psychological, behavioral, and social processes. These clues emerge by clustering studies based on distinctions in their methods and then comparing their results. This synthesis-based evidence is then used to guide the next generation of problems and hypotheses studied in new data collections. Without complete reporting of methods and results, the utility of studies for purposes of research synthesis and meta-analysis is diminished.

The JARS Group viewed both of these stimulants to action as positive developments for the psychological sciences. The first provides an unprecedented opportunity for psychological research to play an important role in public and health policy. The second promises a sounder evidence base for explanations of psychological phenomena and a next generation of research that is more focused on resolving critical issues.

The Current State of the Art

Next, the JARS Group collected efforts of other social and health organizations that had recently developed reporting standards. Three recent efforts quickly came to the group’s attention. Two efforts had been undertaken in the medical and health sciences to improve the quality of reporting of primary studies and to make reports more useful for the next users of the data. The first effort is called CONSORT (Consolidated Standards of Reporting Trials; Altman et al., 2001; Moher et al., 2001). The CONSORT standards were developed by an ad hoc group primarily composed of biostatisticians and medical researchers. CONSORT relates to the reporting of studies that carried out random assignment of participants to conditions. It comprises a checklist of study characteristics that should be included in research reports and a flow diagram that provides readers with a description of the number of participants as they progress through the study (and by implication the number who drop out) from the time they are deemed eligible for inclusion until the end of the investigation. These guidelines are now required by the top-tier medical journals and many other biomedical journals. Some APA journals also use the CONSORT guidelines.

The second effort is called TREND (Transparent Reporting of Evaluations with Nonexperimental Designs; Des Jarlais, Lyles, Crepaz, & the TREND Group, 2004). TREND was developed under the initiative of the Centers for Disease Control, which brought together a group of editors of journals related to public health, including several journals in psychology. TREND contains a 22-item checklist, similar to CONSORT, but with a specific focus on reporting standards for studies that use quasi-experimental designs, that is, group comparisons in which the groups were established using procedures other than random assignment to place participants in conditions.
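The arithmetic behind a participant flow diagram can be sketched in a few lines: given the number of participants remaining at each stage, the dropouts between stages follow by subtraction. The stage names and counts below are invented for illustration and are not taken from CONSORT or from any study.

```python
def attrition(stage_counts):
    """Given (stage, n) pairs in study order, return the number of
    participants lost between each pair of consecutive stages."""
    losses = []
    for (prev_name, prev_n), (name, n) in zip(stage_counts, stage_counts[1:]):
        if n > prev_n:
            # A later stage cannot retain more participants than the one before it.
            raise ValueError(f"count increases from {prev_name!r} to {name!r}")
        losses.append((f"{prev_name} -> {name}", prev_n - n))
    return losses


# Hypothetical counts, from eligibility assessment to final analysis.
flow = [
    ("assessed for eligibility", 120),
    ("assigned to conditions", 100),
    ("completed follow-up", 88),
    ("analysed", 85),
]

for transition, lost in attrition(flow):
    print(f"{transition}: {lost} lost")
```

Reporting the count at every stage, rather than only the final sample size, is what lets a reader recover these dropout figures.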

In the social sciences, the American Educational Research Association (2006) recently published “Standards for Reporting on Empirical Social Science Research in AERA Publications.” These standards encompass a broad range of research designs, including both quantitative and qualitative approaches, and are divided into eight general areas, including problem formulation; design and logic of the study; sources of evidence; measurement and classification; analysis and interpretation; generalization; ethics in reporting; and title, abstract, and headings. They contain about two dozen general prescriptions for the reporting of studies as well as separate prescriptions for quantitative and qualitative studies.

Relation to the APA Publication Manual

The JARS Group also examined previous editions of the APA Publication Manual and discovered that for the last half century it has played an important role in the establishment of reporting standards. The first edition of the APA Publication Manual, published in 1952 as a supplement to Psychological Bulletin (American Psychological Association, Council of Editors, 1952), was 61 pages long, printed on 6-in. by 9-in. paper, and cost $1. The principal divisions of manuscripts were titled Problem, Method, Results, Discussion, and Summary (now the Abstract). According to the first Publication Manual, the section titled Problem was to include the questions asked and the reasons for asking them. When experiments were theory-driven, the theoretical propositions that generated the hypotheses were to be given, along with the logic of the derivation and a summary of the relevant arguments. The method was to be “described in enough detail to permit the reader to repeat the experiment unless portions of it have been described in other reports which can be cited” (p. 9). This section was to describe the design and the logic of relating the empirical data to theoretical propositions, the subjects, sampling and control devices, techniques of measurement, and any apparatus used. Interestingly, the 1952 Manual also stated, “Sometimes space limitations dictate that the method be described synoptically in a journal, and a more detailed description be given in auxiliary publication” (p. 25). The Results section was to include enough data to justify the conclusions, with special attention given to tests of statistical significance and the logic of inference and generalization. The Discussion section was to point out limitations of the conclusions, relate them to other findings and widely accepted points of view, and give implications for theory or practice. Negative or unexpected results were not to be accompanied by extended discussions; the editors wrote, “Long ‘alibis,’ unsupported by evidence or sound theory, add nothing to the usefulness of the report” (p. 9). Also, authors were encouraged to use good grammar and to avoid jargon, as “some writing in psychology gives the impression that long words and obscure expressions are regarded as evidence of scientific status” (pp. 25–26).

Through the following editions, the recommendations became more detailed and specific. Of special note was the Report of the Task Force on Statistical Inference (Wilkinson & the Task Force on Statistical Inference, 1999), which presented guidelines for statistical reporting in APA journals that informed the content of the 4th edition of the Publication Manual. Although the 5th edition of the Manual does not contain a clearly delineated set of reporting standards, this does not mean the Manual is devoid of standards. Instead, recommendations, standards, and requirements for reporting are embedded in various sections of the text. Most notably, statements regarding the method and results that should be included in a research report (as well as how this information should be reported) appear in the Manual’s description of the parts of a manuscript (pp. 10–29). For example, when discussing who participated in a study, the Manual states, “When humans participated as the subjects of the study, report the procedures for selecting and assigning them and the agreements and payments made” (p. 18). With regard to the Results section, the Manual states, “Mention all relevant results, including those that run counter to the hypothesis” (p. 20), and it provides descriptions of “sufficient statistics” (p. 23) that need to be reported.

Thus, although reporting standards and requirements are not highlighted in the most recent edition of the Manual, they appear nonetheless. In that context, then, the proposals offered by the JARS Group can be viewed not as breaking new ground for psychological research but rather as a systematization, clarification, and—to a lesser extent than might at first appear—an expansion of standards that already exist. The intended contribution of the current effort, then, becomes as much one of increased emphasis as increased content.

Drafting, Vetting, and Refinement of the JARS

Next, the JARS Group canvassed the APA Council of Editors to ascertain the degree to which the CONSORT and TREND standards were already in use by APA journals and to make us aware of other reporting standards. Also, the JARS Group requested from the APA Publications Office data it had on the use of auxiliary websites by authors of APA journal articles. With this information in hand, the JARS Group compared the CONSORT, TREND, and AERA standards to one another and developed a combined list of nonredundant elements contained in any or all of the three sets of standards. The JARS Group then examined the combined list, rewrote some items for clarity and ease of comprehension by an audience of psychologists and other social and behavioral scientists, and added a few suggestions of its own.

This combined list was then shared with the APA Council of Editors, the APA Publication Manual Revision Task Force, and the Publications and Communications Board. These groups were requested to react to it. After receiving these reactions and anonymous reactions from reviewers chosen by the American Psychologist, the JARS Group revised its report and arrived at the list of recommendations contained in Tables 1, 2, and 3 and Figure 1. The report was then approved again by the Publications and Communications Board.

Note. This flowchart is an adaptation of the flowchart offered by the CONSORT Group (Altman et al., 2001; Moher, Schulz, & Altman, 2001). Journals publishing the original CONSORT flowchart have waived copyright protection.

Journal Article Reporting Standards (JARS): Information Recommended for Inclusion in Manuscripts That Report New Data Collections Regardless of Research Design

| Paper section and topic | Description |

|---|---|

| Title and title page | Identify variables and theoretical issues under investigation and the relationship between them Author note contains acknowledgment of special circumstances: Use of data also appearing in previous publications, dissertations, or conference papers Sources of funding or other support Relationships that may be perceived as conflicts of interest |

| Abstract | Problem under investigation Participants or subjects; specifying pertinent characteristics; in animal research, include genus and species Study method, including: Sample size Any apparatus used Outcome measures Data-gathering procedures Research design (e.g., experiment, observational study) Findings, including effect sizes and confidence intervals and/or statistical significance levels Conclusions and the implications or applications |

| Introduction | The importance of the problem: Theoretical or practical implications Review of relevant scholarship: Relation to previous work If other aspects of this study have been reported on previously, how the current report differs from these earlier reports Specific hypotheses and objectives: Theories or other means used to derive hypotheses Primary and secondary hypotheses, other planned analyses How hypotheses and research design relate to one another |

| Method | |

| Participant characteristics | Eligibility and exclusion criteria, including any restrictions based on demographic characteristics Major demographic characteristics as well as important topic-specific characteristics (e.g., achievement level in studies of educational interventions), or in the case of animal research, genus and species |

| Sampling procedures | Procedures for selecting participants, including: The sampling method if a systematic sampling plan was implemented Percentage of sample approached that participated Self-selection (either by individuals or units, such as schools or clinics) Settings and locations where data were collected Agreements and payments made to participants Institutional review board agreements, ethical standards met, safety monitoring |

| Sample size, power, and precision | Intended sample size Actual sample size, if different from intended sample size How sample size was determined: Power analysis, or methods used to determine precision of parameter estimates Explanation of any interim analyses and stopping rules |

| Measures and covariates | Definitions of all primary and secondary measures and covariates: Include measures collected but not included in this report Methods used to collect data Methods used to enhance the quality of measurements: Training and reliability of data collectors Use of multiple observations Information on validated or ad hoc instruments created for individual studies, for example, psychometric and biometric properties |

| Research design | Whether conditions were manipulated or naturally observed Type of research design; provided in Table 3 are modules for: Randomized experiments (Module A1) Quasi-experiments (Module A2) Other designs would have different reporting needs associated with them |

| Results | |

| Participant flow | Total number of participants Flow of participants through each stage of the study |

| Recruitment | Dates defining the periods of recruitment and repeated measurements or follow-up |

| Statistics and data analysis | Information concerning problems with statistical assumptions and/or data distributions that could affect the validity of findings Missing data: Frequency or percentages of missing data Empirical evidence and/or theoretical arguments for the causes of data that are missing, for example, missing completely at random (MCAR), missing at random (MAR), or missing not at random (MNAR) Methods for addressing missing data, if used For each primary and secondary outcome and for each subgroup, a summary of: Cases deleted from each analysis Subgroup or cell sample sizes, cell means, standard deviations, or other estimates of precision, and other descriptive statistics Effect sizes and confidence intervals For inferential statistics (null hypothesis significance testing), information about: The a priori Type I error rate adopted Direction, magnitude, degrees of freedom, and exact p level, even if no significant effect is reported For multivariable analytic systems (e.g., multivariate analyses of variance, regression analyses, structural equation modeling analyses, and hierarchical linear modeling) also include the associated variance–covariance (or correlation) matrix or matrices Estimation problems (e.g., failure to converge, bad solution spaces), anomalous data points Statistical software program, if specialized procedures were used Report any other analyses performed, including adjusted analyses, indicating those that were prespecified and those that were exploratory (though not necessarily in level of detail of primary analyses) |

| Ancillary analyses | Discussion of implications of ancillary analyses for statistical error rates |

| Discussion | Statement of support or nonsupport for all original hypotheses: Distinguished by primary and secondary hypotheses Post hoc explanations Similarities and differences between results and work of others Interpretation of the results, taking into account: Sources of potential bias and other threats to internal validity Imprecision of measures The overall number of tests or overlap among tests, and Other limitations or weaknesses of the study Generalizability (external validity) of the findings, taking into account: The target population Other contextual issues Discussion of implications for future research, program, or policy |
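The Results entries above ask for cell sample sizes, means, standard deviations, effect sizes, and confidence intervals. As a rough illustration of what that can look like in practice, the sketch below computes Cohen's d for two independent groups with an approximate 95% confidence interval. The function name `cohens_d_with_ci`, the scores, and the choice of a large-sample normal approximation for the interval are all assumptions made for this example; JARS does not prescribe any particular effect size or interval method.

```python
import math
import statistics


def cohens_d_with_ci(group_a, group_b, z=1.96):
    """Return (d, ci_low, ci_high) for two independent groups.

    Assumes a pooled standard deviation and a large-sample normal
    approximation for the interval (z = 1.96 for roughly 95% coverage).
    """
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)

    # Pooled standard deviation across the two cells
    pooled_sd = math.sqrt(((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2))
    d = (mean_a - mean_b) / pooled_sd

    # Approximate standard error of d
    se = math.sqrt((n_a + n_b) / (n_a * n_b) + d**2 / (2 * (n_a + n_b)))
    return d, d - z * se, d + z * se


# Hypothetical scores, invented purely for illustration
treatment = [12, 15, 14, 10, 13, 16, 11, 14]
control = [9, 11, 10, 8, 12, 10, 9, 11]

d, low, high = cohens_d_with_ci(treatment, control)
print(f"cell sizes: n = {len(treatment)} and n = {len(control)}")
print(f"d = {d:.2f}, 95% CI [{low:.2f}, {high:.2f}]")
```

With small samples such as these, the normal approximation is crude; a report would normally name the interval method actually used.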

Module A: Reporting Standards for Studies With an Experimental Manipulation or Intervention (in Addition to Material Presented in Table 1)

| Paper section and topic | Description |

|---|---|

| Method | |

| Experimental manipulations or interventions | Details of the interventions or experimental manipulations intended for each study condition, including control groups, and how and when manipulations or interventions were actually administered, specifically including: Content of the interventions or specific experimental manipulations Summary or paraphrasing of instructions, unless they are unusual or compose the experimental manipulation, in which case they may be presented verbatim Method of intervention or manipulation delivery Description of apparatus and materials used and their function in the experiment Specialized equipment by model and supplier Deliverer: who delivered the manipulations or interventions Level of professional training Level of training in specific interventions or manipulations Number of deliverers and, in the case of interventions, the M, SD, and range of number of individuals/units treated by each Setting: where the manipulations or interventions occurred Exposure quantity and duration: how many sessions, episodes, or events were intended to be delivered, how long they were intended to last Time span: how long it took to deliver the intervention or manipulation to each unit Activities to increase compliance or adherence (e.g., incentives) Use of language other than English and the translation method |

| Units of delivery and analysis | Unit of delivery: How participants were grouped during delivery Description of the smallest unit that was analyzed (and in the case of experiments, that was randomly assigned to conditions) to assess manipulation or intervention effects (e.g., individuals, work groups, classes) If the unit of analysis differed from the unit of delivery, description of the analytical method used to account for this (e.g., adjusting the standard error estimates by the design effect or using multilevel analysis) |

| Results | |

| Participant flow | Total number of groups (if intervention was administered at the group level) and the number of participants assigned to each group: Number of participants who did not complete the experiment or crossed over to other conditions, explain why Number of participants used in primary analyses Flow of participants through each stage of the study (see Figure 1) |

| Treatment fidelity | Evidence on whether the treatment was delivered as intended |

| Baseline data | Baseline demographic and clinical characteristics of each group |

| Statistics and data analysis | Whether the analysis was by intent-to-treat, complier average causal effect, other or multiple ways |

| Adverse events and side effects | All important adverse events or side effects in each intervention group |

| Discussion | Discussion of results taking into account the mechanism by which the manipulation or intervention was intended to work (causal pathways) or alternative mechanisms If an intervention is involved, discussion of the success of and barriers to implementing the intervention, fidelity of implementation Generalizability (external validity) of the findings, taking into account: The characteristics of the intervention How, what outcomes were measured Length of follow-up Incentives Compliance rates The “clinical or practical significance” of outcomes and the basis for these interpretations |
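The "Units of delivery and analysis" entry above mentions adjusting standard error estimates by the design effect when the unit of analysis differs from the unit of delivery. A minimal sketch of that adjustment follows, assuming equal-sized clusters and the common formula design effect = 1 + (m − 1) × ICC, where m is the cluster size and ICC is the intraclass correlation. The function names and the numbers are hypothetical, and a multilevel analysis would be the alternative the table names.

```python
import math


def design_effect(cluster_size, icc):
    """Design effect for equal-sized clusters: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


def adjust_standard_error(naive_se, cluster_size, icc):
    """Inflate a standard error that was computed as if individuals were independent."""
    return naive_se * math.sqrt(design_effect(cluster_size, icc))


# Hypothetical values: an intervention delivered to classes of 25 students,
# analysed at the student level, with an assumed ICC of 0.10
deff = design_effect(cluster_size=25, icc=0.10)
adjusted_se = adjust_standard_error(naive_se=0.50, cluster_size=25, icc=0.10)
effective_n = 500 / deff  # 500 students behave like this many independent ones

print(f"design effect = {deff:.2f}")
print(f"adjusted SE = {adjusted_se:.3f}")
print(f"effective sample size = {effective_n:.0f}")
```

A report would state the ICC estimate and where it came from, since the size of the adjustment depends heavily on it.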

Reporting Standards for Studies Using Random and Nonrandom Assignment of Participants to Experimental Groups

| Paper section and topic | Description |

|---|---|

| Module A1: Studies using random assignment | |

| Method | |

| Random assignment method | Procedure used to generate the random assignment sequence, including details of any restriction (e.g., blocking, stratification) |

| Random assignment concealment | Whether sequence was concealed until interventions were assigned |

| Random assignment implementation | Who generated the assignment sequence Who enrolled participants Who assigned participants to groups |

| Masking | Whether participants, those administering the interventions, and those assessing the outcomes were unaware of condition assignments If masking took place, statement regarding how it was accomplished and how the success of masking was evaluated |

| Statistical methods | Statistical methods used to compare groups on primary outcome(s) Statistical methods used for additional analyses, such as subgroup analyses and adjusted analysis Statistical methods used for mediation analyses |

| Module A2: Studies using nonrandom assignment | |

| Method | |

| Assignment method | Unit of assignment (the unit being assigned to study conditions, e.g., individual, group, community) Method used to assign units to study conditions, including details of any restriction (e.g., blocking, stratification, minimization) Procedures employed to help minimize potential bias due to nonrandomization (e.g., matching, propensity score matching) |

| Masking | Whether participants, those administering the interventions, and those assessing the outcomes were unaware of condition assignments If masking took place, statement regarding how it was accomplished and how the success of masking was evaluated |

| Statistical methods | Statistical methods used to compare study groups on primary outcome(s), including complex methods for correlated data Statistical methods used for additional analyses, such as subgroup analyses and adjusted analysis (e.g., methods for modeling pretest differences and adjusting for them) Statistical methods used for mediation analyses |
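Module A1 asks authors to report the procedure used to generate the random assignment sequence, including any restriction such as blocking. One way such a sequence might be produced is sketched below using permuted blocks. The function `blocked_assignment`, the condition labels, the block size, and the seed are assumptions chosen for illustration, not a procedure specified by JARS or CONSORT.

```python
import random


def blocked_assignment(n_participants, conditions, block_size, seed=None):
    """Generate a permuted-block random assignment sequence.

    Each block contains every condition equally often, so group sizes
    stay balanced throughout enrolment.
    """
    if block_size % len(conditions) != 0:
        raise ValueError("block_size must be a multiple of the number of conditions")
    rng = random.Random(seed)  # a recorded seed makes the sequence reproducible
    sequence = []
    while len(sequence) < n_participants:
        block = list(conditions) * (block_size // len(conditions))
        rng.shuffle(block)
        sequence.extend(block)
    return sequence[:n_participants]


# Hypothetical study: 12 participants, two conditions, blocks of 4
sequence = blocked_assignment(12, ["treatment", "control"], block_size=4, seed=2024)
for participant_id, condition in enumerate(sequence, start=1):
    print(participant_id, condition)
```

In a write-up, the corresponding sentence would name the method (permuted blocks of four), who generated the sequence, and whether it was concealed until assignment.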

Information for Inclusion in Manuscripts That Report New Data Collections