Statistics Made Easy

The Importance of Statistics in Research (With Examples)

The field of statistics is concerned with collecting, analyzing, interpreting, and presenting data.

In the field of research, statistics is important for the following reasons:

Reason 1: Statistics allows researchers to design studies such that the findings from the studies can be extrapolated to a larger population.

Reason 2: Statistics allows researchers to perform hypothesis tests to assess whether the data support some claim about a new drug, new procedure, new manufacturing method, etc.

Reason 3: Statistics allows researchers to create confidence intervals to capture uncertainty around population estimates.

In the rest of this article, we elaborate on each of these reasons.

Reason 1: Statistics Allows Researchers to Design Studies

Researchers are often interested in answering questions about populations like:

- What is the average weight of a certain species of bird?

- What is the average height of a certain species of plant?

- What percentage of citizens in a certain city support a certain law?

One way to answer these questions is to go around and collect data on every single individual in the population of interest.

However, this is typically too costly and time-consuming, which is why researchers instead take a sample of the population and use the data from the sample to draw conclusions about the population as a whole.

There are many different methods researchers can use to obtain individuals for a sample. These are known as sampling methods.

There are two classes of sampling methods:

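- Probability sampling methods: Every member of a population has a known, nonzero probability of being selected to be in the sample. In simple random sampling, the most basic case, every member has an equal probability of being selected.

- Non-probability sampling methods: Members are selected by non-random means (e.g. convenience or volunteering), so selection probabilities are unknown and some members may have no chance of being selected.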

By using probability sampling methods, researchers can maximize the chances that they obtain a sample that is representative of the overall population.

This allows researchers to extrapolate the findings from the sample to the overall population.
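The difference between the two classes can be illustrated with a small simulation. The sketch below is only an illustration: the bird weights are generated, hypothetical data, and `simple_random_sample` is a helper name introduced here, not something from the article.

```python
import random
import statistics


def simple_random_sample(population, n, seed=0):
    """Draw n members without replacement; every member is equally likely."""
    rng = random.Random(seed)
    return rng.sample(population, n)


# Hypothetical population: weights (in grams) of 1,000 birds.
_rng = random.Random(42)
population = [_rng.gauss(500, 40) for _ in range(1000)]

# Probability sample: 50 birds chosen at random.
srs = simple_random_sample(population, 50)

# Non-probability "convenience" sample: only the 50 lightest birds,
# standing in for whichever birds happened to be easiest to catch.
convenience = sorted(population)[:50]

print(f"population mean:         {statistics.mean(population):.1f}")
print(f"simple random sample:    {statistics.mean(srs):.1f}")
print(f"convenience sample mean: {statistics.mean(convenience):.1f}")
```

The random sample's mean should land near the population mean, while the convenience sample is biased low by construction.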

Reason 2: Statistics Allows Researchers to Perform Hypothesis Tests

Another way that statistics is used in research is in the form of hypothesis tests.

These are tests that researchers can use to determine whether an observed effect, such as a difference between medical procedures or treatments, is statistically significant.

For example, suppose a scientist believes that a new drug is able to reduce blood pressure in obese patients. To test this, he measures the blood pressure of 30 patients before and after using the new drug for one month.

He then performs a paired samples t-test using the following hypotheses:

- $H_0$: $\mu_{\text{after}} = \mu_{\text{before}}$ (the mean blood pressure is the same before and after using the drug)

- $H_A$: $\mu_{\text{after}} < \mu_{\text{before}}$ (the mean blood pressure is lower after using the drug)

If the p-value of the test is less than some significance level (e.g. α = 0.05), then he can reject the null hypothesis and conclude that mean blood pressure is lower after using the new drug.

Note: This is just one example of a hypothesis test that is used in research. Other common tests include a one sample t-test, two sample t-test, one-way ANOVA, and two-way ANOVA.
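The paired samples t-test described above can be sketched with the Python standard library. This is a minimal sketch under stated assumptions: the readings for 10 patients are hypothetical (the article's example has 30 patients and gives no data), `paired_t_statistic` is a name introduced here, and the decision uses a one-sided critical value from a standard t table rather than a p-value.

```python
import math
import statistics


def paired_t_statistic(before, after):
    """Return (t, df) for a paired samples t-test on the differences after - before."""
    if len(before) != len(after):
        raise ValueError("before and after must have the same length")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1 denominator)
    t = mean_diff / (sd_diff / math.sqrt(n))
    return t, n - 1


# Hypothetical systolic blood pressure readings (mmHg), not real data.
before = [150, 142, 160, 155, 148, 152, 158, 145, 149, 151]
after = [144, 140, 153, 150, 147, 146, 151, 143, 148, 145]

t, df = paired_t_statistic(before, after)

# One-sided critical value for alpha = 0.05 and df = 9, from a standard t table.
T_CRITICAL = 1.833

# The alternative is "after < before", so a strongly negative t rejects the null.
reject_null = t < -T_CRITICAL
print(f"t = {t:.3f}, df = {df}, reject H0: {reject_null}")
```

In practice a statistics package would report the p-value directly; the table lookup here only keeps the example dependency-free.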

Reason 3: Statistics Allows Researchers to Create Confidence Intervals

Another way that statistics is used in research is in the form of confidence intervals.

A confidence interval is a range of values that is likely to contain a population parameter with a certain level of confidence.

For example, suppose researchers are interested in estimating the mean weight of a certain species of turtle.

Instead of going around and weighing every single turtle in the population, researchers may instead take a simple random sample of turtles with the following information:

- Sample size n = 25

- Sample mean weight x̄ = 300

- Sample standard deviation s = 18.5

Using the confidence interval for a mean formula, researchers may then construct the following 95% confidence interval:

95% Confidence Interval: 300 ± 1.96 × (18.5/√25) = [292.75, 307.25]

The researchers would then claim that they’re 95% confident that the true mean weight for this population of turtles is between 292.75 pounds and 307.25 pounds.
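The arithmetic behind this interval can be checked with a few lines of Python. The sketch below assumes the normal (z) critical value used in the example; `mean_confidence_interval` is a helper name introduced here for illustration.

```python
import math
from statistics import NormalDist


def mean_confidence_interval(mean, sd, n, confidence=0.95):
    """Normal-approximation confidence interval for a population mean."""
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sd / math.sqrt(n)
    return mean - margin, mean + margin


# Turtle example from the text: n = 25, sample mean = 300, s = 18.5.
low, high = mean_confidence_interval(300, 18.5, 25)
print(f"95% CI: [{low:.2f}, {high:.2f}]")  # prints "95% CI: [292.75, 307.25]"
```

With only 25 observations, a t critical value (about 2.064 for 24 degrees of freedom) would give a slightly wider interval; the sketch follows the example's use of 1.96.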

Additional Resources

The following articles explain the importance of statistics in other fields:

- The Importance of Statistics in Healthcare

- The Importance of Statistics in Nursing

- The Importance of Statistics in Business

- The Importance of Statistics in Economics

- The Importance of Statistics in Education

Hey there. My name is Zach Bobbitt. I have a Masters of Science degree in Applied Statistics and I’ve worked on machine learning algorithms for professional businesses in both healthcare and retail. I’m passionate about statistics, machine learning, and data visualization and I created Statology to be a resource for both students and teachers alike. My goal with this site is to help you learn statistics through using simple terms, plenty of real-world examples, and helpful illustrations.

Effective Use of Statistics in Research – Methods and Tools for Data Analysis

Remember that feeling of impending doom when you are asked to analyze your data? Now that you have all the required raw data, you need to test your hypothesis statistically. Presenting your numerical data with sound statistics will also help break the stereotype of the biology student who can’t do math.

Statistical methods are essential for scientific research. In fact, they run through the entire research process: planning, designing, collecting data, analyzing, drawing meaningful interpretations, and reporting findings. Furthermore, the results acquired from a research project are meaningless raw data unless they are analyzed with statistical tools. Therefore, applying statistics in research is necessary to justify research findings. In this article, we will discuss how using statistical methods in biology can help draw meaningful conclusions from biological studies.

Role of Statistics in Biological Research

Statistics is a branch of science that deals with the collection, organization, and analysis of data, and with drawing inferences from a sample about the whole population. Moreover, it aids in designing a study more meticulously and provides logical reasoning for accepting or rejecting a hypothesis. Biology focuses on living organisms and their complex pathways, which are highly dynamic and cannot be explained by logical reasoning alone. Statistics helps here by defining and explaining the patterns in a study based on the sample sizes used. To be precise, statistics reveals the trends in the conducted study.

Biological researchers often disregard statistics when planning their research and use statistical tools only at the end of their experiments. This gives rise to complicated sets of results that are not easily analyzed with statistical tools. Statistics in research can help a researcher approach the study in a stepwise manner, in which the statistical analysis follows these steps:

1. Establishing a Sample Size

Usually, a biological experiment starts with choosing samples and selecting the right number of repeated experiments. Basic statistics provides the concepts needed here, such as randomness and the law of large numbers. Statistics teaches how drawing a sufficiently large random sample from a large pool helps extrapolate findings and reduce experimental bias and error.
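One way to see why larger samples help is the standard error of the mean, which shrinks in proportion to 1/√n. The sketch below is illustrative only: the standard deviation of 15 is a hypothetical value, and `standard_error` is a helper name introduced here.

```python
import math


def standard_error(sd, n):
    """Standard error of the sample mean for a sample of size n."""
    return sd / math.sqrt(n)


# Hypothetical measurement spread; only the trend across n matters here.
SD = 15.0
for n in (10, 100, 1000):
    print(f"n = {n:>4}: standard error = {standard_error(SD, n):.2f}")
```

Quadrupling the sample size halves the standard error, which is why gains in precision become more expensive as a study grows.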

2. Testing of Hypothesis

When conducting a statistical study with a large sample pool, biological researchers must make sure that a conclusion is statistically significant. To achieve this, a researcher must formulate a hypothesis before examining the distribution of the data. Furthermore, statistics in research helps interpret whether the data cluster near the mean or spread across the distribution. These trends help analyze the sample and test the hypothesis.

3. Data Interpretation Through Analysis

When dealing with large data sets, statistics in research assists in data analysis. This helps researchers draw sound conclusions from their experiments and observations. Concluding a study manually or from visual observation may give erroneous results; a thorough statistical analysis instead takes into account the relevant statistical measures and the variance in the sample to provide a detailed interpretation of the data. As a result, researchers produce detailed and meaningful data to support their conclusions.

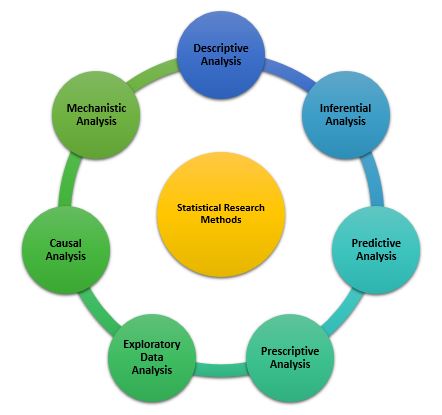

Types of Statistical Research Methods That Aid in Data Analysis

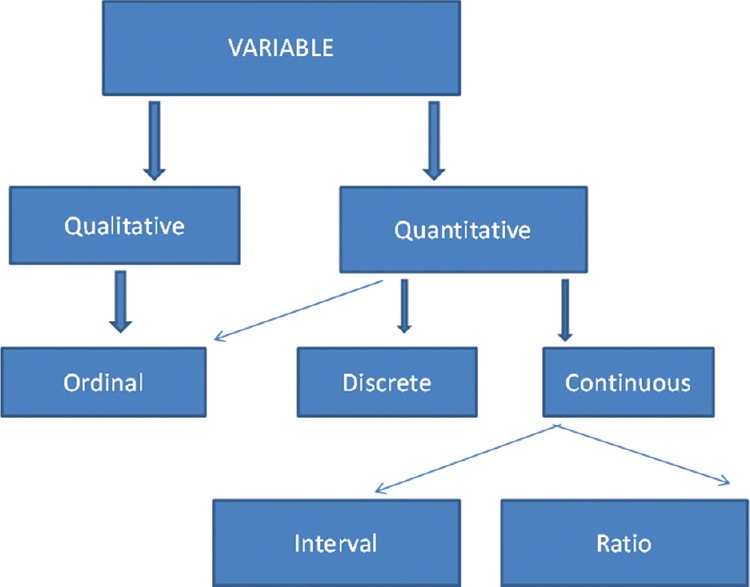

Statistical analysis is the process of organizing samples of data into patterns or trends that help researchers anticipate situations and draw appropriate research conclusions. Based on the type of data, statistical analyses are of the following types:

1. Descriptive Analysis

Descriptive statistical analysis organizes and summarizes large data sets into graphs and tables. It involves various processes such as tabulation, measures of central tendency, measures of dispersion or variance, and skewness measurements.
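The measures listed above can be computed with the Python standard library. The following is a minimal sketch: the data are hypothetical, `describe` is a helper name introduced here, and skewness is computed with the simple moment-based formula (the average cubed z-score), which is one of several conventions.

```python
import statistics


def describe(values):
    """Summarize a numeric sample with common descriptive statistics."""
    n = len(values)
    mean = statistics.mean(values)
    pop_sd = statistics.pstdev(values)
    # Moment-based skewness: the average cubed z-score (0 for symmetric data).
    skewness = sum(((x - mean) / pop_sd) ** 3 for x in values) / n
    return {
        "n": n,
        "mean": mean,
        "median": statistics.median(values),
        "sample_sd": statistics.stdev(values),
        "skewness": skewness,
    }


# Hypothetical measurements with one large value that skews the data to the right.
data = [2, 3, 3, 4, 4, 4, 5, 5, 20]
for name, value in describe(data).items():
    print(f"{name:>10}: {value:.3f}")
```

The single large value pulls the mean above the median and produces positive skewness, a case where the median may describe a typical observation better than the mean.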

2. Inferential Analysis

Inferential statistical analysis extrapolates the data acquired from a small sample to the complete population. This analysis helps draw conclusions and make decisions about the whole population on the basis of sample data. It is a highly recommended statistical method for research projects that work with a smaller sample size and aim to extrapolate conclusions to a larger population.

3. Predictive Analysis

Predictive analysis is used to predict future events. It is used by marketing companies, insurance organizations, online service providers, data-driven marketers, and financial corporations.

4. Prescriptive Analysis

Prescriptive analysis examines data to find out what can be done next. It is widely used in business analysis for finding the best possible outcome for a situation. It is closely related to descriptive and predictive analysis. However, prescriptive analysis goes further by recommending the most appropriate option among the available choices.

5. Exploratory Data Analysis

EDA is generally the first step of the data analysis process and is conducted before any other statistical analysis technique. It focuses on analyzing patterns in the data to recognize potential relationships. EDA is used to discover unknown associations within data, inspect the collected data for missing values, and obtain maximum insight.

6. Causal Analysis

Causal analysis assists in understanding and determining the reasons why things happen the way they do. This analysis helps identify the root cause of failures or simply find the basic reason why something could happen. For example, causal analysis is used to understand what will happen to a given variable if another variable changes.

7. Mechanistic Analysis

This is the least common type of statistical analysis. Mechanistic analysis is used in big data analytics and the biological sciences. It aims to understand how individual changes in some variables cause corresponding changes in other variables, while excluding external influences.

Important Statistical Tools In Research

Researchers in the biological field often find statistical analysis the scariest aspect of completing research. However, statistical tools can help researchers understand what to do with data and how to interpret the results, making this process as easy as possible.

1. Statistical Package for the Social Sciences (SPSS)

It is a widely used software package for human behavior research. SPSS can compile descriptive statistics as well as graphical depictions of results. Moreover, it includes the option to create scripts that automate analysis or carry out more advanced statistical processing.

2. R Foundation for Statistical Computing

This software package is used in human behavior research and other fields. R is a powerful tool, but it has a steep learning curve and requires a certain level of coding. It also comes with an active community that is engaged in building and enhancing the software and its associated packages.

3. MATLAB (The MathWorks)

It is an analytical platform and a programming language. Researchers and engineers use this software to write their own code to help answer their research questions. While MATLAB can be a difficult tool for novices to use, it offers flexibility in terms of what the researcher needs.

4. Microsoft Excel

MS Excel is not the best solution for statistical analysis in research, but it offers a wide variety of tools for data visualization and simple statistics. It is easy to generate summary statistics and customizable graphs and figures. MS Excel is the most accessible option for those wanting to start with statistics.

5. Statistical Analysis Software (SAS)

It is a statistical platform used in business, healthcare, and human behavior research alike. It can carry out advanced analyses and produce publication-worthy figures, tables, and charts.

6. GraphPad Prism

It is premium software that is primarily used by biology researchers, but it offers a range of features that can be used in various other fields. Similar to SPSS, GraphPad provides a scripting option to automate analyses and carry out complex statistical calculations.

7. Minitab

This software offers basic as well as advanced statistical tools for data analysis. Similar to GraphPad and SPSS, Minitab can automate analyses, although doing so requires some command of coding.

Use of Statistical Tools In Research and Data Analysis

Statistical tools manage large data sets. Many biological studies use large data sets to analyze trends and patterns. Therefore, statistical tools become essential, as they manage these data sets and make data processing more convenient.

Following these steps will help biological researchers present the statistics in their research in detail, develop accurate hypotheses, and use the correct tools to test them.

There is a range of statistical tools that can help researchers manage their research data and improve the outcome of their research through better interpretation of data. Which tools you use will depend on your research question, your knowledge of statistics, and your personal experience in coding.

Statistics By Jim

Making statistics intuitive

The Importance of Statistics

By Jim Frost

The field of statistics is the science of learning from data. Statistical knowledge helps you use the proper methods to collect the data, employ the correct analyses, and effectively present the results. Statistics is a crucial process behind how we make discoveries in science, make decisions based on data, and make predictions. Statistics allows you to understand a subject much more deeply.

Personally, I think statistics is an exciting field about the thrill of discovery, learning, and challenging your assumptions. Statistics facilitates the creation of new knowledge. Bit by bit, we push back the frontier of what is known. To learn more about my passion for statistics as an experienced statistician, read about my experiences and challenges early in my scientific research career.

For a contrast, read about qualitative research, which uses non-numeric data and does not perform statistical analyses.

Statistics Uses Numerical Evidence to Draw Valid Conclusions

Statistics are not just numbers and facts. You know, things like 4 out of 5 dentists prefer a specific toothpaste. Instead, it’s an array of knowledge and procedures that allow you to learn from data reliably. Statistics allow you to evaluate claims based on quantitative evidence and help you differentiate between reasonable and dubious conclusions. That aspect is particularly vital these days because data are so plentiful along with interpretations presented by people with unknown motivations.

Statisticians offer critical guidance in producing trustworthy analyses and predictions. Along the way, statisticians can help investigators avoid a wide variety of analytical traps.

When analysts use statistical procedures correctly, they tend to produce accurate results. In fact, statistical analyses account for uncertainty and error in the results. Statisticians ensure that all aspects of a study follow the appropriate methods to produce trustworthy results. These methods include:

- Producing reliable data.

- Analyzing the data appropriately.

- Drawing reasonable conclusions.

Statisticians Know How to Avoid Common Pitfalls

Using statistical analyses to produce findings for a study is the culmination of a long process. This process includes constructing the study design, selecting and measuring the variables, devising the sampling technique and sample size, cleaning the data, and determining the analysis methodology among numerous other issues. In some cases, you might want to take the raw data and use it to cluster observations in similar groups by using patterns in the data to help target your research or interventions. The overall quality of the results depends on the entire chain of events. A single weak link might produce unreliable results. The following list provides a small taste of potential problems and analytical errors that can affect a study.

Accuracy and Precision: Before collecting data, you must ascertain the accuracy and precision of your measurement system. After all, if you can’t trust your data, you can’t trust the results!

Biased samples: An incorrectly drawn sample can bias the conclusions from the start. For example, if a study uses human subjects, the subjects might be different from non-subjects in a way that affects the results. See: Populations, Parameters, and Samples in Inferential Statistics.

Overgeneralization: Findings from one population might not apply to another population. Unfortunately, it’s not necessarily clear what differentiates one population from another. Statistical inferences are always limited, and you must understand the limitations.

Causality: How do you determine when X causes a change in Y? Statisticians need tight standards to assume causality whereas others accept causal relationships more easily. When A precedes B, and A is correlated with B, many mistakenly believe it is a causal connection! However, you’ll need to use an experimental design that includes random assignment to assume confidently that the results represent causality. Learn how to determine whether you’re observing causation or correlation!

Incorrect analysis: Are you analyzing a multivariate study area with only one variable? Or, using an inadequate set of variables? Perhaps you’re assessing the mean when the median might be a better measure? Or, did you fit a linear relationship to data that are nonlinear? You can use a wide range of analytical tools, but not all of them are correct for a specific situation.

Violating the assumptions for an analysis: Most statistical analyses have assumptions. These assumptions often involve properties of the sample, variables, data, and the model. Adding to the complexity, you can waive some assumptions under specific conditions, sometimes thanks to the central limit theorem. When you violate an important assumption, you risk producing misleading results.

Data mining: Even when analysts do everything else correctly, they can produce falsely significant results by investigating a dataset for too long. When analysts conduct many tests, some will be statistically significant due to chance patterns in the data. Fastidious statisticians track the number of tests performed during a study and place the results in the proper context.

Numerous considerations must be correct to produce trustworthy conclusions. Unfortunately, there are many ways to mess up analyses and produce misleading results. Statisticians can guide others through this swamp! Without these guides, you might unintentionally end up p-hacking your results.
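The data mining pitfall can be made concrete with a short calculation. The sketch below assumes independent tests in which every null hypothesis is actually true, which is a simplification; `familywise_error_rate` is a helper name introduced here for illustration.

```python
def familywise_error_rate(num_tests, alpha=0.05):
    """Chance of at least one false positive across independent tests
    when every null hypothesis is actually true."""
    return 1 - (1 - alpha) ** num_tests


for m in (1, 5, 20, 100):
    rate = familywise_error_rate(m)
    print(f"{m:>3} tests -> {rate:.1%} chance of at least one false positive")
```

Under these assumptions, 20 tests at α = 0.05 carry roughly a 64% chance of at least one spurious "significant" result, which is why the number of tests performed matters when interpreting any single finding.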

Use Statistics to Make an Impact in Your Field

Statistical analyses are used in almost all fields to make sense of the vast amount of data that are available. Even if the field of statistics is not your primary field of study, it can help you make an impact in your chosen field. Chances are very high that you’ll need working knowledge of statistical methodology both to produce new findings in your field and to understand the work of others.

Conversely, as a statistician, there is a high demand for your skills in a wide variety of areas: universities, research labs, government, industry, etc. Furthermore, statistical careers often pay quite well. One of my favorite quotes about statistics is the following by John Tukey:

“The best thing about being a statistician is that you get to play in everyone else’s backyard.”

My interests are quite broad, and statistical knowledge provides the tools to understand all of them.

Lies, Damned Lies, and Statistics: Use Statistical Knowledge to Protect Yourself

I’m sure you’re familiar with the expression about damned lies and statistics, which was spread by Mark Twain among others. Is it true?

Unscrupulous analysts can use incorrect methodology to draw unwarranted conclusions. That long list of accidental pitfalls can quickly become a source of techniques to produce misleading analyses intentionally. But, how do you know? If you’re not familiar with statistics, these manipulations can be hard to detect. Statistical knowledge is the solution to this problem. Use it to protect yourself from manipulation and to react to information intelligently.

Learn how anecdotal evidence is the opposite of statistical methodology and how it can lead you astray!

Using statistics in a scientific study requires a lot of planning. To learn more about this process, read 5 Steps for Conducting Scientific Studies with Statistical Analyses.

The world today produces more data and more analyses designed to influence you than ever before. Are you ready for it?

Reader Interactions

June 7, 2022 at 1:40 pm

Please pardon my ignorance and the possibility that I’m some sort of Philistine but I’m trying to help my teenager with statistics revision and my brain is fried. I’m not lacking in intelligence (my favourite subject is physics) but I’m struggling to see the point in the subject when I imagine that there are computer programs that one can put data into in order to find out statistics. I even typed ‘statistics for idiots’ into Google search and the results I got have made me even more confused.

June 8, 2022 at 9:02 pm

There are definitely computer programs in which you can enter the data and it’ll display some numbers. However, there is a lot more to it than that. There are many pitfalls that the untrained can fall into without realizing. Those pitfalls can completely invalidate the results. So, yes, you can enter data into statistical software, and it’ll display some results. However, garbage in –> garbage out. And there are various cases where you won’t realize it’s garbage. The analyses have various assumptions that you need to check. If you don’t check and satisfy the assumptions, you can’t trust the results. Do you know what statistical test is correct for your specific data?

Then there are all the experimental design issues before you even get to measuring data that will help ensure valid results. And, if you want to show causation, how do you do that? There’s the old and true saying that “correlation doesn’t necessarily imply causation.” So, how do you tell? How do you show causation?

Those are just a few of the possible issues. There are many others! Some I discuss in this very blog post!

Statistics isn’t just the numbers and calculations. It’s understanding the proper methods and procedures, and how to use them correctly so you can both collect and analyze data that will answer your research questions. There’s a whole chain of events that starts during the design phase (well before data collection) and goes through to the analysis phase that needs to be just right for you to be able to trust the results you see in your statistical software. And, if your software says the results are statistically significant, what does that even mean? And not mean? There’s a lot of specialized knowledge that is required throughout that process.

February 2, 2022 at 3:01 pm

Please, sir, I want to ask a question: what is the importance of statistics in mass communication?

February 3, 2022 at 4:03 pm

Imagine you’re communicating with many people about scientific findings. You’ll need to know how to interpret the results of a statistical study. Sometimes knowing exactly what a study is concluding and, importantly, unable to conclude is crucial. Additionally, you should understand the strength of the study. Are there any shortcomings or weaknesses that should make you question the results? By being able to read the statistical results of the study and having a full awareness of the implications of the study’s design, you’ll be better able to present only the credible results to your audience and able to convey them accurately without either incorrectly exaggerating or diminishing their importance beyond their true value.

December 1, 2020 at 4:16 am

what year was this made? im planning to use it as a reference to my paper

December 1, 2020 at 11:39 pm

Hi Saegiru,

For online resources, you typically don’t use the publication date because it can change over time. Instead, you generally use the date you accessed the URL. Purdue University’s Online Writing Lab (OWL) has a great web page for how to reference websites and URLs. Please see their guidelines.

November 6, 2020 at 6:18 am

THANK YOU FOR THIS ‘VERY HELPFUL’

September 27, 2020 at 11:38 am

When are ur articles publisehd?

September 28, 2020 at 2:16 pm

I post new articles every 2-4 weeks. You can subscribe to receive an email every time I post a new article. Look in the right side bar, partway down for the place to enter your email address. I do not send spam or sell your email.

August 7, 2020 at 11:06 am

Jim. What a champion you are. Than you so much. May God Bless.

June 15, 2020 at 7:02 pm

Achei incrível, maravilhoso texto!!! Trabalhar com estatística, a Bioestatística em particular é desafiador.

June 15, 2020 at 10:24 pm

Thank you! I’m glad that my site is useful!

June 13, 2020 at 5:30 am

I’m really grateful for this explanation. You clarified everything, more knowledge I pray.

March 2, 2020 at 1:44 pm

Thank you, sir, for your selfless service. Your text really helped me. More knowledge I pray 🙏.

February 16, 2020 at 7:18 pm

Thanks a lot, Jim. I found your article very useful in the preparation of my research work. I highly appreciate your work.

December 7, 2019 at 2:57 pm

Hi Jim, I am elated to run into your website. You clearly explain confusing subjects. As I have decided to embark on learning data science, statistics is the number one area that pops up in every online course. I am curious about your perspective on how linear regression in machine learning differs from linear regression in statistics. I would love your explanation to draw the connection between the two. Moreover, it would be amazing if you could educate us on all of these algorithms. We need SMEs like yourself to talk in layman’s terms. Thank you!

November 17, 2019 at 11:25 pm

And the year this article was published is when sir? Or the date published. Thank you

November 18, 2019 at 11:28 am

Hello Najihah,

To cite this page as a reference, please see the Electronic Sources guidelines from Purdue University. Look in the “A Page on a Website” section. Typically, you use the access date. For this post, you can use the following citation (change the date as needed):

Frost, Jim. “The Importance of Statistics.” Statistics By Jim, https://statisticsbyjim.com/basics/importance-statistics/. Accessed 18 November 2019.

November 11, 2019 at 8:31 am

Thank you sir for your well explained notes. This one has really helped me a lot to complete my assignment

October 2, 2019 at 4:10 am

Please can you help me in writing a reference to your article?

October 2, 2019 at 5:09 pm

For this type of request, I always refer people to Purdue’s excellent resource about citing electronic sources. This first section on their web page is titled “Webpage or Piece of Online Content” and has several examples that you can use.

Purdue’s Reference List: Electronic Sources

For the author’s name (mine), you can use “Frost, J.”

September 7, 2019 at 9:16 am

how does statistics widen the scope of knowledge

June 18, 2019 at 6:08 am

Thanks for the information, it’s quite interesting.

May 15, 2019 at 4:23 am

I found your article so useful for writing my thesis. May I know when you wrote this article?

May 17, 2019 at 10:30 am

Hi Geovani,

Thank you, and I’m glad that you found the article to be helpful! I’m not sure exactly when I wrote it. It goes back quite a ways. However, to reference a webpage, you really need the date you retrieved the URL because webpages can change over time. Read here to learn How to cite a website.

Best of luck with your thesis!

April 30, 2019 at 7:22 am

I have found your article very informative and interesting. I appreciate your points of view and I agree with so many. You’ve done a great job with making this clear enough for anyone to understand.

April 30, 2019 at 11:07 pm

Thank you so much, Steav! I really appreciate that!

March 28, 2019 at 2:13 am

In social science, statistics covers all the tasks necessary for planning, estimating, working, and facilitating. Most importantly, through statistics all information, observations, and data are collected onto a single page.

December 6, 2018 at 10:26 am

what is your thought about the importance of statistics in social science?

December 1, 2018 at 11:05 pm

I have a baseball data set with 30 independent variables. In this data set, one variable is the sum of three other variables in the data set. For example, x8 = x3 + x4 + x5. I need to build a multiple linear regression model. If I include x8 in my model, should I remove x3, x4, and x5? Could you please advise on this?

December 2, 2018 at 12:35 am

Yes, you should remove those variables!

October 23, 2018 at 2:07 pm

Thanks for sharing your knowledge with us. Thank you, sir.

September 15, 2018 at 4:20 am

My notes on statistics were incomplete because I didn’t know the importance of statistics, but you helped me a lot in completing my notes. Thank you so much, sir.

September 15, 2018 at 4:17 pm

You’re super welcome! I’m glad it was helpful!

June 27, 2018 at 12:26 pm

It’s really awesome, as it helped me a lot in completing my class 11 notes. Thank you, sir, thank you very much for such a wonderful explanation.

June 27, 2018 at 2:30 pm

Hi Cera, It makes me happy to hear that my website helped you! Best of luck with your studies!

March 21, 2018 at 1:56 am

Hi, very well explained in simple language. I expect more blogs from your side, especially on how large a sample is required for a particular analysis and what criteria should be considered before collecting the sample.

Thank you, Jim.

March 21, 2018 at 1:49 pm

Hi Gopala, I’m very happy to hear that you’re finding my blogs to be helpful! I have just written one about determining a good sample size! I think you’ll find that one to be helpful too.

March 14, 2018 at 6:53 am

Hi. Thanks for posting this. This really helped me with my research for the upcoming quiz.

March 14, 2018 at 11:02 am

Hi Madison, you’re very welcome! I’m glad it helped!

December 11, 2017 at 1:46 am

1. The hanging comma (the second one in “Lies, Damned Lies, and Statistics”) gives this a totally different sense.

2. We are in the age of information quality. This is beyond traditional statistics. See https://www.facebook.com/infoQbook/

December 11, 2017 at 2:06 am

Hi Ron, thanks for your thoughtful comment.

The full expression is: “There are three kinds of lies: lies, damned lies, and statistics.” And, the Wikipedia article includes the final comma. I believe it accurately reflects the intention of the quote that statistics are worse than both lies and damn lies!

I’d argue that the field of statistics is very concerned about the quality of the information that goes into analyses. However, it looks like you and your book are taking it to another level. Congratulations!

The Beginner's Guide to Statistical Analysis | 5 Steps & Examples

Statistical analysis means investigating trends, patterns, and relationships using quantitative data. It is an important research tool used by scientists, governments, businesses, and other organizations.

To draw valid conclusions, statistical analysis requires careful planning from the very start of the research process. You need to specify your hypotheses and make decisions about your research design, sample size, and sampling procedure.

After collecting data from your sample, you can organize and summarize the data using descriptive statistics. Then, you can use inferential statistics to formally test hypotheses and make estimates about the population. Finally, you can interpret and generalize your findings.

This article is a practical introduction to statistical analysis for students and researchers. We’ll walk you through the steps using two research examples. The first investigates a potential cause-and-effect relationship, while the second investigates a potential correlation between variables.

Table of contents

- Step 1: Write your hypotheses and plan your research design

- Step 2: Collect data from a sample

- Step 3: Summarize your data with descriptive statistics

- Step 4: Test hypotheses or make estimates with inferential statistics

- Step 5: Interpret your results

- Other interesting articles

Step 1: Write your hypotheses and plan your research design

To collect valid data for statistical analysis, you first need to specify your hypotheses and plan out your research design.

Writing statistical hypotheses

The goal of research is often to investigate a relationship between variables within a population. You start with a prediction, and use statistical analysis to test that prediction.

A statistical hypothesis is a formal way of writing a prediction about a population. Every research prediction is rephrased into null and alternative hypotheses that can be tested using sample data.

While the null hypothesis always predicts no effect or no relationship between variables, the alternative hypothesis states your research prediction of an effect or relationship.

- Null hypothesis: A 5-minute meditation exercise will have no effect on math test scores in teenagers.

- Alternative hypothesis: A 5-minute meditation exercise will improve math test scores in teenagers.

- Null hypothesis: Parental income and GPA have no relationship with each other in college students.

- Alternative hypothesis: Parental income and GPA are positively correlated in college students.

Planning your research design

A research design is your overall strategy for data collection and analysis. It determines the statistical tests you can use to test your hypothesis later on.

First, decide whether your research will use a descriptive, correlational, or experimental design. Experiments directly influence variables, whereas descriptive and correlational studies only measure variables.

- In an experimental design, you can assess a cause-and-effect relationship (e.g., the effect of meditation on test scores) using statistical tests of comparison or regression.

- In a correlational design, you can explore relationships between variables (e.g., parental income and GPA) without any assumption of causality using correlation coefficients and significance tests.

- In a descriptive design, you can study the characteristics of a population or phenomenon (e.g., the prevalence of anxiety in U.S. college students) using statistical tests to draw inferences from sample data.

Your research design also concerns whether you’ll compare participants at the group level or individual level, or both.

- In a between-subjects design, you compare the group-level outcomes of participants who have been exposed to different treatments (e.g., those who performed a meditation exercise vs those who didn’t).

- In a within-subjects design, you compare repeated measures from participants who have participated in all treatments of a study (e.g., scores from before and after performing a meditation exercise).

- In a mixed (factorial) design, one variable is altered between subjects and another is altered within subjects (e.g., pretest and posttest scores from participants who either did or didn’t do a meditation exercise).

Example: Experimental research design

First, you’ll take baseline test scores from participants. Then, your participants will undergo a 5-minute meditation exercise. Finally, you’ll record participants’ scores from a second math test.

In this experiment, the independent variable is the 5-minute meditation exercise, and the dependent variable is the math test score from before and after the intervention.

Example: Correlational research design

In a correlational study, you test whether there is a relationship between parental income and GPA in graduating college students. To collect your data, you will ask participants to fill in a survey and self-report their parents’ incomes and their own GPA.

Measuring variables

When planning a research design, you should operationalize your variables and decide exactly how you will measure them.

For statistical analysis, it’s important to consider the level of measurement of your variables, which tells you what kind of data they contain:

- Categorical data represents groupings. These may be nominal (e.g., gender) or ordinal (e.g. level of language ability).

- Quantitative data represents amounts. These may be on an interval scale (e.g. test score) or a ratio scale (e.g. age).

Many variables can be measured at different levels of precision. For example, age data can be quantitative (8 years old) or categorical (young). If a variable is coded numerically (e.g., level of agreement from 1–5), it doesn’t automatically mean that it’s quantitative instead of categorical.

Identifying the measurement level is important for choosing appropriate statistics and hypothesis tests. For example, you can calculate a mean score with quantitative data, but not with categorical data.

In a research study, along with measures of your variables of interest, you’ll often collect data on relevant participant characteristics.

| Variable | Type of data |
|---|---|
| Age | Quantitative (ratio) |
| Gender | Categorical (nominal) |
| Race or ethnicity | Categorical (nominal) |
| Baseline test scores | Quantitative (interval) |
| Final test scores | Quantitative (interval) |
| Parental income | Quantitative (ratio) |
| GPA | Quantitative (interval) |

Step 2: Collect data from a sample

In most cases, it’s too difficult or expensive to collect data from every member of the population you’re interested in studying. Instead, you’ll collect data from a sample.

Statistical analysis allows you to apply your findings beyond your own sample as long as you use appropriate sampling procedures. You should aim for a sample that is representative of the population.

Sampling for statistical analysis

There are two main approaches to selecting a sample.

- Probability sampling: every member of the population has a chance of being selected for the study through random selection.

- Non-probability sampling: some members of the population are more likely than others to be selected for the study because of criteria such as convenience or voluntary self-selection.
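As a minimal sketch of probability sampling, the snippet below draws a simple random sample with Python’s standard `random` module. The population of ID numbers, the sample size, and the seed are made up for illustration and are not taken from the article’s examples.

```python
import random


def simple_random_sample(population, k, seed=None):
    """Draw k distinct members; each member has the same chance of selection."""
    rng = random.Random(seed)  # a local generator keeps the draw reproducible
    return rng.sample(population, k)


# Hypothetical sampling frame: 1,000 numbered members of a population.
population_ids = list(range(1, 1001))
sample_ids = simple_random_sample(population_ids, k=50, seed=42)
print(len(sample_ids), sorted(sample_ids)[:5])
```

A convenience sample, by contrast, would take whichever members are easiest to reach, so selection chances would differ between members.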

In theory, for highly generalizable findings, you should use a probability sampling method. Random selection reduces several types of research bias, like sampling bias, and makes it more likely that data from your sample are typical of the population. Parametric tests can be used to make strong statistical inferences when data are collected using probability sampling.

But in practice, it’s rarely possible to gather the ideal sample. While non-probability samples are more likely to be at risk of biases like self-selection bias, they are much easier to recruit and collect data from. Non-parametric tests are more appropriate for non-probability samples, but they result in weaker inferences about the population.

If you want to use parametric tests for non-probability samples, you have to make the case that:

- your sample is representative of the population you’re generalizing your findings to.

- your sample lacks systematic bias.

Keep in mind that external validity means that you can only generalize your conclusions to others who share the characteristics of your sample. For instance, results from Western, Educated, Industrialized, Rich and Democratic samples (e.g., college students in the US) aren’t automatically applicable to all non-WEIRD populations.

If you apply parametric tests to data from non-probability samples, be sure to elaborate on the limitations of how far your results can be generalized in your discussion section.

Create an appropriate sampling procedure

Based on the resources available for your research, decide on how you’ll recruit participants.

- Will you have resources to advertise your study widely, including outside of your university setting?

- Will you have the means to recruit a diverse sample that represents a broad population?

- Do you have time to contact and follow up with members of hard-to-reach groups?

Example: Sampling (experimental study)

Your participants are self-selected by their schools. Although you’re using a non-probability sample, you aim for a diverse and representative sample.

Example: Sampling (correlational study)

Your main population of interest is male college students in the US. Using social media advertising, you recruit senior-year male college students from a smaller subpopulation: seven universities in the Boston area.

Calculate sufficient sample size

Before recruiting participants, decide on your sample size either by looking at other studies in your field or using statistics. A sample that’s too small may be unrepresentative of the population, while a sample that’s too large will be more costly than necessary.

There are many sample size calculators online. Different formulas are used depending on whether you have subgroups or how rigorous your study should be (e.g., in clinical research). As a rule of thumb, at least 30 units per subgroup are necessary.

To use these calculators, you have to understand and input these key components:

- Significance level (alpha): the risk of rejecting a true null hypothesis that you are willing to take, usually set at 5%.

- Statistical power: the probability of your study detecting an effect of a certain size if there is one, usually 80% or higher.

- Expected effect size: a standardized indication of how large the expected result of your study will be, usually based on other similar studies.

- Population standard deviation: an estimate of the population parameter based on a previous study or a pilot study of your own.
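To show how these inputs combine, here is a rough sketch of one common textbook approximation for comparing the means of two independent groups. It assumes a two-sided test, equal group sizes, and a standardized effect size (Cohen’s d, which already folds in the standard deviation). The function name `n_per_group` is hypothetical, and online calculators may use other formulas and return slightly different numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group using the normal approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, two-sided
    z_power = NormalDist().inv_cdf(power)          # quantile for the target power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Illustrative effect sizes (small, medium, large by Cohen's conventions).
for d in (0.2, 0.5, 0.8):
    print(d, n_per_group(d))
```

The smaller the effect you expect, the more participants you need: halving the effect size roughly quadruples the required sample.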

Step 3: Summarize your data with descriptive statistics

Once you’ve collected all of your data, you can inspect them and calculate descriptive statistics that summarize them.

Inspect your data

There are various ways to inspect your data, including the following:

- Organizing data from each variable in frequency distribution tables.

- Displaying data from a key variable in a bar chart to view the distribution of responses.

- Visualizing the relationship between two variables using a scatter plot.

By visualizing your data in tables and graphs, you can assess whether your data follow a skewed or normal distribution and whether there are any outliers or missing data.

A normal distribution means that your data are symmetrically distributed around a center where most values lie, with the values tapering off at the tail ends.

In contrast, a skewed distribution is asymmetric and has more values on one end than the other. The shape of the distribution is important to keep in mind because only some descriptive statistics should be used with skewed distributions.

Extreme outliers can also produce misleading statistics, so you may need a systematic approach to dealing with these values.

Calculate measures of central tendency

Measures of central tendency describe where most of the values in a data set lie. Three main measures of central tendency are often reported:

- Mode: the most popular response or value in the data set.

- Median: the value in the exact middle of the data set when ordered from low to high.

- Mean: the sum of all values divided by the number of values.

However, depending on the shape of the distribution and level of measurement, only one or two of these measures may be appropriate. For example, many demographic characteristics can only be described using the mode or proportions, while a variable like reaction time may not have a mode at all.
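The three measures can be computed with Python’s standard `statistics` module, as in this small sketch. The test scores and colour responses are invented for illustration.

```python
from statistics import mean, median, multimode

# Hypothetical quantitative data: seven test scores.
scores = [62, 68, 68, 71, 75, 80, 94]
print(mean(scores))       # sum of all values divided by the number of values
print(median(scores))     # middle value once the data are sorted
print(multimode(scores))  # most frequent value(s), returned as a list

# Hypothetical nominal data: only the mode is meaningful here.
favourite_colour = ["blue", "green", "blue", "red"]
print(multimode(favourite_colour))
```

`multimode` returns every value tied for most frequent, which makes it visible when a variable has several modes or, if every value is unique, no useful mode at all.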

Calculate measures of variability

Measures of variability tell you how spread out the values in a data set are. Four main measures of variability are often reported:

- Range: the highest value minus the lowest value of the data set.

- Interquartile range: the range of the middle half of the data set.

- Standard deviation: the typical distance between each value in your data set and the mean (the square root of the variance).

- Variance: the square of the standard deviation.

Once again, the shape of the distribution and level of measurement should guide your choice of variability statistics. The interquartile range is the best measure for skewed distributions, while standard deviation and variance provide the best information for normal distributions.
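A short sketch of the four measures using the standard library follows. The scores are made up, and the sample (n − 1) versions of variance and standard deviation are used, which is an assumption about what you want to report. Quartile conventions differ between tools, so the interquartile range from other software may not match exactly.

```python
from statistics import quantiles, stdev, variance

# Hypothetical data: seven test scores.
scores = [62, 68, 68, 71, 75, 80, 94]

value_range = max(scores) - min(scores)  # highest minus lowest value
q1, _, q3 = quantiles(scores, n=4)       # quartile cut points (default method)
iqr = q3 - q1                            # spread of the middle half of the data
var = variance(scores)                   # sample variance (divides by n - 1)
sd = stdev(scores)                       # square root of the sample variance

print(value_range, iqr, round(var, 2), round(sd, 2))
```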

Example: Descriptive statistics (experimental study)

Using your table, you should check whether the units of the descriptive statistics are comparable for pretest and posttest scores. For example, are the variance levels similar across the groups? Are there any extreme values? If there are, you may need to identify and remove extreme outliers in your data set or transform your data before performing a statistical test.

| | Pretest scores | Posttest scores |
|---|---|---|
| Mean | 68.44 | 75.25 |
| Standard deviation | 9.43 | 9.88 |
| Variance | 88.96 | 97.96 |
| Range | 36.25 | 45.12 |
| N | 30 | 30 |

From this table, we can see that the mean score increased after the meditation exercise, and the variances of the two scores are comparable. Next, we can perform a statistical test to find out if this improvement in test scores is statistically significant in the population.

Example: Descriptive statistics (correlational study)

After collecting data from 653 students, you tabulate descriptive statistics for annual parental income and GPA.

It’s important to check whether you have a broad range of data points. If you don’t, your data may be skewed towards some groups more than others (e.g., high academic achievers), and only limited inferences can be made about a relationship.

| | Parental income (USD) | GPA |
|---|---|---|
| Mean | 62,100 | 3.12 |
| Standard deviation | 15,000 | 0.45 |
| Variance | 225,000,000 | 0.16 |
| Range | 8,000–378,000 | 2.64–4.00 |
| N | 653 | 653 |

Step 4: Test hypotheses or make estimates with inferential statistics

A number that describes a sample is called a statistic, while a number describing a population is called a parameter. Using inferential statistics, you can make conclusions about population parameters based on sample statistics.

Researchers often use two main methods (simultaneously) to make inferences in statistics.

- Estimation: calculating population parameters based on sample statistics.

- Hypothesis testing: a formal process for testing research predictions about the population using samples.

You can make two types of estimates of population parameters from sample statistics:

- A point estimate: a value that represents your best guess of the exact parameter.

- An interval estimate: a range of values that represent your best guess of where the parameter lies.

If your aim is to infer and report population characteristics from sample data, it’s best to use both point and interval estimates in your paper.

You can consider a sample statistic a point estimate for the population parameter when you have a representative sample (e.g., in a wide public opinion poll, the proportion of a sample that supports the current government is taken as the population proportion of government supporters).

There’s always error involved in estimation, so you should also provide a confidence interval as an interval estimate to show the variability around a point estimate.

A confidence interval uses the standard error and the z score from the standard normal distribution to convey where you’d generally expect to find the population parameter most of the time.
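As a sketch of that calculation, the function below builds a z-based interval around a sample mean. It is applied to the posttest mean, standard deviation, and sample size from the experimental descriptive statistics table; the function name is hypothetical. With a sample as small as 30, many analysts would use a t critical value instead, which gives a slightly wider interval.

```python
import math
from statistics import NormalDist


def z_confidence_interval(sample_mean, sample_sd, n, confidence=0.95):
    """Return (lower, upper) bounds of a z-based confidence interval for a mean."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    standard_error = sample_sd / math.sqrt(n)       # SD of the sampling distribution
    margin = z * standard_error
    return sample_mean - margin, sample_mean + margin


# Posttest scores from the table: mean 75.25, SD 9.88, N 30.
low, high = z_confidence_interval(75.25, 9.88, 30)
print(f"{low:.2f} to {high:.2f}")
```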

Hypothesis testing

Using data from a sample, you can test hypotheses about relationships between variables in the population. Hypothesis testing starts with the assumption that the null hypothesis is true in the population, and you use statistical tests to assess whether the null hypothesis can be rejected or not.

Statistical tests determine where your sample data would lie on an expected distribution of sample data if the null hypothesis were true. These tests give two main outputs:

- A test statistic tells you how much your data differs from the null hypothesis of the test.

- A p value tells you the probability of obtaining results at least as extreme as yours if the null hypothesis is actually true in the population.

Statistical tests come in three main varieties:

- Comparison tests assess group differences in outcomes.

- Regression tests assess cause-and-effect relationships between variables.

- Correlation tests assess relationships between variables without assuming causation.

Your choice of statistical test depends on your research questions, research design, sampling method, and data characteristics.

Parametric tests

Parametric tests make powerful inferences about the population based on sample data. But to use them, some assumptions must be met, and only some types of variables can be used. If your data violate these assumptions, you can perform appropriate data transformations or use alternative non-parametric tests instead.

A regression models the extent to which changes in one or more predictor variables result in changes in an outcome variable.

- A simple linear regression includes one predictor variable and one outcome variable.

- A multiple linear regression includes two or more predictor variables and one outcome variable.
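For the simple case with one predictor, the least-squares line can be fitted by hand, as in this minimal sketch. The study-hours and score data are invented for illustration; real analyses would normally use a statistics package that also reports standard errors and p values.

```python
def fit_simple_linear_regression(x, y):
    """Return (slope, intercept) of the least-squares line y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of cross-deviations and sum of squared deviations of the predictor.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours studied (predictor) and test score (outcome).
hours = [1, 2, 3, 4, 5]
score = [52, 55, 61, 64, 68]
slope, intercept = fit_simple_linear_regression(hours, score)
print(round(slope, 2), round(intercept, 2))
```

The slope is the expected change in the outcome for a one-unit increase in the predictor.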

Comparison tests usually compare the means of groups. These may be the means of different groups within a sample (e.g., a treatment and control group), the means of one sample group taken at different times (e.g., pretest and posttest scores), or a sample mean and a population mean.

- A t test is for exactly 1 or 2 groups when the sample is small (30 or less).

- A z test is for exactly 1 or 2 groups when the sample is large.

- An ANOVA is for 3 or more groups.

The z and t tests have subtypes based on the number and types of samples and the hypotheses:

- If you have only one sample that you want to compare to a population mean, use a one-sample test.

- If you have paired measurements (within-subjects design), use a dependent (paired) samples test.

- If you have completely separate measurements from two unmatched groups (between-subjects design), use an independent (unpaired) samples test.

- If you expect a difference between groups in a specific direction, use a one-tailed test.

- If you don’t have any expectations for the direction of a difference between groups, use a two-tailed test.

The only parametric correlation test is Pearson’s r. The correlation coefficient (r) tells you the strength of a linear relationship between two quantitative variables.

However, to test whether the correlation in the sample is strong enough to be important in the population, you also need to perform a significance test of the correlation coefficient, usually a t test, to obtain a p value. This test uses your sample size to calculate how much the correlation coefficient differs from zero in the population.
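The two steps can be sketched as follows: compute Pearson’s r, then convert it to a t statistic with n − 2 degrees of freedom using the standard formula t = r·√((n − 2) / (1 − r²)). The income and GPA values are made up for illustration, and turning the t value into a p value would still require a t distribution table or a statistics package.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic for testing whether a correlation differs from zero (df = n - 2)."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Hypothetical data: parental income in thousands of USD and GPA.
income = [30, 45, 60, 75, 90]
gpa = [2.8, 3.1, 3.0, 3.4, 3.6]
r = pearson_r(income, gpa)
print(round(r, 3), round(t_from_r(r, len(income)), 2))
```

Because n appears in the formula, the same r produces a larger t value in a larger sample, which is why weak correlations can still be statistically significant with many participants.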

You use a dependent-samples, one-tailed t test to assess whether the meditation exercise significantly improved math test scores. The test gives you:

- a t value (test statistic) of 3.00

- a p value of 0.0028
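A dependent-samples t statistic is the mean of the paired differences divided by its standard error. The sketch below uses five invented pretest and posttest pairs, not the study data, so it will not reproduce the t value above.

```python
import math
from statistics import mean, stdev


def paired_t_statistic(before, after):
    """Return (t, df) for a dependent-samples t test on paired measurements."""
    differences = [a - b for a, b in zip(after, before)]
    n = len(differences)
    standard_error = stdev(differences) / math.sqrt(n)  # SE of the mean difference
    return mean(differences) / standard_error, n - 1


# Hypothetical scores for five participants.
pretest = [60, 62, 70, 75, 68]
posttest = [65, 66, 71, 80, 70]
t_value, df = paired_t_statistic(pretest, posttest)
print(round(t_value, 2), df)
```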

Although Pearson’s r is a test statistic, it doesn’t tell you anything about how significant the correlation is in the population. You also need to test whether this sample correlation coefficient is large enough to demonstrate a correlation in the population.

A t test can also determine how significantly a correlation coefficient differs from zero based on sample size. Since you expect a positive correlation between parental income and GPA, you use a one-sample, one-tailed t test. The t test gives you:

- a t value of 3.08

- a p value of 0.001
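With 653 students the t distribution is very close to the standard normal, so the reported p value can be checked approximately with the standard library. This is a sanity check under a large-sample assumption, not the exact t-based calculation.

```python
from statistics import NormalDist


def one_tailed_p_normal_approx(t_value):
    """Upper-tail probability under the standard normal (large-sample approximation)."""
    return 1 - NormalDist().cdf(t_value)


p = one_tailed_p_normal_approx(3.08)
print(round(p, 3))
```

The approximation should not be used for small samples: with only 30 participants, as in the experimental example, the t distribution has heavier tails and the normal curve would understate the p value.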

Step 5: Interpret your results

The final step of statistical analysis is interpreting your results.

Statistical significance

In hypothesis testing, statistical significance is the main criterion for forming conclusions. You compare your p value to a set significance level (usually 0.05) to decide whether your results are statistically significant or non-significant.

Statistically significant results are considered unlikely to have arisen solely due to chance. There is only a very low chance of such a result occurring if the null hypothesis is true in the population.

Example: Interpret your results (experimental study)

You compare your p value of 0.0028 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This means that you believe the meditation intervention, rather than random factors, directly caused the increase in test scores.

Example: Interpret your results (correlational study)

You compare your p value of 0.001 to your significance threshold of 0.05. With a p value under this threshold, you can reject the null hypothesis. This indicates a statistically significant correlation between parental income and GPA in male college students.

Note that correlation doesn’t always mean causation, because there are often many underlying factors contributing to a complex variable like GPA. Even if one variable is related to another, this may be because of a third variable influencing both of them, or indirect links between the two variables.

Effect size

A statistically significant result doesn’t necessarily mean that there are important real life applications or clinical outcomes for a finding.

In contrast, the effect size indicates the practical significance of your results. It’s important to report effect sizes along with your inferential statistics for a complete picture of your results. You should also report interval estimates of effect sizes if you’re writing an APA style paper.

Example: Effect size (experimental study)

With a Cohen’s d of 0.72, there’s medium to high practical significance to your finding that the meditation exercise improved test scores.

Example: Effect size (correlational study)

To determine the effect size of the correlation coefficient, you compare your Pearson’s r value to Cohen’s effect size criteria.
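Cohen’s d is a mean difference divided by a standard deviation, and several choices of standardizer exist. As a sketch, dividing the difference between the pretest and posttest means in the descriptive statistics table by the pretest standard deviation reproduces the value of 0.72 quoted in the experimental example. Whether the article computed it this way is an assumption; a pooled standard deviation gives a slightly smaller value.

```python
import math


def standardized_mean_difference(mean_before, mean_after, standardizer_sd):
    """Mean difference expressed in standard deviation units."""
    return (mean_after - mean_before) / standardizer_sd


# Values from the experimental descriptive statistics table.
pre_mean, post_mean = 68.44, 75.25
pre_sd, post_sd = 9.43, 9.88

d_pretest_sd = standardized_mean_difference(pre_mean, post_mean, pre_sd)
pooled_sd = math.sqrt((pre_sd ** 2 + post_sd ** 2) / 2)  # equal-n pooled SD
d_pooled = standardized_mean_difference(pre_mean, post_mean, pooled_sd)

print(round(d_pretest_sd, 2), round(d_pooled, 2))
```

Whichever standardizer you use, state it in your write-up so readers can compare your effect size with other studies.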

Decision errors

Type I and Type II errors are mistakes made in research conclusions. A Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s false.

You can aim to minimize the risk of these errors by selecting an optimal significance level and ensuring high power. However, there’s a trade-off between the two errors, so a fine balance is necessary.
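A small simulation can make the meaning of the significance level concrete. In the sketch below the null hypothesis is true by construction (every sample comes from a population with mean 0 and known standard deviation 1), so every rejection is a Type I error. The sample size, number of trials, and seed are arbitrary choices for illustration.

```python
import random
from statistics import NormalDist


def type_i_error_rate(alpha=0.05, n=30, trials=2000, seed=0):
    """Share of two-sided z tests that reject a true null hypothesis."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0, 1) for _ in range(n)]
        z = (sum(sample) / n) * n ** 0.5  # sample mean divided by its SE, 1/sqrt(n)
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


rate = type_i_error_rate()
print(rate)
```

The observed rejection rate should land near alpha. Lowering alpha reduces Type I errors but, for a fixed sample size, makes real effects harder to detect, which is the trade-off described above.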

Frequentist versus Bayesian statistics

Traditionally, frequentist statistics emphasizes null hypothesis significance testing and always starts with the assumption of a true null hypothesis.

However, Bayesian statistics has grown in popularity as an alternative approach in the last few decades. In this approach, you use previous research to continually update your hypotheses based on your expectations and observations.

Bayes factor compares the relative strength of evidence for the null versus the alternative hypothesis rather than making a conclusion about rejecting the null hypothesis or not.
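In the simplest case, where both hypotheses fix a single parameter value, the Bayes factor reduces to a ratio of likelihoods. The sketch below uses a made-up binomial example (7 successes in 10 trials, comparing a success probability of 0.7 against 0.5). Realistic analyses usually compare hypotheses that cover a range of values, which requires averaging the likelihood over a prior distribution and is not shown here.

```python
from math import comb


def binomial_likelihood(successes, trials, p):
    """Probability of the observed number of successes for success probability p."""
    return comb(trials, successes) * p ** successes * (1 - p) ** (trials - successes)


def bayes_factor(successes, trials, p_alt, p_null):
    """Evidence for the alternative relative to the null (two point hypotheses)."""
    return (binomial_likelihood(successes, trials, p_alt)
            / binomial_likelihood(successes, trials, p_null))


# Hypothetical data: 7 successes in 10 trials.
bf_10 = bayes_factor(7, 10, p_alt=0.7, p_null=0.5)
print(round(bf_10, 2))
```

A value above 1 means the data are more probable under the alternative than under the null; a value below 1 favours the null.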

Other interesting articles

If you want to know more about statistics, methodology, or research bias, make sure to check out some of our other articles with explanations and examples.

Statistics

- Student’s t-distribution

- Normal distribution

- Null and Alternative Hypotheses

- Chi square tests

- Confidence interval

Methodology

- Cluster sampling

- Stratified sampling

- Data cleansing

- Reproducibility vs Replicability

- Peer review

- Likert scale

Research bias

- Implicit bias

- Framing effect

- Cognitive bias

- Placebo effect

- Hawthorne effect

- Hostile attribution bias

- Affect heuristic

Statistical Analysis

Look around you. Statistics are everywhere.

The field of statistics touches our lives in many ways. From the daily routines in our homes to the business of making the greatest cities run, the effects of statistics are everywhere.

Statistical Analysis Defined

What is statistical analysis? It’s the science of collecting, exploring and presenting large amounts of data to discover underlying patterns and trends. Statistics are applied every day – in research, industry and government – to become more scientific about decisions that need to be made. For example:

- Manufacturers use statistics to weave quality into beautiful fabrics, to bring lift to the airline industry and to help guitarists make beautiful music.

- Researchers keep children healthy by using statistics to analyze data from the production of viral vaccines, which ensures consistency and safety.

- Communication companies use statistics to optimize network resources, improve service and reduce customer churn by gaining greater insight into subscriber requirements.

- Government agencies around the world rely on statistics for a clear understanding of their countries, their businesses and their people.

Look around you. From the tube of toothpaste in your bathroom to the planes flying overhead, you see hundreds of products and processes every day that have been improved through the use of statistics.

Statistics is so unique because it can go from health outcomes research to marketing analysis to the longevity of a light bulb. It’s a fun field because you really can do so many different things with it.

Besa Smith, President and Senior Scientist, Analydata

Statistical Computing

Traditional methods for statistical analysis, from sampling data to interpreting results, have been used by scientists for centuries. But today’s data volumes make statistics ever more valuable and powerful. Affordable storage, powerful computers and advanced algorithms have all led to an increased use of computational statistics.

Whether you are working with large data volumes or running multiple permutations of your calculations, statistical computing has become essential for today’s statistician. Popular statistical computing practices include:

- Statistical programming – From traditional analysis of variance and linear regression to exact methods and statistical visualization techniques, statistical programming is essential for making data-based decisions in every field.

- Econometrics – Modeling, forecasting and simulating business processes for improved strategic and tactical planning. This method applies statistics to economics to forecast future trends.

- Operations research – Identify the actions that will produce the best results – based on many possible options and outcomes. Scheduling, simulation, and related modeling processes are used to optimize business processes and management challenges.

- Matrix programming – Powerful computer techniques for implementing your own statistical methods and exploratory data analysis using row operation algorithms.

- Statistical quality improvement – A mathematical approach to reviewing the quality and safety characteristics for all aspects of production.
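To make the statistical programming item above more concrete, here is a minimal sketch of a simple linear regression fitted by ordinary least squares, using only Python's standard library. The function name `fit_line` and the data are hypothetical and chosen purely for illustration; this is not how any particular SAS procedure is implemented.

```python
from statistics import fmean


def fit_line(x, y):
    """Return (slope, intercept) of the least-squares line y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    x_bar, y_bar = fmean(x), fmean(y)
    # Sum of squared deviations of x, and sum of cross-deviations of x and y.
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical data: hours of machine use vs. units produced.
hours = [1, 2, 3, 4, 5]
units = [3, 5, 7, 9, 11]
slope, intercept = fit_line(hours, units)
print(f"slope={slope:.2f}, intercept={intercept:.2f}")  # slope=2.00, intercept=1.00
```

Because the made-up data lie exactly on the line y = 2x + 1, the fit recovers that slope and intercept. With real data, the fitted line would only approximate the points.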

Careers in Statistical Analysis

With everyone from The New York Times to Google’s Chief Economist Hal Varian proclaiming statistics to be the latest hot career field, who are we to argue? But why is there so much talk about careers in statistical analysis and data science? It could be the shortage of trained analytical thinkers. Or it could be the demand for managing the latest big data strains. Or, maybe it’s the excitement of applying mathematical concepts to make a difference in the world.

If you talk to statisticians about what first interested them in statistical analysis, you’ll hear a lot of stories about collecting baseball cards as a child. Or applying statistics to win more games of Axis and Allies. It is often these early passions that lead statisticians into the field. As adults, those passions can carry over into the workforce as a love of analysis and reasoning, where their passions are applied to everything from the influence of friends on purchase decisions to the study of endangered species around the world.

Introduction to Statistical Analysis: A Beginner’s Guide

Statistical analysis is a crucial component of research work across various disciplines, helping researchers derive meaningful insights from data. Whether you’re conducting scientific studies, social research, or data-driven investigations, having a solid understanding of statistical analysis is essential. In this beginner’s guide, we will explore the fundamental concepts and techniques of statistical analysis specifically tailored for research work, providing you with a strong foundation to enhance the quality and credibility of your research findings.

1. Importance of Statistical Analysis in Research:

Research aims to uncover knowledge and make informed conclusions. Statistical analysis plays a pivotal role in achieving this by providing tools and methods to analyze and interpret data accurately. It helps researchers identify patterns, test hypotheses, draw inferences, and quantify the strength of relationships between variables. Understanding the significance of statistical analysis empowers researchers to make evidence-based decisions.

2. Data Collection and Organization:

Before diving into statistical analysis, researchers must collect and organize their data effectively. We will discuss the importance of proper sampling techniques, data quality assurance, and data preprocessing. Additionally, we will explore methods to handle missing data and outliers, ensuring that your dataset is reliable and suitable for analysis.
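As a rough illustration of handling missing data and outliers, the sketch below drops missing entries and then flags outliers with the common 1.5 × IQR rule. It is only one of several reasonable approaches. The helper names `drop_missing` and `iqr_outliers`, the use of `None` to mark a missing value, and the measurements are all assumptions made for this example.

```python
from statistics import quantiles


def drop_missing(values):
    """Remove entries recorded as None (one simple way to handle missing data)."""
    return [v for v in values if v is not None]


def iqr_outliers(values, k=1.5):
    """Return the values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)  # quartiles (default 'exclusive' method)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Hypothetical measurements with two missing entries and one suspicious value.
raw = [12.1, 11.8, None, 12.4, 12.0, 30.5, 11.9, None, 12.2]
clean = drop_missing(raw)
outliers = iqr_outliers(clean)
print(len(clean), outliers)  # 7 [30.5]
```

Whether a flagged value should be removed, corrected, or kept is a research decision. The rule only identifies candidates for a closer look.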

3. Exploratory Data Analysis (EDA):

Exploratory Data Analysis is a preliminary step that involves visually exploring and summarizing the main characteristics of the data. We will cover techniques such as data visualization, descriptive statistics, and data transformations to gain insights into the distribution, central tendencies, and variability of the variables in your dataset. EDA helps researchers understand the underlying structure of the data and identify potential relationships for further investigation.
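The descriptive-statistics part of EDA can be sketched in a few lines with Python's built-in `statistics` module. The function name `describe` and the scores are hypothetical. A real analysis would usually add plots as well.

```python
from statistics import mean, median, stdev


def describe(values):
    """Summarize central tendency and variability of a numeric sample."""
    return {
        "n": len(values),
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),  # sample standard deviation (n - 1 denominator)
        "min": min(values),
        "max": max(values),
    }


# Hypothetical test scores.
scores = [2, 4, 4, 4, 5, 5, 7, 9]
summary = describe(scores)
for name, value in summary.items():
    print(f"{name}: {value}")
```

Here the mean (5) sits slightly above the median (4.5), a first hint that the larger scores pull the distribution to the right.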

4. Statistical Inference and Hypothesis Testing:

Statistical inference allows researchers to make generalizations about a population based on a sample. We will delve into hypothesis testing, covering concepts such as null and alternative hypotheses, p-values, and significance levels. By understanding these concepts, you will be able to test your research hypotheses and determine if the observed results are statistically significant.
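To show how a p-value arises, here is a minimal sketch of an exact permutation test for a difference in means between two small groups. The permutation test is chosen here only because it needs nothing beyond the standard library; the guide does not name it, and many studies would use a t test instead. The function name `permutation_p_value` and the data are hypothetical.

```python
from itertools import combinations
from statistics import fmean


def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test for a difference in group means.

    Null hypothesis: the group labels are exchangeable (no difference).
    The p-value is the share of all possible relabelings whose absolute
    mean difference is at least as large as the observed one.
    """
    pooled = list(group_a) + list(group_b)
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(fmean(group_a) - fmean(group_b))
    total = sum(pooled)
    extreme = 0
    n_splits = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        n_splits += 1
        if diff >= observed - 1e-12:  # small tolerance for float rounding
            extreme += 1
    return extreme / n_splits


# Hypothetical outcomes for a control group and a treatment group.
control = [1, 2, 3, 4]
treatment = [5, 6, 7, 8]
p = permutation_p_value(control, treatment)
alpha = 0.05  # significance level chosen before looking at the data
print(f"p = {p:.4f}; reject null at alpha={alpha}: {p < alpha}")
```

Only 2 of the 70 possible ways to split these eight values into two groups of four give a difference as large as the observed one, so p = 2/70 ≈ 0.029, and the null hypothesis is rejected at the 0.05 level.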

5. Parametric and Non-parametric Tests: