
Unspoken science: exploring the significance of body language in science and academia


Mansi Patil, Vishal Patil, Unisha Katre, Unspoken science: exploring the significance of body language in science and academia, European Heart Journal , Volume 45, Issue 4, 21 January 2024, Pages 250–252, https://doi.org/10.1093/eurheartj/ehad598


Science and academia are domains deeply rooted in the pursuit of knowledge and the exchange of ideas, and they rely heavily on effective communication to share knowledge and foster collaboration. Scientific presentations, for example, serve as a platform for researchers to share their work and engage with their peers. While the focus is often on the content of research papers, lectures, and presentations, another form of communication plays a significant role in these fields: body language. Non-verbal cues, such as facial expressions, gestures, posture, and eye contact, can convey a wealth of information, often subtly influencing interpersonal dynamics and the perception of scientific work. In this article, we explore the unspoken science of body language and its significance in science and academia, highlighting its impact on presentations, interactions, interviews, and collaborations. We also discuss cultural considerations and their implications for cross-cultural communication. By understanding the unspoken science of body language, researchers and academics can enhance their communication skills and promote a more inclusive and productive scientific community.

Communication is a multi-faceted process, and words are only one aspect of it. Research suggests that non-verbal communication constitutes a substantial portion of human interaction, often conveying information that words alone cannot. Body language has a direct impact on how people perceive and interpret scientific ideas and findings. 1 For example, a presenter who maintains confident eye contact, uses purposeful gestures, and exhibits an open posture is likely to be seen as more credible and persuasive compared with someone who fidgets, avoids eye contact, and displays closed-off body language ( Figure 1 ).

Figure 1. Types of non-verbal communication. 2 Non-verbal communication comprises haptics, gestures, proxemics, facial expressions, paralinguistics, body language, appearance, eye contact, and artefacts.

In academia, body language plays a crucial role in various contexts. During lectures, professors who use engaging body language, such as animated gestures and expressive facial expressions, can captivate their students and enhance the learning experience. Similarly, students who exhibit attentive and respectful body language, such as maintaining eye contact and nodding, signal their interest and engagement in the subject matter. 3

Body language also influences interactions between colleagues and supervisors. For instance, in a laboratory setting, researchers who display confident and open body language are more likely to be perceived as competent and reliable by their peers. Conversely, individuals who exhibit closed-off or defensive body language may inadvertently create an environment that inhibits collaboration and knowledge sharing. The impact of haptics in research collaboration and networking lies in its potential to enhance interpersonal connections and convey emotions, thereby fostering a deeper sense of empathy and engagement among participants.

Interviews and evaluations are critical moments in academic and scientific careers. Body language can significantly impact the outcomes of these processes. Candidates who display confident body language, including good posture, firm handshakes, and appropriate gestures, are more likely to make positive impressions on interviewers or evaluators. Conversely, individuals who exhibit nervousness or closed-off body language may unwittingly convey a lack of confidence or competence, even if their qualifications are strong. Recognizing the power of body language in these situations allows individuals to present themselves more effectively and positively.

Non-verbal cues play a pivotal role during interviews and conferences, where researchers and academics showcase their work. When attending conferences or presenting research, scientists must be aware of their body language to effectively convey their expertise and credibility. Confident body language can inspire confidence in others, making it easier to establish professional connections, garner support for research projects, and secure collaborations.

Similarly, body language can significantly affect the outcome of job interviews. An interviewee's facial non-verbal cues can strongly influence their chances of being hired. Interviewers attend to the face as a whole, and to the eyes and mouth in particular, as they judge the applicant's ability to work effectively. The more genuinely an applicant smiles, and the more closely the non-verbal message of their eyes matches that of their mouth, the more likely they are to be hired. Because a first impression can form within milliseconds, it is crucial for an applicant to pass that first test, which sets the course for the rest of the interview. 4

While body language is a universal form of communication, its interpretation can vary across cultures. Different cultures have distinct norms and expectations regarding body language, and what is seen as confident in one culture may be interpreted differently in another. 5 Awareness of these cultural nuances is crucial for effective cross-cultural communication and understanding. Scientists and academics engaged in international collaborations or interactions should familiarize themselves with cultural differences to avoid misunderstandings and promote respectful and inclusive communication.

Collaboration lies at the heart of scientific progress and academic success. Body language plays a significant role in building trust and establishing effective collaboration among researchers and academics. Open and inviting body language, along with active listening skills, can foster an environment where ideas can be freely exchanged, leading to innovative breakthroughs. In research collaboration and networking, proxemics can significantly affect the level of trust and rapport between researchers. Respecting each other’s personal space and maintaining appropriate distances during interactions can foster a more positive and productive working relationship, leading to better communication and idea exchange ( Figure 2 ). Furthermore, being aware of cultural variations in proxemics can help researchers navigate diverse networking contexts, promoting cross-cultural understanding and enabling more fruitful international collaborations.

Figure 2. Overcoming barriers to communication. Factors that help overcome communication barriers include using culturally appropriate language, being observant, assuming positive intentions, avoiding being judgemental, identifying and controlling bias, slowing down responses, emphasizing relationships, seeking help from interpreters, being eager to learn and adapt, and being empathetic.

On the other hand, negative body language, such as crossed arms, lack of eye contact, or dismissive gestures, can signal disinterest or disagreement, hindering collaboration and stifling the flow of ideas. Recognizing and addressing such non-verbal cues can help create a more inclusive and productive scientific community.

Effective communication is paramount in science and academia, where the exchange of ideas and knowledge fuels progress. While much attention is given to verbal communication, the significance of non-verbal cues, specifically body language, cannot be overlooked, and it is crucial not to send conflicting verbal and non-verbal cues. Body language encompasses facial expressions, gestures, posture, eye contact, and other non-verbal behaviours that convey information beyond words.

Disclosure of Interest

All authors declare no conflicts of interest.

1. Baugh AD, Vanderbilt AA, Baugh RF. Communication training is inadequate: the role of deception, non-verbal communication, and cultural proficiency. Med Educ Online 2020;25:1820228. https://doi.org/10.1080/10872981.2020.1820228

2. Aralia. 8 Nonverbal Tips for Public Speaking. Aralia Education Technology. https://www.aralia.com/helpful-information/nonverbal-tips-public-speaking/ (22 July 2023, date last accessed)

3. Danesi M. Nonverbal communication. In: Understanding Nonverbal Communication. Bloomsbury Academic, 2022; 121–162. https://doi.org/10.5040/9781350152670.ch-001

4. Cortez R, Marshall D, Yang C, Luong L. First impressions, cultural assimilation, and hireability in job interviews: examining body language and facial expressions' impact on employer's perceptions of applicants. Concordia J Commun Res 2017;4. https://doi.org/10.54416/dgjn3336

5. Pozzer-Ardenghi L. Nonverbal aspects of communication and interaction and their role in teaching and learning science. In: The World of Science Education. Netherlands: Brill, 2009; 259–271. https://doi.org/10.1163/9789087907471_019


- Online ISSN 1522-9645

- Print ISSN 0195-668X

- Copyright © 2024 European Society of Cardiology


Body language in the brain: constructing meaning from expressive movement (original research article)

- 1 Department of Psychiatry, University of British Columbia, Vancouver, BC, Canada

- 2 Mental Health and Integrated Neurobehavioral Development Research Core, Child and Family Research Institute, Vancouver, BC, Canada

- 3 Psychiatric Epidemiology and Evaluation Unit, Saint John of God Clinical Research Center, Brescia, Italy

- 4 Department of Psychological and Brain Sciences, University of California, Santa Barbara, CA, USA

This fMRI study investigated neural systems that interpret body language, the meaningful emotive expressions conveyed by body movement. Participants watched videos of performers engaged in modern dance or pantomime that conveyed specific themes such as hope, agony, lust, or exhaustion. We tested whether the meaning of an affectively laden performance was decoded in localized brain substrates as a distinct property of action separable from other superficial features, such as choreography, kinematics, performer, and low-level visual stimuli. A repetition suppression (RS) procedure was used to identify brain regions that decoded the meaningful affective state of a performer, as evidenced by decreased activity when emotive themes were repeated in successive performances. Because the theme was the only feature repeated across video clips that were otherwise entirely different, the occurrence of RS identified brain substrates that differentially coded the specific meaning of expressive performances. RS was observed bilaterally, extending anteriorly along middle and superior temporal gyri into temporal pole, medially into insula, rostrally into inferior orbitofrontal cortex, and caudally into hippocampus and amygdala. Behavioral data on a separate task indicated that interpreting themes from modern dance was more difficult than interpreting pantomime, a result that was also reflected in the fMRI data. There was greater RS in left hemisphere, suggesting that the more abstract metaphors used to express themes in dance compared to pantomime posed a greater challenge to brain substrates directly involved in decoding those themes. We propose that the meaning-sensitive temporal-orbitofrontal regions observed here comprise a superordinate functional module of a known hierarchical action observation network (AON), which is critical to the construction of meaning from expressive movement. The findings are discussed with respect to a predictive coding model of action understanding.

Introduction

Body language is a powerful form of non-verbal communication providing important clues about the intentions, emotions, and motivations of others. In the course of our everyday lives, we pick up information about what people are thinking and feeling through their body posture, mannerisms, gestures, and the prosody of their movements. This intuitive social awareness is an impressive feat of neural integration; the cumulative result of activity in distributed brain systems specialized for coding a wide range of social information. Reading body language is more than just a matter of perception. It entails not only recognizing and coding socially relevant visual information, but also ascribing meaning to those representations.

We know a great deal about brain systems involved in the perception of facial expressions, eye movements, body movement, hand gestures, and goal-directed actions, as well as those mediating affective, decision, and motor responses to social stimuli. What is still missing is an understanding of how the brain “reads” body language. Beyond the decoding of body motion, what are the brain substrates directly involved in extracting meaning from affectively laden body expressions? The brain has several functionally specialized structures and systems for processing socially relevant perceptual information. A subcortical pulvinar-superior colliculus-amygdala-striatal circuit mediates reflex-like perception of emotion from body posture, particularly fear, and activates commensurate reflexive motor responses ( Dean et al., 1989 ; Cardinal et al., 2002 ; Sah et al., 2003 ; de Gelder and Hadjikhani, 2006 ). A region of the occipital cortex known as the extrastriate body area (EBA) is sensitive to bodily form ( Bonda et al., 1996 ; Hadjikhani and de Gelder, 2003 ; Astafiev et al., 2004 ; Peelen and Downing, 2005 ; Urgesi et al., 2006 ). The fusiform gyrus of the ventral occipital and temporal lobes has a critical role in processing faces and facial expressions ( McCarthy et al., 1997 ; Hoffman and Haxby, 2000 ; Haxby et al., 2002 ). Posterior superior temporal sulcus is involved in perceiving the motion of biological forms in particular ( Allison et al., 2000 ; Pelphrey et al., 2005 ). Somatosensory, ventromedial prefrontal, premotor, and insular cortex contribute to one's own embodied awareness of perceived emotional states ( Adolphs et al., 2000 ; Damasio et al., 2000 ).
Visuomotor processing in a functional brain network known as the action observation network (AON) codes observed action in distinct functional modules that together link the perception of action and emotional body language with ongoing behavioral goals and the formation of adaptive reflexes, decisions, and motor behaviors ( Grafton et al., 1996 ; Rizzolatti et al., 1996b , 2001 ; Hari et al., 1998 ; Fadiga et al., 2000 ; Buccino et al., 2001 ; Grézes et al., 2001 ; Grèzes et al., 2001 ; Ferrari et al., 2003 ; Zentgraf et al., 2005 ; Bertenthal et al., 2006 ; de Gelder, 2006 ; Frey and Gerry, 2006 ; Ulloa and Pineda, 2007 ). Given all we know about how bodies, faces, emotions, and actions are perceived, one might expect a clear consensus on how meaning is derived from these percepts. Perhaps surprisingly, while we know these systems are crucial to integrating perceptual information with affective and motor responses, how the brain deciphers meaning based on body movement remains unknown. The focus of this investigation was to identify brain substrates that decode meaning from body movement, as evidenced by meaning-specific neural processing that differentiates body movements conveying distinct expressions.

To identify brain substrates sensitive to the meaningful emotive state of an actor conveyed through body movement, we used repetition suppression (RS) fMRI. This technique identifies regions of the brain that code for a particular stimulus dimension (e.g., shape) by revealing substrates that have different patterns of neural activity in response to different attributes of that dimension (e.g., circle, square, triangle; Grill-Spector et al., 2006 ). When a particular attribute is repeated, synaptic activity and the associated blood oxygen level-dependent (BOLD) response decrease in voxels containing neuronal assemblies that code that attribute ( Wiggs and Martin, 1998 ; Grill-Spector and Malach, 2001 ). We have used this method previously to show that various properties of an action such as movement kinematics, object goal, outcome, and context-appropriateness of action mechanics are uniquely coded by different neural substrates within a parietal-frontal action observation network (AON; Hamilton and Grafton, 2006 , 2007 , 2008 ; Ortigue et al., 2010 ). Here, we applied RS-fMRI to identify brain areas in which activity decreased when the meaningful emotive theme of an expressive performance was repeated between trials. The results demonstrate a novel coding function of the AON: decoding meaning from body language.

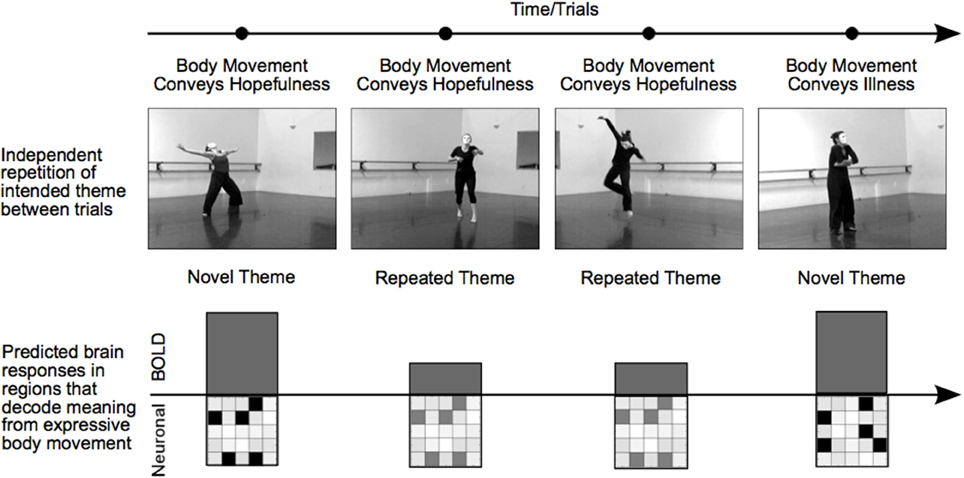

Working with a group of professional dancers, we produced a set of video clips in which performers intentionally expressed a particular meaningful theme either through dance or pantomime. Typical themes consisted of expressions of hope, agony, lust, or exhaustion. The experimental manipulation of theme was studied independently of choreography, performer, or camera viewpoint, which allowed us to repeat the meaning of a movement sequence from one trial to another while varying physical movement characteristics and perceptual features. With this RS-fMRI design, a decrease in BOLD activity for repeated relative to novel themes (RS) could not be attributed to specific movements, characteristics of the performer, “low-level” visual features, or the general process of attending to body expressions. Rather, RS revealed brain areas in which specific voxel-wise neural population codes differentiated meaningful expressions based on body movement (Figure 1 ).

Figure 1. Manipulating trial sequence to induce RS in brain regions that decode body language. The order of video presentation was controlled such that themes depicted in consecutive videos were either novel or repeated. Each consecutive video clip was unique; repeated themes were always portrayed by different dancers, different camera angles, or both. Thus, RS for repeated themes was not the result of low-level visual features, but rather identified brain areas that were sensitive to the specific meaningful theme conveyed by a performance. In brain regions showing RS, a particular affective theme (hope, for example) will evoke a particular pattern of neural activity. A novel theme on the subsequent trial (illness, for instance) will trigger a different but equally strong pattern of neural activity in distinct cell assemblies, resulting in an equivalent BOLD response. In contrast, a repetition of the hope theme on the subsequent trial will trigger activity in the same neural assemblies as the first trial, but to a lesser extent, resulting in a reduced BOLD response for repeated themes. In this way, RS reveals regions that support distinct patterns of neural activity in response to different themes.
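The logic of the figure can be illustrated with a deliberately simple toy model. This is a hypothetical sketch, not a model used in the study: each theme drives its own block of simulated units, units driven on the previous trial respond with a reduced gain, and the summed response stands in for the BOLD signal. The numbers (eight themes, 50 units per theme, 30% adaptation) and the function name `simulate_bold` are illustrative assumptions.

```python
import numpy as np


def simulate_bold(theme_sequence, n_themes=8, units_per_theme=50, adaptation=0.3):
    """Toy population code: theme k drives its own block of units with gain 1;
    units that were driven on the previous trial respond with gain
    (1 - adaptation). The summed response per trial stands in for BOLD."""
    n_units = n_themes * units_per_theme
    previous = np.zeros(n_units)
    bold = []
    for theme in theme_sequence:
        drive = np.zeros(n_units)
        drive[theme * units_per_theme:(theme + 1) * units_per_theme] = 1.0
        # Adapted (previously active) units get reduced gain; others respond fully.
        gain = np.where(previous > 0, 1.0 - adaptation, 1.0)
        bold.append(float(np.sum(drive * gain)))
        previous = drive
    return bold


print(simulate_bold([0, 1, 1, 2]))
```

In this toy sequence, the two novel themes and the final novel theme each give a summed response of about 50, whereas the repeated theme on the third trial gives about 35: the reduction appears only because the same assembly was engaged twice in a row, which is the signature that RS-fMRI looks for.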

Participants were scanned using fMRI while viewing a series of 10-s video clips depicting modern dance or pantomime performances that conveyed specific meaningful themes. Because each performer had a unique artistic style, the same theme could be portrayed using completely different physical movements. This allowed the repetition of meaning while all other aspects of the physical stimuli varied from trial to trial. We predicted that specific regions of the AON engaged by observing expressive whole body movement would show suppressed BOLD activation for repeated relative to novel themes (RS). Brain regions showing RS would reveal brain substrates directly involved in decoding meaning based on body movement.

The dance and pantomime performances used here conveyed expressive themes through movement, but did not rely on typified, canonical facial expressions to invoke particular affective responses. Rather, meaningful themes were expressed with unique artistic choreography while facial expressions were concealed with a classic white mime's mask. The result was a subtle stimulus set that promoted thoughtful, interpretive viewing that could not elicit reflex-like responses based on prototypical facial expressions. In so doing, the present study shifted the focus away from automatic affective resonance toward a more deliberate ascertainment of meaning from movement.

While dance and pantomime both expressed meaningful emotive themes, the quality of movement and the types of gestures used were different. Pantomime sequences used fairly mundane gestures and natural, everyday movements. Dance sequences used stylized gestures and interpretive, prosodic movements. The critical distinction between these two types of expressive movement is in the degree of abstraction in the metaphors that link movement with meaning (see Morris, 2002 for a detailed discussion of movement metaphors). Pantomime by definition uses gesture to mimic everyday objects, situations, and behavior, and thus relies on relatively concrete movement metaphors. In contrast, dance relies on more abstract movement metaphors that draw on indirect associations between qualities of movement and the emotions and thoughts it evokes in a viewer. We predicted that since dance expresses meaning more abstractly than pantomime, dance sequences would be more difficult to interpret than pantomimed sequences, and would likewise pose a greater challenge to brain processes involved in decoding meaning from movement. Thus, we predicted greater involvement of thematic decoding areas for danced than for pantomimed movement expressions. Greater RS for dance than pantomime could result from dance triggering greater activity upon a first presentation, a greater reduction in activity with a repeated presentation, or some combination of both. Given our prediction that greater RS for dance would be linked to interpretive difficulty, we hypothesized it would be manifested as an increased processing demand resulting in greater initial BOLD activity for novel danced themes.

Participants

Forty-six neurologically healthy, right-handed individuals (30 women, mean age = 24.22 years, range = 19–55 years) provided written informed consent and were paid for their participation. Performers also agreed in writing to allow the use of their images and videos for scientific purposes. The protocol was approved by the Office of Research Human Subjects Committee at the University of California Santa Barbara (UCSB).

Eight themes were depicted, including four danced themes (happy, hopeful, fearful, and in agony) and four pantomimed themes (in love, relaxed, ill, and exhausted). Performance sequences were choreographed and performed by four professional dancers recruited from the SonneBlauma Danscz Theatre Company (Santa Barbara, California; now called ArtBark International, http://www.artbark.org/ ). Performers wore expressionless white masks so that body language was conveyed through gestural whole-body movement rather than facial expressions. To express each theme, performers adopted an affective stance and improvised a short sequence of modern dance choreography (two themes per performer) or pantomime gestures (two themes per performer). Each of the eight themes was performed by two different dancers and recorded from two different camera angles, resulting in four distinct videos representing each theme (32 distinct videos in total; clips available in Supplementary Materials online).
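The stimulus counts above can be checked by enumerating the design. The text does not say which performer portrayed which theme, so the sketch below assumes a hypothetical rotation (theme i goes to performers i and i+1) that satisfies the stated constraints: two performers per theme, and two danced plus two pantomimed themes per performer. The performer and camera-angle labels are placeholders.

```python
from itertools import product

# Theme lists as given in the text.
DANCE_THEMES = ["happy", "hopeful", "fearful", "in agony"]
PANTOMIME_THEMES = ["in love", "relaxed", "ill", "exhausted"]
PERFORMERS = ["P1", "P2", "P3", "P4"]   # hypothetical labels
CAMERA_ANGLES = ["angle_A", "angle_B"]  # hypothetical labels


def assign_performers(themes, performers):
    """Hypothetical rotation: theme i is performed by performers i and i+1 (mod n),
    so each theme gets two different performers and each performer gets two themes."""
    n = len(performers)
    return {t: (performers[i % n], performers[(i + 1) % n]) for i, t in enumerate(themes)}


def build_stimulus_set():
    """One entry per distinct video: movement type, theme, performer, camera angle."""
    videos = []
    for movement, themes in (("dance", DANCE_THEMES), ("pantomime", PANTOMIME_THEMES)):
        for theme, dancers in assign_performers(themes, PERFORMERS).items():
            for dancer, angle in product(dancers, CAMERA_ANGLES):
                videos.append({"movement": movement, "theme": theme,
                               "performer": dancer, "angle": angle})
    return videos


videos = build_stimulus_set()
print(len(videos))  # 32
```

The count follows directly from 8 themes x 2 performers x 2 camera angles; any other assignment meeting the same constraints would give the same totals.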

Behavioral Procedure

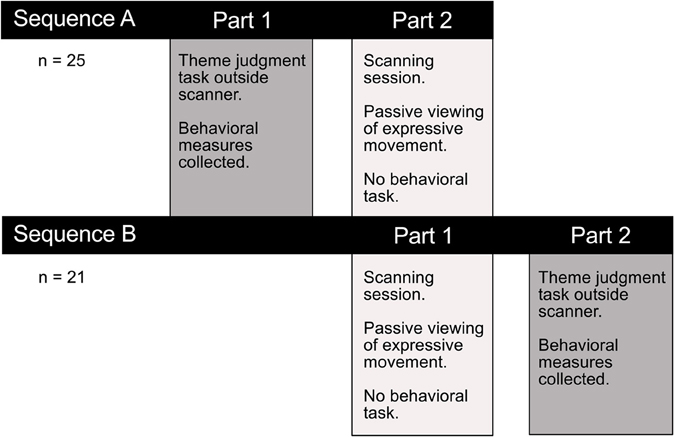

In a separate session outside the scanner either before or after fMRI data collection, an interpretation task measured observers' ability to discern the intended meaning of a performance (Figure 2 ). The interpretation task was carried out in a separate session to avoid confounding movement observation in the scanner with explicit decision-making and overt motor responses. Participants were asked to view each video clip and choose from a list of four options the theme that best corresponded with the movement sequence they had just watched. Responses were made by pressing one of four corresponding buttons on a keyboard. Two behavioral measures were collected to assess how well participants interpreted the intended meaning of expressive performances. Consistency scores reflected the proportion of observers' interpretations that matched the performer's intended expression. Response times indicated the time taken to make interpretive judgments. In order to encourage subjects to use their initial impressions and to avoid over-deliberating, the four response options were previewed briefly immediately prior to video presentation.
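The two behavioral measures can be computed per participant as sketched below. The trial record format (keys `movement`, `intended`, `chosen`, `rt_ms`) and the function name are assumptions made for illustration; the study's own analysis code is not described beyond the definitions above.

```python
from statistics import mean


def behavioral_summary(trials):
    """Summarize one participant's interpretation-task trials.

    Each trial is a dict with hypothetical keys 'movement' ('dance' or
    'pantomime'), 'intended', 'chosen', and 'rt_ms'. Consistency is the
    proportion of choices that match the intended theme."""
    summary = {}
    for movement in ("dance", "pantomime"):
        subset = [t for t in trials if t["movement"] == movement]
        if subset:
            summary[movement] = {
                "consistency": mean(1.0 if t["chosen"] == t["intended"] else 0.0
                                    for t in subset),
                "mean_rt_ms": mean(t["rt_ms"] for t in subset),
            }
    return summary
```

Splitting by movement type at this stage yields the per-participant dance and pantomime means that enter the within-subjects comparison.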

Figure 2. Experimental testing procedure . Participants completed a thematic interpretation task outside the scanner, either before or after the imaging session. Performance on this task allowed us to test whether there was a difference in how readily observers interpreted the intended meaning conveyed through dance or pantomime. Any performance differences on this explicit theme judgment task could help interpret the functional significance of observed differences in brain activity associated with passively viewing the two types of movement in the scanner.

For the interpretation task collected outside the scanner, videos were presented and responses collected on a Mac PowerBook G4 laptop programmed using the Psychtoolbox (v. 3.0.8) extension ( Brainard, 1997 ; Pelli and Brainard, 1997 ) for Mac OS X running under Matlab 7.5 R2007b (The MathWorks, Natick, MA). Each trial began with the visual presentation of a list of four theme options corresponding to four button press responses (“u,” “i,” “o,” or “p” keyboard buttons). This list remained on the screen for 3 s, the screen blanked for 750 ms, and then the movie played for 10 s. Following the presentation of the movie, the four response options were presented again, and remained on the screen until a response was made. Each unique video was presented twice, resulting in 64 trials total. Video order was randomized for each participant, and the response options for each trial included the intended theme and three randomly selected alternatives.
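The trial structure can be sketched in Python (the study itself used Psychtoolbox under Matlab). The sketch assumes the three alternatives are drawn from the other seven themes; the text says only that they were "randomly selected", so the pool could have been restricted differently. Video identifiers and the function name are hypothetical.

```python
import random

ALL_THEMES = ["happy", "hopeful", "fearful", "in agony",
              "in love", "relaxed", "ill", "exhausted"]
RESPONSE_KEYS = ["u", "i", "o", "p"]            # keys named in the text
OPTIONS_S, BLANK_S, MOVIE_S = 3.0, 0.75, 10.0   # timing from the text (s)


def make_interpretation_trials(theme_of, seed=None):
    """theme_of maps each video id to its intended theme.
    Every video is shown twice in random order; the alternatives are drawn
    from the other seven themes (an assumption, see lead-in)."""
    rng = random.Random(seed)
    order = [video for video in theme_of for _ in range(2)]
    rng.shuffle(order)
    trials = []
    for video in order:
        intended = theme_of[video]
        options = rng.sample([t for t in ALL_THEMES if t != intended], 3) + [intended]
        rng.shuffle(options)  # intended theme lands on a random key
        trials.append({"video": video, "intended": intended,
                       "keys": dict(zip(RESPONSE_KEYS, options))})
    return trials
```

With 32 videos, this yields 64 trials, each lasting 3 + 0.75 + 10 = 13.75 s before the response screen appears.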

Neuroimaging Procedure

fMRI data were collected with a Siemens 3.0 T Magnetom Tim Trio system using a 12-channel phased array head coil. Functional images were acquired with a T2* weighted single shot gradient echo, echo-planar sequence sensitive to Blood Oxygen Level Dependent (BOLD) contrast (TR = 2 s; TE = 30 ms; FA = 90°; FOV = 19.2 cm). Each volume consisted of 37 slices acquired parallel to the AC–PC plane (interleaved acquisition; 3 mm thick with 0.5 mm gap; 3 × 3 mm in-plane resolution; 64 × 64 matrix).
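The acquisition parameters are internally consistent, as a few lines of arithmetic show: a 19.2 cm field of view over a 64 x 64 matrix gives the stated 3 mm in-plane resolution. The slab extent below assumes the 0.5 mm gap separates each pair of adjacent slices, and the volume count uses the approximate 7.5 min run length given in the next paragraph, so it is an estimate only.

```python
FOV_MM = 192.0            # 19.2 cm field of view
MATRIX = 64               # 64 x 64 acquisition matrix
N_SLICES = 37
SLICE_MM, GAP_MM = 3.0, 0.5
TR_S = 2.0
RUN_MIN = 7.5             # "approximately", per the text

in_plane_mm = FOV_MM / MATRIX                                # in-plane voxel size
slab_mm = N_SLICES * SLICE_MM + (N_SLICES - 1) * GAP_MM      # 37 slices + 36 gaps
approx_volumes_per_run = round(RUN_MIN * 60 / TR_S)          # one volume per TR

print(in_plane_mm, slab_mm, approx_volumes_per_run)  # 3.0 129.0 225
```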

Each participant completed four functional scanning runs, each lasting approximately 7.5 min, while viewing danced or acted expressive movement sequences. While there were a total of eight themes in the stimulus set for the study, each scanning run depicted only two of those eight themes. Over the course of all four scanning runs, all eight themes were depicted. Trial sequences were arranged such that the theme of a movement sequence was either novel or repeated with respect to the previous trial. This allowed for the analysis of BOLD response RS for repeated vs. novel themes. Each run presented 24 video clips (3 presentations of 8 unique videos depicting 2 themes × 2 dancers × 2 camera angles). Novel and repeated themes were intermixed within each scanning run, with no more than three sequential repetitions of the same theme. Two scanning runs depicted dance and two runs depicted pantomime performances. The order of runs was randomized for each participant. The experiment was controlled using Presentation software (version 13.0, Neurobehavioral Systems Inc, CA). Participants were instructed to focus on the movement performance while viewing the videos. No specific information about the themes portrayed or types of movement used was provided, and no motor responses were required.
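One way to generate a run that meets these constraints is rejection sampling, sketched below. This is not the authors' Presentation script. It assumes that "no more than three sequential repetitions" means a theme never occupies more than three consecutive trials (the `max_same_theme` parameter can be changed if the intended reading differs). It also enforces that no clip follows itself, so a repeated theme always comes from a different dancer, camera angle, or both, as described for Figure 1. Dancer and angle labels are placeholders.

```python
import random
from itertools import product


def make_run(themes, dancers=("d1", "d2"), angles=("a1", "a2"),
             n_presentations=3, max_same_theme=3, seed=None, max_tries=10_000):
    """Rejection-sample one run: every video (theme x dancer x angle) appears
    `n_presentations` times, no video follows itself, and no theme occupies more
    than `max_same_theme` consecutive trials. Returns (video, label) pairs where
    label is 'novel' or 'repeated' relative to the previous trial ('first' for trial 1)."""
    rng = random.Random(seed)
    videos = list(product(themes, dancers, angles)) * n_presentations
    for _ in range(max_tries):
        rng.shuffle(videos)
        if any(prev == cur for prev, cur in zip(videos, videos[1:])):
            continue  # identical clip twice in a row: reject this order
        streak, ok = 1, True
        for prev, cur in zip(videos, videos[1:]):
            streak = streak + 1 if cur[0] == prev[0] else 1
            if streak > max_same_theme:
                ok = False
                break
        if ok:
            labels = ["first"] + ["repeated" if cur[0] == prev[0] else "novel"
                                  for prev, cur in zip(videos, videos[1:])]
            return list(zip(videos, labels))
    raise RuntimeError("no valid order found; relax constraints or raise max_tries")


run = make_run(["hopeful", "fearful"], seed=1)
print(len(run), run[:3])
```

Rejection sampling keeps the sketch short and leaves every valid ordering equally likely. The cost is some wasted shuffles, which is negligible for 24 trials.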

For the behavioral data collected outside the scanner, mean consistency scores and mean response time (RT; ms) were computed for each participant. Consistency and RT were each submitted to an ANOVA with Movement Type (dance vs. pantomime) as a within-subjects factor using Stata/IC 10.0 for Macintosh.
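With a single within-subjects factor of two levels, the repeated-measures ANOVA is equivalent to a paired t test, with F(1, n-1) equal to the squared paired t statistic. The sketch below computes both from per-participant means as a transparent stand-in for the Stata analysis. Function names are our own, and any input values are illustrative rather than study data.

```python
import math


def paired_t(a, b):
    """Paired t statistic for condition a minus condition b."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    md = sum(d) / n
    sd = math.sqrt(sum((x - md) ** 2 for x in d) / (n - 1))
    return md / (sd / math.sqrt(n))


def rm_anova_two_levels(a, b):
    """One-way repeated-measures ANOVA with one two-level within-subjects factor.

    a, b: per-participant means for the two levels (e.g. dance, pantomime).
    Returns (F, df_effect, df_error)."""
    a, b = list(a), list(b)
    n = len(a)
    if n != len(b) or n < 2:
        raise ValueError("need paired scores from at least two participants")
    grand = (sum(a) + sum(b)) / (2 * n)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    ss_cond = n * ((mean_a - grand) ** 2 + (mean_b - grand) ** 2)
    ss_subj = 2 * sum(((x + y) / 2 - grand) ** 2 for x, y in zip(a, b))
    ss_total = sum((x - grand) ** 2 for x in a + b)
    ss_error = ss_total - ss_cond - ss_subj  # condition x subject residual
    df_effect, df_error = 1, n - 1
    return (ss_cond / df_effect) / (ss_error / df_error), df_effect, df_error
```

The subject sum of squares is removed before forming the error term, which is what distinguishes the within-subjects analysis from a between-groups one.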

Statistical analysis of the neuroimaging data was organized to identify: (1) brain areas responsive to the observation of expressive movement sequences, defined by BOLD activity relative to an implicit baseline, (2) brain areas directly involved in decoding meaning from movement, defined by RS for repeated themes, (3) brain areas in which processes for decoding thematic meaning varied as a function of abstractness, defined by greater RS for danced than pantomimed themes, and (4) the specific pattern of BOLD activity differences for novel and repeated themes as a function of danced or pantomimed movements in regions showing greater RS for dance.
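Aims (1) to (3) correspond to linear contrasts over the condition-wise parameter estimates. The sketch assumes a hypothetical column layout with a (novel, repeated) regressor pair per run, where each run is either dance or pantomime. This matches the description of run-wise estimates in the next paragraph, but the exact SPM design matrix is not given. Aim (4) is a readout of the four condition estimates within regions identified by contrast (3), so it needs no separate contrast.

```python
import numpy as np


def build_columns(run_movements):
    """Hypothetical column order: (novel, repeated) regressor pair for each run."""
    return [(run, movement, novelty)
            for run, movement in enumerate(run_movements)
            for novelty in ("novel", "repeated")]


def contrast(columns, weight):
    """Apply a weight function (movement, novelty) -> float to every column."""
    return np.array([weight(mv, nov) for _, mv, nov in columns], dtype=float)


def sign_rs(novelty):
    return 1.0 if novelty == "novel" else -1.0


def sign_dance(movement):
    return 1.0 if movement == "dance" else -1.0


cols = build_columns(["dance", "pantomime", "pantomime", "dance"])  # example run order
c_observation = contrast(cols, lambda mv, nov: 1.0 / len(cols))      # (1) vs. implicit baseline
c_rs = contrast(cols, lambda mv, nov: sign_rs(nov))                   # (2) novel > repeated
c_rs_dance = contrast(cols, lambda mv, nov: sign_rs(nov) * sign_dance(mv))  # (3) RS: dance > pantomime
```

Contrasts (2) and (3) sum to zero, so they test differences between conditions, whereas contrast (1) tests the average response against the implicit baseline.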

The fMRI data were analyzed using Statistical Parametric Mapping software (SPM5, Wellcome Department of Imaging Neuroscience, London; www.fil.ion.ucl.ac.uk/spm ) implemented in Matlab 7.5 R2007b (The MathWorks, Natick, MA). Individual scans were realigned, slice-time corrected and spatially normalized to the Montreal Neurological Institute (MNI) template in SPM5 with a resampled resolution of 3 × 3 × 3 mm. A smoothing kernel of 8 mm was applied to the functional images. A general linear model was created for each participant using SPM5. Parameter estimates of event-related BOLD activity were computed for novel and repeated themes depicted by danced and pantomimed movements, separately for each scanning run, for each participant.
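SPM specifies its Gaussian smoothing kernel by the full width at half maximum (FWHM). Assuming the 8 mm kernel refers to FWHM, it corresponds to a standard deviation of about 3.4 mm, or roughly 1.13 voxels at the 3 mm resampled resolution. The helper below is illustrative and is not part of SPM.

```python
import math

FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # about 0.4247


def fwhm_mm_to_sigma_vox(fwhm_mm, voxel_mm):
    """Convert a Gaussian kernel's FWHM (mm) to its standard deviation in voxels."""
    return fwhm_mm * FWHM_TO_SIGMA / voxel_mm


print(round(fwhm_mm_to_sigma_vox(8.0, 3.0), 3))  # 1.132
```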

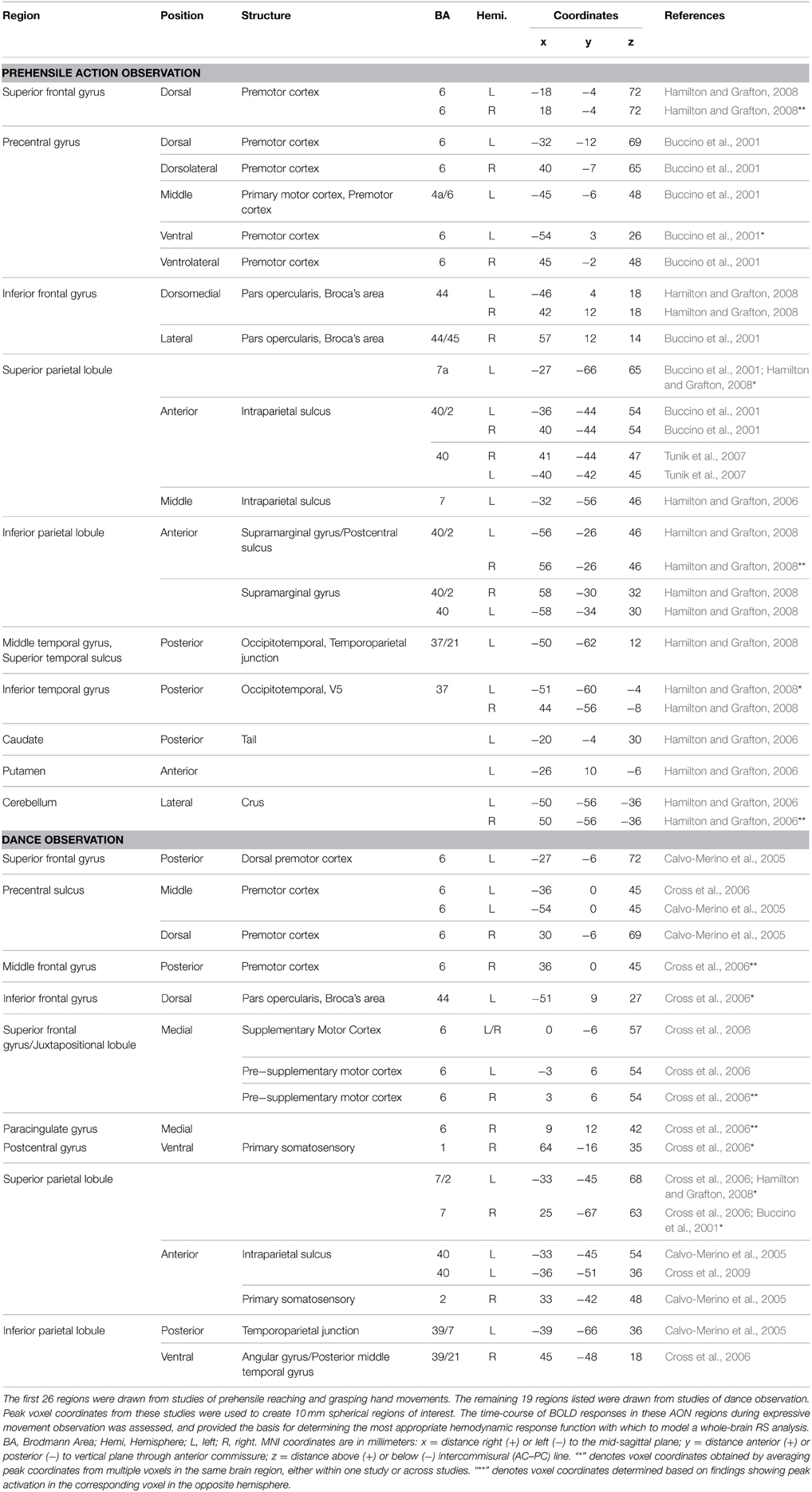

Because the intended theme of each movement sequence was not expressed at a discrete time point but rather throughout the duration of the 10 s video clip, the most appropriate hemodynamic response function (HRF) with which to model the BOLD response at the individual level was determined empirically prior to parameter estimation. Of interest was whether the shape of the BOLD response to these relatively long video clips differed from the canonical HRF typically implemented in SPM. The shape of the BOLD response was estimated for each participant by modeling a finite impulse response function (Ollinger et al., 2001). Each trial was represented by a sequence of 12 consecutive TRs, beginning at the onset of each video clip. Based on this deconvolution, a set of beta weights describing the shape of the response over a 24 s interval was obtained for both novel and repeated themes depicted by both danced and pantomimed movement sequences. To determine whether adjustments should be made to the canonical HRF implemented in SPM, the BOLD responses of a set of 45 brain regions within a known AON were evaluated (see Table 1 for a complete list). To find the most representative shape of the BOLD response within the AON, deconvolved beta weights for each condition were averaged across sessions and collapsed by singular value decomposition (Golub and Reinsch, 1970). This yielded a characteristic signal shape that maximally described the actual BOLD response in AON regions for both novel and repeated themes, for both danced and pantomimed sequences. This examination revealed that the time-to-peak of the BOLD response was delayed by 4 s relative to the canonical HRF typically implemented in SPM: the peak was reached 8–10 s after stimulus onset instead of the canonical 4–6 s.
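The FIR deconvolution and SVD step can be sketched with NumPy. Under the assumptions made here, onsets are given in whole scans (the 12 TRs spanning 24 s imply a 2 s TR), a single condition is fit with one indicator regressor per lag plus an intercept, and the characteristic shape is taken as the first right singular vector of a matrix whose rows are FIR beta series (for example, one row per region and condition). Function names are hypothetical, and the authors' exact pipeline may differ.

```python
import numpy as np

N_LAGS = 12  # 12 consecutive TRs per trial, as in the text


def fir_design(onsets, n_scans, n_lags=N_LAGS):
    """FIR design matrix: one indicator column per post-onset lag.
    Onsets are scan indices (assumes clip onsets are locked to TRs)."""
    X = np.zeros((n_scans, n_lags))
    for onset in onsets:
        for lag in range(n_lags):
            if onset + lag < n_scans:
                X[onset + lag, lag] = 1.0
    return X


def fir_estimate(y, onsets, n_lags=N_LAGS):
    """Least-squares FIR betas (the response shape) plus an intercept."""
    X = np.column_stack([fir_design(onsets, len(y), n_lags), np.ones(len(y))])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta[:n_lags]  # drop the intercept


def characteristic_shape(fir_betas):
    """First right singular vector of a (rows x lags) matrix of FIR betas,
    oriented so that its largest-magnitude element is positive."""
    _, _, vt = np.linalg.svd(np.asarray(fir_betas), full_matrices=False)
    shape = vt[0]
    if shape[np.argmax(np.abs(shape))] < 0:  # SVD sign is arbitrary
        shape = -shape
    return shape
```

The first singular vector is the single lag profile that best accounts, in a least-squares sense, for all rows at once, which is why it can serve as a summary of the response shape across regions and conditions.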
Given this result, parameter estimation for conditions of interest in our main analysis was based on a convolution of the design matrix for each participant with a custom HRF that accounted for the observed 4 s delay. Time-to-peak of the HRF was adjusted from 6 to 10 s while keeping the same overall width and height of the canonical function implemented in SPM. Using this custom HRF, the 10 s video duration was modeled as usual in SPM by convolving the HRF with a 10 s boxcar function.
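The text does not state exactly how the canonical HRF was reshaped, only that its peak moved 4 s later while its width and height were preserved. A pure time shift satisfies both constraints, so the sketch below assumes that approach: it builds a double-gamma HRF modeled on SPM's default parameters (response delay 6, undershoot delay 16, response-to-undershoot ratio 6), delays it by 4 s, and convolves the result with a 10 s boxcar. The sampling step and function names are illustrative assumptions. The sampled peak of this curve falls near 5 s, so the delayed version peaks near 9 s, inside the 8–10 s window reported above.

```python
import math

DT = 0.1  # sampling step in seconds (assumption)


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=6.0):
    """Double-gamma HRF: a positive response minus a scaled undershoot."""
    return gamma_pdf(t, peak_shape) - gamma_pdf(t, undershoot_shape) / ratio


def sampled(fn, duration=32.0, dt=DT):
    """Sample fn on [0, duration] at step dt."""
    return [fn(i * dt) for i in range(int(round(duration / dt)) + 1)]


def delayed(hrf, delay_s, dt=DT):
    """Shift a sampled HRF later in time; width and height are unchanged."""
    k = int(round(delay_s / dt))
    return [0.0] * k + hrf[: len(hrf) - k]


def boxcar_regressor(hrf, duration_s=10.0, dt=DT):
    """Discrete convolution of the HRF with a boxcar spanning the clip."""
    box = [1.0] * int(round(duration_s / dt))
    out = [0.0] * (len(box) + len(hrf) - 1)
    for i, b in enumerate(box):
        for j, h in enumerate(hrf):
            out[i + j] += b * h * dt
    return out


canonical = sampled(double_gamma_hrf)
custom = delayed(canonical, 4.0)     # peak moved 4 s later
regressor = boxcar_regressor(custom)  # predicted response to a 10 s clip
```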

Table 1. The action observation network, as defined by previous investigations.

Second-level whole-brain analysis was conducted with SPM8 using a 2 × 2 random effects model with Movement Type and Repetition as within-subject factors, using the weighted parameter estimates (contrast images) obtained at the individual level as data. A gray matter mask was applied to whole-brain contrast images prior to second-level analysis to remove white matter voxels from the analysis. Six second-level contrasts were computed: (1) expressive movement observation (BOLD relative to baseline), (2) dance observation effect (danced sequences > pantomimed sequences), (3) pantomime observation effect (pantomimed sequences > danced sequences), (4) RS (novel themes > repeated themes), (5) dance × repetition interaction (RS for dance > RS for pantomime), and (6) pantomime × repetition interaction (RS for pantomime > RS for dance). Following the creation of T-map images in SPM8, FSL was used to create Z-map images (Version 4.1.1; Analysis Group, FMRIB, Oxford, UK; Smith et al., 2004; Jenkinson et al., 2012). The results were thresholded at p < 0.05, cluster-corrected using FSL subroutines based on Gaussian random field theory (Poldrack et al., 2011; Nichols, 2012). To examine the nature of the differences in RS between dance and pantomime, a mask image was created based on the corresponding cluster-thresholded Z-map of regions showing greater RS for dance, and the mean BOLD activity (contrast image values) was computed for novel and repeated dance and pantomime contrasts from each participant's first-level analysis. Mean BOLD activity measures were submitted to a 2 × 2 ANOVA with Movement Type (dance vs. pantomime) and Repetition (novel vs. repeat) as within-subjects factors using Stata/IC 10.0 for Macintosh.
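The six second-level contrasts map onto weight vectors over the four cells of the Movement Type × Repetition design. A minimal sketch, assuming the cell order novel dance, repeated dance, novel pantomime, repeated pantomime (the labels are hypothetical; the actual column order in SPM depends on how the design was specified):

```python
# Assumed cell order: (novel dance, repeated dance, novel pantomime, repeated pantomime)
CELLS = ("dance_novel", "dance_repeat", "pant_novel", "pant_repeat")

CONTRASTS = {
    "observation > baseline":  (1, 1, 1, 1),     # all conditions vs. implicit baseline
    "dance > pantomime":       (1, 1, -1, -1),
    "pantomime > dance":       (-1, -1, 1, 1),
    "RS (novel > repeated)":   (1, -1, 1, -1),
    "RS dance > RS pantomime": (1, -1, -1, 1),   # (ND - RD) - (NP - RP)
    "RS pantomime > RS dance": (-1, 1, 1, -1),
}


def apply_contrast(weights, cell_means):
    """Weighted sum of the four cell means (or of four contrast-image values)."""
    return sum(w * m for w, m in zip(weights, cell_means))
```

The interaction weights are the element-wise product of the movement-type and repetition weights, and every differential contrast sums to zero, so only the observation contrast is sensitive to overall activity relative to baseline.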

To ensure that observed RS effects for repeated themes were not due to low-level kinematic effects, a motion tracking analysis of all 32 videos was performed using Tracker 4.87 software for Mac (written by Douglas Brown, distributed on the Open Source Physics platform, www.opensourcephysics.org). A variety of motion parameters, including velocity, acceleration, momentum, and kinetic energy, were computed within the Tracker software based on semi-automated/supervised motion tracking of the top of the head, one hand, and one foot of each performer. The key question relevant to our results was whether motion differed between videos depicting novel and repeated themes. One-factor ANOVAs for each motion parameter revealed no significant differences in coarse kinematic profiles between “novel” and “repeated” theme trials (all p's > 0.05). This was not particularly surprising, given that all videos were used for both novel and repeated themes, which were defined entirely by trial sequence. In contrast, the comparison between danced and pantomimed themes did reveal significant differences in kinematic profiles. A 2 × 3 ANOVA with Movement Type (Dance, Pantomime) and Body Point (Hand, Head, Foot) as factors was conducted for each motion parameter (velocity, acceleration, momentum, and kinetic energy), and revealed greater motion energy on all parameters for the danced themes compared to the pantomimed themes (all p's < 0.05). Any differences in RS between danced and pantomimed themes could therefore reflect differences in the kinematic properties of body movement. Importantly, however, because there were no systematic differences in motion kinematics between novel and repeated themes, RS effects for repeated themes could not be attributed to motion kinematics.
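The motion parameters listed above have standard definitions that can be sketched from tracked 2-D positions using finite differences. This is not Tracker's internal algorithm; the frame interval and the point mass used for momentum and kinetic energy are assumptions (the text does not report them), and the function names are hypothetical.

```python
import math


def central_diff(values, dt):
    """Central differences in the interior, one-sided differences at the ends."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


def kinematics(xs, ys, dt, mass=1.0):
    """Speed, acceleration magnitude, momentum, and kinetic energy per frame
    for one tracked body point (head, hand, or foot) treated as a point mass."""
    vx, vy = central_diff(xs, dt), central_diff(ys, dt)
    ax, ay = central_diff(vx, dt), central_diff(vy, dt)
    speed = [math.hypot(a, b) for a, b in zip(vx, vy)]
    accel = [math.hypot(a, b) for a, b in zip(ax, ay)]
    return {
        "velocity": speed,
        "acceleration": accel,
        "momentum": [mass * s for s in speed],
        "kinetic_energy": [0.5 * mass * s ** 2 for s in speed],
    }
```

Per-video summaries of these series (for example, their means per body point) are the kind of values that would then enter the one-factor and 2 × 3 ANOVAs described above.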

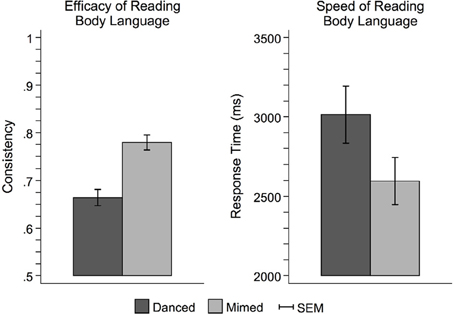

Figure 3 illustrates the behavioral results of the interpretation task completed outside the scanner. Participants had higher consistency scores for pantomimed movements than danced movements [F(1, 42) = 42.06, p < 0.0001], indicating better transmission of the intended expressive meaning from performer to viewer. Pantomimed sequences were also interpreted more quickly than danced sequences [F(1, 42) = 27.28, p < 0.0001], suggesting an overall performance advantage for pantomimed sequences.

Figure 3. Behavioral performance on the theme judgment task. Participants more readily interpreted pantomime than dance. This was evidenced by both greater consistency between the meaningful theme intended to be expressed by the performer and the interpretive judgments made by the observer (left), and faster response times (right). This pattern of results suggests that dance was more difficult to interpret than pantomime, perhaps owing to the use of more abstract metaphors to link movement with meaning. Pantomime, on the other hand, relied on more concrete, mundane sorts of movements that were more likely to carry meaningful associations based on observers' prior everyday experience. SEM, standard error of the mean.

Expressive Whole-body Movements Engage the Action Observation Network

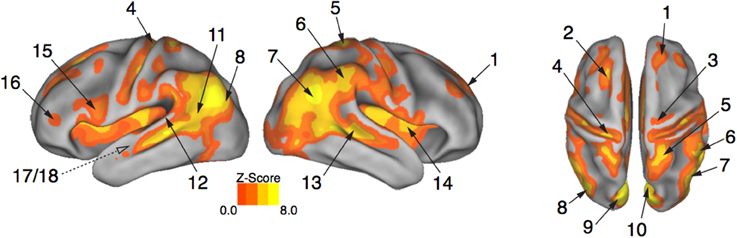

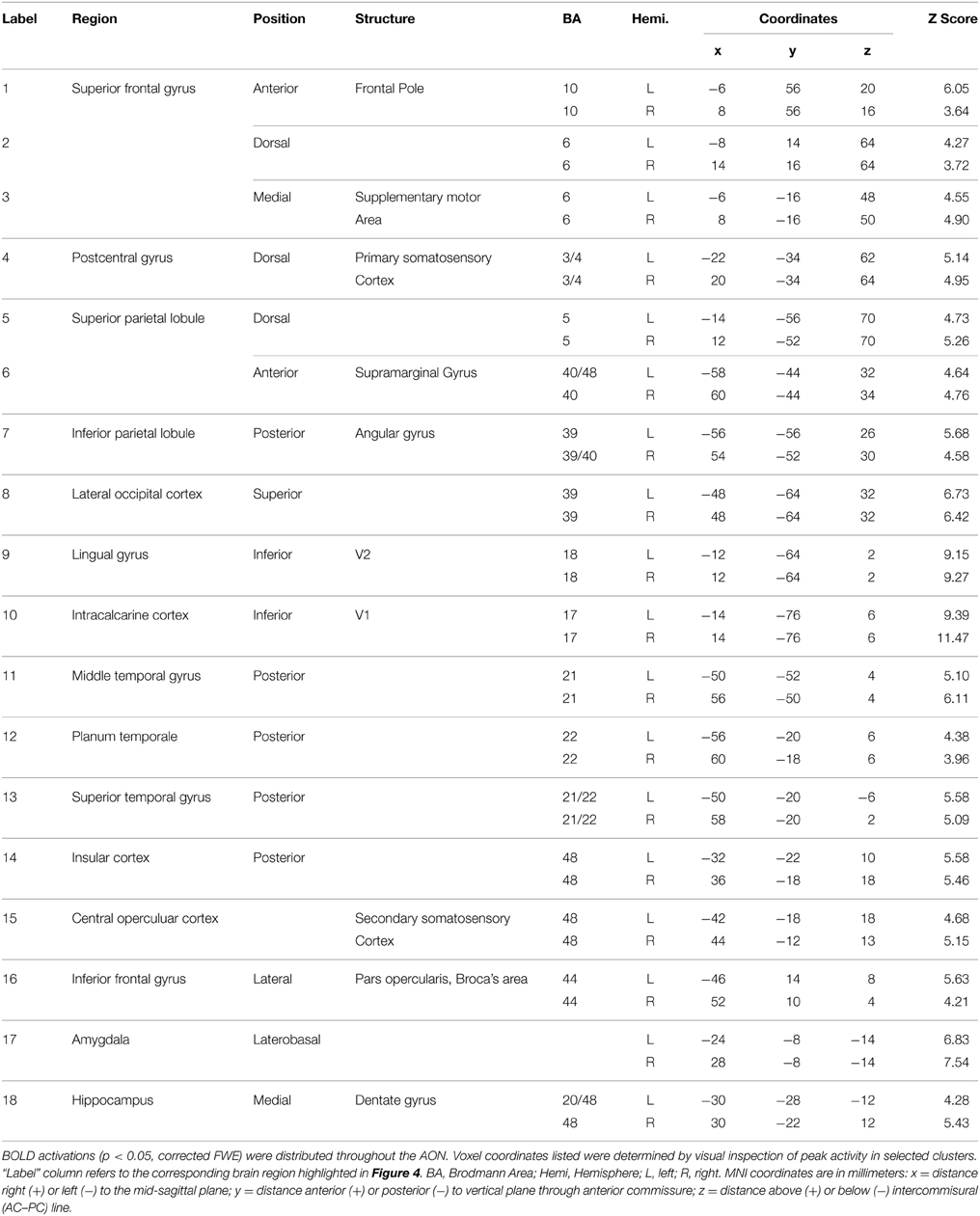

Brain activity associated with the observation of expressive movement sequences was revealed by significant BOLD responses to observing both dance and pantomime movement sequences, relative to the inter-trial resting baseline. Figure 4 depicts significant activation (p < 0.05, cluster corrected in FSL) rendered on an inflated cortical surface of the Human PALS-B12 Atlas (Van Essen, 2005) using Caret (Version 5.61; http://www.nitrc.org/projects/caret; Van Essen et al., 2001). Table 2 presents the MNI coordinates for selected voxels within clusters active during movement observation, as labeled in Figure 4. Region names were obtained from the Harvard-Oxford Cortical and Subcortical Structural Atlases (Frazier et al., 2005; Desikan et al., 2006; Makris et al., 2006; Goldstein et al., 2007; Harvard Center for Morphometric Analysis; www.partners.org/researchcores/imaging/morphology_MGH.asp), and Brodmann Area labels were obtained from the Juelich Histological Atlas (Eickhoff et al., 2005, 2006, 2007), as implemented in FSL. Observation of body movement was associated with robust BOLD activation encompassing cortex typically associated with the AON, including fronto-parietal regions linked to the representation of action kinematics, goals, and outcomes (Hamilton and Grafton, 2006, 2007), as well as temporal, occipital, and insular cortex and subcortical regions including amygdala and hippocampus; these regions are typically associated with language comprehension (Kirchhoff et al., 2000; Ni et al., 2000; Friederici et al., 2003) and socio-affective information processing and decision-making (Anderson et al., 1999; Adolphs et al., 2003; Bechara et al., 2003; Bechara and Damasio, 2005).

Figure 4. Expressive performances engage the action observation network. Viewing expressive whole-body movement sequences engaged a distributed cortical action observation network (p < 0.05, FWE corrected). Large areas of parietal, temporal, frontal, and insular cortex included somatosensory, motor, and premotor regions that have been considered previously to comprise a human “mirror neuron” system, as well as non-motor areas linked to comprehension, social perception, and affective decision-making. Number labels correspond to those listed in Table 2, which provides anatomical names and voxel coordinates for areas of peak activation. Dotted line for regions 17/18 indicates medial temporal position not visible on the cortical surface.

Table 2. Brain regions showing a significant BOLD response while participants viewed expressive whole-body movement sequences.

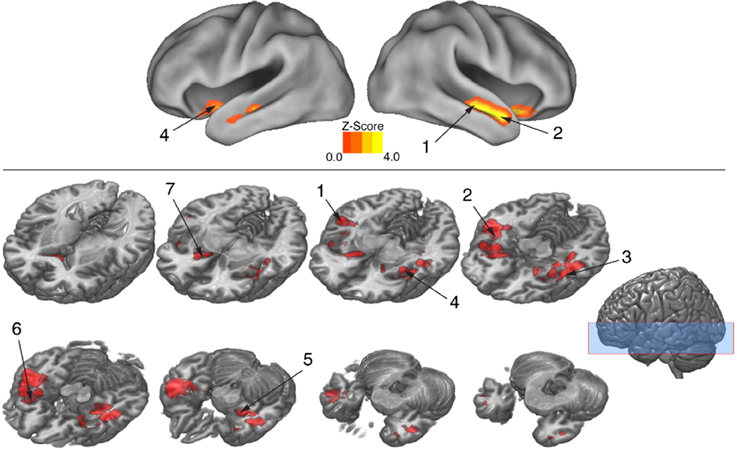

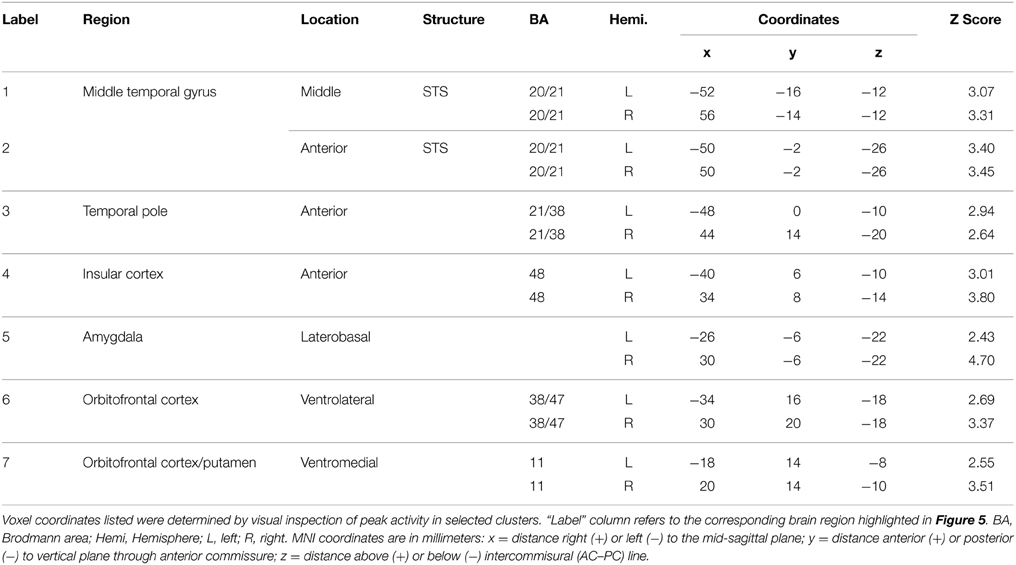

The Action Observation Network “Reads” Body Language

To isolate brain areas that decipher meaning conveyed by expressive body movement, regions showing RS (reduced BOLD activity for repeated compared to novel themes) were identified. Since theme was the only stimulus dimension repeated systematically across trials for this comparison, decreased activation for repeated themes could not be attributed to physical features of the stimulus such as particular movements, performers, or camera viewpoints. Figure 5 illustrates brain areas showing significant suppression for repeated themes (p < 0.05, cluster corrected in FSL). Table 3 presents the MNI coordinates for selected voxels within significant clusters. RS was found bilaterally on the rostral bank of the middle temporal gyrus extending into temporal pole and orbitofrontal cortex. There was also significant suppression in bilateral amygdala and insular cortex.

Figure 5. BOLD suppression (RS) reveals brain substrates for “reading” body language. Regions involved in decoding meaning in body language were isolated by testing for BOLD suppression when the intended theme of an expressive performance was repeated across trials. To identify regions showing RS, BOLD activity associated with novel themes was contrasted with BOLD activity associated with repeated themes (p < 0.05, cluster corrected in FSL). Significantly greater activity for novel relative to repeated themes was evidence of RS. Given that the intended theme of a performance was the only element that was repeated between trials, regions showing RS revealed brain substrates that were sensitive to the specific meaning infused into a movement sequence by a performer. Number labels correspond to those listed in Table 3, which provides anatomical names and voxel coordinates for key clusters showing significant RS. Blue shaded area indicates vertical extent of axial slices shown.

Table 3. Brain regions showing significant BOLD suppression for repeated themes (p < 0.05, cluster corrected in FSL).

Movement Abstractness Challenges Brain Substrates that Decode Meaning

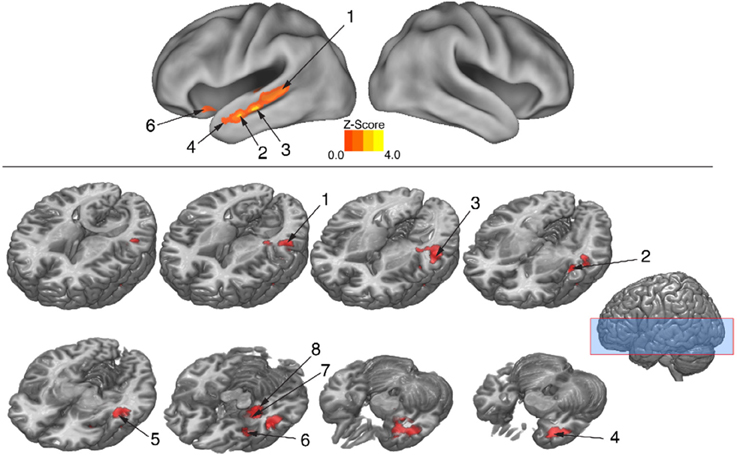

The behavioral analysis indicated that interpreting danced themes was more difficult than interpreting pantomimed themes, as evidenced by lower consistency scores and longer RTs. Previous research indicates that greater difficulty discriminating a particular stimulus dimension is associated with greater BOLD suppression upon repetition of that dimension's attributes (Hasson et al., 2006). To test whether greater difficulty decoding meaning from dance than pantomime would also be associated with greater RS in the present data, the magnitude of BOLD response suppression was compared between movement types. This was done with the Dance × Repetition interaction contrast in the second-level whole-brain analysis, which revealed regions that had greater RS for dance than for pantomime. Figure 6 illustrates brain regions showing greater RS for themes portrayed through dance than pantomime (p < 0.05, cluster corrected in FSL). Significant differences were entirely left-lateralized in superior and middle temporal gyri, extending into temporal pole and orbitofrontal cortex, and were also present in laterobasal amygdala and the cornu ammonis of the hippocampus. Table 4 presents the MNI coordinates for selected voxels within significant clusters. The reverse Pantomime × Repetition interaction was also tested, but did not reveal any regions showing greater RS for pantomime than dance (p > 0.05, cluster corrected in FSL).

Figure 6. Regions showing greater RS for dance than pantomime. RS effects were compared between movement types. This was implemented as an interaction contrast within our Movement Type × Repetition ANOVA design [(Novel Dance > Repeated Dance) > (Novel Pantomime > Repeated Pantomime)]. Greater RS for dance was lateralized to left hemisphere meaning-sensitive regions. The brain areas shown here have been linked previously to the comprehension of meaning in verbal language, suggesting the possibility that they represent shared brain substrates for building meaning from both language and action. Number labels correspond to those listed in Table 4, which provides anatomical names and voxel coordinates for key clusters showing significantly greater RS for dance. Blue shaded area indicates vertical extent of axial slices shown.

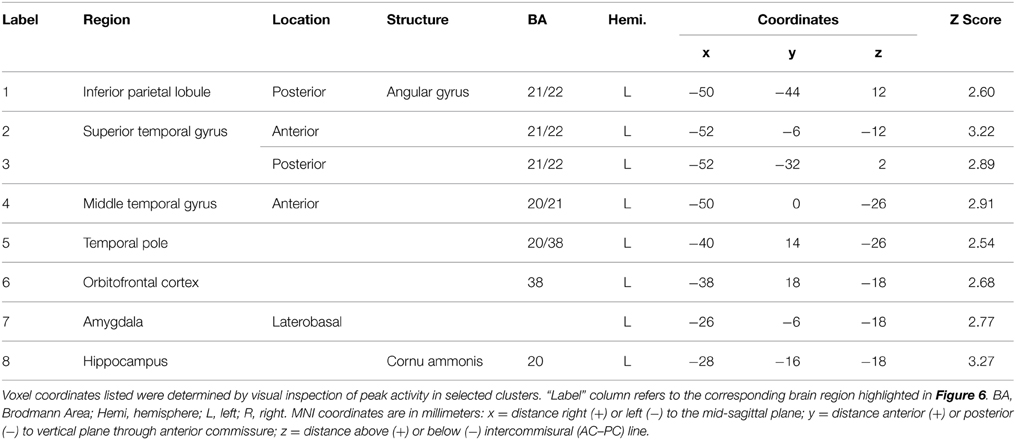

Table 4. Brain regions showing significantly greater RS for themes expressed through dance relative to themes expressed through pantomime (p < 0.05, cluster corrected in FSL).

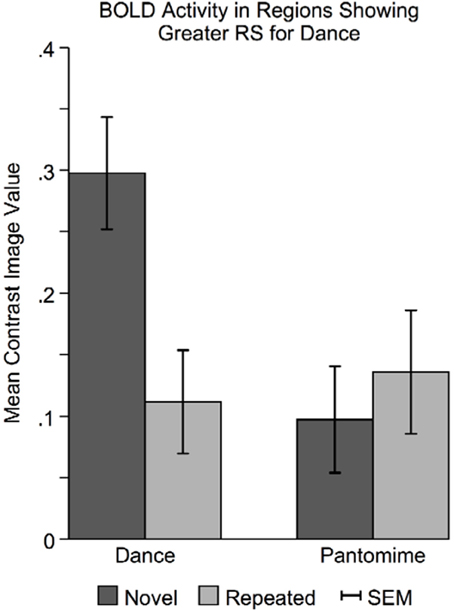

In regions showing greater RS for dance than pantomime, mean BOLD responses for novel and repeated dance and pantomime conditions were computed across voxels for each participant based on their first-level contrast images. This was done to test whether the greater RS for dance was due to greater activity in the novel condition, lower activity in the repeated condition, or some combination of both. The pattern of BOLD activity across conditions illustrated in Figure 7 demonstrates that the greater RS for dance was the result of greater initial BOLD activation in response to novel themes. The ANOVA showed a significant Movement Type × Repetition interaction [F(1, 42) = 7.83, p < 0.01]; together with the condition means in Figure 7, this indicates that BOLD activity in response to novel danced themes was greater than BOLD activity for all other conditions in these regions.
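The ROI step and the interaction test can be sketched with NumPy. In a fully within-subjects 2 × 2 design, the interaction F(1, n − 1) equals the square of a one-sample t on each participant's difference of RS effects, (novel dance − repeated dance) − (novel pantomime − repeated pantomime). The function names are hypothetical, and this is an illustration rather than the Stata analysis the authors ran.

```python
import math

import numpy as np


def roi_mean(contrast_image, mask):
    """Mean contrast value over the voxels where the boolean mask is True."""
    return float(np.asarray(contrast_image)[np.asarray(mask, dtype=bool)].mean())


def interaction_f(dance_novel, dance_repeat, pant_novel, pant_repeat):
    """2 x 2 within-subjects interaction as F(1, n - 1) = t ** 2, where t is a
    one-sample t on each participant's difference of RS effects.
    Each argument holds one ROI mean per participant."""
    rs_dance = np.asarray(dance_novel) - np.asarray(dance_repeat)
    rs_pant = np.asarray(pant_novel) - np.asarray(pant_repeat)
    d = rs_dance - rs_pant
    n = d.size
    t = d.mean() / (d.std(ddof=1) / math.sqrt(n))
    return float(t ** 2), (1, n - 1)
```

A significant interaction says only that the RS effects differ; locating the difference in the novel-dance cell, as described above, requires inspecting the four condition means as well.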

Figure 7. Novel danced themes challenge brain substrates that decode meaning from movement. To determine the specific pattern of BOLD activity that resulted in greater RS for dance, average BOLD activity in these areas was computed for each condition separately. Greater RS for dance was driven by a larger BOLD response to novel danced themes. Considered together with behavioral findings indicating that dance was more difficult to interpret, greater RS for dance seems to result from a greater processing “challenge” to brain substrates involved in decoding meaning from movement. SEM, standard error of the mean.

This study was designed to reveal brain regions involved in reading body language—the meaningful information we pick up about the affective states and intentions of others based on their body movement. Brain regions that decoded meaning from body movement were identified with a whole brain analysis of RS that compared BOLD activity for novel and repeated themes expressed through modern dance or pantomime. Significant RS for repeated themes was observed bilaterally, extending anteriorly along middle and superior temporal gyri into temporal pole, medially into insula, rostrally into inferior orbitofrontal cortex, and caudally into hippocampus and amygdala. Together, these brain substrates comprise a functional system within the larger AON. This suggests strongly that decoding meaning from expressive body movement constitutes a dimension of action representation not previously isolated in studies of action understanding. In the following we argue that this embedding is consistent with the hierarchical organization of the AON.

Body Language as Superordinate in a Hierarchical Action Observation Network

Previous investigations of action understanding have identified the AON as a key cognitive system for the organization of action in general, highlighting the fact that both performing and observing action rely on many of the same brain substrates (Grafton, 2009; Ortigue et al., 2010; Kilner, 2011; Ogawa and Inui, 2011; Uithol et al., 2011; Grafton and Tipper, 2012). Shared brain substrates for controlling one's own action and understanding the actions of others are often taken as evidence of a “mirror neuron system” (MNS), following from physiological studies showing that cells in area F5 of the macaque monkey premotor cortex fired in response to both performing and observing goal-directed actions (Pellegrino et al., 1992; Gallese et al., 1996; Rizzolatti et al., 1996a). Since these initial observations in monkeys, there has been a tremendous effort to characterize a human analog of the MNS, and to incorporate it into theories of not only action understanding, but also social cognition, language development, empathy, and neuropsychiatric disorders in which these faculties are compromised (Gallese and Goldman, 1998; Rizzolatti and Arbib, 1998; Rizzolatti et al., 2001; Gallese, 2003; Gallese et al., 2004; Rizzolatti and Craighero, 2004; Iacoboni et al., 2005; Tettamanti et al., 2005; Dapretto et al., 2006; Iacoboni and Dapretto, 2006; Shapiro, 2008; Decety and Ickes, 2011). A fundamental assumption common to all such theories is that mirror neurons provide a direct neural mechanism for action understanding through “motor resonance,” or the simulation of one's own motor programs for an observed action (Jacob, 2008; Oosterhof et al., 2013). One proposed mechanism for action understanding through motor resonance is the embodiment of sensorimotor associations between action goals and specific motor behaviors (Mitz et al., 1991; Niedenthal et al., 2005; McCall et al., 2012).
While the involvement of the motor system in a range of social, cognitive and affective domains is certainly worthy of focused investigation, and mirror neurons may well play an important role in supporting such “embodied cognition,” this by no means implies that mirror neurons alone can account for the ability to garner meaning from observed body movement.

Since the AON is a distributed cortical network that extends beyond motor-related brain substrates engaged during action observation, it is best characterized not as a homogeneous “mirroring” mechanism, but rather as a collection of functionally specific but interconnected modules that represent distinct properties of observed actions (Grafton, 2009; Grafton and Tipper, 2012). The present results build on this functional-hierarchical model of the AON by incorporating meaningful expression as an inherent aspect of body movement that is decoded in distinct regions of the AON. In other words, the bilateral temporal-orbitofrontal regions that showed RS for repeated themes comprise a distinct functional module of the AON that supports an additional level of the action representation hierarchy. Such an interpretation is consistent with the idea that action representation is inherently nested, carried out within a hierarchy of part-whole processes for which higher levels depend on lower levels (Cooper and Shallice, 2006; Botvinick, 2008; Grafton and Tipper, 2012). We propose that the meaning infused into the body movement of a person having a particular affective stance is decoded superordinately to more concrete properties of action, such as kinematics and object goals. Under this view, while decoding these representationally subordinate properties of action may involve motor-related brain substrates, decoding “body language” engages non-motor regions of the AON that link movement and meaning, relying on inputs from lower levels of the action representation hierarchy that provide information about movement kinematics, prosodic nuances, and dynamic inflections.

While the present results suggest that the temporal-orbitofrontal regions identified here as decoding meaning from emotive body movement constitute a distinct functional module within a hierarchically organized AON, it is important to note that these regions have not previously been included in anatomical descriptions of the AON. The present study, however, isolated a property of action representation that had not been previously investigated, so identifying regions of the AON not previously included in its functional-anatomic definition is perhaps not surprising. This underscores the important point that the AON is functionally defined, such that its apparent anatomical extent in a given experimental context depends upon the particular aspects of action representation that are engaged and isolable. Previous studies of another abstract property of action representation, namely intention understanding, also illustrate this point. Inferring the intentions of an actor engages medial prefrontal cortex, bilateral posterior superior temporal sulcus, and left temporo-parietal junction, all non-motor regions of the brain typically associated with “mentalizing,” or thinking about the mental states of another agent (Ansuini et al., 2015; Ciaramidaro et al., 2014). A key finding of this research is that intention understanding depends fundamentally on the integration of motor-related (“mirroring”) brain regions and non-motor (“mentalizing”) brain regions (Becchio et al., 2012). The present results parallel this finding, and point to the idea that in the context of action representation, motor and non-motor brain areas are not two separate brain networks, but rather one integrated functional system.

Predictive Coding and the Construction of Meaning in the Action Observation Network

A critical question raised by the idea that the temporal-orbitofrontal brain regions in which RS was observed here constitute a superordinate, meaning-sensitive functional module of the AON is how activity in subordinate AON modules is integrated at this higher level to produce differential neural firing patterns in response to different meaningful body expressions. That is, what are the neural mechanisms underlying the observed sensitivity to meaning in body language, and furthermore, why are these mechanisms subject to adaptation through repetition (RS)? While the present results do not provide direct evidence to answer these questions, we propose that a “predictive coding” interpretation provides a coherent model of action representation (Brass et al., 2007; Kilner and Frith, 2008; Brown and Brüne, 2012) that yields useful predictions about how meaning is decoded and could account for the observed RS effect. The primary mechanism invoked by a predictive coding framework of action understanding is recurrent feed-forward and feedback processing across the various levels of the AON, which supports a Bayesian system of predictive neural coding, feedback processes, and prediction error reduction at each level of action representation (Friston et al., 2011). According to this model, each level of the action observation hierarchy generates predictions to anticipate neural activity at lower levels of the hierarchy. Predictions in the form of neural codes are sent to lower levels through feedback connections, and compared with actual subordinate neural representations. Any discrepancy between neural predictions and actual representations is coded as prediction error. Information regarding prediction error is sent through recurrent feed-forward projections to superordinate regions, and used to update predictive priors such that subsequent prediction error is minimized.
Together, these Bayes-optimal neural ensemble operations converge on the most probable inference for representation at the superordinate level (Friston et al., 2011) and, ultimately, action understanding based on the integration of representations at each level of the action observation hierarchy (Chambon et al., 2011; Kilner, 2011).
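The feedback and feed-forward loop described above can be illustrated with a deliberately minimal toy: a single scalar cause predicts a vector of lower-level features through a linear generative model, prediction errors are passed back up, and the estimate is updated by gradient descent until the update becomes negligible. This is an assumption-laden sketch for intuition only, not the hierarchical Bayesian scheme of Friston et al. (2011) or a model fit to these data; all names and parameters are hypothetical.

```python
def infer(x, w, mu0, lr=0.1, tol=1e-6, max_iter=10_000):
    """Toy predictive-coding inference of a scalar cause mu from features x,
    assuming a linear generative model x ~ w * mu.

    Returns (estimate, iterations used, summed squared prediction error).
    """
    mu = mu0
    total_error = 0.0
    for it in range(1, max_iter + 1):
        predictions = [wi * mu for wi in w]                   # top-down prediction
        errors = [xi - p for xi, p in zip(x, predictions)]    # prediction error
        total_error += sum(e * e for e in errors)
        step = lr * sum(wi * e for wi, e in zip(w, errors))   # error drives update
        mu += step
        if abs(step) < tol:                                   # converged
            return mu, it, total_error
    return mu, max_iter, total_error


w = [1.0, 0.5, 0.25]                # hypothetical feature weights
x = [wi * 2.0 for wi in w]          # features generated by a true cause of 2.0
far = infer(x, w, mu0=0.0)          # uninformed starting estimate
near = infer(x, w, mu0=1.9)         # starting estimate close to the cause
```

Starting from an estimate close to the true cause, as one might after a theme has just been seen, the loop converges in fewer iterations and accumulates less squared prediction error than starting from scratch, which is loosely analogous to a repetition-suppression effect.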

A predictive coding account of the present results would suggest that initial feed-forward inputs from subordinate levels of the AON provided the superordinate temporal-orbitofrontal module with information regarding movement kinematics, prosody, gestural elements, and dynamic inflections, which, when integrated with other inputs based on prior experience, would provide a basis for an initial prediction about potential meanings of a body expression. This prediction would yield a generative neural model about the movement dynamics that would be expected given the predicted meaning of the observed body expression, which would be fed back to lower levels of the network that coded movement dynamics and sensorimotor associations. Predictive activity would be contrasted with actual representations as movement information was accrued throughout the performance, and the resulting prediction error would be utilized via feed-forward projections to temporal-orbitofrontal regions to update predictive codes regarding meaning and minimize subsequent prediction error. In this way, the meaningful affective theme being expressed by the performer would be converged upon through recurrent Bayes-optimal neural ensemble operations. Thus, meaning expressed through body language would be accrued iteratively in temporal-orbitofrontal regions by integrating neural representations of various facets of action decoded throughout the AON. Interestingly, and consistent with a model in which an iterative process accrued information over time, we observed that BOLD responses in AON regions peaked more slowly than expected based on SPM's canonical HRF as the videos were viewed over an extended (10 s) duration. 
Under an iterative predictive coding model, RS for repeated themes could be accounted for by reduced initial generative activity in temporal-orbitofrontal regions due to better constrained predictions about potential meanings conveyed by observed movement, more efficient convergence on an inference due to faster minimization of prediction error, or some combination of both of these mechanisms. The present results provide indirect evidence for the former account, in that more abstract, less constrained movement metaphors relied upon by expressive dance resulted in greater RS due to larger BOLD responses for novel themes relative to the more concrete, better-constrained associations conveyed by pantomime.

Shared Brain Substrates for Meaning in Action and Language

The middle temporal gyrus and superior temporal sulcus regions identified here as part of a functional module of the AON that “reads” body language have been linked previously to a variety of high-level linguistic domains related to understanding meaning. Among these are conceptual knowledge (Lambon Ralph et al., 2009), language comprehension (Hasson et al., 2006; Noppeney and Penny, 2006; Price, 2010), sensitivity to the congruency between intentions and actions, both verbal/conceptual (Deen and McCarthy, 2010) and perceptual/implicit (Wyk et al., 2009), as well as understanding abstract language and metaphorical descriptions of action (Desai et al., 2011). While together these studies demonstrate that high-level linguistic processing involves bilateral superior and middle temporal regions, there is evidence for a general predominance of the left hemisphere in comprehending semantics (Price, 2010), and a predominance of the right hemisphere in incorporating socio-emotional information and affective context (Wyk et al., 2009). For example, brain atrophy associated with a primary progressive aphasia characterized by profound disturbances in semantic comprehension occurs bilaterally in anterior middle temporal regions, but is more pronounced in the left hemisphere (Gorno-Tempini et al., 2004). In contrast, neural degeneration in right hemisphere orbitofrontal, insula, and anterior middle temporal regions is associated not only with semantic dementia but also with deficits in socio-emotional sensitivity and regulation (Rosen et al., 2005).

This hemispheric asymmetry in brain substrates associated with interpreting meaning in verbal language is paralleled in the present results, which not only link the same bilateral temporal-orbitofrontal brain substrates to comprehending meaning from affectively expressive body language, but also demonstrate a predominance of the left hemisphere in deciphering the particularly abstract movement metaphors conveyed by dance. This asymmetry was evident as greater RS for repeated themes for dance relative to pantomime, which was driven by greater initial activation for novel themes, suggesting that these left-hemisphere regions were engaged more vigorously when decoding more abstract movement metaphors. Together, these results illustrate a striking overlap in the brain substrates involved in processing meaning in verbal language and decoding meaning from expressive body movement. This overlap suggests that a long-hypothesized evolutionary link between gestural body movement and language (Hewes et al., 1973; Harnad et al., 1976; Rizzolatti and Arbib, 1998; Corballis, 2003) may be instantiated by a network of shared brain substrates for representing semiotic structure, which constitutes the informational scaffolding for building meaning in both language and gesture (Lemke, 1987; Freeman, 1997; McNeill, 2012; Lhommet and Marsella, 2013). Although this is speculative, under this view the temporal-orbitofrontal AON module observed here for coding meaning may provide a neural basis for semiosis (the construction of meaning), which would lend support to the intriguing philosophical argument that meaning is fundamentally grounded in processes of the body, brain, and the social environment within which they are immersed (Thibault, 2004).

Summary and Conclusions

The present results identify a system of temporal, orbitofrontal, insula, and amygdala brain regions that supports the meaningful interpretation of expressive body language. We propose that these areas reveal a previously undefined superordinate functional module within a known, stratified hierarchical brain network for action representation. The findings are consistent with a predictive coding model of action understanding, wherein the meaning that is imbued into expressive body movements through subtle kinematics and prosodic nuances is decoded as a distinct property of action via feed-forward and feedback processing across the levels of a hierarchical AON. Under this view, recurrent processing loops integrate lower-level representations of movement dynamics and socio-affective perceptual information to generate, evaluate, and update predictive inferences about expressive content that are mediated in a superordinate temporal-orbitofrontal module of the AON. Thus, while lower-level action representation in motor-related brain areas (sometimes referred to as a human “mirror neuron system”) may be a key step in the construction of meaning from movement, it is not these motor areas that code the specific meaning of an expressive body movement. Rather, we have demonstrated an additional level of the cortical action representation hierarchy in non-motor regions of the AON. The results highlight an important link between action representation and language, and point to the possibility of shared brain substrates for constructing meaning in both domains.

Author Contributions

CT, GS, and SG designed the experiment. CT and GS created stimuli, which included recruiting professional dancers and filming expressive movement sequences. GS carried out video editing. CT completed computer programming for experimental control and data analysis. GS and CT recruited participants and conducted behavioral and fMRI testing. CT and SG designed the data analysis, and CT and GS carried it out. GS conducted a literature review, and CT wrote the paper with reviews and edits from SG.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Research supported by the James S. McDonnell Foundation.

Supplementary Material

The Supplementary Material for this article can be found online at: http://dx.doi.org/10.6084/m9.figshare.1508616

Adolphs, R., Damasio, H., Tranel, D., Cooper, G., and Damasio, A. R. (2000). A role for somatosensory cortices in the visual recognition of emotion as revealed by three-dimensional lesion mapping. J. Neurosci. 20, 2683–2690.

Adolphs, R., Tranel, D., and Damasio, A. R. (2003). Dissociable neural systems for recognizing emotions. Brain Cogn. 52, 61–69. doi: 10.1016/S0278-2626(03)00009-5

Allison, T., Puce, A., and McCarthy, G. (2000). Social perception from visual cues: role of the STS region. Trends Cogn. Sci. 4, 267–278. doi: 10.1016/S1364-6613(00)01501-1

Anderson, S. W., Bechara, A., Damasio, H., Tranel, D., and Damasio, A. R. (1999). Impairment of social and moral behavior related to early damage in human prefrontal cortex. Nat. Neurosci. 2, 1032–1037. doi: 10.1038/14833

Ansuini, C., Cavallo, A., Bertone, C., and Becchio, C. (2015). Intentions in the brain: the unveiling of Mister Hyde. Neuroscientist 21, 126–135. doi: 10.1177/1073858414533827

Astafiev, S. V., Stanley, C. M., Shulman, G. L., and Corbetta, M. (2004). Extrastriate body area in human occipital cortex responds to the performance of motor actions. Nat. Neurosci. 7, 542–548. doi: 10.1038/nn1241

Becchio, C., Cavallo, A., Begliomini, C., Sartori, L., Feltrin, G., and Castiello, U. (2012). Social grasping: from mirroring to mentalizing. Neuroimage 61, 240–248. doi: 10.1016/j.neuroimage.2012.03.013

Bechara, A., and Damasio, A. R. (2005). The somatic marker hypothesis: a neural theory of economic decision. Games Econ. Behav. 52, 336–372. doi: 10.1016/j.geb.2004.06.010

Bechara, A., Damasio, H., and Damasio, A. R. (2003). Role of the amygdala in decision making. Ann. N.Y. Acad. Sci. 985, 356–369. doi: 10.1111/j.1749-6632.2003.tb07094.x

Bertenthal, B. I., Longo, M. R., and Kosobud, A. (2006). Imitative response tendencies following observation of intransitive actions. J. Exp. Psychol. Hum. Percept. Perform. 32, 210–225. doi: 10.1037/0096-1523.32.2.210

Bonda, E., Petrides, M., Ostry, D., and Evans, A. (1996). Specific involvement of human parietal systems and the amygdala in the perception of biological motion. J. Neurosci. 16, 3737–3744.

Botvinick, M. M. (2008). Hierarchical models of behavior and prefrontal function. Trends Cogn. Sci. 12, 201–208. doi: 10.1016/j.tics.2008.02.009

Brainard, D. H. (1997). The psychophysics toolbox. Spat. Vis. 10, 433–436. doi: 10.1163/156856897X00357

Brass, M., Schmitt, R. M., Spengler, S., and Gergely, G. (2007). Investigating action understanding: inferential processes versus action simulation. Curr. Biol. 17, 2117–2121. doi: 10.1016/j.cub.2007.11.057

Brown, E. C., and Brüne, M. (2012). The role of prediction in social neuroscience. Front. Hum. Neurosci. 6:147. doi: 10.3389/fnhum.2012.00147

Buccino, G., Binkofski, F., Fink, G. R., Fadiga, L., Fogassi, L., Gallese, V., et al. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur. J. Neurosci. 13, 400–404. doi: 10.1046/j.1460-9568.2001.01385.x

Calvo-Merino, B., Glaser, D. E., Grèzes, J., Passingham, R. E., and Haggard, P. (2005). Action observation and acquired motor skills: an FMRI study with expert dancers. Cereb. Cortex 15, 1243. doi: 10.1093/cercor/bhi007

Cardinal, R. N., Parkinson, J. A., Hall, J., and Everitt, B. J. (2002). Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci. Biobehav. Rev. 26, 321–352. doi: 10.1016/S0149-7634(02)00007-6

Chambon, V., Domenech, P., Pacherie, E., Koechlin, E., Baraduc, P., and Farrer, C. (2011). What are they up to? The role of sensory evidence and prior knowledge in action understanding. PLoS ONE 6:e17133. doi: 10.1371/journal.pone.0017133

Ciaramidaro, A., Becchio, C., Colle, L., Bara, B. G., and Walter, H. (2014). Do you mean me? Communicative intentions recruit the mirror and the mentalizing system. Soc. Cogn. Affect. Neurosci. 9, 909–916. doi: 10.1093/scan/nst062

Cooper, R. P., and Shallice, T. (2006). Hierarchical schemas and goals in the control of sequential behavior. Psychol. Rev. 113, 887–916. discussion 917–931. doi: 10.1037/0033-295x.113.4.887

Corballis, M. C. (2003). "From hand to mouth: the gestural origins of language," in Language Evolution: The States of the Art, eds M. H. Christiansen and S. Kirby (Oxford University Press). Available online at: http://groups.lis.illinois.edu/amag/langev/paper/corballis03fromHandToMouth.html

Cross, E. S., Hamilton, A. F. C., and Grafton, S. T. (2006). Building a motor simulation de novo: observation of dance by dancers. Neuroimage 31, 1257–1267. doi: 10.1016/j.neuroimage.2006.01.033

Cross, E. S., Kraemer, D. J. M., Hamilton, A. F. D. C., Kelley, W. M., and Grafton, S. T. (2009). Sensitivity of the action observation network to physical and observational learning. Cereb. Cortex 19, 315. doi: 10.1093/cercor/bhn083

Damasio, A. R., Grabowski, T. J., Bechara, A., Damasio, H., Ponto, L. L., Parvizi, J., et al. (2000). Subcortical and cortical brain activity during the feeling of self-generated emotions. Nat. Neurosci. 3, 1049–1056. doi: 10.1038/79871

Dapretto, M., Davies, M. S., Pfeifer, J. H., Scott, A. A., Sigman, M., Bookheimer, S. Y., et al. (2006). Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nat. Neurosci. 9, 28–30. doi: 10.1038/nn1611

Dean, P., Redgrave, P., and Westby, G. W. M. (1989). Event or emergency? Two response systems in the mammalian superior colliculus. Trends Neurosci. 12, 137–147. doi: 10.1016/0166-2236(89)90052-0

Decety, J., and Ickes, W. (2011). The Social Neuroscience of Empathy. Cambridge, MA: MIT Press.

Deen, B., and McCarthy, G. (2010). Reading about the actions of others: biological motion imagery and action congruency influence brain activity. Neuropsychologia 48, 1607–1615. doi: 10.1016/j.neuropsychologia.2010.01.028

de Gelder, B. (2006). Towards the neurobiology of emotional body language. Nat. Rev. Neurosci. 7, 242–249. doi: 10.1038/nrn1872

de Gelder, B., and Hadjikhani, N. (2006). Non-conscious recognition of emotional body language. Neuroreport 17, 583. doi: 10.1097/00001756-200604240-00006

Desai, R. H., Binder, J. R., Conant, L. L., Mano, Q. R., and Seidenberg, M. S. (2011). The neural career of sensory-motor metaphors. J. Cogn. Neurosci. 23, 2376–2386. doi: 10.1162/jocn.2010.21596

Desikan, R. S., Ségonne, F., Fischl, B., Quinn, B. T., Dickerson, B. C., Blacker, D., et al. (2006). An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage 31, 968–980. doi: 10.1016/j.neuroimage.2006.01.021

Eickhoff, S. B., Heim, S., Zilles, K., and Amunts, K. (2006). Testing anatomically specified hypotheses in functional imaging using cytoarchitectonic maps. Neuroimage 32, 570–582. doi: 10.1016/j.neuroimage.2006.04.204

Eickhoff, S. B., Paus, T., Caspers, S., Grosbras, M. H., Evans, A. C., Zilles, K., et al. (2007). Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage 36, 511–521. doi: 10.1016/j.neuroimage.2007.03.060

Eickhoff, S. B., Stephan, K. E., Mohlberg, H., Grefkes, C., Fink, G. R., Amunts, K., et al. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage 25, 1325–1335. doi: 10.1016/j.neuroimage.2004.12.034

Fadiga, L., Fogassi, L., Gallese, V., and Rizzolatti, G. (2000). Visuomotor neurons: ambiguity of the discharge or motor perception? Int. J. Psychophysiol. 35, 165–177. doi: 10.1016/S0167-8760(99)00051-3

Ferrari, P. F., Gallese, V., Rizzolatti, G., and Fogassi, L. (2003). Mirror neurons responding to the observation of ingestive and communicative mouth actions in the monkey ventral premotor cortex. Eur. J. Neurosci. 17, 1703–1714. doi: 10.1046/j.1460-9568.2003.02601.x

Frazier, J. A., Chiu, S., Breeze, J. L., Makris, N., Lange, N., Kennedy, D. N., et al. (2005). Structural brain magnetic resonance imaging of limbic and thalamic volumes in pediatric bipolar disorder. Am. J. Psychiatry 162, 1256–1265. doi: 10.1176/appi.ajp.162.7.1256

Freeman, W. J. (1997). A neurobiological interpretation of semiotics: meaning vs. representation. IEEE Int. Conf. Syst. Man Cybern. Comput. Cybern. Simul. 2, 93–102. doi: 10.1109/ICSMC.1997.638197

Frey, S. H., and Gerry, V. E. (2006). Modulation of neural activity during observational learning of actions and their sequential orders. J. Neurosci. 26, 13194–13201. doi: 10.1523/JNEUROSCI.3914-06.2006

Friederici, A. D., Rüschemeyer, S.-A., Hahne, A., and Fiebach, C. J. (2003). The role of left inferior frontal and superior temporal cortex in sentence comprehension: localizing syntactic and semantic processes. Cereb. Cortex 13, 170–177. doi: 10.1093/cercor/13.2.170

Friston, K., Mattout, J., and Kilner, J. (2011). Action understanding and active inference. Biol. Cybern. 104, 137–160. doi: 10.1007/s00422-011-0424-z

Gallese, V. (2003). The roots of empathy: the shared manifold hypothesis and the neural basis of intersubjectivity. Psychopathology 36, 171–180. doi: 10.1159/000072786

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (1996). Action recognition in the premotor cortex. Brain 119, 593. doi: 10.1093/brain/119.2.593

Gallese, V., and Goldman, A. (1998). Mirror neurons and the simulation theory of mind-reading. Trends Cogn. Sci. 2, 493–501. doi: 10.1016/S1364-6613(98)01262-5

Gallese, V., Keysers, C., and Rizzolatti, G. (2004). A unifying view of the basis of social cognition. Trends Cogn. Sci. 8, 396–403. doi: 10.1016/j.tics.2004.07.002

Goldstein, J. M., Seidman, L. J., Makris, N., Ahern, T., O'Brien, L. M., Caviness, V. S., et al. (2007). Hypothalamic abnormalities in Schizophrenia: sex effects and genetic vulnerability. Biol. Psychiatry 61, 935–945. doi: 10.1016/j.biopsych.2006.06.027

Golub, G. H., and Reinsch, C. (1970). Singular value decomposition and least squares solutions. Numer. Math. 14, 403–420. doi: 10.1007/BF02163027

Gorno-Tempini, M. L., Dronkers, N. F., Rankin, K. P., Ogar, J. M., Phengrasamy, L., Rosen, H. J., et al. (2004). Cognition and anatomy in three variants of primary progressive aphasia. Ann. Neurol. 55, 335–346. doi: 10.1002/ana.10825

Grafton, S. T. (2009). Embodied cognition and the simulation of action to understand others. Ann. N.Y. Acad. Sci. 1156, 97–117. doi: 10.1111/j.1749-6632.2009.04425.x

Grafton, S. T., Arbib, M. A., Fadiga, L., and Rizzolatti, G. (1996). Localization of grasp representations in humans by positron emission tomography. Exp. Brain Res. 112, 103–111. doi: 10.1007/BF00227183

Grafton, S. T., and Tipper, C. M. (2012). Decoding intention: a neuroergonomic perspective. Neuroimage 59, 14–24. doi: 10.1016/j.neuroimage.2011.05.064

Grèzes, J., and Decety, J. (2001). Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Hum. Brain Mapp. 12, 1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V

Grezes, J., Fonlupt, P., Bertenthal, B., Delon-Martin, C., Segebarth, C., Decety, J., et al. (2001). Does perception of biological motion rely on specific brain regions? Neuroimage 13, 775–785. doi: 10.1006/nimg.2000.0740

Grill-Spector, K., Henson, R., and Martin, A. (2006). Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn. Sci. 10, 14–23. doi: 10.1016/j.tics.2005.11.006

Grill-Spector, K., and Malach, R. (2001). fMR-adaptation: a tool for studying the functional properties of human cortical neurons. Acta Psychol. 107, 293–321. doi: 10.1016/S0001-6918(01)00019-1

Hadjikhani, N., and de Gelder, B. (2003). Seeing fearful body expressions activates the fusiform cortex and amygdala. Curr. Biol. 13, 2201–2205. doi: 10.1016/j.cub.2003.11.049

Hamilton, A. F. C., and Grafton, S. T. (2006). Goal representation in human anterior intraparietal sulcus. J. Neurosci. 26, 1133. doi: 10.1523/JNEUROSCI.4551-05.2006

Hamilton, A. F. D. C., and Grafton, S. T. (2008). Action outcomes are represented in human inferior frontoparietal cortex. Cereb. Cortex 18, 1160–1168. doi: 10.1093/cercor/bhm150

Hamilton, A. F., and Grafton, S. T. (2007). “The motor hierarchy: from kinematics to goals and intentions,” in Sensorimotor Foundations of Higher Cognition: Attention and Performance , Vol. 22, eds P. Haggard, Y. Rossetti, and M. Kawato (Oxford: Oxford University Press), 381–402.

Hari, R., Forss, N., Avikainen, S., Kirveskari, E., Salenius, S., and Rizzolatti, G. (1998). Activation of human primary motor cortex during action observation: a neuromagnetic study. Proc. Natl. Acad. Sci. U.S.A. 95, 15061–15065. doi: 10.1073/pnas.95.25.15061