
scientific hypothesis


scientific hypothesis, an idea that proposes a tentative explanation about a phenomenon or a narrow set of phenomena observed in the natural world. The two primary features of a scientific hypothesis are falsifiability and testability, which are reflected in an “If…then” statement summarizing the idea and in the ability to be supported or refuted through observation and experimentation. The notion of the scientific hypothesis as both falsifiable and testable was advanced in the mid-20th century by Austrian-born British philosopher Karl Popper.

The formulation and testing of a hypothesis is part of the scientific method, the approach scientists use when attempting to understand and test ideas about natural phenomena. The generation of a hypothesis frequently is described as a creative process and is based on existing scientific knowledge, intuition, or experience. Therefore, although scientific hypotheses commonly are described as educated guesses, they actually are more informed than a guess. In addition, scientists generally strive to develop simple hypotheses, since these are easier to test relative to hypotheses that involve many different variables and potential outcomes. Such complex hypotheses may be developed as scientific models (see scientific modeling).

Depending on the results of scientific evaluation, a hypothesis typically is either rejected as false or accepted as true. However, because a hypothesis inherently is falsifiable, even hypotheses supported by scientific evidence and accepted as true are susceptible to rejection later, when new evidence has become available. In some instances, rather than rejecting a hypothesis because it has been falsified by new evidence, scientists simply adapt the existing idea to accommodate the new information. In this sense a hypothesis is never incorrect but only incomplete.

The investigation of scientific hypotheses is an important component in the development of scientific theory. Hypotheses nonetheless differ fundamentally from theories: whereas the former is a specific tentative explanation and serves as the main tool by which scientists gather data, the latter is a broad general explanation that incorporates data from many different scientific investigations undertaken to explore hypotheses.

Countless hypotheses have been developed and tested throughout the history of science. Several examples include the idea that living organisms develop from nonliving matter, which formed the basis of spontaneous generation, a hypothesis that ultimately was disproved (first in 1668, with the experiments of Italian physician Francesco Redi, and later in 1859, with the experiments of French chemist and microbiologist Louis Pasteur); the concept proposed in the late 19th century that microorganisms cause certain diseases (now known as germ theory); and the notion that oceanic crust forms along submarine mountain zones and spreads laterally away from them (seafloor spreading hypothesis).

What Is a Testable Hypothesis?


- Ph.D., Biomedical Sciences, University of Tennessee at Knoxville

- B.A., Physics and Mathematics, Hastings College

A hypothesis is a tentative answer to a scientific question. A testable hypothesis is a hypothesis that can be proved or disproved as a result of testing, data collection, or experience. Only testable hypotheses can be used to conceive and perform an experiment using the scientific method.

Requirements for a Testable Hypothesis

In order to be considered testable, a hypothesis must meet the following criteria:

- It must be possible to prove that the hypothesis is true.

- It must be possible to prove that the hypothesis is false.

- It must be possible to reproduce the results of testing the hypothesis.

Examples of a Testable Hypothesis

All the following hypotheses are testable. Note, however, that even when testing shows a hypothesis to be correct, much more research would be required to answer the question "why is this hypothesis correct?"

- Students who attend class have higher grades than students who skip class. This is testable because it is possible to compare the grades of students who do and do not skip class and then analyze the resulting data. Another person could conduct the same research and come up with the same results.

- People exposed to high levels of ultraviolet light have a higher incidence of cancer than the norm. This is testable because it is possible to find a group of people who have been exposed to high levels of ultraviolet light and compare their cancer rates to the average.

- If you put people in a dark room, then they will be unable to tell when an infrared light turns on. This hypothesis is testable because it is possible to put a group of people into a dark room, turn on an infrared light, and ask the people in the room whether or not an infrared light has been turned on.
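As a minimal sketch of why the first example is testable, the Python snippet below compares mean grades for students who attended and students who skipped class. The grade lists are invented for illustration and `mean_difference` is a hypothetical helper; a raw difference like this only shows that the claim can be checked against data and could come out the other way, not that the gap is statistically meaningful.

```python
from statistics import mean

# Invented example data (final grades, 0-100); not from any real study.
attended = [78, 85, 91, 72, 88, 80]
skipped = [65, 74, 70, 81, 60, 68]


def mean_difference(group_a, group_b):
    """Return mean(group_a) - mean(group_b)."""
    return mean(group_a) - mean(group_b)


diff = mean_difference(attended, skipped)
# The hypothesis predicts a positive difference; real data could contradict it.
prediction_holds = diff > 0
print(f"difference = {diff:.2f}, prediction holds: {prediction_holds}")
```

With these invented numbers the script prints `difference = 12.67, prediction holds: True`. Anyone rerunning the comparison on the same data gets the same answer, which is what makes the claim checkable.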

Examples of a Hypothesis Not Written in a Testable Form

- It doesn't matter whether or not you skip class. This hypothesis can't be tested because it doesn't make any actual claim regarding the outcome of skipping class. "It doesn't matter" doesn't have any specific meaning, so it can't be tested.

- Ultraviolet light could cause cancer. The word "could" makes a hypothesis extremely difficult to test because it is very vague. There "could," for example, be UFOs watching us at every moment, even though it's impossible to prove that they are there!

- Goldfish make better pets than guinea pigs. This is not a hypothesis; it's a matter of opinion. There is no agreed-upon definition of what a "better" pet is, so while it is possible to argue the point, there is no way to prove it.

How to Propose a Testable Hypothesis

Now that you know what a testable hypothesis is, here are tips for proposing one.

- Try to write the hypothesis as an if-then statement. If you take an action, then a certain outcome is expected.

- Identify the independent and dependent variables in the hypothesis. The independent variable is what you are controlling or changing. You measure the effect this has on the dependent variable.

- Write the hypothesis in such a way that you can prove or disprove it. For example, if a person has skin cancer, you can't prove they got it from being out in the sun. However, you can demonstrate a relationship between exposure to ultraviolet light and increased risk of skin cancer.

- Make sure you are proposing a hypothesis you can test with reproducible results. If your face breaks out, you can't prove the breakout was caused by the french fries you had for dinner last night. However, you can measure whether or not eating french fries is associated with breaking out. It's a matter of gathering enough data to be able to reproduce results and draw a conclusion.


Research Hypothesis In Psychology: Types & Examples

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


A research hypothesis (plural: hypotheses) is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method.

Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

Some key points about hypotheses:

- A hypothesis expresses an expected pattern or relationship. It connects the variables under investigation.

- It is stated in clear, precise terms before any data collection or analysis occurs. This makes the hypothesis testable.

- A hypothesis must be falsifiable. It should be possible, even if unlikely in practice, to collect data that disconfirms rather than supports the hypothesis.

- Hypotheses guide research. Scientists design studies to explicitly evaluate hypotheses about how nature works.

- For a hypothesis to be valid, it must be testable against empirical evidence. The evidence can then support or refute the testable predictions.

- Hypotheses are informed by background knowledge and observation, but go beyond what is already known to propose an explanation of how or why something occurs.

Hypotheses typically arise from a thorough knowledge of the research literature and from curiosity about real-world problems or implications, combined to advance theory. They build on existing literature while providing new insight.

Types of Research Hypotheses

Alternative Hypothesis

The research hypothesis is often called the alternative or experimental hypothesis in experimental research.

It typically suggests a potential relationship between two key variables: the independent variable, which the researcher manipulates, and the dependent variable, which is measured to detect the effect of that manipulation.

The alternative hypothesis states a relationship exists between the two variables being studied (one variable affects the other).


An experimental hypothesis predicts what change(s) will occur in the dependent variable when the independent variable is manipulated.

It states that the results are not due to chance and are significant in supporting the theory being investigated.

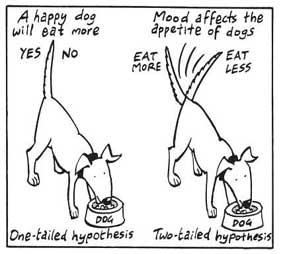

The alternative hypothesis can be directional, indicating a specific direction of the effect, or non-directional, suggesting a difference without specifying its nature. It’s what researchers aim to support or demonstrate through their study.

Null Hypothesis

The null hypothesis states no relationship exists between the two variables being studied (one variable does not affect the other). There will be no changes in the dependent variable due to manipulating the independent variable.

It states that any observed differences are due to chance and do not support the idea being investigated.

The null hypothesis, positing no effect or relationship, is a foundational contrast to the research hypothesis in scientific inquiry. It establishes a baseline for statistical testing, promoting objectivity by initiating research from a neutral stance.

Many statistical methods are tailored to test the null hypothesis, determining the likelihood of observed results if no true effect exists.

This dual-hypothesis approach provides clarity, ensuring that research intentions are explicit, and fosters consistency across scientific studies, enhancing the standardization and interpretability of research outcomes.
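One method for estimating how likely the observed results would be if no true effect exists is a permutation test, sketched below in Python. It assumes two independent groups: if the null hypothesis holds, the group labels are arbitrary, so shuffling them shows how large a difference chance alone tends to produce. The function name, defaults, and example scores are illustrative choices, not a standard API or real data.

```python
import random
from statistics import mean


def permutation_p_value(group_a, group_b, n_permutations=10_000, seed=0):
    """Estimate a two-sided p-value for H0: group membership has no effect.

    Under H0 the group labels are interchangeable, so the labels are shuffled
    repeatedly and we count how often the shuffled absolute mean difference is
    at least as large as the observed one.
    """
    rng = random.Random(seed)  # seeded so the estimate is reproducible
    observed = abs(mean(group_a) - mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        shuffled_diff = abs(mean(pooled[:n_a]) - mean(pooled[n_a:]))
        # Small tolerance guards against floating-point rounding.
        if shuffled_diff >= observed - 1e-12:
            at_least_as_extreme += 1
    # Adding 1 to numerator and denominator counts the observed labelling
    # itself and keeps the estimate above zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Invented scores for two independent groups.
treatment = [12, 15, 14, 16, 13, 17]
control = [11, 12, 10, 13, 12, 11]
print(f"p ≈ {permutation_p_value(treatment, control):.4f}")
```

A small p-value means the observed difference would be unusual if the null hypothesis were true; identical groups give p = 1.0, because every shuffle is at least as extreme as a zero difference.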

Non-Directional Hypothesis

A non-directional hypothesis, also known as a two-tailed hypothesis, predicts that there is a difference or relationship between two variables but does not specify the direction of this relationship.

It merely indicates that a change or effect will occur without predicting which group will have higher or lower values.

For example, “There is a difference in performance between Group A and Group B” is a non-directional hypothesis.

Directional Hypothesis

A directional (one-tailed) hypothesis predicts the nature of the effect of the independent variable on the dependent variable: it predicts the direction in which the change will take place (e.g., greater, smaller, more, less).

It specifies whether one variable will be greater or less than another, rather than just indicating that there’s a difference without specifying its nature.

For example, “Exercise increases weight loss” is a directional hypothesis.
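The practical difference between the two forms shows up in the p-value. The Python sketch below assumes a test statistic z that follows a standard normal distribution when the null hypothesis is true (an assumption that not every test satisfies) and uses the standard library's `statistics.NormalDist`. The helper name `p_values_from_z` is hypothetical.

```python
from statistics import NormalDist


def p_values_from_z(z):
    """Return (one_tailed, two_tailed) p-values for a standard-normal z statistic.

    one_tailed tests the directional H1 "effect > 0";
    two_tailed tests the non-directional H1 "effect != 0".
    """
    cdf = NormalDist(mu=0.0, sigma=1.0).cdf
    one_tailed = 1.0 - cdf(z)               # only the upper tail counts
    two_tailed = 2.0 * (1.0 - cdf(abs(z)))  # both tails count
    return one_tailed, two_tailed


one, two = p_values_from_z(1.8)
print(f"one-tailed p = {one:.3f}, two-tailed p = {two:.3f}")
```

With z = 1.8 the one-tailed p-value is roughly 0.036 and the two-tailed one roughly 0.072, so at a 0.05 threshold only the directional hypothesis would be supported. The directional test pays for this: if the effect runs the opposite way (z = -1.8), its p-value is close to 0.96.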

Falsifiability

The Falsification Principle, proposed by Karl Popper, is a way of demarcating science from non-science. It suggests that for a theory or hypothesis to be considered scientific, it must be testable and falsifiable (refutable).

Falsifiability emphasizes that scientific claims shouldn’t just be confirmable but should also have the potential to be proven wrong.

It means that there should exist some potential evidence or experiment that could prove the proposition false.

No matter how many confirming instances exist for a theory, it takes only one counter-observation to falsify it. For example, the hypothesis that “all swans are white” can be falsified by observing a black swan.

For Popper, science should attempt to disprove a theory rather than attempt to continually provide evidence to support a research hypothesis.
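The asymmetry Popper describes can be sketched in a few lines of Python. The helper `find_counterexample` is hypothetical and the sightings are invented: any number of white swans leaves the universal claim standing but unproven, while a single black swan refutes it.

```python
def find_counterexample(observations, claim):
    """Return the first observation that violates a universal claim, or None.

    `claim` is a predicate that should be true for every observation
    if the universal hypothesis (e.g. "all swans are white") holds.
    """
    for observation in observations:
        if not claim(observation):
            return observation
    return None


def is_white(swan_color):
    return swan_color == "white"


sightings = ["white"] * 1000  # many confirming instances
print(find_counterexample(sightings, is_white))  # None: consistent, not proven

sightings.append("black")     # a single counter-observation
print(find_counterexample(sightings, is_white))  # black: the claim is falsified
```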

Can a Hypothesis be Proven?

Hypotheses make probabilistic predictions. They state the expected outcome if a particular relationship exists. However, a study result supporting a hypothesis does not definitively prove it is true.

All studies have limitations. There may be unknown confounding factors or issues that limit the certainty of conclusions. Additional studies may yield different results.

In science, hypotheses can realistically only be supported with some degree of confidence, not proven. The process of science is to incrementally accumulate evidence for and against hypothesized relationships in an ongoing pursuit of better models and explanations that best fit the empirical data. But hypotheses remain open to revision and rejection if that is where the evidence leads.

- Disproving a hypothesis is definitive. Solid disconfirmatory evidence will falsify a hypothesis and require altering or discarding it based on the evidence.

- However, confirming evidence is always open to revision. Other explanations may account for the same results, and additional or contradictory evidence may emerge over time.

We can never 100% prove the alternative hypothesis. Instead, we see if we can disprove, or reject the null hypothesis.

If we reject the null hypothesis, this does not mean that our alternative hypothesis is correct, but it does support the alternative/experimental hypothesis.

Upon analysis of the results, an alternative hypothesis can be rejected or supported, but it can never be proven to be correct. We must avoid any reference to results proving a theory as this implies 100% certainty, and there is always a chance that evidence may exist which could refute a theory.
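One way to keep this wording discipline in an analysis script is to generate the conclusion text from the p-value, as in the Python sketch below. The function `interpret` is hypothetical, and it assumes the common convention of rejecting the null hypothesis when p ≤ α; other thresholds and conventions exist.

```python
def interpret(p_value, alpha=0.05):
    """Phrase a significance-test result without claiming proof.

    Assumes the common convention of rejecting H0 when p <= alpha.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    if p_value <= alpha:
        return ("Reject the null hypothesis: the results support "
                "(but do not prove) the alternative hypothesis.")
    return ("Fail to reject the null hypothesis: this is not evidence "
            "that the null hypothesis is true.")


print(interpret(0.01))
print(interpret(0.20))
```

Neither branch says "proven": rejecting the null only supports the alternative, and failing to reject it does not establish that no effect exists.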

How to Write a Hypothesis

- Identify variables. The researcher manipulates the independent variable, and the dependent variable is the measured outcome.

- Operationalize the variables being investigated. Operationalization of a hypothesis refers to the process of making the variables physically measurable or testable, e.g. if you are about to study aggression, you might count the number of punches given by participants.

- Decide on a direction for your prediction. If there is evidence in the literature to support a specific effect of the independent variable on the dependent variable, write a directional (one-tailed) hypothesis. If there are limited or ambiguous findings in the literature regarding the effect of the independent variable on the dependent variable, write a non-directional (two-tailed) hypothesis.

- Make it testable. Ensure your hypothesis can be tested through experimentation or observation. It should be possible to prove it false (principle of falsifiability).

- Clear & concise language. A strong hypothesis is concise (typically one to two sentences long) and formulated using clear and straightforward language, ensuring it’s easily understood and testable.
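The steps above can be captured in a small record that generates matching alternative and null statements, sketched here in Python. The `Hypothesis` class and its fields are hypothetical, invented for illustration; the example values follow the lesson-timing example this article uses, with recall operationalized as the number of items remembered (an assumed measure).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Hypothesis:
    independent_variable: str        # what the researcher manipulates
    condition_a: str                 # first level of the independent variable
    condition_b: str                 # second level of the independent variable
    dependent_variable: str          # operationalized, measurable outcome
    direction: Optional[str] = None  # "higher" or "lower"; None = non-directional

    def __post_init__(self):
        if self.direction not in (None, "higher", "lower"):
            raise ValueError('direction must be "higher", "lower" or None')

    @property
    def tails(self) -> int:
        """1 for a directional hypothesis, 2 for a non-directional one."""
        return 1 if self.direction else 2

    def alternative(self) -> str:
        dv = self.dependent_variable.capitalize()
        if self.direction:
            return (f"{dv} will be {self.direction} in the {self.condition_a} "
                    f"condition than in the {self.condition_b} condition.")
        return (f"{dv} will differ between the {self.condition_a} "
                f"and {self.condition_b} conditions.")

    def null(self) -> str:
        return (f"There will be no difference in {self.dependent_variable} "
                f"between the {self.condition_a} and {self.condition_b} conditions.")


recall = Hypothesis(
    independent_variable="day of lesson",
    condition_a="Monday morning",
    condition_b="Friday afternoon",
    dependent_variable="number of items recalled",
    direction="higher",
)
print(recall.tails)
print(recall.alternative())
print(recall.null())
```

Leaving `direction` as `None` yields a non-directional (two-tailed) statement, mirroring the choice described in the third step.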

Consider a hypothesis many teachers might subscribe to: students work better on Monday morning than on Friday afternoon (IV = day, DV = standard of work).

Now, if we decide to study this by giving the same group of students a lesson on a Monday morning and a Friday afternoon and then measuring their immediate recall of the material covered in each session, we would end up with the following:

- The alternative hypothesis states that students will recall significantly more information on a Monday morning than on a Friday afternoon.

- The null hypothesis states that there will be no significant difference in the amount recalled on a Monday morning compared to a Friday afternoon. Any difference will be due to chance or confounding factors.
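Because the same students are measured on both days, the comparison is paired. A minimal sketch of one way to test the directional prediction is a sign-flip permutation test on each student's Monday-minus-Friday difference, shown below in Python. The recall scores are invented, and the function is an illustrative sketch rather than the analysis the article prescribes; a paired t-test would be a common alternative.

```python
import random
from statistics import mean


def paired_sign_flip_test(monday, friday, n_permutations=10_000, seed=0):
    """One-tailed paired permutation test.

    H0: day has no effect, so each student's difference is equally likely
        to be positive or negative.
    H1 (directional): recall is higher on Monday morning.
    Returns (observed mean difference, estimated p-value).
    """
    if len(monday) != len(friday):
        raise ValueError("paired samples must have the same length")
    diffs = [m - f for m, f in zip(monday, friday)]
    observed = mean(diffs)
    rng = random.Random(seed)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Under H0, randomly flip the sign of each student's difference.
        flipped = [d if rng.random() < 0.5 else -d for d in diffs]
        if mean(flipped) >= observed - 1e-12:
            at_least_as_extreme += 1
    return observed, (at_least_as_extreme + 1) / (n_permutations + 1)


# Invented recall scores (items remembered) for eight students.
monday = [14, 12, 15, 11, 13, 16, 12, 14]
friday = [11, 12, 13, 9, 12, 13, 10, 12]
observed, p = paired_sign_flip_test(monday, friday)
print(f"mean difference = {observed}, one-tailed p ≈ {p:.3f}")
```

Here the mean difference is 1.875 items, and the estimated p-value lands close to the exact value 1/128 ≈ 0.008, since all seven non-zero differences must keep a positive sign to match the observed mean. That would lead us to reject the null hypothesis and treat the result as support for, not proof of, the alternative hypothesis.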

More Examples

- Memory: Participants exposed to classical music during study sessions will recall more items from a list than those who studied in silence.

- Social Psychology: Individuals who frequently engage in social media use will report higher levels of perceived social isolation compared to those who use it infrequently.

- Developmental Psychology: Children who engage in regular imaginative play have better problem-solving skills than those who don’t.

- Clinical Psychology: Cognitive-behavioral therapy will be more effective in reducing symptoms of anxiety over a 6-month period compared to traditional talk therapy.

- Cognitive Psychology: Individuals who multitask between various electronic devices will have shorter attention spans on focused tasks than those who single-task.

- Health Psychology: Patients who practice mindfulness meditation will experience lower levels of chronic pain compared to those who don’t meditate.

- Organizational Psychology: Employees in open-plan offices will report higher levels of stress than those in private offices.

- Behavioral Psychology: Rats rewarded with food after pressing a lever will press it more frequently than rats who receive no reward.


Published: 05 June 2024

Post-January 6th deplatforming reduced the reach of misinformation on Twitter

- Stefan D. McCabe (ORCID: 0000-0002-7180-145X)

- Diogo Ferrari (ORCID: 0000-0003-2454-0776)

- Jon Green

- David M. J. Lazer (ORCID: 0000-0002-7991-9110)

- Kevin M. Esterling (ORCID: 0000-0002-5529-6422)

Nature volume 630, pages 132–140 (2024)


The social media platforms of the twenty-first century have an enormous role in regulating speech in the USA and worldwide [1]. However, there has been little research on platform-wide interventions on speech [2,3]. Here we evaluate the effect of the decision by Twitter to suddenly deplatform 70,000 misinformation traffickers in response to the violence at the US Capitol on 6 January 2021 (a series of events commonly known as and referred to here as ‘January 6th’). Using a panel of more than 500,000 active Twitter users [4,5] and natural experimental designs [6,7], we evaluate the effects of this intervention on the circulation of misinformation on Twitter. We show that the intervention reduced circulation of misinformation by the deplatformed users as well as by those who followed the deplatformed users, though we cannot identify the magnitude of the causal estimates owing to the co-occurrence of the deplatforming intervention with the events surrounding January 6th. We also find that many of the misinformation traffickers who were not deplatformed left Twitter following the intervention. The results inform the historical record surrounding the insurrection, a momentous event in US history, and indicate the capacity of social media platforms to control the circulation of misinformation, and more generally to regulate public discourse.


Data availability

Aggregate data used in the analysis are publicly available at the OSF project website (https://doi.org/10.17605/OSF.IO/KU8Z4) to any researcher for purposes of reproducing or extending the analysis. The tweet-level data and specific user demographics cannot be publicly shared owing to privacy concerns arising from matching data to administrative records, data use agreements and platforms’ terms of service. Our replication materials include the code used to produce the aggregate data from the tweet-level data, and the tweet-level data can be accessed after signing a data-use agreement. For access requests, please contact D.M.J.L.

Code availability

All code necessary for reproduction of the results is available at the OSF project site https://doi.org/10.17605/OSF.IO/KU8Z4.

Lazer, D. The rise of the social algorithm. Science 348 , 1090–1091 (2015).

Article ADS MathSciNet CAS PubMed Google Scholar

Jhaver, S., Boylston, C., Yang, D. & Bruckman, A. Evaluating the effectiveness of deplatforming as a moderation strategy on Twitter. Proc. ACM Hum.-Comput. Interact. 5 , 381 (2021).

Article Google Scholar

Broniatowski, D. A., Simons, J. R., Gu, J., Jamison, A. M. & Abroms, L. C. The efficacy of Facebook’s vaccine misinformation policies and architecture during the COVID-19 pandemic. Sci. Adv. 9 , eadh2132 (2023).

Article PubMed PubMed Central Google Scholar

Hughes, A. G. et al. Using administrative records and survey data to construct samples of tweeters and tweets. Public Opin. Q. 85 , 323–346 (2021).

Shugars, S. et al. Pandemics, protests, and publics: demographic activity and engagement on Twitter in 2020. J. Quant. Descr. Digit. Media https://doi.org/10.51685/jqd.2021.002 (2021).

Imbens, G. W., & Lemieux, T. Regression discontinuity designs: a guide to practice. J. Econom. 142 , 615–635 (2008).

Article MathSciNet Google Scholar

Gerber, A. S. & Green, D. P. Field Experiments: Design, Analysis, and Interpretation (W.W. Norton, 2012).

Grinberg, N., Joseph, K., Friedland, L., Swire-Thompson, B. & Lazer, D. Fake news on Twitter during the 2016 U.S. presidential election. Science 363 , 374–378 (2019).

Article ADS CAS PubMed Google Scholar

Munger, K. & Phillips, J. Right-wing YouTube: a supply and demand perspective. Int. J. Press Polit. 27 , 186–219 (2022).

Guess, et al. How do social media feed algorithms affect attitudes and behavior in an election campaign? Science 381 , 398–404 (2023).

Persily, N. in New Technologies of Communication and the First Amendment: The Internet, Social Media and Censorship (ed. Bollinger L. C. & Stone, G. R.) (Oxford Univ. Press, 2022).

Sevanian, A. M. Section 230 of the Communications Decency Act: a ‘good Samaritan’ law without the requirement of acting as a ‘good Samaritan’. UCLA Ent. L. Rev. https://doi.org/10.5070/LR8211027178 (2014).

Lazer, D. M. J. et al. The science of fake news. Science 359 , 1094–1096 (2018).

Suzor, N. Digital constitutionalism: using the rule of law to evaluate the legitimacy of governance by platforms. Soc. Media Soc. 4 , 2056305118787812 (2018).

Google Scholar

Napoli, P. M. Social Media and the Public Interest (Columbia Univ. Press, 2019).

DeNardis, L. & Hackl, A. M. Internet governance by social media platforms. Telecomm. Policy 39 , 761–770 (2015).

TwitterSafety. An update following the riots in Washington, DC. Twitter https://blog.x.com/en_us/topics/company/2021/protecting--the-conversation-following-the-riots-in-washington-- (2021).

Twitter. Civic Integrity Policy. Twitter https://help.twitter.com/en/rules-and-policies/election-integrity-policy (2021).

Promoting safety and expression. Facebook https://about.facebook.com/actions/promoting-safety-and-expression/ (2021).

Dwoskin, E. Trump is suspended from Facebook for 2 years and can’t return until ‘risk to public safety is receded’. The Washington Post https://www.washingtonpost.com/technology/2021/06/03/trump-facebook-oversight-board/ (4 June 2021).

Huszár, F. et al. Algorithmic amplification of politics on Twitter. Proc. Natl Acad. Sci. USA 119 , e2025334119 (2021).

Article PubMed Central Google Scholar

Guess, A. M., Nyhan, B. & Reifler, J. Exposure to untrustworthy websites in the 2016 US election. Nat. Hum. Behav. 4 , 472–480 (2020).

Sunstein, C. R. #Republic: Divided Democracy in the Age of Social Media (Princeton Univ. Press, 2017).

Timberg, C., Dwoskin, E. & Albergotti, R. Inside Facebook, Jan. 6 violence fueled anger, regret over missed warning signs. The Washington Post https://www.washingtonpost.com/technology/2021/10/22/jan-6-capitol-riot-facebook/ (22 October 2021).

Chandrasekharan, E. et al. You can’t stay here: the efficacy of Reddit’s 2015 ban examined through hate speech. Proc. ACM Hum. Comput. Interact. 1 , 31 (2017).

Matias, J. N. Preventing harassment and increasing group participation through social norms in 2,190 online science discussions. Proc. Natl Acad. Sci. USA 116 , 9785–9789 (2019).

Article ADS CAS PubMed PubMed Central Google Scholar

Yildirim, M. M., Nagler, J., Bonneau, R. & Tucker, J. A. Short of suspension: how suspension warnings can reduce hate speech on Twitter. Perspect. Politics 21 , 651–663 (2023).

Guess, A. M. et al. Reshares on social media amplify political news but do not detectably affect beliefs or opinions. Science 381 , 404–408 (2023).

Nyhan, B. et al. Like-minded sources on Facebook are prevalent but not polarizing. Nature 620 , 137–144 (2023).

Dang, S. Elon Musk’s X restructuring curtails disinformation research, spurs legal fears. Reuters https://www.reuters.com/technology/elon-musks-x-restructuring-curtails-disinformation-research-spurs-legal-fears-2023-11-06/ (6 November 2023).

Duffy, C. For misinformation peddlers on social media, it’s three strikes and you’re out. Or five. Maybe more. CNN Business https://edition.cnn.com/2021/09/01/tech/social-media-misinformation-strike-policies/index.html (1 September 2021).

Conger, K. Twitter removes Chinese disinformation campaign. The New York Times https://www.nytimes.com/2020/06/11/technology/twitter-chinese-misinformation.html (11 June 2020).

Timberg, C. & Mahtani, S. Facebook bans Myanmar’s military, citing threat of new violence after Feb. 1 coup. The Washington Post https://www.washingtonpost.com/technology/2021/02/24/facebook-myanmar-coup-genocide/ (24 February 2021).

Barry, D. & Frenkel, S. ‘Be there. Will be wild!’: Trump all but circled the date. The New York Times https://www.nytimes.com/2021/01/06/us/politics/capitol-mob-trump-supporters.html (6 January 2021).

Timberg, C. Twitter ban reveals that tech companies held keys to Trump’s power all along. The Washington Post https://www.washingtonpost.com/technology/2021/01/14/trump-twitter-megaphone/ (14 January 2021).

Dwoskin, E. & Tiku, N. How Twitter, on the front lines of history, finally decided to ban Trump. The Washington Post https://www.washingtonpost.com/technology/2021/01/16/how-twitter-banned-trump/ (16 January 2021).

Harwell, D. New video undercuts claim Twitter censored pro-Trump views before Jan. 6. The Washington Post https://www.washingtonpost.com/technology/2023/06/23/new-twitter-video-jan6/ (23 June 2023).

Romm, T. & Dwoskin, E. Twitter purged more than 70,000 accounts affiliated with QAnon following Capitol riot. The Washington Post https://www.washingtonpost.com/technology/2021/01/11/trump-twitter-ban/ (11 January 2021).

Denham, H. These are the platforms that have banned Trump and his allies. The Washington Post https://www.washingtonpost.com/technology/2021/01/11/trump-banned-social-media/ (13 January 2021).

Graphika Team. DisQualified: network impact of Twitter’s latest QAnon enforcement. Graphika Blog https://graphika.com/posts/disqualified-network-impact-of-twitters-latest-qanon-enforcement/ (2021).

Dwoskin, E. & Timberg, C. Misinformation dropped dramatically the week after Twitter banned Trump and some allies. The Washington Post https://www.washingtonpost.com/technology/2021/01/16/misinformation-trump-twitter/ (16 January 2021).

Harwell, D. & Dawsey, J. Trump is sliding toward online irrelevance. His new blog isn’t helping. The Washington Post https://www.washingtonpost.com/technology/2021/05/21/trump-online-traffic-plunge/ (21 May 2021).

Olteanu, A., Castillo, C., Boy, J. & Varshney, K. The effect of extremist violence on hateful speech online. In Proc. 12th International AAAI Conference on Web and Social Media https://doi.org/10.1609/icwsm.v12i1.15040 (ICWSM, 2018).

Lin, H. et al. High level of correspondence across different news domain quality rating sets. PNAS Nexus 2, pgad286 (2023).

Abilov, A., Hua, Y., Matatov, H., Amir, O. & Naaman, M. VoterFraud2020: a multi-modal dataset of election fraud claims on Twitter. Proc. Int. AAAI Conf. Weblogs Soc. Media 15, 901–912 (2021).

Calonico, S., Cattaneo, M. D. & Titiunik, R. Robust nonparametric confidence intervals for regression-discontinuity designs. Econometrica 82, 2295–2326 (2014).

Jackson, S., Gorman, B. & Nakatsuka, M. QAnon on Twitter: An Overview (Institute for Data, Democracy and Politics, George Washington Univ. 2021).

Shearer, E. & Mitchell, A. News use across social media platforms in 2020. Pew Research Center https://www.pewresearch.org/journalism/2021/01/12/news-use-across-social-media-platforms-in-2020/ (2021).

McGregor, S. C. Social media as public opinion: How journalists use social media to represent public opinion. Journalism 20, 1070–1086 (2019).

Hammond-Errey, M. Elon Musk’s Twitter is becoming a sewer of disinformation. Foreign Policy https://foreignpolicy.com/2023/07/15/elon-musk-twitter-blue-checks-verification-disinformation-propaganda-russia-china-trust-safety/ (15 July 2023).

Joseph, K. et al. (Mis)alignment between stance expressed in social media data and public opinion surveys. Proc. 2021 Conference on Empirical Methods in Natural Language Processing 312–324 (Association for Computational Linguistics, 2021).

Robertson, R. E. et al. Auditing partisan audience bias within Google search. Proc. ACM Hum. Comput. Interact. 2, 148 (2018).

McCrary, J. Manipulation of the running variable in the regression discontinuity design: a density test. J. Econom. 142, 698–714 (2008).


Roth, J., Sant’Anna, P. H. C., Bilinski, A. & Poe, J. What’s trending in difference-in-differences? A synthesis of the recent econometrics literature. J. Econom. 235, 2218–2244 (2023).

Wing, C., Simon, K. & Bello-Gomez, R. A. Designing difference in difference studies: best practices for public health policy research. Annu. Rev. Public Health 39, 453–469 (2018).


Baker, A. C., Larcker, D. F. & Wang, C. C. Y. How much should we trust staggered difference-in-differences estimates? J. Financ. Econ. 144, 370–395 (2022).

Callaway, B. & Sant’Anna, P. H. C. Difference-in-differences with multiple time periods. J. Econom. 225, 200–230 (2021).

R Core Team. R: A Language and Environment for Statistical Computing, v.4.3.1. https://www.R-project.org/ (2023).

rdrobust: Robust data-driven statistical inference in regression-discontinuity designs. https://cran.r-project.org/package=rdrobust (2023).

Calonico, S., Cattaneo, M. D. & Titiunik, R. Optimal data-driven regression discontinuity plots. J. Am. Stat. Assoc. 110, 1753–1769 (2015).


Calonico, S., Cattaneo, M. D. & Farrell, M. H. On the effect of bias estimation on coverage accuracy in nonparametric inference. J. Am. Stat. Assoc. 113, 767–779 (2018).

Zeileis, A. & Hothorn, T. Diagnostic checking in regression relationships. R News 2, 7–10 (2002).

Cameron, A. C., Gelbach, J. B. & Miller, D. L. Robust inference with multiway clustering. J. Bus. Econ. Stat. 29, 238–249 (2011).

Zeileis, A. Econometric computing with HC and HAC covariance matrix estimators. J. Stat. Softw. https://doi.org/10.18637/jss.v011.i10 (2004).

Eckles, D., Karrer, B. & Ugander, J. Design and analysis of experiments in networks: reducing bias from interference. J. Causal Inference https://doi.org/10.1515/jci-2015-0021 (2016).


Acknowledgements

The authors thank N. Grinberg, L. Friedland and K. Joseph for earlier technical work on the development of the Twitter dataset. Earlier versions of this paper were presented at the Social Media Analysis Workshop, UC Riverside, 26 August 2022; at the Annual Meeting of the American Political Science Association, 17 September 2022; and at the Center for Social Media and Politics, NYU, 23 April 2021. Special thanks go to A. Guess for suggesting the DID analysis. D.M.J.L. acknowledges support from the William & Flora Hewlett Foundation and the Volkswagen Foundation. S.D.M. was supported by the John S. and James L. Knight Foundation through a grant to the Institute for Data, Democracy & Politics at the George Washington University.

Author information

These authors contributed equally: Stefan D. McCabe, Diogo Ferrari

Authors and Affiliations

Institute for Data, Democracy & Politics, George Washington University, Washington, DC, USA

Stefan D. McCabe

Department of Political Science, University of California, Riverside, Riverside, CA, USA

Diogo Ferrari & Kevin M. Esterling

Department of Political Science, Duke University, Durham, NC, USA

Network Science Institute, Northeastern University, Boston, MA, USA

David M. J. Lazer

Institute for Quantitative Social Science, Harvard University, Cambridge, MA, USA

School of Public Policy, University of California, Riverside, Riverside, CA, USA

Kevin M. Esterling


Contributions

The order in which authors are listed does not indicate level of contribution. Conceptualization of theory and research design: S.D.M., D.M.J.L., D.F., K.M.E. and J.G. Data curation: S.D.M. and J.G. Methodology: D.F. Visualization: D.F. Funding acquisition: D.M.J.L. Project administration: K.M.E., S.D.M. and D.M.J.L. Writing, original draft: K.M.E. and D.M.J.L. Writing, review and editing: K.M.E., D.F., S.D.M., D.M.J.L. and J.G.

Corresponding author

Correspondence to David M. J. Lazer.

Ethics declarations

Competing interests.

The authors declare no competing interests.

Peer review

Peer review information.

Nature thanks Jason Reifler and the other, anonymous, reviewer(s) for their contribution to the peer review of this work. Peer review reports are available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Extended data figures and tables

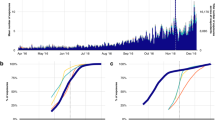

Extended Data Fig. 1 Replication of the DID results varying the number of deplatformed accounts.

DID estimates in which treatment is defined by the number of deplatformed users followed by the not-deplatformed misinformation sharers. Results are two-way fixed-effects point estimates (dots) and 95% confidence intervals (bars) of the difference-in-differences for all activity levels combined. Estimates use ordinary least squares with standard errors clustered at the user level. The figure shows results including and excluding Trump followers (color code). The x-axis shows the minimum number of deplatformed accounts the user followed, from at least one (1+) to at least ten (10+). Total sample sizes for each dosage level: Follow Trump (No): 1: 625,865; 2: 538,460; 3: 495,723; 4: 470,380; 5: 451,468; 6: 437,574; 7: 426,772; 8: 417,200; 9: 408,672; 10: 401,467; Follow Trump (Yes): 1: 688,174; 2: 570,637; 3: 514,352; 4: 481,684; 5: 460,676; 6: 444,656; 7: 432,659; 8: 421,924; 9: 413,241; 10: 405,766.
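To make the estimand concrete, the canonical two-group, two-period difference-in-differences contrast can be sketched from group means. This is a minimal illustration, not the authors' estimator: the paper fits two-way fixed-effects models by ordinary least squares with user-clustered standard errors, whereas this sketch computes only the point estimate and assumes a balanced panel with one pre-period and one post-period outcome per user (the setting in which the two-way fixed-effects coefficient equals this contrast). The function name `did_estimate` and the toy numbers are hypothetical.

```python
from statistics import mean


def did_estimate(treated_pre, treated_post, control_pre, control_post):
    """2x2 difference-in-differences computed from per-user outcomes.

    Each argument is a list of per-user outcomes (e.g. misinformation
    shares per day) for one group in one period. The result is the change
    in the treated group's mean minus the change in the control group's mean.
    """
    treated_change = mean(treated_post) - mean(treated_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treated_change - control_change


# Hypothetical toy data: followers of deplatformed accounts (treated)
# and non-followers (reference group), before and after the intervention.
followers_pre = [4.0, 6.0, 5.0]
followers_post = [2.0, 3.0, 1.0]
non_followers_pre = [3.0, 3.0, 3.0]
non_followers_post = [2.5, 2.5, 2.5]

effect = did_estimate(followers_pre, followers_post,
                      non_followers_pre, non_followers_post)
print(effect)  # -2.5
```

Here the treated mean falls by 3.0 while the reference mean falls by 0.5, so the DID estimate attributes a decline of 2.5 to treatment, under the usual parallel-trends assumption.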

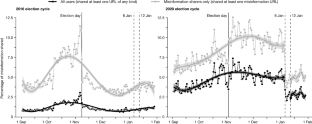

Extended Data Fig. 2 SRD results for total (bottom row) and average (top row) misinformation tweets and retweets, for deplatformed and not-deplatformed users.

Sample size includes 546 observations (days) on average across groups (x-axis), 404 before and 136 after. The effective number of observations is 64.31 days before and after, on average. The estimation excludes data between January 6th (the cutoff point) and January 12th (inclusive); January 6th corresponds to a score value of 0 and January 12th to a score value of 1. The optimal bandwidth is 32.6 days, with a triangular kernel and an order-one polynomial. Bars indicate 95% robust bias-corrected confidence intervals.
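The estimation choices in this caption (triangular kernel, order-one polynomial, a bandwidth in days, and a gap between score 0 and score 1) can be illustrated with a bare-bones local linear estimator. This is a sketch under stated assumptions, not the rdrobust implementation the authors cite: it takes a fixed, user-supplied bandwidth instead of a data-driven optimal one, returns only the conventional point estimate without robust bias correction, and assumes the left fit is evaluated at January 6th and the right fit at January 12th, with days strictly between them dropped (how the boundary days themselves are handled is also an assumption). All function names and the toy series are hypothetical.

```python
def triangular_weight(distance, bandwidth):
    """Triangular kernel: weight 1 at the cutoff, falling linearly to 0 at +/- bandwidth."""
    return max(0.0, 1.0 - abs(distance) / bandwidth)


def weighted_intercept(xs, ys, ws):
    """Weighted least-squares fit of y = a + b * x; returns a, the fitted value at x = 0."""
    total = sum(ws)
    xbar = sum(w * x for w, x in zip(ws, xs)) / total
    ybar = sum(w * y for w, y in zip(ws, ys)) / total
    sxx = sum(w * (x - xbar) ** 2 for w, x in zip(ws, xs))
    sxy = sum(w * (x - xbar) * (y - ybar) for w, x, y in zip(ws, xs, ys))
    return ybar - (sxy / sxx) * xbar


def boundary_value(points, bandwidth):
    """Order-one (local linear) fit evaluated at distance 0, with triangular weights."""
    if len(points) < 2:
        raise ValueError("need at least two observations inside the bandwidth")
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    ws = [triangular_weight(x, bandwidth) for x in xs]
    return weighted_intercept(xs, ys, ws)


def srd_jump(days, outcomes, left_cutoff, right_cutoff, bandwidth):
    """Right fit's value at right_cutoff minus left fit's value at left_cutoff.

    Days strictly between the two cutoffs are excluded from both fits.
    """
    left = [(d - left_cutoff, y) for d, y in zip(days, outcomes)
            if d <= left_cutoff and left_cutoff - d < bandwidth]
    right = [(d - right_cutoff, y) for d, y in zip(days, outcomes)
             if d >= right_cutoff and d - right_cutoff < bandwidth]
    return boundary_value(right, bandwidth) - boundary_value(left, bandwidth)


# Hypothetical toy series: day 0 stands in for January 6th, day 6 for January 12th.
def toy_outcome(day):
    if day <= 0:
        return 10.0 + 0.2 * day
    if day < 6:
        return 100.0  # inside the excluded window; must not affect the estimate
    return 7.0 + 0.1 * (day - 6)


days = list(range(-30, 41))
outcomes = [toy_outcome(d) for d in days]
jump = srd_jump(days, outcomes, left_cutoff=0, right_cutoff=6, bandwidth=20.0)
print(round(jump, 6))  # -3.0
```

Because the toy data are exactly linear on each side, the weighted fits recover the boundary values 10 and 7 regardless of the kernel weights, and the large values inside the excluded window have no influence on the estimated jump of -3.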

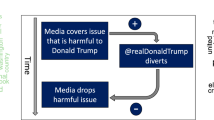

Extended Data Fig. 3 Time series of the daily mean of non-misinformation URL sharing.

Degree-five polynomial regression (fitted line) before and after the deplatforming intervention, separated by subgroup (panel rows), for liberal-slant news (right column) and conservative-slant news (left column) sharing activity. The shaded area around the fitted line is the 95% confidence interval of the fitted values. As a placebo test, we evaluate the effect of the intervention on sharing non-fake news for each of our subgroups. Because sharing non-misinformation does not violate Twitter’s Civic Integrity policy, irrespective of the ideological slant of the news, we do not expect the intervention to affect this form of Twitter engagement; see SI for how we identify the liberal and conservative slant of these domains from ref. 52. Among the subgroups, users typically did not change their sharing of liberal or conservative non-fake news. Taken together with the results in Fig. 2, this implies that these subgroups of users did not substitute conservative non-misinformation news sharing for misinformation during and after the insurrection.
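The fitted curves in this figure are degree-five polynomial regressions estimated before and after the intervention. A minimal, dependency-free sketch of one such segment fit is shown below; it solves the least-squares normal equations by Gaussian elimination and, as an assumption made here for numerical stability, rescales days to [-1, 1] before fitting. In practice a library routine (for example R's `lm` with `poly()`, or NumPy's `polyfit`) would be used instead; the helper names are hypothetical and confidence bands are not computed.

```python
def solve_linear_system(a, b):
    """Solve a x = b for square a by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def polyfit(xs, ys, degree):
    """Least-squares coefficients [c0, c1, ..., c_degree] from the normal equations."""
    size = degree + 1
    # (X^T X) c = X^T y, where X[i][j] = xs[i] ** j
    xtx = [[sum(x ** (j + k) for x in xs) for k in range(size)] for j in range(size)]
    xty = [sum(y * x ** j for x, y in zip(xs, ys)) for j in range(size)]
    return solve_linear_system(xtx, xty)


def polyval(coefs, x):
    return sum(c * x ** j for j, c in enumerate(coefs))


def fit_segment(days, values, degree=5):
    """Fit one pre- or post-intervention segment; return fitted values at the observed days."""
    lo, hi = min(days), max(days)
    scaled = [2.0 * (d - lo) / (hi - lo) - 1.0 for d in days]
    coefs = polyfit(scaled, values, degree)
    return [polyval(coefs, s) for s in scaled]


# Hypothetical pre-intervention segment whose true curve is a cubic in day,
# so a degree-five fit should reproduce it almost exactly.
pre_days = list(range(-60, 0))
pre_values = [2.0 + 0.001 * (d + 30) ** 3 for d in pre_days]
fitted = fit_segment(pre_days, pre_values)
print(max(abs(f - v) for f, v in zip(fitted, pre_values)) < 1e-6)  # True
```

The same call would be repeated on the post-intervention days to produce the second curve, one pair of fits per subgroup and news slant.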

Extended Data Fig. 4 Time series of misinformation tweets and retweets (panel columns), separately for high, medium and low activity users (panel rows).

Fitted straight lines are ordinary least squares linear regressions of the standardized daily total of misinformation retweeted (y-axis) on day (x-axis), fitted to the periods before January 6th and after January 12th. Shaded areas around the fitted lines are 95% confidence intervals.
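Assuming that "standardized" means z-scoring (subtracting the mean and dividing by the standard deviation), which the caption does not spell out, the two steps behind each fitted line can be sketched with the standard library. The use of the population standard deviation and the function names are assumptions for illustration; the fit would be run once on the pre-January 6th days and once on the post-January 12th days.

```python
from statistics import mean, pstdev


def standardize(values):
    """z-scores: (v - mean) / standard deviation (population SD assumed)."""
    mu, sd = mean(values), pstdev(values)
    return [(v - mu) / sd for v in values]


def ols_line(xs, ys):
    """Closed-form ordinary least squares for y = intercept + slope * x."""
    xbar, ybar = mean(xs), mean(ys)
    sxx = sum((x - xbar) ** 2 for x in xs)
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return ybar - slope * xbar, slope


# Hypothetical daily totals for five days in one period
days = [0, 1, 2, 3, 4]
totals = [10, 12, 14, 16, 18]
intercept, slope = ols_line(days, standardize(totals))
print(round(intercept, 4), round(slope, 4))
```

Standardizing puts the high-, medium- and low-activity panels on a common scale, so the slopes can be compared across rows even though raw volumes differ greatly.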

Extended Data Fig. 5 Replication of Fig. 5 with adjustment covariates.

Corresponding regression tables are Supplementary Information Tables 1–3. Two-way fixed-effects point estimates (dots) and 95% confidence intervals (bars) of the difference-in-differences for high-, moderate- and low-activity users, as well as all these levels combined (x-axis). P values (stars) are from two-sided t-tests based on ordinary least squares estimates with standard errors clustered at the user level. Estimates compare followers (treated group) and not-followers (reference group) of deplatformed users after January 12th (post-treatment period) and before January 6th (pre-treatment period). No multiple-testing correction was used. See Supplementary Information Tables 1–3 for exact values with all activity levels combined. Total sample sizes: not-followers (reference) and Trump-only followers: combined 306,089, high 53,962, moderate 219,375, low 32,003; followers: combined 662,216, high 156,941, moderate 449,560, low 53,442; followers (4+): combined 463,176, high 115,264, moderate 302,907, low 43,218.
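Given a point estimate and its clustered standard error, the two-sided test and 95% interval shown in the figure follow from the ratio of the two. The sketch below uses the standard normal as the reference distribution, which is an assumption: with hundreds of thousands of user clusters it is very close to the t distribution, but it is not necessarily the exact distribution the authors' software used. Computing the clustered standard error itself is out of scope, and the example numbers and the function name `wald_test` are hypothetical.

```python
import math
from statistics import NormalDist


def wald_test(estimate, std_error, level=0.95):
    """Two-sided test of estimate = 0 plus a Wald confidence interval.

    Uses a standard normal reference distribution (a large-sample
    approximation to the t distribution).
    """
    t_stat = estimate / std_error
    # P(|Z| >= |t|) = erfc(|t| / sqrt(2)) for a standard normal Z
    p_value = math.erfc(abs(t_stat) / math.sqrt(2.0))
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # about 1.96 when level = 0.95
    return t_stat, p_value, (estimate - z * std_error, estimate + z * std_error)


# Hypothetical DID estimate and user-clustered standard error
t_stat, p_value, (lower, upper) = wald_test(-0.10, 0.05)
print(round(t_stat, 3), round(p_value, 4), round(lower, 3), round(upper, 3))
```

An interval that excludes zero corresponds to a two-sided P value below 0.05 at the same confidence level, which is why the bars and stars in the figure tell a consistent story.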

Extended Data Fig. 6 Placebo test of SRD results for total (bottom row) and average (top row) shopping and sports tweets and retweets at the deplatforming intervention, among those not deplatformed.

Sample size includes 545 observations (days), 404 before the intervention and 141 after. Optimal bandwidth of 843.6 days with triangular kernel and order-one polynomial. Cutoff points on January 6th (score 0) and January 12th (score 1). Bars indicate 95% robust bias-corrected confidence intervals. These are placebo tests, since tweets about sports and shopping should not be affected by the insurrection or deplatforming.

Extended Data Fig. 7 Placebo test of SRD results for total (bottom row) and average (top row) misinformation tweets and retweets using December 20th as an arbitrary cutoff point.

Sample size includes 551 observations (days), 387 before the intervention and 164 after. Optimal bandwidth of 37.2 days with triangular kernel and order-one polynomial. Bars indicate 95% robust bias-corrected confidence intervals for the SRD coefficients. This is a placebo test of the intervention period.

Extended Data Fig. 8 Placebo test of SRD results for total (bottom row) and average (top row) misinformation tweets and retweets using January 18th as a cutoff point.

The parameters are very similar to those in Extended Data Fig. 7.

Supplementary information

Supplementary information.

Supplementary Figs. 1–5 provide descriptive information about our subgroups, a replication of the panel data using the Decahose, and robustness analyses for the SRD. Supplementary Tables 1–5 show full parameter estimates for the DID models, summary statistics for follower type and activity level, and P values for the DID analyses under different multiple comparisons corrections.

Reporting Summary

Peer Review File

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.


About this article

Cite this article.

McCabe, S.D., Ferrari, D., Green, J. et al. Post-January 6th deplatforming reduced the reach of misinformation on Twitter. Nature 630, 132–140 (2024). https://doi.org/10.1038/s41586-024-07524-8


Received: 27 October 2023

Accepted: 06 May 2024

Published: 05 June 2024

Issue Date: 06 June 2024

DOI: https://doi.org/10.1038/s41586-024-07524-8


Option A is an example of a testable hypothesis because it provides a specific prediction that can be tested. Explanation: In the context of science and research, a testable hypothesis is one that can be proven or disproven through experimental or observational design. It must provide a direction for the research and make clear, explicit ...

Study with Quizlet and memorize flashcards containing terms like Which of the following is a testable hypothesis? Select the best answer: -Selfies are the single worst thing to happen to our culture in the last two decades. -The number of vaccinations a child receives in the first three years of life will have no significant impact on that child's likelihood of being diagnosed with autism ...


Updated on January 12, 2019. A hypothesis is a tentative answer to a scientific question. A testable hypothesis is a hypothesis that can be proved or disproved as a result of testing, data collection, or experience. Only testable hypotheses can be used to conceive and perform an experiment using the scientific method .

A research hypothesis (plural "hypotheses") is a specific, testable prediction about the anticipated results of a study, established at its outset. It is a key component of the scientific method. Hypotheses connect theory to data and guide the research process towards expanding scientific understanding.

The experiment provided an accurate answer to the scientific question. What was the control group for this experiment? The control group was the rats fed the normal diet without added vitamins. Study with Quizlet and memorize flashcards containing terms like What does it mean for a hypothesis to be testable?, How does the scientific method help ...


A. Hypotheses change every time a new experiment is conducted. B. A hypothesis cannot ever be true or false because it is an opinion. C. Experimentation cannot support a hypothesis because it only tests predictions. D. Alternative hypotheses might provide a better answer to the research question.

Answer: The correct answer would be "Garden plants grow better in wet soil than in dry soil." A scientific hypothesis is a proposed or tentative explanation for the occurrence of a particular phenomenon. Testable hypotheses are those that can be supported or refuted on the basis of the results of testing, experience, or data collection.

Answer: A hypothesis. Explanation: The hypothesis is generated via a number of means, but is usually the result of a process of inductive reasoning where observations lead to the formation of a theory. Scientists then use a large battery of deductive methods to arrive at a hypothesis that is testable, falsifiable and realistic.

Study with Quizlet and memorize flashcards containing terms like Why is the scientific method used?, which of the following is a testable hypothesis? a) Taking zinc lozenges at the first sign of cold symptoms is wise. b) Sleeping 8 hours a night makes you feel better when you have a cold. c) Avoiding contact with other people reduces the chance of catching a cold. d) Being a good driver makes ...

A testable hypothesis is one that can be investigated via experiment or observation, generating measurable, empirical data. For example, 'If I water my garden plants with a sugar-water solution, then they will grow taller than if I water them with water alone'. Here, you are predicting a possible outcome and this hypothesis is testable because ...

Study with Quizlet and memorize flashcards containing terms like What's the difference between a hypothesis and a theory?, Place the following steps in sequence A] Recognizing a problem B] Forming hypothesis C] Making inferences, In the phrase, "The scientific method is an analytic process for determining why things happen," what's the best synonym for "analytic?" and more.

Which of the following is an example of a testable scientific hypothesis? A drink that contains 4 1/2 ounces of a proof liquor… approximately how many drinks does this beverage contain?

Study with Quizlet and memorize flashcards containing terms like You have been asked to conduct an experiment with different flavors of ice cream. Which of the following is a scientifically testable hypothesis about ice cream? Select ALL that apply. Strawberry ice cream is delicious. Which flavor of ice cream has more Calories? Hypotheses should be written as statements, not questions ...

In a press release dated 12 January 2021 (ref. 17), Twitter announced that it conducted the deplatforming intervention on 8 to 12 January, although the press release indicated that the company began to ...

A scientific hypothesis must be testable. For a hypothesis to be testable, it must be possible to make observations that agree or disagree with it. If a hypothesis cannot be tested by making observations, it is not scientific. ... This statement may or may not be true, but it is not a scientific hypothesis.

Study with Quizlet and memorize flashcards containing terms like A scientific theory must be supported with empirical evidence. T/F, When researchers replicate a study, they are seeking to __________. A. prove that the hypothesis upon which the study was founded is untestable B. develop a new hypothesis C. change the study to provide new results D. support or reject the hypothesis upon which ...