Data Collection – Methods Types and Examples

Data Collection

Definition:

Data collection is the process of gathering information from various sources so that it can be analyzed and used to make informed decisions. Common methods include surveys, interviews, experiments, and observation.

For data collection to be effective, it is important to have a clear understanding of what data is needed and why it is being collected. This involves identifying the population or sample being studied, determining the variables to be measured, and selecting appropriate methods for collecting and recording the data.

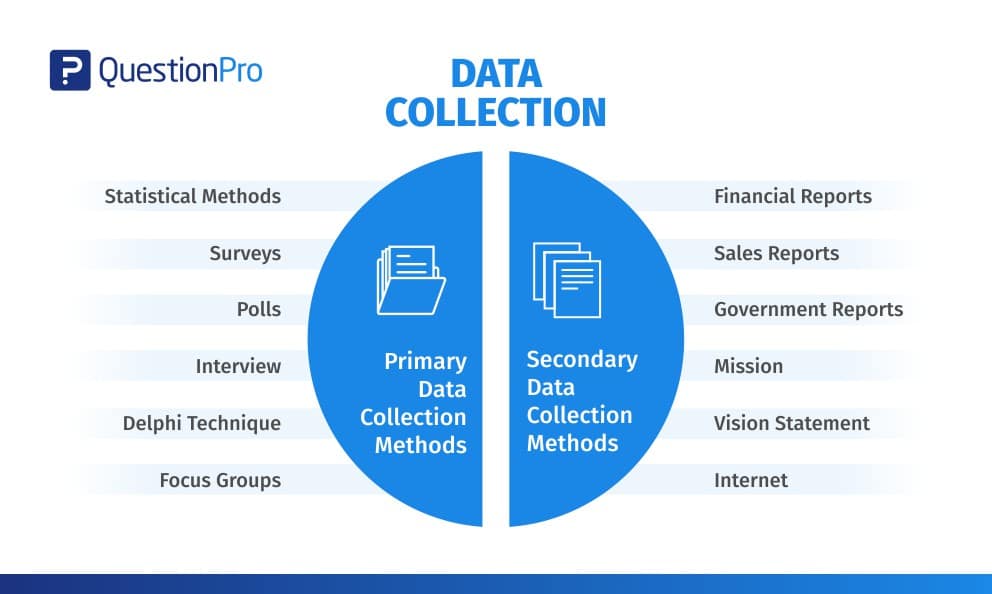

Types of Data Collection

The main types of data collection are as follows:

Primary Data Collection

Primary data collection is the process of gathering original and firsthand information directly from the source or target population. This type of data collection involves collecting data that has not been previously gathered, recorded, or published. Primary data can be collected through various methods such as surveys, interviews, observations, experiments, and focus groups. The data collected is usually specific to the research question or objective and can provide valuable insights that cannot be obtained from secondary data sources. Primary data collection is often used in market research, social research, and scientific research.

Secondary Data Collection

Secondary data collection is the process of gathering information that has already been collected and analyzed by someone else, rather than conducting new research to collect primary data. Secondary data can be drawn from various sources, such as published reports, books, journals, newspapers, websites, government publications, and other documents.

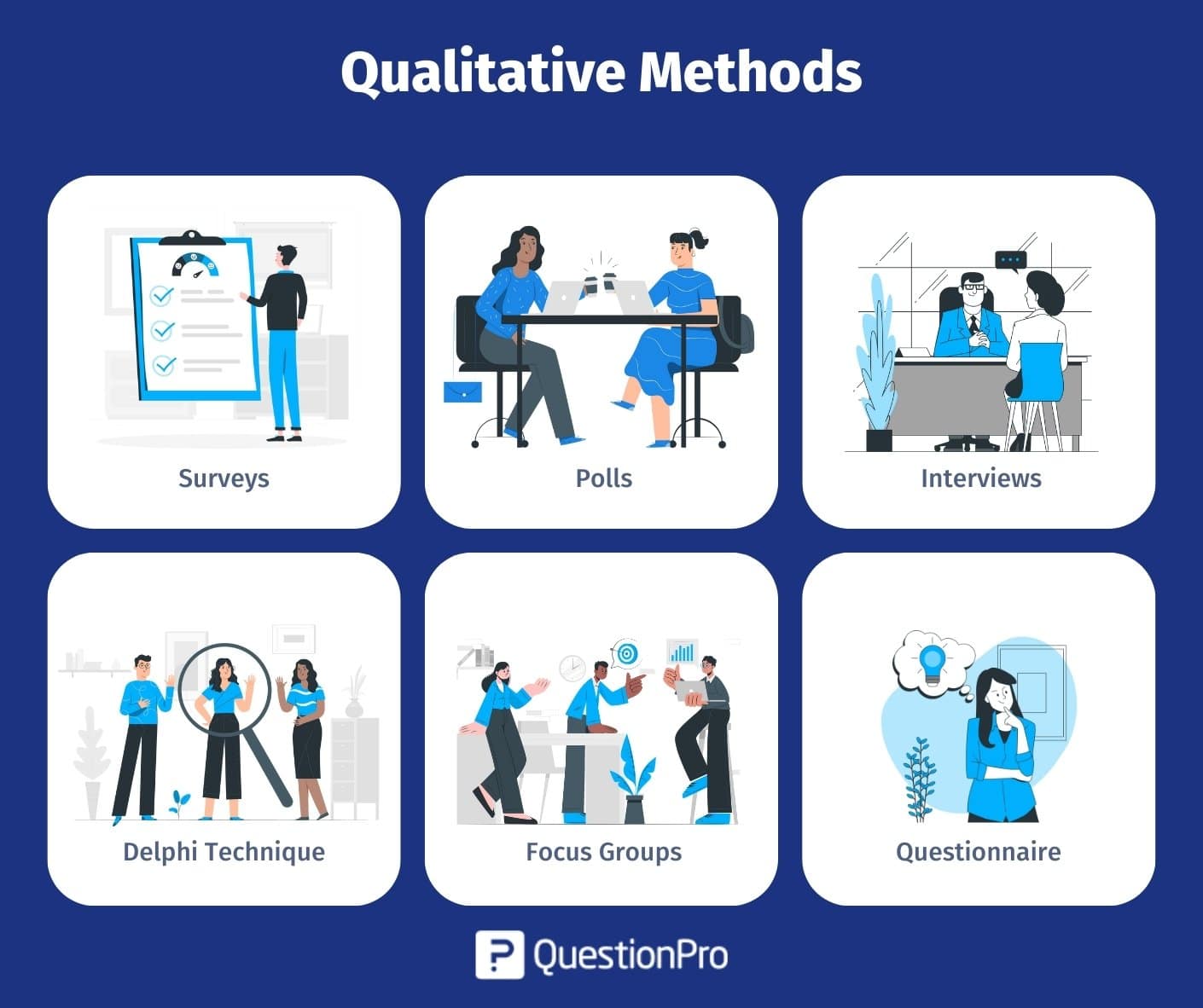

Qualitative Data Collection

Qualitative data collection is used to gather non-numerical data such as opinions, experiences, perceptions, and feelings, through techniques such as interviews, focus groups, observations, and document analysis. It seeks to understand the deeper meaning and context of a phenomenon or situation and is often used in social sciences, psychology, and humanities. Qualitative data collection methods allow for a more in-depth and holistic exploration of research questions and can provide rich and nuanced insights into human behavior and experiences.

Quantitative Data Collection

Quantitative data collection is used to gather numerical data that can be analyzed using statistical methods. This data is typically collected through surveys, experiments, and other structured data collection methods. Quantitative data collection seeks to quantify and measure variables, such as behaviors, attitudes, and opinions, in a systematic and objective way. This data is often used to test hypotheses, identify patterns, and establish correlations between variables. Quantitative data collection methods allow for precise measurement and generalization of findings to a larger population. It is commonly used in fields such as economics, psychology, and natural sciences.

Data Collection Methods

Common data collection methods are as follows:

Surveys

Surveys involve asking questions to a sample of individuals or organizations to collect data. Surveys can be conducted in person, over the phone, or online.

Interviews

Interviews involve a one-on-one conversation between the interviewer and the respondent. Interviews can be structured or unstructured and can be conducted in person or over the phone.

Focus Groups

Focus groups are group discussions that are moderated by a facilitator. Focus groups are used to collect qualitative data on a specific topic.

Observation

Observation involves watching and recording the behavior of people, objects, or events in their natural setting. Observation can be done overtly or covertly, depending on the research question.

Experiments

Experiments involve manipulating one or more variables and observing the effect on another variable. Experiments are commonly used in scientific research.

Case Studies

Case studies involve in-depth analysis of a single individual, organization, or event. Case studies are used to gain detailed information about a specific phenomenon.

Secondary Data Analysis

Secondary data analysis involves using existing data that was collected for another purpose. Secondary data can come from various sources, such as government agencies, academic institutions, or private companies.

How to Collect Data

The following are some steps to consider when collecting data:

- Define the objective: Before you start collecting data, you need to define the objective of the study. This will help you determine what data you need to collect and how to collect it.

- Identify the data sources: Identify the sources of data that will help you achieve your objective. These sources can be primary sources, such as surveys, interviews, and observations, or secondary sources, such as books, articles, and databases.

- Determine the data collection method: Once you have identified the data sources, you need to determine the data collection method. This could be through online surveys, phone interviews, or face-to-face meetings.

- Develop a data collection plan: Develop a plan that outlines the steps you will take to collect the data. This plan should include the timeline, the tools and equipment needed, and the personnel involved.

- Test the data collection process: Before you start collecting data, test the data collection process to ensure that it is effective and efficient.

- Collect the data: Collect the data according to the data collection plan you developed earlier. Make sure you record the data accurately and consistently.

- Analyze the data: Once you have collected the data, analyze it to draw conclusions and make recommendations.

- Report the findings: Report the findings of your data analysis to the relevant stakeholders. This could be in the form of a report, a presentation, or a publication.

- Monitor and evaluate the data collection process: After the data collection process is complete, monitor and evaluate the process to identify areas for improvement in future data collection efforts.

- Ensure data quality: Ensure that the collected data is of high quality and free from errors. This can be achieved by validating the data for accuracy, completeness, and consistency.

- Maintain data security: Ensure that the collected data is secure and protected from unauthorized access or disclosure. This can be achieved by implementing data security protocols and using secure storage and transmission methods.

- Follow ethical considerations: Follow ethical considerations when collecting data, such as obtaining informed consent from participants, protecting their privacy and confidentiality, and ensuring that the research does not cause harm to participants.

- Use appropriate data analysis methods: Use appropriate data analysis methods based on the type of data collected and the research objectives. This could include statistical analysis, qualitative analysis, or a combination of both.

- Record and store data properly: Record and store the collected data properly, in a structured and organized format. This will make it easier to retrieve and use the data in future research or analysis.

- Collaborate with other stakeholders: Collaborate with other stakeholders, such as colleagues, experts, or community members, to ensure that the data collected is relevant and useful for the intended purpose.
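As a minimal sketch of the data quality step above, the following Python snippet checks a handful of records for completeness, accuracy, and consistency. The field names, the plausible value ranges, and the sample records are all hypothetical; a real project would take them from its own data collection plan.

```python
# Hypothetical survey records; field names and values are illustrative only.
records = [
    {"id": 1, "age": 34, "country": "US", "score": 4},
    {"id": 2, "age": None, "country": "US", "score": 5},  # missing age
    {"id": 3, "age": 212, "country": "DE", "score": 3},   # implausible age
    {"id": 3, "age": 41, "country": "DE", "score": 9},    # repeated id, bad score
]

REQUIRED_FIELDS = ("id", "age", "country", "score")


def validate(record, seen_ids):
    """Return a list of human-readable problems found in one record."""
    problems = []
    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Accuracy: values must fall inside plausible ranges (assumed here).
    age = record.get("age")
    if isinstance(age, int) and not 0 <= age <= 120:
        problems.append("age out of range")
    score = record.get("score")
    if isinstance(score, int) and not 1 <= score <= 5:
        problems.append("score out of range")
    # Consistency: identifiers must be unique across the data set.
    if record.get("id") in seen_ids:
        problems.append("duplicate id")
    return problems


def validate_all(rows):
    """Map each row's position to its problems; clean rows are omitted."""
    seen_ids = set()
    report = {}
    for index, row in enumerate(rows):
        problems = validate(row, seen_ids)
        if problems:
            report[index] = problems
        seen_ids.add(row.get("id"))
    return report


report = validate_all(records)
for index, problems in report.items():
    print(index, problems)
```

Running checks like these before analysis makes it easier to decide whether a problematic record should be corrected, re-collected, or excluded.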

Applications of Data Collection

Data collection methods are widely used in different fields, including social sciences, healthcare, business, education, and more. Here are some examples of how data collection methods are used in different fields:

- Social sciences: Social scientists often use surveys, questionnaires, and interviews to collect data from individuals or groups. They may also use observation to collect data on social behaviors and interactions. This data is often used to study topics such as human behavior, attitudes, and beliefs.

- Healthcare: Data collection methods are used in healthcare to monitor patient health and track treatment outcomes. Electronic health records and medical charts are commonly used to collect data on patients’ medical history, diagnoses, and treatments. Researchers may also use clinical trials and surveys to collect data on the effectiveness of different treatments.

- Business: Businesses use data collection methods to gather information on consumer behavior, market trends, and competitor activity. They may collect data through customer surveys, sales reports, and market research studies. This data is used to inform business decisions, develop marketing strategies, and improve products and services.

- Education: In education, data collection methods are used to assess student performance and measure the effectiveness of teaching methods. Standardized tests, quizzes, and exams are commonly used to collect data on student learning outcomes. Teachers may also use classroom observation and student feedback to gather data on teaching effectiveness.

- Agriculture: Farmers use data collection methods to monitor crop growth and health. Sensors and remote sensing technology can be used to collect data on soil moisture, temperature, and nutrient levels. This data is used to optimize crop yields and minimize waste.

- Environmental sciences: Environmental scientists use data collection methods to monitor air and water quality, track climate patterns, and measure the impact of human activity on the environment. They may use sensors, satellite imagery, and laboratory analysis to collect data on environmental factors.

- Transportation: Transportation companies use data collection methods to track vehicle performance, optimize routes, and improve safety. GPS systems, on-board sensors, and other tracking technologies are used to collect data on vehicle speed, fuel consumption, and driver behavior.

Examples of Data Collection

Examples of real-time data collection include:

- Traffic Monitoring: Cities collect real-time data on traffic patterns and congestion through sensors on roads and cameras at intersections. This information can be used to optimize traffic flow and improve safety.

- Social Media Monitoring: Companies can collect real-time data on social media platforms such as Twitter and Facebook to monitor their brand reputation, track customer sentiment, and respond to customer inquiries and complaints in real-time.

- Weather Monitoring: Weather agencies collect real-time data on temperature, humidity, air pressure, and precipitation through weather stations and satellites. This information is used to provide accurate weather forecasts and warnings.

- Stock Market Monitoring: Financial institutions collect real-time data on stock prices, trading volumes, and other market indicators to make informed investment decisions and respond to market fluctuations in real-time.

- Health Monitoring: Medical devices such as wearable fitness trackers and smartwatches can collect real-time data on a person’s heart rate, blood pressure, and other vital signs. This information can be used to monitor health conditions and detect early warning signs of health issues.

Purpose of Data Collection

The purpose of data collection can vary depending on the context and goals of the study, but generally, it serves to:

- Provide information: Data collection provides information about a particular phenomenon or behavior that can be used to better understand it.

- Measure progress: Data collection can be used to measure the effectiveness of interventions or programs designed to address a particular issue or problem.

- Support decision-making: Data collection provides decision-makers with evidence-based information that can be used to inform policies, strategies, and actions.

- Identify trends: Data collection can help identify trends and patterns over time that may indicate changes in behaviors or outcomes.

- Monitor and evaluate: Data collection can be used to monitor and evaluate the implementation and impact of policies, programs, and initiatives.

When to use Data Collection

Data collection is used when there is a need to gather information or data on a specific topic or phenomenon. It is typically used in research, evaluation, and monitoring and is important for making informed decisions and improving outcomes.

Data collection is particularly useful in the following scenarios:

- Research: When conducting research, data collection is used to gather information on variables of interest to answer research questions and test hypotheses.

- Evaluation: Data collection is used in program evaluation to assess the effectiveness of programs or interventions, and to identify areas for improvement.

- Monitoring: Data collection is used in monitoring to track progress towards achieving goals or targets, and to identify any areas that require attention.

- Decision-making: Data collection is used to provide decision-makers with information that can be used to inform policies, strategies, and actions.

- Quality improvement: Data collection is used in quality improvement efforts to identify areas where improvements can be made and to measure progress towards achieving goals.

Characteristics of Data Collection

Several characteristics help to ensure the quality and accuracy of the data gathered. These characteristics include:

- Validity: Validity refers to the accuracy and relevance of the data collected in relation to the research question or objective.

- Reliability: Reliability refers to the consistency and stability of the data collection process, ensuring that the results obtained are consistent over time and across different contexts.

- Objectivity: Objectivity refers to the impartiality of the data collection process, ensuring that the data collected is not influenced by the biases or personal opinions of the data collector.

- Precision: Precision refers to the degree of accuracy and detail in the data collected, ensuring that the data is specific and accurate enough to answer the research question or objective.

- Timeliness: Timeliness refers to the efficiency and speed with which the data is collected, ensuring that the data is available in time to meet the needs of the research or evaluation.

- Ethical considerations: Ethical considerations refer to the ethical principles that must be followed when collecting data, such as ensuring confidentiality and obtaining informed consent from participants.

Advantages of Data Collection

There are several advantages of data collection that make it an important process in research, evaluation, and monitoring. These advantages include:

- Better decision-making: Data collection provides decision-makers with evidence-based information that can be used to inform policies, strategies, and actions, leading to better decision-making.

- Improved understanding: Data collection helps to improve our understanding of a particular phenomenon or behavior by providing empirical evidence that can be analyzed and interpreted.

- Evaluation of interventions: Data collection is essential in evaluating the effectiveness of interventions or programs designed to address a particular issue or problem.

- Identifying trends and patterns: Data collection can help identify trends and patterns over time that may indicate changes in behaviors or outcomes.

- Increased accountability: Data collection increases accountability by providing evidence that can be used to monitor and evaluate the implementation and impact of policies, programs, and initiatives.

- Validation of theories: Data collection can be used to test hypotheses and validate theories, leading to a better understanding of the phenomenon being studied.

- Improved quality: Data collection is used in quality improvement efforts to identify areas where improvements can be made and to measure progress towards achieving goals.

Limitations of Data Collection

While data collection has several advantages, it also has some limitations that must be considered. These limitations include:

- Bias: Data collection can be influenced by the biases and personal opinions of the data collector, which can lead to inaccurate or misleading results.

- Sampling bias: The sample from which data is collected may not be representative of the entire population, leading to inaccurate results.

- Cost: Data collection can be expensive and time-consuming, particularly for large-scale studies.

- Limited scope: Data collection is limited to the variables being measured, which may not capture the entire picture or context of the phenomenon being studied.

- Ethical considerations: Data collection must follow ethical principles to protect the rights and confidentiality of the participants, which can limit the type of data that can be collected.

- Data quality issues: Data collection may result in data quality issues such as missing or incomplete data, measurement errors, and inconsistencies.

- Limited generalizability: Findings based on the collected data may not generalize to other contexts or populations.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Data collection in research: Your complete guide

Last updated

31 January 2023

Reviewed by

Cathy Heath

In the late 16th century, Francis Bacon coined the phrase "knowledge is power," which implies that knowledge is a powerful force, like physical strength. In the 21st century, knowledge in the form of data is unquestionably powerful.

But data isn't something you just have - you need to collect it. This means utilizing a data collection process and turning the collected data into knowledge that you can leverage into a successful strategy for your business or organization.

Believe it or not, there's more to data collection than just conducting a Google search. In this complete guide, we shine a spotlight on data collection, outlining what it is, types of data collection methods, common challenges in data collection, data collection techniques, and the steps involved in data collection.

- What is data collection?

There are two specific data collection techniques: primary and secondary data collection. Primary data collection is the process of gathering data directly from sources. It's often considered the most reliable data collection method, as researchers can collect information directly from respondents.

Secondary data collection uses data that has already been collected by someone else and is readily available. This data is usually less expensive and quicker to obtain than primary data.

- What are the different methods of data collection?

There are several data collection methods, which can be either manual or automated. Manual data collection involves collecting data by hand, typically with pen and paper, while automated data collection involves using software to collect data from online sources, such as social media, website data, and transaction data.

Here are the five most popular methods of data collection:

Surveys

Surveys are a very popular method of data collection that organizations can use to gather information from many people. Researchers can conduct multi-mode surveys that reach respondents in different ways, including in person, by mail, over the phone, or online.

As a method of data collection, surveys have several advantages. For instance, they are relatively quick and easy to administer, you can be flexible in what you ask, and they can be tailored to collect data on various topics or from certain demographics.

However, surveys also have several disadvantages. For instance, they can be expensive to administer, and the results may not represent the population as a whole. Additionally, survey data can be challenging to interpret. It may also be subject to bias if the questions are not well-designed or if the sample of people surveyed is not representative of the population of interest.

Interviews

Interviews are a common method of collecting data in social science research. You can conduct interviews in person, over the phone, or even via email or online chat.

Interviews are a great way to collect qualitative and quantitative data. Qualitative interviews are likely your best option if you need to collect detailed information about your subjects' experiences or opinions. If you need to collect more generalized data about your subjects' demographics or attitudes, then quantitative interviews may be a better option.

Interviews are very flexible, allowing you to ask follow-up questions and explore topics in more depth. The downside is that interviews can be time-consuming and expensive due to the amount of information to be analyzed. They are also prone to bias, as both the interviewer and the respondent may have certain expectations or preconceptions that may influence the data.

Direct observation

Observation is a direct way of collecting data. It can be structured (with a specific protocol to follow) or unstructured (simply observing without a particular plan).

Organizations and businesses use observation as a data collection method to gather information about their target market, customers, or competition. Businesses can learn about consumer behavior, preferences, and trends by observing people using their products or services.

There are two types of observation: participatory and non-participatory. In participatory observation, the researcher is actively involved in the observed activities. This type of observation is used in ethnographic research, where the researcher wants to understand a group's culture and social norms. Non-participatory observation is when researchers observe from a distance and do not interact with the people or environment they are studying.

There are several advantages to using observation as a data collection method. It can provide insights that may not be apparent through other methods, such as surveys or interviews. Researchers can also observe behavior in a natural setting, which can provide a more accurate picture of what people do and how and why they behave in a certain context.

There are some disadvantages to using observation as a method of data collection. It can be time-consuming, intrusive, and expensive to observe people for extended periods. Observations can also be tainted if the researcher is not careful to avoid personal biases or preconceptions.

Automated data collection

Business applications and websites are increasingly collecting data electronically to improve the user experience or for marketing purposes.

There are a few different ways that organizations can collect data automatically. One way is through cookies, which are small pieces of data stored on a user's computer. They track a user's browsing history and activity on a site, measuring levels of engagement with a business’s products or services, for example.

Another way organizations can collect data automatically is through web beacons. Web beacons are small images embedded on a web page to track a user's activity.

Finally, organizations can also collect data through mobile apps, which can track user location, device information, and app usage. This data can be used to improve the user experience and for marketing purposes.

Automated data collection is a valuable tool for businesses, helping improve the user experience or target marketing efforts. Businesses should aim to be transparent about how they collect and use this data.

Sourcing data through information service providers

Organizations need to be able to collect data from a variety of sources, including social media, weblogs, and sensors. The process to do this and then use the data for action needs to be efficient, targeted, and meaningful.

In the era of big data, organizations are increasingly turning to information service providers (ISPs) and other external data sources to help them collect data to make crucial decisions.

Information service providers help organizations collect data by offering personalized services that suit the specific needs of the organizations. These services can include data collection, analysis, management, and reporting. By partnering with an ISP, organizations can gain access to the newest technology and tools to help them to gather and manage data more effectively.

There are also several tools and techniques that organizations can use to collect data from external sources, such as web scraping, which collects data from websites, and data mining, which involves using algorithms to extract data from large data sets.
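To make the web scraping idea concrete, here is a minimal sketch that uses Python's standard-library `html.parser` module to turn an HTML table into a list of records. The HTML snippet and its values are made up for illustration, and the `TableExtractor` class is a hypothetical helper rather than part of any library. A real scraper would first download the page and should respect the site's terms of use.

```python
from html.parser import HTMLParser

# Inline stand-in for a downloaded page; the place names and numbers
# are invented for this example.
SAMPLE_HTML = """
<table>
  <tr><th>City</th><th>Population</th></tr>
  <tr><td>Springfield</td><td>30720</td></tr>
  <tr><td>Shelbyville</td><td>28500</td></tr>
</table>
"""


class TableExtractor(HTMLParser):
    """Collect the text of every table cell, grouped by row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        # Only text inside a cell is kept; whitespace between tags is ignored.
        if self._in_cell:
            self._row[-1] += data.strip()


parser = TableExtractor()
parser.feed(SAMPLE_HTML)
parser.close()

# Treat the first row as column names and the rest as data rows.
header, *body = parser.rows
records = [dict(zip(header, row)) for row in body]
print(records)
```

Scraped values arrive as text, so numeric fields such as the population column would still need converting and validating before analysis.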

Organizations can also use APIs (application programming interface) to collect data from external sources. APIs allow organizations to access data stored in another system and share and integrate it into their own systems.

Finally, organizations can also use manual methods to collect data from external sources. This can involve contacting companies or individuals directly to request data. Whichever approach they choose, organizations need the right tools and methods to get the insights they need.

- What are common challenges in data collection?

There are many challenges that researchers face when collecting data. Here are five common examples:

Big data environments

Data collection can be a challenge in big data environments for several reasons. First, the data can be located in different places, such as archives, libraries, or online, and the sheer volume can make it difficult to identify the most relevant data sets.

Second, the complexity of data sets can make it challenging to extract the desired information. Third, the distributed nature of big data environments can make it difficult to collect data promptly and efficiently.

It is therefore important to have a well-designed data collection strategy that considers the specific needs of the organization and which data sets are the most relevant. Consideration should also be given to the tools and resources available to support data collection and protect the data from unintended use.

Data bias

Data bias is a common challenge in data collection. It occurs when data is collected from a sample that is not representative of the population of interest.

There are different types of data bias, but some common ones include selection bias, self-selection bias, and response bias. Selection bias can occur when the collected data does not represent the population being studied. For example, if a study only includes data from people who volunteer to participate, that data may not represent the general population.

Self-selection bias can also occur when people self-select into a study, such as by taking part only if they think they will benefit from it. Response bias happens when people respond in a way that is not honest or accurate, such as by only answering questions that make them look good.

These types of data bias present a challenge because they can lead to inaccurate results and conclusions about behaviors, perceptions, and trends. Data bias can be reduced by identifying potential sources or themes of bias and setting guidelines for minimizing them.
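The effect of a volunteer-only sample can be shown with a small, deliberately artificial example. In the hypothetical population below, satisfied people are more likely to volunteer, so the average among volunteers overstates the true average. All values are invented for illustration.

```python
from statistics import mean

# Hypothetical population: (satisfaction score from 1 to 5, volunteered?)
# In this made-up data, satisfied people volunteer more often.
population = [
    (5, True), (5, True), (4, True), (4, False), (3, False),
    (3, False), (2, False), (2, True), (1, False), (1, False),
]

# Average over everyone, which a study would ideally estimate.
true_mean = mean(score for score, _ in population)

# Average over volunteers only, which a self-selected sample would report.
volunteer_mean = mean(
    score for score, volunteered in population if volunteered
)

# The gap between the two is the bias introduced by self-selection.
bias = volunteer_mean - true_mean

print(f"population mean: {true_mean}")
print(f"volunteer mean:  {volunteer_mean}")
print(f"bias:            {bias}")
```

In practice the true population mean is unknown, which is why a sampling method that does not depend on willingness to respond matters.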

Lack of quality assurance processes

One of the biggest challenges in data collection is the lack of quality assurance processes. This can lead to several problems, including incorrect data, missing data, and inconsistencies between data sets.

Quality assurance is important because there are many data sources, and each source may have different levels of quality or corruption. There are also different ways of collecting data, and data quality may vary depending on the method used.

There are several ways to improve quality assurance in data collection. These include developing clear and consistent goals and guidelines for data collection, implementing quality control measures, using standardized procedures, and employing data validation techniques. By taking these steps, you can ensure that your data is of adequate quality to inform decision-making.

Limited access to data

Another challenge in data collection is limited access to data. This can be due to several reasons, including privacy concerns, the sensitive nature of the data, security concerns, or simply the fact that data is not readily available.

Legal and compliance regulations

Most countries have regulations governing how data can be collected, used, and stored. In some cases, data collected in one country may not be transferred to or used in another. This means gaining a global perspective can be a challenge.

For example, if a company is required to comply with the EU General Data Protection Regulation (GDPR), it may not be able to collect personal data from individuals in the EU without a lawful basis, such as their explicit consent. This can make it difficult to collect data from a target audience.

Legal and compliance regulations can be complex, and it's important to ensure that all data is collected in a way that complies with the relevant regulations.

- What are the key steps in the data collection process?

There are five steps involved in the data collection process. They are:

1. Decide what data you want to gather

Have a clear understanding of the questions you are asking, and then consider where the answers might lie and how you might obtain them. This saves time and resources by avoiding the collection of irrelevant data, and helps maintain the quality of your datasets.

2. Establish a deadline for data collection

Establishing a deadline for data collection helps you avoid collecting too much data, which can be costly and time-consuming to analyze. It also allows you to plan for data analysis and prompt interpretation. Finally, it helps you meet your research goals and objectives and allows you to move forward.

3. Select a data collection approach

The data collection approach you choose will depend on different factors, including the type of data you need, available resources, and the project timeline. For instance, if you need qualitative data, you might choose a focus group or interview methodology. If you need quantitative data, then a survey or observational study may be the most appropriate form of collection.

4. Gather information

When collecting data for your business, identify your business goals first. Once you know what you want to achieve, you can start collecting data to reach those goals. The most important thing is to ensure that the data you collect is reliable and valid. Otherwise, any decisions you make using the data could result in a negative outcome for your business.

5. Examine the information and apply your findings

As a researcher, it's important to examine the data you're collecting and analyzing before you apply your findings. This is because data can be misleading, leading to inaccurate conclusions. Ask yourself whether the data is what you were expecting and whether it is similar to other datasets you have looked at.

There are many scientific ways to examine data, but some common methods include:

- looking at the distribution of data points

- examining the relationships between variables

- looking for outliers

By taking the time to examine your data and noticing any patterns, strange or otherwise, you can avoid making mistakes that could invalidate your research.
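The three checks listed above can be sketched with Python's standard `statistics` module. The paired measurements are hypothetical, and the helper names (`summarize`, `iqr_outliers`, `pearson`) are introduced only for this example. The outlier rule used here, 1.5 times the interquartile range, is one common convention rather than the only option.

```python
from statistics import mean, median, quantiles, stdev

# Hypothetical paired measurements, e.g. weekly hours of product use
# and a satisfaction rating. The last pair contains a suspicious value.
hours = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 40.0]
rating = [2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0, 3.0]


def summarize(values):
    """Describe the distribution with a few summary statistics."""
    return {
        "mean": mean(values),
        "median": median(values),
        "stdev": stdev(values),
    }


def iqr_outliers(values):
    """Return values lying more than 1.5 * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [v for v in values if v < low or v > high]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


print("distribution:", summarize(hours))
print("outliers:", iqr_outliers(hours))
print("correlation with all points:", pearson(hours, rating))
print("correlation without last point:", pearson(hours[:-1], rating[:-1]))
```

In this made-up data, a single extreme value hides an otherwise perfectly linear relationship, which is why looking for outliers before interpreting relationships is worthwhile.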

- How qualitative analysis software streamlines the data collection process

Knowledge derived from data does indeed carry power. However, if you don't convert the knowledge into action, it will remain a resource of unexploited energy and wasted potential.

Luckily, data collection tools enable organizations to streamline their data collection and analysis processes and leverage the derived knowledge to grow their businesses. For instance, qualitative analysis software can be highly advantageous in data collection by streamlining the process, making it more efficient and less time-consuming.

Qualitative analysis software also provides a structure for data collection and analysis, which helps keep data quality high. It can help to uncover patterns and relationships that would otherwise be difficult to discern. Moreover, you can use it to replace more expensive data collection methods, such as focus groups or surveys.

Overall, qualitative analysis software can be valuable for any researcher looking to collect and analyze data. By increasing efficiency, improving data quality, and providing greater insights, qualitative software can help to make the research process much more efficient and effective.

A Guide to Data Collection: Methods, Process, and Tools

Whether your field is development economics, international development, the nonprofit sector, or myriad other industries, effective data collection is essential. It informs decision-making and increases your organization’s impact. However, the process of data collection can be complex and challenging. If you’re in the beginning stages of creating a data collection process, this guide is for you. It outlines tested methods, efficient procedures, and effective tools to help you improve your data collection activities and outcomes. At SurveyCTO, we’ve used our years of experience and expertise to build a robust, secure, and scalable mobile data collection platform. It’s trusted by respected institutions like The World Bank, J-PAL, Oxfam, and the Gates Foundation, and it’s changed the way many organizations collect and use data. With this guide, we want to share what we know and help you get ready to take the first step in your data collection journey.

Main takeaways from this guide

- Before starting the data collection process, define your goals and identify data sources, which can be primary (first-hand research) or secondary (existing resources).

- Your data collection method should align with your goals, resources, and the nature of the data needed. Surveys, interviews, observations, focus groups, and forms are common data collection methods.

- Sampling involves selecting a representative group from a larger population. Choosing the right sampling method to gather representative and relevant data is crucial.

- Crafting effective data collection instruments like surveys and questionnaires is key. Instruments should undergo rigorous testing for reliability and accuracy.

- Data collection is an ongoing, iterative process that demands real-time monitoring and adjustments to ensure high-quality, reliable results.

- After data collection, data should be cleaned to eliminate errors and organized for efficient analysis. The data collection journey further extends into data analysis, where patterns and useful information that can inform decision-making are discovered.

- Common challenges in data collection include data quality and consistency issues, data security concerns, and limitations with offline surveys. Employing robust data validation processes, implementing strong security protocols, and using offline-enabled data collection tools can help overcome these challenges.

- Data collection, entry, and management tools and data analysis, visualization, reporting, and workflow tools can streamline the data collection process, improve data quality, and facilitate data analysis.

What is data collection?

The traditional definition of data collection might lead us to think of gathering information through surveys, observations, or interviews. However, the modern-age definition of data collection extends beyond conducting surveys and observations. It encompasses the systematic gathering and recording of any kind of information through digital or manual methods. Data collection can be as routine as a doctor logging a patient’s information into an electronic medical record system during each clinic visit, or as specific as keeping a record of mosquito nets delivered to a rural household.

Getting started with data collection

Before starting your data collection process, you must clearly understand what you aim to achieve and how you’ll get there. Below are some actionable steps to help you get started.

1. Define your goals

Defining your goals is a crucial first step. Engage relevant stakeholders and team members in an iterative and collaborative process to establish clear goals. It’s important that projects start with the identification of key questions and desired outcomes to ensure you focus your efforts on gathering the right information.

Start by understanding the purpose of your project: what problem are you trying to solve, or what change do you want to bring about? Think about your project’s potential outcomes and obstacles and try to anticipate what kind of data would be useful in these scenarios. Consider who will be using the data you collect and what data would be the most valuable to them. Think about the long-term effects of your project and how you will measure these over time. Lastly, leverage any historical data from previous projects to help you refine key questions that may have been overlooked previously.

Once questions and outcomes are established, your data collection goals may still vary based on the context of your work. To demonstrate, let’s use the example of an international organization working on a healthcare project in a remote area.

- If you’re a researcher, your goal will revolve around collecting primary data to answer specific questions. This could involve designing a survey or conducting interviews to collect first-hand data on patient improvement, disease or illness prevalence, and behavior changes (such as an increase in patients seeking healthcare).

- If you’re part of the monitoring and evaluation (M&E) team, your goal will revolve around measuring the success of your healthcare project. This could involve collecting primary data through surveys or observations and developing a dashboard to display real-time metrics like the number of patients treated, the percentage reduction in disease incidence, and average patient wait times. Your focus would be using this data to implement any needed program changes and ensure your project meets its objectives.

- If you’re part of a field team, your goal will center around the efficient and accurate execution of project plans. You might be responsible for using data collection tools to capture pertinent information in different settings, such as interviews conducted directly with the sample community or over the phone. The data you collect and manage will directly influence the operational efficiency of the project and assist in achieving the project’s overarching objectives.

2. Identify your data sources

The crucial next step in your research process is determining your data source. Essentially, there are two main data types to choose from: primary and secondary.

- Primary data is the information you collect directly from first-hand engagements. It’s gathered specifically for your research and tailored to your research question. Primary data collection methods can range from surveys and interviews to focus groups and observations. Because you design the data collection process, primary data can offer precise, context-specific information directly related to your research objectives. For example, suppose you are investigating the impact of a new education policy. In that case, primary data might be collected through surveys distributed to teachers or interviews with school administrators dealing directly with the policy’s implementation.

- Secondary data, on the other hand, is derived from resources that already exist. This can include information gathered for other research projects, administrative records, historical documents, statistical databases, and more. While not originally collected for your specific study, secondary data can offer valuable insights and background information that complement your primary data. For instance, continuing with the education policy example, secondary data might involve academic articles about similar policies, government reports on education, or previous survey data about teachers’ opinions on educational reforms.

While both types of data have their strengths, this guide will predominantly focus on primary data and the methods to collect it. Primary data is often emphasized in research because it provides fresh, first-hand insights that directly address your research questions. Primary data also allows for more control over the data collection process, ensuring data is relevant, accurate, and up-to-date.

However, secondary data can offer critical context, allow for longitudinal analysis, save time and resources, and provide a comparative framework for interpreting your primary data. It can be a crucial backdrop against which your primary data can be understood and analyzed. While we focus on primary data collection methods in this guide, we encourage you not to overlook the value of incorporating secondary data into your research design where appropriate.

3. Choose your data collection method

When choosing your data collection method, there are many options at your disposal. Data collection is not limited to methods like surveys and interviews. In fact, many of the processes in our daily lives serve the goal of collecting data, from intake forms to automated endpoints, such as payment terminals and mass transit card readers. Let us dive into some common types of data collection methods:

Surveys and Questionnaires

Surveys and questionnaires are tools for gathering information about a group of individuals, typically by asking them predefined questions. They can be used to collect quantitative and qualitative data and be administered in various ways, including online, over the phone, in person (offline), or by mail.

- Advantages: They allow researchers to reach many participants quickly and cost-effectively, making them ideal for large-scale studies. The structured format of questions makes analysis easier.

- Disadvantages: They may not capture complex or nuanced information as participants are limited to predefined response choices. Also, there can be issues with response bias, where participants might provide socially desirable answers rather than honest ones.

Interviews

Interviews involve a one-on-one conversation between the researcher and the participant. The interviewer asks open-ended questions to gain detailed information about the participant’s thoughts, feelings, experiences, and behaviors.

- Advantages: They allow for an in-depth understanding of the topic at hand. The researcher can adapt the questioning in real time based on the participant’s responses, allowing for more flexibility.

- Disadvantages: They can be time-consuming and resource-intensive, as they require trained interviewers and a significant amount of time for both conducting and analyzing responses. They may also introduce interviewer bias if not conducted carefully, due to how an interviewer presents questions and perceives the respondent, and how the respondent perceives the interviewer.

Observations

Observations involve directly observing and recording behavior or other phenomena as they occur in their natural settings.

- Advantages: Observations can provide valuable contextual information, as researchers can study behavior in the environment where it naturally occurs, reducing the risk of artificiality associated with laboratory settings or self-reported measures.

- Disadvantages: Observational studies may suffer from observer bias, where the observer’s expectations or biases could influence their interpretation of the data. Also, some behaviors might be altered if subjects are aware they are being observed.

Focus Groups

Focus groups are guided discussions among selected individuals to gain information about their views and experiences.

- Advantages: Focus groups allow for interaction among participants, which can generate a diverse range of opinions and ideas. They are good for exploring new topics where there is little pre-existing knowledge.

- Disadvantages: Dominant voices in the group can sway the discussion, potentially silencing less assertive participants. They also require skilled facilitators to moderate the discussion effectively.

Forms

Forms are standardized documents with blank fields for collecting data in a systematic manner. They are often used in fields like Customer Relationship Management (CRM) or Electronic Medical Records (EMR) data entry. Surveys may also be referred to as forms.

- Advantages: Forms are versatile, easy to use, and efficient for data collection. They can streamline workflows by standardizing the data entry process.

- Disadvantages: They may not provide in-depth insights as the responses are typically structured and limited. There is also potential for errors in data entry, especially when done manually.

Selecting the right data collection method should be an intentional process, taking into consideration the unique requirements of your project. The method selected should align with your goals, available resources, and the nature of the data you need to collect.

If you aim to collect quantitative data, surveys, questionnaires, and forms can be excellent tools, particularly for large-scale studies. These methods are suited to providing structured responses that can be analyzed statistically, delivering solid numerical data.

However, if you’re looking to uncover a deeper understanding of a subject, qualitative data might be more suitable. In such cases, interviews, observations, and focus groups can provide richer, more nuanced insights. These methods allow you to explore experiences, opinions, and behaviors deeply. Some surveys can also include open-ended questions that provide qualitative data.

The cost of data collection is also an important consideration. If you have budget constraints, in-depth, in-person conversations with every member of your target population may not be practical. In such cases, distributing questionnaires or forms can be a cost-saving approach.

Additional considerations include language barriers and connectivity issues. If your respondents speak different languages, consider translation services or multilingual data collection tools. If your target population resides in areas with limited connectivity and your method will be to collect data using mobile devices, ensure your tool provides offline data collection, which will allow you to carry out your data collection plan without internet connectivity.

4. Determine your sampling method

Now that you’ve established your data collection goals and how you’ll collect your data, the next step is deciding whom to collect your data from. Sampling involves carefully selecting a representative group from a larger population. Choosing the right sampling method is crucial for gathering representative and relevant data that aligns with your data collection goal.

Consider the following guidelines to choose the appropriate sampling method for your research goal and data collection method:

- Understand Your Target Population: Start by conducting thorough research of your target population. Understand who they are, their characteristics, and subgroups within the population.

- Anticipate and Minimize Biases: Anticipate and address potential biases within the target population to help minimize their impact on the data. For example, will your sampling method accurately reflect all ages, genders, cultures, etc., of your target population? Are there barriers to participation for any subgroups? Your sampling method should allow you to capture the most accurate representation of your target population.

- Maintain Cost-Effective Practices: Consider the cost implications of your chosen sampling methods. Some sampling methods will require more resources, time, and effort. Your chosen sampling method should balance the cost factors with the ability to collect your data effectively and accurately.

- Consider Your Project’s Objectives: Tailor the sampling method to meet your specific objectives and constraints, such as M&E teams requiring real-time impact data and researchers needing representative samples for statistical analysis.

By adhering to these guidelines, you can make informed choices when selecting a sampling method, maximizing the quality and relevance of your data collection efforts.
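To make the idea of representativeness concrete, here is a minimal Python sketch contrasting a simple random sample with a proportional stratified sample, which draws the same fraction from each subgroup so that small subgroups are not missed by chance. The function names, the `area` field, and the household counts are hypothetical and chosen only for illustration. Other sampling designs (cluster, systematic, and so on) are not covered here.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, seed=None):
    """Draw n units at random, without replacement."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def stratified_sample(population, key, fraction, seed=None):
    """Draw the same fraction from every stratum (at least one unit each)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[key(unit)].append(unit)

    sample = []
    for name in sorted(strata):
        members = strata[name]
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical population: 80 urban and 20 rural households.
households = (
    [{"id": i, "area": "urban"} for i in range(80)]
    + [{"id": i, "area": "rural"} for i in range(80, 100)]
)

chosen = stratified_sample(households, key=lambda h: h["area"], fraction=0.1, seed=1)
print(len(chosen))  # 8 urban + 2 rural households
```

With a simple random sample of ten households, the number of rural households drawn varies from run to run and can be zero. The stratified version fixes it at two, at the cost of needing to know each unit's subgroup in advance.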

5. Identify and train collectors

Not every data collection use case requires data collectors, but training individuals responsible for data collection becomes crucial in scenarios involving field presence.

The SurveyCTO platform supports both self-response survey modes and surveys that require a human field worker to do in-person interviews. Whether you’re hiring and training data collectors, utilizing an existing team, or training existing field staff, we offer comprehensive guidance and the right tools to ensure effective data collection practices.

Here are some common training approaches for data collectors:

- In-Class Training: Comprehensive sessions covering protocols, survey instruments, and best practices empower data collectors with skills and knowledge.

- Tests and Assessments: Assessments evaluate collectors’ understanding and competence, highlighting areas where additional support is needed.

- Mock Interviews: Simulated interviews refine collectors’ techniques and communication skills.

- Pre-Recorded Training Sessions: Accessible reinforcement and self-paced learning to refresh and stay updated.

Training data collectors is vital for successful data collection techniques. Your training should focus on proper instrument usage and effective interaction with respondents, including communication skills, cultural literacy, and ethical considerations.

Remember, training is an ongoing process. Knowledge gaps and issues may arise in the field, necessitating further training.

Moving Ahead: Iterative Steps in Data Collection

Once you’ve established the preliminary elements of your data collection process, you’re ready to start your data collection journey. In this section, we’ll delve into the specifics of designing and testing your instruments, collecting data, and organizing data while embracing the iterative nature of the data collection process, which requires diligent monitoring and making adjustments when needed.

6. Design and test your instruments

Designing effective data collection instruments like surveys and questionnaires is key. It’s crucial to prioritize respondent consent and privacy to ensure the integrity of your research. Thoughtful design and careful testing of survey questions are essential for optimizing research insights. Other critical considerations are:

- Clear and Unbiased Question Wording: Craft unambiguous, neutral questions free from bias to gather accurate and meaningful data. For example, instead of asking, “Shouldn’t we invest more into renewable energy that will combat the effects of climate change?” ask a neutral question that allows the respondent to voice their own thoughts, such as “What are your thoughts on investing more in renewable energy?”

- Logical Ordering and Appropriate Response Format: Arrange questions logically and choose response formats (such as multiple-choice, Likert scale, or open-ended) that suit the nature of the data you aim to collect.

- Coverage of Relevant Topics: Ensure that your instrument covers all topics pertinent to your data collection goals while respecting cultural and social sensitivities. Make sure your instrument avoids assumptions, stereotypes, and languages or topics that could be considered offensive or taboo in certain contexts. The goal is to avoid marginalizing or offending respondents based on their social or cultural background.

- Collect Only Necessary Data: Design survey instruments that focus solely on gathering the data required for your research objectives, avoiding unnecessary information.

- Language(s) of the Respondent Population: Tailor your instruments to accommodate the languages your target respondents speak, offering translated versions if needed. Similarly, take into account accessibility for respondents who can’t read by offering alternative formats like images in place of text.

- Desired Length of Time for Completion: Respect respondents’ time by designing instruments that can be completed within a reasonable timeframe, balancing thoroughness with engagement. Knowing roughly how long a complete response should take will also help you weed out bad responses. For example, a response completed much faster than your expected timeframe may have been rushed and may need to be excluded.

- Collecting and Documenting Respondents’ Consent and Privacy: Ensure a robust consent process, transparent data usage communication, and privacy protection throughout data collection.
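The completion-time check mentioned in the list above could look something like the following Python sketch. It assumes each response carries ISO 8601 start and submission timestamps under the hypothetical field names `started` and `submitted`. The 5 and 60 minute limits are placeholders that you would replace with figures from your own pilot testing.

```python
from datetime import datetime


def duration_minutes(started, submitted):
    """Minutes elapsed between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(submitted) - datetime.fromisoformat(started)
    return delta.total_seconds() / 60


def flag_by_duration(responses, min_minutes, max_minutes):
    """Return the ids of responses completed outside the expected window."""
    flagged = []
    for response in responses:
        minutes = duration_minutes(response["started"], response["submitted"])
        if minutes < min_minutes or minutes > max_minutes:
            flagged.append(response["id"])
    return flagged


# Hypothetical responses to a survey expected to take 5 to 60 minutes.
responses = [
    {"id": "r1", "started": "2024-01-10T09:00:00", "submitted": "2024-01-10T09:18:00"},
    {"id": "r2", "started": "2024-01-10T09:30:00", "submitted": "2024-01-10T09:32:00"},
]
print(flag_by_duration(responses, min_minutes=5, max_minutes=60))
```

Flagged responses are candidates for review rather than automatic exclusion, since a short duration can also mean a respondent legitimately skipped a section.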

Perform Cognitive Interviewing

Cognitive interviewing is a method used to refine survey instruments and improve the accuracy of survey responses by evaluating how respondents understand, process, and respond to the instrument’s questions. In practice, cognitive interviewing involves an interview with the respondent, asking them to verbalize their thoughts as they interact with the instrument. By actively probing and observing their responses, you can identify and address ambiguities, ensuring accurate data collection.

Thoughtful question wording, well-organized response options, and logical sequencing enhance comprehension, minimize biases, and ensure accurate data collection. Iterative testing and refinement based on respondent feedback improve the validity, reliability, and actionability of insights obtained.

Put Your Instrument to the Test

Through rigorous testing, you can uncover flaws, ensure reliability, maximize accuracy, and validate your instrument’s performance. This can be achieved by:

- Conducting pilot testing to enhance the reliability and effectiveness of data collection. Administer the instrument, identify difficulties, gather feedback, and assess performance in real-world conditions.

- Making revisions based on pilot testing to enhance clarity, accuracy, usability, and participant satisfaction. Refine questions, instructions, and format for effective data collection.

- Continuously iterating and refining your instrument based on feedback and real-world testing. This ensures reliable, accurate, and audience-aligned methods of data collection. Additionally, this ensures your instrument adapts to changes, incorporates insights, and maintains ongoing effectiveness.

7. Collect your data

Now that you have your well-designed survey, interview questions, observation plan, or form, it’s time to implement it and gather the needed data. Data collection is not a one-and-done deal; it’s an ongoing process that demands attention to detail. Imagine spending weeks collecting data, only to discover later that a significant portion is unusable due to incomplete responses, improper collection methods, or falsified responses. To avoid such setbacks, adopt an iterative approach.

Leverage data collection tools with real-time monitoring to proactively identify outliers and issues. Take immediate action by fine-tuning your instruments, optimizing the data collection process, addressing concerns like additional training, or reevaluating personnel responsible for inaccurate data (for example, a field worker who sits in a coffee shop entering fake responses rather than doing the work of knocking on doors).

SurveyCTO’s Data Explorer was specifically designed to fulfill this requirement, empowering you to monitor incoming data, gain valuable insights, and know where changes may be needed. Embracing this iterative approach ensures ongoing improvement in data collection, resulting in more reliable and precise results.
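As a rough illustration of what monitoring incoming data can mean in practice, the Python sketch below compares each collector's median interview length with the overall median and flags collectors who are unusually fast. It is a generic example, not a description of how SurveyCTO's Data Explorer or any other product works. The field names (`collector`, `minutes`) and the 0.5 ratio are assumptions.

```python
import statistics
from collections import defaultdict


def flag_collectors(submissions, ratio=0.5):
    """Flag collectors whose median duration is below ratio * overall median."""
    overall = statistics.median(s["minutes"] for s in submissions)

    by_collector = defaultdict(list)
    for s in submissions:
        by_collector[s["collector"]].append(s["minutes"])

    return sorted(
        collector
        for collector, minutes in by_collector.items()
        if statistics.median(minutes) < ratio * overall
    )


# Hypothetical submissions from three field workers.
submissions = [
    {"collector": "A", "minutes": 20}, {"collector": "A", "minutes": 22},
    {"collector": "A", "minutes": 25}, {"collector": "B", "minutes": 21},
    {"collector": "B", "minutes": 19}, {"collector": "B", "minutes": 24},
    {"collector": "C", "minutes": 4}, {"collector": "C", "minutes": 5},
    {"collector": "C", "minutes": 3},
]
print(flag_collectors(submissions))
```

A flag like this only tells you where to look. Follow-up steps such as back-checks or a conversation with the collector are still needed before drawing conclusions.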

8. Clean and organize your data

After data collection, the next step is to clean and organize the data to ensure its integrity and usability.

- Data Cleaning: This stage involves sifting through your data to identify and rectify any errors, inconsistencies, or missing values. It’s essential to maintain the accuracy of your data and ensure that it’s reliable for further analysis. Data cleaning can uncover duplicates, outliers, and gaps that could skew your results if left unchecked. With real-time data monitoring, this continuous cleaning process keeps your data precise and current throughout the data collection period. Similarly, review and corrections workflows allow you to monitor the quality of your incoming data.

- Organizing Your Data: Post-cleaning, it’s time to organize your data for efficient analysis and interpretation. Labeling your data using appropriate codes or categorizations can simplify navigation and streamline the extraction of insights. When you use a survey or form, labeling your data is often not necessary because you can design the instrument to collect in the right categories or return the right codes. An organized dataset is easier to manage, analyze, and interpret, ensuring that your collection efforts are not wasted but lead to valuable, actionable insights.

Remember, each stage of the data collection process, from design to cleaning, is iterative and interconnected. By diligently cleaning and organizing your data, you are setting the stage for robust, meaningful analysis that can inform your data-driven decisions and actions.
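A basic cleaning pass of the kind described above might be sketched in Python as follows. The example drops repeated record ids, trims stray whitespace, and sets aside records with missing required fields for review. The record layout and the field names (`id`, `district`) are hypothetical, and real projects will usually need additional rules.

```python
def clean_records(records, required_fields):
    """Split records into cleaned rows and a list of (id, issue) pairs."""
    seen_ids = set()
    cleaned, issues = [], []

    for record in records:
        record_id = record.get("id")

        # Keep only the first record seen for each id.
        if record_id in seen_ids:
            issues.append((record_id, "duplicate id"))
            continue
        seen_ids.add(record_id)

        # Trim whitespace so " North " and "North" are treated alike.
        tidy = {k: v.strip() if isinstance(v, str) else v for k, v in record.items()}

        # Set aside records with gaps instead of silently dropping them.
        missing = [f for f in required_fields if tidy.get(f) in (None, "")]
        if missing:
            issues.append((record_id, "missing: " + ", ".join(missing)))
            continue

        cleaned.append(tidy)

    return cleaned, issues


# Hypothetical raw records.
raw = [
    {"id": 1, "district": " North "},
    {"id": 1, "district": "North"},
    {"id": 2, "district": ""},
    {"id": 3, "district": "South"},
]
cleaned, issues = clean_records(raw, required_fields=["district"])
print(cleaned)
print(issues)
```

Returning the issues alongside the cleaned rows keeps a record of what was set aside and why, which supports the review and correction step.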

What happens after data collection?

The data collection journey takes us next into data analysis, where you’ll uncover patterns, empowering informed decision-making for researchers, evaluation teams, and field personnel.

Process and Analyze Your Data

Explore data through statistical and qualitative techniques to discover patterns, correlations, and insights during this pivotal stage. It’s about extracting the essence of your data and translating numbers into knowledge. Whether applying descriptive statistics, conducting regression analysis, or using thematic coding for qualitative data, this process drives decision-making and charts the path toward actionable outcomes.
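Two of the techniques named above can be sketched briefly in Python: a least-squares line fit as the simplest form of regression analysis, and a tally of thematic codes assigned to qualitative responses. The function names and the sample numbers and codes are hypothetical. A real analysis would normally use a statistics package and report uncertainty, which this sketch does not.

```python
from collections import Counter


def linear_fit(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def tally_codes(coded_responses):
    """Count how often each thematic code was applied across responses."""
    return Counter(code for codes in coded_responses for code in codes)


# Hypothetical data: clinic visits per month versus outreach sessions held.
sessions = [1, 2, 3, 4]
visits = [30, 50, 70, 90]
slope, intercept = linear_fit(sessions, visits)
print(slope, intercept)

# Hypothetical codes assigned to three open-ended answers.
coded = [["cost", "distance"], ["distance"], ["trust", "cost"]]
print(tally_codes(coded).most_common())
```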

Interpret and Report Your Results

Interpreting and reporting your data brings meaning and context to the numbers. Translating raw data into digestible insights for informed decision-making and effective stakeholder communication is critical.

The approach to interpretation and reporting varies depending on the perspective and role:

- Researchers often lean heavily on statistical methods to identify trends, extract meaningful conclusions, and share their findings in academic circles, contributing to their field’s body of knowledge.

- M&E teams typically produce comprehensive reports, shedding light on the effectiveness and impact of programs. These reports guide internal and sometimes external stakeholders, supporting informed decisions and driving program improvements.

- Field teams provide a first-hand perspective. Since they are often the first to see the results of the practical implementation of data, field teams are instrumental in providing immediate feedback loops on project initiatives. Field teams do the work that provides context to help research and M&E teams understand external factors like the local environment, cultural nuances, and logistical challenges that impact data results.

Safely store and handle data

Throughout the data collection process, and after the data has been collected, it is vital to follow best practices for storing and handling data to ensure the integrity of your research. While the specifics of how to best store and handle data will depend on your project, here are some important guidelines to keep in mind:

- Use cloud storage to hold your data if possible, since this is safer than storing data on hard drives and keeps it more accessible,

- Periodically back up and purge old data from your system, since it’s safer to not retain data longer than necessary,

- If you use mobile devices to collect and store data, use options for private, internal, app-specific storage if and when possible,

- Restrict access to stored data to only those who need to work with that data.

Further considerations for data safety are discussed below in the section on data security.

Remember to uphold ethical standards in interpreting and reporting your data, regardless of your role. Clear communication, respectful handling of sensitive information, and adhering to confidentiality and privacy rights are all essential to fostering trust, promoting transparency, and bolstering your work’s credibility.

Common Data Collection Challenges

Data collection is vital to data-driven initiatives, but it comes with challenges. Addressing common challenges such as poor data quality, privacy concerns, inadequate sample sizes, and bias is essential to ensure the collected data is reliable, trustworthy, and secure.

In this section, we’ll explore three major challenges: data quality and consistency issues, data security concerns, and limitations with offline data collection, along with strategies to overcome them.

Data Quality and Consistency

Data quality and consistency refer to data accuracy and reliability throughout the collection and analysis process.

Challenges such as incomplete or missing data, data entry errors, measurement errors, and data coding/categorization errors can impact the integrity and usefulness of the data.

To navigate these complexities and maintain high standards, consistency, and integrity in the dataset:

- Implement robust data validation processes,

- Ensure proper training for data entry personnel,

- Employ automated data validation techniques, and

- Conduct regular data quality audits.
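To make the idea of automated data validation more concrete, here is a minimal sketch of rule-based checks for missing values, out-of-range numbers, and miscoded categories. The field names, the rule format, and the sample records are hypothetical and chosen only for illustration; dedicated data collection tools typically provide their own constraint mechanisms.

```python
def validate_record(record, rules):
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field, rule in rules.items():
        value = record.get(field)
        # Missing or empty values are only a problem for required fields.
        if value is None or value == "":
            if rule.get("required", False):
                problems.append(f"{field}: missing value")
            continue
        # Type check guards the numeric comparisons below.
        if "type" in rule and not isinstance(value, rule["type"]):
            problems.append(f"{field}: expected {rule['type'].__name__}")
            continue
        if "min" in rule and value < rule["min"]:
            problems.append(f"{field}: {value} is below minimum {rule['min']}")
        if "max" in rule and value > rule["max"]:
            problems.append(f"{field}: {value} is above maximum {rule['max']}")
        if "allowed" in rule and value not in rule["allowed"]:
            problems.append(f"{field}: {value!r} is not an allowed value")
    return problems

# Hypothetical rules for a small household survey.
rules = {
    "respondent_id": {"required": True, "type": str},
    "age": {"required": True, "type": int, "min": 0, "max": 120},
    "region": {"required": True, "allowed": {"north", "south", "east", "west"}},
}

records = [
    {"respondent_id": "R001", "age": 34, "region": "north"},
    {"respondent_id": "R002", "age": 340, "region": "north"},  # entry error
    {"respondent_id": "", "age": 28, "region": "nrth"},        # missing ID, bad code
]

# Map each record's position to its list of problems.
report = {i: validate_record(r, rules) for i, r in enumerate(records)}
```

Records with a non-empty problem list can be flagged for review during a regular data quality audit rather than silently dropped.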

Data Security

Data security encompasses safeguarding data through ensuring data privacy and confidentiality, securing storage and backup, and controlling data sharing and access.

Challenges include the risk of potential breaches, unauthorized access, and the need to comply with data protection regulations.

To address these challenges and maintain privacy, trust, and confidence during the data collection process:

- Use encryption and authentication methods,

- Implement robust security protocols,

- Update security measures regularly,

- Provide employee training on data security, and

- Adopt secure cloud storage solutions.
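One small, concrete measure that supports confidentiality is replacing direct identifiers with keyed pseudonyms before data is shared for analysis. The sketch below uses HMAC-SHA256 from Python's standard library for this. Note that this is pseudonymization, not encryption, and it complements rather than replaces the measures listed above. The key shown is a placeholder and the identifier format is hypothetical; a real key would be generated randomly and stored separately from the data.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable keyed pseudonym (hex string) for a respondent identifier."""
    digest = hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()

# Placeholder key for illustration only; never hard-code a real key.
secret_key = b"replace-with-a-randomly-generated-key"

alias_a = pseudonymize("respondent-001", secret_key)
alias_b = pseudonymize("respondent-002", secret_key)
```

Because the same identifier always maps to the same pseudonym under a given key, records can still be linked across datasets, while anyone without the key cannot easily recompute the mapping from known identifiers.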

Offline Data Collection

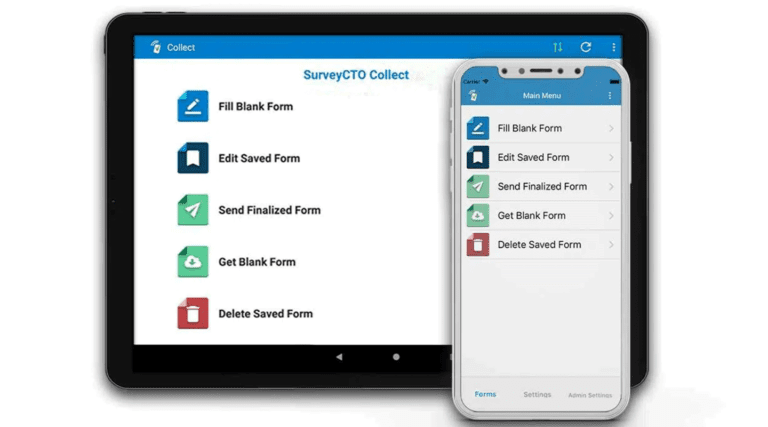

Offline data collection refers to the process of gathering data using modes like mobile device-based computer-assisted personal interviewing (CAPI) when there is an inconsistent or unreliable internet connection, and the data collection tool being used for CAPI has the functionality to work offline.

Challenges associated with offline data collection include synchronization issues, difficulty transferring data, and compatibility problems between devices and data collection tools.

To overcome these challenges and enable efficient and reliable offline data collection processes, employ the following strategies:

- Leverage offline-enabled data collection apps or tools that enable you to survey respondents even when there’s no internet connection, and upload data to a central repository at a later time,

- Schedule periodic data synchronization in your data collection plan for times when connectivity is available,

- Use offline, device-based storage for seamless data transfer and compatibility, and

- Provide clear instructions to field personnel on handling offline data collection scenarios.
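The store-locally-then-synchronize pattern described above can be sketched in a few lines. This is a simplified illustration, not how any particular data collection tool works: the table layout, the function names, and the `upload` callback are assumptions, and SQLite stands in for whatever on-device storage your tool uses.

```python
import json
import sqlite3

def open_local_store(path=":memory:"):
    """Open (or create) the on-device store for survey submissions."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS submissions ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "payload TEXT NOT NULL, "
        "synced INTEGER NOT NULL DEFAULT 0)"
    )
    return conn

def save_submission(conn, answers):
    """Save one completed survey locally; no connectivity required."""
    conn.execute(
        "INSERT INTO submissions (payload) VALUES (?)", (json.dumps(answers),)
    )
    conn.commit()

def sync_pending(conn, upload):
    """Upload unsynced submissions in order; mark each as synced only on success."""
    rows = conn.execute(
        "SELECT id, payload FROM submissions WHERE synced = 0 ORDER BY id"
    ).fetchall()
    uploaded = 0
    for row_id, payload in rows:
        try:
            upload(json.loads(payload))
        except ConnectionError:
            break  # still offline: leave the rest for the next scheduled sync
        conn.execute("UPDATE submissions SET synced = 1 WHERE id = ?", (row_id,))
        uploaded += 1
    conn.commit()
    return uploaded

# Simulated field session: two interviews collected without connectivity.
conn = open_local_store()
save_submission(conn, {"respondent": "R001", "q1": "yes"})
save_submission(conn, {"respondent": "R002", "q1": "no"})

def offline_upload(_submission):
    raise ConnectionError("no connectivity")

received = []  # stands in for the central repository
first_attempt = sync_pending(conn, offline_upload)    # connection unavailable
second_attempt = sync_pending(conn, received.append)  # connection restored
```

Marking a submission as synced only after a successful upload means a dropped connection never loses data; the unsent rows are simply retried at the next synchronization.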

Utilizing Technology in Data Collection

Embracing technology throughout your data collection process can help you overcome many of the challenges described in the previous section. Data collection tools can streamline your data collection, improve the quality and security of your data, and facilitate its analysis. Let’s look at two broad categories of tools that are essential for data collection:

Data Collection, Entry, & Management Tools

These tools help with data collection, input, and organization. They can range from digital survey platforms to comprehensive database systems, allowing you to gather, enter, and manage your data effectively. They can significantly simplify the data collection process, minimize human error, and offer practical ways to organize and manage large volumes of data. Some of these tools are:

- Microsoft Office

- Google Docs

- SurveyMonkey

- Google Forms

Data Analysis, Visualization, Reporting, & Workflow Tools

These tools assist in processing and interpreting the collected data. They provide a way to visualize data in a user-friendly format, making it easier to identify trends and patterns. These tools can also generate comprehensive reports to share your findings with stakeholders and help manage your workflow efficiently. By automating complex tasks, they can help ensure accuracy and save time. Tools for these purposes include:

- Google Sheets

Data collection tools like SurveyCTO often have integrations to help users seamlessly transition from data collection to data analysis, visualization, reporting, and managing workflows.

Master Your Data Collection Process With SurveyCTO

As we bring this guide to a close, you now possess a wealth of knowledge to develop your data collection process. From understanding the significance of setting clear goals to the crucial process of selecting your data collection methods and addressing common challenges, you are equipped to handle the intricate details of this dynamic process.

Remember, you’re not venturing into this complex process alone. At SurveyCTO, we offer not just a tool but an entire support system committed to your success. Beyond troubleshooting support, our success team serves as research advisors and expert partners, ready to provide guidance at every stage of your data collection journey.

With SurveyCTO, you can design flexible surveys in Microsoft Excel or Google Sheets, collect data online and offline with above-industry-standard security, monitor your data in real time, and effortlessly export it for further analysis in any tool of your choice. You also get access to our Data Explorer, which allows you to visualize incoming data at both individual survey and aggregate levels instantly.

In the iterative data collection process, our users tell us that SurveyCTO stands out with its capacity to establish review and correction workflows. It enables you to monitor incoming data and configure automated quality checks to flag error-prone submissions.

Finally, data security is of paramount importance to us. We ensure best-in-class security measures like SOC 2 compliance, end-to-end encryption, single sign-on (SSO), GDPR-compliant setups, customizable user roles, and self-hosting options to keep your data safe.

As you embark on your data collection journey, you can count on SurveyCTO’s experience and expertise to be by your side every step of the way. Our team would be excited and honored to be a part of your research project, offering you the tools and processes to gain informative insights and make effective decisions. Partner with us today and revolutionize the way you collect data.

Better data, better decision making, better world.

Data Collection Methods

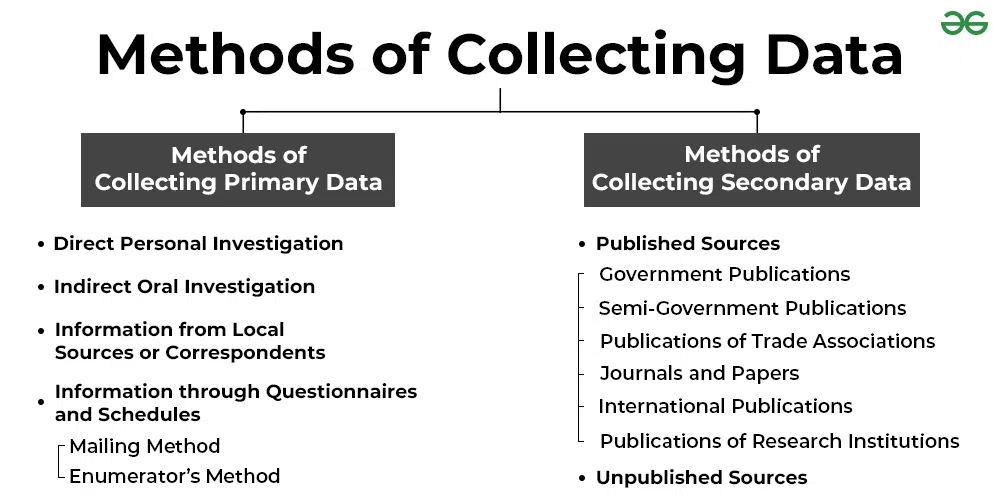

Data collection is a process of collecting information from all the relevant sources to find answers to the research problem, test the hypothesis (if you are following a deductive approach), and evaluate the outcomes. Data collection methods can be divided into two categories: secondary methods of data collection and primary methods of data collection.

Secondary Data Collection Methods

Secondary data is data that has already been published in books, newspapers, magazines, journals, online portals, etc. There is an abundance of data available in these sources about your research area in business studies, almost regardless of the nature of the research area. Therefore, applying an appropriate set of criteria to select the secondary data used in the study plays an important role in increasing research validity and reliability.

These criteria include, but are not limited to, the date of publication, the credentials of the author, the reliability of the source, the quality of discussions, the depth of analyses, and the extent of the text’s contribution to the development of the research area. Secondary data collection is discussed in greater depth in the Literature Review chapter.

Secondary data collection methods offer a range of advantages, such as saving time, effort, and expense. However, they have a major disadvantage: secondary research does not contribute to the expansion of the literature by producing fresh (new) data.

Primary Data Collection Methods

Primary data is data that did not exist before your study; it consists of the unique findings of your research. Primary data collection and analysis typically require more time and effort than secondary data research. Primary data collection methods can be divided into two groups: quantitative and qualitative.

Quantitative data collection methods are based on mathematical calculations in various formats. Methods of quantitative data collection and analysis include questionnaires with closed-ended questions, correlation and regression methods, and summary measures such as the mean, mode, and median, among others.
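As a brief illustration of the calculations mentioned above, the sketch below computes the mean, median, and mode of a set of closed-ended ratings, then a Pearson correlation coefficient and a least-squares regression line for two paired variables. All of the numbers are made up for the example, and the helper functions `pearson_r` and `least_squares_line` are written out here only to show the arithmetic.

```python
import math
import statistics

def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def least_squares_line(xs, ys):
    """Slope and intercept of the simple linear regression of ys on xs."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var_x
    return slope, mean_y - slope * mean_x

# Hypothetical answers to a closed-ended question on a 1-5 scale.
ratings = [4, 5, 3, 4, 2, 4, 5]
summary = {
    "mean": statistics.fmean(ratings),
    "median": statistics.median(ratings),
    "mode": statistics.mode(ratings),
}

# Hypothetical paired measurements, e.g. store visits per month vs. rating.
visits = [1, 2, 3, 4, 5]
scores = [2, 4, 5, 4, 5]
r = pearson_r(visits, scores)
slope, intercept = least_squares_line(visits, scores)
```

The summary measures describe a single variable, while the correlation and regression results describe how two variables move together.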

Quantitative methods are cheaper to apply, and they can be applied within a shorter period of time than qualitative methods. Moreover, due to the high level of standardisation of quantitative methods, it is easy to compare findings.

Qualitative research methods, on the contrary, do not involve numbers or mathematical calculations. Qualitative research is closely associated with words, sounds, feelings, emotions, colours, and other elements that are non-quantifiable.

Qualitative studies aim to ensure a greater depth of understanding. Qualitative data collection methods include interviews, questionnaires with open-ended questions, focus groups, observation, games or role-playing, case studies, etc.

Your choice between quantitative or qualitative methods of data collection depends on the area of your research and the nature of research aims and objectives.

My e-book, The Ultimate Guide to Writing a Dissertation in Business Studies: a step by step assistance, offers practical assistance to complete a dissertation with minimum or no stress. The e-book covers all stages of writing a dissertation, starting from the selection of the research area to submitting the completed version of the work within the deadline.

John Dudovskiy

Data Collection Methods: Types & Examples

Data is a collection of facts, figures, objects, symbols, and events gathered from different sources. Organizations collect data using various methods to make better decisions. Without data, it would be difficult for organizations to make appropriate decisions, so data is collected from different audiences at various times.

For example, an organization must collect data on product demand, customer preferences, and competitors before launching a new product. If data is not collected beforehand, the organization’s newly launched product may fail for many reasons, such as low demand or an inability to meet customer needs.

Although data is a valuable asset for every organization, it does not serve any purpose until it is analyzed or processed to achieve the desired results.

What are Data Collection Methods?

Data collection methods are techniques and procedures for gathering information for research purposes. They can range from simple self-reported surveys to more complex quantitative or qualitative experiments.

Some common data collection methods include surveys, interviews, observations, focus groups, experiments, and secondary data analysis. The data collected through these methods can then be analyzed and used to support or refute research hypotheses and draw conclusions about the study’s subject matter.

Understanding Data Collection Methods

Data collection methods encompass a variety of techniques and tools for gathering quantitative and qualitative data. These methods are integral to the data collection process and ensure accurate and comprehensive data acquisition.

Quantitative data collection methods involve systematic approaches to collecting numerical data, such as surveys, polls, and statistical analysis, aimed at quantifying phenomena and trends.

Conversely, qualitative data collection methods focus on capturing non-numerical information through techniques such as interviews, focus groups, and observations, to delve deeper into understanding attitudes, behaviors, and motivations.

Combining quantitative and qualitative data collection techniques can enrich organizations’ datasets and yield comprehensive insights into complex phenomena.

Using appropriate data collection tools and techniques effectively enhances the accuracy and reliability of collected data, facilitating informed decision-making and strategic planning.

Importance of Data Collection Methods

Data collection methods play a crucial role in the research process, as they determine the quality and accuracy of the data collected. Here are some of the main reasons they matter:

- Quality and Accuracy: The choice of data collection technique directly impacts the quality and accuracy of the data obtained. Properly designed methods help ensure that the data collected is error-free and relevant to the research questions.

- Relevance, Validity, and Reliability: Effective data collection methods help ensure that the data collected is relevant to the research objectives, valid (measuring what it intends to measure), and reliable (consistent and reproducible).

- Bias Reduction and Representativeness: Carefully chosen data collection methods can help minimize biases inherent in the research process, such as sampling bias or response bias. They also aid in achieving a representative sample, enhancing the findings’ generalizability.

- Informed Decision Making: Accurate and reliable data collected through appropriate methods provide a solid foundation for making informed decisions based on research findings. This is crucial for both academic research and practical applications in various fields.

- Achievement of Research Objectives: Data collection methods should align with the research objectives to ensure that the collected data effectively addresses the research questions or hypotheses. Properly collected data facilitates the attainment of these objectives.